SparkPi is a well-known example program among Spark developers and is commonly used as the "Hello World" program of Apache Spark. This topic describes how to submit a SparkPi job in the Data Lake Analytics (DLA) console.
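SparkPi estimates pi with a Monte Carlo method. It samples random points in the square [-1, 1] x [-1, 1], counts how many fall inside the unit circle, and multiplies that fraction by 4. The following minimal Python sketch reproduces that logic in a single process, without Spark, to show what the submitted job computes. The function name `estimate_pi` and the `seed` parameter are illustrative and are not part of Spark or DLA. The choice of 100,000 samples per slice mirrors the upstream Scala example.

```python
import random
from typing import Optional


def estimate_pi(slices: int = 2, seed: Optional[int] = None) -> float:
    """Single-process sketch of the Monte Carlo logic in Spark's SparkPi example."""
    rng = random.Random(seed)
    # SparkPi scales the sample count with the number of slices (partitions).
    n = 100_000 * slices
    inside = 0
    # range(1, n) mirrors Scala's `1 until n`, that is, n - 1 samples.
    for _ in range(1, n):
        x = rng.random() * 2 - 1
        y = rng.random() * 2 - 1
        if x * x + y * y <= 1:
            inside += 1
    # The fraction of points inside the unit circle approximates pi / 4.
    return 4.0 * inside / (n - 1)


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(slices=2, seed=42)}")
```

In the actual Spark job, the sampling is distributed across `slices` partitions and the per-partition counts are summed with a reduce, which makes SparkPi a lightweight check that a cluster can run a distributed job.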

Prerequisites

- A virtual cluster (VC) is created before you submit a SparkPi job. For more information about how to create a VC, see Create a virtual cluster.

Note When you create a VC, you must set Engine to Spark.

- Your RAM user is granted the permissions to submit SparkPi jobs. This prerequisite applies only if you log on to DLA as a RAM user. For more information, see Grant permissions to a RAM user.

Procedure

- Log on to the DLA console.

- In the top navigation bar of the Overview page, select the region where the VC resides.

- In the left-side navigation pane, choose .

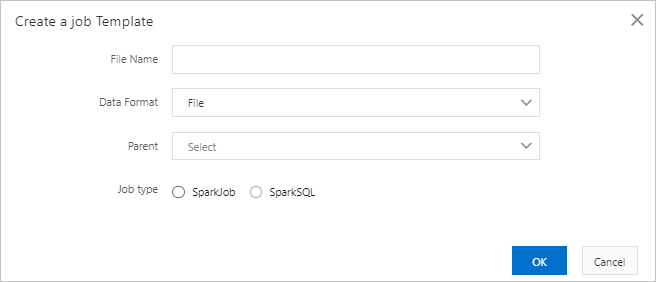

- On the Parameter Configuration page, click Create Job. In the Create a job Template dialog box, configure the parameters shown in the following figure and click OK.

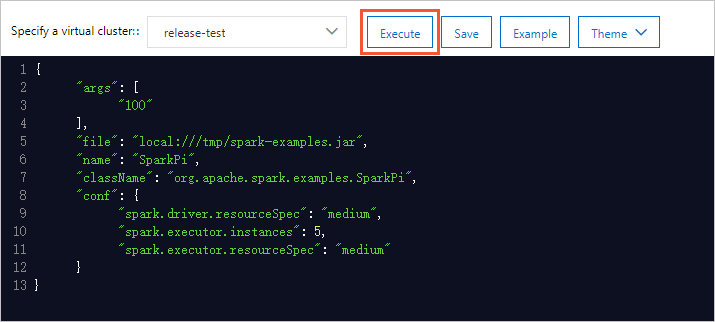

- On the Parameter Configuration page, retain the default configuration of the SparkPi job in the code editor. Then, click Execute.
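The default template shown in the code editor depends on the console version and may differ from what is described here. As an illustration only, and assuming the editor holds a JSON job description, the following Python sketch assembles such a description and prints it as JSON. The field names (`name`, `file`, `className`, `args`, `conf`), the JAR path, and the `resourceSpec` keys are assumptions, so compare them with the template the editor actually displays before you rely on them. Only `org.apache.spark.examples.SparkPi` is the real class name of the upstream example.

```python
import json


def sparkpi_job_config(slices: int = 1000, executors: int = 1) -> dict:
    """Hypothetical SparkPi job description; field names are assumptions."""
    return {
        "name": "SparkPi",
        # Assumed location of the Spark examples JAR in the runtime image.
        "file": "local:///tmp/spark-examples.jar",
        # Main class of the upstream Spark example.
        "className": "org.apache.spark.examples.SparkPi",
        # SparkPi takes the number of slices (partitions) as its only argument.
        "args": [str(slices)],
        "conf": {
            # Assumed DLA-specific resource keys; verify against the console template.
            "spark.driver.resourceSpec": "medium",
            "spark.executor.instances": executors,
            "spark.executor.resourceSpec": "medium",
        },
    }


if __name__ == "__main__":
    print(json.dumps(sparkpi_job_config(), indent=4))
```

Raising the slice count increases the number of samples and therefore the accuracy of the estimate, at the cost of a longer run.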