Service Mesh (ASM) supports a multi-master control plane architecture, in which multiple ASM instances jointly manage multiple Kubernetes clusters. Compared with adding multiple Kubernetes clusters to a single ASM instance, this architecture offers significant advantages in configuration isolation and configuration push, which makes it better suited to building peer-to-peer disaster recovery across multiple Container Service for Kubernetes (ACK) clusters. This topic describes how to build a multi-master control plane architecture that consists of two ASM instances and two ACK clusters.

Background information

The multi-master control plane architecture uses multiple ASM instances to manage multiple Kubernetes clusters. Each ASM instance manages the data-plane components of its own Kubernetes cluster and pushes configurations to the mesh proxies in that cluster. Workloads in one cluster can still discover services in the other cluster, and because the ASM instances share the same ASM root certificate, the mesh proxies in different clusters trust each other.

Compared with directly adding multiple clusters to one ASM instance, this architecture has the following advantages:

Lower configuration push latency: Multiple clusters are typically deployed across regions, zones, or VPCs. In such cases, you can connect the mesh proxies in each Kubernetes cluster to the nearest ASM instance, which reduces the latency of configuration push.

Better configuration and environment isolation: Multiple clusters are managed by multiple ASM instances, and each ASM instance can hold different control plane resources. This lets you perform canary releases or isolate configurations and versions. When you upgrade ASM, you can upgrade the control planes in batches, which helps ensure the availability of the online environment.

Higher stability: In extreme situations such as zone unavailability, region unavailability, or network failures, if a single control plane is connected to all clusters, mesh proxies that cannot reach the control plane may fail to receive configurations or to start. In a multi-master control plane architecture, the mesh proxies in the regions or zones that remain available can still connect to their own control plane, so configuration push and mesh proxy startup continue to work normally.

To build a multi-master control plane architecture, you must create multiple ASM instances that reuse the same ASM root certificate. The control plane uses this root certificate to issue identity certificates to mesh proxies. Because the instances share the root certificate, mesh proxies connected to different ASM instances trust each other and can communicate over mTLS.

Prerequisites

Two Kubernetes clusters named cluster-1 and cluster-2 are created. When you create the clusters, select Use EIP to Expose API Server. For more information, see Create an ACK managed cluster.
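Before you continue, you may want to confirm that both API servers are reachable through their kubeconfig files. The following shell sketch is illustrative only: the function name check_api_servers and any kubeconfig paths you pass to it are hypothetical, and it relies only on the standard Kubernetes /readyz health endpoint queried through kubectl get --raw.

```shell
# Hypothetical helper (not part of ASM or ACK): checks that each API server
# answers its /readyz health endpoint when reached with the given kubeconfig.
# The kubeconfig paths are placeholders supplied by the caller.
check_api_servers() {
  rc=0
  for cfg in "$@"; do
    if kubectl --kubeconfig "$cfg" get --raw /readyz >/dev/null 2>&1; then
      echo "$cfg: API server reachable"
    else
      echo "$cfg: API server NOT reachable" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example, with assumed paths: check_api_servers ~/.kube/cluster-1.yaml ~/.kube/cluster-2.yaml. A non-zero return status means at least one API server could not be reached, which usually points to the EIP exposure setting or to local network access.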

Step 1: Create two ASM instances that reuse the same root certificate and add one cluster to each instance

Log on to the ASM console and go to the Mesh Management page.

On the Mesh Management page, click Create ASM Instance. The following table describes the parameters that you must configure:

| Parameter | Example |
| --- | --- |
| Service mesh name | mesh-1 |
| Region | Select the region where cluster-1 resides. |
| Istio version | Select v1.22.6.71-g7d67a80b-aliyun or later. |
| Kubernetes Cluster | Select cluster-1. A VPC and a vSwitch are automatically added to the ASM instance after the instance is created. |

For specific operations and other configurations, see Create an ASM instance. It takes about 2 to 3 minutes for the instance status to change to Running.

Return to the Mesh Management page and click Create ASM Instance. The following table describes the parameters that you must configure:

| Parameter | Example |
| --- | --- |
| Service mesh name | mesh-2 |
| Region | Select the region where cluster-2 resides. |
| Istio version | Select v1.22.6.71-g7d67a80b-aliyun or later. |
| Kubernetes Cluster | Select cluster-2. A VPC and a vSwitch are automatically added to the ASM instance after the instance is created. |
| ASM Root Certificate | Click Show Advanced Settings, select Reuse an Existing Root Certificate of ASM Instance, and select mesh-1 from the drop-down list. |

The other operations and configurations are the same as those for mesh-1. It takes about 2 to 3 minutes for the instance status to change to Running.
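To check that the two instances really share a root certificate, one option is to compare the root certificate distributed to a namespace in each cluster. This sketch assumes that ASM, like open-source Istio, publishes the root certificate in a ConfigMap named istio-ca-root-cert (key root-cert.pem) in each namespace of its data-plane cluster; the function names root_cert_of and same_root_cert are hypothetical.

```shell
# Hypothetical helpers. Assumption: the mesh root certificate is published in
# the open-source Istio ConfigMap istio-ca-root-cert, key root-cert.pem.
root_cert_of() {
  # $1: kubeconfig of the cluster to inspect
  kubectl --kubeconfig "$1" -n default get configmap istio-ca-root-cert \
    -o jsonpath='{.data.root-cert\.pem}'
}

same_root_cert() {
  # $1, $2: kubeconfigs of the two clusters; succeeds only when both
  # certificates were found and are byte-for-byte identical.
  a=$(root_cert_of "$1") || return 1
  b=$(root_cert_of "$2") || return 1
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, same_root_cert ~/.kube/cluster-1.yaml ~/.kube/cluster-2.yaml && echo "root certificate shared" (the paths are placeholders). If the ConfigMap is absent in your environment, the check fails rather than reporting a match.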

Step 2: Add each cluster to the other ASM instance in service discovery-only mode

After Step 1 is complete, cluster-1 is managed by mesh-1 and cluster-2 is managed by mesh-2. You must also add cluster-2 to mesh-1 and cluster-1 to mesh-2 in service discovery-only mode so that each instance can discover the services and service endpoints in the other cluster.

Add cluster-2 to mesh-1 in service discovery-only mode.

Log on to the ASM console and go to the Mesh Management page.

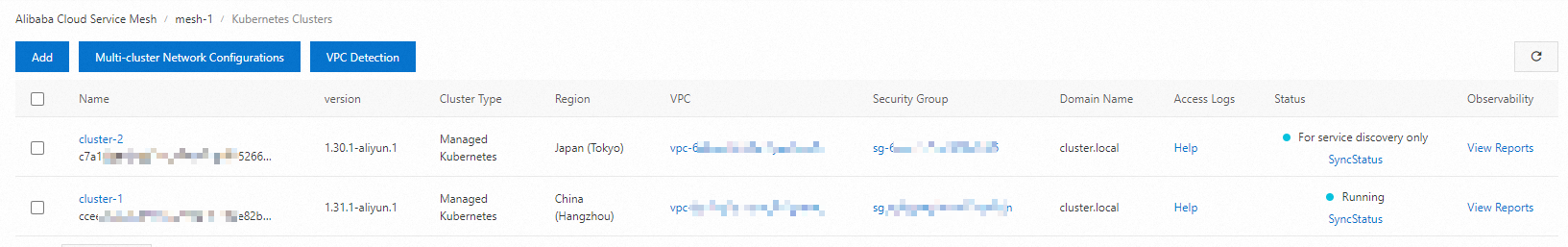

On the Mesh Management page, click the name of mesh-1. In the left-side navigation pane, choose Cluster & Workload Management > Kubernetes Clusters, and click Add.

On the Add Kubernetes Cluster page, find cluster-2, which you want to add to mesh-1, and click Add (For Service Discovery Only) in the Actions column. In the dialog box that appears, click OK. After the cluster is added, click the name of mesh-1. On the ASM Instance > Basic Information page, the status of mesh-1 changes to Updating. Wait a few seconds and click the refresh icon in the upper-right corner. The status of the ASM instance changes to Running. The waiting time varies with the number of clusters that you added. You can view information about the added cluster on the Kubernetes Clusters page.

Add cluster-1 to mesh-2 in service discovery-only mode.

Log on to the ASM console and go to the Mesh Management page.

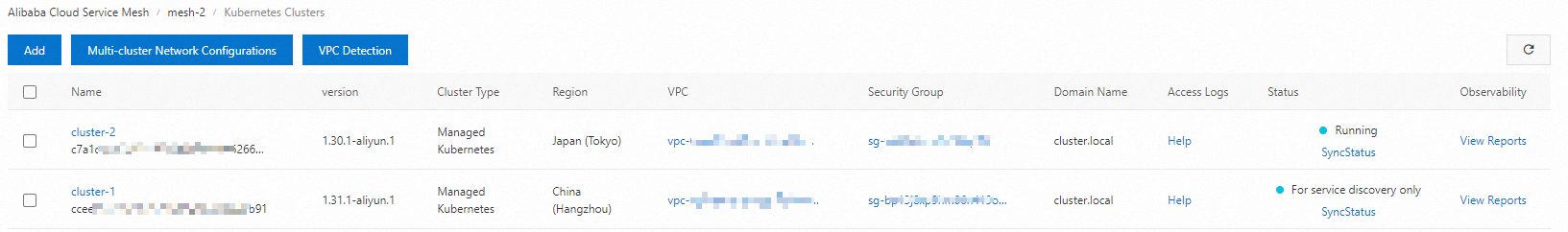

On the Mesh Management page, click the name of mesh-2. In the left-side navigation pane, choose Cluster & Workload Management > Kubernetes Clusters, and click Add.

On the Add Kubernetes Cluster page, find cluster-1, which you want to add to mesh-2, and click Add (For Service Discovery Only) in the Actions column. In the dialog box that appears, click OK. After the cluster is added, click the name of mesh-2. On the ASM Instance > Basic Information page, the status of mesh-2 changes to Updating. Wait a few seconds and click the refresh icon in the upper-right corner. The status of the ASM instance changes to Running. The waiting time varies with the number of clusters that you added. You can view information about the added cluster on the Kubernetes Clusters page.

If a Kubernetes cluster is added to an ASM instance in service discovery-only mode, the ASM instance only discovers the services and service endpoints in the cluster and does not deploy any data-plane components to the cluster. Changes made to the ASM instance are not applied to clusters added in this mode.

Use the For Service Discovery Only option only when you build a multi-master control plane architecture. In other scenarios, to use an ASM instance to manage a Kubernetes cluster, add the cluster to the ASM instance in the regular way. For more information, see Add a cluster to an ASM instance.
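Once the sample applications in Step 4 are running, you can see what service discovery-only mode provides by listing the helloworld endpoints that the sleep sidecar in a cluster has received. This sketch assumes that istioctl is installed locally and can reach the cluster through its kubeconfig, that the applications run in the default namespace, and that the Envoy cluster name follows the open-source Istio format outbound|<port>||<host>; the function name is hypothetical.

```shell
# Hypothetical helper. Assumptions: istioctl is installed, the sample apps
# run in the default namespace, and Envoy cluster names follow the
# open-source Istio format outbound|<port>||<host>.
show_helloworld_endpoints() {
  kubeconfig="$1"   # kubeconfig of the cluster whose sleep pod is inspected
  # Pick the first pod labeled app=sleep.
  pod=$(kubectl --kubeconfig "$kubeconfig" -n default get pod -l app=sleep \
    -o jsonpath='{.items[0].metadata.name}') || return 1
  [ -n "$pod" ] || { echo "no sleep pod found" >&2; return 1; }
  # Show only the endpoints of the helloworld service on port 5000.
  istioctl --kubeconfig "$kubeconfig" proxy-config endpoint "$pod.default" \
    --cluster 'outbound|5000||helloworld.default.svc.cluster.local'
}
```

If discovery works, the list is expected to contain endpoints for both helloworld versions. When the clusters are on different logical networks (Step 3), the remote entries may show the address of the cross-cluster mesh proxy instead of pod IPs.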

Step 3 (Optional): Configure a multi-cluster network between two ASM instances

If cluster-1 and cluster-2 are deployed in different VPCs or regions and are not connected through Cloud Enterprise Network (CEN), you must configure a multi-cluster network in both ASM instances and deploy cross-cluster mesh proxies for cluster-1 and cluster-2. Applications and services in different clusters can then access each other through the cross-cluster mesh proxies. For more information about ASM cross-cluster mesh proxies, see Use ASM cross-cluster mesh proxy to implement cross-network communication among multiple clusters.

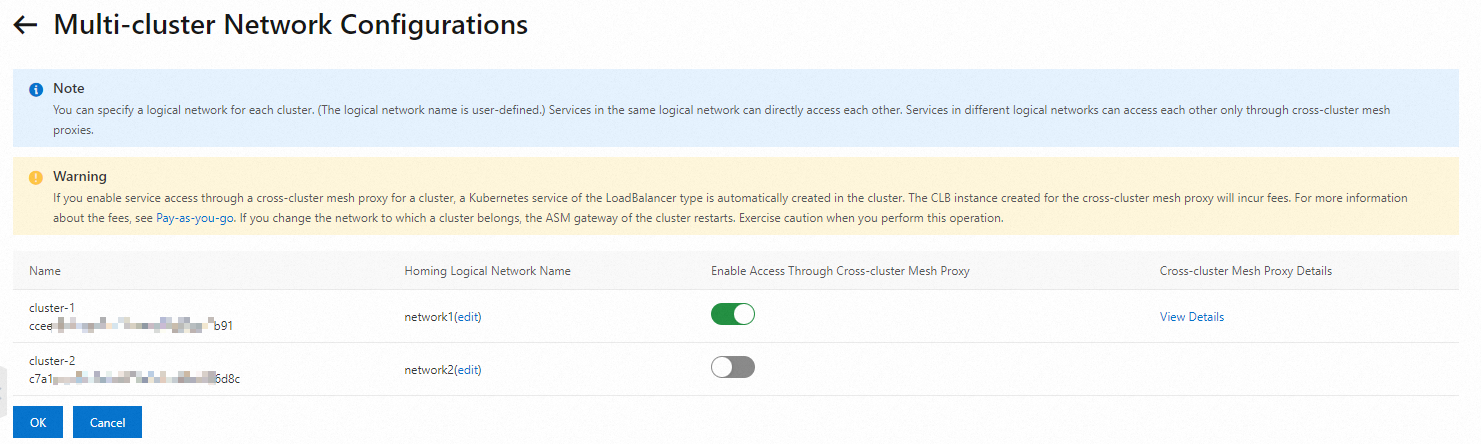

Configure a multi-cluster network for cluster-1 and cluster-2 in mesh-1.

Log on to the ASM console and go to the Mesh Management page.

On the Mesh Management page, click the name of mesh-1. In the left-side navigation pane, choose Cluster & Workload Management > Kubernetes Clusters.

Click Multi-cluster Network Configurations and configure the multi-cluster network as follows:

Set Homing Logical Network Name to network1 for cluster-1 and turn on Enable Access Through Cross-cluster Mesh Proxy for cluster-1.

Set Homing Logical Network Name to network2 for cluster-2.

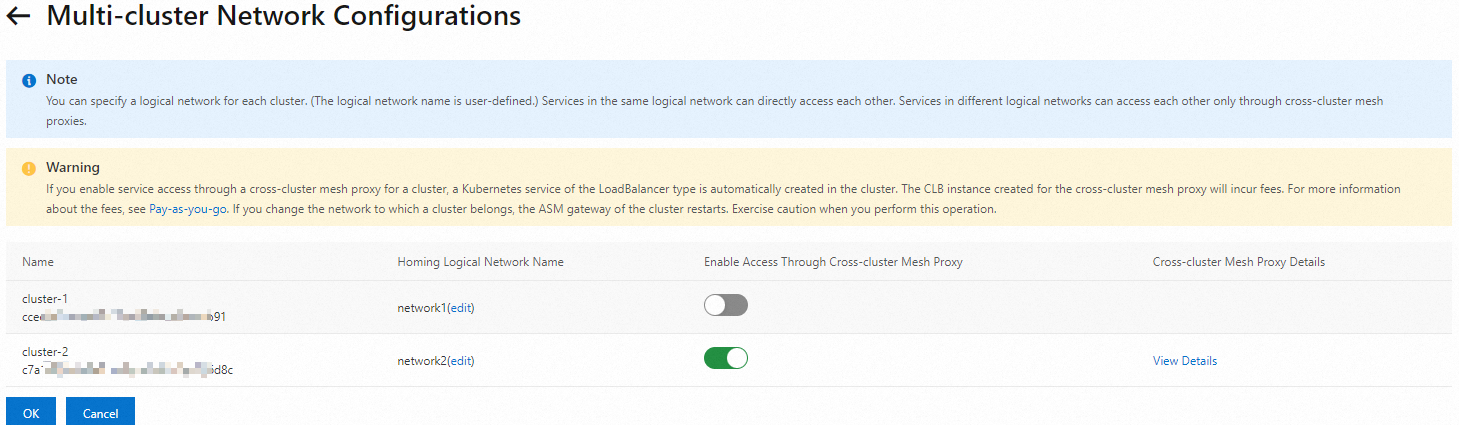

Configure a multi-cluster network for cluster-1 and cluster-2 in mesh-2.

Log on to the ASM console and go to the Mesh Management page.

On the Mesh Management page, click the name of mesh-2. In the left-side navigation pane, choose Cluster & Workload Management > Kubernetes Clusters.

Click Multi-cluster Network Configurations and configure the multi-cluster network as follows:

Set Homing Logical Network Name to network2 for cluster-2 and turn on Enable Access Through Cross-cluster Mesh Proxy for cluster-2.

Set Homing Logical Network Name to network1 for cluster-1.

Step 4: Deploy sample applications in the two clusters

As shown in the following figure, a sleep application and version V1 of the helloworld service are deployed in cluster-1, and version V2 of the helloworld service is deployed in cluster-2. Services in the two clusters can access each other through the ASM cross-cluster mesh proxies.

Enable automatic sidecar injection for the default namespace in both mesh-1 and mesh-2. For more information, see Manage global namespaces.
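To confirm the injection setting from the cluster side, you can read the namespace label in each data-plane cluster. This sketch assumes that ASM reflects automatic injection as the open-source Istio label istio-injection=enabled on the namespace; if your instance uses revision-based labels such as istio.io/rev instead, this check does not apply. The function name is hypothetical.

```shell
# Hypothetical helper. Assumption: automatic injection appears as the
# open-source Istio namespace label istio-injection=enabled.
injection_enabled() {
  # $1: kubeconfig of the cluster to inspect
  label=$(kubectl --kubeconfig "$1" get namespace default \
    -o jsonpath='{.metadata.labels.istio-injection}') || return 1
  [ "$label" = "enabled" ]
}
```

Run it once per cluster, for example injection_enabled ~/.kube/cluster-1.yaml && echo "injection on" (placeholder path). Pods created before the label was set keep running without a sidecar until they are recreated.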

Create a sleep application and a helloworld service V1 using the following content.

Deploy the preceding YAML file in cluster-1.

Create a helloworld service V2 using the following content.

Deploy the preceding YAML file in cluster-2.
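For illustration, here is a minimal helloworld manifest modeled on the open-source Istio helloworld sample, wrapped in a shell function so that one definition covers V1 in cluster-1 and V2 in cluster-2. The image naming docker.io/istio/examples-helloworld-<version>, the default namespace, and the function name deploy_helloworld are assumptions; substitute the images and kubeconfig paths that your environment uses. The sketch applies the helloworld Service in both clusters so that the hostname helloworld resolves from the sleep pod in either cluster, which mirrors common practice in open-source Istio multi-cluster setups.

```shell
# Hypothetical helper modeled on the open-source Istio helloworld sample.
# Assumption: images are named <prefix>-<version>; override the prefix if needed.
HELLOWORLD_IMAGE_PREFIX="${HELLOWORLD_IMAGE_PREFIX:-docker.io/istio/examples-helloworld}"

deploy_helloworld() {
  kubeconfig="$1"   # kubeconfig of the target cluster
  version="$2"      # v1 for cluster-1, v2 for cluster-2
  # Apply a Service on port 5000 plus one versioned Deployment.
  kubectl --kubeconfig "$kubeconfig" apply -n default -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: helloworld
  labels:
    app: helloworld
    service: helloworld
spec:
  ports:
  - port: 5000
    name: http
  selector:
    app: helloworld
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: helloworld-${version}
  labels:
    app: helloworld
    version: ${version}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: helloworld
      version: ${version}
  template:
    metadata:
      labels:
        app: helloworld
        version: ${version}
    spec:
      containers:
      - name: helloworld
        image: ${HELLOWORLD_IMAGE_PREFIX}-${version}
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 5000
EOF
}
```

With placeholder paths, this would be deploy_helloworld ~/.kube/cluster-1.yaml v1 followed by deploy_helloworld ~/.kube/cluster-2.yaml v2. The sleep application in cluster-1 can use the same sleep manifest that appears in Step 5.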

Step 5: Verify that the applications and services in the multi-master control plane architecture can access each other

Run the following command by using the kubeconfig file of cluster-1.

```shell
kubectl exec -it deploy/sleep -- sh -c 'for i in $(seq 1 10); do curl helloworld:5000/hello; done;'
```

Expected output:

```
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
```

The response shows that the version of the helloworld service switches between V1 and V2.
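With more requests, reading the raw output becomes tedious. The following sketch counts responses per version from the output of the curl loop; the function names tally_versions and saw_both_versions are hypothetical, and the parsing assumes the "Hello version: vN, instance: ..." format shown above.

```shell
# Hypothetical helpers that parse "Hello version: vN, instance: ..." lines.
# tally_versions prints one "<version> <count>" line per version, sorted.
tally_versions() {
  grep -o 'version: v[0-9][0-9]*' |
    awk '{count[$2]++} END {for (v in count) print v, count[v]}' |
    sort
}

# saw_both_versions succeeds only if both v1 and v2 responses were observed.
saw_both_versions() {
  counts=$(tally_versions)
  echo "$counts" | grep -q '^v1 ' && echo "$counts" | grep -q '^v2 '
}
```

For example: kubectl exec deploy/sleep -- sh -c 'for i in $(seq 1 10); do curl -s helloworld:5000/hello; done' | saw_both_versions && echo "cross-cluster access works". The -it flags are dropped because the output is piped. With only 10 requests, it is possible, though unlikely, that all responses come from one version, so a failed check is worth rerunning before you start troubleshooting.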

Run the following command by using the kubeconfig file of cluster-1 to scale the sleep Deployment to 0 replicas:

```shell
kubectl scale deploy sleep --replicas=0
```

Create another sleep application by using the following content:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: sleep
---
apiVersion: v1
kind: Service
metadata:
  name: sleep
  labels:
    app: sleep
    service: sleep
spec:
  ports:
  - port: 80
    name: http
  selector:
    app: sleep
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sleep
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sleep
  template:
    metadata:
      labels:
        app: sleep
    spec:
      terminationGracePeriodSeconds: 0
      serviceAccountName: sleep
      containers:
      - name: sleep
        image: registry.cn-hangzhou.aliyuncs.com/acs/curl:8.1.2
        command: ["/bin/sleep", "infinity"]
        imagePullPolicy: IfNotPresent
```

Deploy the preceding YAML file in cluster-2. For more information, see Create a stateless application by using a Deployment.

Run the following command by using the kubeconfig file of cluster-2.

```shell
kubectl exec -it deploy/sleep -- sh -c 'for i in $(seq 1 10); do curl helloworld:5000/hello; done;'
```

Expected output:

```
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v1, instance: helloworld-v1-7b888xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
Hello version: v2, instance: helloworld-v2-7b949xxxxx-xxxxx
```

The response shows that the version of the helloworld service switches between V1 and V2.