This topic describes the common query commands and metrics that are related to memory.

Linux memory

The value of the MemTotal metric is less than the RAM capacity because the BIOS and kernel initialization of the Linux boot process consume memory. The value of the MemTotal metric can be obtained by running the free command.

dmesg | grep Memory

Memory: 131604168K/134217136K available (14346K kernel code, 9546K rwdata, 9084K rodata, 2660K init, 7556K bss, 2612708K reserved, 0K cma-reserved)Linux memory query commands:

You can use the following formula to calculate the Linux memory based on the queried memory data:

total = used + free + buff/cache // Total memory = Used memory + Free memory + Cache memoryThe used memory includes the memory consumed by the kernel and all processes.

kernel used=Slab + VmallocUsed + PageTables + KernelStack + HardwareCorrupted + Bounce + X

Process memory

The memory consumed by processes includes the following parts:

The physical memory to which the virtual address space is mapped.

The memory consumed for generating a page cache for disk reading and writing.

Physical memory to which the virtual address space is mapped

Physical memory: the memory consumed for installing the hardware (RAM capacity).

Virtual memory: the memory provided by the operating system for program running. Programs run in two modes: user mode and kernel mode.

User mode: User mode is the non-privileged mode for user programs.

User space consists of the following items:

Stack: the function stack used for function calls

Memory mapping segment (MMap): the area for memory mapping

Heap: the dynamically allocated memory

BBS: the space where uninitialized static variables reside

Data: the space where initialized static constants reside

Text: the space where binary executable codes reside

Programs running in user mode use MMap to map the virtual address to the physical memory.

Kernel mode: Running programs need to access the kernel data of the operating system.

Kernel space consists of the following items:

Direct mapping space: uses simple mapping to map virtual addresses to the physical memory.

Vmalloc: the dynamic mapping space of the kernel. Vmalloc is used to map continuous virtual addresses to non-contiguous physical memory.

Persistent kernel mapping space: maps virtual addresses to high-end memory of the physical memory.

Fixed mapping space: meets specific mapping requirements.

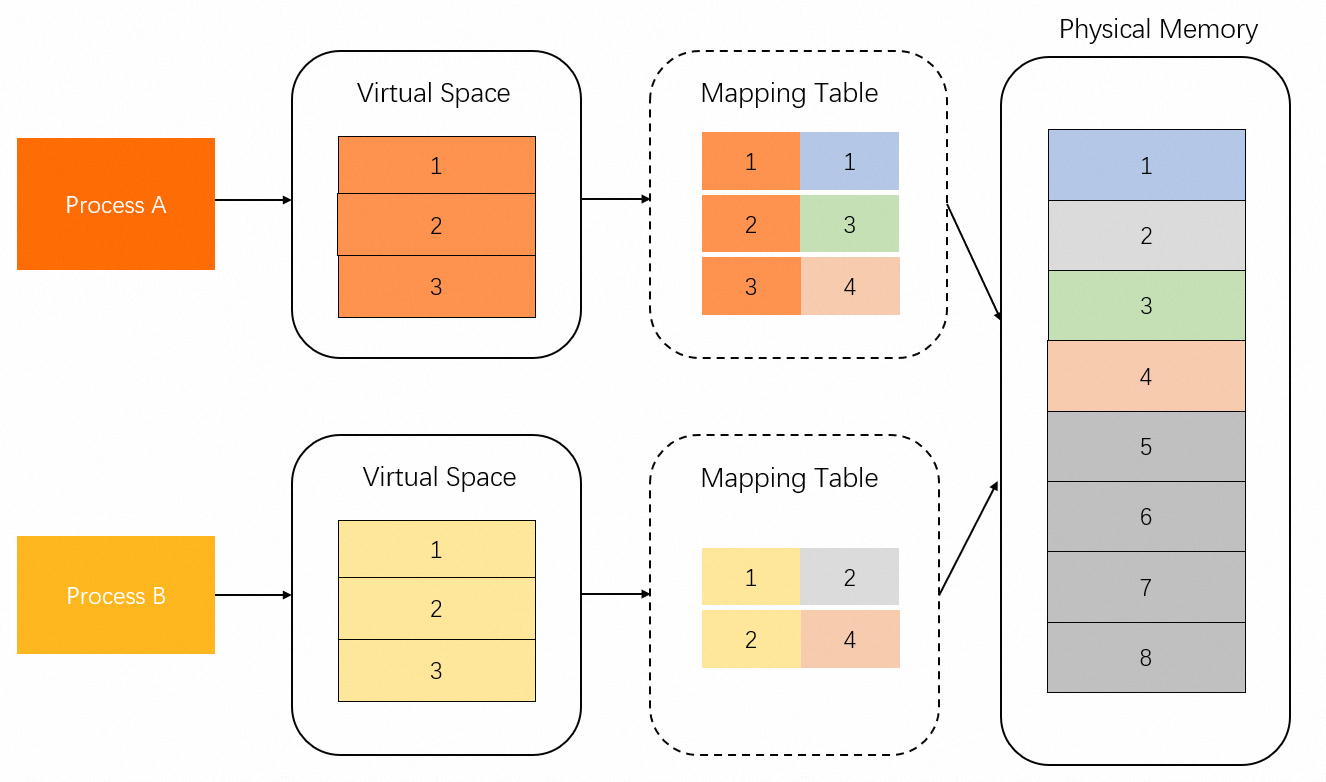

The physical memory to which virtual addresses are mapped is divided into shared memory and exclusive memory. As shown in the following figure, Memory 1 and 3 is exclusively occupied by Process A, Memory 2 is exclusively occupied by Process B, and Memory 4 is shared by Processes A and B.

Page cache

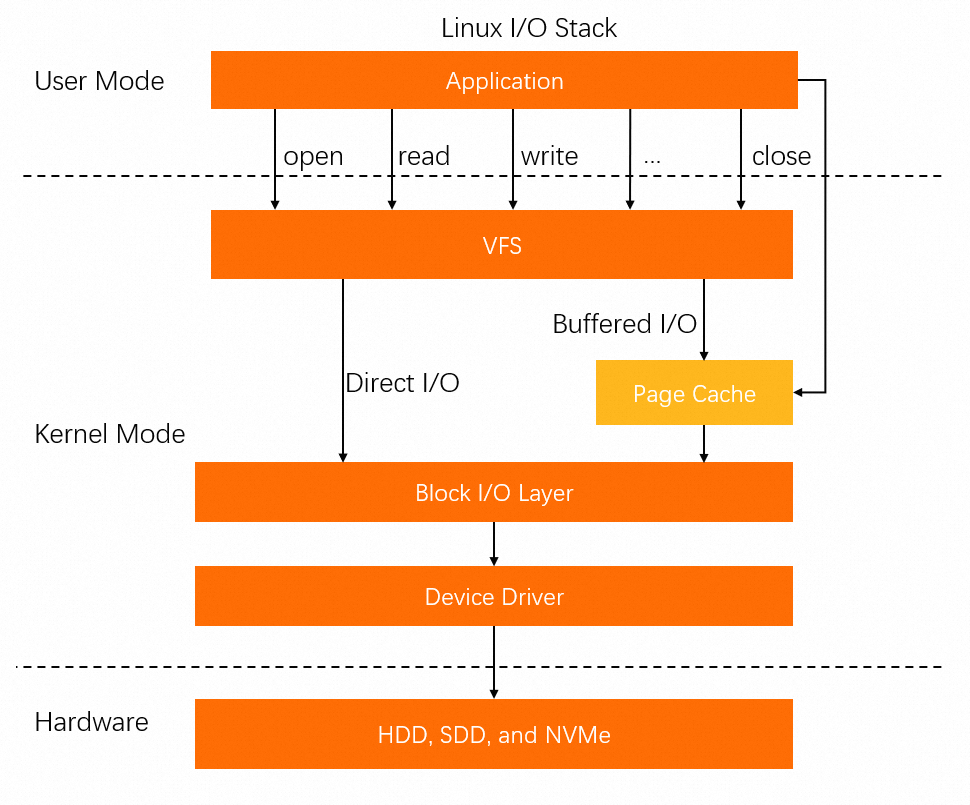

To map process files, you can use MMap files for direct mapping. You can also use syscall that is related to buffered I/O to write data to the page cache. In this case, the page cache occupies some memory.

Process memory metrics

Memory metrics of single processes

Process resources are stored in the following locations:

Anonymous (anonymous pages): Anonymous memory is the stack space used by the programs. No corresponding files exist in the disk.

File-backed (file pages): Resources are stored in disk files that contain code blocks and font information.

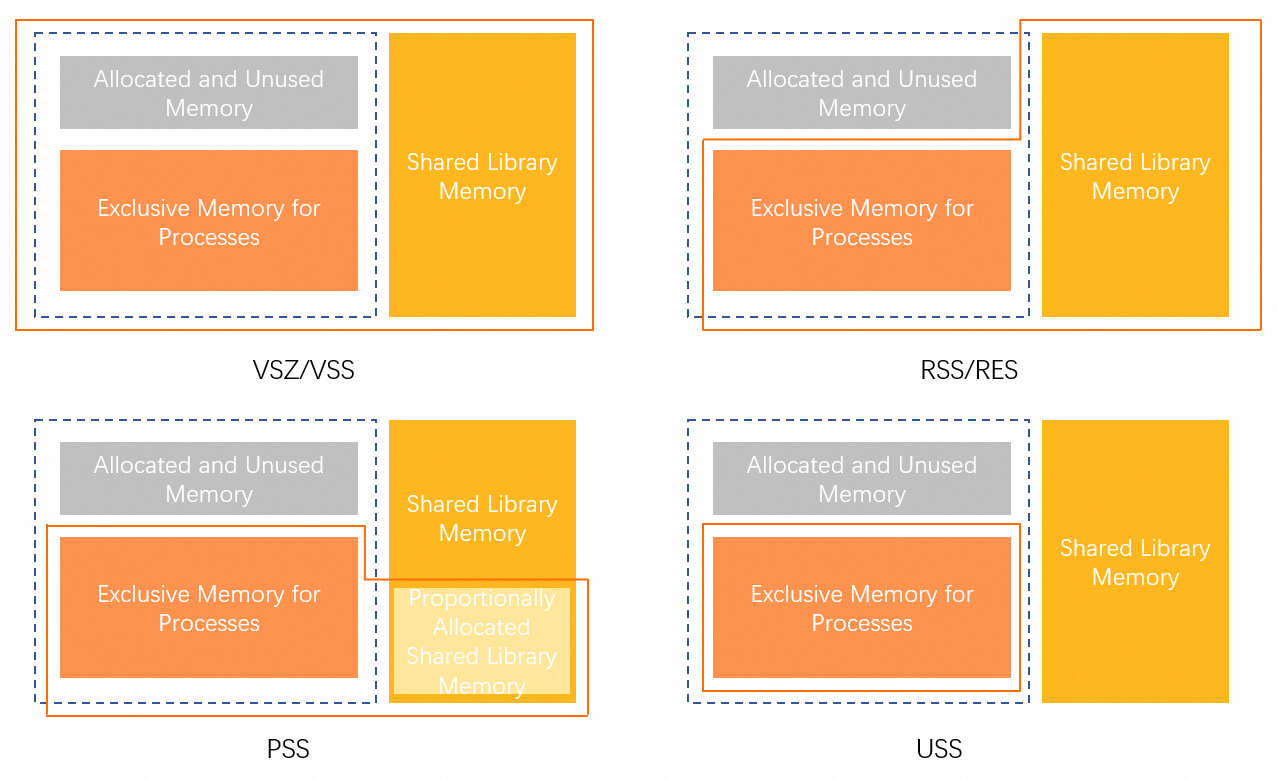

The following metrics are related to single-process memory:

anno_rss (RSan): the exclusive memory for all types of resources.

file_rss(RSfd): all memory occupied by file-backed resources.

shmem_rss(RSsh): the shared memory of the Anonymous resources.

The following table describes the commands used to query metrics.

Command | Metric | Description | Formula |

| VIRT | The virtual address space. | None |

RES | The physical memory of RSS mapping. | anno_rss + file_rss + shmem_rss | |

SHR | The shared memory. | file_rss + shmem_rss | |

MEM% | The memory usage. | RES / MemTotal | |

| VSZ | The virtual address space. | None |

RSS | The physical memory of RSS mapping. | anno_rss + file_rss + shmem_rss | |

MEM% | The memory usage. | RSS / MemTotal | |

| USS | The exclusive memory. | anno_rss |

PSS | The memory proportionally allocated. | anno_rss + file_rss/m + shmem_rss/n | |

RSS | The physical memory of RSS mapping. | anno_rss + file_rss + shmem_rss |

Memory Working Set Size (WSS) is a reasonable calculation method to evaluate the memory required to keep processes running. However, WSS cannot be accurately calculated due to the restriction of Linux page claim.

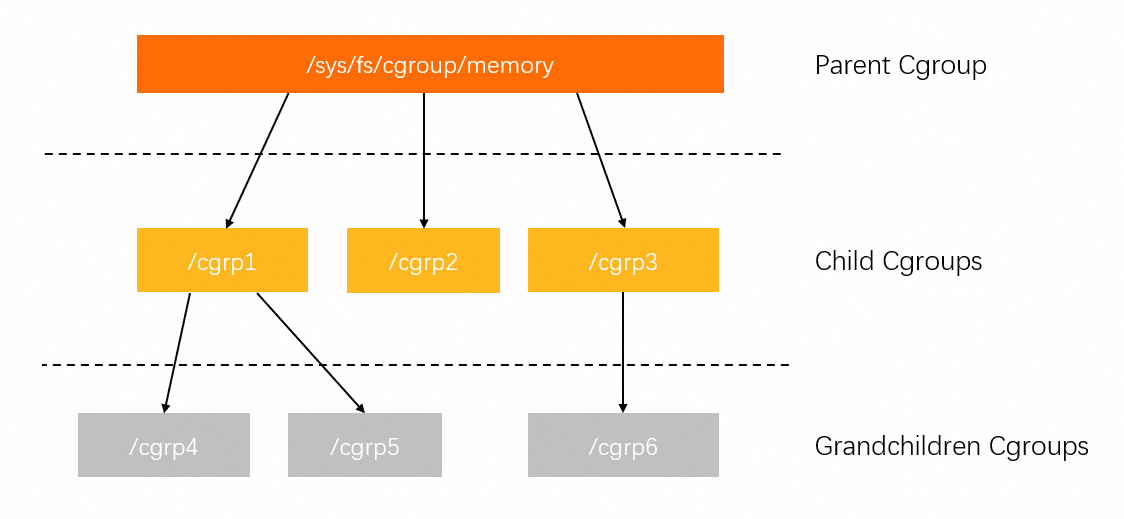

Memory metrics of cgroups

Control groups (cgroups) are used to limit, account for, and isolate a group of Linux process resources. For more information, see Red Hat Linux 6 documentation.

Cgroups are hierarchically managed. Each hierarchy is attached to one or more subsystems and contains a set of files. The files contain the metrics of the subsystems. For example, the memory control group (memcg) file contains memory metrics.

The memcg file contains the following metrics:

cgroup.event_control # Call the eventfd operation.

memory.usage_in_bytes # View the used memory.

memory.limit_in_bytes # Configure or view the current memory limit.

memory.failcnt # View the number of times that the memory usage reaches the limit.

memory.max_usage_in_bytes # View the historical maximum memory usage.

memory.soft_limit_in_bytes # Configure or view the current soft limit of the memory.

memory.stat # View the memory usage of the current cgroup.

memory.use_hierarchy # Specify whether to include the memory usage of child cgroups into the memory usage of the current cgroup, or check whether the memory usage of child cgroups is included into the memory usage of the current cgroup.

memory.force_empty # Reclaim as much memory as possible from the current cgroup.

memory.pressure_level # Configure notification events for memory pressure. This metric is used with cgroup.event_control.

memory.swappiness # Configure or view the current swappiness value.

memory.move_charge_at_immigrate # Specify whether the memory occupied by a process is moved when the process is moved to another cgroup.

memory.oom_control # Configure or view the oom controls configurations.

memory.numa_stat # View the numa-related memory.We recommend that you pay attention to the following metrics:

memory.limit_in_bytes: You can use the metric to configure or view the memory limit of the current cgroup. This metric is similar to the memory limit metric of Kubernetes and Docker.

memory.usage_in_bytes: You can use the metric to view the total memory used by all processes in the current cgroup. The metric value is approximately equal to the value of the RSS+Cache metric in the memory.stat file.

memory.stat: You can use the metric to view the memory usage of the current cgroup.

Field in the memory.stat file

Description

cache

The size of cached pages.

rss

The sum of anno_rss memory of all processes in the cgroup.

mapped_file

The sum of file_rss and shmem_rss memory of all processes in the cgroup.

active_anon

The memory occupied by all anonymous processes and swap cache in the active Least Recently Used (LRU) cache list, including

tmpfs(shmem). Unit: bytes.inactive_anon

The memory occupied by all anonymous processes and swap cache in the inactive LRU cache list, including

tmpfs(shmem). Unit: bytes.active_file

Memory used by all file-backed processes in the active LRU list. Unit: bytes.

inactive_file

Memory used by all file-backed processes in the inactive LRU list. Unit: bytes.

unevictable

The unevictable memory. Unit: bytes.

Metrics prefixed with

total_apply to the current cgroup and all child cgroups. For example, the total_rss metric indicates the sum of the RSS metric value of the current cgroup and the RSS metric values of all child cgroups.

Summary

The following table lists the differences between single-process metrics and cgroup metrics.

Metric | Single process | cgroup (memcg) |

RSS | anon_rss + file_rss + shmem_rss | anon_rss |

mapped_file | None | file_rss + shmem_rss |

cache | None | PageCache |

The anno_rss metric is the only RSS metric of cgroups. anno_rss is similar to the USS metric of a single process. Therefore, the value of mapped_file plus the value of RSS equals to the RSS metric value of a single process.

You need to separately calculate page cache data in a single process. The memory calculated in the memcg file of a cgroup already contains page cache data.

Memory statistics in Docker and Kubernetes

Memory statistics in Docker and Kubernetes are similar to Linux memcg statistics, except that the definitions of memory usage are different.

docker stats command

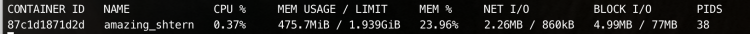

The following figure provides a sample response.

For more information about how to run the docker stats command, see Docker documentation.

func calculateMemUsageUnixNoCache(mem types.MemoryStats) float64 {

return float64(mem.Usage - mem.Stats["cache"])

}LIMIT is similar to the memory.limit_in_bytes metric of cgroups.

MEM USAGE is similar to the memory.usage_in_bytes-memory.stat [total_cache] metric of cgroups.

kubectl top pod command

The kubectl top command uses metrics-server and Heapster to obtain the value of working_set in Cadvisor that indicates the size of memory used by pods (excluding pause containers). The following code shows how to obtain the memory of a pod in a metrics-server. For more information, see Kubernetes documentation.

func decodeMemory(target *resource.Quantity, memStats *stats.MemoryStats) error {

if memStats == nil || memStats.WorkingSetBytes == nil {

return fmt.Errorf("missing memory usage metric")

}

*target = *uint64Quantity(*memStats.WorkingSetBytes, 0)

target.Format = resource.BinarySI

return nil

}The following code shows how to calculate the value of working_set in Cadvisor. For more information, see Cadvisor documentation.

func setMemoryStats(s *cgroups.Stats, ret *info.ContainerStats) {

ret.Memory.Usage = s.MemoryStats.Usage.Usage

ret.Memory.MaxUsage = s.MemoryStats.Usage.MaxUsage

ret.Memory.Failcnt = s.MemoryStats.Usage.Failcnt

if s.MemoryStats.UseHierarchy {

ret.Memory.Cache = s.MemoryStats.Stats["total_cache"]

ret.Memory.RSS = s.MemoryStats.Stats["total_rss"]

ret.Memory.Swap = s.MemoryStats.Stats["total_swap"]

ret.Memory.MappedFile = s.MemoryStats.Stats["total_mapped_file"]

} else {

ret.Memory.Cache = s.MemoryStats.Stats["cache"]

ret.Memory.RSS = s.MemoryStats.Stats["rss"]

ret.Memory.Swap = s.MemoryStats.Stats["swap"]

ret.Memory.MappedFile = s.MemoryStats.Stats["mapped_file"]

}

if v, ok := s.MemoryStats.Stats["pgfault"]; ok {

ret.Memory.ContainerData.Pgfault = v

ret.Memory.HierarchicalData.Pgfault = v

}

if v, ok := s.MemoryStats.Stats["pgmajfault"]; ok {

ret.Memory.ContainerData.Pgmajfault = v

ret.Memory.HierarchicalData.Pgmajfault = v

}

workingSet := ret.Memory.Usage

if v, ok := s.MemoryStats.Stats["total_inactive_file"]; ok {

if workingSet < v {

workingSet = 0

} else {

workingSet -= v

}

}

ret.Memory.WorkingSet = workingSet

}Therefore, the Memory Usage queried by running the kubectl top pod command can be calculated based on the following formula: Memory Usage = Memory WorkingSet = memory.usage_in_bytes - memory.stat[total_inactive_file].

Summary

Command | Ecosystem | Calculation method of Memory Usage |

| Docker | memory.usage_in_bytes - memory.stat[total_cache] |

| Kubernetes | memory.usage_in_bytes - memory.stat[total_inactive_file] |

If you use the Top and PS commands to query memory usage metrics, the Memory Usage metric in cgroups can be calculated with these metrics by using the following formulas.

cgroup ecosystem | Formula |

Memcg | rss + cache (active cache + inactive cache) |

Docker | rss |

K8s | rss + active cache |

Java statistics

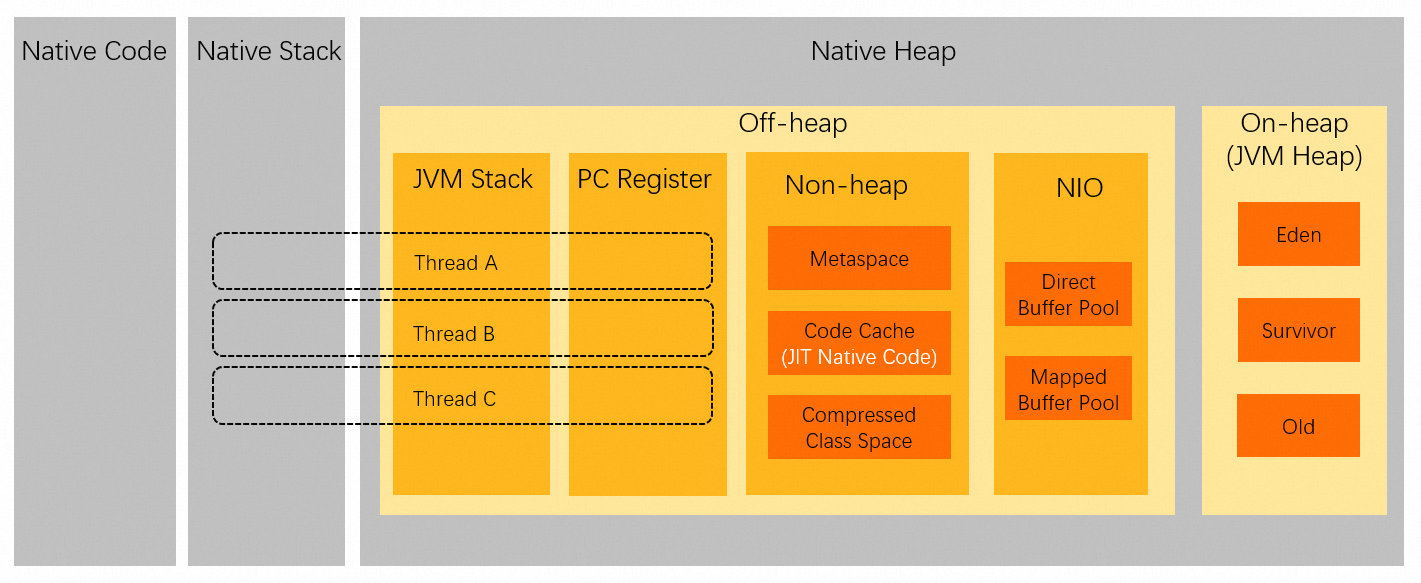

Virtual address spaces of Java processes

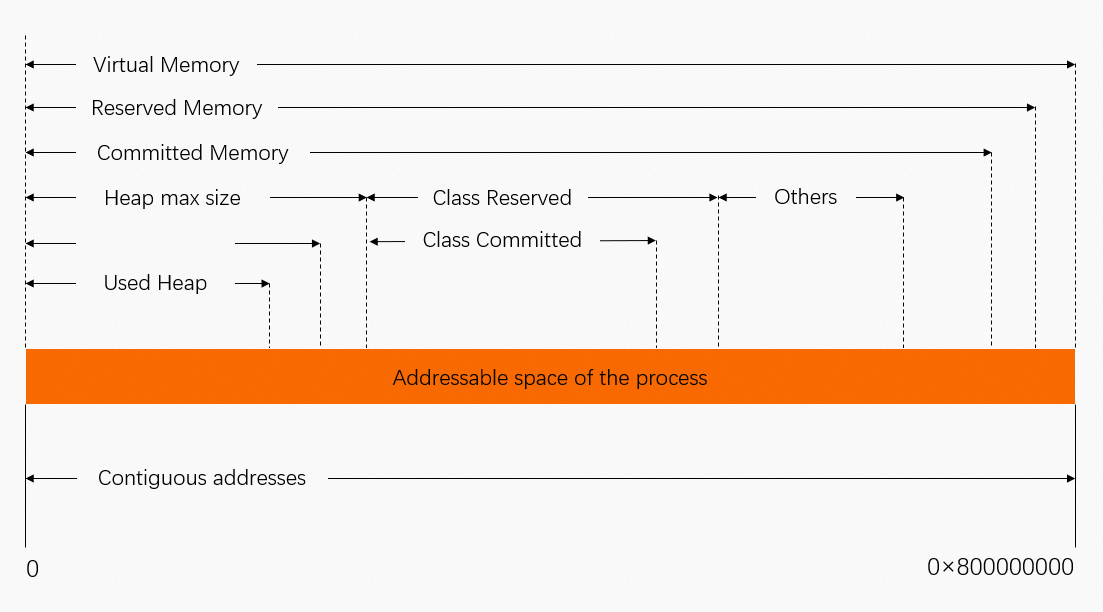

The following figure shows the distribution of data storage in the virtual address spaces of Java processes.

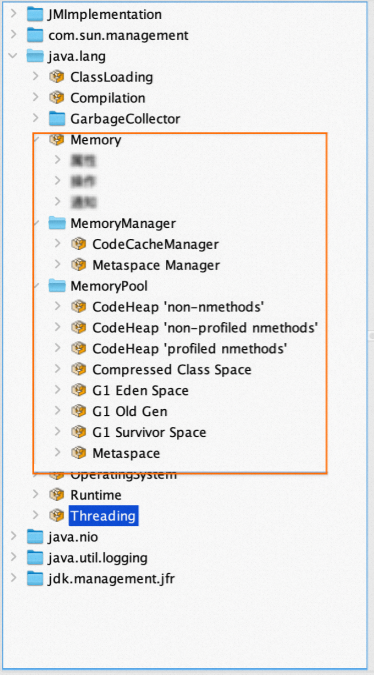

Use JMX to obtain memory metrics

You can obtain the memory metrics of Java processes by using exposed JMX data. For example, you can use JConsole to obtain memory metrics.

Memory data is revealed through MBeans.

Exposed JMX metrics do not contain all the memory metrics of JVM processes. For example, the memory consumed by Java threads is not contained in the exposed JMX metrics. Therefore, the accumulated result of exposed JMX memory-usage data is not equal to the RSS metric value of JVM processes.

JMX MemoryUsage tool

JMX reveals MemoryUsage by using MemoryPool MBeans. For more information, see Oracle documentation.

The used metric indicates the consumed physical memory.

NMT tool

Java Hotspot VM provides the Native Memory Tracking (NMT) for memory tracking. For more information, see Oracle documentation.

NMT is not suitable for production environments due to overheads.

You can use NMT to obtain the following metrics:

jcmd 7 VM.native_memory

Native Memory Tracking:

Total: reserved=5948141KB, committed=4674781KB

- Java Heap (reserved=4194304KB, committed=4194304KB)

(mmap: reserved=4194304KB, committed=4194304KB)

- Class (reserved=1139893KB, committed=104885KB)

(classes #21183)

( instance classes #20113, array classes #1070)

(malloc=5301KB #81169)

(mmap: reserved=1134592KB, committed=99584KB)

( Metadata: )

( reserved=86016KB, committed=84992KB)

( used=80663KB)

( free=4329KB)

( waste=0KB =0.00%)

( Class space:)

( reserved=1048576KB, committed=14592KB)

( used=12806KB)

( free=1786KB)

( waste=0KB =0.00%)

- Thread (reserved=228211KB, committed=36879KB)

(thread #221)

(stack: reserved=227148KB, committed=35816KB)

(malloc=803KB #1327)

(arena=260KB #443)

- Code (reserved=49597KB, committed=2577KB)

(malloc=61KB #800)

(mmap: reserved=49536KB, committed=2516KB)

- GC (reserved=206786KB, committed=206786KB)

(malloc=18094KB #16888)

(mmap: reserved=188692KB, committed=188692KB)

- Compiler (reserved=1KB, committed=1KB)

(malloc=1KB #20)

- Internal (reserved=45418KB, committed=45418KB)

(malloc=45386KB #30497)

(mmap: reserved=32KB, committed=32KB)

- Other (reserved=30498KB, committed=30498KB)

(malloc=30498KB #234)

- Symbol (reserved=19265KB, committed=19265KB)

(malloc=16796KB #212667)

(arena=2469KB #1)

- Native Memory Tracking (reserved=5602KB, committed=5602KB)

(malloc=55KB #747)

(tracking overhead=5546KB)

- Shared class space (reserved=10836KB, committed=10836KB)

(mmap: reserved=10836KB, committed=10836KB)

- Arena Chunk (reserved=169KB, committed=169KB)

(malloc=169KB)

- Tracing (reserved=16642KB, committed=16642KB)

(malloc=16642KB #2270)

- Logging (reserved=7KB, committed=7KB)

(malloc=7KB #267)

- Arguments (reserved=19KB, committed=19KB)

(malloc=19KB #514)

- Module (reserved=463KB, committed=463KB)

(malloc=463KB #3527)

- Synchronizer (reserved=423KB, committed=423KB)

(malloc=423KB #3525)

- Safepoint (reserved=8KB, committed=8KB)

(mmap: reserved=8KB, committed=8KB)JVM is divided into various memory areas with different purposes, such as Java heap and class, and additional memory blocks. In addition, exposed JMX data does not contain the memory usage of threads. However, Java programs generally have tens of thousands of threads that consume a large amount of memory.

For more information about the memory types of hotspots, see Corretto documentation.

Reserved and Committed metrics

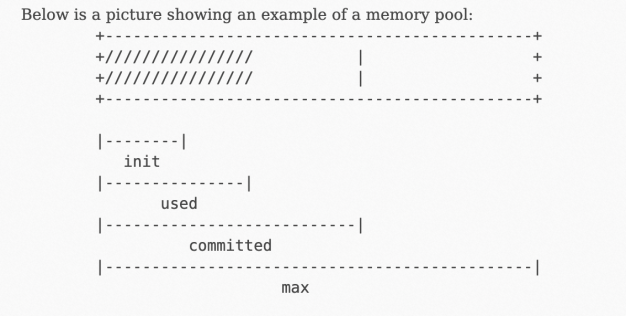

NMT statistics reveal Reserved and Committed metrics. However, neither Reserved nor Committed can map used physical memory.

The following figure shows the mapping relationships among the Reserved and Committed metrics of virtual addresses, and physical addresses. The value of the Committed metric is always greater than the value of the Used metric. In this case, the Used metric is similar to the RSS metric of JVM processes.

Summary

The metrics collected by common Java application tools are mainly exposed by JMX. JMX exposes some memory pools that can be tracked within JVM. However, the sum of these memory pools cannot be mapped to the RSS metric of JVM processes.

NMT exposes the details of the internal memory usage of JVM, but the measurement result is not the Used metric, but the Committed metric. Therefore, the total value of Committed may be slightly greater than the value of RSS.

NMT cannot track some memory outside JVM. For example, NMT cannot track the memory usage if Java programs have additional Malloc behaviors. In this case, the value of the RSS metric is greater than the memory usage data of NMT.

If the value of the Committed metric obtained by NMT and the value of the RSS metric are extremely different, memory leaks may have occurred.

You can use other NMT metrics for further troubleshooting:

Use NMT baseline and diff to troubleshoot in JVM areas.

Use NMT and pmap to troubleshoot memory issues outside JVM.