Container Service for Kubernetes (ACK) supports topology-aware GPU scheduling based on the scheduling framework. This feature selects a combination of GPUs from GPU-accelerated nodes to achieve optimal GPU acceleration for training jobs. This topic describes how to use topology-aware GPU scheduling to achieve optimal GPU acceleration for PyTorch distributed jobs.

Prerequisites

- An ACK Pro cluster is created.

- Arena is installed.

- ack-ai-installer is installed.

The following table describes the versions that are required for the system components.

| Component | Required version |
| --- | --- |
| Kubernetes | 1.18.8 and later |
| Helm | 3.0 and later |
| NVIDIA | 418.87.01 and later |
| NVIDIA Collective Communications Library (NCCL) | 2.7 and later |
| Docker | 19.03.5 |
| OS | CentOS 7.6, CentOS 7.7, Ubuntu 16.04, Ubuntu 18.04, and Alibaba Cloud Linux 2 |
| GPU | V100 |
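To compare an installed version against the minimums listed above, a small comparison helper can be enough. The following is a minimal sketch, not part of ACK: the function names `version_ge` and `check` are hypothetical, the helper relies on GNU `sort -V`, and it assumes that the NVIDIA entry refers to the driver version. The version numbers passed in are sample values.

```shell
# version_ge A B: succeeds when version A is greater than or equal to version B.
# Relies on GNU sort -V (version sort). Hypothetical helper, not part of ACK.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# check NAME FOUND REQUIRED: report whether FOUND meets the REQUIRED minimum.
check() {
  name=$1
  found=$2
  required=$3
  if version_ge "$found" "$required"; then
    echo "$name $found: OK (>= $required)"
  else
    echo "$name $found: too old (requires >= $required)"
  fi
}

# Sample values only. On a real node the driver version could be read with:
#   nvidia-smi --query-gpu=driver_version --format=csv,noheader
check "NVIDIA driver" "450.80.02" "418.87.01"
check "Kubernetes" "1.16.9" "1.18.8"
```

With the sample values, the first check passes and the second reports that the version is too old.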

Limits

- Topology-aware GPU scheduling applies only to Message Passing Interface (MPI) jobs that are trained by using a distributed framework.

- The pods of a job are created, and the job is submitted and started, only when the resources requested by the pods can be met. Otherwise, the resource requests remain pending.

Procedure

Configure nodes

Run the following command to set the node label and explicitly enable topology-aware GPU scheduling for nodes:

    kubectl label node <Your Node Name> ack.node.gpu.schedule=topology

After topology-aware GPU scheduling is enabled for a node, regular GPU scheduling no longer applies to that node. To switch the node back to regular GPU scheduling, run the following command to overwrite the label:

    kubectl label node <Your Node Name> ack.node.gpu.schedule=default --overwrite

Submit a job
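Before a job is submitted, it may help to confirm that each node carries the intended label. The following sketch wraps the two label commands from the node configuration step and adds a listing command. The function names `set_gpu_schedule` and `show_gpu_schedule` are hypothetical; only the `kubectl label` and `kubectl get nodes -L` calls are standard kubectl.

```shell
# set_gpu_schedule NODE MODE: hypothetical wrapper around the label commands.
# MODE must be "topology" or "default". --overwrite lets the same call switch
# a node in either direction, whether or not the label already exists.
set_gpu_schedule() {
  node=$1
  mode=$2
  case "$mode" in
    topology|default) ;;
    *) echo "unknown mode: $mode (expected topology or default)" >&2; return 1 ;;
  esac
  kubectl label node "$node" "ack.node.gpu.schedule=$mode" --overwrite
}

# show_gpu_schedule: list all nodes with the label value as an extra column.
show_gpu_schedule() {
  kubectl get nodes -L ack.node.gpu.schedule
}
```

For example, `set_gpu_schedule <Your Node Name> topology` followed by `show_gpu_schedule` would label the node and then show the value of `ack.node.gpu.schedule` for every node.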

Submit a Message Passing Interface (MPI) job and set --gputopology to true.

    arena submit --gputopology=true --gang ***

Example 1: Train VGG16

Use topology-aware GPU scheduling to train VGG16

Run the following command to submit a job to the cluster:

    arena submit mpi \
      --name=pytorch-topo-4-vgg16 \
      --gpus=1 \
      --workers=4 \
      --gang \
      --gputopology=true \
      --image=registry.cn-hangzhou.aliyuncs.com/kubernetes-image-hub/pytorch-benchmark:torch1.6.0-py3.7-cuda10.1 \
      "mpirun --allow-run-as-root -np "4" -bind-to none -map-by slot -x NCCL_DEBUG=INFO -x NCCL_SOCKET_IFNAME=eth0 -x LD_LIBRARY_PATH -x PATH --mca pml ob1 --mca btl_tcp_if_include eth0 --mca oob_tcp_if_include eth0 --mca orte_keep_fqdn_hostnames t --mca btl ^openib python /examples/pytorch_synthetic_benchmark.py --model=vgg16 --batch-size=64"

Run the following command to query the status of the job:

    arena get pytorch-topo-4-vgg16 --type mpijob

Expected output:

    Name:        pytorch-topo-4-vgg16
    Status:      RUNNING
    Namespace:   default
    Priority:    N/A
    Trainer:     MPIJOB
    Duration:    11s
    Instances:
      NAME                                 STATUS   AGE  IS_CHIEF  GPU(Requested)  NODE
      ----                                 ------   ---  --------  --------------  ----
      pytorch-topo-4-vgg16-launcher-mnjzr  Running  11s  true      0               cn-shanghai.192.168.16.173
      pytorch-topo-4-vgg16-worker-0        Running  11s  false     1               cn-shanghai.192.168.16.173
      pytorch-topo-4-vgg16-worker-1        Running  11s  false     1               cn-shanghai.192.168.16.173
      pytorch-topo-4-vgg16-worker-2        Running  11s  false     1               cn-shanghai.192.168.16.173
      pytorch-topo-4-vgg16-worker-3        Running  11s  false     1               cn-shanghai.192.168.16.173

Run the following command to print the job log:

    arena logs -f pytorch-topo-4-vgg16

Expected output:

    Model: vgg16
    Batch size: 64
    Number of GPUs: 4
    Running warmup...
    Running benchmark...
    Iter #0: 205.5 img/sec per GPU
    Iter #1: 205.2 img/sec per GPU
    Iter #2: 205.1 img/sec per GPU
    Iter #3: 205.5 img/sec per GPU
    Iter #4: 205.1 img/sec per GPU
    Iter #5: 205.1 img/sec per GPU
    Iter #6: 205.3 img/sec per GPU
    Iter #7: 204.3 img/sec per GPU
    Iter #8: 205.0 img/sec per GPU
    Iter #9: 204.9 img/sec per GPU
    Img/sec per GPU: 205.1 +-0.6
    Total img/sec on 4 GPU(s): 820.5 +-2.5
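The last line of the benchmark log carries the total throughput, which is the figure that matters when runs are compared. The following sketch pulls that number out of a log with `awk`. The function name `total_throughput` is hypothetical, and the sample input is the summary of the log above.

```shell
# total_throughput: read a benchmark log on stdin and print the total
# throughput (img/sec) from the "Total img/sec on N GPU(s): X +-Y" line.
total_throughput() {
  awk '/Total img\/sec on/ { print $(NF-1) }'
}

# Sample input: the last two lines of the log above.
printf '%s\n' \
  "Img/sec per GPU: 205.1 +-0.6" \
  "Total img/sec on 4 GPU(s): 820.5 +-2.5" | total_throughput
# prints 820.5
```

Against a finished job, the same function could presumably be fed from `arena logs pytorch-topo-4-vgg16`, assuming the log already contains the summary line.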

Use regular GPU scheduling to train VGG16

Run the following command to submit a job to the cluster:

    arena submit mpi \
      --name=pytorch-4-vgg16 \
      --gpus=1 \
      --workers=4 \
      --image=registry.cn-hangzhou.aliyuncs.com/kubernetes-image-hub/pytorch-benchmark:torch1.6.0-py3.7-cuda10.1 \
      "mpirun --allow-run-as-root -np "4" -bind-to none -map-by slot -x NCCL_DEBUG=INFO -x NCCL_SOCKET_IFNAME=eth0 -x LD_LIBRARY_PATH -x PATH --mca pml ob1 --mca btl_tcp_if_include eth0 --mca oob_tcp_if_include eth0 --mca orte_keep_fqdn_hostnames t --mca btl ^openib python /examples/pytorch_synthetic_benchmark.py --model=vgg16 --batch-size=64"

Run the following command to query the status of the job:

    arena get pytorch-4-vgg16 --type mpijob

Expected output:

    Name:        pytorch-4-vgg16
    Status:      RUNNING
    Namespace:   default
    Priority:    N/A
    Trainer:     MPIJOB
    Duration:    10s
    Instances:
      NAME                            STATUS   AGE  IS_CHIEF  GPU(Requested)  NODE
      ----                            ------   ---  --------  --------------  ----
      pytorch-4-vgg16-launcher-qhnxl  Running  10s  true      0               cn-shanghai.192.168.16.173
      pytorch-4-vgg16-worker-0        Running  10s  false     1               cn-shanghai.192.168.16.173
      pytorch-4-vgg16-worker-1        Running  10s  false     1               cn-shanghai.192.168.16.173
      pytorch-4-vgg16-worker-2        Running  10s  false     1               cn-shanghai.192.168.16.173
      pytorch-4-vgg16-worker-3        Running  10s  false     1               cn-shanghai.192.168.16.173

Run the following command to print the job log:

    arena logs -f pytorch-4-vgg16

Expected output:

    Model: vgg16
    Batch size: 64
    Number of GPUs: 4
    Running warmup...
    Running benchmark...
    Iter #0: 113.1 img/sec per GPU
    Iter #1: 109.5 img/sec per GPU
    Iter #2: 106.5 img/sec per GPU
    Iter #3: 108.5 img/sec per GPU
    Iter #4: 108.1 img/sec per GPU
    Iter #5: 111.2 img/sec per GPU
    Iter #6: 110.7 img/sec per GPU
    Iter #7: 109.8 img/sec per GPU
    Iter #8: 102.8 img/sec per GPU
    Iter #9: 107.9 img/sec per GPU
    Img/sec per GPU: 108.8 +-5.3
    Total img/sec on 4 GPU(s): 435.2 +-21.1

Example 2: Train ResNet50

Use topology-aware GPU scheduling to train ResNet50

Run the following command to submit a job to the cluster:

    arena submit mpi \
      --name=pytorch-topo-4-resnet50 \
      --gpus=1 \
      --workers=4 \
      --gang \
      --gputopology=true \
      --image=registry.cn-hangzhou.aliyuncs.com/kubernetes-image-hub/pytorch-benchmark:torch1.6.0-py3.7-cuda10.1 \
      "mpirun --allow-run-as-root -np "4" -bind-to none -map-by slot -x NCCL_DEBUG=INFO -x NCCL_SOCKET_IFNAME=eth0 -x LD_LIBRARY_PATH -x PATH --mca pml ob1 --mca btl_tcp_if_include eth0 --mca oob_tcp_if_include eth0 --mca orte_keep_fqdn_hostnames t --mca btl ^openib python /examples/pytorch_synthetic_benchmark.py --model=resnet50 --batch-size=64"

Run the following command to query the status of the job:

    arena get pytorch-topo-4-resnet50 --type mpijob

Expected output:

    Name:        pytorch-topo-4-resnet50
    Status:      RUNNING
    Namespace:   default
    Priority:    N/A
    Trainer:     MPIJOB
    Duration:    8s
    Instances:
      NAME                                    STATUS   AGE  IS_CHIEF  GPU(Requested)  NODE
      ----                                    ------   ---  --------  --------------  ----
      pytorch-topo-4-resnet50-launcher-x7r2n  Running  8s   true      0               cn-shanghai.192.168.16.173
      pytorch-topo-4-resnet50-worker-0        Running  8s   false     1               cn-shanghai.192.168.16.173
      pytorch-topo-4-resnet50-worker-1        Running  8s   false     1               cn-shanghai.192.168.16.173
      pytorch-topo-4-resnet50-worker-2        Running  8s   false     1               cn-shanghai.192.168.16.173
      pytorch-topo-4-resnet50-worker-3        Running  8s   false     1               cn-shanghai.192.168.16.173

Run the following command to print the job log:

    arena logs -f pytorch-topo-4-resnet50

Expected output:

    Model: resnet50
    Batch size: 64
    Number of GPUs: 4
    Running warmup...
    Running benchmark...
    Iter #0: 331.0 img/sec per GPU
    Iter #1: 330.6 img/sec per GPU
    Iter #2: 330.9 img/sec per GPU
    Iter #3: 330.4 img/sec per GPU
    Iter #4: 330.7 img/sec per GPU
    Iter #5: 330.8 img/sec per GPU
    Iter #6: 329.9 img/sec per GPU
    Iter #7: 330.5 img/sec per GPU
    Iter #8: 330.4 img/sec per GPU
    Iter #9: 329.7 img/sec per GPU
    Img/sec per GPU: 330.5 +-0.8
    Total img/sec on 4 GPU(s): 1321.9 +-3.2

Use regular GPU scheduling to train ResNet50

Run the following command to submit a job to the cluster:

    arena submit mpi \
      --name=pytorch-4-resnet50 \
      --gpus=1 \
      --workers=4 \
      --image=registry.cn-hangzhou.aliyuncs.com/kubernetes-image-hub/pytorch-benchmark:torch1.6.0-py3.7-cuda10.1 \
      "mpirun --allow-run-as-root -np "4" -bind-to none -map-by slot -x NCCL_DEBUG=INFO -x NCCL_SOCKET_IFNAME=eth0 -x LD_LIBRARY_PATH -x PATH --mca pml ob1 --mca btl_tcp_if_include eth0 --mca oob_tcp_if_include eth0 --mca orte_keep_fqdn_hostnames t --mca btl ^openib python /examples/pytorch_synthetic_benchmark.py --model=resnet50 --batch-size=64"

Run the following command to query the status of the job:

    arena get pytorch-4-resnet50 --type mpijob

Expected output:

    Name:        pytorch-4-resnet50
    Status:      RUNNING
    Namespace:   default
    Priority:    N/A
    Trainer:     MPIJOB
    Duration:    10s
    Instances:
      NAME                               STATUS   AGE  IS_CHIEF  GPU(Requested)  NODE
      ----                               ------   ---  --------  --------------  ----
      pytorch-4-resnet50-launcher-qw5k6  Running  10s  true      0               cn-shanghai.192.168.16.173
      pytorch-4-resnet50-worker-0        Running  10s  false     1               cn-shanghai.192.168.16.173
      pytorch-4-resnet50-worker-1        Running  10s  false     1               cn-shanghai.192.168.16.173
      pytorch-4-resnet50-worker-2        Running  10s  false     1               cn-shanghai.192.168.16.173
      pytorch-4-resnet50-worker-3        Running  10s  false     1               cn-shanghai.192.168.16.173

Run the following command to print the job log:

    arena logs -f pytorch-4-resnet50

Expected output:

    Model: resnet50
    Batch size: 64
    Number of GPUs: 4
    Running warmup...
    Running benchmark...
    Iter #0: 313.1 img/sec per GPU
    Iter #1: 312.8 img/sec per GPU
    Iter #2: 313.0 img/sec per GPU
    Iter #3: 312.2 img/sec per GPU
    Iter #4: 313.7 img/sec per GPU
    Iter #5: 313.2 img/sec per GPU
    Iter #6: 313.6 img/sec per GPU
    Iter #7: 313.0 img/sec per GPU
    Iter #8: 311.3 img/sec per GPU
    Iter #9: 313.6 img/sec per GPU
    Img/sec per GPU: 313.0 +-1.3
    Total img/sec on 4 GPU(s): 1251.8 +-5.3
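The four submit commands in the two examples differ only in the job name, the model, and the `--gang` and `--gputopology` flags. The following sketch folds them into one function to make that difference explicit. The function name `submit_benchmark` and its two arguments are hypothetical; the `arena submit mpi` flags, the image, and the `mpirun` command line are copied from the examples.

```shell
# submit_benchmark MODEL TOPO: hypothetical wrapper around the submit commands
# in the examples. MODEL is passed to --model (vgg16 or resnet50 in this
# topic). TOPO is "true" for topology-aware scheduling, anything else for
# regular GPU scheduling.
submit_benchmark() {
  model=$1
  topo=$2
  image=registry.cn-hangzhou.aliyuncs.com/kubernetes-image-hub/pytorch-benchmark:torch1.6.0-py3.7-cuda10.1
  name="pytorch-4-$model"

  # Flags shared by both scheduling modes.
  set -- --gpus=1 --workers=4
  if [ "$topo" = "true" ]; then
    # Topology-aware runs use a different job name and add the two extra flags.
    name="pytorch-topo-4-$model"
    set -- "$@" --gang --gputopology=true
  fi

  arena submit mpi \
    --name="$name" \
    "$@" \
    --image="$image" \
    "mpirun --allow-run-as-root -np 4 -bind-to none -map-by slot -x NCCL_DEBUG=INFO -x NCCL_SOCKET_IFNAME=eth0 -x LD_LIBRARY_PATH -x PATH --mca pml ob1 --mca btl_tcp_if_include eth0 --mca oob_tcp_if_include eth0 --mca orte_keep_fqdn_hostnames t --mca btl ^openib python /examples/pytorch_synthetic_benchmark.py --model=$model --batch-size=64"
}
```

Calling `submit_benchmark vgg16 true` and then `submit_benchmark vgg16 false` would submit the same two jobs as Example 1, which keeps the benchmark settings identical across the runs being compared.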

Performance comparison

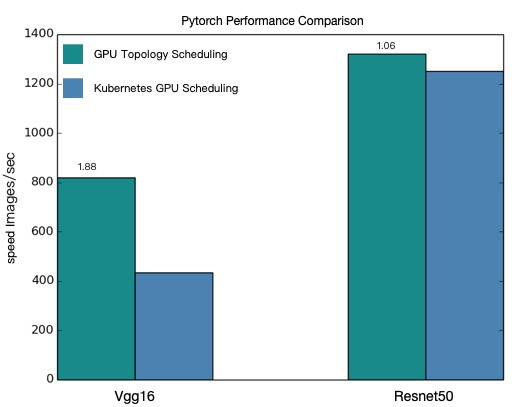

The following figure shows the performance comparison between topology-aware GPU scheduling and regular GPU scheduling based on the preceding examples.

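The figure shows that topology-aware GPU scheduling accelerates the PyTorch distributed jobs in both examples. Total throughput for VGG16 rises from 435.2 to 820.5 img/sec (about 1.9 times), and total throughput for ResNet50 rises from 1251.8 to 1321.9 img/sec (about 5.6%).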

The performance values in this topic are theoretical values. The performance of topology-aware GPU scheduling varies based on your model and cluster environment, so rely on the results that you measure in your own cluster. You can repeat the preceding steps to evaluate your models.
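When the steps are repeated for another model, the relative gain can be computed from the two "Total img/sec" values. The following is a minimal sketch with a hypothetical function name, `gain`. The inputs are the totals from the example logs in this topic.

```shell
# gain TOPO REGULAR: print the throughput ratio and the percentage gain of a
# topology-aware run (TOPO) over a regular run (REGULAR), both in img/sec.
gain() {
  awk -v t="$1" -v r="$2" \
    'BEGIN { printf "%.2fx (%.1f%% higher)\n", t / r, (t / r - 1) * 100 }'
}

# Total img/sec values taken from the example logs in this topic.
echo "VGG16:    $(gain 820.5 435.2)"    # VGG16:    1.89x (88.5% higher)
echo "ResNet50: $(gain 1321.9 1251.8)"  # ResNet50: 1.06x (5.6% higher)
```

The gap between the two models suggests that the benefit depends strongly on the workload, which is why measuring each model separately is worthwhile.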