The native Kubernetes ResourceQuota allocates resources statically, which can lead to low resource utilization in clusters. To resolve this issue, Container Service for Kubernetes (ACK) provides the capacity scheduling feature, which is built on the extension mechanism of the Kubernetes scheduling framework. Capacity scheduling uses elastic quota groups to guarantee each user's resource quota while allowing idle resources to be shared, which improves cluster resource utilization.

Prerequisites

An ACK managed Pro cluster that runs Kubernetes 1.20 or later is created. For more information about how to create a cluster, see Create an ACK managed cluster.

Key features

In a multi-user cluster, administrators allocate fixed amounts of resources to ensure that each user has enough. Traditionally, this is done with the native Kubernetes ResourceQuota, which allocates resources statically. However, users consume resources at different times and in different patterns, so some users run short of resources while others leave their quotas idle. As a result, overall resource utilization is low.

To resolve this issue, ACK provides capacity scheduling on the scheduler side, based on the scheduling framework extension mechanism. Capacity scheduling improves overall resource utilization through resource sharing while still guaranteeing each user's resource allocation. It provides the following features:

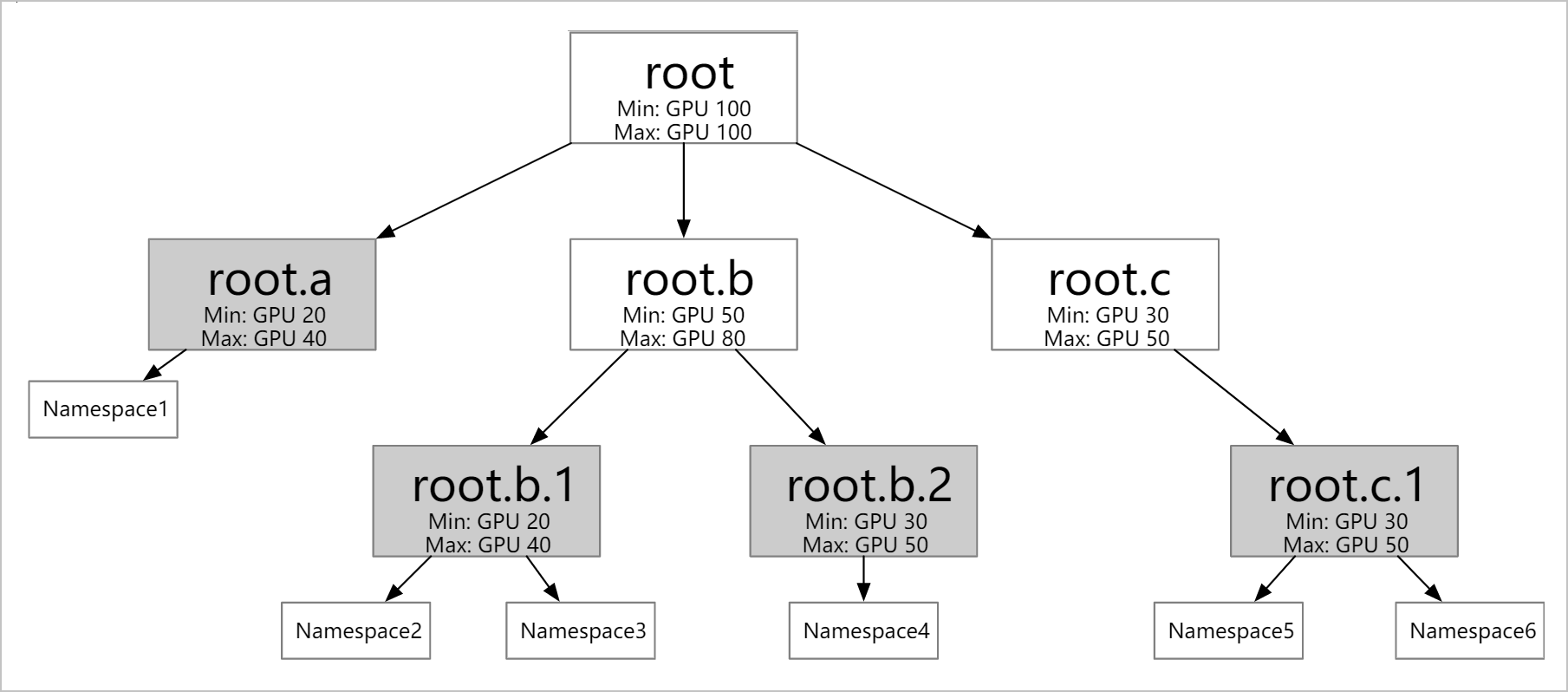

Support for hierarchical resource quotas: You can configure multiple levels of elastic quotas based on your business needs, for example, to mirror your company's organizational chart. A leaf of the elastic quota tree can correspond to multiple namespaces, but each namespace can belong to only one leaf.

Support for borrowing and reclaiming resources between elastic quotas.

Min: the amount of resources that is guaranteed for use. To ensure that these guarantees can be honored even when cluster resources are insufficient, the sum of the Min values of all users must not exceed the total resources of the cluster.

Max: the maximum amount of resources that can be used.

Workloads can borrow idle resource quotas from other users, but their total usage, including borrowed resources, cannot exceed Max. Unused Min quotas can be lent to others, but they are reclaimed when their owner needs them.

Support for multiple resource types: In addition to CPU and memory, you can configure extended resources such as GPUs, and any other resources that Kubernetes supports.

Support for attaching quotas to nodes: Use a ResourceFlavor to select nodes, and associate the ResourceFlavor with a quota in the ElasticQuotaTree. After the association, pods in that elastic quota can be scheduled only to the nodes selected by the ResourceFlavor.
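The following Python snippet is a minimal sketch of the Min and Max semantics described above. It splits a quota's usage into a guaranteed part (up to Min) and a borrowed part (above Min) that the scheduler may reclaim. The Quota class and the split_usage function are hypothetical and consider CPU only; they are not part of the ACK API. The sample values match root.a.1 in the example later in this topic.

```python
from dataclasses import dataclass


@dataclass
class Quota:
    """CPU-only view of one elastic quota (illustrative, not an ACK type)."""
    name: str
    min_cpu: int  # guaranteed amount
    max_cpu: int  # upper bound, borrowed resources included


def split_usage(quota: Quota, used_cpu: int) -> tuple[int, int]:
    """Split usage into (guaranteed, borrowed) vCPUs.

    Usage above Max is rejected because borrowing never lifts a quota past Max.
    The borrowed part is what the scheduler may reclaim when the lender needs
    its Min back.
    """
    if not 0 <= used_cpu <= quota.max_cpu:
        raise ValueError(f"{quota.name}: usage {used_cpu} is outside [0, {quota.max_cpu}]")
    guaranteed = min(used_cpu, quota.min_cpu)
    return guaranteed, used_cpu - guaranteed


if __name__ == "__main__":
    q = Quota("root.a.1", min_cpu=10, max_cpu=20)
    print(split_usage(q, 20))  # (10, 10): 10 guaranteed, 10 borrowed
    print(split_usage(q, 5))   # (5, 0): nothing borrowed
```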

Configuration example of capacity scheduling

In this example cluster, the node is an ecs.sn2.13xlarge machine with 56 vCPUs and 224 GiB of memory.

Create the following namespaces.

    kubectl create ns namespace1
    kubectl create ns namespace2
    kubectl create ns namespace3
    kubectl create ns namespace4

Create the corresponding elastic quota group based on the following YAML file:

In the YAML file above, specify the namespaces of each quota in the namespaces field and its child elastic quotas in the children field. The configuration must meet the following requirements:

In the same elastic quota, Min ≤ Max.

The sum of the Min values of the child elastic quotas must be less than or equal to the Min value of the parent quota.

The Min value of the root quota must equal its Max value, and both must be less than or equal to the total resources of the cluster.

Each namespace belongs to only one leaf. A leaf can contain multiple namespaces.
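The following Python sketch checks these requirements against a CPU-only model of the quota tree used in this example. QuotaNode, validate, and example_tree are hypothetical names.

Some values come from the steps in this example: the root value (40), the Min and Max of root.a.1 and root.a.2, and the Min of root.b.1 and root.b.2. The other values are assumptions, because the YAML file is not reproduced here:

- the Min and Max of root.a and root.b
- the Max of root.b.1 and root.b.2
- using the node's 56 vCPUs as the cluster capacity

```python
from dataclasses import dataclass, field


@dataclass
class QuotaNode:
    """CPU-only model of one quota in an ElasticQuotaTree (illustrative, not an ACK type)."""
    name: str
    min_cpu: int
    max_cpu: int
    namespaces: list[str] = field(default_factory=list)
    children: list["QuotaNode"] = field(default_factory=list)


def validate(root: QuotaNode, cluster_cpu: int) -> list[str]:
    """Return the rule violations found in the tree; an empty list means valid."""
    errors: list[str] = []
    # Root rule: Min equals Max, and neither exceeds the cluster's resources.
    if root.min_cpu != root.max_cpu:
        errors.append("root: Min must equal Max")
    if root.max_cpu > cluster_cpu:
        errors.append("root: Max exceeds cluster capacity")

    owner: dict[str, str] = {}  # namespace -> quota that claims it

    def walk(node: QuotaNode) -> None:
        if node.min_cpu > node.max_cpu:
            errors.append(f"{node.name}: Min > Max")
        if node.children and sum(c.min_cpu for c in node.children) > node.min_cpu:
            errors.append(f"{node.name}: sum of children's Min exceeds its Min")
        for ns in node.namespaces:
            if ns in owner:
                errors.append(f"{ns}: claimed by both {owner[ns]} and {node.name}")
            else:
                owner[ns] = node.name
        for child in node.children:
            walk(child)

    walk(root)
    return errors


def example_tree() -> QuotaNode:
    # Assumed values: Min/Max of root.a and root.b, and Max of root.b.1 and root.b.2.
    root_a = QuotaNode("root.a", 20, 40, children=[
        QuotaNode("root.a.1", 10, 20, ["namespace1"]),
        QuotaNode("root.a.2", 10, 20, ["namespace2"]),
    ])
    root_b = QuotaNode("root.b", 20, 40, children=[
        QuotaNode("root.b.1", 10, 20, ["namespace3"]),
        QuotaNode("root.b.2", 10, 20, ["namespace4"]),
    ])
    return QuotaNode("root", 40, 40, children=[root_a, root_b])


if __name__ == "__main__":
    print(validate(example_tree(), cluster_cpu=56))  # []
```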

Check whether the elastic quota group is created successfully.

    kubectl get ElasticQuotaTree -n kube-system

Expected output:

    NAME               AGE
    elasticquotatree   68s

Borrow idle resources

Deploy a service in namespace1 based on the following YAML file. The number of pod replicas is 5, and each pod requests 5 vCPUs.

Check the deployment status of pods in the cluster.

    kubectl get pods -n namespace1

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx1-744b889544-52dbg   1/1     Running   0          70s
    nginx1-744b889544-6l4s9   1/1     Running   0          70s
    nginx1-744b889544-cgzlr   1/1     Running   0          70s
    nginx1-744b889544-w2gr7   1/1     Running   0          70s
    nginx1-744b889544-zr5xz   0/1     Pending   0          70s

The cluster has idle resources (root.max.cpu=40). Therefore, when the CPU resources requested by pods in namespace1 exceed 10 vCPUs (min.cpu=10, configured for root.a.1), the pods can continue to borrow idle resources, up to 20 vCPUs (max.cpu=20, configured for root.a.1).

Any additional pod that would push the CPU requested by pods in namespace1 beyond 20 vCPUs (max.cpu=20) stays in the Pending state. Therefore, of the 5 pods, 4 are in the Running state and 1 is in the Pending state.
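This pod count follows from simple arithmetic. The hypothetical helper pods_that_fit below reproduces it. It assumes that CPU is the only constrained resource and ignores node-level fragmentation; it is not an ACK function.

```python
from typing import Optional


def pods_that_fit(replicas: int, cpu_per_pod: int, quota_max: int,
                  quota_used: int = 0, root_idle: Optional[int] = None) -> int:
    """How many identical replicas can run without exceeding the quota's Max.

    If root_idle is given, the pods must also fit into the idle capacity that is
    left under the root quota (the resources that can be borrowed).
    """
    headroom = quota_max - quota_used
    if root_idle is not None:
        headroom = min(headroom, root_idle)
    return max(0, min(replicas, headroom // cpu_per_pod))


# namespace1: 5 replicas x 5 vCPUs = 25 vCPUs requested; root.a.1 allows at most 20.
running = pods_that_fit(replicas=5, cpu_per_pod=5, quota_max=20, root_idle=40)
print(running, "Running,", 5 - running, "Pending")  # 4 Running, 1 Pending
```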

Deploy a service in namespace2 based on the following YAML file. The number of pod replicas is 5, and each pod requests 5 vCPUs.

Check the deployment status of pods in the cluster.

    kubectl get pods -n namespace1

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx1-744b889544-52dbg   1/1     Running   0          111s
    nginx1-744b889544-6l4s9   1/1     Running   0          111s
    nginx1-744b889544-cgzlr   1/1     Running   0          111s
    nginx1-744b889544-w2gr7   1/1     Running   0          111s
    nginx1-744b889544-zr5xz   0/1     Pending   0          111s

    kubectl get pods -n namespace2

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx2-556f95449f-4gl8s   1/1     Running   0          111s
    nginx2-556f95449f-crwk4   1/1     Running   0          111s
    nginx2-556f95449f-gg6q2   0/1     Pending   0          111s
    nginx2-556f95449f-pnz5k   1/1     Running   0          111s
    nginx2-556f95449f-vjpmq   1/1     Running   0          111s

Similar to nginx1, the pods in namespace2 can borrow idle resources in the cluster (root.max.cpu=40) after their CPU requests exceed 10 vCPUs (min.cpu=10, configured for root.a.2), up to 20 vCPUs (max.cpu=20, configured for root.a.2).

Any additional pod that would push the CPU requested by pods in namespace2 beyond 20 vCPUs stays in the Pending state. Therefore, of the 5 pods, 4 are in the Running state and 1 is in the Pending state.

At this point, the pods in namespace1 and namespace2 together occupy 40 vCPUs, which reaches the limit configured for root (root.max.cpu=40).
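The following sketch makes the same CPU-only assumptions. It admits pods greedily for each quota until either the quota's Max or the root capacity is reached. It models borrowing only (no reclaiming), skips the intermediate root.a level, and assumes a Max of 20 for root.b.1; place_pods is a hypothetical helper. The result shows that no idle capacity remains under root. A newly arriving quota therefore cannot start pods unless borrowed resources are reclaimed.

```python
def place_pods(demands: dict[str, int], quota_max: dict[str, int],
               root_cpu: int, cpu_per_pod: int) -> dict[str, int]:
    """Admit identical pods quota by quota, in dict order, without any reclaiming."""
    used = {name: 0 for name in demands}
    root_used = 0
    for name, replicas in demands.items():
        for _ in range(replicas):
            fits_quota = used[name] + cpu_per_pod <= quota_max[name]
            fits_root = root_used + cpu_per_pod <= root_cpu
            if not (fits_quota and fits_root):
                break  # pods are identical, so the remaining replicas stay Pending too
            used[name] += cpu_per_pod
            root_used += cpu_per_pod
    return used


QUOTA_MAX = {"root.a.1": 20, "root.a.2": 20, "root.b.1": 20}  # root.b.1 Max is assumed

two = place_pods({"root.a.1": 5, "root.a.2": 5}, QUOTA_MAX, root_cpu=40, cpu_per_pod=5)
print(two, "idle:", 40 - sum(two.values()))  # {'root.a.1': 20, 'root.a.2': 20} idle: 0

# Without reclaiming, a third quota gets nothing once root is full.
three = place_pods({"root.a.1": 5, "root.a.2": 5, "root.b.1": 5},
                   QUOTA_MAX, root_cpu=40, cpu_per_pod=5)
print(three["root.b.1"])  # 0
```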

Return borrowed resources

Deploy a service in namespace3 based on the following YAML file. The number of pod replicas is 5, and each pod requests 5 vCPUs.

Run the following commands to check the deployment status of pods in the cluster:

    kubectl get pods -n namespace1

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx1-744b889544-52dbg   1/1     Running   0          6m17s
    nginx1-744b889544-cgzlr   1/1     Running   0          6m17s
    nginx1-744b889544-nknns   0/1     Pending   0          3m45s
    nginx1-744b889544-w2gr7   1/1     Running   0          6m17s
    nginx1-744b889544-zr5xz   0/1     Pending   0          6m17s

    kubectl get pods -n namespace2

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx2-556f95449f-crwk4   1/1     Running   0          4m22s
    nginx2-556f95449f-ft42z   1/1     Running   0          4m22s
    nginx2-556f95449f-gg6q2   0/1     Pending   0          4m22s
    nginx2-556f95449f-hfr2g   1/1     Running   0          3m29s
    nginx2-556f95449f-pvgrl   0/1     Pending   0          3m29s

    kubectl get pods -n namespace3

Expected output:

NAME READY STATUS RESTARTS AGE nginx3-578877666-msd7f 1/1 Running 0 4m nginx3-578877666-nfdwv 0/1 Pending 0 4m10s nginx3-578877666-psszr 0/1 Pending 0 4m11s nginx3-578877666-xfsss 1/1 Running 0 4m22s nginx3-578877666-xpl2p 0/1 Pending 0 4m10sThe

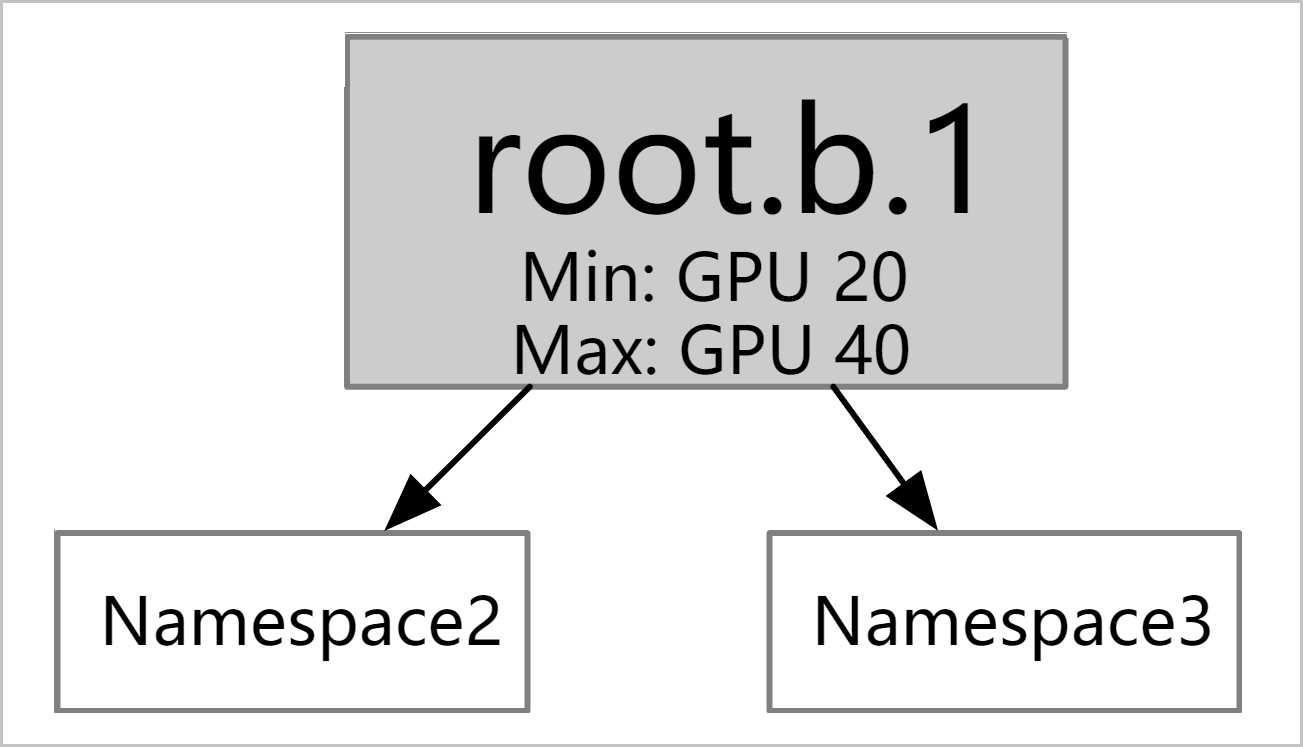

minparameter of the elastic quotaroot.b.1fornginx3is set to10. To ensure that the configuredminresources are available, the scheduler will return the pod resources that were previously borrowed fromroot.bunderroot.a. This allowsnginx3to obtain at least 10 (min.cpu=10) CPU cores to ensure the operation.The scheduler will comprehensively consider factors such as the priority, availability, and creation time of jobs under

root.a, and select corresponding pods to return previously occupied resources (10 vCPUs). Therefore, afternginx3obtains the 10 (min.cpu=10) CPU cores, 2 pods are in a Running state, and the other 3 remain in a Pending state.Deploy a service

nginx4innamespace4according to the following YAML file. The number of replicas for the pod is 5, and each pod requests 5 CPU cores.Run the following command to check the deployment status of pods in the cluster:

    kubectl get pods -n namespace1

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx1-744b889544-cgzlr   1/1     Running   0          8m20s
    nginx1-744b889544-cwx8l   0/1     Pending   0          55s
    nginx1-744b889544-gjkx2   0/1     Pending   0          55s
    nginx1-744b889544-nknns   0/1     Pending   0          5m48s
    nginx1-744b889544-zr5xz   1/1     Running   0          8m20s

    kubectl get pods -n namespace2

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx2-556f95449f-cglpv   0/1     Pending   0          3m45s
    nginx2-556f95449f-crwk4   1/1     Running   0          9m31s
    nginx2-556f95449f-gg6q2   1/1     Running   0          9m31s
    nginx2-556f95449f-pvgrl   0/1     Pending   0          8m38s
    nginx2-556f95449f-zv8wn   0/1     Pending   0          3m45s

    kubectl get pods -n namespace3

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx3-578877666-msd7f    1/1     Running   0          8m46s
    nginx3-578877666-nfdwv    0/1     Pending   0          8m56s
    nginx3-578877666-psszr    0/1     Pending   0          8m57s
    nginx3-578877666-xfsss    1/1     Running   0          9m8s
    nginx3-578877666-xpl2p    0/1     Pending   0          8m56s

    kubectl get pods -n namespace4

Expected output:

    NAME                      READY   STATUS    RESTARTS   AGE
    nginx4-754b767f45-g9954   1/1     Running   0          4m32s
    nginx4-754b767f45-j4v7v   0/1     Pending   0          4m32s
    nginx4-754b767f45-jk2t7   0/1     Pending   0          4m32s
    nginx4-754b767f45-nhzpf   0/1     Pending   0          4m32s
    nginx4-754b767f45-tv5jj   1/1     Running   0          4m32s

Similar to nginx3, the min parameter of root.b.2, the elastic quota of nginx4, is set to 10. The scheduler therefore reclaims another 10 vCPUs that pods under root.a borrowed from root.b. Again, it selects the pods that release resources based on factors such as priority, availability, and creation time. After nginx4 obtains its 10 vCPUs (min.cpu=10), 2 of its pods are in the Running state and the other 3 remain in the Pending state.

At this point, every elastic quota in the cluster uses exactly the guaranteed resources set by its min parameter.
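The reclaim steps in this section can be sketched as follows. All names are illustrative and CPU is the only resource considered.

cpu_to_reclaim computes how many borrowed vCPUs must be returned so that a new quota reaches its Min. The amount is the quota's shortfall below Min minus the capacity that is still idle.

The donor selection is a hypothetical stand-in: it takes one pod at a time from the quota that uses the most above its Min. The real scheduler instead weighs priority, availability, and creation time.

```python
CPU_PER_POD = 5
ROOT_CPU = 40   # root Max (= Min) in the example
QUOTA_MIN = 10  # min.cpu of every leaf quota in the example


def cpu_to_reclaim(root_used: int, requester_min: int, requester_used: int,
                   root_cpu: int = ROOT_CPU) -> int:
    """vCPUs that must be taken back from borrowers so the requester reaches its Min."""
    shortfall = max(0, requester_min - requester_used)  # distance to the guarantee
    idle = root_cpu - root_used                         # capacity nobody is using
    return max(0, shortfall - idle)


def simulate_reclaim() -> tuple[dict[str, int], list[int]]:
    # State after the borrowing steps: namespace1 and namespace2 each use 20 vCPUs.
    usage = {"root.a.1": 20, "root.a.2": 20}
    reclaimed_per_step = []
    for newcomer in ("root.b.1", "root.b.2"):  # nginx3, then nginx4
        root_used = sum(usage.values())
        idle = ROOT_CPU - root_used
        need = cpu_to_reclaim(root_used, QUOTA_MIN, requester_used=0)
        reclaimed = 0
        while reclaimed < need:
            # Only usage above Min is borrowed, so only those quotas give back pods.
            borrowers = [q for q in usage if usage[q] > QUOTA_MIN]
            if not borrowers:
                break
            # Hypothetical policy: take one pod from the largest borrower.
            donor = max(borrowers, key=usage.get)
            usage[donor] -= CPU_PER_POD
            reclaimed += CPU_PER_POD
        usage[newcomer] = min(QUOTA_MIN, idle + reclaimed)
        reclaimed_per_step.append(reclaimed)
    return usage, reclaimed_per_step


if __name__ == "__main__":
    final, steps = simulate_reclaim()
    print(steps)  # [10, 10]
    print({q: cpu // CPU_PER_POD for q, cpu in final.items()})  # 2 running pods per quota
```

With this stand-in policy, the intermediate state after the nginx3 step (15, 15, and 10 vCPUs) happens to match the pod counts shown above. The real scheduler may select different pods.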

ResourceFlavor configuration example

Prerequisites

The ResourceFlavor CRD is installed. For more information, see ResourceFlavorCRD. The ACK scheduler does not install it by default.

Only the nodeLabels field of a ResourceFlavor takes effect.

The scheduler version is higher than 6.9.0. For the component release notes, see kube-scheduler. For information about how to upgrade the component, see Components.

ResourceFlavor is a Kubernetes custom resource, defined by a CustomResourceDefinition (CRD), that binds elastic quotas to nodes through node labels (nodeLabels). When a ResourceFlavor is associated with an elastic quota, pods in that quota are limited by the total resources of the quota. They can also be scheduled only to nodes that match the nodeLabels.

ResourceFlavor example

The following is an example of a ResourceFlavor:

    apiVersion: kueue.x-k8s.io/v1beta1
    kind: ResourceFlavor
    metadata:
      name: "spot"
    spec:
      nodeLabels:
        instance-type: spot

Example of associating an elastic quota

To associate an elastic quota with a ResourceFlavor, declare the ResourceFlavor in the attributes field of that quota in the ElasticQuotaTree. The following code shows an example.

    apiVersion: scheduling.sigs.k8s.io/v1beta1
    kind: ElasticQuotaTree
    metadata:
      name: elasticquotatree
      namespace: kube-system
    spec:
      root:
        children:
        - attributes:
            resourceflavors: spot  # associates this quota with the ResourceFlavor named spot
          max:
            cpu: 99
            memory: 40Gi
            nvidia.com/gpu: 10
          min:
            cpu: 99
            memory: 40Gi
            nvidia.com/gpu: 10
          name: child
          namespaces:              # pods in these namespaces use the child quota
          - default
        max:
          cpu: 999900
          memory: 400000Gi
          nvidia.com/gpu: 100000
        min:
          cpu: 999900
          memory: 400000Gi
          nvidia.com/gpu: 100000
        name: root

After you submit this configuration, pods that belong to the child quota can be scheduled only to nodes that have the instance-type: spot label.
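Conceptually, this association acts as a node filter. A node is eligible only if it carries every key/value pair in the ResourceFlavor's nodeLabels, similar to a Kubernetes nodeSelector. The following Python sketch illustrates that check. The node names and labels are hypothetical, and the sketch does not describe how the ACK scheduler implements the filter internally.

```python
def matches_flavor(node_labels: dict[str, str], flavor_node_labels: dict[str, str]) -> bool:
    """True if the node carries every key/value pair listed in the flavor's nodeLabels."""
    return all(node_labels.get(key) == value for key, value in flavor_node_labels.items())


def eligible_nodes(nodes: dict[str, dict[str, str]],
                   flavor_node_labels: dict[str, str]) -> list[str]:
    """Names of the nodes that pods in the associated quota may be scheduled to."""
    return [name for name, labels in nodes.items() if matches_flavor(labels, flavor_node_labels)]


spot_flavor = {"instance-type": "spot"}  # nodeLabels of the "spot" ResourceFlavor above

# Hypothetical nodes, for illustration only.
nodes = {
    "node-a": {"instance-type": "spot", "zone": "z1"},
    "node-b": {"instance-type": "on-demand"},
    "node-c": {},
}
print(eligible_nodes(nodes, spot_flavor))  # ['node-a']
```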

References

For the release notes of kube-scheduler, see kube-scheduler.

kube-scheduler supports gang scheduling, which requires that a group of associated pods be scheduled successfully at the same time; otherwise, none of them is scheduled. Gang scheduling is suitable for big data processing workloads such as Spark and Hadoop jobs. For more information, see Work with gang scheduling.