By Digoal.

PipelineDB is a PostgreSQL-based stream computing database written in pure C. It features high efficiency, and a single 32-core server that can process up to 25.056 billion transactions per day. PipelineDB also boasts several of the powerful functions of PostgreSQL and, as a result of its great performance and capabilities, is helping make stream-computing databases popular.

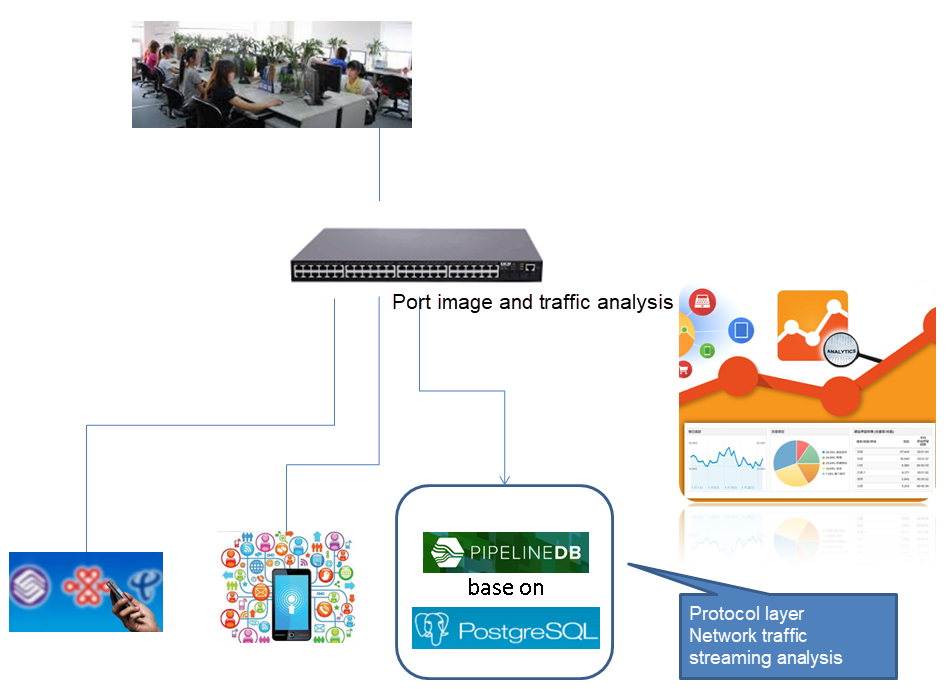

PipelineDB is widely applied in Internet-of-Things scenarios, with increasing numbers of users migrating their systems from other stream computing platforms to PipelineDB.

PipelineDB is relatively easy to use and serves as an efficient database for developers. To use it, you need to first define a stream, and then define the corresponding transform (or the event trigger module) and continuous view (real-time statistics module) based on the stream. After that, you can insert data into the stream. The transform and continuous view will subsequently process the data in the stream in real time. (We will go over this again in this article, of course.)

It is also worth noting that all of PipelineDB's interfaces support SQL operations, which makes PipelineDB that much more convenient. You can use PipelineDB for streaming scenarios where you can define things at any time.

Streams are very much the foundation for PipelineDB, and Continuous Views and Transforms are measures for processing data in streams. Streams can be understood as an abstraction by which clients can push time-series data through Continuous Views. For the same data, you need to define only one stream and write only one copy of data.

To better illustrate this point, consider this: If the multidimensional statistics of data can be completed in one SQL statement (such as one-dimension computing or multi-dimensional computing that supports windows), you only need to define one continuous view or transform. If computing cannot be completed in one SQL statement, then you'll need to define multiple continuous views or transforms. Next, if there are multiple data sources (such as, when different tables have been designed), you'll need to define different streams.

Continuous Views are another one of the fundamental abstractions in PipelineDB, that are much like a regular view, but they select from a combination of streams and tables as inputs and incrementally update in real time as new data is written.

Continuous Views serve as a measure to define a query for statistical analysis. The commands select id, count(*), avg(x), and ... from stream_1 group by ... all belong to a continuous view.

After defining a continuous view, you can insert data into the stream (such as stream_1), and the continuous view continues to collect statistics incrementally. You only need to query the continuous view to check the real-time statistical results.

The database stores real-time statistical results, which are actually merged and persisted in an incremental manner in the memory.

Unlike a continuous view, a transform command is used to trigger an event, or continuously transform incoming time-series data, but does not need to store data. However, you can set conditions for a transform. When any record meets the conditions, an event is triggered.

For example, when a particular monitored sensor value exceeds its value range threshold you set, an alarm is triggered (for example, an alarm notification is sent to a specified server through a REST API), or an alarm is recorded (through the trigger function).

PipelineDB inherits the excellent scalability of PostgreSQL. For example, PipelineDB supports functions related to probability statistics, such as high-level programming language (HLL). These functions are powerful and easy to use; for example, they can be used to count the unique visitors (UV) to a website, the number of times a unique vehicle license number passes through a traffic light, or the radiation radius of a base station based on the mobile phone signal.

Given that data in many scenarios has a specific validity period or is valid within a particular period of time (or time window), PipelineDB provides a sliding window feature, which allows you to define the data validity period for your convenience.

For example, you can collect statistics for the most recent minute in the time window. Next, if you need a heat map that only shows the heat of the last minute, rather than historical data, you can re-define the sliding window, so that less data is retained, lower requirements are imposed on the server. Doing with will also in turn make for a higher efficiency.

When it comes to JOINs, support differs by type. First, stream-stream JOINs, which will be supported in future versions, can be currently implemented indirectly through transforms. On the other hand, stream-table JOINs are supported. To see some more support types, check out this page of the PipelineDB documentation.

PipelineDB is applicable to really any sort of scenario that requires stream processing. To illustrate this, consider the specific scenarios below:

PipelineDB can be used for the stream processing of the data reported by traffic sensors (such as road sensors and traffic lights) to show traffic conditions (completed in a continuous view) in real time, such as flows or fluctuations in traffic. It can also be used for dynamic triggering of event responses (completed in a transform), such as for traffic accidents.

PipelineDB can be used for stream monitoring of sensor data. With these applications, PipelineDB can be used to show when the water quality changes (for example, when the water becomes polluted), an event response is dynamically triggered (completed in a transform).

PipelineDB can also be used in Internet of Vehicles (IoV) applications. For example, PipelineDB can work with PostGIS to track the vehicle locations in real time, merge trajectories, and dynamically draw the dashboard (time-sharing and regional vehicle distribution).

PipelineDB can be used to dynamically track the data of packages in each foot of their journey, or aggregate results, or complete queries without the need to filter multiple entries from a large mass of data (reducing discrete scanning).

PipelineDB can be used for real-time financial data processing. For example, you set the price at which a stock can be bought or sold and use the event processing mechanism of transform to quickly buy or sell a stock. Then, you can use PipelineDB to perform some mathematical model operations on stock index data in real time, output the calculation results in real time, and draw the dashboard.

PipelineDB can be used in criminal investigations. For example, when the license plate of a suspicious vehicle is known, you can use PipelineDB to perform stream processing on license plate information captured and uploaded by video surveillance systems. Based on rules set by transforms, when a suspicious vehicle is identified, an event is triggered, allowing you to quickly see its trajectory.

PipelineDB can be used for the real-time analysis of app feeds. Feeds are configured in many apps to facilitate the tracking of users' behaviors or to follow business processing logic. If the access traffic is heavy, the data volume may be huge as a result. Therefore, before stream processing using PipelineDB can be applied, you may need to first have the data collected and stored in a large data warehouse for offline analysis.

However, offline analysis sometimes may not be enough, such as in a scenario when you need to provide dynamic recommendations for users or carry out promotions based on the real-time behaviors of users or the dashboard. In such cases, real-time stream processing is needed.

PipelineDB can be used for traffic analysis on the network protocol layer. For example, you can use PipelineDB for traffic analysis on office networks, carrier gateways, and some servers.

Beyond these scenarios, there are many more scenarios in which you could use PipelineDB.

For example, anything that requires quick response services, or could benefit from quick response times time, or needs real-time statistics or conversion and event tracking, or could be combined with relevant notifications or subscription models or cross-platform data exchange models.

PipelineDB ultimately came from PostgreSQL, which was developed at the University of California at Berkeley, and therefore inherits many of its functions and capabilities as a result. To be clear, this is a very good thing. PostgreSQL has a solid theoretical foundation; specifically, through 43 years of evolution, PostgreSQL has become the leader in the field in terms of functionality, performance, and scalability to name but three things.

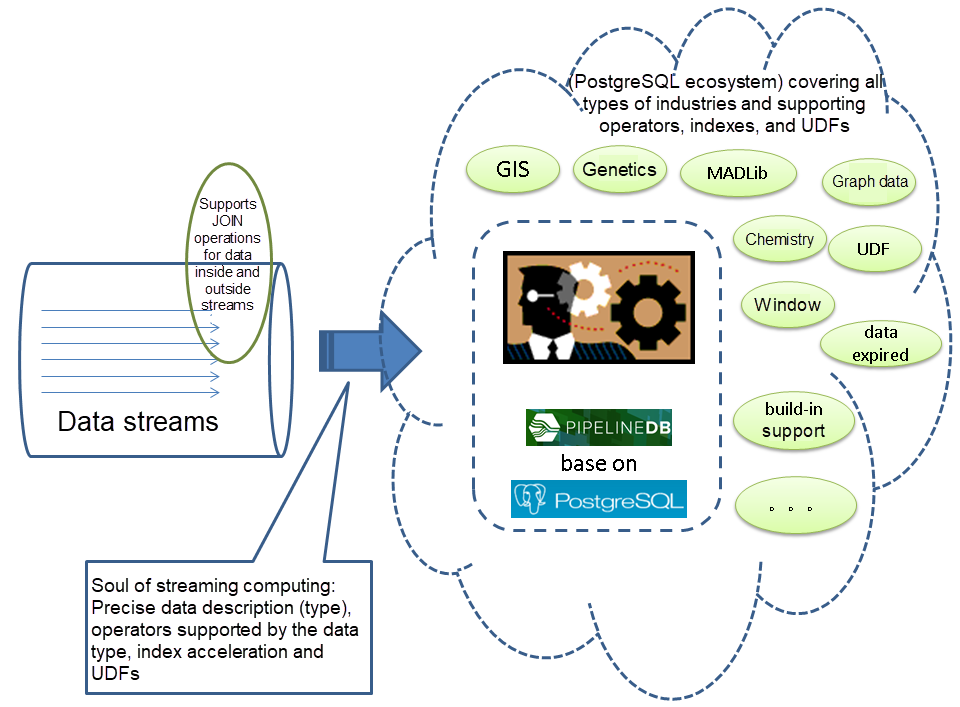

In many ways, computing is the soul of stream computing. And algorithms and their supported functions are the body. Consider the following figure:

In general, PostgreSQL provides extremely rich statistical dimensions and data types. What exactly does that mean? Well, to learn about the built-in data types of PostgreSQL, check out this page. And when it comes to built-in aggregation, window, and mathematical functions, you can check out this page to learn more.

But, to put things short here, PostgreSQL supports several extensions, of which common extensions are MADLib, PostGIS, Route planning, Wavelet, Genetics, Chemistry. Of course, many more extensions are available. All of this is thanks to the BSD-like license of PostgreSQL, which has allowed the PostgreSQL ecosystem to be large.

Of course, with PipelineDB built from PostgreSQL, it also shares these advantages. And, to sum things up, PipelineDB can be used for stream processing in almost any and really all of the scenarios that you could probably think up, making it extremely powerful.

First, you'll want to set up and develop PipelineDB, and then we can test it and deploy it.

Before you can set up PipelineDB, make sure that you have already set up PostgreSQL on your system. For this, you'll want to check that you downloaded the appropriate version, configured your OS accordingly, including configuring the resource limits and firewall, so on. Then, you should have deployed your file system, and also initialized and enabled the database, configured PostgreSQL, so on.

After this, you'll want to deploy the dependency. As part of this, you'll want to install ZeroMQ, which you can find here. Then, you'll want to run the commands below to set up ZeroMQ.

wget https://github.com/zeromq/libzmq/releases/download/v4.2.0/zeromq-4.2.0.tar.gz

tar -zxvf zeromq-4.2.0.tar.gz

cd zeromq-4.2.0

./configure

make

make install

vi /etc/ld.so.conf

/usr/local/lib

ldconfig Next, you'll want to update LibCheck if the operating system version is Red Hat Enterprise Linux (RHEL) 6 or earlier. You can do a Delete Check of an earlier version with the following command:

yum remove check Now, you'll want to use the Install Check command to install the version you'll want. For reference, check out this document and its release information.

wget http://downloads.sourceforge.net/project/check/check/0.10.0/check-0.10.0.tar.gz?r=&ts=1482216800&use_mirror=ncu

tar -zxvf check-0.10.0.tar.gz

cd check-0.10.0

./configure

make

make install Now you'll want to download PipelineDB. You can do so with the following command:

wget https://github.com/pipelinedb/pipelinedb/archive/0.9.6.tar.gz

tar -zxvf 0.9.6.tar.gz

cd pipelinedb-0.9.6 You'll need to fix PipelineDB bugs if the operating system is RHEL 6 or CentOS 6. Then, adjust check.h if the operating system is RHEL 6. You can do so with the following:

vi src/test/unit/test_hll.c

vi src/test/unit/test_tdigest.c

vi src/test/unit/test_bloom.c

vi src/test/unit/test_cmsketch.c

vi src/test/unit/test_fss.c

Add check.h.

#include "check.h" Also, you'll need to correct the libzmq.a path error if the operating system is RHEL 6. (If is of course why it's better to have a newer version.) You can do so with the following command:

vi src/Makefile.global.in

LIBS := -lpthread /usr/local/lib/libzmq.a -lstdc++ $(LIBS) Now correct the test-decoding error with the following command:

cd contrib/test_decoding

mv specs test

cd ../../ And compile PipelineDB with the following command:

export C_INCLUDE_PATH=/usr/local/include:C_INCLUDE_PATH

export LIBRARY_PATH=/usr/local/lib:$LIBRARY_PATH

export USE_NAMED_POSIX_SEMAPHORES=1

LIBS=-lpthread CC="/home/digoal/gcc6.2.0/bin/gcc" CFLAGS="-O3 -flto" ./configure --prefix=/home/digoal/pgsql_pipe

make world -j 32

make install-world Now, you'll want to initialize the cluster. For this, the first thing you'll want to do is to configure the environment variables:

vi env_pipe.sh

export PS1="$USER@`/bin/hostname -s`-> "

export PGPORT=$1

export PGDATA=/$2/digoal/pg_root$PGPORT

export LANG=en_US.utf8

export PGHOME=/home/digoal/pgsql_pipe

export LD_LIBRARY_PATH=/home/digoal/gcc6.2.0/lib:/home/digoal/gcc6.2.0/lib64:/home/digoal/python2.7.12/lib:$PGHOME/lib:/lib64:/usr/lib64:/usr/local/lib64:/lib:/usr/lib:/usr/local/lib:$LD_LIBRARY_PATH

export PATH=/home/digoal/cmake3.6.3/bin:/home/digoal/gcc6.2.0/bin:/home/digoal/python2.7.12/bin:/home/digoal/cmake3.6.3/bin:$PGHOME/bin:$PATH:.

export DATE=`date +"%Y%m%d%H%M"`

export MANPATH=$PGHOME/share/man:$MANPATH

export PGHOST=$PGDATA

export PGUSER=postgres

export PGDATABASE=pipeline

alias rm='rm -i'

alias ll='ls -lh'

unalias vi Assume that the port is 1922 and the directory is /u01:

. ./env_pipe.sh 1922 u01 Now, you can initialize clusters with the following command:

pipeline-init -D $PGDATA -U postgres -E SQL_ASCII --locale=C Now, you'll want to modify configuration. You can do so with the following:

cd $PGDATA

vi pipelinedb.conf

listen_addresses = '0.0.0.0'

port = 1922

max_connections = 2000

superuser_reserved_connections = 13

unix_socket_directories = '.'

shared_buffers = 64GB

maintenance_work_mem = 2GB

dynamic_shared_memory_type = posix

vacuum_cost_delay = 0

bgwriter_delay = 10ms

bgwriter_lru_maxpages = 1000

bgwriter_lru_multiplier = 10.0

max_worker_processes = 128

wal_level = minimal

synchronous_commit = off

full_page_writes = off

wal_buffers = 2047MB

wal_writer_delay = 10ms

max_wal_size = 64GB

min_wal_size = 32GB

checkpoint_timeout = 55min

checkpoint_completion_target = 0.1

random_page_cost = 1.2

effective_cache_size = 400GB

log_destination = 'csvlog'

logging_collector = on

log_truncate_on_rotation = on

log_checkpoints = on

log_connections = on

log_disconnections = on

log_error_verbosity = verbose

log_timezone = 'PRC'

autovacuum = on

log_autovacuum_min_duration = 0

autovacuum_vacuum_scale_factor = 0.05

autovacuum_analyze_scale_factor = 0.1

autovacuum_vacuum_cost_delay = 0

autovacuum_vacuum_cost_limit = 0

datestyle = 'iso, mdy'

timezone = 'PRC'

lc_messages = 'C'

lc_monetary = 'C'

lc_numeric = 'C'

lc_time = 'C'

default_text_search_config = 'pg_catalog.english'

continuous_query_combiner_synchronous_commit = off

continuous_query_combiner_work_mem = 1GB

continuous_view_fillfactor = 50

continuous_query_max_wait = 10

continuous_query_commit_interval = 10

continuous_query_batch_size = 50000

continuous_query_num_combiners = 24

continuous_query_num_workers = 12 Below is the configuration added by PipelineDB:

#------------------------------------------------------------------------------

# PIPELINEDB OPTIONS

#------------------------------------------------------------------------------

# synchronization level for combiner commits; off, local, remote_write, or on

continuous_query_combiner_synchronous_commit = off

# maximum amount of memory to use for combiner query executions

continuous_query_combiner_work_mem = 1GB

# the default fillfactor to use for continuous views

continuous_view_fillfactor = 50

# the time in milliseconds a continuous query process will wait for a batch

# to accumulate

continuous_query_max_wait = 10

# time in milliseconds after which a combiner process will commit state to

# disk

continuous_query_commit_interval = 10

# the maximum number of events to accumulate before executing a continuous query

# plan on them

continuous_query_batch_size = 50000

# the number of parallel continuous query combiner processes to use for

# each database

continuous_query_num_combiners = 24

# the number of parallel continuous query worker processes to use for

# each database

continuous_query_num_workers = 12

# allow direct changes to be made to materialization tables?

#continuous_query_materialization_table_updatable = off

# synchronization level for stream inserts

#stream_insert_level = sync_read

# continuous views that should be affected when writing to streams.

# it is string with comma separated values for continuous view names.

#stream_targets = ''

# the default step factor for sliding window continuous queries (as a percentage

# of the total window size)

#sliding_window_step_factor = 5

# allow continuous queries?

#continuous_queries_enabled = on

# allow anonymous statistics collection and version checks?

#anonymous_update_checks = on Next, you'll want to start PipelineDB. You can do so with the command below:

pipeline-ctl start Now, let's go into the connection method. We'll use the same method for connecting PostgreSQL to connect PipelineDB because PipelineDB is compatible with and built off of PostgreSQL.

psql

psql (9.5.3)

Type "help" for help.

pipeline=# \dt

No relations found.

pipeline=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+----------+-----------+---------+-------+-----------------------

pipeline | postgres | SQL_ASCII | C | C |

template0 | postgres | SQL_ASCII | C | C | =c/postgres +

| | | | | postgres=CTc/postgres

template1 | postgres | SQL_ASCII | C | C | =c/postgres +

| | | | | postgres=CTc/postgres

(3 rows)

pipeline=# First to start with the testing steps, you'll want to create a stream structure. You can do so with the command below. Note that, for this command, the parameter id is the KEY, and the parameter val is the stored value. These values are aggregated by ID for statistics.

CREATE STREAM s1 (id int, val int); Now you'll want to create a continuous view. The continuous view will be used to collect statistics in several common dimensions, including count, avg, min, max, and sum, as we discussed previously.

CREATE CONTINUOUS VIEW cv1 AS

SELECT id,count(*),avg(val),min(val),max(val),sum(val)

FROM s1 GROUP BY id; PipelineDB can be used for stream processing in almost all scenarios that you can think of and greatly improves development efficiency. So, for the next neck of this, you'll want to activate stream computing. You can do so with the activate ; command:

Now, you'll want to insert data for stress testing the system we've create. For this, we'll want to conduct a test on one million random groups, and insert random values, which each are equal to or less than 5,000,000.

vi test.sql

\setrandom id 1 1000000

\setrandom val 1 5000000

insert into s1(id,val) values (:id, :val); Now, activate 1000 connections, and start the stress testing to process with about 0.24 million transactions per second.

pgbench -M prepared -n -r -P 1 -f ./test.sql -c 1000 -j 1000 -T 100

...

progress: 2.0 s, 243282.2 tps, lat 4.116 ms stddev 5.182

progress: 3.0 s, 237077.6 tps, lat 4.211 ms stddev 5.794

progress: 4.0 s, 252376.8 tps, lat 3.967 ms stddev 4.998

... If the server has many hard disks and the CPU performance is high, you can deploy two or more PipelineDB instances on one server to distribute traffic among them. For example, if you deploy two PipelineDB instances on a 32-core server, the stream processing capability can reach 0.29 million transactions per second and 25.056 billion transactions per day.

When two PipelineDB instances are deployed, we recommend that you use NUMACTL for control and bind the two PipelineDB instances with two CPUs and memories each. For example, you could do so with this below command:

numactl --physcpubind=0-31 pipeline-ctl startDoing so will improve performance, providing a rather fair result of a stable 25.056 billion transactions per day. Next, you can consider using JStorm framework, which can also bring you great results. In fact, if you decide not to use it, you may need to make a hardware investment that is dozens or even hundreds of times the usual price of PipelineDB to achieve the same results.

Although PipelineDB delivers powerful performance (according to the preceding test, a 32-core server can process about 25.056 billion transactions per day), a single server will always encounter a bottleneck in such scenario. However, deploying your cluster is a good means of avoiding this kind of bottleneck, too. Through distribution, aggregation, as well as deployment, you can effectively increase the efficiency of your system.

Regarding the write operations, if you do not need specific sharding rules, you can consider using HAProxy for your distribution needs. And if you need to add sharding rules, you could use PLPproxy instead. And when it comes to query aggregation, you'll need to use PLProxy, which is actually simple to use; all you'll need to do is to write a dynamic function.

Streaming, Lambda, and Triggered Modes Compared: A Look at How You Can Process Data in Real Time

Conducting a Pivotal Analysis of Multiple Streams for Both Human and Robot Service Channels

digoal - September 18, 2019

digoal - September 6, 2019

digoal - September 20, 2019

digoal - September 6, 2019

digoal - September 6, 2019

Alibaba Clouder - December 12, 2017

IoT Platform

IoT Platform

Provides secure and reliable communication between devices and the IoT Platform which allows you to manage a large number of devices on a single IoT Platform.

Learn More IoT Solution

IoT Solution

A cloud solution for smart technology providers to quickly build stable, cost-efficient, and reliable ubiquitous platforms

Learn More .COM Domain

.COM Domain

Limited Offer! Only $4.90/1st Year for New Users.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn MoreMore Posts by digoal