By Sangli.

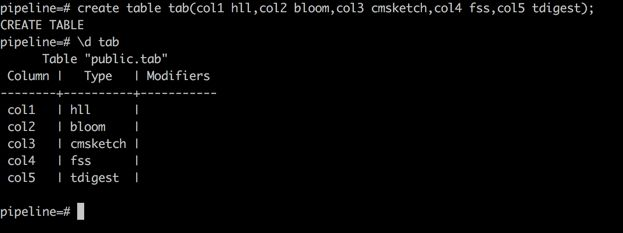

PipelineDB uses five aggregate data types when it comes to physical storage. These data types play an important role in processing continuous data. In this article, we will take a look at what these data types are-along with their structures and algorithms-and then we will go through how PipelineDB uses each one of these data types.

Of course, you can learn more about the PipelineDB's data types, data structures, and algorithms by checking out the official documentation. The relevant page for it is this one. Here, we're going to try to keep things a bit more simple.

So first, let's quickly go over the five data types.

Later in this article, we will go over how PipelineDB uses these data types in the sections that follow this one.

Of course, for all of these, you can learn more from reading the official documentation, specifically these particular places: PipelineDB-specific Types and PipelineDB-specific Functions.

You can also find the corresponding data types and functions on these websites. These data types can be used in common tables if you do not want to use them in Continuous Views (CVs), as we will do in this article.

The specific code is implemented in src/backend/utils/adt/. Okay, now with all of that out of the way, let's look at how PipelineDB uses all of the above data types.

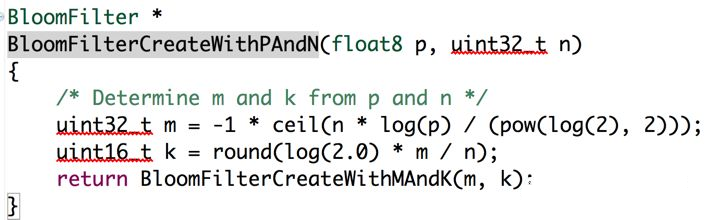

So let's start with Bloom Filter. In PipelineDB, Bloom Filter is implemented in src/backend/pipeline/bloom.c. Consider the following:

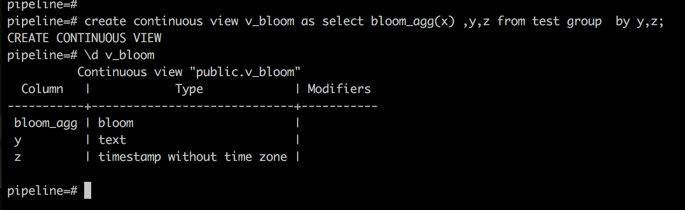

The Bloom Filter data type is used very frequently and is a built-in data type of PipelineDB. We can use Bloom to store data in Continuous Views (CVs).

To illustrate this point, consider the following example. When creating a CV, you can use UDFs directly to generate the Bloom Filter-type fields.

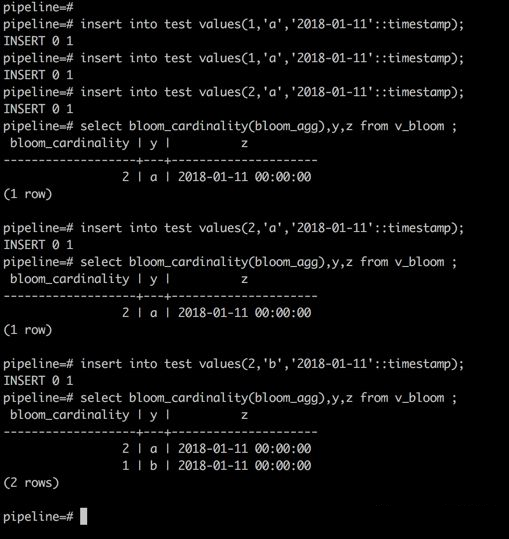

After doing that, you'll want to insert some test data, as shown below:

There are also many UDFs available at this page.

Now there's Count-Min Sketch. In PipelineDB, a Count-Min (CM) Sketch is implemented in src/backend/pipeline/cmsketch.c.

As discussed before, a CM Sketch estimates the frequency of an element in a large stream, which is similar to a Bloom filter. However, a Bloom filter records whether the element exists or not. An error exists when you use the cm sketch.

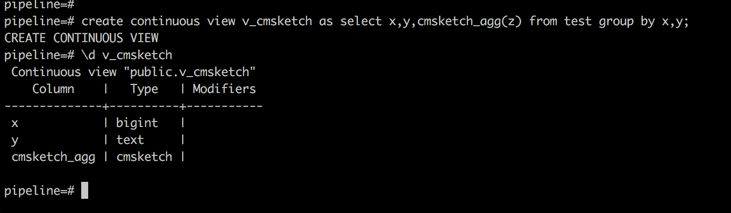

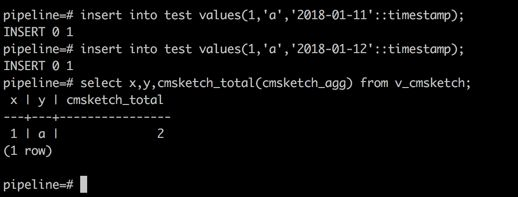

Now consider the following example:

To this, let's insert some test data, as shown below:

To learn more about this, you can check out its description in the PipelineDB's official documentation.

In PipelineDB, Filtered-Space Saving (FSS) Top-K is implemented in src/backend/pipeline/fss.c.

FSS Top-K is used to find the top-k elements in data streams. Our implementation that we show here is based on this paper.

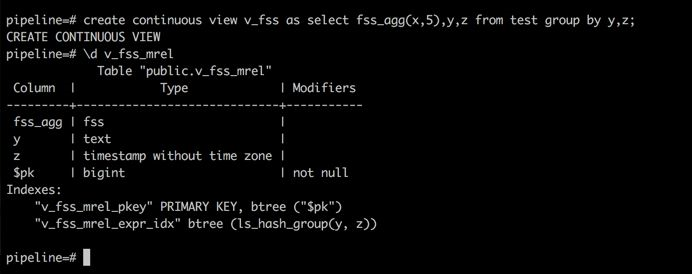

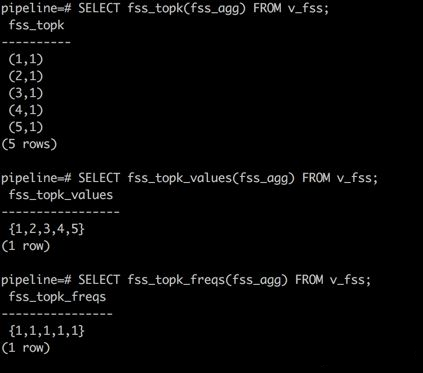

In an actual environment, for example, where there may be computing top-10 elements in the last 5 minutes, FSS Top-K can efficiently save time and memory space. The following shows, more particularly, how FSS Top-K is used in PipelineDB:

Now, into this, insert some test data, as shown below:

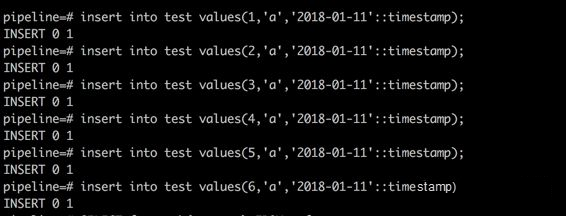

Now, insert six data records, and query v_fss, as done below:

After this, five records are found when fss_topk is used. In each record, the value is 1. The inserted value 6 is missing. However, the output of fss_topk_values contains all the values, and this output is the number of each element.

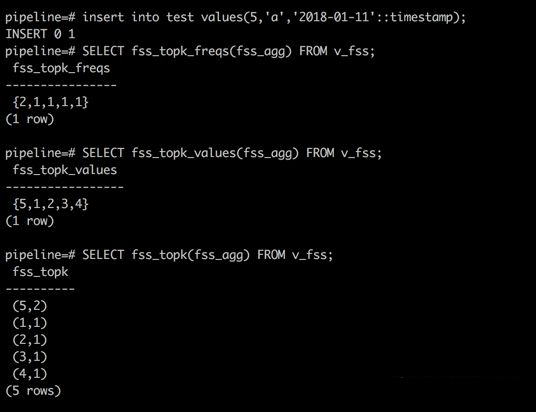

Now, insert a record again, as shown below:

Two records contain the value 5. Now, insert the value 6.

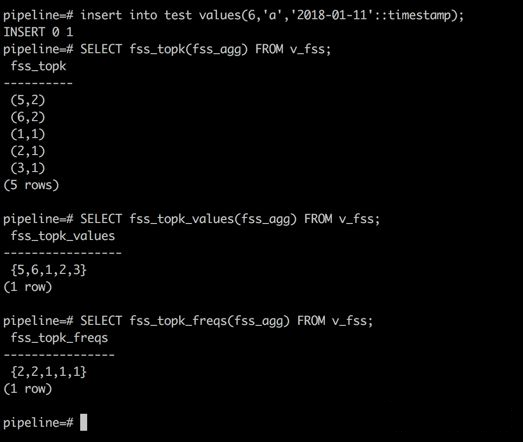

The value 6 appears and takes the place of 4.

If you'd like to learn more, check out the description provided in PipelineDB's official documentation.

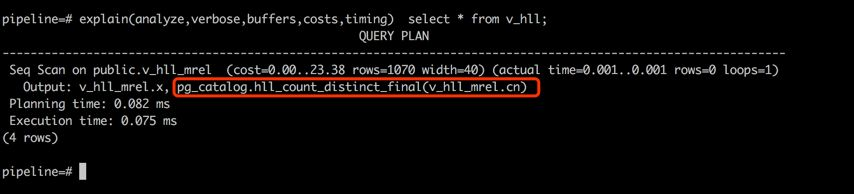

In PipelineDB, HyperLogLog (HLL) is implemented in src/backend/pipeline/hll.c.

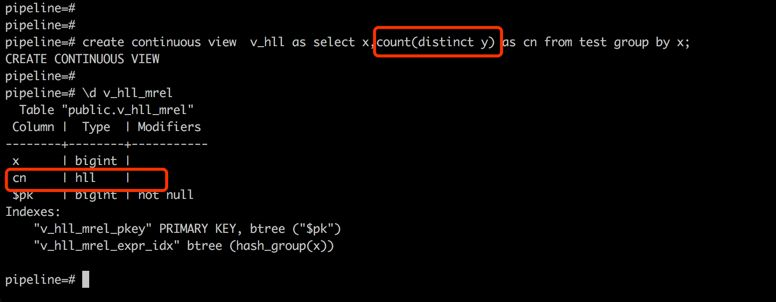

In most practical applications, HyperLogLog (HLL) is used to solve the count (distinct) problem, but in PipelineDB, it is also used to store the count (distinct) data.

Consider this example:

The physical storage format of the count (distinct) field corresponding to the created v_hll is HLL. In the query process, the parser parses this field into the following function:

hll_count_distinct_final The following shows the execution plan:

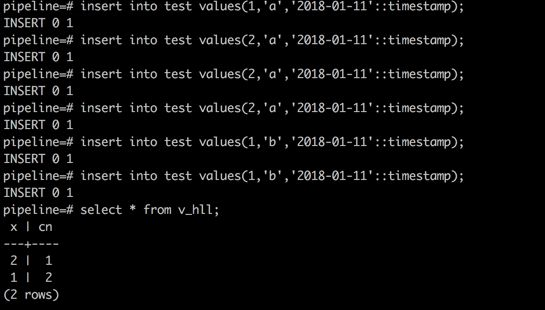

Here's an example:

Regardless of the number of insert operations, the result is count (distinct).

In PipelineDB versions earlier than 0.9.7, this has a rather mediocre efficiency with scan operations sometimes negatively affect performance. In PipelineDB 0.9.7, however, this function has been improved significantly, mitigating these issues. To learn more about the releases and versions of PipelineDB, check out this page. This issue is also discussed at detail in this article, too.

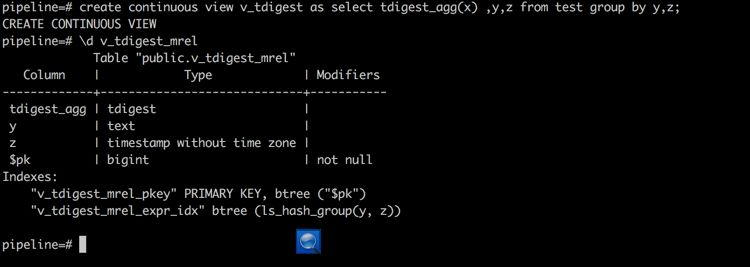

Last, there's T-Digest. In PipelineDB, T-Digest is implemented in src/backend/pipeline/tdigest.c.

As discussed above, T-Digest is mainly used for estimation and calculation based on percentiles and medians. It is similar to the built-in function percentile_cont in the database. This function is seldom used.

The following briefly introduces how to use T-Digest in PipelineDB.

First, consider the following example:

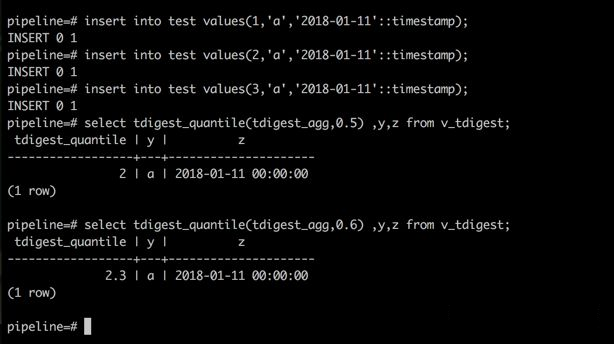

Now, in it, insert data as follows:

For it, the median of 0.5 is 2, and the median of 0.6 is 2.3.

If you're interested in learning more about T-Digest, I recommend that you check out its official description in the PipelineDB documentation. In it, you'll learn how to use probabilistic data structures and algorithms.

Conducting a Pivotal Analysis of Multiple Streams for Both Human and Robot Service Channels

PipelineDB Sharding Cluster for Distributed Stream Computing

digoal - September 20, 2019

digoal - September 6, 2019

Alibaba Clouder - September 6, 2018

digoal - September 17, 2019

digoal - June 26, 2019

OpenAnolis - December 24, 2024

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal