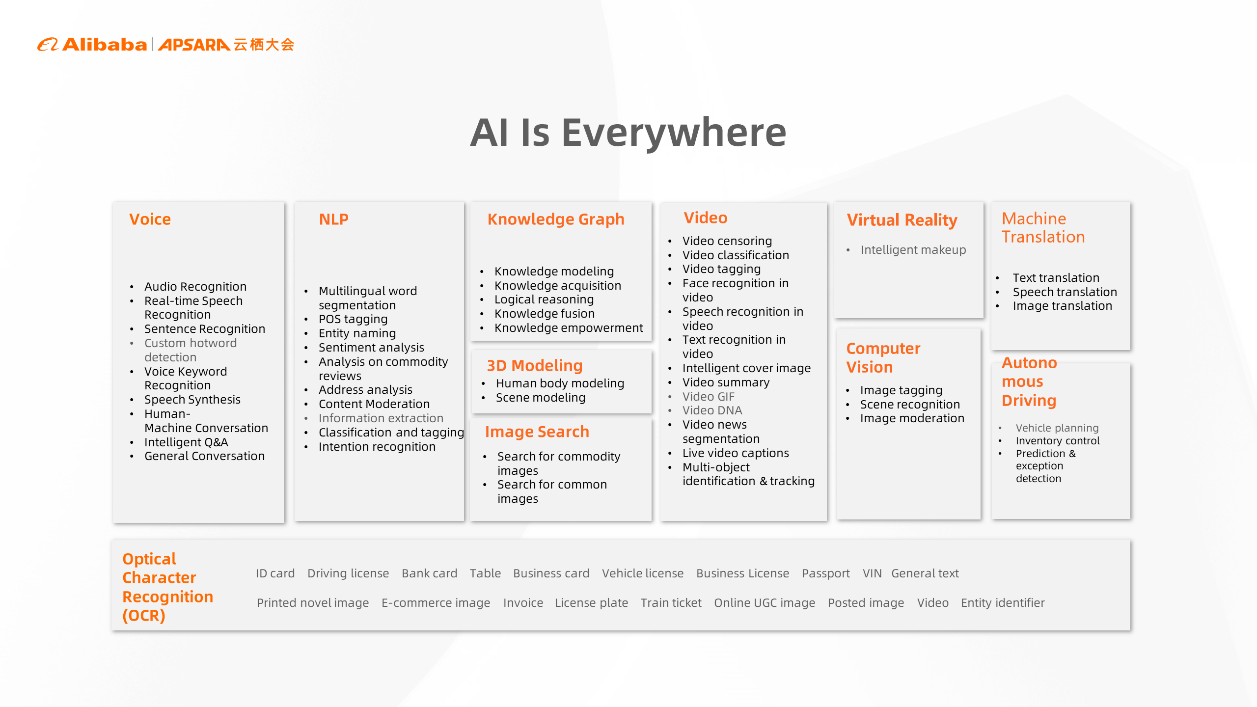

When you open Taobao, you see products you are interested in; when you open TikTok, you see videos you are interested in. This is the result of AI-driven big data analysis, which relies on techniques such as natural language processing (NLP), machine learning, and recommendation algorithms. Other examples include autonomous driving, which is built mainly on deep learning, and Tmall Genie, which is built on speech recognition and big data retrieval. AI has become ubiquitous in daily life: speech, natural language processing, computer vision, autonomous driving, and optical character recognition (OCR) are all AI application scenarios. In other words, AI is steadily becoming inclusive, with its capabilities available to everyone.

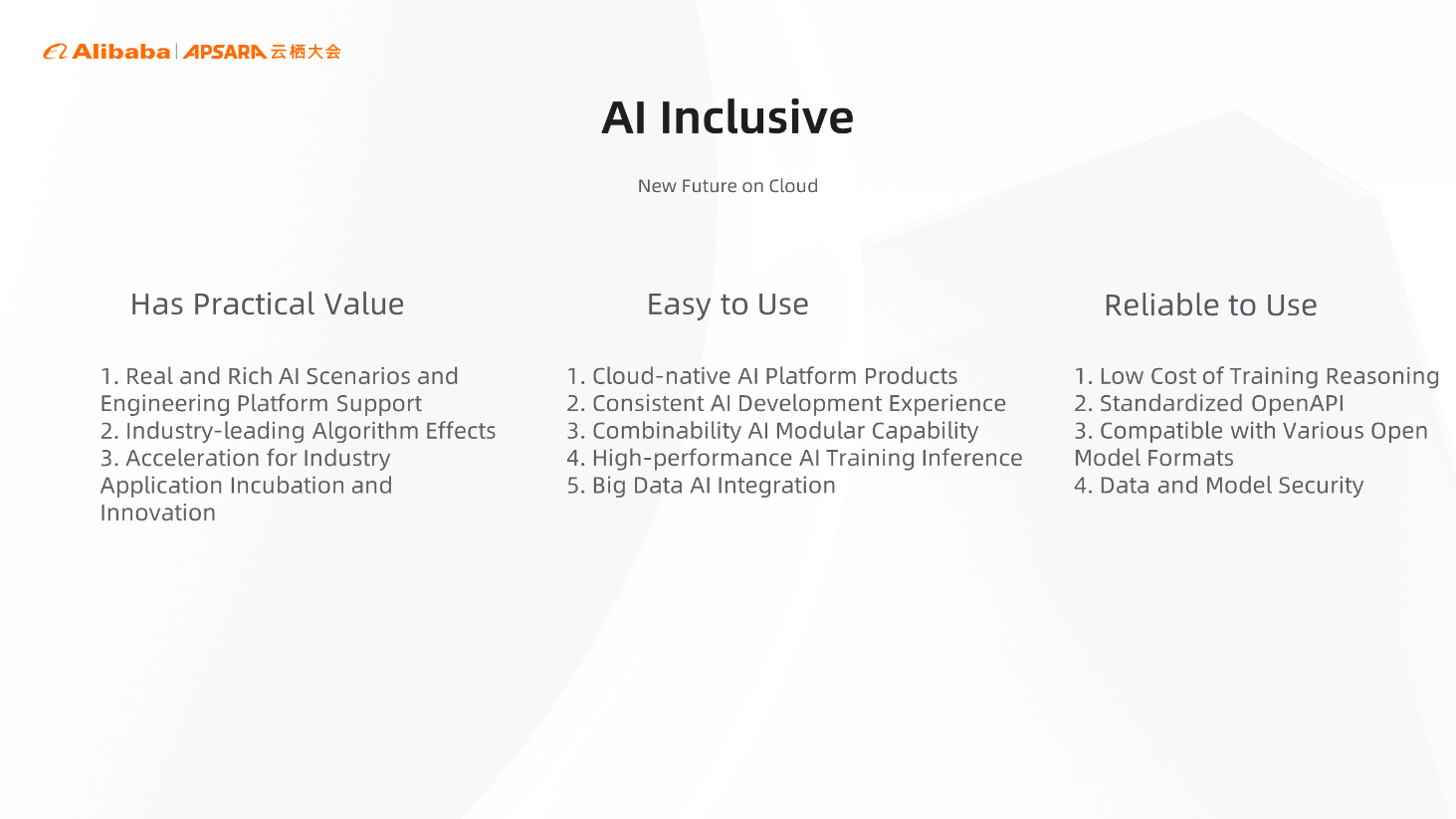

The following three conditions need to be met to make AI widely used and implemented in various scenarios:

Practical Value: AI must solve real needs rather than simply show off technique. It has to be genuinely deployable, which requires solid AI engineering, and it should accelerate the incubation and innovation of industry applications. For example, Stable Diffusion, currently the most popular AI drawing tool, generates images from text prompts and is one such innovative project.

Easy to Use: A cloud-native AI platform is needed so that users can avoid long, complicated deployment processes and launch AI applications on the cloud with one click. The platform should provide the same functions as an offline deployment, integrate the performance features available on the cloud, accelerate inference, and plug into existing systems quickly. AI capabilities on the cloud are atomized and can be delivered as modules. Because AI is often only a small part of a larger system, inclusive AI requires that individual AI functions can be migrated into different application systems easily.

Reliable to Use: Costs must be controllable, and data and models should be exposed through open APIs and open, compatible formats. Data and model security must also be ensured, and a cloud-native architecture provides a strong foundation for that security.

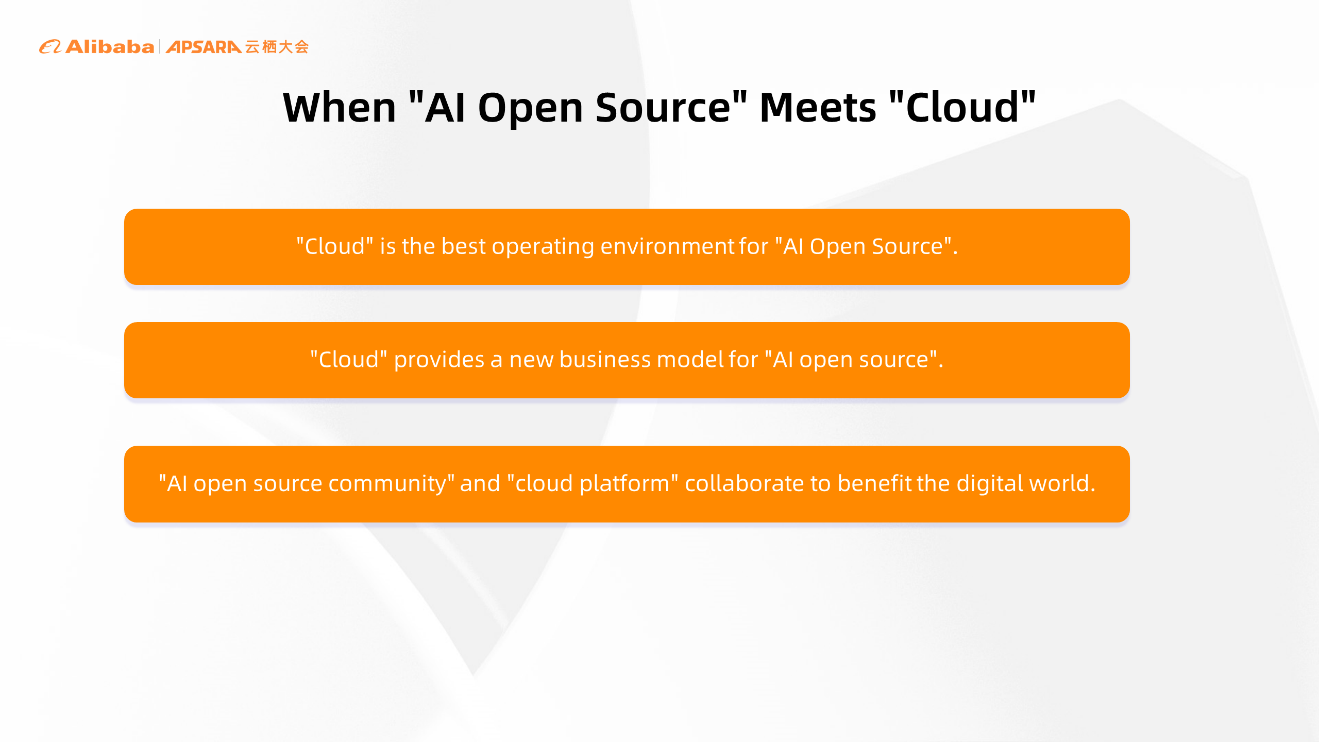

What can the combination of open-source AI and the cloud bring us?

First, the cloud is the best operating environment for open-source AI. Users do not need to prepare servers, download software, compile and deploy it, or handle other complicated setup.

Second, the cloud provides a new business model for open-source AI. For example, beyond community discussion and maintenance, additional services such as answering questions can be provided to enterprises that use open-source software on the cloud.

Third, there will be more interaction between the open-source community and the cloud platform. For example, the cloud provides elasticity and scalability for open-source software. Conversely, the cloud platform can raise requirements for the open-source community, such as planning ahead to adapt open-source software for future Serverless service releases. In the end, the two work together to benefit the AI digital world.

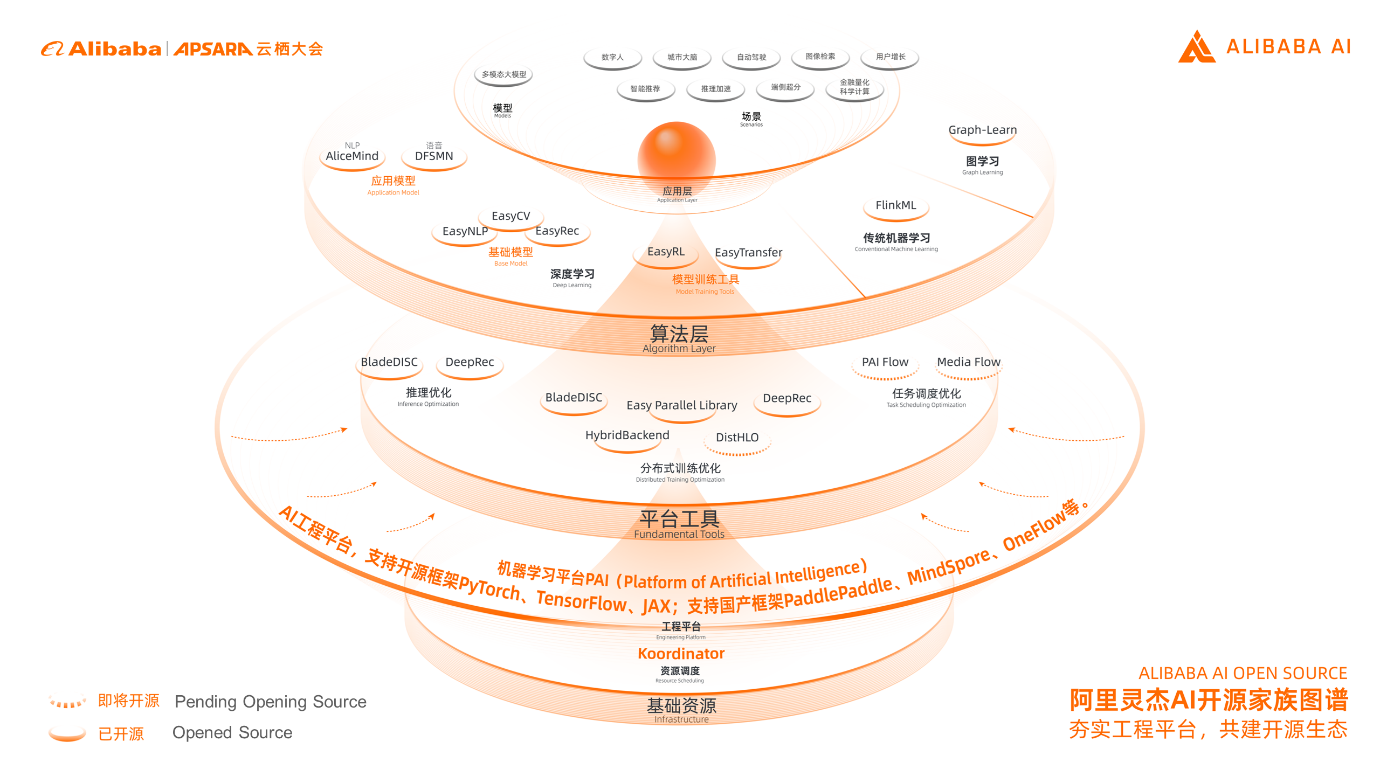

(Map of Alibaba AI Open-Source Family)

Alibaba has open-source projects at every layer, from resource scheduling at the bottom through platform engineering and algorithm foundations to algorithm applications. Machine Learning Platform for AI (PAI) supports popular open-source frameworks (such as TensorFlow and PyTorch) and frameworks developed in China (such as OneFlow). It also optimizes distributed training frameworks and distributed inference. PAI Flow and Media Flow, which handle task scheduling, will be open-sourced soon.

On the algorithm side, the application-level ModelScope has been released. In addition to large-scale pre-trained models, many basic models have been open-sourced in areas such as speech, image, text, and recommendation. For real-time computing, Flink Machine Learning (Flink ML) supports real-time machine learning on live data streams, so relevant recommendations can appear immediately after a user browses some products. There are also frameworks for graph-based machine learning, transfer learning, and reinforcement learning.

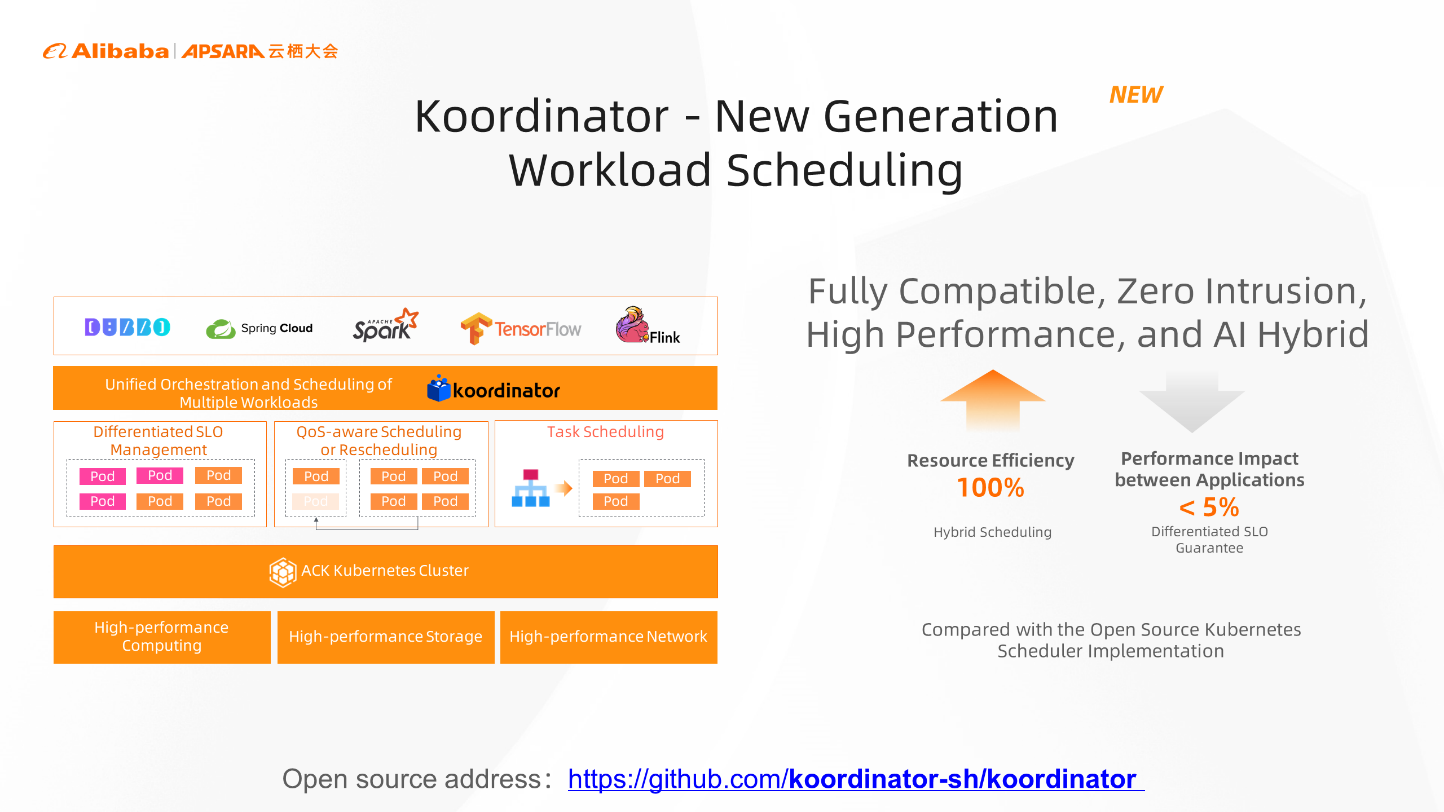

Cloud-native machine learning applications place higher demands on scheduling. We therefore released Koordinator to address problems such as job scheduling optimization and resource utilization. Koordinator can balance scheduling based on the load heatmap of each service and application. For example, if several machines fail, Koordinator detects the change in service stability at the QoS layer, evicts the slow-responding containers, and reschedules their tasks to new containers and servers.
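
The rescheduling behavior described above can be sketched in a few lines of Python. This is a toy model, not Koordinator's API: the `Node` class, the `place` and `evacuate` functions, and the use of CPU utilization as the only "heat" signal are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity: float                       # total cores on the node
    cpu_used: float = 0.0                     # cores in use (the "heat")
    healthy: bool = True
    pods: dict = field(default_factory=dict)  # pod name -> requested cores

    def utilization(self) -> float:
        return self.cpu_used / self.cpu_capacity


def pick_node(nodes, request):
    """Return the healthy node with the lowest utilization that fits the request."""
    candidates = [
        n for n in nodes
        if n.healthy and n.cpu_used + request <= n.cpu_capacity
    ]
    if not candidates:
        raise RuntimeError("no healthy node has enough free CPU")
    return min(candidates, key=Node.utilization)


def place(nodes, pod, request):
    """Place a pod on the coolest node and return that node's name."""
    node = pick_node(nodes, request)
    node.pods[pod] = request
    node.cpu_used += request
    return node.name


def evacuate(nodes, failed):
    """Mark a node unhealthy and move its pods to the coolest healthy nodes."""
    failed.healthy = False
    moved = {}
    for pod, request in list(failed.pods.items()):
        del failed.pods[pod]
        failed.cpu_used -= request
        moved[pod] = place(nodes, pod, request)
    return moved


nodes = [Node("node-a", 8), Node("node-b", 8), Node("node-c", 8)]
place(nodes, "web", 4)             # lands on node-a (all nodes idle, first wins)
place(nodes, "batch", 2)           # lands on node-b (node-a is now the hottest)
moved = evacuate(nodes, nodes[0])  # node-a fails
print(moved)                       # {'web': 'node-c'}
```

A real scheduler weighs many more signals (memory, QoS class, latency), but the shape is the same: observe load, detect degradation, and move work to the least-loaded healthy capacity.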

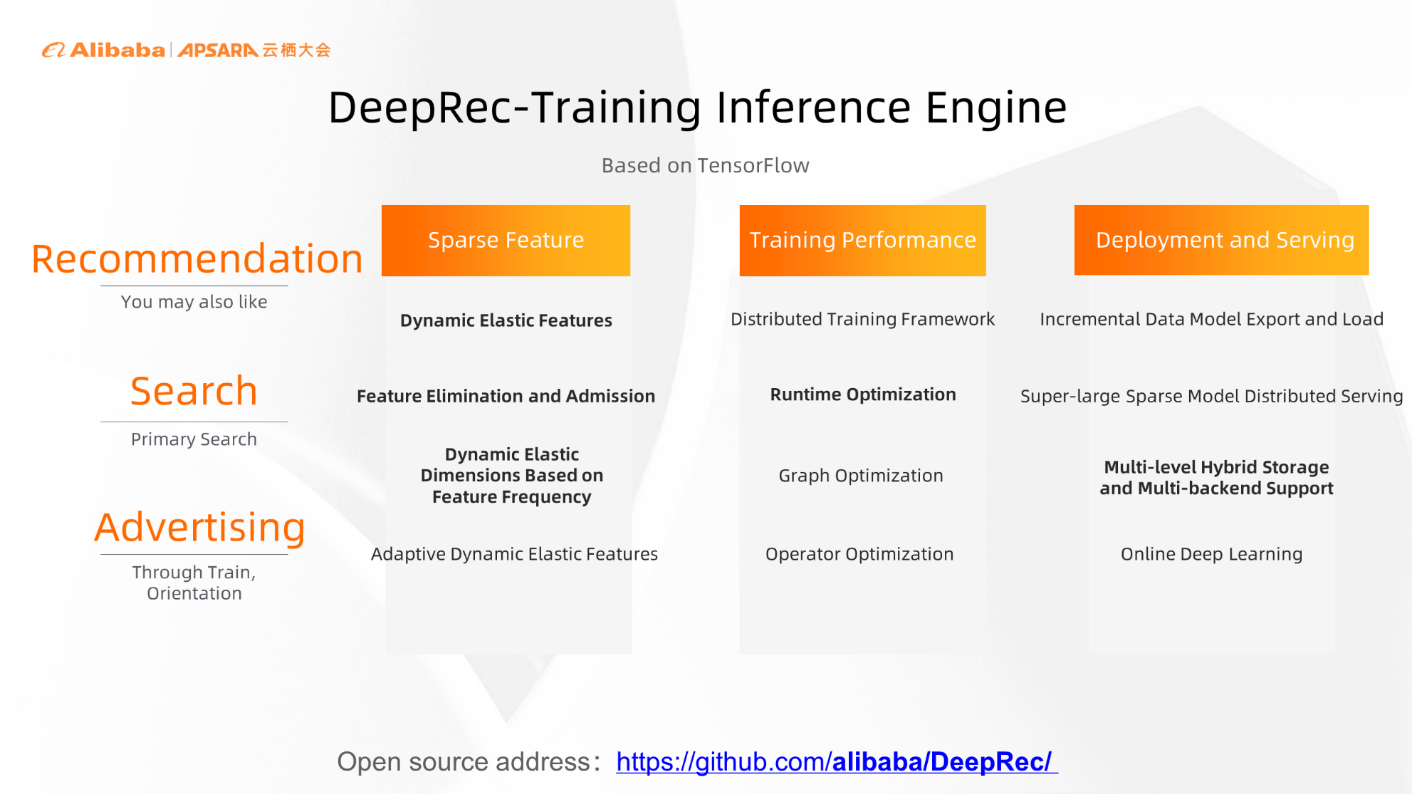

DeepRec is a training and inference engine optimized on top of TensorFlow. It mainly serves recommendation, search, and advertising scenarios, which generally produce structured data that can be thought of simply as tables. We have made many optimizations in sparse features, training performance, deployment, and serving.

Sparse Features: Many features in model data are very sparse. For example, the interaction between a person and a product is a feature. Taobao has billions of products, and one person may browse at most thousands of them each day, so interaction features are sparse. We therefore introduced dynamic elastic features. Traditionally, a fixed-size hash table stores the features. With dynamic elastic features, feature admission and eviction become possible: features that have long since expired can be evicted dynamically, and newly generated features can be admitted dynamically.
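
As a rough illustration of admission and eviction, the sketch below keeps embeddings in a dictionary that grows and shrinks instead of a fixed-size hash table. It is not DeepRec's implementation or API. The class name, the frequency-based admission rule (`min_count`), and the time-to-live eviction rule (`ttl_steps`) are assumptions chosen to keep the example small.

```python
import random


class DynamicEmbeddingTable:
    """Toy sparse-feature store with admission and eviction (illustrative only)."""

    def __init__(self, dim, min_count=2, ttl_steps=1000, seed=0):
        self.dim = dim
        self.min_count = min_count  # admission: lookups needed before a row exists
        self.ttl_steps = ttl_steps  # eviction: max steps allowed since last lookup
        self._rng = random.Random(seed)
        self._counts = {}           # feature ID -> lookups seen before admission
        self._rows = {}             # feature ID -> embedding vector
        self._last_seen = {}        # feature ID -> step of the latest lookup

    def lookup(self, feature_id, step):
        """Return the embedding, or None while the feature is not yet admitted."""
        if feature_id in self._rows:
            self._last_seen[feature_id] = step
            return self._rows[feature_id]
        count = self._counts.get(feature_id, 0) + 1
        if count < self.min_count:
            self._counts[feature_id] = count
            return None
        # The feature has been seen often enough: admit it with a fresh row.
        self._counts.pop(feature_id, None)
        self._rows[feature_id] = [
            self._rng.uniform(-0.01, 0.01) for _ in range(self.dim)
        ]
        self._last_seen[feature_id] = step
        return self._rows[feature_id]

    def evict_stale(self, step):
        """Drop rows not looked up within ttl_steps; return the evicted IDs."""
        stale = [
            fid for fid, seen in self._last_seen.items()
            if step - seen > self.ttl_steps
        ]
        for fid in stale:
            del self._rows[fid]
            del self._last_seen[fid]
        return stale

    def __len__(self):
        return len(self._rows)


table = DynamicEmbeddingTable(dim=4, min_count=2, ttl_steps=10)
print(table.lookup("item:42", step=0))   # None: first sighting, not admitted
print(len(table.lookup("item:42", 1)))   # 4: admitted on the second sighting
print(table.evict_stale(step=12))        # ['item:42']: unused for 11 steps
```

The table holds only features that are both frequent enough and recent enough, so memory follows the live feature set rather than a size fixed in advance.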

Training Performance: The optimizations cover distributed training frameworks, the runtime, graph optimization, and operator optimization. In AI training, the input data patterns are similar from step to step, and similar computational logic is repeated constantly. Based on this property, the critical path of the computation can be extracted and given priority, so computing resources are used more fully and training finishes in less time.
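
One common way to prioritize a critical path is to rank each operator by the longest remaining path from it to the end of the graph and to run the highest-ranked ready operator first. The sketch below shows that idea on a small dependency graph. It is a generic illustration, not DeepRec's scheduler; the operator names and costs are made up, and the graph is assumed to be acyclic.

```python
from functools import lru_cache


def critical_path_priorities(cost, deps):
    """Map each op to the longest path length from it to any sink.

    cost: op -> estimated run time; deps: op -> list of ops it depends on.
    """
    consumers = {op: [] for op in cost}
    for op, inputs in deps.items():
        for src in inputs:
            consumers[src].append(op)

    @lru_cache(maxsize=None)
    def rank(op):
        return cost[op] + max((rank(c) for c in consumers[op]), default=0)

    return {op: rank(op) for op in cost}


def schedule(cost, deps):
    """Order ops so the ready op with the longest remaining path runs first."""
    priority = critical_path_priorities(cost, deps)
    remaining = {op: set(deps.get(op, ())) for op in cost}
    order = []
    while remaining:
        ready = [op for op, pending in remaining.items() if not pending]
        nxt = max(ready, key=lambda op: priority[op])
        order.append(nxt)
        del remaining[nxt]
        for pending in remaining.values():
            pending.discard(nxt)
    return order


# Hypothetical per-op costs measured from earlier, similar training steps.
cost = {"read": 1, "embed": 5, "dense": 2, "concat": 1, "loss": 1}
deps = {
    "embed": ["read"],
    "dense": ["read"],
    "concat": ["embed", "dense"],
    "loss": ["concat"],
}
print(critical_path_priorities(cost, deps)["embed"])  # 7
print(schedule(cost, deps))  # ['read', 'embed', 'dense', 'concat', 'loss']
```

Because training steps repeat the same pattern, costs measured in earlier steps are a reasonable estimate for later ones, which is what makes this kind of static prioritization worthwhile.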

Deployment and Serving: The deployment experience has been improved. On the serving side, the main addition is multi-level hybrid storage. A prediction service usually has to load the model into memory, or into GPU memory when it runs on a GPU. We place the embeddings of the most frequently accessed features in GPU memory first, then in host memory, and then on disk. With multi-level hybrid storage, a large model can be stored on a single machine, which reduces costs.
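
The tiering idea can be sketched as a store that counts reads per key and periodically reassigns keys to tiers by popularity. This is a simplified model, not DeepRec's storage layer: the tiers here are just labels, and the class name, slot counts, and `rebalance` policy are assumptions for illustration.

```python
from collections import Counter


class TieredEmbeddingStore:
    """Toy three-tier store: hottest keys in 'gpu', then 'host', rest on 'disk'."""

    def __init__(self, gpu_slots, host_slots):
        self.capacity = {"gpu": gpu_slots, "host": host_slots}
        self.hits = Counter()  # key -> number of reads
        self.rows = {}         # key -> embedding vector
        self.tier_of = {}      # key -> "gpu" | "host" | "disk"

    def put(self, key, vector):
        self.rows[key] = vector
        self.tier_of.setdefault(key, "disk")  # new keys start in the slowest tier

    def get(self, key):
        self.hits[key] += 1
        return self.rows[key], self.tier_of[key]

    def rebalance(self):
        """Reassign tiers so the most frequently read keys sit in the fastest tier."""
        ranked = sorted(self.rows, key=lambda k: -self.hits[k])
        gpu_end = self.capacity["gpu"]
        host_end = gpu_end + self.capacity["host"]
        for i, key in enumerate(ranked):
            if i < gpu_end:
                self.tier_of[key] = "gpu"
            elif i < host_end:
                self.tier_of[key] = "host"
            else:
                self.tier_of[key] = "disk"


store = TieredEmbeddingStore(gpu_slots=1, host_slots=1)
for key in ("user:1", "user:2", "user:3"):
    store.put(key, [0.0, 0.0])
for _ in range(3):
    store.get("user:1")
store.get("user:2")
store.rebalance()
print(store.tier_of)  # {'user:1': 'gpu', 'user:2': 'host', 'user:3': 'disk'}
```

Only the small, hot fraction of the embedding table needs the expensive tier, which is why a model larger than GPU memory can still be served from one machine.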

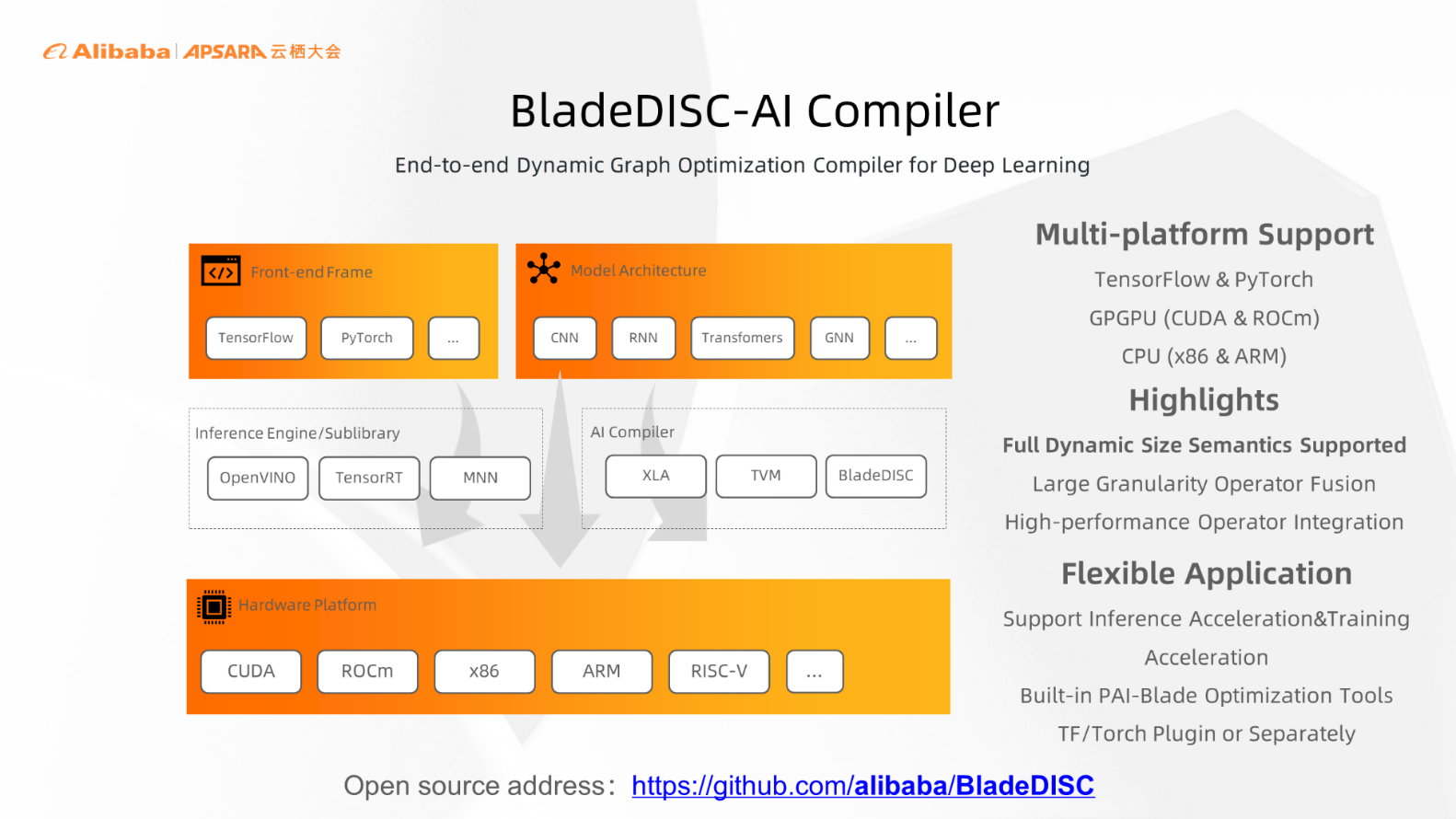

When processing natural language, inputs vary in length, so they are usually forced to a fixed length. This approach has problems: long inputs are truncated, and short inputs must be padded, which wastes memory and computing resources. Therefore, we developed BladeDISC, an end-to-end, dynamic-shape graph optimization compiler for deep learning. It supports frameworks such as TensorFlow and PyTorch and hardware backends such as GPGPU, CPU, and Arm.
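
A little arithmetic shows how much a fixed length can waste. The sketch below counts tokens only; it does not call BladeDISC, and the batch lengths and the fixed length of 64 are made-up numbers. `dynamic_cost` models an idealized system that processes every input at its true length.

```python
def padded_cost(lengths, fixed_len):
    """Token counts when every input is forced to fixed_len."""
    return {
        "computed": fixed_len * len(lengths),                        # tokens processed
        "padding": sum(max(fixed_len - n, 0) for n in lengths),      # wasted on blanks
        "truncated": sum(max(n - fixed_len, 0) for n in lengths),    # input thrown away
    }


def dynamic_cost(lengths):
    """Token counts when each input keeps its own length (idealized)."""
    return {"computed": sum(lengths), "padding": 0, "truncated": 0}


batch = [12, 48, 130, 7]  # hypothetical token counts of four inputs
print(padded_cost(batch, fixed_len=64))
# {'computed': 256, 'padding': 125, 'truncated': 66}
print(dynamic_cost(batch))
# {'computed': 197, 'padding': 0, 'truncated': 0}
```

In this example, almost half of the 256 processed positions are padding, while 66 real tokens are discarded. Handling the true shapes avoids both losses.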

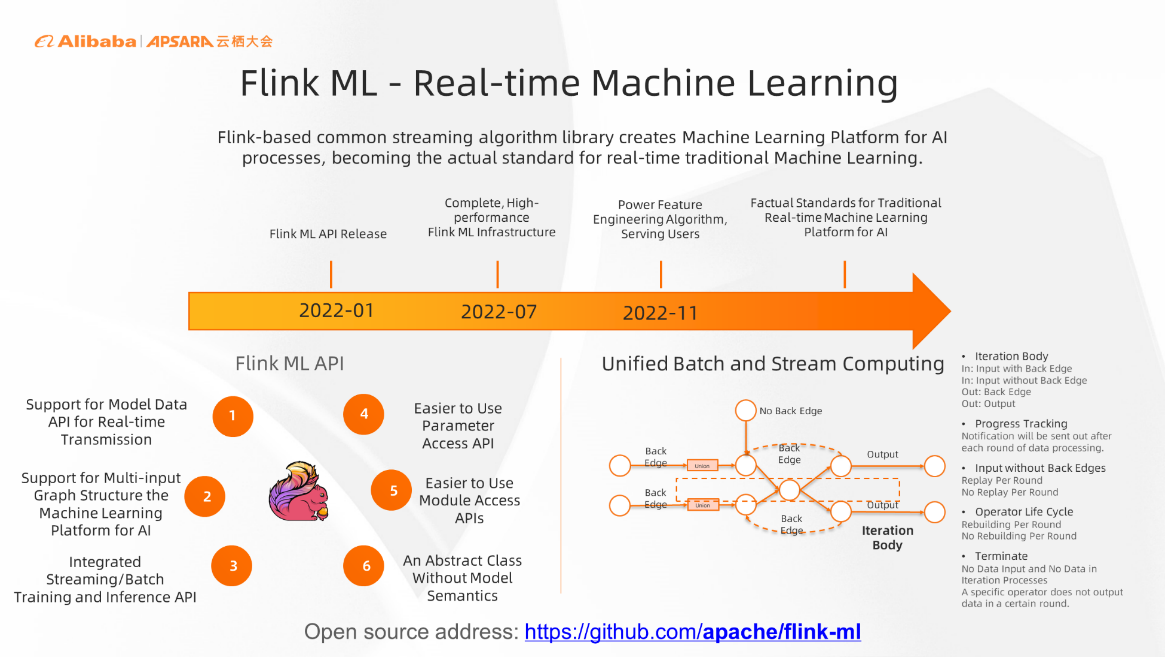

Flink ML is a real-time machine learning algorithm library built on Flink. The Flink ML API, released at the beginning of 2022, supports real-time transmission of models and data, as well as machine learning algorithms with multi-input graph structures. Although it is described as a real-time machine learning library, it also supports batch machine learning. Real-time machine learning algorithms are mostly used in structured-data scenarios, where roughly 70% of the work may go into preparing data for the model, such as extracting data from raw logs for feature processing. Flink ML will therefore invest more in real-time feature engineering in the future. The ultimate goal is for Flink ML to become the standard for real-time machine learning.
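
To make "real-time feature engineering" concrete, the sketch below turns raw log lines into a per-user feature: the number of events in the last 60 seconds. It is plain Python, not the Flink ML API. The log format (`timestamp,user,action,item`), the class name, and the window size are assumptions, and events are assumed to arrive in timestamp order.

```python
from collections import defaultdict, deque


def parse(line):
    """Split a raw log line of the assumed form 'timestamp,user,action,item'."""
    ts, user, action, item = line.split(",")
    return int(ts), user, action, item


class SlidingWindowCounter:
    """Count each user's events within the last window_seconds."""

    def __init__(self, window_seconds):
        self.window = window_seconds
        self.events = defaultdict(deque)  # user -> timestamps still in the window

    def update(self, user, ts):
        """Record one event and return the user's current in-window count."""
        stamps = self.events[user]
        stamps.append(ts)
        while stamps and stamps[0] <= ts - self.window:
            stamps.popleft()  # expire events that fell out of the window
        return len(stamps)


logs = ["100,u1,click,p9", "130,u1,click,p3", "170,u1,click,p9"]
counter = SlidingWindowCounter(window_seconds=60)
features = []
for line in logs:
    ts, user, _action, _item = parse(line)
    features.append(counter.update(user, ts))
print(features)  # [1, 2, 2]
```

The feature is updated as each event arrives, so a model that consumes it always sees the user's most recent activity rather than yesterday's batch aggregate.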

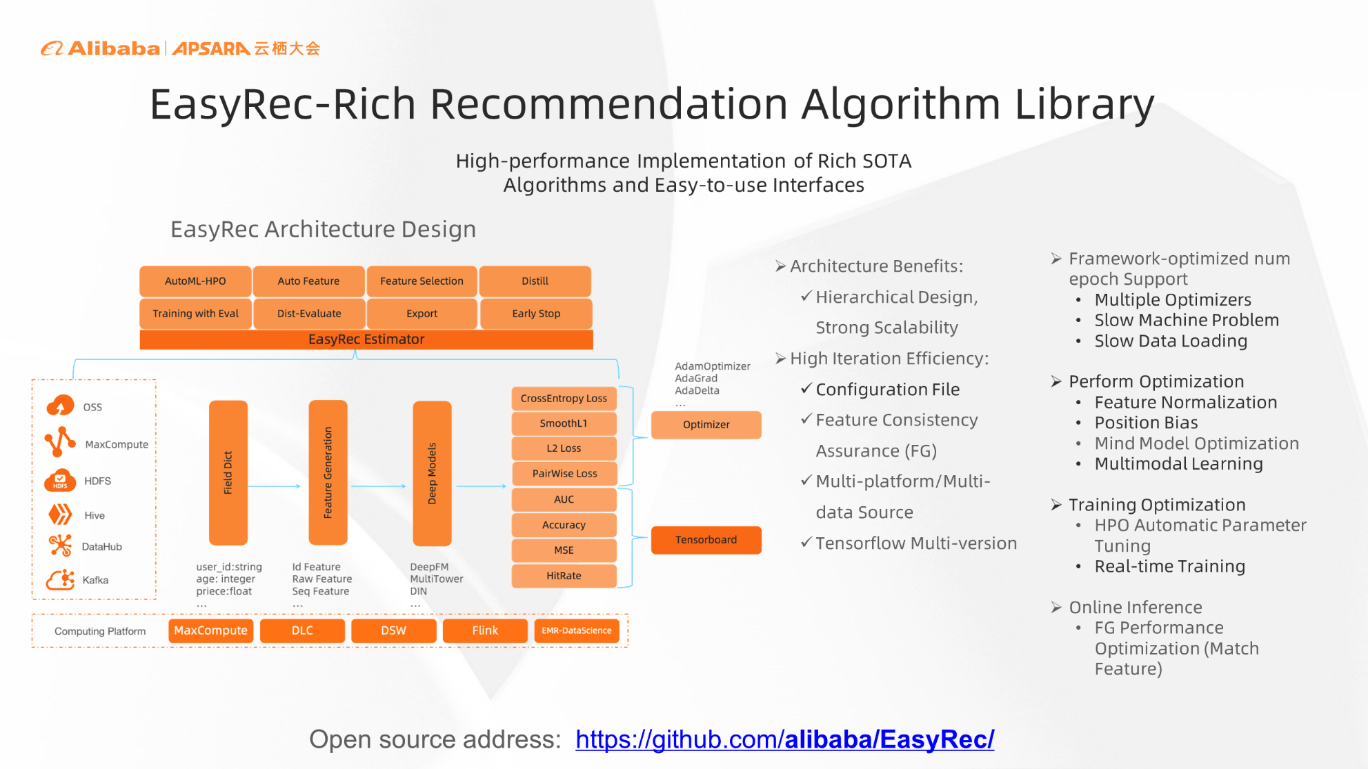

EasyRec is an algorithm library for recommendation scenarios. We have implemented and open-sourced many algorithms from top-tier papers and integrated many performance optimizations, so users can quickly adopt state-of-the-art (SOTA) algorithms with strong performance. EasyRec is also well integrated with the cloud and supports different platforms at both the computing level and the data level. For computing, it supports EMR, Flink, MaxCompute, and cloud-native containerized services. For input, it supports traditional storage and real-time data streams (such as HDFS, Object Storage Service (OSS), MaxCompute tables, and Kafka).

In addition, we have developed and integrated AutoML functions that support automatic hyperparameter tuning and automatic feature generation. Auto Feature Engineering can produce high-level features, and automatic feature selection is also supported.
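
Automatic hyperparameter tuning can be as simple as sampling candidate settings and keeping the best one. The sketch below uses random search, which is only one of several possible strategies and is not necessarily what EasyRec uses. The `objective` function stands in for "train a model and return its validation score" and is entirely hypothetical.

```python
import random


def random_search(objective, space, trials=50, seed=0):
    """Sample each parameter uniformly from its (low, high) range; keep the best."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def objective(params):
    # Stand-in for a validation metric; it peaks at learning_rate = 0.1.
    return -(params["learning_rate"] - 0.1) ** 2


best, score = random_search(objective, {"learning_rate": (0.0, 1.0)}, trials=200)
print(best, score)
```

In practice, each trial is a full training run, so smarter strategies (such as Bayesian optimization or early stopping of poor trials) are used to reduce the number of runs needed.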

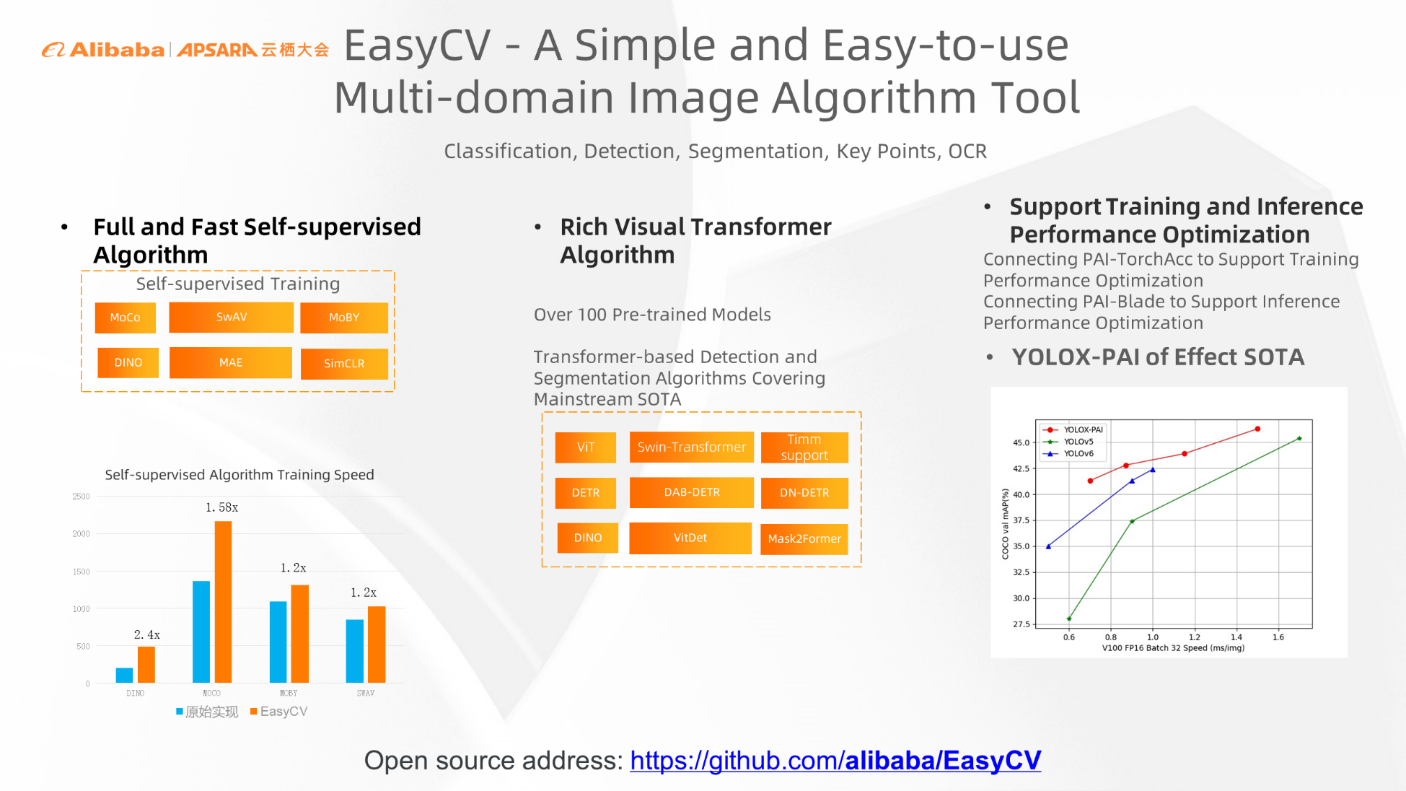

EasyCV targets computer vision scenarios, and EasyNLP targets natural language processing (NLP) scenarios. EasyCV covers multiple scenarios and domains and integrates many algorithms for detection, classification, segmentation, keypoint detection, and OCR. Performance is more than 20% better than the original algorithms.

The optimized YOLOX-PAI algorithm supports multiple networks at the backbone level and adds multi-scale image feature fusion to the neck network to improve results. It outperforms the YOLOv5 and YOLOv6 implementations from the open-source community.

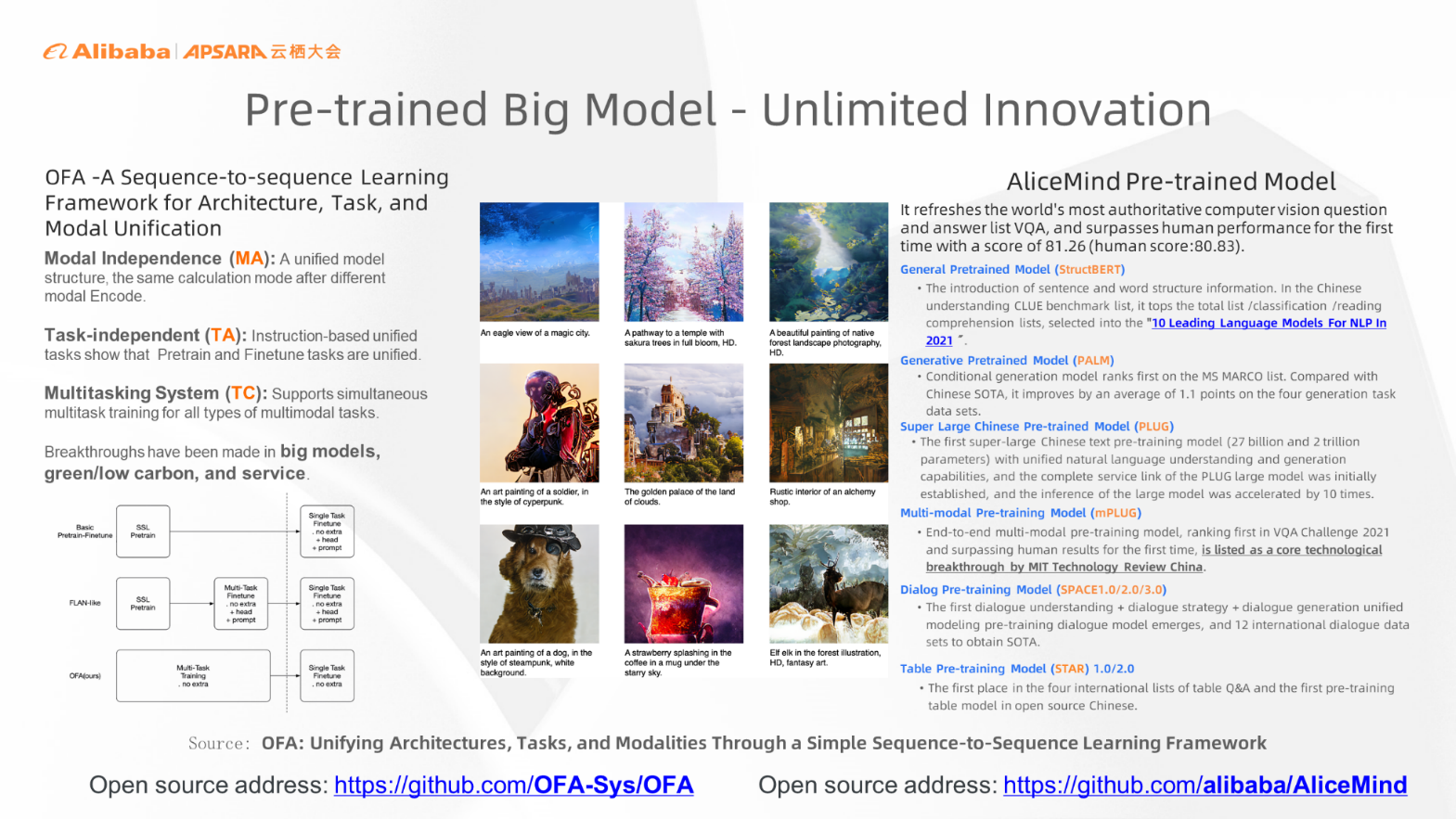

One For All (OFA) and AliceMind are two projects from DAMO Academy. The models and code of both are open-source.

OFA is a sequence-to-sequence learning framework that is task-agnostic, architecture-agnostic, and modality-agnostic. It has made breakthroughs in large models, low-carbon training, and serving. Compared with GPT-3, OFA needs only 1% of the computing resources to achieve the same effect. Because OFA is a large model that does not fit on a single machine or a single GPU, a lot of work has gone into serving so that the service can be launched easily. The image in the middle of the preceding figure was generated by OFA from input text.

AliceMind is a language-oriented pre-trained model. Last year, it topped the leaderboard of VQA, the most authoritative benchmark for visual question answering, and surpassed human performance for the first time with a score of 81.26. Beyond VQA, AliceMind has topped many leaderboards in Chinese comprehension and generation, dialogue strategy, dialogue generation, and table question answering, reaching SOTA level.

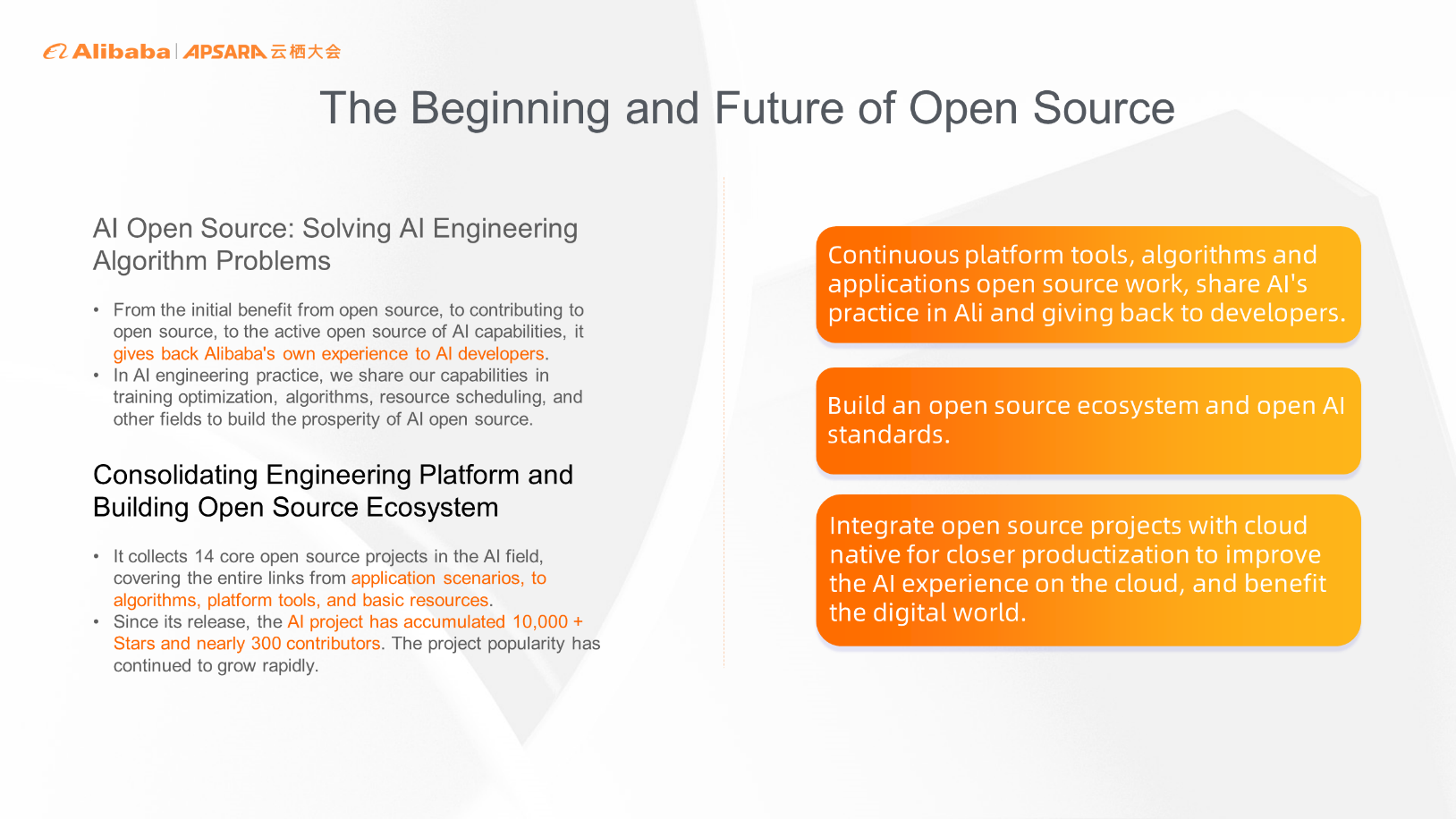

Returning to the original aspiration of open-source: much of our work builds on the fruits of open-source projects. We hope to combine open-source with Alibaba's application scenarios, extend it further, and return the results to the open-source community. We will therefore continue open-source work at the platform, algorithm, application, and resource scheduling levels so that more developers can benefit from Alibaba's experience in practical scenarios. We also hope more developers will join the open-source community to build a more open community and new AI standards. Finally, we hope more open-source products can be integrated with the cloud so that they are practical, easy to use, and secure. We want to make open-source AI inclusive in the digital world.
