By Bao Jun (Tuikai)

During the 2019 Double 11 Shopping Festival, Alibaba Luban delivered 1 billion images to Alibaba's e-commerce business. Refined operations by scenario and target audience were needed to solve a series of problems, from processing public-domain traffic more efficiently to helping merchants express themselves in private domains. This, in turn, places increasingly high demands on producing large numbers of high-quality structured images. Image creation technologies are evolving as well. This article explores how Luban has evolved and applied its image creation capabilities over the past year.

The preceding figure shows how we manage the images posted by the ginger tea stores we interviewed on our platform. The content and form of product text and images vary by scenario. This variety differs from the variety of massively personalized products: the former is intended to attract consumers, whereas the latter is a measure of business operation capability.

A series of questions emerge, such as how to support these two types of variety, how to establish a connection between image data and user attention, and how to empower merchants. The following sections will give tentative answers to these questions from the perspective of image production.

When products are distributed at the consumer end, their data and features are transferred, collected, and processed effectively because they follow a standard structured definition. This maximizes the effect of models and algorithms.

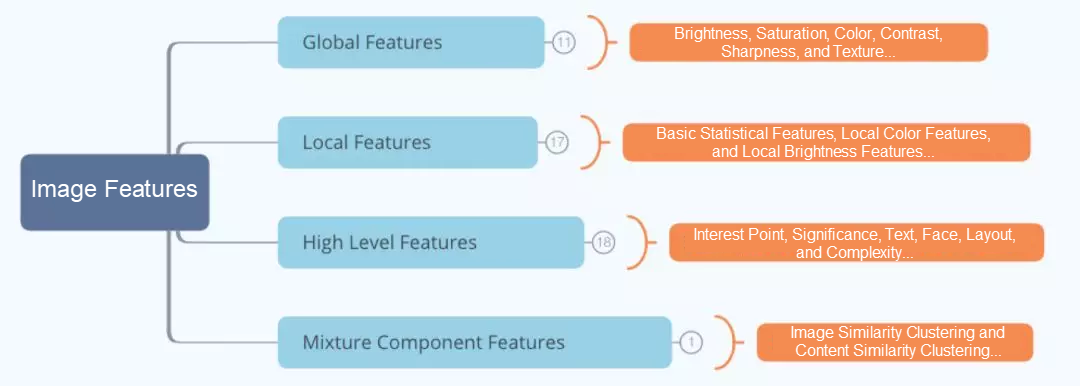

When we try to extract and compute the features of product image data as a whole, a deep neural network (DNN) gives us only probabilistic outputs for both the low-level display features and the high-level, semantically implicit features. We hope to convert these probabilities into definite inputs so that we can accurately mine the association between image features and user behaviors.

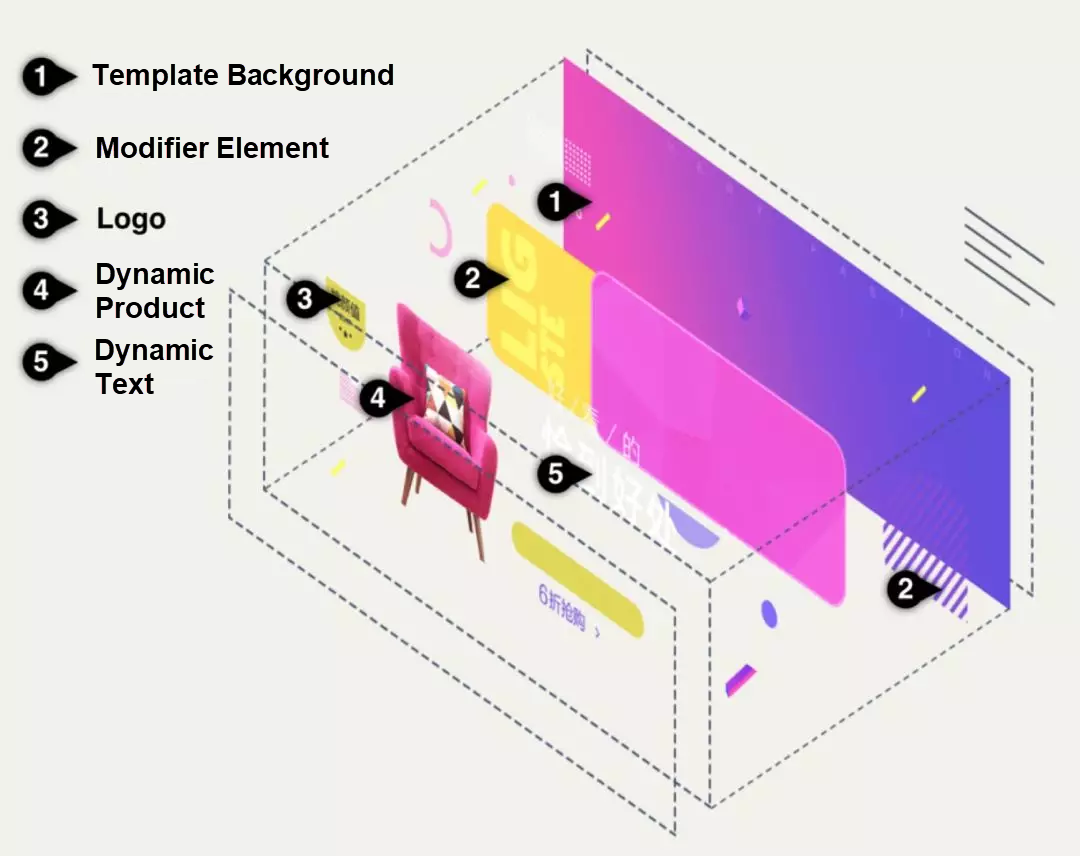

In e-commerce, an image is produced by editing a photograph (sometimes adding text) with image processing software such as Photoshop. We divide an image into layers following the layer division standard of that software, and describe each layer in three dimensions: structure, color, and text (content). The attributes and features of a structured image can then be transferred, collected, and processed efficiently and accurately, which makes subsequent image creation and processing possible.

To standardize image production, we have developed tools that let business designers convert a single unstructured image into self-descriptive structured data, expressed in a domain-specific language (DSL), without changing their existing workflow. This ensures that image data is structured before an image is produced.
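To make the idea of layer-level structuring concrete, here is a minimal sketch of what a self-descriptive image description could look like. It is not Luban's actual DSL: the class names, field names, and the JSON encoding are all assumptions chosen for illustration. Each layer carries the three dimensions mentioned above (structure, color, and text).

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Layer:
    """One layer of a structured image (all field names are hypothetical)."""
    name: str
    kind: str                        # e.g. "background", "product", "text"
    box: Tuple[int, int, int, int]   # structure: x, y, width, height
    main_color: str                  # color: dominant color as a hex string
    text: Optional[str] = None       # text (content), only for text layers


@dataclass
class StructuredImage:
    width: int
    height: int
    layers: List[Layer] = field(default_factory=list)  # ordered bottom to top

    def to_dsl(self) -> str:
        """Serialize to a JSON document standing in for the DSL."""
        return json.dumps(asdict(self), ensure_ascii=False, sort_keys=True)

    @staticmethod
    def from_dsl(doc: str) -> "StructuredImage":
        """Rebuild the structured image from its JSON document."""
        raw = json.loads(doc)
        layers = [Layer(**{**item, "box": tuple(item["box"])})
                  for item in raw["layers"]]
        return StructuredImage(raw["width"], raw["height"], layers)
```

Because every layer is addressable data rather than flattened pixels, a later step can read or replace a single layer (for example, the text of a title layer) without touching the rest of the image.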

After an image is structured, image creation becomes a process of data matching and sorting based on human-machine collaboration. We have accumulated a large amount of design data. Compared with the previous unstructured approach to design, image structuring allows a design to be applied accurately to every layer, element, and character, which helps us accumulate reusable data assets. We select the optimal image expression based on user preferences and product attributes. This is a process of data matching.

The matching process involves two types of data. One type of data is the designer-created design data which is oriented toward specific scenarios or product expressions. This type of data is called templates. The other type of data includes user attributes and user behaviors that have occurred when users browse product images, such as adding products to favorites, clicking products, and buying products.

The matching process is divided into two procedures. The first procedure is the parameter-based matching of product images and templates by defining design constraints. For example, a template can restrict the main color range of product images based on its background color, or restrict the main shape of product images based on its layout. Then, we use image detection and recognition algorithms to extract the image features of product entities online and match the image features with the template features that are computed online.
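The constraint-based procedure above can be sketched as a simple filter. This is an illustration under stated assumptions, not the production matcher: the `Template` and `ProductImage` types, the choice of hue as the color constraint, and the aspect ratio as the shape constraint are all hypothetical.

```python
import colorsys
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Template:
    name: str
    hue_range: Tuple[float, float]     # allowed main hue of the product, in degrees
    aspect_range: Tuple[float, float]  # allowed product width / height


@dataclass
class ProductImage:
    main_rgb: Tuple[int, int, int]     # dominant color, assumed already extracted
    width: int
    height: int


def hue_degrees(rgb: Tuple[int, int, int]) -> float:
    """Convert an RGB color to its hue in degrees, in [0, 360)."""
    r, g, b = (c / 255.0 for c in rgb)
    hue, _, _ = colorsys.rgb_to_hsv(r, g, b)
    return hue * 360.0


def in_hue_range(hue: float, allowed: Tuple[float, float]) -> bool:
    """Check a hue against a range that may wrap around 360 degrees."""
    low, high = allowed
    if low <= high:
        return low <= hue <= high
    return hue >= low or hue <= high


def matching_templates(product: ProductImage,
                       templates: List[Template]) -> List[str]:
    """Return the names of templates whose constraints the product satisfies."""
    hue = hue_degrees(product.main_rgb)
    aspect = product.width / product.height
    return [
        t.name for t in templates
        if in_hue_range(hue, t.hue_range)
        and t.aspect_range[0] <= aspect <= t.aspect_range[1]
    ]
```

In practice the product features would come from detection and recognition models rather than being passed in by hand. The point of the sketch is only that, once both sides are structured, matching reduces to checking parameters against declared constraints.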

The second procedure is matching user features with image features. During modeling, we divide the data into three feature groups: the user feature group, the product feature group, and the image feature group. We embed each group and pair every possible combination of two groups for predictive modeling, because product features are more closely related to user features in the e-commerce scenario. Paired modeling lets us weight the prediction results in a targeted way. If all three groups of feature vectors were modeled together, the relationship between images and user behaviors could not be learned effectively.
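One way to picture paired modeling is a score built from one interaction term per pair of feature groups, each with its own weight. The sketch below assumes dot products between pre-computed embeddings and a logistic output. The source does not specify the model, so the function name, the weighting scheme, and the embeddings are all hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def dot(a: List[float], b: List[float]) -> float:
    """Dot product of two equal-length embedding vectors."""
    return sum(x * y for x, y in zip(a, b))


def pairwise_score(groups: Dict[str, List[float]],
                   pair_weights: Dict[Tuple[str, str], float],
                   bias: float = 0.0) -> float:
    """Predict a behavior probability from per-pair interactions.

    Every pair of feature groups contributes its own weighted term, so
    a weaker pair (such as image-user) is not drowned out by a stronger
    one (such as product-user). Pair keys are in sorted name order.
    """
    logit = bias
    for left, right in combinations(sorted(groups), 2):
        logit += pair_weights[(left, right)] * dot(groups[left], groups[right])
    return 1.0 / (1.0 + math.exp(-logit))
```

With the three groups named `image`, `product`, and `user`, the pair keys are `("image", "product")`, `("image", "user")`, and `("product", "user")`. Raising the weight on `("image", "user")` is the kind of targeted weighting that a single joint model over all three groups would not expose.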

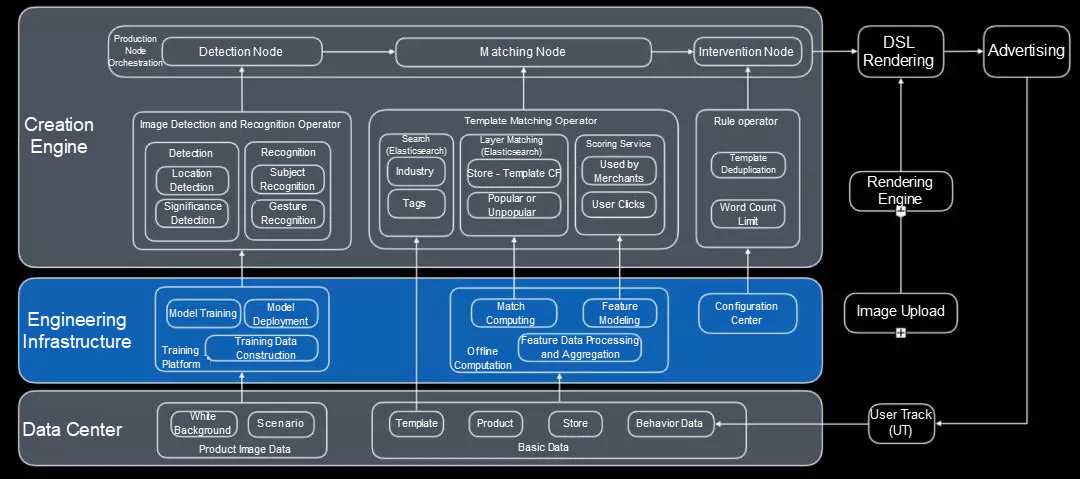

We define a production pipeline to separate the production process from the production capacity. This allows us to deal with complex businesses and provide a fast response. We provide production process orchestration capabilities to meet complex business requirements and provide a pluggable production operator model to improve the response speed.

Images are designed and produced through a series of systematic processes. A workflow engine is used to orchestrate and manage production nodes, allowing business teams to flexibly define and assemble production lines as needed. This satisfies production requirements in various scenarios.
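As a rough illustration of orchestration, the sketch below assembles a production line from named nodes and runs them in dependency order. It assumes each node is a plain function from a state dictionary to a new state dictionary. The `ProductionLine` class and the node names used with it are hypothetical and are not the actual workflow engine.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Iterable, List

Node = Callable[[dict], dict]


class ProductionLine:
    """A tiny stand-in for a workflow engine that orders and runs nodes."""

    def __init__(self) -> None:
        self._nodes: Dict[str, Node] = {}
        self._deps: Dict[str, List[str]] = {}

    def add_node(self, name: str, run: Node,
                 after: Iterable[str] = ()) -> "ProductionLine":
        """Register a node and the nodes that must finish before it."""
        self._nodes[name] = run
        self._deps[name] = list(after)
        return self

    def run(self, job: dict) -> dict:
        """Run every node once, predecessors first, threading the state."""
        state = dict(job)
        order = list(TopologicalSorter(self._deps).static_order())
        for name in order:
            state = self._nodes[name](state)
        state["trace"] = order  # record the execution order for inspection
        return state
```

A business team could then define a line declaratively, for example `ProductionLine().add_node("segment", ...).add_node("render", ..., after=["segment"])`, and swap or reorder nodes without changing the engine.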

Each operator defines a unified input, a unified output, and the context it requires, and computes on the agreed input to achieve the desired effect. Image operators are used for image segmentation, subject recognition, optical character recognition (OCR), and saliency detection.
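A pluggable operator model along these lines might be sketched as follows. The `Operator` protocol, the input and output types, and the `FakeOcr` operator are assumptions made for illustration. The stub does not call any real OCR library.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Protocol, Tuple


@dataclass
class OperatorInput:
    image_id: str
    context: Dict[str, Any] = field(default_factory=dict)


@dataclass
class OperatorOutput:
    image_id: str
    result: Dict[str, Any]


class Operator(Protocol):
    """The contract every operator is assumed to follow."""
    name: str
    required_context: Tuple[str, ...]

    def compute(self, data: OperatorInput) -> OperatorOutput: ...


def run_operator(op: Operator, data: OperatorInput) -> OperatorOutput:
    """Check the declared context before delegating to the operator."""
    missing = [key for key in op.required_context if key not in data.context]
    if missing:
        raise KeyError(f"{op.name} is missing context keys: {missing}")
    return op.compute(data)


class FakeOcr:
    """Hypothetical OCR operator; a real one would call a recognition model."""
    name = "ocr"
    required_context = ("language",)

    def compute(self, data: OperatorInput) -> OperatorOutput:
        # Placeholder result that only echoes the requested language.
        return OperatorOutput(data.image_id,
                              {"text": "", "language": data.context["language"]})
```

Because callers depend only on the shared contract, a new capability can be plugged in by adding another class with the same three members, which is what keeps the response to new business requirements fast.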

The engine is also open to second-party capabilities in a user-friendly manner and supports flexible business integration. Currently, we manage 10 core scenarios, 33 production nodes, and 47 operator capabilities. By orchestrating and combining these into a scenario-based matrix, we created one billion images.

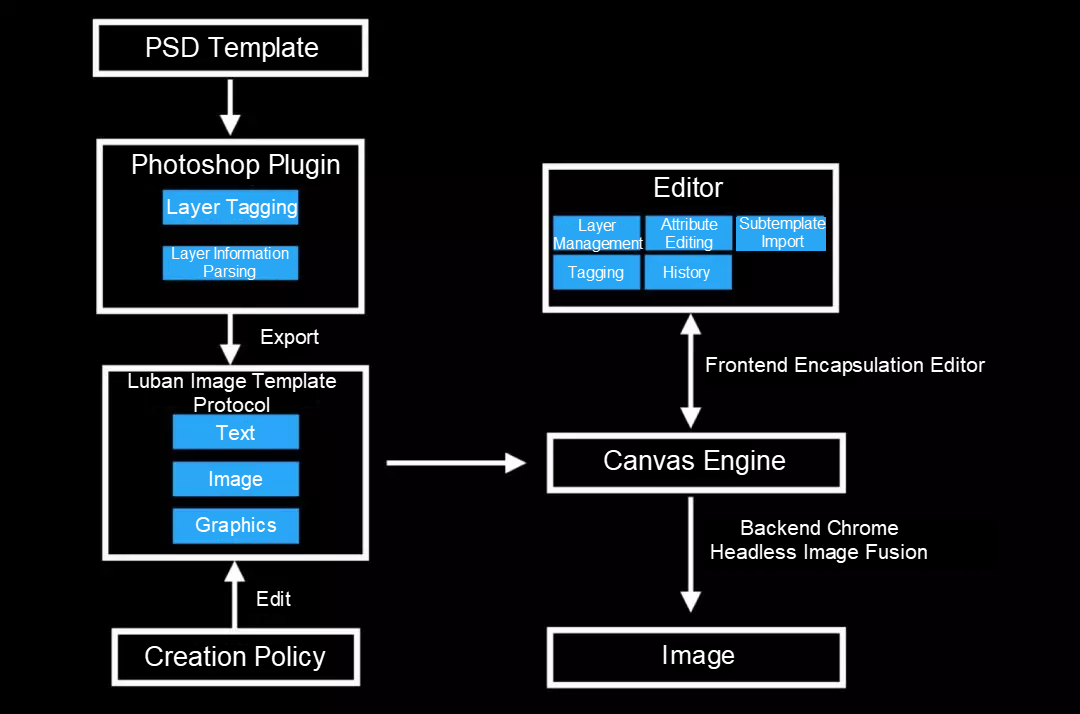

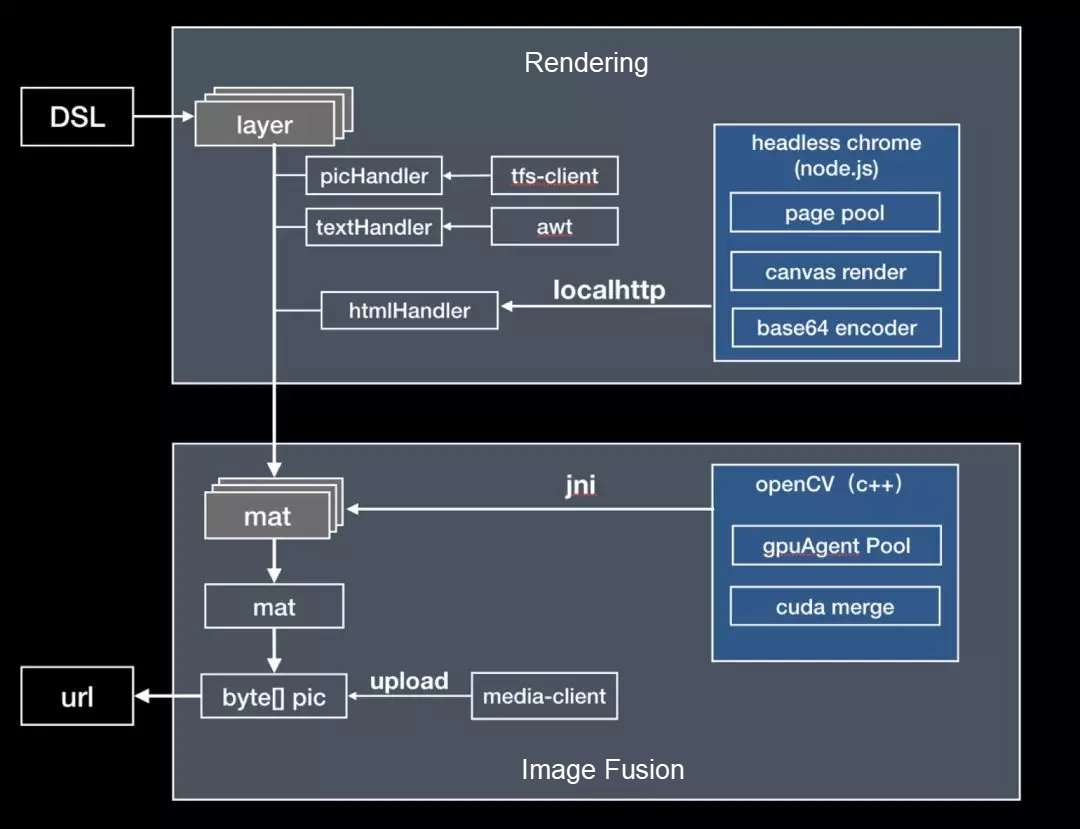

From the macro perspective, production procedures are implemented based on a production architecture. From the micro perspective, rendering is a production technique. Our primary goal is to ensure the same rendering effect at the server end and the business end under the same domain-specific language (DSL) protocol. We need to support direct image rendering at the server end and provide a WYSIWYG secondary editing capability at the business end. To meet these requirements, we developed a rendering solution that is homogeneous across the server and business ends. The solution provides visual image editing capabilities at the business end through UI encapsulation. It also enables image production on the cloud by loading the canvas engine through the Puppeteer headless browser.

Rendering is intended to faithfully reproduce the visual design. This is especially true of text rendering. It is difficult to render rich text effects at the business end due to font library installation problems, while the server end lacks standard protocol definitions for text effects. With homogeneous rendering, we combine the business-end protocol with the server-end font library for flexible visual restoration.

For the homepage banners on Taobao, a template that assigns a separate style to each individual character improves the click rate by an average of 13% in an A/B bucket test compared with common templates.

Any performance improvement is useless in the production of one billion images if high-performance engineering is not guaranteed. During the Double 11 Shopping Festival, Luban achieved an average response time (RT) for image fusion of less than 5 ms, and the average RT from DSL resolution to storage in Object Storage Service (OSS) in the uplink was less than 200 ms. The overall system throughput experienced a year-on-year increase of 50% without adding servers. The engines at the server end are divided into two types:

The rendering engine converts structured layer data into independent image data streams. The handler converts between different types of layers and implements parallel rendering. The image fusion engine combines the rendered layer data from multiple images through encoding and compression and uploads the resulting image.
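The split between the two engines can be sketched in a few lines: layers are rasterized independently (and therefore in parallel), and a separate fusion step composites the results from bottom to top. This is a toy model under simplifying assumptions. Layers are solid-color rectangles, the dictionary keys are hypothetical, and encoding, compression, and upload are left out.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Pixel = Tuple[int, int, int, int]  # RGBA, each channel 0-255
Raster = List[List[Pixel]]


def render_layer(layer: dict, width: int, height: int) -> Raster:
    """Rasterize one rectangle layer onto its own transparent canvas."""
    x0, y0, w, h = layer["box"]
    clear: Pixel = (0, 0, 0, 0)
    return [[layer["rgba"] if x0 <= x < x0 + w and y0 <= y < y0 + h else clear
             for x in range(width)]
            for y in range(height)]


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Standard 'over' alpha compositing of one pixel onto another."""
    ta, ba = top[3] / 255.0, bottom[3] / 255.0
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0, 0, 0, 0)
    rgb = tuple(round((t * ta + b * ba * (1.0 - ta)) / out_a)
                for t, b in zip(top[:3], bottom[:3]))
    return rgb + (round(out_a * 255),)


def fuse(rasters: List[Raster]) -> Raster:
    """Composite rendered layers in order, first raster at the bottom."""
    canvas = rasters[0]
    for raster in rasters[1:]:
        canvas = [[over(t, b) for t, b in zip(top_row, bottom_row)]
                  for top_row, bottom_row in zip(raster, canvas)]
    return canvas


def produce(layers: List[dict], width: int, height: int) -> Raster:
    """Render all layers concurrently, then fuse them into one image."""
    with ThreadPoolExecutor() as pool:
        rasters = list(pool.map(
            lambda layer: render_layer(layer, width, height), layers))
    return fuse(rasters)
```

Threads are used here only to show the structure. Pure-Python rasterization in CPython would not actually speed up this way, and a real engine would rely on native rendering code. The design point is that rendering has no cross-layer dependencies, so only the fusion step needs the layers in order.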

Performance optimization is divided into the following steps:

As an important carrier of product information, images allow merchants to express their intentions and help consumers with decision making. Images are used in the search and recommendation processes in public domains and in-store details in private domains. Technology and data enable merchants to continuously optimize image production so that structured images are better understood by machines and distributed more efficiently. This helps merchants strengthen their business operations.

Multidimensional image features help to describe target consumers in both general and detailed manners. This helps merchants better satisfy and even stimulate consumer interests.
