In this article, Liang Shimu, large-scale data processing expert at Alimama, will share typical application scenarios and advanced features of MaxCompute in Alimama's digital marketing solution.

By Liang Shimu, Large-Scale Data Processing Expert at Alimama

MaxCompute is a fast, fully hosted GB/TB/PB level data warehouse solution, which is widely used in Alibaba Group, including Alibaba's digital marketing platform, Alimama.

Liang Shimu (Zai Si), large-scale data processing expert from Alimama's basic platform sector, shares some typical application scenarios of MaxCompute in Alimama's digital marketing solution. First, we will look at Alimama's ad data stream and explain how to use MaxCompute to deal with advertising issues. Then, we will see how to use MaxCompute based on some typical application scenarios of MaxCompute in Alimama. Finally, we will explore MaxCompute's advanced auxiliary capabilities and its capabilities of optimizing computing and storage.

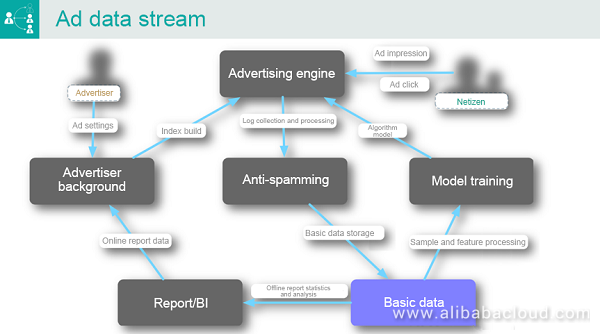

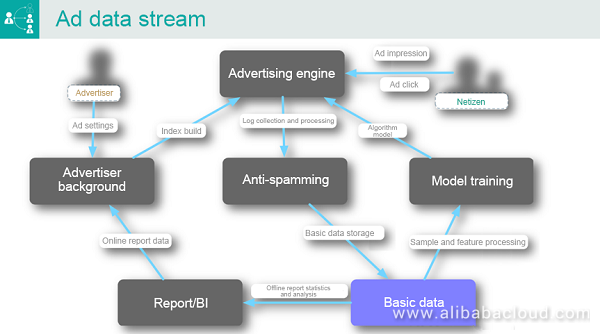

Alimama's Ad Data Stream

The following figure is a simplified version of the ad data stream. In keeping with today's topic of big data computing, I will introduce traditional ads, such as search ads and targeted ads, from the perspective of the entire ad data stream. As the figure shows, the two most important roles are the advertiser and the netizen.

Advertiser Background

In general, an advertiser needs to configure the advertising settings in the background before ad placement. For example, the advertiser can deliver ads targeted specifically to male customers from Beijing, or to customers who searched for dresses on specific websites.

Advertising Engine

After completing the settings, the advertiser relies on the most important service module: the advertising engine. This service module allows advertisers to deliver ads to netizens who search for related content on specific websites, such as Baidu or Taobao. For example, when you search for dresses on Taobao, the search results include organic search results and ads. These ads are delivered to you through the advertising engine.

Ad impressions and ad clicks are the events most closely tied to netizens. When netizens search for items on a platform such as Taobao, the advertising engine determines the ads to be delivered based on two key modules: index and algorithm.

- Index build. When a user searches for a keyword, for example, dress, on Taobao, tens of thousands of products match the search condition. It is impossible to deliver ads for all products to the user. In this case, the first thing that needs to be done is to query the number of products that have the keyword in the ad library. The data relies on advertisers' settings in the background when they determine the keywords for their products.

- Algorithm model. Assume that there are 10,000 candidate ads. The corresponding scoring service scores all ads based on a series of features and some models trained in the background. The ads are then sorted and displayed to users based on specific rules.
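The two modules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the ad library, the keyword sets, the bid prices, the predicted click-through rates, and the ranking rule (predicted CTR times bid) are all hypothetical and are not taken from Alimama's engine.

```python
from collections import defaultdict

# Hypothetical ad library: ad_id -> (keywords chosen by the advertiser, bid price)
AD_LIBRARY = {
    "ad1": ({"dress", "summer"}, 1.2),
    "ad2": ({"dress"}, 0.8),
    "ad3": ({"shoes"}, 2.0),
    "ad4": ({"dress", "silk"}, 1.5),
}

def build_index(ad_library):
    """Index build: map every keyword to the set of ads that bid on it."""
    index = defaultdict(set)
    for ad_id, (keywords, _bid) in ad_library.items():
        for kw in keywords:
            index[kw].add(ad_id)
    return index

def score(ad_id, predicted_ctr, ad_library):
    """Toy scoring rule (an assumption): predicted CTR times bid price."""
    _keywords, bid = ad_library[ad_id]
    return predicted_ctr[ad_id] * bid

def select_ads(query, index, predicted_ctr, ad_library, top_k=2):
    """Look up candidates in the index, score them, and keep the top_k."""
    candidates = index.get(query, set())
    ranked = sorted(
        candidates,
        key=lambda a: (-score(a, predicted_ctr, ad_library), a),
    )
    return ranked[:top_k]

INDEX = build_index(AD_LIBRARY)
# Hypothetical model output; in the article, scores come from models trained offline.
PREDICTED_CTR = {"ad1": 0.05, "ad2": 0.10, "ad3": 0.02, "ad4": 0.03}

print(select_ads("dress", INDEX, PREDICTED_CTR, AD_LIBRARY))
```

The index narrows tens of thousands of products down to the candidates that match the keyword, and the scoring step decides which of those candidates are actually shown.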

Anti-Spamming

When netizens view ads, related logs will be generated in the system background. In this scenario, the anti-spamming module is used to detect operations that are executed through APIs or tools for malicious competition. After finding such operations, the anti-spamming module will determine related penalties, for example, fee deduction.

Basic Data

Data filtered by the anti-spamming module is regarded as trusted and will be saved on the basic data platform, specifically, MaxCompute.

Report/BI

Filtered data is mainly used for report and BI analysis or model training. In the initial filtering phase, the advertising engine obtains a large number of candidate ads. The model training module picks out the ads with the highest estimated scores from the candidate ads and delivers them to users. It runs the stored original data through various models, ranging from traditional logistic regression to the latest deep learning models. The resulting model data is then pushed to the advertising engine for online ad scoring.

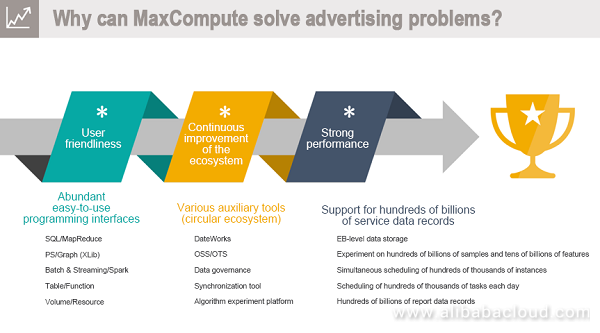

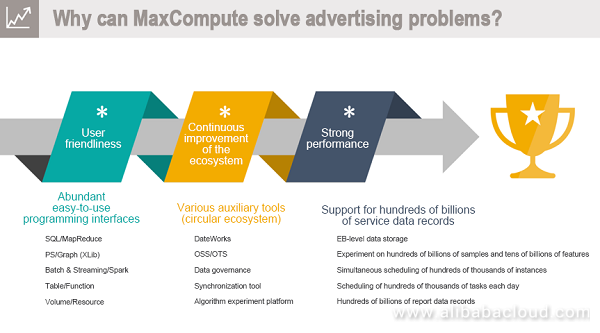

Using MaxCompute to Address Advertising Problems

User Friendliness

MaxCompute supports traditional SQL statements. When SQL statements and UDFs become inapplicable, MaxCompute allows users to write MapReduce jobs to customize the original data. In addition, MaxCompute enables users to perform graph computing, for example, graph learning. It supports both batch and streaming processing methods, for example, Spark Streaming.

One thing that I like very much about MaxCompute is that it exposes data as tables instead of HDFS file paths, which are the norm in the Hadoop ecosystem. Compared with bare files, tables have schemas, which makes them easier to understand. MaxCompute enables users to operate these tables through APIs and provides related functions, for example, UDFs and UDTFs. At the same time, MaxCompute supports semi-structured data and Volume. It also allows users to operate related resources. All these functions facilitate development.

Continuous Improvement of the Ecosystem

MaxCompute is both a platform and an ecosystem. It can be used in the entire process from development to O&M and management. After data is generated, you can use the synchronization tool to load it to MaxCompute. Then, you can use DataWorks to run simple analysis and processing on the synchronized data. Complicated data processing can be implemented on the algorithm experiment platform. Currently, MaxCompute also supports some functions of TensorFlow.

Strong Performance

As a digital marketing solutions provider, Alimama generates a large amount of data. Currently, MaxCompute can store EB-level data and conduct experiments and training on hundreds of billions of samples and tens of billions of features in specific scenarios. MaxCompute can invoke hundreds of thousands of instances simultaneously by executing a MapReduce or SQL job.

Typical Application Scenarios

Next, let's see some typical application scenarios of MaxCompute in Alimama.

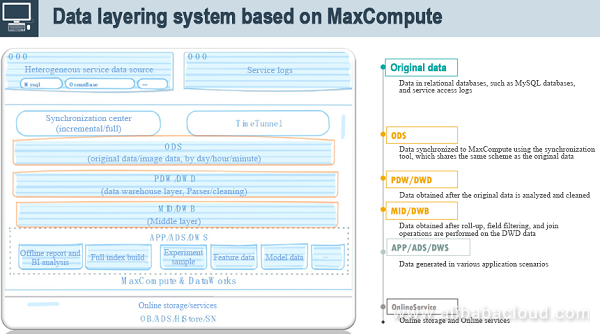

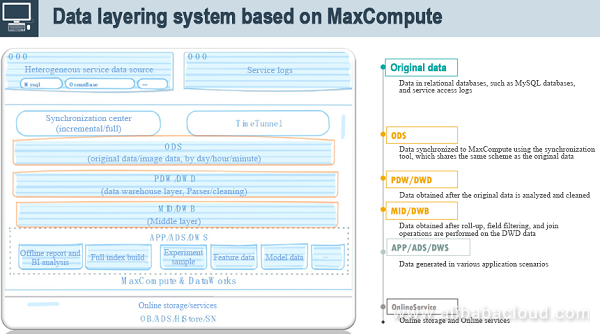

Data Layering

The preceding figure shows the six data layers on MaxCompute.

- Original data layer. The original data consists of data from service databases, for example, MySQL and HBase, and service access logs such as the ad impression and ad click logs. The data is stored on our servers.

- ODS layer. Data at this layer is synchronized to MaxCompute by using the synchronization tool and shares the same schema as the original data. When we need to process the original data offline (including streaming processing), we can use the synchronization center to perform full or incremental synchronization. At the same time, we can use TimeTunnel to collect logs on all servers. Data at this layer can be synchronized to offline platforms by day, by hour, or even by minute, in a real-time or quasi-real-time manner.

- PDW/DWD layer. The formats of synchronized data may vary. For example, the logs collected online comply with certain protocols. A series of operations must be performed before we can use the logs. The first thing that needs to be done is data cleaning. Anti-spamming is one of the data cleaning methods. Then, we need to split data into fields that are easy to understand.

- MID/DWB layer. This is the middle layer. When there is too much data (for example, Alimama generates billions of service data records every day), the data cannot be processed or analyzed directly. This is when the middle layer is used. We can perform roll-up, field filtering, and join operations on the DWD data. In addition, most of the subsequent service applications are built on the middle layer.

- App/ADS/DWS layer. This layer stores data generated in specific scenarios, such as offline report and BI analysis, full index builds, and model training. I will introduce how to use MaxCompute in these three scenarios later.

- The last layer is used to provide online services and store online data.
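The path from the ODS layer to the middle layer can be illustrated with a small Python sketch. The log format, field names, and spam flag below are hypothetical; on MaxCompute these steps would normally be SQL or MapReduce jobs over tables rather than in-memory lists.

```python
from collections import defaultdict

# ODS layer: raw log lines kept in the same shape as the source (hypothetical format).
ods_click_log = [
    "2024-01-01T10:00:00|u1|ad1|0.50|ok",
    "2024-01-01T10:00:01|u2|ad1|0.50|spam",
    "2024-01-01T11:30:00|u3|ad2|0.80|ok",
    "2024-01-02T09:15:00|u1|ad1|0.50|ok",
]

def to_dwd(lines):
    """DWD layer: clean the data (drop spam) and split each line into named fields."""
    rows = []
    for line in lines:
        ts, user_id, ad_id, cost, flag = line.split("|")
        if flag != "ok":
            continue
        rows.append(
            {"day": ts[:10], "user_id": user_id, "ad_id": ad_id, "cost": float(cost)}
        )
    return rows

def to_mid(rows):
    """Middle layer: roll detail rows up to (day, ad_id) with click count and cost."""
    agg = defaultdict(lambda: {"clicks": 0, "cost": 0.0})
    for r in rows:
        key = (r["day"], r["ad_id"])
        agg[key]["clicks"] += 1
        agg[key]["cost"] += r["cost"]
    return dict(agg)

dwd = to_dwd(ods_click_log)
mid = to_mid(dwd)
for key in sorted(mid):
    print(key, mid[key])
```

Downstream reports and model training then read the much smaller rolled-up data instead of scanning every raw log line.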

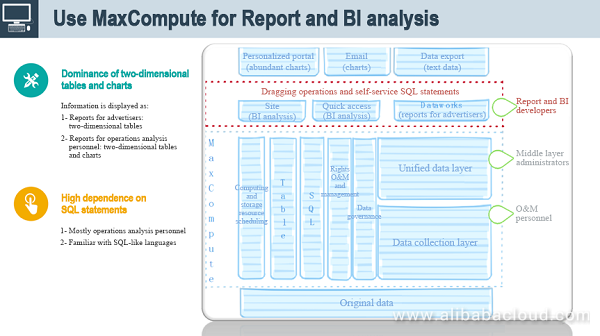

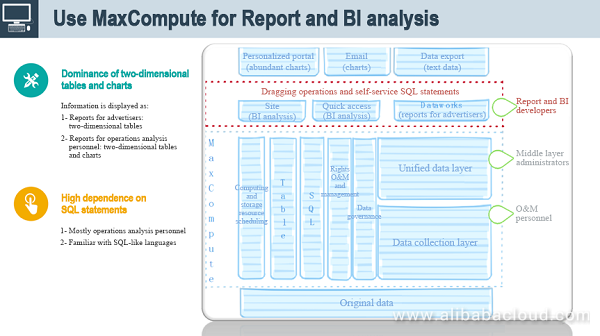

Report and BI Analysis

Report and BI analysis has the following features:

- Dominance of two-dimensional tables and charts. In most cases, information is displayed to advertisers in two-dimensional tables, so that the advertisers can filter and sort the data records to see the results. Operations personnel, however, also need to analyze charts in detail. We provide the data export function for advertisers, so that they can push data online and view the advertising effects in the background. We also allow operations personnel to internally send the tables and charts as attachments in emails.

- High dependence on SQL statements. All the functions that we have mentioned are highly dependent on SQL statements. In most cases, you do not need to write Java programs or UDFs for development. Instead, you only need to write some SQL statements for report and BI analysis.

The following figure shows how MaxCompute is used for report and BI analysis. A user only needs to enter some simple SQL statements and perform some simple preprocessing operations before the user can view the required data.
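As a rough illustration of how little code such a report needs, the sketch below runs one aggregate query from Python. It uses the standard-library `sqlite3` module only as a local stand-in for MaxCompute SQL, and the table name `mid_ad_daily`, its columns, and the sample rows are hypothetical.

```python
import sqlite3

# Local stand-in for a middle-layer table; all names and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE mid_ad_daily ("
    "day TEXT, advertiser_id TEXT, impressions INTEGER, clicks INTEGER, cost REAL)"
)
conn.executemany(
    "INSERT INTO mid_ad_daily VALUES (?, ?, ?, ?, ?)",
    [
        ("2024-01-01", "a1", 1000, 50, 25.0),
        ("2024-01-02", "a1", 1000, 30, 15.0),
        ("2024-01-01", "a2", 500, 10, 8.0),
        ("2024-01-03", "a2", 500, 40, 30.0),  # outside the report window
    ],
)

# A typical two-dimensional report: one row per advertiser, sortable by cost.
REPORT_SQL = """
SELECT advertiser_id,
       SUM(impressions) AS impressions,
       SUM(clicks)      AS clicks,
       ROUND(1.0 * SUM(clicks) / SUM(impressions), 4) AS ctr,
       ROUND(SUM(cost), 2) AS cost
FROM mid_ad_daily
WHERE day BETWEEN '2024-01-01' AND '2024-01-02'
GROUP BY advertiser_id
ORDER BY cost DESC
"""

report = conn.execute(REPORT_SQL).fetchall()
for row in report:
    print(row)
```

The whole report is a single `SELECT` with a filter, a roll-up, and a sort, which is why this scenario rarely needs anything beyond SQL.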

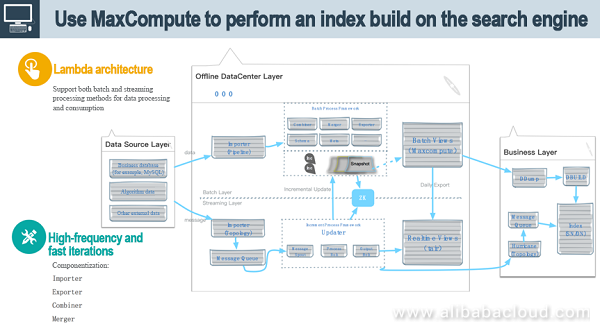

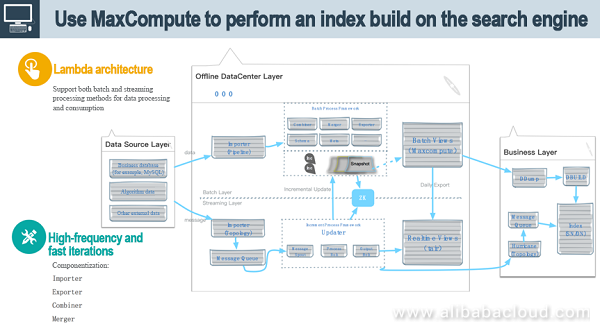

Index Build

Advertisers can change advertising settings in the background. Once the number of data records reaches millions, tens of millions, or even hundreds of millions, dedicated engine services need to be provided for online queries. The following figure shows how Alimama's ad search engine uses MaxCompute for index builds based on the Lambda architecture. The Lambda architecture supports offline data and online streams. It can use both batch and streaming processing methods for data processing and consumption.

During business processing, the system needs to deal with data from various heterogeneous data sources, such as data in the business database (MySQL), algorithm data, and other external data, as shown at the data source layer on the left of the figure. Eventually, the system accumulates the data into the index of the engine at the business layer to support various queries.

During the transfer from the data source layer to the business layer, the data passes through the offline Data Center layer, which is divided into an upper part (the batch layer) and a lower part (the streaming layer). Data sources can be accessed in both full and incremental modes. In full mode, the system pulls a table directly from MaxCompute and then runs an offline index build. Incremental mode covers cases such as an advertiser changing a price: the change needs to be reflected in the index quickly. Otherwise, an old version of the information stays in the index, which may result in a complaint from the advertiser. Therefore, an incremental stream processing mode is required to reflect the advertiser's changes in the index in real time.

- Offline part. In this part, we provide a service called Importer, which is similar to a synchronization tool. This service is implemented based on MaxCompute, and most of its functions run on MaxCompute. Because componentization is implemented at the batch layer, this layer requires a series of operations similar to data combining and merging, and also involves source data schema management and multi-version data management. Offline data is stored in ODPS (the former name of MaxCompute) and can be checked through batch views of MaxCompute.

- Online part. For example, when the system receives incremental data from MySQL, it parses the data and enqueues it into the message queue. The data is then processed on a platform such as Storm, Spark, or MaxCompute's Streaming, and components similar to those used in offline processing are used to run an index build. Then, you can check the latest data through real-time views, which are currently implemented by Tair. In addition, real-time data is merged into the offline data at regular intervals, creating multiple versions of data. The merge operation serves two purposes. On the one hand, the merged data can be directly pumped into the online data in batches. This is especially useful when the online data encounters an error and cannot quickly recover through the incremental process. On the other hand, when performing an offline index build, we need to import the offline data in full mode on a regular basis to prevent the index from becoming bloated. To ensure real-time data updates, an incremental stream is required to inject data into the full data during this period. In addition, to avoid inefficiencies caused by service downtime, we preserve multiple versions of incremental data.
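The interplay between the batch view, the real-time view, and the periodic merge can be sketched as follows. This is a toy, in-memory model under stated assumptions: the class name `LambdaIndex` and its methods are hypothetical, and the real system uses MaxCompute tables, a message queue, and Tair rather than Python dictionaries.

```python
class LambdaIndex:
    """Toy serving view: a full batch snapshot plus an incremental overlay."""

    def __init__(self):
        self.batch_view = {}     # built offline from a full table
        self.realtime_view = {}  # updated from the incremental stream
        self.batch_version = 0   # counts full builds (multi-version data)

    def load_full(self, snapshot):
        """Batch layer: replace the batch view with a new full build."""
        self.batch_view = dict(snapshot)
        self.batch_version += 1

    def apply_increment(self, ad_id, fields):
        """Streaming layer: record a change, for example a new bid price."""
        self.realtime_view.setdefault(ad_id, {}).update(fields)

    def query(self, ad_id):
        """Serve the batch record overlaid with any newer real-time fields."""
        if ad_id not in self.batch_view and ad_id not in self.realtime_view:
            return None
        record = dict(self.batch_view.get(ad_id, {}))
        record.update(self.realtime_view.get(ad_id, {}))
        return record

    def merge(self):
        """Periodic merge: fold real-time changes into a new full snapshot."""
        all_ids = set(self.batch_view) | set(self.realtime_view)
        merged = {ad_id: self.query(ad_id) for ad_id in all_ids}
        self.realtime_view = {}
        self.load_full(merged)


index = LambdaIndex()
index.load_full({"ad1": {"keyword": "dress", "bid": 1.0}})
index.apply_increment("ad1", {"bid": 1.5})   # advertiser changes the price
print(index.query("ad1"))                     # the change is visible immediately
index.merge()                                 # later folded into the full data
print(index.batch_view["ad1"], index.batch_version)
```

Queries always see the newest price through the overlay, while the merge keeps the incremental part small and produces a fresh full version that can be used for recovery.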

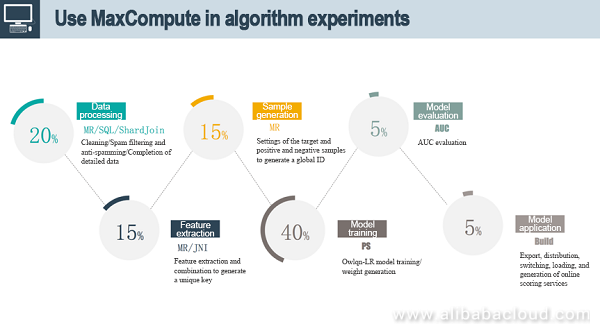

Algorithm Experiments

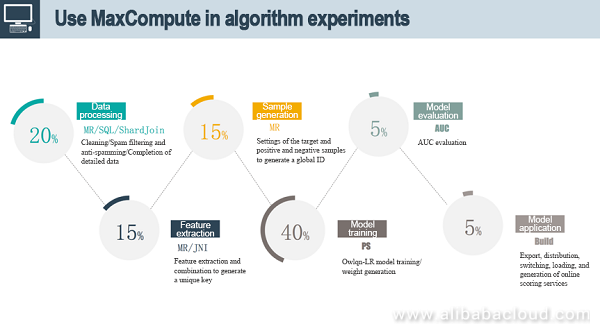

Next, let's take a look at how MaxCompute is used in algorithm experiments. We use the process shown in the following figure not only in algorithm experiments, but also when we update the performance model online every day. The input of the entire process is online logs that record, for example, which users browsed and clicked on which ads. The process output is online scores of features extracted from users' browsing and click-through behavior. The entire process can be abstracted into six steps:

- Data processing. In addition to the aforementioned data cleaning, spam filtering, and anti-spamming, the simplest data processing operation is to combine multiple pieces of data into one. To achieve this, ShardJoin is used in addition to MapReduce and SQL. ShardJoin was jointly developed by Alimama and MaxCompute to address the inefficiency of joining offline data when both sides of the join are very large. The principle of ShardJoin is simple: it splits the right table into many small blocks and uses an independent RPC service for queries. Data processing accounts for approximately 20% of the total time of the entire process.

- Feature extraction. The output of the first step is an entire unprocessed PV table containing a series of attribute fields. Features are then extracted based on this PV table. Typically, a MapReduce job is run and, most importantly, JNI operations are performed. In doing so, features can be extracted and combined to generate unique keys. For example, UserId and Price can be combined to compute a new feature. Initially, there may be only hundreds of features. However, after the cross join, Cartesian product, and other similar operations are performed, the number of features may reach tens of billions. This is where the capability of MaxCompute to compute hundreds of billions of samples and tens of billions of features, as mentioned before, proves its value. This capability is of vital importance to the scheduling and even the entire computing framework. Feature extraction accounts for approximately 15% of the total time of the entire process.

- Sample generation. After a sample is generated, we generally need to set the target as well as positive and negative samples. A global ID is generated for each feature. The samples are then serialized. Serialization is required because each computing framework has formatting requirements for input samples. In other words, serialization is the process of converting the format of input samples. Sample generation accounts for approximately 15% of the total time of the entire process.

- Model training. The input of model training is the hundreds of billions of samples generated in the previous step, and the output is the weight of each feature. For example, we can get the weight of the feature "male" and the weight of the feature corresponding to the purchasing power of one star. Model training accounts for approximately 40% of the total time of the entire process. This time depends on the model complexity. For example, running simple logistic regression takes a different amount of time than running complex deep learning.

- Model evaluation. Once a model is trained, we need to use AUC to evaluate the effect of the model. During sample generation, samples are generally classified into training samples and test samples. The test samples are used to evaluate the trained model. Model evaluation accounts for approximately 5% of the total time of the entire process.

- Model application. If the evaluation of the trained model meets certain criteria, the model can be applied online. The model application process is complicated, involving data export, data distribution, service loading, traffic switching, and generation of online scoring services. Model application accounts for approximately 5% of the total time of the entire process.
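Three of the steps above (feature combination, global feature IDs with serialization, and AUC evaluation) are small enough to sketch in Python. Everything here is an illustrative assumption: the field names, the `name=value` key format, the libsvm-like sample layout, and the pairwise AUC computation are not taken from Alimama's pipeline, which runs as MapReduce jobs at a vastly larger scale.

```python
from itertools import combinations

def cross_features(row, fields):
    """Return single features plus pairwise crosses as 'name=value' keys."""
    singles = [f"{name}={row[name]}" for name in fields]
    crosses = [f"{a}&{b}" for a, b in combinations(singles, 2)]
    return singles + crosses

def assign_ids(feature_lists):
    """Give every distinct feature key a global integer ID, in first-seen order."""
    ids = {}
    for features in feature_lists:
        for key in features:
            ids.setdefault(key, len(ids))
    return ids

def serialize(label, features, ids):
    """Serialize one sample as 'label id:1 id:1 ...' (a libsvm-like layout)."""
    ordered = sorted(features, key=ids.get)
    return f"{label} " + " ".join(f"{ids[k]}:1" for k in ordered)

def auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical rows of the PV table produced by the data processing step.
pv_rows = [
    {"gender": "male", "price": "low", "click": 1},
    {"gender": "female", "price": "low", "click": 0},
]
fields = ["gender", "price"]
feats = [cross_features(r, fields) for r in pv_rows]
ids = assign_ids(feats)
samples = [serialize(r["click"], f, ids) for r, f in zip(pv_rows, feats)]
print(samples)
print(auc([1, 0, 1, 0], [0.9, 0.4, 0.35, 0.1]))
```

Even with two fields, crossing adds a new key per value pair, which hints at how a few hundred raw features can grow into billions once crosses over high-cardinality fields such as UserId are included.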

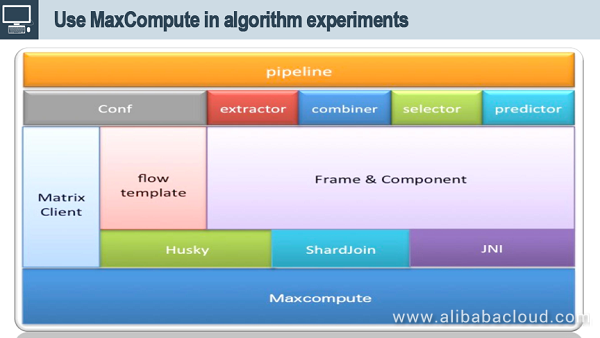

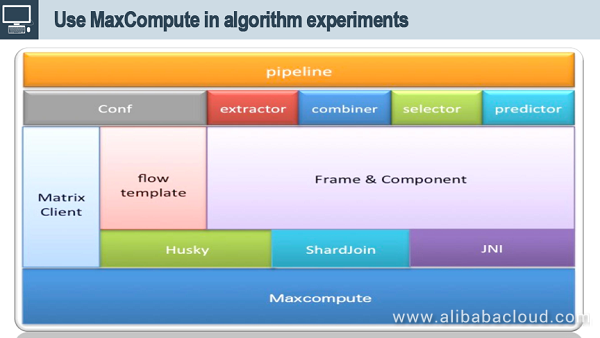

To support algorithm experiments, we build an algorithm experiment framework based on MaxCompute, as shown in the figure below.

The entire model training process does not require much code development. Generally, the code needs to be modified only in the following two scenarios: features need to be added, deleted, or modified in the traditional logistic regression process, or various neural network settings need to be modified during deep learning.

The entire process is highly consistent. We abstract this process into the Matrix solution. From the external perspective, the solution can be viewed as running a pipeline that concatenates a series of jobs. These jobs are ultimately run on MaxCompute. The solution provides the Matrix client externally.

In most cases, users only need to modify the configuration file. For example, users set the feature extraction method to determine which field in which row needs to be extracted from the schema of the original table. Users determine how the extracted features are combined. For example, the cross product operation can be applied to the first and second features to generate new features. Users can also determine feature selection methods, such as filtering out features that occur at low frequencies. In the framework, we componentize the above functions. Users only need to assemble the required functions like building blocks and configure each block accordingly, for example, by specifying the input table. Before a sample enters the model, the sample format is fixed. On this basis, we implement the scheduling framework Husky, which mainly implements pipeline management and maximizes parallel execution of jobs.
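The idea of a configuration-driven pipeline whose independent jobs run in parallel can be sketched as below. This is not the Matrix or Husky implementation; the job names, the dictionary-based configuration, and the stage-grouping function are hypothetical and only show how dependencies determine what can run at the same time.

```python
# Hypothetical pipeline configuration: job name -> names of the jobs it depends on.
PIPELINE = {
    "data_processing": [],
    "feature_extraction": ["data_processing"],
    "train_samples": ["feature_extraction"],
    "test_samples": ["feature_extraction"],
    "model_training": ["train_samples"],
    "model_evaluation": ["model_training", "test_samples"],
}

def parallel_stages(pipeline):
    """Group jobs into stages; all jobs in one stage have their dependencies
    satisfied by earlier stages and could be submitted at the same time."""
    remaining = {job: set(deps) for job, deps in pipeline.items()}
    stages = []
    while remaining:
        ready = sorted(job for job, deps in remaining.items() if not deps)
        if not ready:
            raise ValueError("cycle detected in pipeline configuration")
        stages.append(ready)
        for job in ready:
            del remaining[job]
        for deps in remaining.values():
            deps.difference_update(ready)
    return stages

for number, stage in enumerate(parallel_stages(PIPELINE), start=1):
    print(number, stage)
```

In this example the training and test samples land in the same stage, so a scheduler could generate them concurrently instead of one after the other.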

Advanced Features and Optimizations of MaxCompute

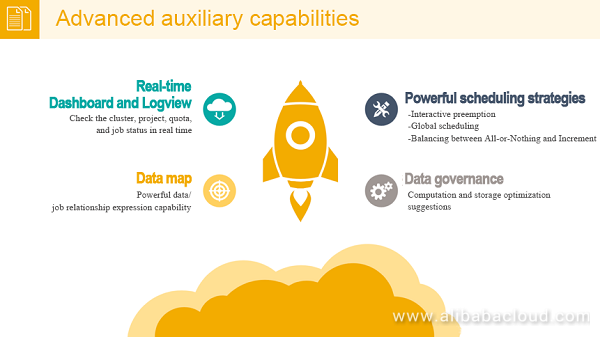

Advanced Auxiliary Capabilities

On MaxCompute, Alimama's resource usage, including the usage of computing and storage resources, once accounted for one-third of the total in the entire Group. Based on our own experience, I'll share some of the advanced features and optimizations of MaxCompute that you may use.

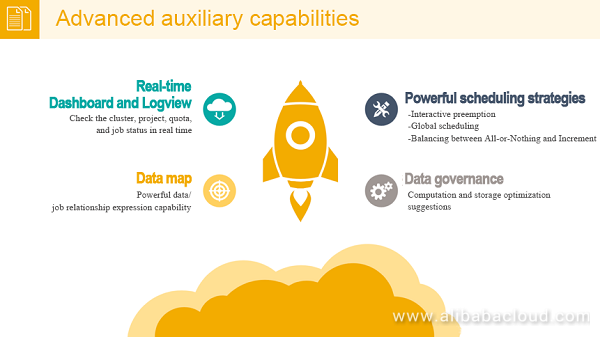

Real-time dashboard and Logview

With Logview, users no longer have to painfully retrieve logs, but can quickly view job status and find the cause of a problem. In addition, Logview offers a variety of real-time diagnostic functions. When a cluster encounters an exception, the real-time dashboard can help users analyze, from various dimensions, which project, quota, or job is running in the cluster. The real-time dashboard relies on a series of source data management in the background. Of course, MaxCompute provides more functions than just the real-time dashboard and Logview. However, it must be pointed out that we are highly dependent on these two functions for both individual and cluster management processes.

Powerful scheduling strategies

There are three major scheduling strategies: preemption, global scheduling, and resource allocation modes.

- First, interactive preemption. Traditionally, resources are preempted in a crude way. When a user submits a job, both Hadoop and MaxCompute decide, as part of quota allocation, whether to grant minQuota or maxQuota. Sharing and resource preemption must both be taken into account. If there is no resource preemption, a job will run for a long time. If you directly kill a job that has been running, the job may need to be run from the start again, resulting in inefficiency. Interactive preemption handles this problem better by enabling the various frameworks to reach an agreement. For example, before killing a job, the system sends a kill notification a certain time in advance and allows the job to continue running for the specified time. If the job has still not finished when that time elapses, the system kills the job.

- Second, global scheduling. The number of machines running on Alibaba Cloud has reached tens of thousands. When the jobs on a cluster run very slowly, the global scheduling strategy can assign jobs to a cluster that is more idle. This scheduling process is transparent to users, who only notice that the job's running speed changes.

- Third, balancing between All-or-Nothing and Increment, which are two different resource allocation modes. As a simple example, the All-or-Nothing mode is used for model training in graph computation, and the Increment mode is used for SQL execution. The resource allocation modes of the two types of computation are very different. For SQL, if a thousand instances are required to run the Mapper, the system does not have to wait until all one thousand instances are ready. Instead, the system can start running the Mapper as soon as one instance is ready, because no information is exchanged between the instances. In contrast, model training requires multiple iterations to be executed one by one. A new round of iteration can take place only after the previous round is completed. Therefore, all resources must be ready before a new round of iteration can be executed. Taking the two modes into consideration, Alibaba Cloud's schedulers do a lot of work in the background to balance resource allocation between the two modes.
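The difference between the two allocation modes, and the grace period used by interactive preemption, can be expressed as two small decision functions. This is a simplified sketch under stated assumptions: the function names, the mode strings, and the slot counts are hypothetical and do not reflect the actual scheduler interface.

```python
def schedule(job_mode, requested, free_slots):
    """Return how many instances can start right now.

    'incremental':    start as many instances as there are free slots.
    'all_or_nothing': start only when every requested instance fits.
    """
    if job_mode == "incremental":
        return min(requested, free_slots)
    if job_mode == "all_or_nothing":
        return requested if free_slots >= requested else 0
    raise ValueError(f"unknown mode: {job_mode}")

def preempt(remaining_seconds, grace_seconds):
    """Interactive preemption: the job is notified first and is killed only
    if it would still be running when the grace period ends."""
    return "finished" if remaining_seconds <= grace_seconds else "killed"

# A SQL job can begin with whatever is free; an iterative training job must wait.
print(schedule("incremental", requested=1000, free_slots=300))
print(schedule("all_or_nothing", requested=1000, free_slots=300))
print(preempt(remaining_seconds=20, grace_seconds=60))
```

Balancing the two modes is hard precisely because incremental jobs keep consuming slots as they free up, which can starve an all-or-nothing job that needs all of its slots at once.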

Data map

The data map helps users describe the relationships between data and jobs, facilitating users' subsequent business processing.

Data governance

The work of cluster or job managers is not over once job execution is complete. They need to further check whether the job execution result was satisfactory. Data governance can provide a lot of optimization suggestions at this point. For example, if certain data is not used by anyone after it is produced, users can determine whether the link that produces the data can be canceled. This type of data governance can greatly reduce resource overhead for both internal and external systems.

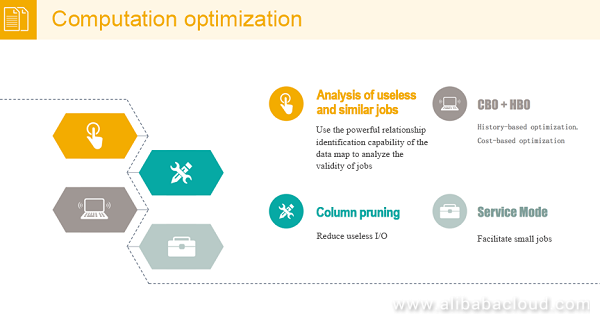

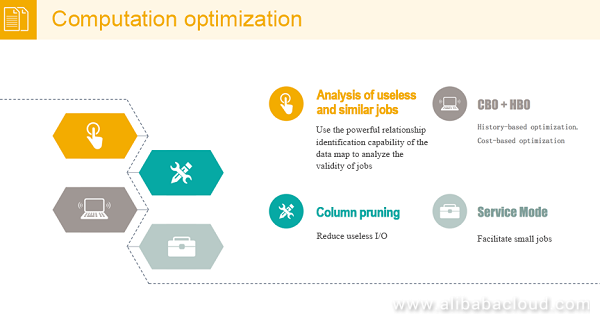

Computation Optimization

Next, we will share our experience with regard to the data governance introduced above. In terms of data governance computation optimization, we mainly use the following four functions of MaxCompute:

- Analysis of useless and similar jobs. This function is easy to understand. It helps users analyze which jobs are useless and which jobs are similar. To achieve this, we rely on the powerful relationship identification capability of the data map to analyze the validity of jobs.

- HBO + CBO. The HBO + CBO function addresses the resource optimization issue. During the execution of a computing job, the CPU and memory usage needs to be preset regardless of whether SQL or MapReduce is used. However, the preset usage is often inaccurate. Two methods are available for solving this problem. In one method, you run the job for a while and then compute the CPU and memory usage that each instance probably needs based on the job running status. This method is called history-based optimization (HBO). The other method is cost-based optimization (CBO). Because of the time limit, we will not discuss this method in more detail. If you are interested in it, we will organize a more in-depth sharing session later.

- Column pruning. This method eliminates the need to read all the fields in the entire table. For example, during execution of a Select * statement, only the fields that are actually needed according to SQL semantics (for example, the first five of ten fields) are loaded. This method significantly improves the overall job execution efficiency and disk I/O performance.

- Service mode. Traditionally, MapReduce job execution involves the shuffle process, during which data needs to be written onto disks on the Mapper and Reducer ends. The data-to-disk operation is time consuming. For some small or medium jobs that may take only two or three minutes to run, the data-to-disk operation is unnecessary. MaxCompute can predict the execution time of jobs. For small jobs, the service mode is used to reduce the job execution time.
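The idea behind history-based optimization can be illustrated with a small estimator that derives a memory request from past runs. This is only a sketch: the function name, the nearest-rank 95th percentile, the 20% headroom, and the 256 MB floor are illustrative assumptions, not MaxCompute's actual algorithm or defaults.

```python
def suggest_memory_mb(history_peak_mb, headroom_pct=20, floor_mb=256):
    """Suggest a per-instance memory request from observed peak usage.

    Takes the nearest-rank 95th percentile of past peaks and adds a headroom
    percentage. Integer arithmetic is used so the result is exact.
    """
    if not history_peak_mb:
        return floor_mb  # no history yet: fall back to a conservative floor
    ordered = sorted(history_peak_mb)
    n = len(ordered)
    rank = -(-95 * n // 100) - 1                      # ceil(0.95 * n) - 1
    needed = -(-ordered[rank] * (100 + headroom_pct) // 100)  # ceiling division
    return max(floor_mb, needed)

# Hypothetical peak memory (in MB) of one instance over four earlier runs.
print(suggest_memory_mb([800, 900, 1000, 1100]))
print(suggest_memory_mb([]))
```

A user who had preset, say, 4096 MB per instance for this job would be over-reserving by a wide margin, and that gap is the waste HBO aims to recover.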

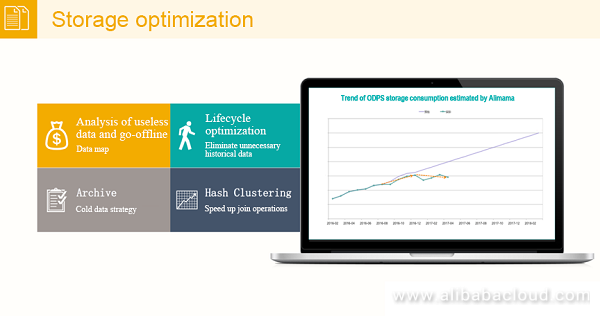

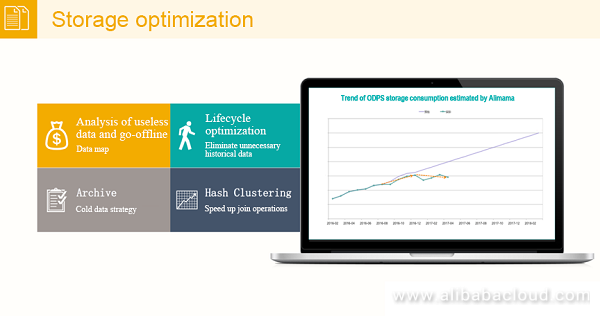

Storage Optimization

In addition to computation optimization, MaxCompute also features storage optimization. Storage optimization is mainly implemented in the following aspects:

- Analysis of useless data and go-offline. This function has been repeatedly introduced before. It helps users identify useless data and bring the data offline, and its principle is similar to that used for analyzing useless jobs. The difficulty lies in the last mile. Specifically, when we import the data produced on the offline platform into the online data, the elements in the last mile are difficult to trace without highly standardized tools and platforms. Alibaba has already implemented standardization in this area. With the increasing number of services offered by Alibaba Cloud, this difficulty may be overcome so that users can determine which data is really useless.

- Lifecycle optimization. In the beginning, the retention time of a table had to be manually estimated and configured, for example, as one year. However, based on the actual access pattern, you may find that retaining a table for one to three days is enough. The data governance function of MaxCompute can then be used to help users analyze the appropriate retention time for a table. This capability is of vital importance for storage optimization.

- Archive. Data can be classified into hot and cold data. In a distributed file storage system, data is stored in dual backup mode, which makes the data more reliable and protects it against loss. However, it also leads to a large amount of stored data. For cold data, MaxCompute uses a different strategy to replace dual backup. In this strategy, 1.5 backup is used so that only 1.5 times the space is required to achieve the same effect as a dual backup.

- Hash clustering. This function enables a trade-off between storage optimization and computation optimization. Every time a MapReduce job is executed, a Join operation may need to be performed, and every time a Join operation is executed, the system may need to sort a table. However, the sort does not have to be repeated every time: the data can be stored in sorted order in advance, so that later jobs can skip the sort.
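Two of the items above lend themselves to a quick calculation. The sketch below suggests a retention period from observed reads and estimates the space saved by moving from 2x to 1.5x storage. The function names, the access-log format, and the one-day safety margin are hypothetical; the actual data governance tooling is not described in enough detail here to reproduce.

```python
from datetime import date

def suggest_lifecycle_days(partition_dates, access_log, margin_days=1):
    """Suggest a retention period from how old partitions were when they were read.

    access_log holds (read_date, partition_date) pairs. The suggestion is the
    largest observed partition age at read time plus a safety margin.
    """
    known = set(partition_dates)
    ages = [(read - part).days for read, part in access_log if part in known]
    if not ages:
        return None  # never read: a candidate for the useless-data review
    return max(ages) + margin_days

def archive_saving_tb(logical_tb, full_factor=2.0, archive_factor=1.5):
    """Physical space saved by storing cold data at 1.5x instead of 2x."""
    return logical_tb * (full_factor - archive_factor)

# Hypothetical daily partitions and the reads observed against them.
partitions = [date(2024, 1, d) for d in range(1, 8)]
access_log = [
    (date(2024, 1, 2), date(2024, 1, 1)),
    (date(2024, 1, 5), date(2024, 1, 3)),
    (date(2024, 1, 8), date(2024, 1, 7)),
]
print(suggest_lifecycle_days(partitions, access_log))
print(archive_saving_tb(100))
```

In this example no partition was read more than two days after it was written, so a table configured with a one-year lifecycle could be cut to a few days.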

The following figure shows the trend of ODPS storage consumption estimated by Alimama. As shown in the figure, we can clearly see that the expected consumption has grown almost linearly over time. After using MaxCompute to implement several optimizations, we can clearly see that the storage consumption growth trend is slowing down.

Summary

Today, I have introduced these optimization features in the hope that everyone gains a better understanding of MaxCompute and higher expectations for it. If you have more needs, you can submit them to the MaxCompute platform.

To learn more about MaxCompute, visit www.alibabacloud.com/product/maxcompute
