TimescaleDB is a time-series database plug-in for PostgreSQL, and its automatic sharding function is very popular.

In fact, PostgreSQL plpgsql can also implement a similar function, provided that schemaless mode is used.

There are several examples of schemaless design ideas and applications:

PostgreSQL Schemaless Design and Stress Testing in China Railway Corporation's Ordering System

PostgreSQL Schemaless Implementation (Similar to Mongodb Collection)

Next, with respect to the automatic sharding function, let's take a look at the example of schemaless implementation.

1. First, you should monitor the amount of writes. You can count data writes by using the track_counts parameter (the track_counts parameter is enabled by default).

PostgreSQL pg_stat_reset Eliminates the Hidden Danger of track_counts

postgres=# select * from pg_stat_all_tables where relname='test1';

-[ RECORD 1 ]-------+-------

relid | 31129

schemaname | public

relname | test1

seq_scan | 0

seq_tup_read | 0

idx_scan |

idx_tup_fetch |

n_tup_ins | 1000

n_tup_upd | 0

n_tup_del | 0

n_tup_hot_upd | 0

n_live_tup | 1000

n_dead_tup | 0

n_mod_since_analyze | 1000

last_vacuum |

last_autovacuum |

last_analyze |

last_autoanalyze |

vacuum_count | 0

autovacuum_count | 0

analyze_count | 0

autoanalyze_count | 0 2. When data is written to a certain amount, the next table is automatically written.

1. Design:

When writing, data is written through UDF, the write speed of each data stream is monitored in real time, and data sharding is dynamically performed.

2. Test table:

create table log(id int, info text, crt_time timestamp default now());

create index idx_log_crt_time on log(crt_time);

create table log_tmp (like log including all) inherits(log); 3. Rules for sharding:

When the number of records exceeds 100,000, the partition is automatically switched.

4. UDF definitions:

create or replace function f(v_id int, v_info text) returns void as $$

declare

suffix int;

v_rows int8;

min_time timestamp;

max_time timestamp;

begin

-- 插入

insert into log_tmp(id,info) values (v_id, v_info);

-- 判断记录数是否达到切换阈值

select n_live_tup into v_rows from pg_stat_all_tables where relname='log_tmp' and schemaname='public';

-- 达到阈值,切换表

if v_rows >=100000 then

select count(*) into suffix from pg_inherits where inhparent='log'::regclass;

select min(crt_time), max(crt_time) into min_time, max_time from log_tmp ;

execute 'alter table log_tmp add constraint ck_log_'||suffix||'_1 check (crt_time>='''||min_time||''' and crt_time<='''||max_time||''')';

execute 'alter table log_tmp rename to log_'||suffix;

create table log_tmp (like log including all) inherits(log);

end if;

return;

exception when others then

return;

end;

$$ language plpgsql strict; 5. Stress testing

vi test.sql

select f(1, 'test'); pgbench -M prepared -n -r -P 1 -f ./test.sql -c 64 -j 64 -T 120

NOTICE: merging column "id" with inherited definition

NOTICE: merging column "info" with inherited definition

NOTICE: merging column "crt_time" with inherited definition

progress: 1.0 s, 25350.5 tps, lat 2.487 ms stddev 0.986

progress: 2.0 s, 26309.0 tps, lat 2.432 ms stddev 0.688

progress: 3.0 s, 26251.9 tps, lat 2.438 ms stddev 0.741

progress: 4.0 s, 26451.0 tps, lat 2.420 ms stddev 0.737

NOTICE: merging column "id" with inherited definition

NOTICE: merging column "info" with inherited definition

NOTICE: merging column "crt_time" with inherited definition

progress: 5.0 s, 29471.0 tps, lat 2.172 ms stddev 0.844

progress: 6.0 s, 32971.0 tps, lat 1.941 ms stddev 0.670

progress: 7.0 s, 33028.0 tps, lat 1.938 ms stddev 0.661

progress: 8.0 s, 33101.0 tps, lat 1.933 ms stddev 0.656

NOTICE: merging column "id" with inherited definition

NOTICE: merging column "info" with inherited definition

NOTICE: merging column "crt_time" with inherited definition

progress: 9.0 s, 32805.0 tps, lat 1.951 ms stddev 0.752

...... Automatic sharding succeeded:

postgres=# \d log_1

Table "public.log_1"

Column | Type | Collation | Nullable | Default

----------+-----------------------------+-----------+----------+---------

id | integer | | |

info | text | | |

crt_time | timestamp without time zone | | | now()

Indexes:

"log_tmp_crt_time_idx" btree (crt_time)

Check constraints:

"ck_log_1_1" CHECK (crt_time >= '2017-11-02 23:52:34.264264'::timestamp without time zone AND crt_time <= '2017-11-02 23:54:05.939958'::timestamp without time zone)

Inherits: log

postgres=# \d log_2

Table "public.log_2"

Column | Type | Collation | Nullable | Default

----------+-----------------------------+-----------+----------+---------

id | integer | | |

info | text | | |

crt_time | timestamp without time zone | | | now()

Indexes:

"log_tmp_crt_time_idx1" btree (crt_time)

Check constraints:

"ck_log_2_1" CHECK (crt_time >= '2017-11-02 23:54:05.948796'::timestamp without time zone AND crt_time <= '2017-11-02 23:54:10.946987'::timestamp without time zone)

Inherits: log The TimescaleDB plug-in also provides many other functions and is more borderless in usage. We still recommend using TimescaleDB plug-in when it becomes more sophisticated.

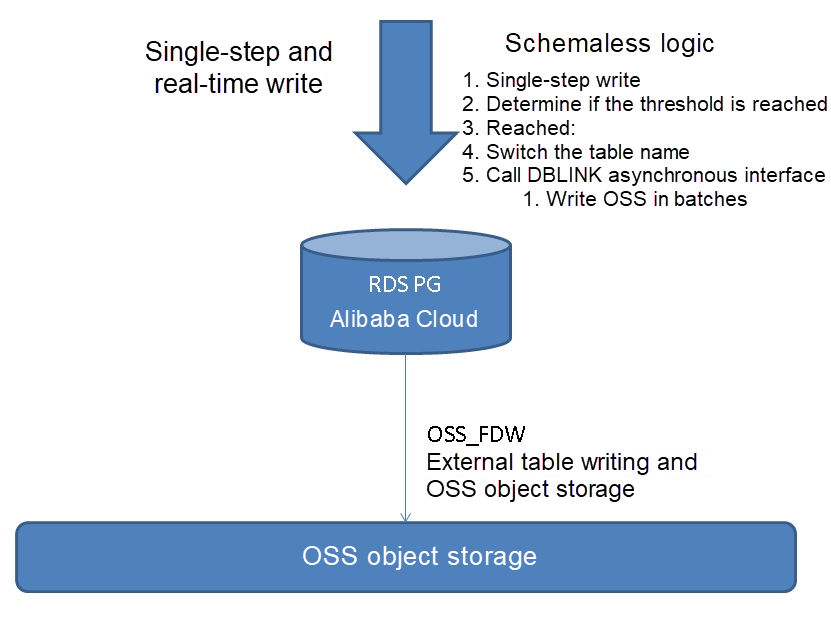

For Alibaba Cloud RDS PG, you can also write the RDS PG in real time by using the method mentioned in this article, and write the OSS external table in batches according to the set threshold at the same time (DBLINK asynchronous interface can be used for writing OSS external tables).

PostgreSQL App Massive FEED LOG Real-time Quality Statistics

Real-time Statistical Analysis on Sliding Windows with PostgreSQL

digoal - May 16, 2019

digoal - May 16, 2019

digoal - May 16, 2019

Alibaba Clouder - July 31, 2019

digoal - May 17, 2021

digoal - May 28, 2021

ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB

ApsaraDB RDS for MariaDB supports multiple storage engines, including MySQL InnoDB to meet different user requirements.

Learn MoreMore Posts by digoal