By Qi Xiang, nicknamed Yizhi at Alibaba.

Unlabeled data often cannot be used effectively, which is a major problem in the industry. To address it, we at Alibaba proposed a deep learning risk control algorithm named Auto Risk for business scenarios that have too few labeled samples and a large amount of unlabeled data. The algorithm targets behavior sequence data and uses agent tasks (also called proxy tasks) to learn general features from unlabeled data.

Our idea follows the same lines as pre-training models such as Bidirectional Encoder Representations from Transformers (BERT), the leading model in the natural language processing field. However, behavior sequence data and our business scenarios are quite distinct from the text typically seen in natural language processing. Therefore, the design and implementation of our model had to be different.

Our model has been implemented in real business scenarios and has delivered real-world improvements. Experiments showed that the features it learns are general enough to apply to a variety of scenarios. Compared with purely supervised learning, the model also delivers significant improvements in scenarios with a small number of labeled samples.

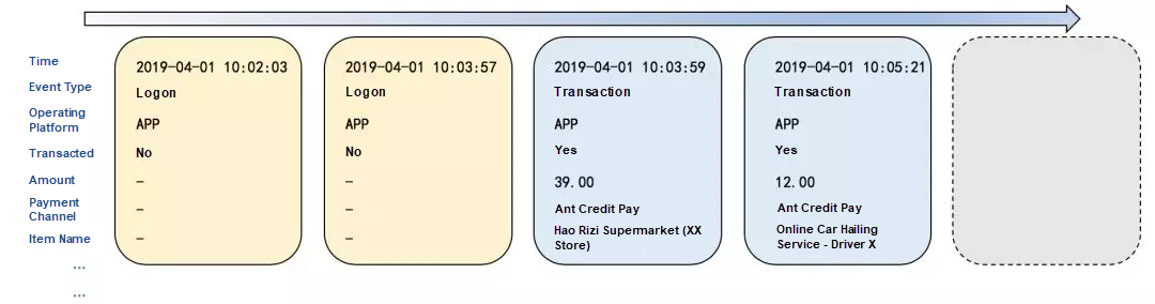

For context, behavior sequence data, such as the browsing data collected from Taobao shoppers and risk control events in Alipay, is a common type of data at Alibaba Group. This kind of data serves as an important source-level input for intelligent services such as our product recommendation and risk control algorithms.

Consider the following examples. Say we are given the transaction sequence of a user and asked to predict what the user would buy next, or we are given a sequence of risk control events and asked to predict whether a product is legal or illegal. In both scenarios, we are required to represent behavior sequences as vectors and classify the sequences. This is where our algorithm comes into play.

A behavior sequence diagram

Traditionally, many features such as triggers and accumulations are designed based on experience and entered manually. Then, classifiers such as a Gradient Boosting Decision Tree (GBDT) are trained on these features. In recent years, a relatively successful method has been to use neural networks such as recurrent neural networks (RNN) and convolutional neural networks (CNN), as well as attention mechanisms. With these, behavior sequences are used directly as the input, and classification results or feature vectors are the output. This method can be summarized as an everything-to-vector idea. Its advantage is that it avoids the tedious work of manual feature engineering.

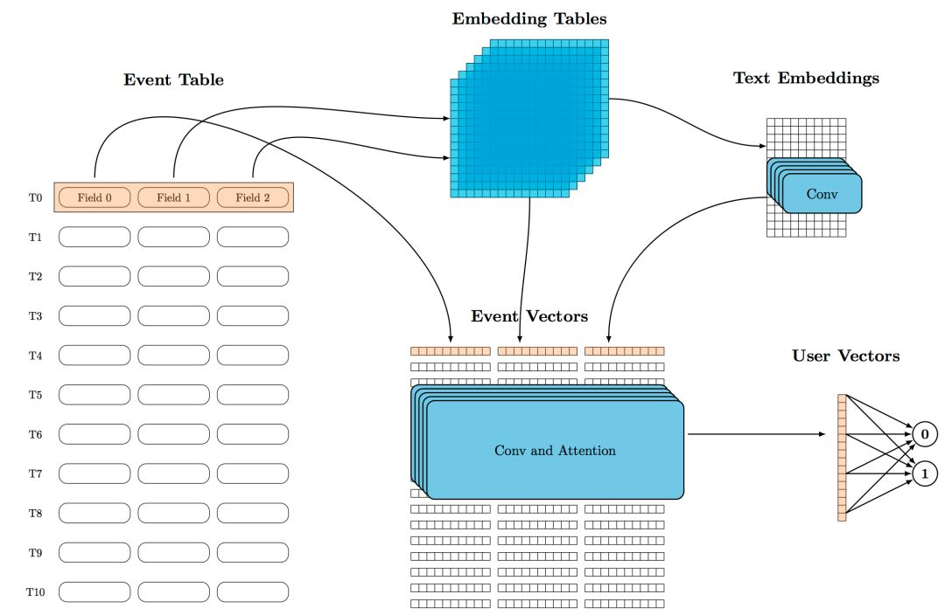

So, it was along these lines that our team proposed the Detail Risk framework for the algorithm. This framework converts the data for the behavior sequence of a user into classification vectors through multiple network layers, which involve discrete field embedding, text convolution, multi-field integration, event convolution, and attention. We have implemented this framework in multiple scenarios to much success. Overall, this framework has greatly reduced the manual work needed to process and use data and has also improved the performance of the model.

Detail Risk framework diagram

However, despite this success, most of these methods still rely on supervised learning and cannot avoid the problem of insufficient labeled samples. That is, a small number of samples cannot make full use of the large capacity that is the advantage of a neural network model. If labels from multiple tasks are introduced instead, the transferability between tasks must be carefully evaluated and balanced.

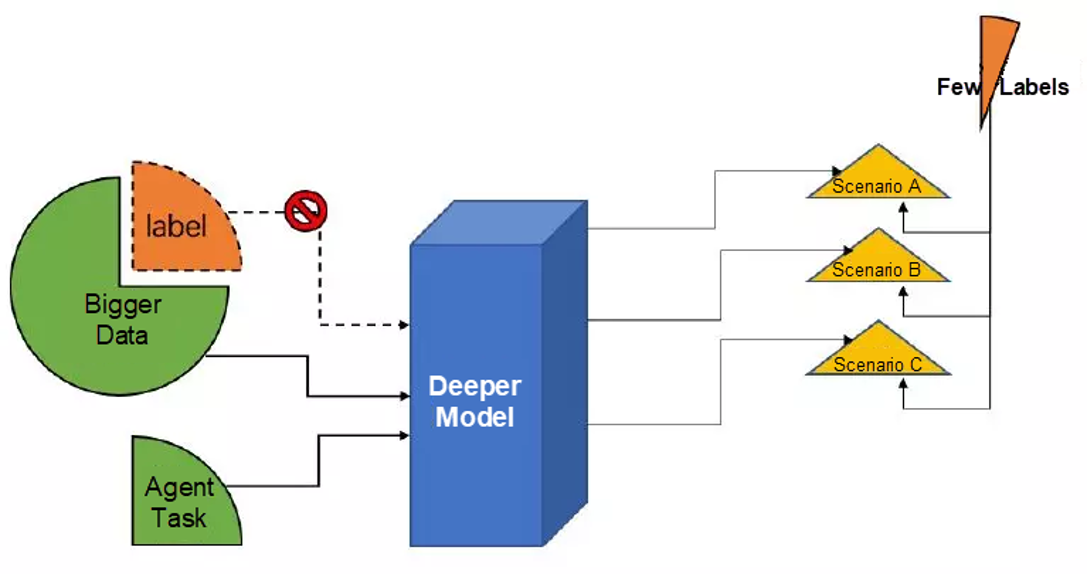

In addition, a massive amount of unlabeled data is constantly accumulated in our business. Therefore, if we can find a way to use this unlabeled data to train models and learn general upper-layer features, we can reserve the limited labels for downstream scenarios, where they are used to train a simple classifier. This will ultimately provide great improvements to our overall data utilization. Another point is that feature vectors generated through unsupervised learning differ from manually designed features, and combining the two can help to achieve better results.

Similar problems also exist in other fields. Last year, natural language processing research produced a solution based on pre-training technology. Pre-training uses readily available agent tasks that embody knowledge, a large amount of unlabeled data, and deeper networks. All of this allows the model to learn effective upper-layer features without any manual assistance. Moreover, with such features, the entire system can achieve better results after being fine-tuned on downstream tasks.

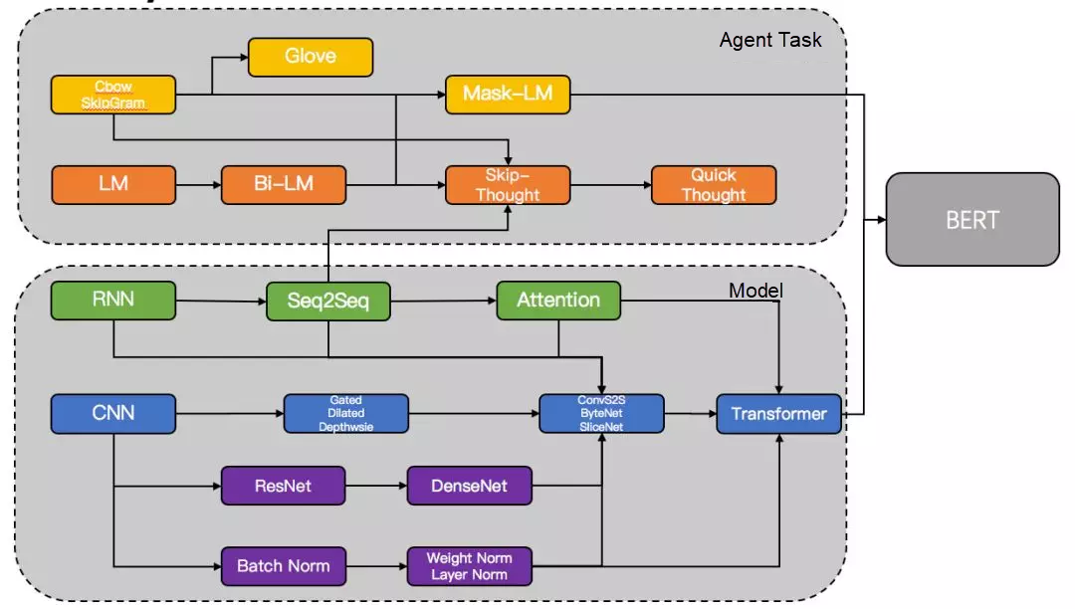

Along these lines, in 2018, pre-training models such as Embeddings from Language Models (ELMo), Generative Pre-training (GPT), BERT, GPT2, and Enhanced Representation through Knowledge Integration (ERNIE) repeatedly set new state-of-the-art (SOTA) results for basic natural language processing problems and drove rapid development in the field. Among these new models, BERT set 11 new records at once, which caused algorithm engineers to sit up in their seats and take notice.

The "AND" training diagram

In the computer vision field, pre-training large networks with ImageNet can be traced back as far as 2014, when deep learning was just starting to be adopted. In natural language processing, the word2vec or Global Vectors for Word Representation (GloVe) algorithms are typically used to pre-train word vectors. However, it wasn't until recently that we were also able to pre-train a large model like BERT. This change meant that unlabeled samples could be used to achieve significant improvements in the performance of downstream tasks. We believe that this technology was made possible mainly because of the following conditions:

The technology involved in BERT pre-training

So far, BERT has been used in some of Alibaba's internal NLP products, but not in other products. Several problems hinder its wider adoption:

Therefore, in order to benefit from pre-training, we must design and implement our own pre-training model based on the characteristics of our data and business. This article presents the pre-training framework we designed and implemented for unsupervised learning on behavior sequences and verifies its effectiveness in actual business scenarios. Our business scenario is risk control, and therefore we call the framework the Auto Risk model.

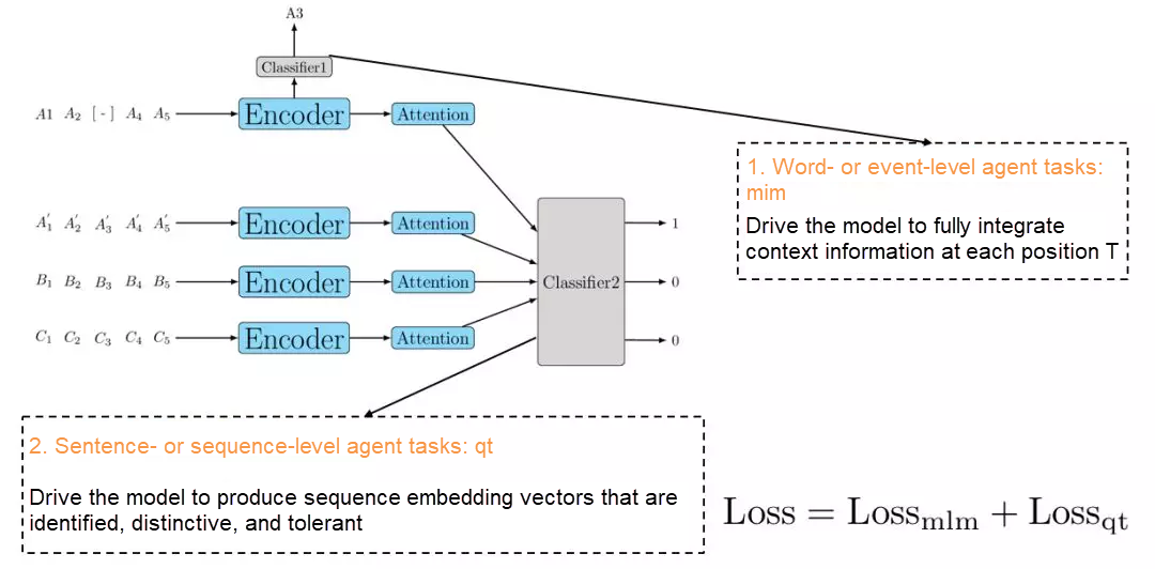

The pre-training model does not need the labels of any actual tasks, but only requires readily available agent tasks to drive the training. The design of agent tasks determines the knowledge that the model can explore. In our study, we compared behavior sequence data with text, considering each point of time T as a word and each continuous sequence 1:T as a document, which is similar to BERT. We also designed the following two types of agent tasks:

Two types of agent tasks

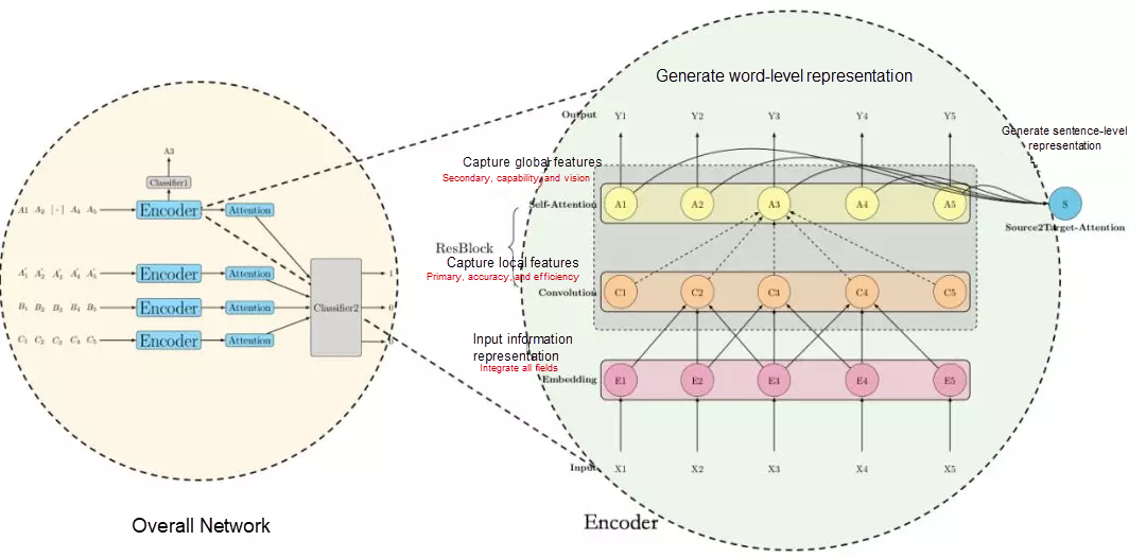

Agent tasks provide readily available labels, and the core of the specific model structure is the encoder network. In the previous section, we showed that it is inefficient to directly use heavyweight transformers, such as those in the BERT and GPT models. Therefore, we have proposed a more efficient encoder structure based on convolutions and attention.

Auto Risk model schematic

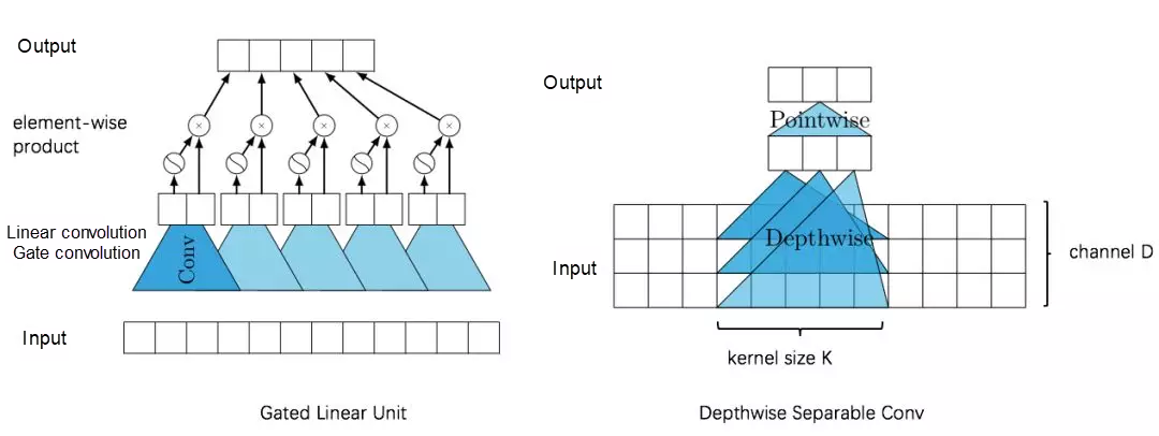

When convolution is applied to sequences, multiple convolution layers must be stacked to enlarge the receptive field. This causes two side effects: first, gradients vanish and optimization becomes difficult; second, the number of parameters and computations increases significantly. To overcome these side effects, we replaced general convolutions with two special convolutions: Gated Conv and Depthwise Separable Conv. First, a gate mechanism similar to that of Long Short-Term Memory (LSTM) suppresses gradient vanishing so that more layers can be stacked. Second, each convolution is split into a depthwise step and a pointwise step to reduce the number of parameters and computations. For example, if the feature dimension D is 256 and the convolution kernel width K is 5, the number of parameters and computations decreases by about 80%, from roughly 320,000 to 60,000. If the kernel width K is 31, the number falls to only 3.6% of the original. The improved convolution layer significantly improves the convergence speed and final performance of the model.
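The savings quoted above can be checked with a few lines of arithmetic. The sketch below assumes a one-dimensional convolution with D input channels and D output channels and ignores bias terms; the function names are illustrative and not part of the Auto Risk code.

```python
def standard_conv_params(d: int, k: int) -> int:
    """Weights of a 1-D convolution with d input and d output channels and kernel width k."""
    return k * d * d


def separable_conv_params(d: int, k: int) -> int:
    """Depthwise step (one width-k filter per channel) plus pointwise 1x1 step (d x d)."""
    return k * d + d * d


for k in (5, 31):
    full = standard_conv_params(256, k)
    separable = separable_conv_params(256, k)
    print(f"K={k}: standard={full:,} separable={separable:,} ratio={separable / full:.1%}")
# K=5: standard=327,680 separable=66,816 ratio=20.4%
# K=31: standard=2,031,616 separable=73,472 ratio=3.6%
```

Under these assumptions the exact counts are in line with the rounded figures in the text: a reduction of about 80% for K = 5 and a remaining 3.6% for K = 31.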

Convolution improvement

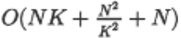

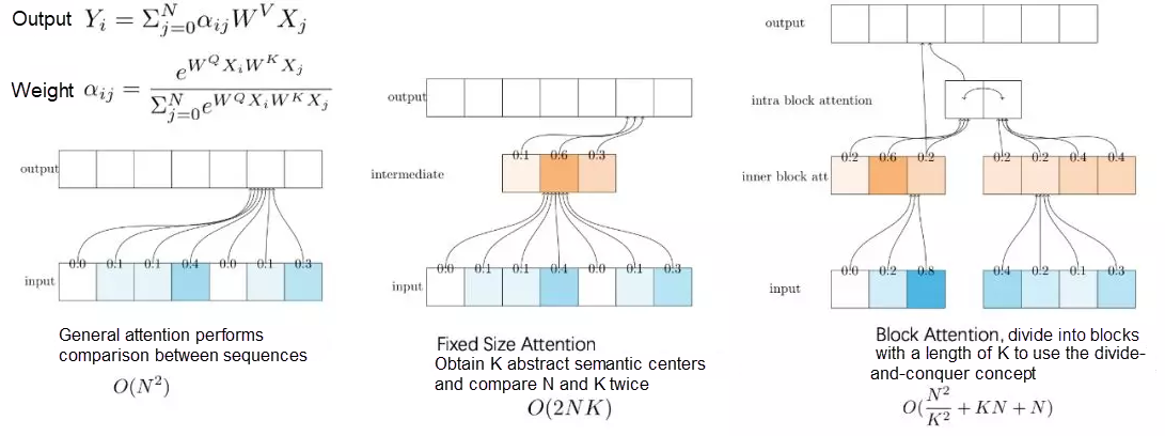

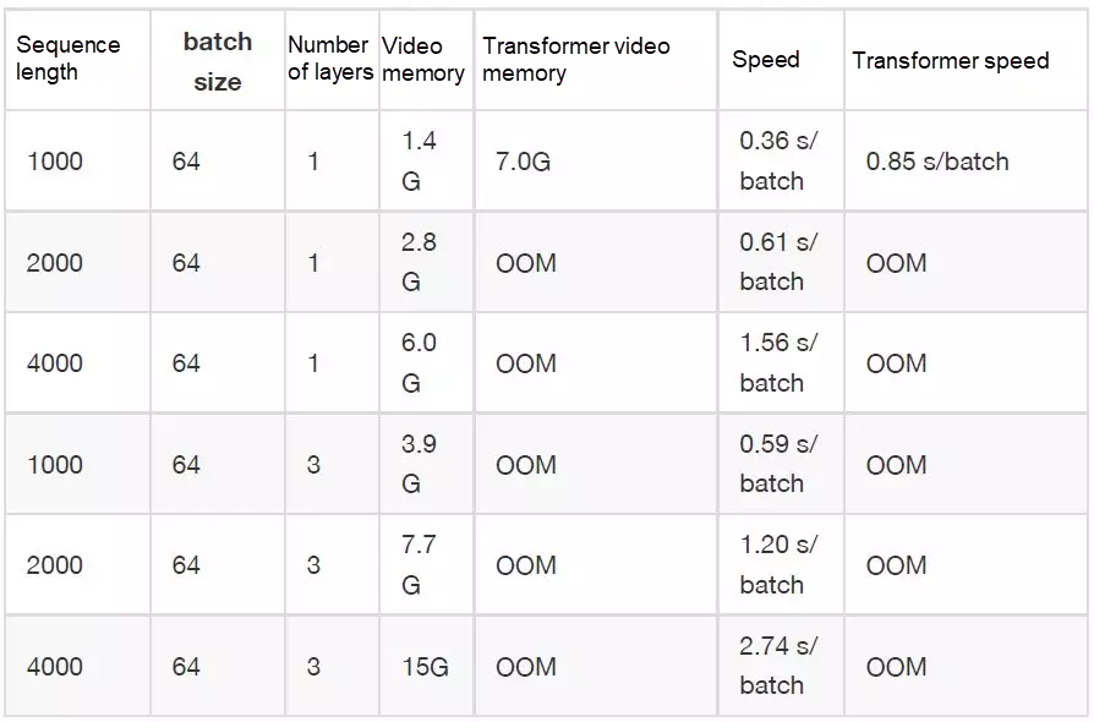

Attention provides an excellent receptive field and modeling capability, but it requires a great deal of GPU memory to perform comparisons across a sequence. In practice, if the length of a sequence exceeds 1,000, which is not long for a behavior sequence, self-attention at a single layer causes an out-of-memory (OOM) error. For practical reasons, we looked into replacing self-attention with fixed size attention or block attention. This reduces the memory usage to O(2NK), at the price of a slight performance degradation. Finally, three attention layers can be stacked, allowing us to process a sequence with a length of 4,000 on a single GPU. This allowed us to meet our business requirements.
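To illustrate where the O(2NK) figure can come from, here is a minimal sketch of sliding-window attention, in which each position attends only to neighbors within K steps. This is one possible reading of "fixed size attention", not the exact layer used in Auto Risk; the function name and the interpretation of K as the window half-width are assumptions.

```python
import math


def local_attention(queries, keys, values, k):
    """Each position i attends only to positions within k steps of i.

    queries, keys, values: lists of float vectors, one per position.
    Returns the outputs and the number of attention scores computed,
    which is at most N * (2k + 1) instead of N * N.
    """
    n = len(queries)
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs, stored_scores = [], 0
    for i in range(n):
        lo, hi = max(0, i - k), min(n, i + k + 1)
        scores = [scale * sum(q * x for q, x in zip(queries[i], keys[j]))
                  for j in range(lo, hi)]
        stored_scores += len(scores)
        top = max(scores)  # subtract the maximum for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w * values[j][c] for w, j in zip(weights, range(lo, hi))) / total
            for c in range(len(values[0]))
        ])
    return outputs, stored_scores


n, k = 8, 2
qs = [[1.0, 0.0] for _ in range(n)]
ks = [[1.0, 0.0] for _ in range(n)]
vs = [[1.0, 2.0] for _ in range(n)]
outputs, stored = local_attention(qs, ks, vs, k)
print(stored, "scores computed instead of", n * n)  # 34 scores computed instead of 64
```

Because the number of scores grows linearly with the sequence length N rather than quadratically, longer sequences fit in the same memory budget, at the cost of each layer seeing only a local context.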

Attention improvement

Many tricks are needed to train such a large network. We will discuss these tricks in later articles.

After the optimizations described above, we can use only one graphics card to:

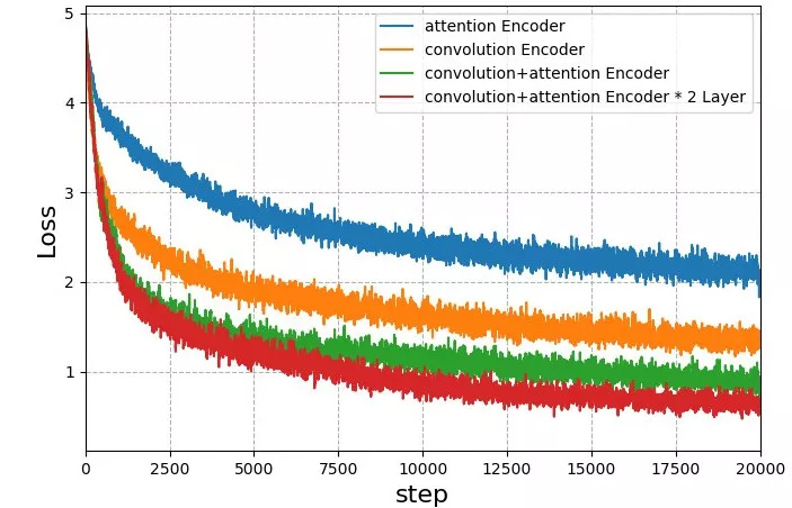

The following figure compares the training processes using different encoder structures and shows that:

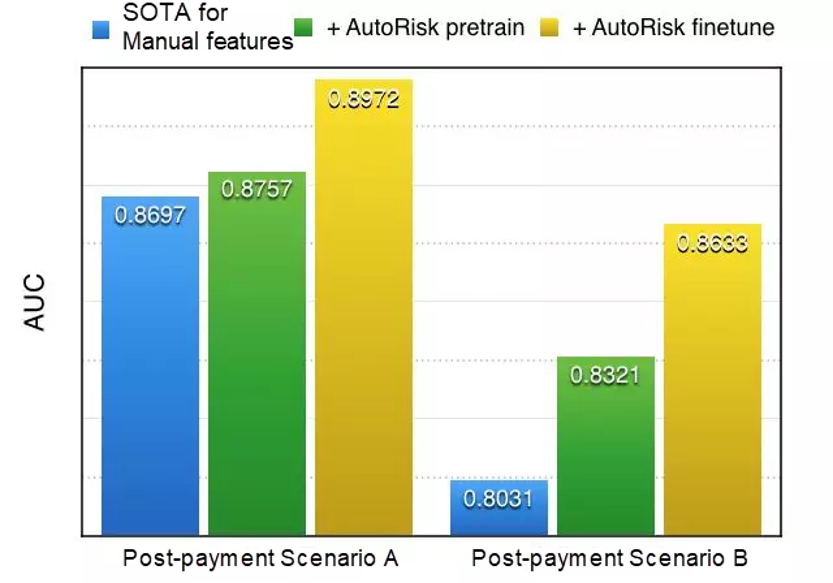

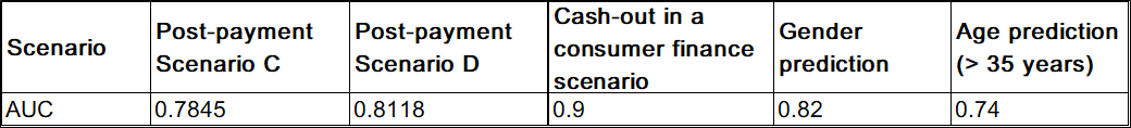

First, let's evaluate the business benefits of the Auto Risk model. Our risk control sequence includes key risk control behaviors involved in actions such as logon, password change, and transactions. We select certain users at random as the training set, train a three-layer network with a hidden size of 128, and then infer the vectors of other users. Finally, we add these vectors to a feature pool and compare the improvement in the area under the curve (AUC). We compare the following approaches:

As you can see, the AUC improves by 3% to 6% after Auto Risk vectors are added, which shows that the unsupervised Auto Risk model can extract useful features from behavior sequences. If network parameters are fine-tuned for specific scenarios, better results will be achieved. This is the same as in models such as BERT. For ease of comparison, this figure only shows the effect of risk control events that are used as sequence data.
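For readers less familiar with the metric, AUC can be read as the probability that a randomly chosen positive sample is scored above a randomly chosen negative one. The sketch below implements this pairwise definition directly. The labels and scores are toy values chosen for illustration only and are not taken from the experiments above.

```python
def auc(labels, scores):
    """Pairwise AUC: fraction of (positive, negative) pairs ranked correctly, ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


labels = [1, 0, 1, 0]                      # toy ground truth
baseline_scores = [0.9, 0.8, 0.4, 0.3]     # hypothetical model without extra features
augmented_scores = [0.9, 0.5, 0.7, 0.3]    # hypothetical model with extra features
print(auc(labels, baseline_scores))   # 0.75
print(auc(labels, augmented_scores))  # 1.0
```

The pairwise form is quadratic in the number of samples, so it is only meant to make the definition concrete; rank-based implementations are used for large test sets.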

The pre-training model does not use labels for any specific scenarios during training. Therefore, the knowledge learned by the model is relatively general in nature. We tested different scenarios, including unrelated ones such as gender and age prediction, by using the simplest logistic regression (LR) classifier without adding any manual features or performing fine-tuning. After training and testing on these scenarios, we achieved surprising results. In certain scenarios, the AUC reaches 0.9. One potential business benefit is that we can obtain general supplemental features for various businesses at an extremely low cost.

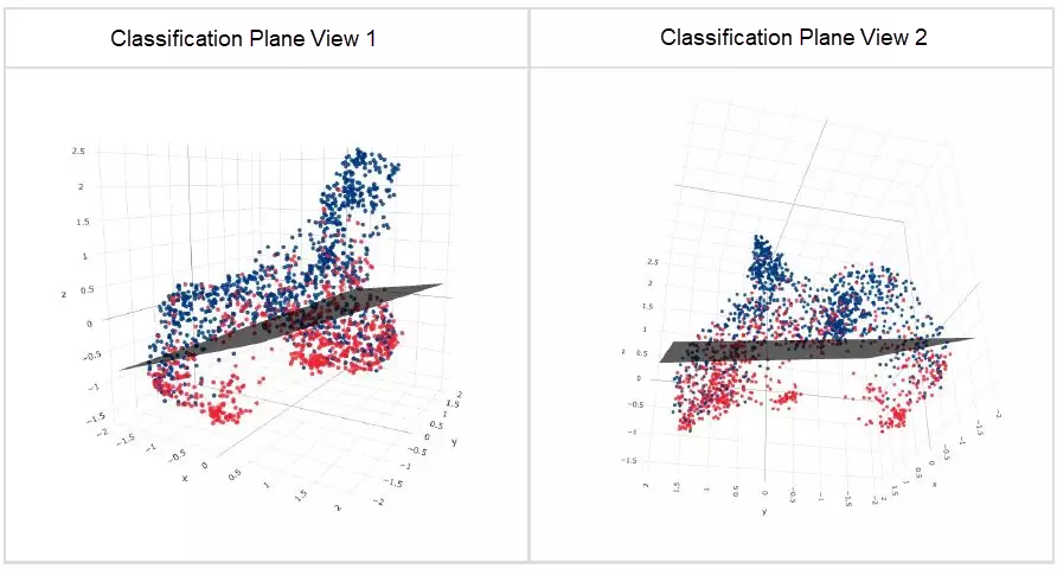

But how can we achieve such results with only LR? One way to think about it is that the Auto Risk model retains the information of behavior sequences in a well-structured embedding space, which allows us to find appropriate linear decision boundaries for different tasks. The following figure shows the test set samples and the classification plane for cash-out in a consumer finance scenario. Because UMAP is used to reduce the 128-dimensional vectors to three dimensions, the classification performance is degraded by about 6% of AUC. However, we can see that:

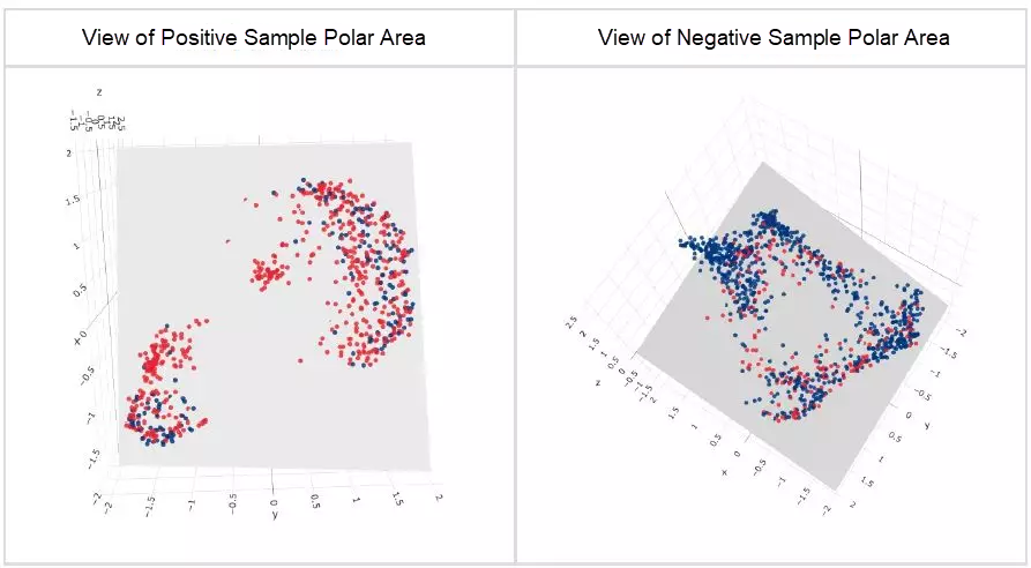

The pre-training model also delivers benefits in small sample scenarios. Because deep learning models have a large number of parameters, they do not perform well when the number of labeled samples is small. However, the pre-training model learns most of its knowledge through unsupervised agent tasks. Therefore, we can achieve better results for a scenario with a small number of labeled samples. The pre-training model is especially suitable for businesses that require a cold start or for which labeling is expensive. We conducted experiments on two types of behavior sequences in post-payment scenario B. The results show that the Auto Risk model achieves better results than training a supervised neural network from scratch. For behavior log data, simply using the Auto Risk model and an LR classifier achieves better results than supervised learning, even without fine-tuning. When the number of labeled samples in the training set reaches 40,000 (20,000 positive samples and 20,000 negative samples), supervised learning still cannot catch up with the Auto Risk model plus fine-tuning.

Analogy is an interesting characteristic of word embedding. In the embedding space, King - Man = Queen - Woman and China - Beijing = France - Paris. Such relationships suggest that the embedding space can indeed capture upper-layer semantics. Do the sequences in our Auto Risk space have similar characteristics? To find out, we conducted an A - B = C - D experiment. We selected A, B, and D from a set of 1 million samples and recalled C by using cosine similarity with the A - B + D vector. For ease of description, we show different fields separately even though they are trained at the same time.
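The recall step can be sketched as follows. This is a minimal illustration, assuming each sequence has already been encoded as a vector. The two-dimensional vectors and the candidate names are hypothetical toy values, not actual Auto Risk embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def recall_analogy(a, b, d, candidates, top_k=1):
    """Return the names of the top_k candidates closest (by cosine) to a - b + d."""
    query = [x - y + z for x, y, z in zip(a, b, d)]
    ranked = sorted(candidates, key=lambda name: cosine(query, candidates[name]), reverse=True)
    return ranked[:top_k]


# Toy space: axis 0 ~ payment method (credit vs. balance), axis 1 ~ scenario.
a = [1.0, 0.2]   # credit payment, shopping
b = [0.0, 0.2]   # balance payment, shopping
d = [0.0, 1.0]   # balance payment, transfer
candidates = {
    "credit_transfer": [1.0, 1.0],
    "balance_transfer": [0.0, 1.0],
    "credit_shopping": [1.0, 0.2],
}
print(recall_analogy(a, b, d, candidates))  # ['credit_transfer']
```

In a real experiment, the candidate pool would be the full sample set, and A, B, and D themselves would normally be excluded from it before ranking.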

A=[Create transaction - Taobao physical guarantee, Ant Credit Pay payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee, Ant Credit Pay payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee, Ant Credit Pay payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee, Ant Credit Pay payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee]

B=[Create transaction - Taobao physical guarantee, Balance payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee, Balance payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee, Balance payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee, Balance payment - Taobao physical guarantee, Create transaction - Taobao physical guarantee]

C=[Ant Credit Pay payment - Instant transfer from non-Alibaba account, Ant Credit Pay payment - Instant transfer from non-Alibaba account, Ant Credit Pay payment - Instant transfer from non-Alibaba account, PC side - Create transaction, Logon_app_other_, Ant Credit Pay payment - Instant transfer from non-Alibaba account, PC side - Create transaction]

D=[App side - Logon, Balance payment - Instant transfer from non-Alibaba account, Balance payment - Instant transfer from non-Alibaba account, Balance payment - Instant transfer from non-Alibaba account, Balance payment - Instant transfer from non-Alibaba account, Balance payment - Instant transfer from non-Alibaba account]

A=[\\N,\\N,10000.0,10000.0,10000.0,8000.0,8000.0,8000.0,\\N,8000.0,\\N,\\N,8000.0,\\N,8000.0]

B=[\\N,\\N,10.0,10.0,10.0,10.0,10.0,10.0,\\N,10.0,\\N,\\N,10.0]

C=[\\N,\\N,\\N,8000.0,\\N,\\N,8000.0,\\N,\\N,\\N,\\N,\\N,\\N,10000.0,\\N,10000.0]

D=[\\N,1.0,\\N,1.0,\\N,1.0,1.0,\\N,1.0,\\N,1.0,1.0,\\N,1.0,\\N,\\N]

A=["DiDi Express-Driver Zhou","DiDi Express-Driver Zhou",...,"DiDi Express-Driver Shao","DiDi Express-Driver Shao",...]

B=["Tencent QQ coins recharged by 100 RMB","Tencent QQ coins recharged by 100 RMB",...,"Tencent QQ coins recharged by 100 RMB","Tencent QQ coins recharged by 100 RMB",...]

C=["DiDi Express-Driver Feng","DiDi Express-Driver Feng","Bus ticket**Bus terminal (south district","","Bus ticket**Bus terminal (south district)","","Quick Unicom recharge of 10 RMB","","","DiDi Express-Driver Qi","DiDi Express-Driver Qi","","","0000****-Plate no. [00*****] Travel time: 2019-04-1914:53:02"]

D=["1000 Tencent QQ coins 1","Tencent QQ coins/QQ coin card/",...,"Tencent QQ coins/QQ coin card/","Tencent QQ coins/QQ coin card/",...]

In this article, we discussed our Auto Risk algorithm for deep learning of behavior sequences. This algorithm does not require specific labels for training, but is based on the concept of agent task pre-training similar to BERT. It explores context associations and symbolic characteristics in a large amount of unlabeled data to generate useful upper-layer features. This solves the problems of insufficient labeled samples and unlabeled samples that are hard to use.

We designed the model structure based on the data and business characteristics to facilitate fast training and deployment. We have implemented this algorithm in our actual business operations and achieved significant performance improvements. No labeling is required during training. Therefore, the model results can be applied in many other scenarios and can significantly improve performance in scenarios with a small number of samples. The sequence analogy experiment proves that upper-layer semantics can be captured in the Auto Risk vector space.

In the future, we will continue our current work, expand the model to suit more types of data sources and application scenarios, and verify other agent tasks, including agent tasks that use multi-scenario labels accumulated previously.
