By Yuxun

Community Redis Cluster uses a decentralized peer-to-peer architecture. It relies on the gossip protocol to propagate cluster state, so that the nodes can cooperate and repair the cluster automatically. This article examines the details of the Redis Cluster gossip protocol and how it operates.

The cluster gossip protocol is defined by the clusterMsg structure. The source code is as follows:

    typedef struct {
        char sig[4];        /* Signature "RCmb" (Redis Cluster message bus). */
        uint32_t totlen;    /* Total length of this message */
        uint16_t ver;       /* Protocol version, currently set to 1. */
        uint16_t port;      /* TCP base port number. */
        uint16_t type;      /* Message type */
        uint16_t count;     /* Only used for some kind of messages. */
        uint64_t currentEpoch;  /* The epoch accordingly to the sending node. */
        uint64_t configEpoch;   /* The config epoch if it's a master, or the last
                                   epoch advertised by its master if it is a
                                   slave. */
        uint64_t offset;    /* Master replication offset if node is a master or
                               processed replication offset if node is a slave. */
        char sender[CLUSTER_NAMELEN]; /* Name of the sender node */
        unsigned char myslots[CLUSTER_SLOTS/8];
        char slaveof[CLUSTER_NAMELEN];
        char myip[NET_IP_STR_LEN];    /* Sender IP, if not all zeroed. */
        char notused1[34];  /* 34 bytes reserved for future usage. */
        uint16_t cport;     /* Sender TCP cluster bus port */
        uint16_t flags;     /* Sender node flags */
        unsigned char state; /* Cluster state from the POV of the sender */
        unsigned char mflags[3]; /* Message flags: CLUSTERMSG_FLAG[012]_... */
        union clusterMsgData data;
    } clusterMsg;

The fields of this structure can be divided into three parts:

1. Basic information of the sender

sender: the node name of the sender

configEpoch: each master node has its own unique configEpoch as an identifier. If it conflicts with the configEpoch of another master node, it is forcibly incremented so that this node's configEpoch is again unique in the cluster.

slaveof: master information; if this node is a slave, this field carries the node name of its master

offset: the replication offset (the master replication offset for a master, or the processed replication offset for a slave)

flags: the current state of this node, for example CLUSTER_NODE_HANDSHAKE or CLUSTER_NODE_MEET

mflags: the message flags; at present there are only two: CLUSTERMSG_FLAG0_PAUSED and CLUSTERMSG_FLAG0_FORCEACK

myslots: the slots for which this node is responsible

port: the TCP base port number

cport: the sender's TCP cluster bus port

ip: the sender's IP address (the myip field), if not all zeroed
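To make the myslots field more concrete: it is declared as CLUSTER_SLOTS/8 bytes, which suggests one bit per hash slot. The following is a minimal sketch of reading and writing such a bitmap. The helper names slot_bitmap_set and slot_bitmap_test are hypothetical, and CLUSTER_SLOTS is assumed to be 16384.

```c
#include <assert.h>

#define CLUSTER_SLOTS 16384 /* Assumed value: number of hash slots. */

/* Hypothetical helper: mark `slot` as owned in a myslots-style bitmap. */
static void slot_bitmap_set(unsigned char *bitmap, int slot) {
    assert(slot >= 0 && slot < CLUSTER_SLOTS);
    /* Byte index is slot / 8, bit index inside the byte is slot % 8. */
    bitmap[slot >> 3] |= (unsigned char)(1 << (slot & 7));
}

/* Hypothetical helper: return 1 if `slot` is marked in the bitmap, else 0. */
static int slot_bitmap_test(const unsigned char *bitmap, int slot) {
    assert(slot >= 0 && slot < CLUSTER_SLOTS);
    return (bitmap[slot >> 3] & (1 << (slot & 7))) != 0;
}
```

A receiver could walk all slots with slot_bitmap_test to learn which ones the sender claims; with 16384 slots the whole bitmap is 2048 bytes.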

2. Basic information of the cluster views

currentEpoch: the cluster-wide epoch as currently recorded by this node; it is used to decide election voting. It differs from configEpoch in that configEpoch uniquely identifies a master node, while currentEpoch identifies the epoch of the cluster as a whole.

3. The message-specific payload, stored in the corresponding clusterMsgData structure

ping, pong, meet: clusterMsgDataGossip. These messages carry to the peer the information about cluster nodes that the sender has stored; the number of node entries is given by the count field of clusterMsg. Each entry describes one other node, including the ping_sent and pong_received timestamps used below.

Here the Redis author uses a technique to reduce gossip communication bandwidth.

When the receiver processes a gossip entry about a node, it reuses the sender's pong_received for that node if all of the following hold: the receiver's ping_sent for the node is 0; the node is not marked as failed and the receiver holds no failure reports about it; the receiver's pong_received for the node is earlier than the sender's; and the sender's pong_received is no more than 500 ms ahead of the receiver's local clock. In that case the receiver sets its own pong_received for the node to the sender's value, and as a result it sends fewer pings to that node. Reference issue: https://github.com/antirez/redis/issues/3929
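The conditions of this bandwidth optimization can be sketched as a single predicate. This is an illustrative reduction, not the Redis source: the node_view structure and the function maybe_adopt_pong_time are hypothetical names, and times are assumed to be in milliseconds.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef int64_t mstime_t; /* Milliseconds; the type name is an assumption. */

/* Hypothetical, reduced view of what the receiver knows about one node. */
typedef struct {
    mstime_t ping_sent;     /* 0 if no ping is currently pending. */
    mstime_t pong_received; /* Last time a pong from the node was seen. */
    int failed;             /* Nonzero if the node is flagged as failed. */
    int failure_reports;    /* Failure reports held about this node. */
} node_view;

/* Adopt the sender's pong time only when every condition holds.
 * Returns 1 if pong_received was updated, 0 otherwise. */
static int maybe_adopt_pong_time(node_view *n, mstime_t sender_pong,
                                 mstime_t now) {
    assert(n != NULL);
    if (n->ping_sent != 0) return 0;                    /* A ping is pending. */
    if (n->failed || n->failure_reports != 0) return 0; /* Failure suspected. */
    if (sender_pong <= n->pong_received) return 0;      /* Not newer than ours. */
    if (sender_pong > now + 500) return 0;              /* Too far in the future. */
    n->pong_received = sender_pong;
    return 1;
}
```

Because a fresher pong_received makes the node look recently alive, the receiver has less reason to ping it soon, which is where the bandwidth saving comes from.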

fail: clusterMsgDataFail. It has a single nodename field that identifies the failed node. A fail message is sent once more than half of the master nodes have reported the node as pfail.

publish: clusterMsgDataPublish. It propagates published messages between the cluster nodes, so that a client can use pub/sub through any node.

update: clusterMsgDataUpdate. When the receiver discovers that the sender's configEpoch is lower than the configEpoch the receiver has recorded for the owner of the slots in question, it sends an update message to the sender to notify it of the newer state, including the owner's node name, its configEpoch, and its slots.
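The rule for sending a fail message amounts to a majority check. A minimal sketch, assuming the count is taken over master nodes and that "more than half" means a strict majority; the function names needed_quorum and should_mark_fail are hypothetical.

```c
#include <assert.h>

/* Reports needed for a strict majority of the masters. */
static int needed_quorum(int master_count) {
    assert(master_count > 0);
    return master_count / 2 + 1;
}

/* Hypothetical helper: should a node reported as pfail be declared fail? */
static int should_mark_fail(int master_count, int pfail_reports) {
    return pfail_reports >= needed_quorum(master_count);
}
```

With 5 masters, 3 reports are required; with 4 masters, 3 are still required, since 2 out of 4 is exactly half and not a majority.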

Through the gossip protocol, the cluster provides important functions such as synchronizing state updates between nodes and electing a new master for automatic failover.

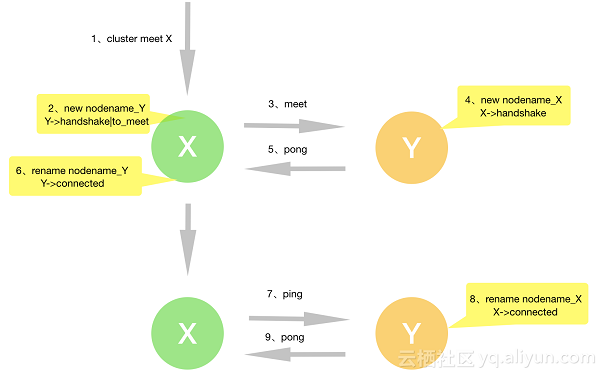

After a client sends a cluster meet request naming node Y to node X, node X tries to establish a connection with node Y. At this point, node X stores node Y with the states CLUSTER_NODE_HANDSHAKE and CLUSTER_NODE_MEET set.

The following is the meet process:

(0) Node X generates a random node name for node Y with the getRandomHexChars function.

(1) When clusterCron runs, node X picks the nodes in the cluster->nodes list that have no established TCP connection and connects to them. If a meet has not yet been sent, it sends CLUSTERMSG_TYPE_MEET. After node Y receives the meet message:

(2) Node Y checks whether node X is a known node that is no longer in the handshake stage. If so, it compares the fields sent by the sender with its own record and updates its local information about node X.

(3) If node X is not present in cluster->nodes, node Y adds it under a randomly generated node name and sets its state to CLUSTER_NODE_HANDSHAKE.

(4) Node Y processes the gossip section of the message, which carries information about other cluster nodes, and starts handshakes with those nodes.

(5) Node Y sends CLUSTERMSG_TYPE_PONG to node X, which concludes the processing on node Y (note that at this point link->node is NULL in node Y's clusterReadHandler function).

(6) After node X receives the pong, it finds that it is currently in the handshake stage with node Y. It updates the address and node name of node Y and clears the CLUSTER_NODE_HANDSHAKE state.

(7) In its own cron() run, node Y sends a ping to node X, to which it has no established connection yet.

(8) After node X receives the ping, it sends a pong to node Y.

(9) Node Y clears the CLUSTER_NODE_HANDSHAKE state it stored for node X and updates node X's node name and address. The handshake is now complete, and both nodes store the same node names and information.
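The steps above boil down to a few flag transitions on the record that one node keeps for its peer. The following is a simplified sketch, not the Redis implementation: peer_record, the NODE_* flag values, and the three functions are hypothetical stand-ins for the CLUSTER_NODE_HANDSHAKE and CLUSTER_NODE_MEET handling described in the text.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define NAMELEN 40              /* Assumed node name length. */
#define NODE_HANDSHAKE (1 << 0) /* Stand-in for CLUSTER_NODE_HANDSHAKE. */
#define NODE_MEET      (1 << 1) /* Stand-in for CLUSTER_NODE_MEET. */

/* Hypothetical record a node keeps about a peer it is meeting. */
typedef struct {
    char name[NAMELEN + 1];
    int flags;
} peer_record;

/* Step (0): store the peer under a temporary random name. */
static void peer_start_handshake(peer_record *p, const char *random_name) {
    assert(p != NULL && random_name != NULL);
    snprintf(p->name, sizeof(p->name), "%s", random_name);
    p->flags = NODE_HANDSHAKE | NODE_MEET;
}

/* Step (1): once the meet has been sent, do not send it again. */
static void peer_meet_sent(peer_record *p) {
    p->flags &= ~NODE_MEET;
}

/* Step (6): a pong during the handshake reveals the peer's real name.
 * Returns 1 if the handshake was completed by this pong, 0 otherwise. */
static int peer_pong_received(peer_record *p, const char *real_name) {
    if (!(p->flags & NODE_HANDSHAKE)) return 0;
    snprintf(p->name, sizeof(p->name), "%s", real_name);
    p->flags &= ~NODE_HANDSHAKE;
    return 1;
}
```

Calling the three functions in order on one record models one side of the handshake; the other side runs through the same transitions when it pings back.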

Having walked through the entire handshake process, consider two questions:

1. What happens if node X clears the CLUSTER_NODE_MEET state after sending the meet, but the meet actually failed?

In this case node X simply sends node Y a ping in the next clusterCron run, but node Y does not save node X into cluster->nodes. As a result, node X believes that a connection has been established, while node Y does not recognize node X. Later, as gossip propagates, if another node that knows node X sends a ping to node Y, node Y will on its own initiative send a meet to node X to establish a connection.

2. What happens if node Y already stores node X but receives a meet request from node X?

(1) Node Y sends a pong to node X.

(2) If the stored node is currently in the handshake stage, it is deleted directly, which causes node Y to lose its information about node X. This is equivalent to question 1.

(3) If it is not in the handshake stage, processing continues along the normal pong flow.

(1) Node Y creates another random node name for node X, places it in nodes, and sets it to the handshake stage. At this point two node names exist for node X.

(2) Node Y sends a pong to node X.

(3) If node Y has already established a link with node X, node Y updates node X's node name locally, deletes the node stored under the first node name, and updates the handshake state. At this point only the second, correct node name remains.

(4) If node Y has not established a link with node X, it sends ping requests to node X again in clusterCron(), one for each of the two node names in succession.

(5) After the ping for the first node name is sent, node X's node name is updated from the pong received in node X's response.

(6) After the ping for the second node name is sent, the node name carried in node X's pong is already present. The second node name is still in the handshake state, so node Y deletes it directly.

Conclusion: a node can lose track of the other node only when the two node names are the same and both nodes are in the handshake stage.

For the specifics, refer to the article "Understanding the Failover Mechanism of Redis Cluster".

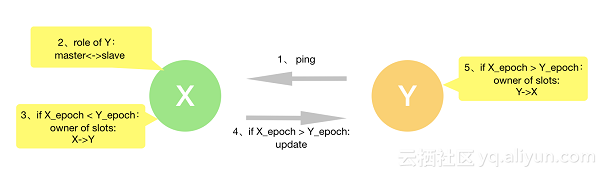

When two masters appear, how does the gossip protocol handle the conflict?

It is necessary to understand two key variables first, currentEpoch and configEpoch. Every ping packet carries both of them, together with the sender's own slots information.

When the master node receives a packet from the slave node, the processing flow is as follows:

(1) The receiver checks the role of the sender.

(2) It checks whether the slots claimed by the sender conflict with the slots in the receiver's cluster view. If there is a conflict, the following comparisons are carried out.

(3) If the sender's configEpoch is greater than the configEpoch of the slots owner in the receiver's cluster view, the receiver resets the slots owner to the sender in the clusterUpdateSlotsConfigWith function and sets the old slots owner as a slave of the sender. It then checks whether this node has any dirty slots and, if so, clears them.

(4) If the sender's configEpoch is lower than the configEpoch of the slots owner in the receiver's cluster view, the receiver sends an update message to notify the sender of the newer configuration. The sender node then also executes the clusterUpdateSlotsConfigWith function.
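The comparison in steps (2) to (4) can be sketched as a small decision function for one contested slot. This is an illustration under stated assumptions: node_info, slot_decision, and resolve_slot_claim are hypothetical names, and the tie case is only reported here, since the article treats it separately.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, reduced node record: only the field the comparison needs. */
typedef struct {
    uint64_t config_epoch;
} node_info;

typedef enum {
    NO_CONFLICT,      /* The sender already owns the slot in our view. */
    REBIND_TO_SENDER, /* The sender has the newer configuration: step (3). */
    SEND_UPDATE,      /* The sender is stale; notify it: step (4). */
    EPOCH_TIE         /* Equal epochs; handled by a separate mechanism. */
} slot_decision;

/* Decide what to do when `sender` claims a slot that our view assigns
 * to `owner`. */
static slot_decision resolve_slot_claim(const node_info *owner,
                                        const node_info *sender) {
    assert(owner != NULL && sender != NULL);
    if (owner == sender) return NO_CONFLICT;
    if (sender->config_epoch > owner->config_epoch) return REBIND_TO_SENDER;
    if (sender->config_epoch < owner->config_epoch) return SEND_UPDATE;
    return EPOCH_TIE;
}
```

The design point is that configEpoch alone orders competing claims: the higher value wins the slot, and the loser is told to catch up.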

What happens if the two nodes are both masters and their configEpoch and currentEpoch are the same?

The receiver increments its currentEpoch and assigns the new value to its configEpoch, which forces an increment to resolve the conflict. Because its configEpoch is now larger, the flow described above applies again.

So two masters may be present at the same time for a while, but in the end a single new master is selected.
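A minimal sketch of this forced increment follows. The node_info structure and handle_epoch_collision are hypothetical names. The name comparison used as a tie-break is not described in the text above; it is included as an assumption so that only one of the two colliding nodes moves.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define NAMELEN 40 /* Assumed node name length. */

/* Hypothetical, reduced node record. */
typedef struct {
    char name[NAMELEN];
    uint64_t config_epoch;
    int is_master;
} node_info;

/* If `myself` and `sender` are both masters with the same configEpoch,
 * bump the cluster epoch and take it as the new configEpoch.
 * Returns 1 if `myself` changed its configEpoch, 0 otherwise. */
static int handle_epoch_collision(node_info *myself, const node_info *sender,
                                  uint64_t *current_epoch) {
    assert(myself != NULL && sender != NULL && current_epoch != NULL);
    if (sender->config_epoch != myself->config_epoch) return 0;
    if (!sender->is_master || !myself->is_master) return 0;
    /* Assumed tie-break: only the node with the smaller name acts. */
    if (memcmp(sender->name, myself->name, NAMELEN) <= 0) return 0;
    (*current_epoch)++;
    myself->config_epoch = *current_epoch;
    return 1;
}
```

After the call, the node that acted holds the larger configEpoch, so the ordinary slot comparison by configEpoch can decide between the two masters.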

ApsaraDB for Redis is a stable, reliable and scalable database service with superb performance. It is structured on Apsara Distributed File System and full SSD high-performance storage, and supports master-slave and cluster-based high-availability architectures. It offers a full range of database solutions including disaster recovery switchover, failover, online expansion, and performance optimization. We welcome everyone to purchase and use ApsaraDB for Redis.
