By Minchao from Alibaba F(x) Team

Assigning semantics to UI elements has long been a challenge for Design-to-Code (D2C) and Artificial Intelligence (AI). Semantic naming is a key link in AI code generation products such as D2C and is crucial for human-centered design. At present, most common semantic technologies, such as TextCNN, Attention, and BERT, work on the text of fields and are quite effective. However, they have limitations when applied to D2C: D2C is expected to become an end-to-end system, and semantic naming is difficult when it relies on field text alone.

For example, it is hard to bind the field "¥200" to an appropriate semantic meaning, because "¥200" may be the original price, the discounted price, and so on. The existing enumeration approach is also limited. Therefore, to implement semantic interface elements in D2C, at least two problems need to be solved. First, the generated semantics must fit the current interface. Second, restrictions on users must be reduced, so that users do not need to enter additional auxiliary information.

In recent years, Reinforcement Learning (RL) has performed outstandingly in AlphaGo, robotics, autonomous driving, games, and other fields, attracting many researchers. This article introduces Deep Reinforcement Learning (DRL) to address the semantic problem of interface elements. As part of a DRL-based semantic solution, we construct an RL training environment suited to semantic problems. Experimental results indicate that the proposed solution is effective.

In this article, the semantic problem of interface elements is regarded as a context-dependent decision-making problem. The solution starts directly from interface images and takes them as the input to DRL. Through continuous trial and error, DRL learns an optimal strategy, and that strategy yields the optimal semantics. In addition, fields whose semantic names are difficult for the decision model to output are handled by a text classification model to safeguard the overall semantic quality.

The structure of this article is as follows. First, the background and problems of field semantics in D2C products are introduced in detail to explain the motivation. Second, the key RL technology and the text classification model based on the Attention mechanism are explained as the foundation of the technical solution. Third, the RL-based semantic decision model and the Attention-based text classification model are described in detail. The article closes with a summary and an outlook.

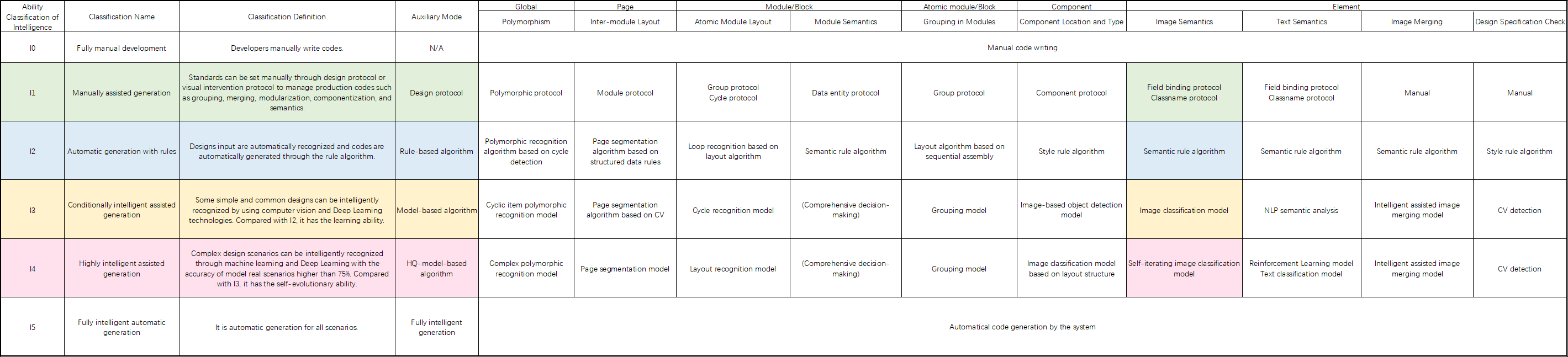

During the development of D2C, AI models have been introduced into the field semantics function to reduce the limitations and errors of rule-based algorithms. The rule-based semantic technology in D2C is described in full in Imgcook Semantics. The classification of semantic analysis capabilities within the overall D2C picture is shown below. Last year, we enhanced code semantics by using image and text classification models. This year, RL combined with the UI is introduced to analyze semantics.

Ability Classification of Semantic Analysis

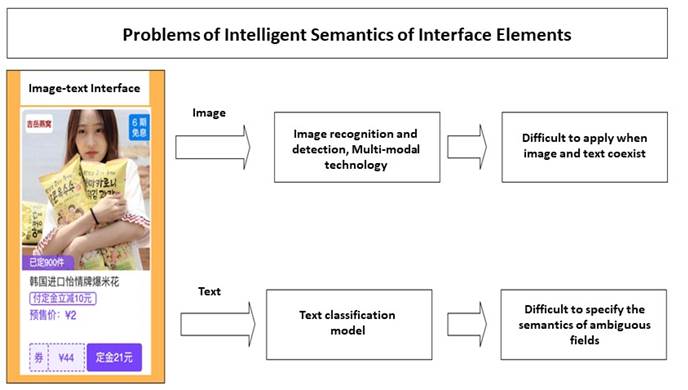

As a benchmark product for front-end intelligence, D2C faces both innovation and problems on the way forward. The semantics of image-text interface elements, a stumbling block to intelligent coding, prevents D2C from becoming a truly end-to-end intelligent system. Effective intelligent solutions are therefore urgently needed to realize the semantic function of D2C. The following figure shows the challenges of intelligent semantics:

As shown in the preceding figure, existing AI technologies struggle to assign semantics to image-text interface elements intelligently, and they fall short from both the image and the text perspective. On the image side, current image recognition, detection, and multi-modal technologies have difficulty with the coexistence of images and text encountered in D2C. On the text side, a simple text classification model cannot handle ambiguous fields such as prices. This article therefore proposes a solution that combines multiple AI models to address the semantic problem of image-text interface elements. The scheme is also a general framework: it applies not only to the semantics of interface elements but also to other tasks that a single AI model cannot solve.

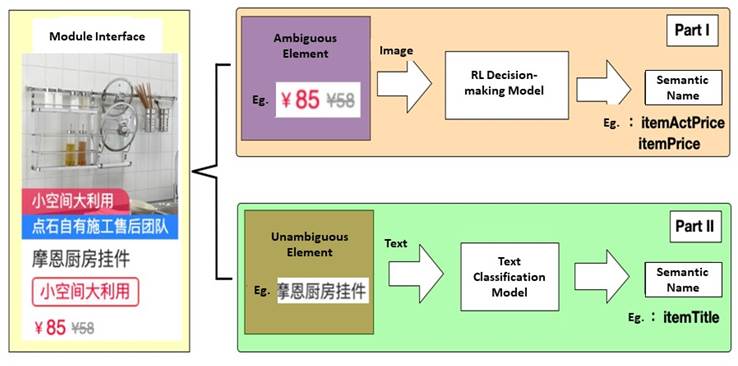

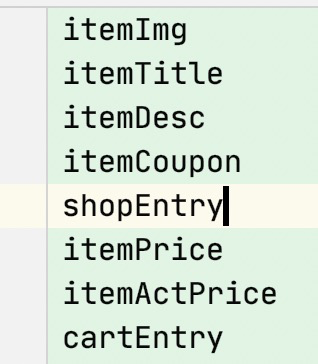

To solve the semantic problem of image-text interface elements, this article adopts a two-step solution: semantic decision followed by text classification. In the first step, an RL model "filters" the interface elements according to style rules and decides the semantic names of ambiguous elements that a text classification model cannot handle. In the second step, a text classification model classifies the remaining unambiguous elements. The framework is as follows:

In the semantic task of interface elements, the fields that a text classification model cannot handle are generally ambiguous ones, such as "¥85". However, these fields follow certain style rules. For example, when "¥85" is followed by a price with a strikethrough, "¥85" can be regarded as the discounted price. This leads to the technical solution of this article: an RL decision-making model working on images decides the semantic names of ambiguous elements that follow style rules, and a text classification model then decides the semantic names of unambiguous elements. The practice and experience with these two models are described in detail below.
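The two-step flow can be sketched in Python as a simple router. This is only an illustration under stated assumptions, not the article's implementation: `is_ambiguous`, the price pattern, and the two callables `decide_with_rl` and `classify_text` are hypothetical names, and the article does not specify how ambiguity is detected.

```python
import re
from typing import Callable, Dict, List

# Assumption: price-like text stands in for "ambiguous" fields in this sketch.
PRICE_LIKE = re.compile(r"^[¥$]\s*\d+(\.\d+)?$")


def is_ambiguous(text: str) -> bool:
    """Hypothetical rule: a bare price such as "¥85" has no meaning on its own."""
    return bool(PRICE_LIKE.match(text.strip()))


def name_elements(
    texts: List[str],
    decide_with_rl: Callable[[str], str],
    classify_text: Callable[[str], str],
) -> Dict[str, str]:
    """Route each element to the decision model or to the text classifier."""
    names = {}
    for text in texts:
        model = decide_with_rl if is_ambiguous(text) else classify_text
        names[text] = model(text)
    return names


# Stub models used only to show the routing. A real decision model would
# look at the module image and the element frame, not just the text.
result = name_elements(
    ["¥85", "Add to cart"],
    decide_with_rl=lambda t: "discountPrice",
    classify_text=lambda t: "button",
)
print(result)
```

The point of the split is that each model only sees the elements it can handle; any real routing rule would replace the placeholder pattern used here.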

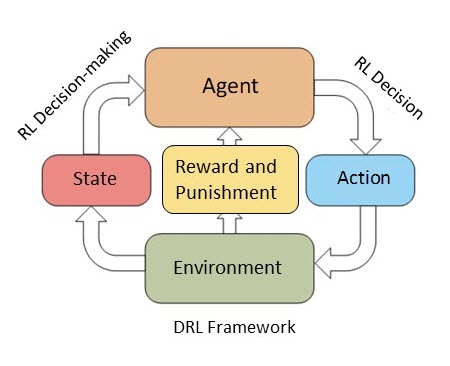

RL learns a strategy that maximizes the long-term reward and punishment value obtained from the interaction between an agent and an environment. Traditional RL is often used for tasks with a small state space or action space, and with the rapid development of big data and Deep Learning (DL) it could not cope with high-dimensional input. In 2013, Mnih et al. therefore introduced Convolutional Neural Networks (CNN) [1-3] from DL into RL for the first time and proposed the Deep Q-Network (DQN) algorithm [4]. Research on Deep Reinforcement Learning (DRL) has grown internationally since then. A milestone in DRL development was the match between AlphaGo and Lee Sedol in 2016 [6]. AlphaGo, a DRL-based computer program developed by DeepMind, an AI team under Google, defeated Lee Sedol, one of the world's top Go masters. The result shocked the world, and DRL has been widely known to the public ever since.

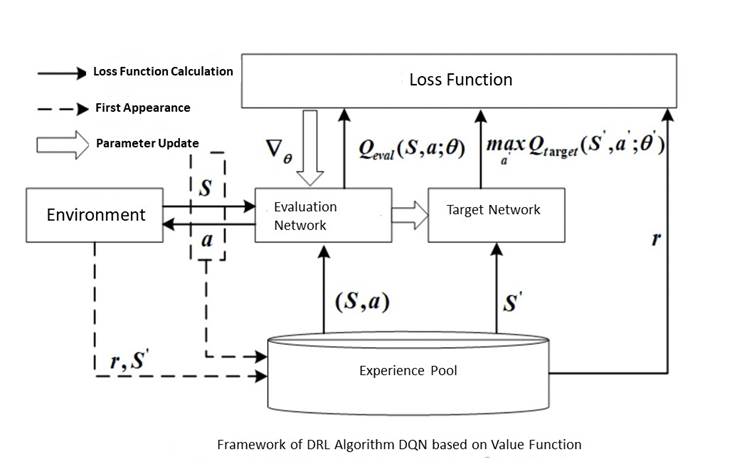

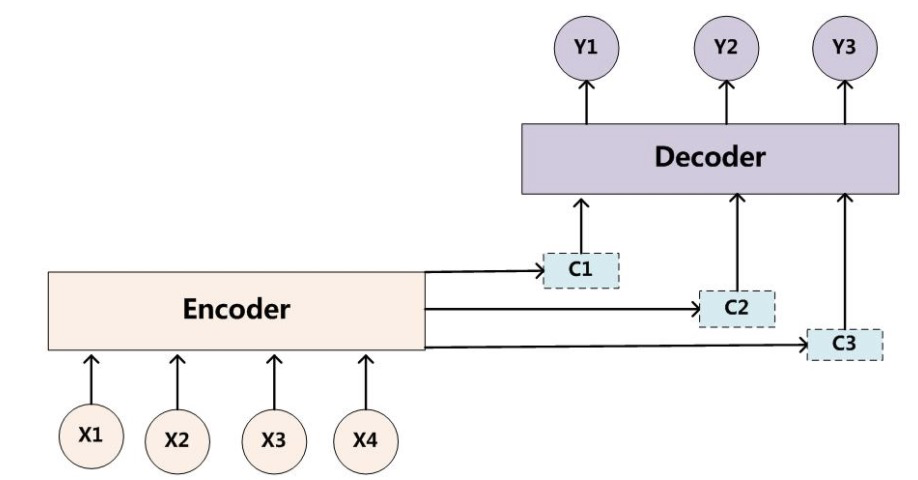

DRL combines the feature extraction capability of DL [8] with the decision-making capability of RL [9]. It is an end-to-end decision-making and control system that can output the optimal decision directly from multi-dimensional input. It is widely used in dynamic decision-making, real-time prediction, simulation, games, and other fields. Through continuous real-time interaction with the environment, DRL takes environment information as input, gathers experience of failure and success, and uses it to update the parameters of the decision network. This is how it learns the optimal decision. The DRL framework is as follows:

In the DRL framework shown in the preceding figure, the agent interacts with the environment and extracts features of the environment state through DL. It then passes the results to RL for decision-making and action execution. After the action is completed, the environment returns the new state together with rewards and punishments, and the decision-making algorithm is updated. This process is repeated, and the agent finally learns the strategy that obtains the maximum long-term reward and punishment value.

The practice of RL in D2C semantic field binding consists of three parts, in order: constructing the RL training environment, training the algorithm model, and testing the model. They are described below.

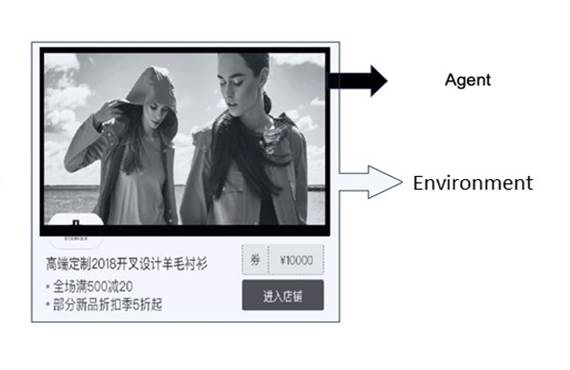

The important factors of RL are the agent, the environment, the design of the reward and punishment function, and the design of each step. This article treats the semantic field recognition task as a game. During the game, the algorithm model continuously updates its parameters according to feedback from the environment and learns how to maximize the reward and punishment function. Specifically, a module image is used directly as the environment, and an element frame in the module is used as the agent. During training, the agent (element frame) moves from top to bottom and from left to right, as if walking through a maze. At each step it must select an action, and this action is the semantic field we want. The agent can go to the next step only when it selects the correct action. If the agent completes all elements, the algorithm model has learned the right way.
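The visiting order described above, top to bottom and then left to right, can be sketched as a sort over element frames. This is a minimal illustration: the `Frame` type and the `reading_order` function are hypothetical names, and using only the top-left corner as the sort key is an assumption, since the article does not say how ties or slightly misaligned rows are handled.

```python
from typing import List, NamedTuple


class Frame(NamedTuple):
    """Bounding box of one element in the module image, in pixels."""
    xmin: int
    ymin: int
    xmax: int
    ymax: int


def reading_order(frames: List[Frame]) -> List[Frame]:
    """Sort frames top to bottom, then left to right.

    Assumption: the top-left corner alone decides the order.
    """
    return sorted(frames, key=lambda f: (f.ymin, f.xmin))


frames = [Frame(120, 40, 180, 60), Frame(10, 40, 90, 60), Frame(10, 5, 200, 30)]
ordered = reading_order(frames)
for frame in ordered:
    print(frame)
```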

This solution models semantic field binding as a context-dependent decision-making problem, and the RL model is used to find the semantics of fields. The important factors of RL in semantic field binding are defined as follows:

Environment: the entire module image is the environment of RL, and the agent's position within it defines the current state.

Agent: the element frame in the module is the agent.

The environment and the agent are shown in the following figure:

Action: the semantic name selected by the agent (element frame) at each step as it moves from top to bottom and from left to right in the environment (module image).

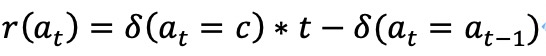

Reward: each time the agent moves a step, it selects an action (semantic name). If the selection is correct, the agent gains points. If the selection is wrong, the agent loses points. The definitions are as follows:

If x is true, d(x)=1. If x is false, d(x)=0. The variables a_t and a_{t-1} denote the action taken in the current state and the action taken in the previous state respectively, that is, the selected semantic names. The variable c denotes the real semantic name of the element.
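The game described above can be sketched as a toy environment. This is a simplified illustration under assumptions, not the article's environment: the class `SemanticEnv` is a hypothetical name, the state is reduced to the index of the current element instead of the module image, and the reward of +1 for a correct name and -1 for a wrong one is an assumed form. The exact reward formula, including the role of a_{t-1}, is given in the article's figure and is not reproduced here.

```python
from typing import List, Tuple


def d(x: bool) -> int:
    """Indicator from the text: 1 if x is true, 0 otherwise."""
    return 1 if x else 0


class SemanticEnv:
    """Toy environment: one labelled element per step, visited in order."""

    def __init__(self, true_names: List[str], actions: List[str]):
        self.true_names = true_names  # c for each element, in visiting order
        self.actions = actions        # candidate semantic names
        self.position = 0             # index of the element the agent is on

    def reset(self) -> int:
        self.position = 0
        return self.position

    def step(self, action_index: int) -> Tuple[int, int, bool]:
        """Apply action a_t and return (next position, reward, done)."""
        a_t = self.actions[action_index]
        c = self.true_names[self.position]
        correct = a_t == c
        # Assumed reward: +1 for a correct name, -1 for a wrong one.
        reward = d(correct) - d(not correct)
        if correct:
            self.position += 1  # the agent advances only on a correct choice
        done = self.position == len(self.true_names)
        return self.position, reward, done


env = SemanticEnv(
    true_names=["title", "discountPrice"],
    actions=["title", "originalPrice", "discountPrice"],
)
env.reset()
first = env.step(1)   # wrong name for the first element
second = env.step(0)  # correct name, the agent moves on
third = env.step(2)   # correct name for the last element
print(first, second, third)
```

Keeping the agent in place after a wrong choice mirrors the maze analogy: the episode ends only once every element has received its correct name.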

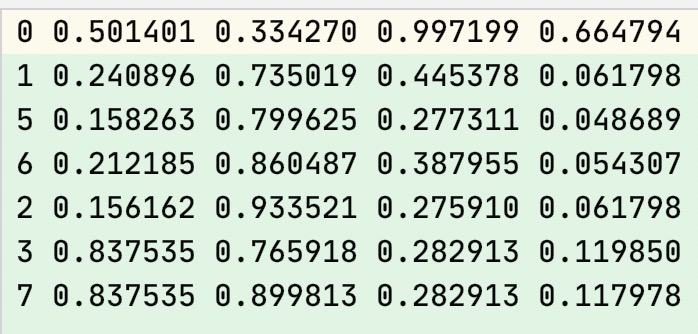

RL does not need labelled data in the way supervised learning does. However, for semantic field recognition, labels are added manually so that the reward and punishment function is easy to build. If the CSS code of the module images is available, labels are not needed. LabelImg can be used to label the dataset, with each element given its corresponding semantic field. The labelling method is shown in the following figures: a module image, the labelling information of the elements in the module, and the semantic field information.
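LabelImg can save annotations as Pascal VOC XML, where each `object` has a `name` and a `bndbox`. Assuming that format is used and that the label name holds the semantic field, the labels could be read as sketched below. The sample annotation, its file name, and the field names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical annotation in the Pascal VOC layout that LabelImg can export.
SAMPLE_XML = """
<annotation>
  <filename>module_001.png</filename>
  <object>
    <name>discountPrice</name>
    <bndbox><xmin>12</xmin><ymin>80</ymin><xmax>60</xmax><ymax>100</ymax></bndbox>
  </object>
  <object>
    <name>originalPrice</name>
    <bndbox><xmin>70</xmin><ymin>82</ymin><xmax>110</xmax><ymax>98</ymax></bndbox>
  </object>
</annotation>
"""

Box = Tuple[int, int, int, int]


def parse_annotation(xml_text: str) -> List[Tuple[str, Box]]:
    """Return (semantic field, (xmin, ymin, xmax, ymax)) for every element."""
    root = ET.fromstring(xml_text.strip())
    elements = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        box = obj.find("bndbox")
        coords = tuple(
            int(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        elements.append((name, coords))
    return elements


labels = parse_annotation(SAMPLE_XML)
print(labels)
```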

To train the semantic model, the environment constructed above is imported, and the model learns the optimal strategy from its mistakes in that environment. This article uses a value-function-based DRL algorithm as one of the concrete techniques for semantic field recognition. Such algorithms use a CNN to approximate the action-value function of traditional RL, and the representative algorithm is DQN. The framework of DQN is as follows:

DQN contains two neural networks with the same structure, called the target network and the evaluation network. The output of the target network, Qtarget, is the discounted score when action a is selected in state S. The output of the evaluation network, Qeval, is the value of taking action a in state S.
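For reference, the two outputs are commonly written as follows in the standard DQN formulation of Mnih et al. [4, 5]. This is assumed to match the setup here rather than taken from the article: the reward r, the discount factor γ, the next state S', and the parameter sets θ (evaluation network) and θ⁻ (target network) are notation introduced for this sketch, and the squared difference is the usual choice of loss.

```latex
\begin{aligned}
Q_{\text{target}} &= r + \gamma \max_{a'} Q\left(S', a'; \theta^{-}\right), \\
Q_{\text{eval}} &= Q\left(S, a; \theta\right), \\
L(\theta) &= \mathbb{E}\left[\left(Q_{\text{target}} - Q_{\text{eval}}\right)^{2}\right].
\end{aligned}
```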

The DQN training process involves three phases.

In the first phase, the experience pool D is not yet full. At each time step t, an action is selected at random and an experience tuple is obtained. The experience tuples of all steps are stored in the experience pool D. This phase is mainly used to accumulate experience, and the two networks of DQN are not trained.

The second phase, exploration, adopts the ε-greedy strategy (ε gradually decreases from 1 to 0) to obtain action a. While the network generates decisions, it can also explore other possibly optimal actions with a certain probability, which avoids falling into a locally optimal solution. In this phase, the experience tuples in the experience pool are continuously refreshed and fed to the evaluation network and the target network, which produce Qeval and Qtarget. The difference between the two is taken as the loss function, and the weight parameters of the evaluation network are updated by gradient descent. To make training converge, the target network is updated differently: the weight parameters of the evaluation network are copied to the target network at regular intervals of iterations.

In the third phase, ε has decreased to 0, so every selected action comes from the output of the evaluation network. The evaluation network and the target network are updated in the same way as in the exploration phase.

DQN, the value-function-based DRL algorithm, trains its networks through the three phases above. When training converges, the evaluation network approximates the optimal action-value function, and the optimal strategy has been learned.
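The control logic of the three phases can be sketched without any neural network code. This is a minimal illustration under assumptions: the linear decay schedule, the phase labels, and the helper names `epsilon_at`, `select_action`, `phase`, and `should_sync_target` are all hypothetical, and `step` is assumed to count from the moment the experience pool is full.

```python
import random
from typing import Sequence


def epsilon_at(step: int, decay_steps: int) -> float:
    """Linearly decrease epsilon from 1 to 0 over decay_steps steps (assumed schedule)."""
    return max(0.0, 1.0 - step / decay_steps)


def select_action(q_values: Sequence[float], epsilon: float) -> int:
    """Epsilon-greedy: a random action with probability epsilon, else the best one."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def phase(pool_size: int, pool_capacity: int, epsilon: float) -> str:
    """Name the current phase; the labels are chosen for this sketch only."""
    if pool_size < pool_capacity:
        return "fill pool"  # random actions, no network training yet
    return "explore" if epsilon > 0.0 else "exploit"


def should_sync_target(train_step: int, sync_every: int) -> bool:
    """Copy evaluation weights to the target network at regular intervals."""
    return train_step > 0 and train_step % sync_every == 0


q_values = [0.1, 0.9, 0.3]  # hypothetical evaluation network output
greedy_action = select_action(q_values, epsilon=0.0)
print(epsilon_at(50, 100), greedy_action, phase(100, 100, 0.5))
```

With epsilon at 0 the choice is fully greedy, which corresponds to the third phase, where every action comes from the evaluation network.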

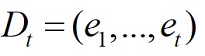

An RL model is evaluated differently from a classification or detection model: there is no indicator such as accuracy. Instead, the RL model is judged by whether its score keeps rising without falling back. In this article's experiments, the score kept rising. The overall score changes are as follows:

The RL algorithm training result is shown as follows:

This article uses the Attention mechanism from Natural Language Processing (NLP) for the text classification task. The Attention Model (AM) is an Encoder-Decoder framework with the Attention mechanism added, which is suitable for generating one sentence or passage from another. The Attention mechanism reweights the target data and can therefore output a semantic translation. Fields in the field binding task carry emphases, such as the field's data and style, so AM is expected to suit them better than a traditional text classification model, which motivated this experiment.

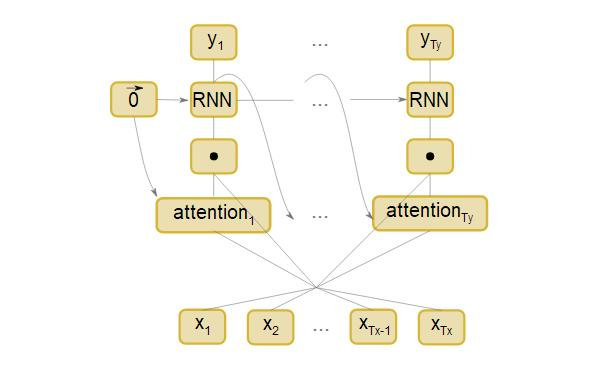

AM reweights the target data. It is a resource allocation model that is sensitive to certain keywords in the text and captures the meaning of the text well. AM is essentially an Encoder-Decoder framework. It differs from traditional Encoder-Decoder frameworks in that the intermediate semantics produced by the encoder is dynamic. Each word in the target sentence learns an attention probability distribution over the words of the source sentence. This means that when each word Yi is generated, the single fixed intermediate semantic representation C is replaced by a representation Ci that changes with the word currently being generated. This replacement of C by Ci is the key to understanding AM. The following figure shows the Encoder-Decoder framework with AM:
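The replacement of C by Ci is usually written as below. This follows the common attention formulation and is assumed rather than taken from the article: h_j (the encoder state of source word j), e_ij (the alignment score between output position i and source word j), T_x (the source length), and the decoder function g are notation introduced for this sketch.

```latex
\begin{aligned}
\alpha_{ij} &= \frac{\exp(e_{ij})}{\sum_{k=1}^{T_x} \exp(e_{ik})}, \\
C_i &= \sum_{j=1}^{T_x} \alpha_{ij}\, h_j, \\
Y_i &= g\left(C_i, Y_1, \dots, Y_{i-1}\right).
\end{aligned}
```

Here the weights α_ij form the attention probability distribution that target word Yi places over the source words.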

In the application of semantic interface elements, the Attention-based text classification model consists of three parts: training dataset construction, model training, and model testing. Each of them will be introduced below.

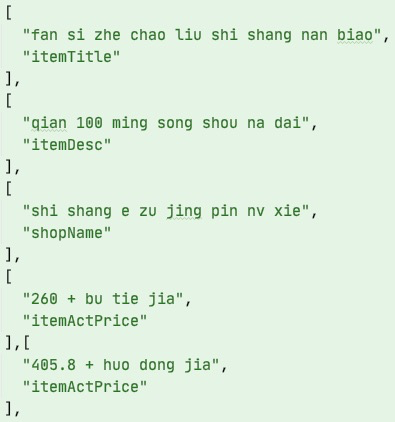

To embed fields, two JSON files are created. In the first JSON file, the input is the field's pinyin and the output is the bound semantic field.

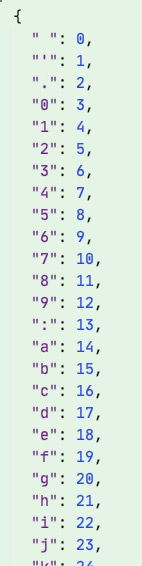

The second JSON file serves as a dictionary. The key values of input fields are looked up in this file to make embedding easier.
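How the two files could work together is sketched below. The schemas are not given in the article, so everything here is an assumption: the `input`/`output` keys, the sample pairs, the character-level dictionary, and the helper names `build_vocab` and `encode` are hypothetical.

```python
import json
from typing import Dict, List

# Hypothetical content of the first file: pinyin input, bound semantic field output.
PAIRS_JSON = (
    '[{"input": "yuanjia", "output": "originalPrice"},'
    ' {"input": "biaoti", "output": "title"}]'
)


def build_vocab(pairs: List[Dict[str, str]]) -> Dict[str, int]:
    """Map every character seen in the inputs to an integer id (0 is padding)."""
    chars = sorted({ch for pair in pairs for ch in pair["input"]})
    return {ch: i + 1 for i, ch in enumerate(chars)}


def encode(text: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Turn text into a fixed-length id sequence; unknown characters map to 0."""
    ids = [vocab.get(ch, 0) for ch in text[:max_len]]
    return ids + [0] * (max_len - len(ids))


pairs = json.loads(PAIRS_JSON)
vocab = build_vocab(pairs)
vocab_json = json.dumps(vocab)  # what the second, dictionary file could hold
encoded = encode("yuanjia", json.loads(vocab_json), max_len=10)
print(encoded)
```

The resulting id sequence is the kind of input an embedding layer expects.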

The following figure shows the network structure of AM in this article:

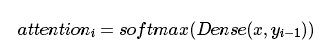

The first step: the attention layer:

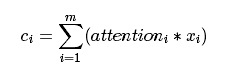

The second step: calculating the weighted sum of attention weight and input (called context):

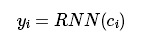

The third step: RNN layer:

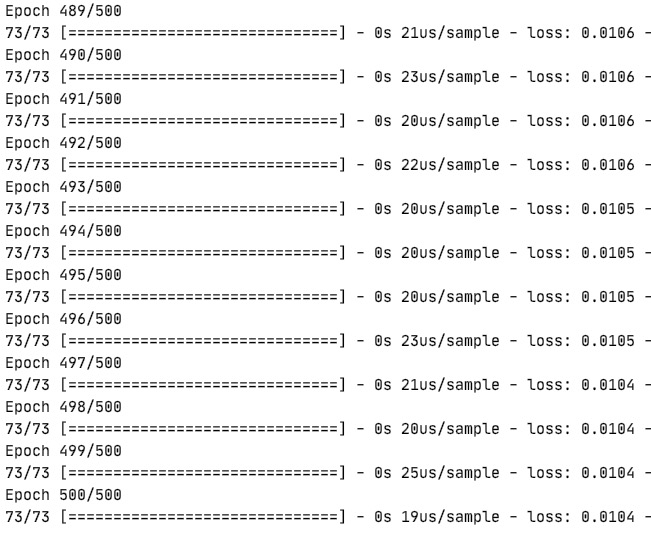
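The second step above, the weighted sum of attention weights and inputs, can be sketched in plain Python. This is a minimal illustration, not the network shown in the figures: the function names are hypothetical, and the raw scores are passed in directly, whereas in the model they would come from the attention layer.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into attention weights that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(
    inputs: List[List[float]], scores: List[float]
) -> Tuple[List[float], List[float]]:
    """Return the attention weights and the context (weighted sum of inputs)."""
    weights = softmax(scores)
    dim = len(inputs[0])
    context = [
        sum(w * vec[k] for w, vec in zip(weights, inputs)) for k in range(dim)
    ]
    return weights, context


# Two input vectors with equal scores: the context is their average.
weights, context = attention_context([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
print(weights, context)
```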

The loss changes during model training are as follows:

Here are some test examples:

This is a two-step solution that combines style-based decision-making with text classification. On the image side, it uses the decision-making capability of RL to recognize the semantic names of ambiguous elements. On the text side, it uses a text classification model to recognize the semantic names of unambiguous elements. Each model contributes its own strengths to the intelligent semantic task. In addition, because the solution is a model training framework, it can be applied to many other tasks and scenarios. The Attention-based text classification model has a further advantage: its labels are not limited to a fixed set of classes, because the model can output the bound field letter by letter.

In the future, this solution will focus on the construction of the RL environment, because this is the core of the decision-making model. The RL training environment should be made more general so that the agent can learn more effectively. In addition, since the RL-based solution is applicable to semantic interface elements, it is expected to be added to the layout grouping recognition of D2C in the future. The intended pipeline is: interface image -> grouping module -> binding of fields with styles -> binding of fields without styles. Another prospect is to process the image-text interface first with OCR text detection to extract the text, then decide whether each piece of text should be sent to the decision-making model or to the text classification model, and finally assign its semantics. In this way, a more ideal end-to-end system can be realized.

[1] Ketkar N. Convolutional Neural Networks[J]. 2017.

[2] Aghdam H H, Heravi E J. Convolutional Neural Networks[M]// Guide to Convolutional Neural Networks. Springer International Publishing, 2017.

[3] Gu, Jiuxiang, Wang, et al. Recent advances in convolutional neural networks[J]. PATTERN RECOGNITION, 2018.

[4] MNIH V, KAVUKCUOGLU K, SILVER D, et al. Playing Atari with deep reinforcement learning[J]. Computer Science, 2013, 1-9.

[5] MNIH V, KAVUKCUOGLU K, SILVER D, et al. Human-level control through deep reinforcement learning[J]. Nature, 2015, 518(7540): 529-533.

[6] 曹誉栊. 从AlphaGO战胜李世石窥探人工智能发展方向[J]. 电脑迷, 2018, 115(12):188.

[7] 刘绍桐. 从AlphaGo完胜李世石看人工智能与人类发展[J]. 科学家, 2016(16).

[8] LeCun Y, Bengio Y, Hinton G. Deep learning[J]. Nature, 2015, 521(7553): 436.

[9] 赵冬斌,邵坤,朱圆恒,李栋,陈亚冉,王海涛,刘德荣,周彤,王成红.深度强化学习综述:兼论计算机围棋的发展[J].控制理论与应用,2016,33(06):701-717.
