In manual code review (CR), browsing code as plain text inevitably takes a lot of time and reduces review efficiency. Is there a smarter way to do CR? The Syntax Intelligence Service of Apsara DevOps provides fast, cloud-backed code navigation: you can quickly view definitions and references without cloning the repository locally, which greatly improves the efficiency and quality of code review. This article shares the technical principles and implementation of the Syntax Intelligence Service.

Code is not a simple, flat, two-dimensional structure. To understand a piece of code, you need to jump repeatedly between definitions and references until you thoroughly understand its underlying logic and the scope of a change's impact. Browsing code as plain text is one of the biggest pain points of code review on a web page.

As Zhu Xi often said, "If your mind is not here, you will not look at anything carefully, and you will not remember what you are reading. Even if you remember it, you will not bear it in mind for a long time." If you only read the code as text, you may overlook something. For serious and responsible code reviewers, the Syntax Intelligence Service of Apsara DevOps is designed to help you fully understand code changes.

So what is the Syntax Intelligence Service? It is a fast, cloud-backed code navigation service. Without cloning the code locally, you can view and jump to definitions and references on the page, just as you would in a familiar editor, which greatly improves the efficiency and quality of CR.

The underlying technology of the Syntax Intelligence Service of Apsara DevOps is the Language Server Index Format (LSIF), a graph storage format for persisting language index data. Using a graph, it represents the relationships between code documents and syntax intelligence results.

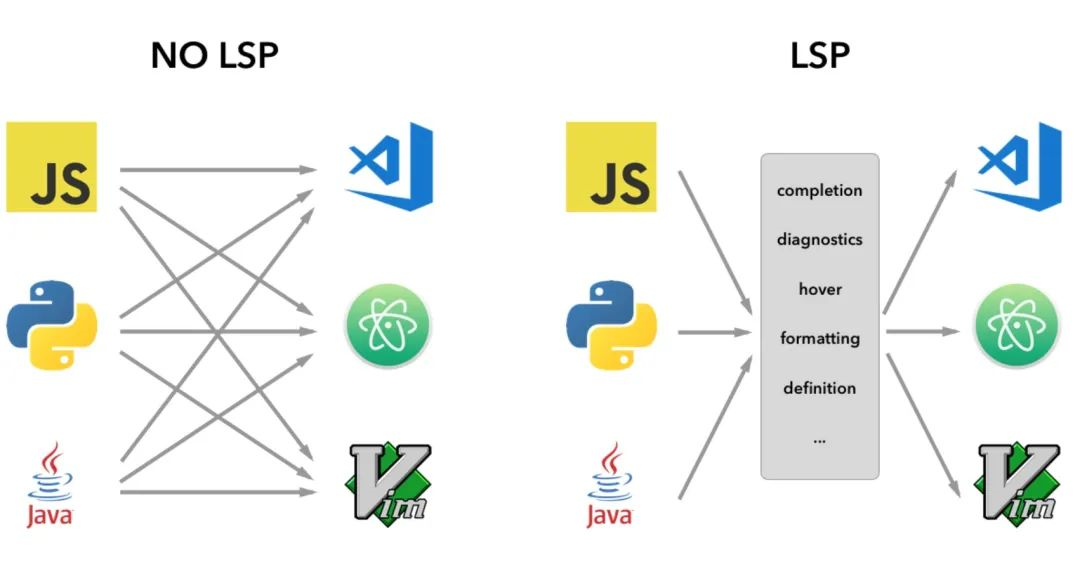

Prior to LSIF, the Language Server Protocol (LSP) defined the interaction protocol between programming languages and code editors. Originally, developers had to build a separate syntax analysis application for each combination of language and editor, so supporting M languages in N code editors required M x N applications. With LSP, developers only need to follow the LSP format when parsing code syntax and implementing interfaces such as code completion, definition display, and code diagnostics, so only M + N applications need to be developed.

However, code analysis often consumes a lot of time and resources. When a user requests a syntax service (such as viewing a definition), the backend needs to clone the code, download the dependency package, parse the syntax, and create indexes.

Initializing a project in IntelliJ IDEA is a typical example. In an editor, users are accustomed to this mode and accept waiting a few minutes. However, for CR or lightweight code browsing, this approach is too slow: the user may have finished browsing before the results are ready, and the lack of persistent storage may lead to excessive consumption of resources.

LSIF emerged in this context. Its idea is to trade space for time: compute the syntax analysis results in advance and store them in the cloud in a specific index format, so that multiple requests from different users can be answered quickly.

I have used an official example to introduce LSIF, as shown in the following code:

// this is a sample class

public class Sample {

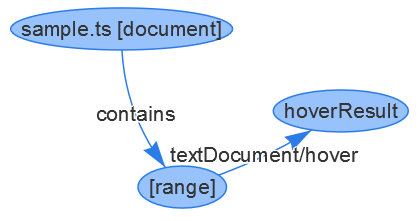

}Assuming that only one interaction mode exists, when you move the pointer over the class name in Sample, the comment "this is a sample class" is displayed. Use the LSIF diagram to describe it as follows.

The sample document contains a range, and this range is associated with a hoverResult. When a hover event is triggered within that range of the file, the stored hoverResult is returned.

Describing this graph in JSON gives the following:

```json
{ "id": 1, "type": "vertex", "label": "document", "uri": "file:///abc/sample.java", "languageId": "java" }
{ "id": 2, "type": "vertex", "label": "range", "start": { "line": 0, "character": 13 }, "end": { "line": 0, "character": 18 } }
{ "id": 3, "type": "edge", "label": "contains", "outV": 1, "inVs": [2] }
{ "id": 4, "type": "vertex", "label": "hoverResult", "result": { "contents": ["this is a sample class"] } }
{ "id": 5, "type": "edge", "label": "textDocument/hover", "outV": 2, "inV": 4 }
```

The actual LSIF graph of a project is very complex and often contains hundreds of thousands of nodes. For more information about LSIF, see [1].
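To make the graph traversal concrete, here is a minimal Python sketch that loads the five elements of the example and answers a hover request by walking document, range, and hoverResult. It assumes the hover text is stored under a `contents` key and that range ends are exclusive, as in the LSIF specification; the function names `load_graph`, `in_range`, and `hover` are illustrative and not part of any library.

```python
import json

# The five LSIF elements of the example, one JSON object per line.
# Assumption: the hover text sits under a "contents" key.
LSIF_LINES = """
{"id": 1, "type": "vertex", "label": "document", "uri": "file:///abc/sample.java", "languageId": "java"}
{"id": 2, "type": "vertex", "label": "range", "start": {"line": 0, "character": 13}, "end": {"line": 0, "character": 18}}
{"id": 3, "type": "edge", "label": "contains", "outV": 1, "inVs": [2]}
{"id": 4, "type": "vertex", "label": "hoverResult", "result": {"contents": ["this is a sample class"]}}
{"id": 5, "type": "edge", "label": "textDocument/hover", "outV": 2, "inV": 4}
"""


def load_graph(text):
    """Split LSIF JSON lines into a vertex table and an edge list."""
    vertices, edges = {}, []
    for line in text.strip().splitlines():
        element = json.loads(line)
        if element["type"] == "vertex":
            vertices[element["id"]] = element
        else:
            edges.append(element)
    return vertices, edges


def in_range(rng, line, character):
    """True if (line, character) lies inside the range (end exclusive)."""
    start = (rng["start"]["line"], rng["start"]["character"])
    end = (rng["end"]["line"], rng["end"]["character"])
    return start <= (line, character) < end


def hover(vertices, edges, uri, line, character):
    """Follow document -contains-> range -textDocument/hover-> hoverResult."""
    doc_ids = {v["id"] for v in vertices.values()
               if v["label"] == "document" and v["uri"] == uri}
    range_ids = [rid for e in edges
                 if e["label"] == "contains" and e["outV"] in doc_ids
                 for rid in e["inVs"]]
    for rid in range_ids:
        if in_range(vertices[rid], line, character):
            for e in edges:
                if e["label"] == "textDocument/hover" and e["outV"] == rid:
                    return vertices[e["inV"]]["result"]["contents"]
    return None


vertices, edges = load_graph(LSIF_LINES)
print(hover(vertices, edges, "file:///abc/sample.java", 0, 15))
```

A linear scan is enough for five elements; with hundreds of thousands of nodes, a real service would need an index over documents and ranges, which is what the storage design later in the article addresses.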

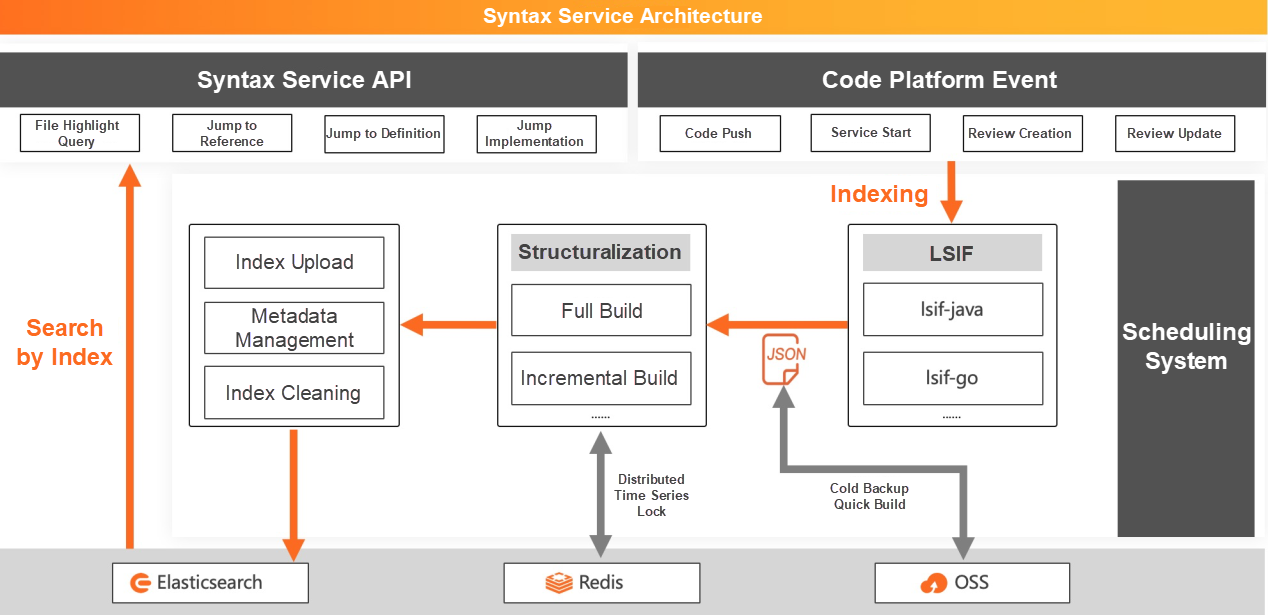

The syntax service architecture diagram of Apsara DevOps is divided into two parts:

Users mainly browse code during code review and on the main branch, so these are the two scenarios the syntax service currently supports. The syntax service receives event messages from the code platform, such as code push events and the creation, update, merging, closing, and reopening of reviews, and uses them to trigger index builds.

Our workflow scheduling framework is mainly based on tbschedule, Alibaba's open-source distributed scheduling framework. The system maintains a task cluster, uses ZooKeeper for node management and task distribution, and quickly processes scheduled tasks without repetition or omission.

For different languages, we only need to convert the source code to LSIF format once and apply LSIF in different scenarios. Multiple code languages are parsed into a unified LSIF format file.

For Java, the main language within Alibaba, we use Spoon [2], an open-source Java code analysis tool, to parse Java source code into an abstract syntax tree (AST). The tool then captures the associations between definitions and references and between definitions and annotations. It aggregates the coordinate information, annotation content, and associated files, and outputs them in LSIF JSON format.
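The output step can be pictured with a small Python sketch. It is not the lsif-java or Spoon implementation: it assumes the AST walk has already produced a list of symbols (the `symbols` structure and the `emit_lsif` helper are hypothetical), and it only emits document, range, and hover elements, leaving out definition and reference results.

```python
import json
from itertools import count


def emit_lsif(uri, language_id, symbols):
    """Turn extracted symbols into a list of LSIF elements.

    `symbols` is a hypothetical list of dicts produced by an AST walk:
    {"line": int, "start": int, "end": int, "comment": str}
    """
    ids = count(1)  # LSIF ids only need to be unique within the dump
    elements = []

    def vertex(label, **fields):
        element = {"id": next(ids), "type": "vertex", "label": label, **fields}
        elements.append(element)
        return element["id"]

    def edge(label, **fields):
        element = {"id": next(ids), "type": "edge", "label": label, **fields}
        elements.append(element)
        return element["id"]

    doc_id = vertex("document", uri=uri, languageId=language_id)
    range_ids = []
    for sym in symbols:
        range_id = vertex(
            "range",
            start={"line": sym["line"], "character": sym["start"]},
            end={"line": sym["line"], "character": sym["end"]},
        )
        range_ids.append(range_id)
        if sym.get("comment"):
            hover_id = vertex("hoverResult", result={"contents": [sym["comment"]]})
            edge("textDocument/hover", outV=range_id, inV=hover_id)
    # One "contains" edge links the document to all of its ranges.
    edge("contains", outV=doc_id, inVs=range_ids)
    return elements


sample = [{"line": 0, "start": 13, "end": 18, "comment": "this is a sample class"}]
for element in emit_lsif("file:///abc/sample.java", "java", sample):
    print(json.dumps(element))
```

Writing one JSON object per line keeps the dump streamable, which matters once a project produces hundreds of thousands of elements.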

During development, we fixed several lsif-java problems, such as out-of-order location ranges, several types of highlighted words that were previously missed, and index construction for non-Maven repositories. We also fixed a problem where Spoon could not correctly parse some annotations, and the pull request (PR) has been accepted and merged by the Spoon community [3].

After the lsif.json file is generated, it is too large for the frontend to load and query directly, and it is also difficult to generate and maintain incremental data from it later. Therefore, one more step is needed: lsif.json is converted into structured data that can answer user queries on demand. The graph format of LSIF maps naturally onto graph storage, and graph queries are relatively fast.

However, because indexes change and iterate frequently and their IDs change with them, graph storage adapts poorly to incremental updates, and it is difficult to organize the index data of different repositories and languages in a single graph. Referring to relevant practices in the community, and considering cost and performance, we finally chose Elasticsearch (ES) for structured data storage because ES is naturally suitable for large-scale data storage and indexing.

After such structured data is uploaded to ES, the backend server of the syntax service constructs the ES query requests based on the syntax to query the definition, reference, or comment information, and then assembles the returned data.
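As a rough illustration of this step, the Python sketch below flattens symbol occurrences into one flat document each and builds Elasticsearch query bodies using the standard `bool`, `term`, and `range` clauses. The article does not describe the actual mapping, so every field name (`repo`, `commit`, `path`, `line`, `start_char`, `end_char`, `kind`, `symbol`) is an assumption, and `run_locally` is only a tiny in-memory stand-in so the example runs without a cluster.

```python
def flatten(repo, commit, path, occurrences):
    """One flat document per symbol occurrence (field names are hypothetical)."""
    return [{
        "repo": repo, "commit": commit, "path": path,
        "line": occ["line"], "start_char": occ["start"], "end_char": occ["end"],
        "kind": occ["kind"],            # "definition" or "reference"
        "symbol": occ["symbol"],
        "comment": occ.get("comment", ""),
    } for occ in occurrences]


def _scope(repo, commit):
    return [{"term": {"repo": repo}}, {"term": {"commit": commit}}]


def position_query(repo, commit, path, line, character):
    """Find the occurrence under the cursor (end position exclusive)."""
    return {"size": 1, "query": {"bool": {"filter": _scope(repo, commit) + [
        {"term": {"path": path}},
        {"term": {"line": line}},
        {"range": {"start_char": {"lte": character}}},
        {"range": {"end_char": {"gt": character}}},
    ]}}}


def symbol_query(repo, commit, symbol, kind):
    """Find all definitions or all references of a symbol."""
    return {"query": {"bool": {"filter": _scope(repo, commit) + [
        {"term": {"symbol": symbol}},
        {"term": {"kind": kind}},
    ]}}}


def run_locally(docs, body):
    """In-memory stand-in for ES: supports only the term/range filters above."""
    compare = {"lte": lambda a, b: a <= b, "gt": lambda a, b: a > b}
    hits = []
    for doc in docs:
        matched = True
        for clause in body["query"]["bool"]["filter"]:
            if "term" in clause:
                (field, value), = clause["term"].items()
                matched = matched and doc[field] == value
            else:
                (field, bounds), = clause["range"].items()
                matched = matched and all(
                    compare[op](doc[field], bound) for op, bound in bounds.items())
        if matched:
            hits.append(doc)
    return hits


docs = flatten("demo", "abc123", "Sample.java", [
    {"line": 1, "start": 13, "end": 19, "kind": "definition",
     "symbol": "Sample", "comment": "this is a sample class"}])
docs += flatten("demo", "abc123", "Main.java", [
    {"line": 4, "start": 8, "end": 14, "kind": "reference", "symbol": "Sample"}])

under_cursor = run_locally(docs, position_query("demo", "abc123", "Main.java", 4, 10))
definition = run_locally(docs, symbol_query("demo", "abc123", under_cursor[0]["symbol"], "definition"))
print(definition[0]["path"], definition[0]["comment"])
```

A jump to definition then becomes two filtered lookups: one to find the symbol under the cursor and one to find where that symbol is defined. Keeping the repository and version in every document is what lets one store serve many repositories and versions side by side.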

For branches, we will continuously update and retain the latest version of index data. For CR, we will build indexes for each push version of the source branch and the merge-base version of the source and target branches.

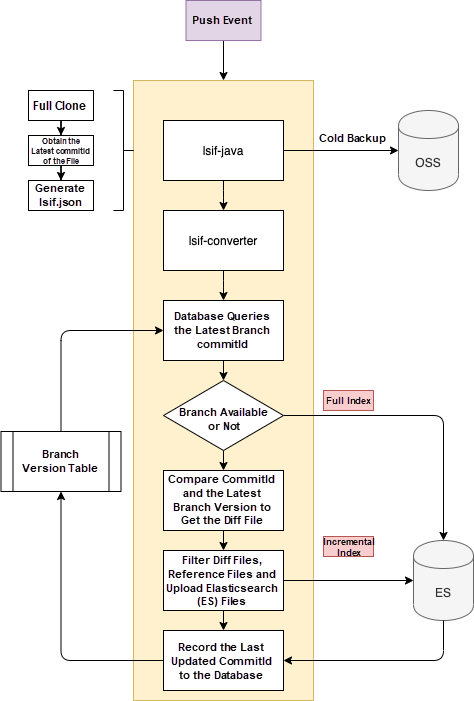

Another difficulty in indexing is incremental computation. As mentioned above, building indexes for the syntax service is very resource-intensive, yet in practice repositories inevitably receive frequent commits. This leads to two optimization points:

Each time a branch index is built successfully, we record the indexed version of the branch in the database. After a new commit is pushed to the branch and lsif.json is generated, the system diffs the two versions to obtain the changed files and their change types. It then uses the changed files to find the other files whose index entries are affected (files in which the coordinates of references or definitions change).

After all the affected files and the corresponding ES addition and deletion operations are listed, the incremental index upload is completed. This incremental process can reduce the branch building time by 45% on average.
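The planning part of this incremental process might look like the following Python sketch. It assumes the previous index can report which files each file refers to (the `references` mapping is a hypothetical structure), and it treats every file that refers to a changed file as affected, which is a simplification of the coordinate-level check described above.

```python
def affected_files(changes, references):
    """Changed files plus every file that refers to a changed file.

    changes:    {path: "added" | "modified" | "deleted"}
    references: {path: set of paths that file refers to}, taken from the
                previous index (hypothetical structure).
    """
    changed = set(changes)
    affected = set(changed)
    for path, targets in references.items():
        if targets & changed:
            affected.add(path)
    return affected


def plan_operations(changes, references):
    """List the delete/index operations that bring the index up to date."""
    operations = []
    for path in sorted(affected_files(changes, references)):
        # Files that only refer to a changed file are re-indexed like modified ones.
        change = changes.get(path, "modified")
        if change != "added":
            operations.append(("delete", path))  # drop stale entries
        if change != "deleted":
            operations.append(("index", path))   # upload fresh entries
    return operations


changes = {"A.java": "modified", "B.java": "deleted", "C.java": "added"}
references = {"D.java": {"A.java"}, "E.java": {"F.java"}, "A.java": {"F.java"}}
for operation in plan_operations(changes, references):
    print(operation)
```

In this example E.java is untouched because nothing it refers to has changed, which is where the saving comes from.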

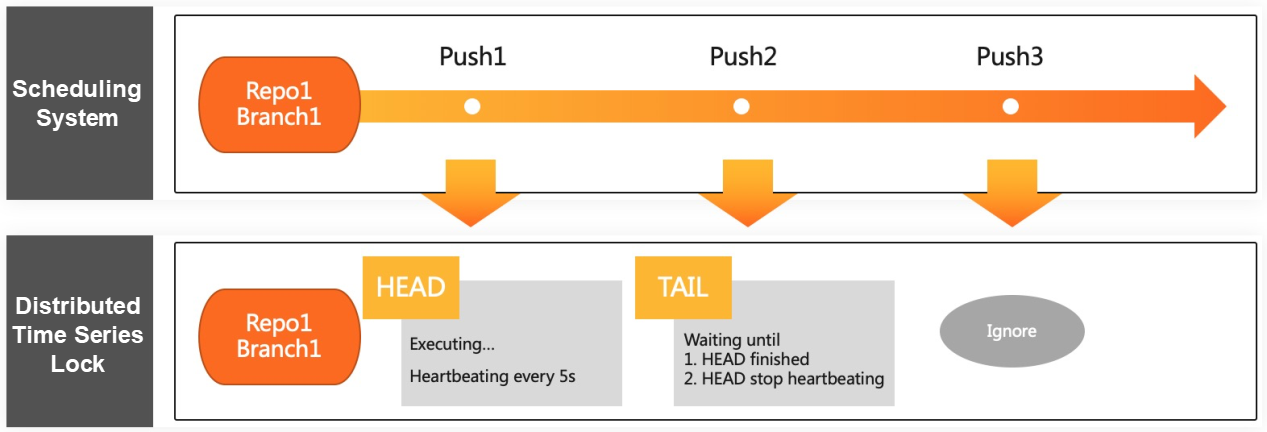

Depending on the size of the repository, LSIF indexing takes from 10 seconds to several minutes, while pushes to a single repository can peak at nearly 100 per minute. Even with incremental indexing, it is difficult to keep up with such high-frequency build requests. In addition, neither the triggering of tasks by push events nor the execution of scheduled tasks is guaranteed to happen in exact time order. A distributed, time-ordered lock is therefore required to keep tasks in sequence and minimize repeated scheduling.

When push messages for the same repository surge, a distributed lock maintained in ApsaraDB for Redis decides what to do with each one. If the repository has no running task, the task is placed at the head of the queue and run immediately. If a task is already in progress, the system checks whether the new push message is the latest; if so, the message joins the tail of the queue. If the queue already has two members, the new task is discarded.

Each time a task runs, it clones the branch and builds the index from the latest version, which avoids building the index once for every push. To handle threads that exit unexpectedly, the head of the queue sends a global heartbeat every 5 seconds. When the tail of the queue or a new task detects that the heartbeat has timed out, the head task is abandoned and the new task is executed.
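The queueing rules can be simulated with a short Python sketch. It is an in-memory stand-in rather than a Redis-backed lock, so it is neither distributed nor atomic. The `BuildQueue` class, the 15-second timeout, and the reading of "latest" as "pushed later than the running task" are assumptions made for illustration, as is dropping any waiting tail when a dead head is replaced.

```python
class BuildQueue:
    """Per repository: one running task (head) and at most one waiting task (tail)."""

    def __init__(self, heartbeat_timeout=15):
        self.heartbeat_timeout = heartbeat_timeout  # assumed value, in seconds
        # repo -> {"head": push time, "tail": push time or None, "beat": last heartbeat}
        self.slots = {}

    def submit(self, repo, pushed_at, now):
        """Decide what happens to a new push: "run", "queued", or "discarded"."""
        slot = self.slots.get(repo)
        if slot is None or now - slot["beat"] > self.heartbeat_timeout:
            # No running task, or the head stopped sending heartbeats:
            # the new task becomes the head and runs immediately.
            self.slots[repo] = {"head": pushed_at, "tail": None, "beat": now}
            return "run"
        if pushed_at <= slot["head"] or slot["tail"] is not None:
            # Stale push, or the queue already has two members.
            return "discarded"
        slot["tail"] = pushed_at
        return "queued"

    def heartbeat(self, repo, now):
        """Called periodically by the running task to show it is alive."""
        self.slots[repo]["beat"] = now

    def finish(self, repo, now):
        """Head is done: promote the tail, if any, and return its push time."""
        slot = self.slots.pop(repo)
        if slot["tail"] is None:
            return None
        self.slots[repo] = {"head": slot["tail"], "tail": None, "beat": now}
        return slot["tail"]


queue = BuildQueue()
print(queue.submit("demo", pushed_at=1, now=100))  # first push starts a build
print(queue.submit("demo", pushed_at=2, now=101))  # second push waits
print(queue.submit("demo", pushed_at=3, now=102))  # third push is dropped
```

Dropping the third push loses nothing, because the waiting task builds from the latest version of the branch when it finally runs.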

When using the syntax service, users mainly make the following three kinds of requests:

For the first request, the system constructs an ES query filtered by file path and sends the coordinates of all highlighted words in the current file to the frontend, so the frontend does not need to tokenize the code itself. Naturally, files that have not been indexed are not highlighted on the page. In addition, to avoid putting pressure on ES with extremely large files, the frontend loads the data dynamically in batches.

For the second request, when obtaining the definition and reference list, we not only need to obtain the file name and location information, but also display the corresponding code content for the user to understand. To achieve this effect, we have added an interface for obtaining multiple file fragments at one time.
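A batched fragment interface of this kind could be sketched in Python as follows. The `fetch_fragments` function, its parameters, and the in-memory `files` mapping (standing in for repository storage) are hypothetical; line numbers are zero-based.

```python
def fetch_fragments(files, requests, context=1):
    """Return a code snippet around each requested (path, line) pair.

    files:    {path: source text}, a stand-in for repository storage
    requests: list of (path, line) pairs with zero-based line numbers
    context:  number of extra lines to include before and after the target
    """
    results = []
    for path, line in requests:
        lines = files[path].splitlines()
        first = max(0, line - context)
        last = min(len(lines), line + context + 1)
        results.append({
            "path": path,
            "line": line,
            "first_line": first,          # line number of snippet[0]
            "snippet": lines[first:last],
        })
    return results


files = {
    "Sample.java": "// this is a sample class\npublic class Sample {\n}\n",
    "Main.java": "public class Main {\n    Sample sample = new Sample();\n}\n",
}
for fragment in fetch_fragments(files, [("Sample.java", 1), ("Main.java", 1)]):
    print(fragment["path"], fragment["snippet"])
```

Resolving all locations in a single call avoids one round trip per entry when a symbol has many references.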

For the third request, a jump within the same file scrolls to and highlights the target line, while a jump to a different file opens a new page and then jumps to the target.

Syntax service responses and syntax indexing are completely asynchronous and do not affect each other. They support independent resource scaling.

The size of an index is approximately several times the size of a code file, which consumes storage resources. Therefore, according to users' general usage habits and scenarios, a series of index cleaning tasks are developed to avoid excessive consumption of resources.

When code reviews are merged or deleted, and branches are deleted, the system will start the index cleaning task and release the index resources.
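One way to picture the cleanup trigger is a small dispatcher that maps platform events to a delete-by-query request body. The event names and the fields `repo`, `review_id`, and `branch` are assumptions, since the article does not describe the event schema or the index mapping.

```python
def cleanup_request(event):
    """Return a delete-by-query body for events that retire an index, else None.

    Event types and field names are hypothetical.
    """
    kind = event["type"]
    if kind in ("review_merged", "review_deleted"):
        scope = {"term": {"review_id": event["review_id"]}}
    elif kind == "branch_deleted":
        scope = {"term": {"branch": event["branch"]}}
    else:
        return None  # pushes, review updates, and so on keep their indexes
    return {"query": {"bool": {"filter": [{"term": {"repo": event["repo"]}}, scope]}}}


print(cleanup_request({"type": "review_merged", "repo": "demo", "review_id": 42}))
print(cleanup_request({"type": "push", "repo": "demo", "branch": "main"}))
```

Scoping the deletion by repository and by review or branch removes only the index data that can no longer be viewed.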

The lack of symbol jumps has long been one of the pain points of reading code on web pages. Various syntax protocols and technologies are emerging, such as LSIF, Kythe, Static Analysis Results Interchange Format (SARIF), Universal Abstract Syntax Tree (UAST), Tree-sitter, and ctags (which generates tag files for source code). Engineers around the world are striving for smarter code analysis, a better coding experience, and higher code quality. In the future, the Apsara DevOps Syntax Intelligence Service will gradually speed up index building, support more programming languages, cover more syntax scenarios, and improve the code browsing experience.
