By Wang Weijun (nicknamed Hongli), a senior development engineer at Alibaba's Search Recommendation Department. In addition, Chen Huaxi (nicknamed Kunlun) and Li Guoding (nicknamed Shiji) provided input for this article.

In Alibaba's search engineering system, services that respond to user requests at the millisecond level, such as search engines and online algorithm analysis, are referred to as "online" services. On the other hand, systems that convert and process data from various sources and send them to "online" services, such as search engines, are collectively referred to as "offline" systems.

The offline search platform is the data provider for search engines. It is the only bridge between the group's businesses and search services and a critical part of the entire search link. The quality and efficiency of offline data output directly affect the user experience of downstream services.

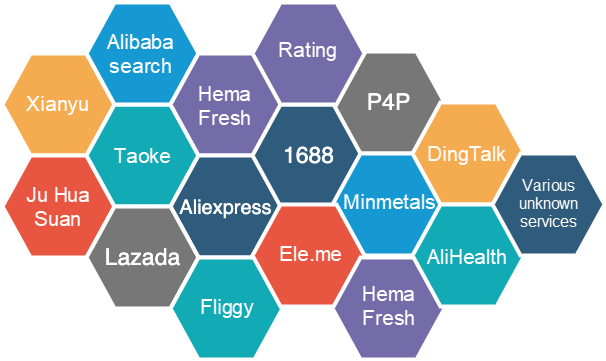

With years of accumulated experience, the offline search platform not only hosts a large number of search services within the group but also serves many external customers in the cloud. The platform offers rich functionality, and Blink (Alibaba's version of Flink) gives users leading performance. We started planning to migrate Alibaba Search (Taobao Tmall search) to the offline search platform in early 2019.

Before the migration to the offline search platform, the architecture of Alibaba Search had many disadvantages, such as an aged architecture, an outdated Blink version, difficult O&M, and inconsistent computing frameworks. With the turnover of Alibaba Search personnel and increasing O&M difficulty, reconstruction was imminent. Alibaba Search is a logically complex application with a data scale of hundreds of millions, so migrating it to the offline search platform was bound to face performance challenges. Business characteristics and performance requirements made every step in the reconstruction process very difficult. To achieve the expected performance, almost every Blink Job was optimized separately. The initial ideal and the final result are both wonderful, but the process was extremely difficult.

This article describes how Alibaba Search was migrated to the offline search platform. Completing this migration was a milestone for the platform: it showed that the offline search platform can host ultra-large businesses.

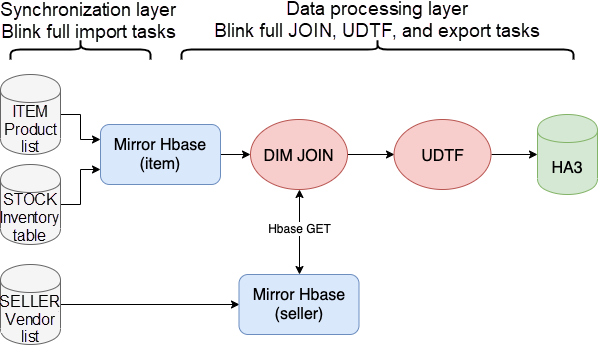

The offline search platform mainly consists of the synchronization layer and the data processing layer, which include the full search process and the incremental search process. To help you better understand, we will briefly introduce some concepts about the offline search platform.

Currently, the offline search platform supports hundreds of businesses in the group, including Alibaba Search and AE. Among them, Taobao's Tmall rating business has the largest data volume, which is X tens of billions of data entries, with each entry having about X fields.

The platform reads the tables in the users' data sources (MySQL or MaxCompute), runs the data through a series of offline processing steps, and finally imports the result to the Ha3 online search engine or ES.

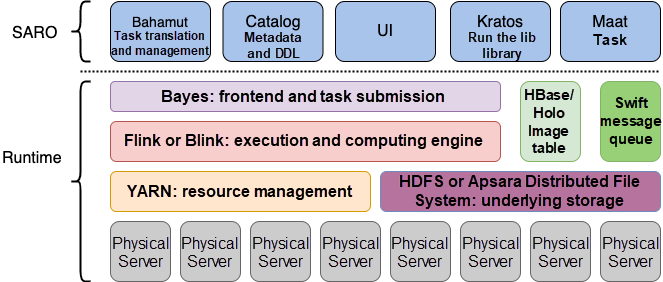

The following figure shows that the data storage of the offline search platform is based on HDFS and Apsara Distributed File System. Resource scheduling relies on YARN or Hippo, and all computation runs on Flink or Blink.

Full import reprocesses and regenerates all search business data and transmits the resulting data to the online engine. Normally, this takes place once a day.

There are two reasons for this action. First, the business data is updated on a daily basis. Second, the engine needs full data for efficient index collation and pre-processing to improve online service efficiency. The running of full import is divided into the synchronization layer and the data processing layer.

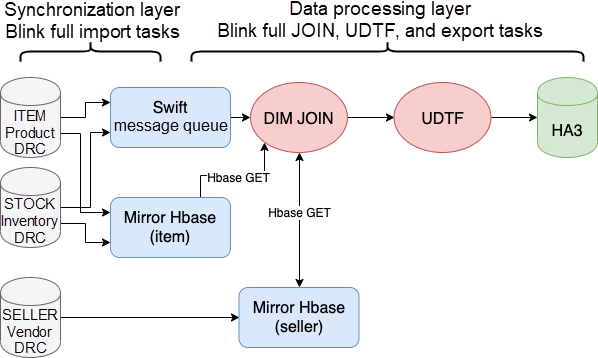

Incremental import pushes data changes from upstream data sources to the online engine in real time.

In our scenario, incremental data does not require Exactly Once semantics; At Least Once semantics is sufficient. Given this, we use the full-link asynchronous processing method to solve the one-to-many problem, which we will explain in detail below.

Similar to full import, the running of incremental import is divided into the synchronization layer and the data processing layer.

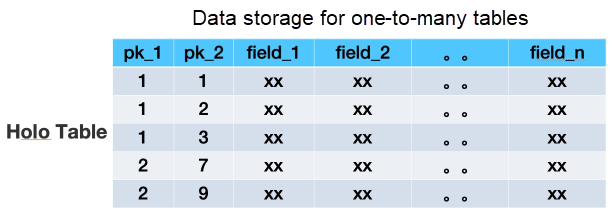

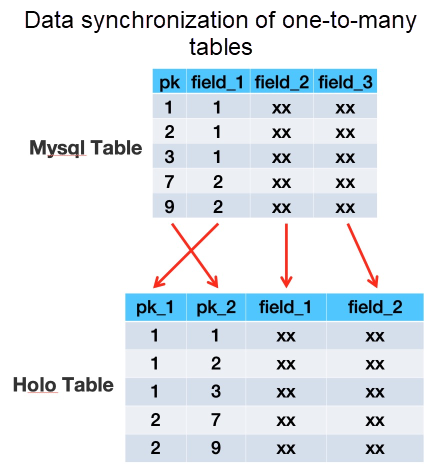

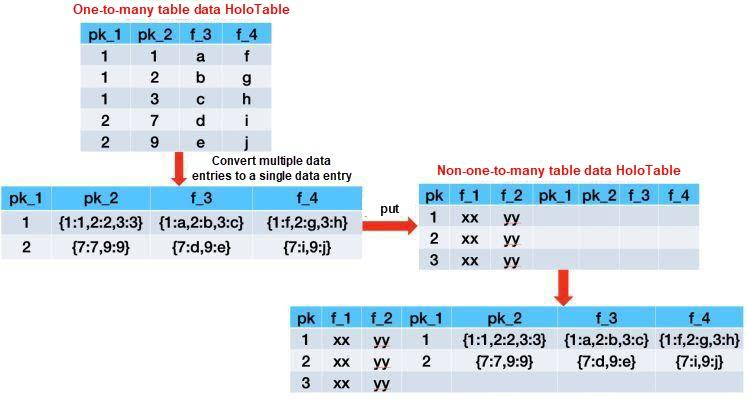

Some business data in the search field must be described in the one-to-many manner. For example, the relationship between an item and the SKU is a typical one-to-many example. In the offline search architecture based on Hologres (Alibaba's proprietary distributed database) storage, one-to-many data is stored in a separate HoloTable with dual PKs. In this case, the first and second primary keys are the item ID and SKU_ID, respectively.
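The dual-PK layout can be pictured with a small in-memory sketch. This is only an illustration and not the Hologres API: the class `DualKeyTable` and its methods are hypothetical names. It keeps rows sorted by `(item_id, sku_id)` so that all SKUs of one item can be read with a prefix scan on the first primary key.

```python
import bisect


class DualKeyTable:
    """Toy stand-in (hypothetical) for a one-to-many table keyed by (item_id, sku_id)."""

    def __init__(self):
        self._keys = []  # sorted list of (item_id, sku_id) tuples
        self._rows = {}  # (item_id, sku_id) -> row dict

    def put(self, item_id, sku_id, row):
        key = (item_id, sku_id)
        if key not in self._rows:
            bisect.insort(self._keys, key)
        self._rows[key] = row

    def get(self, item_id, sku_id):
        """Point lookup that needs both primary keys."""
        return self._rows.get((item_id, sku_id))

    def scan_prefix(self, item_id):
        """Return every row whose first PK equals item_id, in sku_id order."""
        # (item_id,) sorts before any (item_id, sku_id), so this finds the first match.
        start = bisect.bisect_left(self._keys, (item_id,))
        rows = []
        for key in self._keys[start:]:
            if key[0] != item_id:
                break
            rows.append(self._rows[key])
        return rows
```

A point `get` needs both keys, while reading all SKUs of an item needs a range scan. That difference matters later in the article, where the scan turns out to be the synchronous bottleneck.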

After understanding these concepts, let's see how the offline search platform was optimized for Alibaba Search Blink Jobs in subsequent sections. But, first, let's briefly summarize the features and performance requirements of the Alibaba Search service.

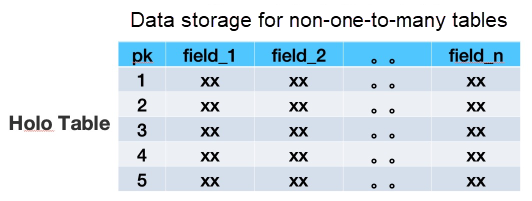

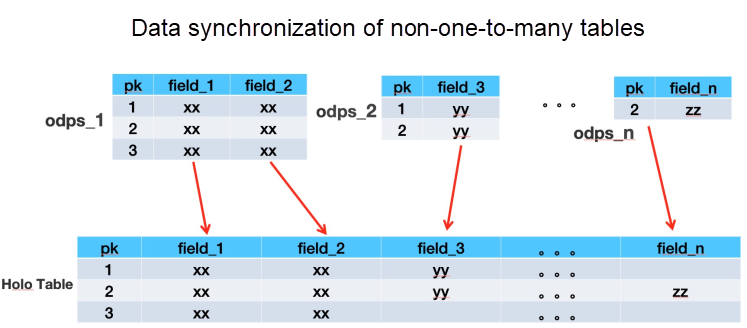

When HBase was used for the offline search platform, a wide table with multiple column families was used to store all single-dimension business data. After thorough research, we decided to replace HBase with Hologres, and therefore, we needed to completely reconstruct the storage architecture and use multiple tables to simulate the multiple column families in HBase. A single HoloTable contains data from many business data source tables. The reconstructed data storage method is as follows:

The synchronization layer synchronizes data from upstream data sources to image tables for efficient processing at the data processing layer. The business side's single-dimension data is composed of X MySQL tables or MaxCompute tables (the actual number varies by business). If these tables were joined together to aggregate the data of one dimension into a Holo table, a large amount of shuffle would be generated. For this reason, we adopt the asynchronous upsert method, which writes data from different data source tables to different columns in the Holo table to solve the problem of massive data import.
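The column-wise upsert idea can be sketched in a few lines. This is a minimal illustration that uses a plain dictionary in place of a Holo table; the function name `upsert` and the `source.column` naming scheme are assumptions made for the example, not the platform's actual interface.

```python
def upsert(wide_table, pk, source_name, fields):
    """Merge one source table's fields into the wide row for pk.

    Columns are prefixed with the (hypothetical) source name, so writers for
    different source tables never touch each other's columns and no join
    between the source tables is needed.
    """
    row = wide_table.setdefault(pk, {})
    for column, value in fields.items():
        row[f"{source_name}.{column}"] = value
    return row


# Records from different source tables may arrive in any order.
image_table = {}
upsert(image_table, 1001, "item_base", {"title": "red shoes"})
upsert(image_table, 1001, "item_price", {"price": 59})
upsert(image_table, 1001, "item_base", {"title": "red shoes v2"})
```

Because each writer only fills its own columns of the row identified by the primary key, the rows converge to the joined result without any shuffle between sources.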

The data processing layer computes the data in each image table (HBase or Holo table) obtained at the synchronization layer. This layer provides multi-table Join and UDTF to facilitate the development and access of search businesses.

The following sections describe the features and performance requirements of the Alibaba Search service and explain how optimization was implemented to meet the performance requirements.

Full import is performed once a day, and X billion products are processed each time under limited resources. In this context, achieving high throughput for such a large amount of data is very challenging.

When the TPS is X W (W denotes 10,000), incremental data must keep a low latency of several seconds. During the Double Eleven Shopping Festival, the TPS of some tables (such as XX tables) reaches X W. In this case, how can we ensure a constant and low latency for incremental data?

The following section describes how we solved these problems to meet the performance requirements.

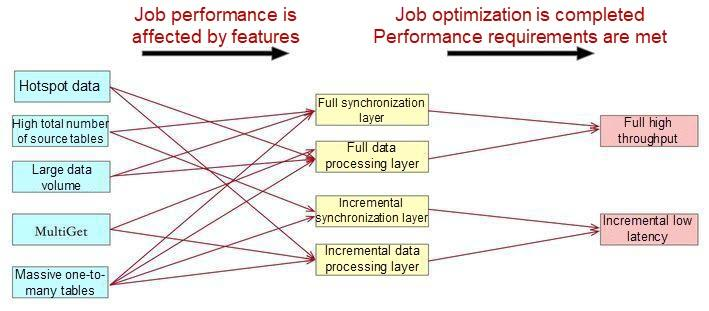

The following figure shows the features and performance requirements of the Alibaba Search service. The columns on the left and in the middle indicate which features of Alibaba Search result in the poor performance of tasks at a certain stage. To address this, we need to optimize the Blink Job in the corresponding phase. Implementing these optimizations allows the platform to meet the full import performance requirement for high throughput and the incremental import performance requirement for low latency, which are shown in the rightmost column in the figure.

The following segments describe how to solve the full import, incremental import, and one-to-many problems to meet the performance requirements of high throughput for full import and low latency for incremental import.

The running of full import mainly involves the synchronization layer and the data processing layer. High throughput must be achieved so that full data migration is done within X hours. The synchronization layer needs to synchronize hundreds of millions of full data entries from about X tables in a short period of time, without affecting the timeliness of incremental import that is taking place at the same time. This is an enormous challenge.

On the other hand, the data processing layer needs to process more than X hundred million pieces of data in a short period of time, join many image tables, perform UDTF processing, MultiGet, and other operations, and ultimately generate a full HDFS file.

The following describes the performance optimization process for the data processing layer. Optimizing the relevant Job took a long time, and many solutions were tried. The following sections discuss the optimization in chronological order.

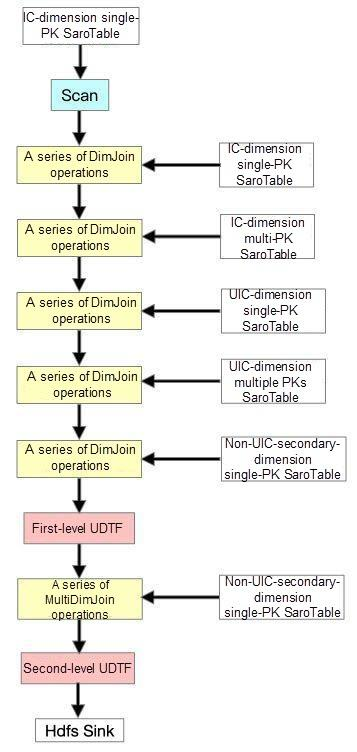

First, note that the "IC" dimension is the product dimension and the "UIC" dimension is the seller dimension. In the beginning, our solution did not include "FullDynamicNestedAggregation" or "IncDynamicNestedAggregation" (both Jobs are described in detail later). After scanning the IC-dimension single-PK table, a series of DimJoin, UDTF, and MultiJoin operations were performed.

During testing, the performance of the multi-PK table (one-to-many table) in DimJoin was very poor, and the full-link Async process degraded to Sync. This happened because one-to-many data is stored in a separate SaroTable (the logical abstraction of multiple HoloTables), and retrieving the data for a specified first PK required a Partial Scan, which was fully synchronous. In addition, a Scanner was created for each Get operation. To mitigate this, we added a Cache to DimJoin, as well as a precise Cache on SubKey for the MultiGet operation that is unique to Alibaba Search. However, testing showed that the performance requirements were still not fully met, so further optimization was required.

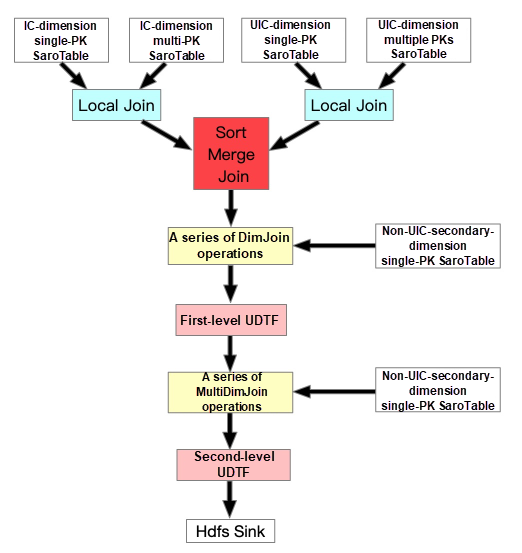

Since the performance bottleneck lay in the DimJoin against the multi-PK SaroTable, we set out to remove this part. As the one-to-many SaroTable has only two dimensions, we performed LocalJoin between all tables of the IC and UIC dimensions (including single-PK and multi-PK), and then performed SortMergeJoin. Then, we proceeded with the other processes.

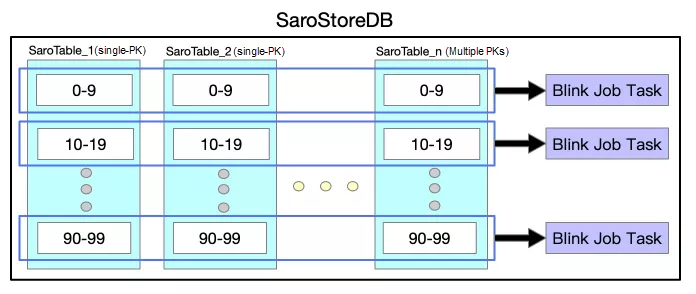

First, let's take a look at Local Join. HoloStore ensures that all tables in the same database follow the same Partition policy and are sorted in the lexicographic order of primary keys. Therefore, we can pull the data of the same dimension and Partition into one process and perform the Join there. In this way, Shuffle is avoided, as shown in the following figure.

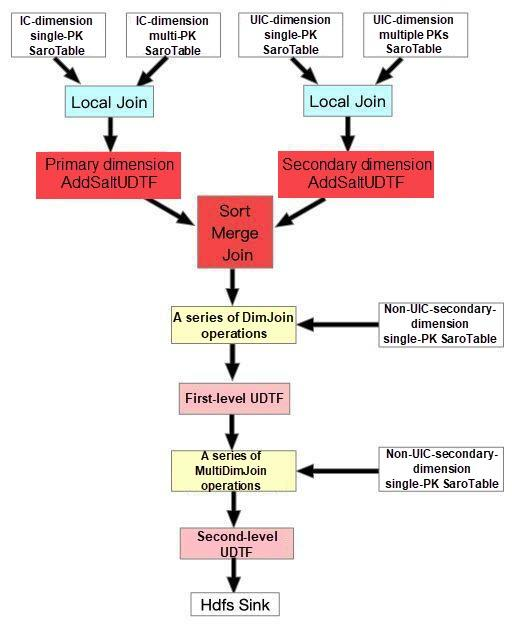
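The reason Local Join needs no shuffle can be illustrated with a merge join over two key-sorted inputs from the same partition. The sketch below is a simplified model and not the Blink operator: `local_merge_join` is a hypothetical name, the left side stands for a single-PK table, and the right side stands for a one-to-many table that may repeat a key.

```python
def local_merge_join(left, right):
    """Join two lists of (pk, row) pairs that are both sorted by pk.

    Both inputs are assumed to belong to the same partition, so the join
    is a single forward pass and no data moves between processes.
    """
    joined = []
    j = 0
    for pk, left_row in left:
        # Skip right-side rows whose key is smaller than the current left key.
        while j < len(right) and right[j][0] < pk:
            j += 1
        # Collect every right-side row that shares the current key.
        matches = []
        while j < len(right) and right[j][0] == pk:
            matches.append(right[j][1])
            j += 1
        joined.append((pk, left_row, matches))
    return joined
```

Each input is read once from start to end, which is only possible because both tables share the partition policy and the sort order.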

Then, the topology changes as shown in the following figure:

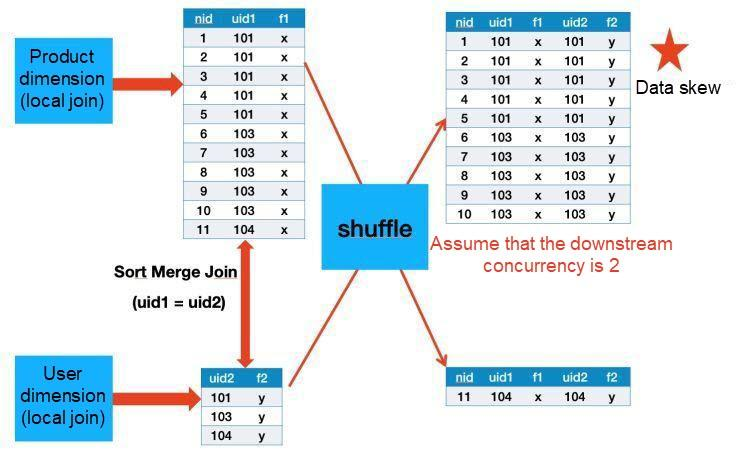

Testing showed that a large seller (a seller with many products) can cause a serious long tail after SortMergeJoin. As shown in the following figure, the data with UIDs of 101 and 103 falls into the same concurrent operation. To address this, we added a PartitionBy nid on top of this, but found that it was useless, because the Sort stage and External Shuffle operations in SortMergeJoin require performing Disk File Merge multiple times for tasks with a large amount of data. As a result, long-tail tasks still took a long time to complete.

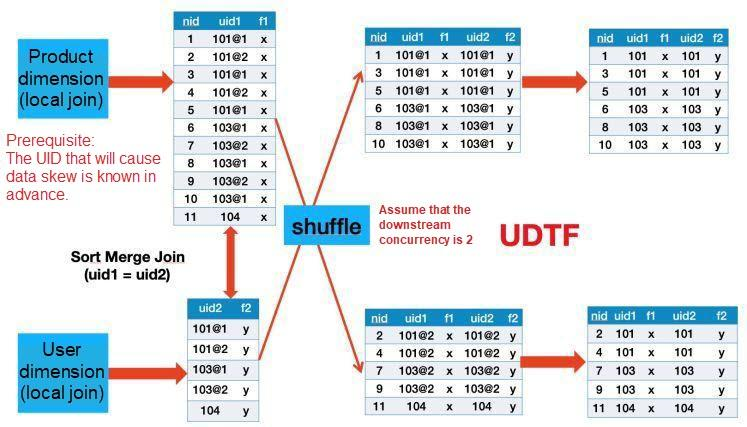

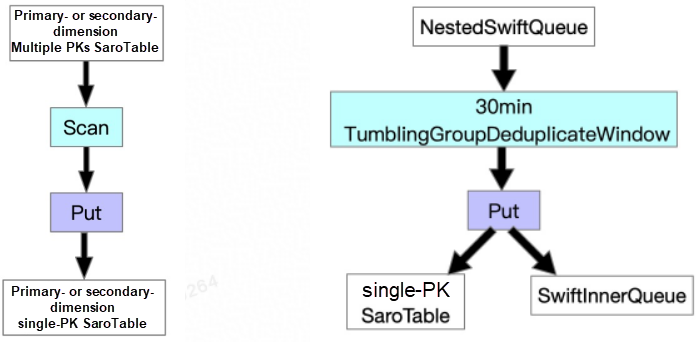

Due to the preceding problem, we needed to perform further optimization. After discussing with team members, we decided to salt and scatter big sellers. To do this, we filtered the IDs of the top X big sellers from the MaxCompute source table. Then, after the primary- and secondary-dimension Scan and Local Join operations, the data is passed through a UDF and a UDTF. The following figures show the specific flowchart and working principle of the process:

As shown in the preceding figure, data with UIDs 101 and 103 is scattered to multiple concurrent operations. Since we added a UDTF after SortMergeJoin to remove the added salt, the final data will not be affected.
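The salting idea can be sketched as follows. This is a generic illustration of key salting for a skewed join and not the actual UDF and UDTF used in the Job: the function names, the `#` separator, and deriving the salt from the item ID are all assumptions. Item rows of a big seller get a salted join key, the seller row is replicated once per salt so that every salted key still finds its match, and the salt is stripped after the join.

```python
def salt_key(seller_id, item_id, big_sellers, buckets):
    """Join key for an item row: salted for big sellers, plain otherwise."""
    if seller_id in big_sellers:
        return f"{seller_id}#{item_id % buckets}"
    return str(seller_id)


def replicate_seller_keys(seller_id, big_sellers, buckets):
    """Join keys under which the seller row must be emitted.

    A big seller is copied once per salt value; other sellers are emitted once.
    """
    if seller_id in big_sellers:
        return [f"{seller_id}#{salt}" for salt in range(buckets)]
    return [str(seller_id)]


def unsalt_key(key):
    """Recover the original seller ID after the join."""
    return int(key.split("#", 1)[0])
```

With `buckets = 4`, the items of seller 101 are spread over four join keys instead of one, at the cost of emitting the seller row four times.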

After making the preceding optimizations, the full data FullJoin operation was completed, and its performance was just above the standard. Therefore, we began to adjust the incremental import process (IncJoin). At this time, we found that the initial form of IncJoin had the same problem as that of FullJoin. Because it was too slow to keep up with incremental data, after discussing with team members, we decided to add a FullDynamicNestedAggregation Job at the synchronization layer (as explained in detail below).

This job is a Blink Batch Job that writes the one-to-many SaroTable data of each dimension into the main table of the corresponding dimension, so that all the data is read together in the scan at the beginning of FullJoin. This avoids a DimJoin against a SaroTable with multiple PKs. Eventually, the high-throughput requirement of full import was met. The final form of full import FullJoin is as follows:

The incremental performance was mainly subject to IncJoin at the data processing layer. This job was originally a Blink Stream Job. It reads incremental messages from SwiftQueue, associates them with the data in each image table to complete the fields, performs UDTF processing on the data, and lastly sends the incremental messages to the online engine SwiftQueue.

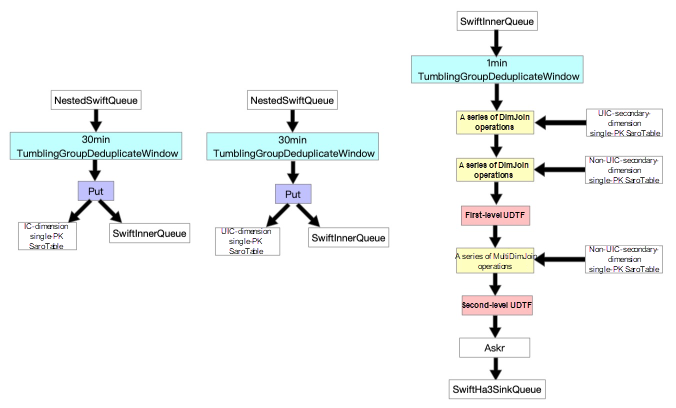

Based on the idea of "stream-batch integration," after a series of attempts, the final form of the incremental data job at the processing layer is as follows:

Different from full data, incremental data is updated in real time, and therefore new data entries must be written not only to SwiftQueue, but also to the SaroTable. In addition, based on business characteristics, we added a window to each Job to deduplicate data entries by PK.

Alibaba Search involves many one-to-many tables. It took a long time for us to find how to efficiently get data from the data processing layer and convert it to the primary dimension for field completion.

To improve efficiency, we had to find a way to improve CPU utilization. To do this, we get data entries in full-link asynchronous mode. However, since one-to-many data is stored in a HoloTable with multiple PKs, fetching the data for a specified first primary key is implemented as a Scan on the Holo server.

Asynchronous programming is contagious: a single synchronous call blocks the whole chain. In this case, the synchronous Scan degrades full-link asynchronization to synchronization, whose performance is far less satisfactory.

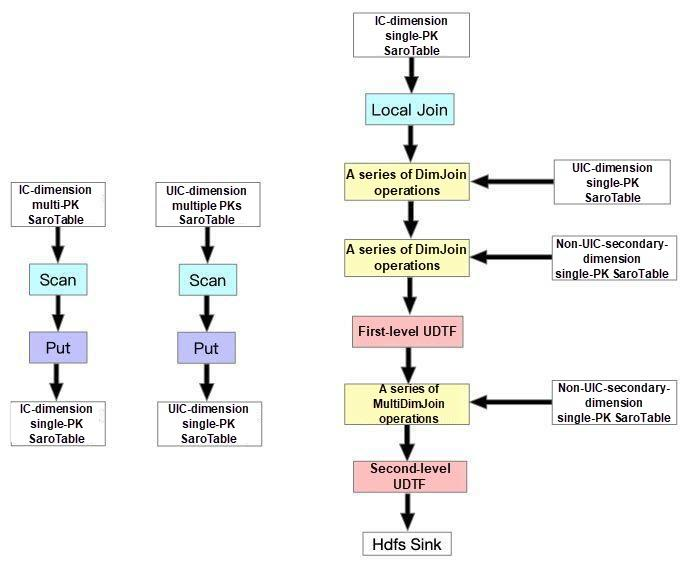

After many discussions and practices, to transform the "pseudo-asynchronization" into real full-link asynchronization, we decided to Scan multiple data entries with the same primary key in one-to-many tables and generate a single data entry by performing GroupBy, convert each field to a JSON string, and then Put the converted fields into the main table. The primary steps of this process are shown in the following figure.

To do this, we added two Jobs to the synchronization layer for full and incremental data, which are FullDynamicNestedAggregation (Blink Batch Job) and IncDynamicNestedAggregation (Blink Stream Job). The general process of both the jobs is shown in the following figure.

As mentioned in the section about incremental data above, incremental data in our scenario does not require Exactly Once semantics; At Least Once semantics is sufficient. Therefore, we can split the incremental Job at the data processing layer into two smaller jobs for execution, solving the one-to-many problem.

In this way, we do not need to scan HoloTable at the data processing layer, and we can improve the overall incremental performance by using the full-link asynchronization method.
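The core transformation of the nested aggregation Jobs (GroupBy on the first primary key, then one JSON string per field) can be sketched as below. This is a plain-Python model of the idea and not the Blink Job itself; `nested_aggregate` and the field names in the usage example are hypothetical.

```python
import json
from collections import defaultdict


def nested_aggregate(rows, first_pk, second_pk):
    """Fold one-to-many rows into one entry per first PK.

    Returns {first_pk_value: {field: JSON array string}}, which can then be
    written into the main table as ordinary columns.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row[first_pk]].append(row)

    aggregated = {}
    for pk, group in groups.items():
        # Sort by the second PK so that the arrays of all fields line up.
        group.sort(key=lambda r: r[second_pk])
        fields = [name for name in group[0] if name != first_pk]
        aggregated[pk] = {
            name: json.dumps([r[name] for r in group]) for name in fields
        }
    return aggregated


sku_rows = [
    {"item_id": 1, "sku_id": "b", "price": 20},
    {"item_id": 1, "sku_id": "a", "price": 10},
    {"item_id": 2, "sku_id": "c", "price": 5},
]
main_table_columns = nested_aggregate(sku_rows, "item_id", "sku_id")
```

Once the SKU data lives in the main table as JSON columns, the data processing layer can fetch it with an ordinary asynchronous Get on the item ID instead of a Scan.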

Truncation Optimization: Converting multiple data entries into a single entry can produce a "large row" that holds excessive data and triggers FullGC. To avoid this, based on the characteristics of the business, we provide a truncation function for each Scan of one-to-many tables. For the same first PK, only a certain number of data entries are scanned and assembled into a JSON string. In addition, you can configure whitelists for different tables.
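A minimal sketch of the truncation rule is shown below. The article does not spell out how the whitelist is applied, so this example assumes that a whitelisted table is exempt from truncation; the function name, the default limit, and the table names are hypothetical.

```python
DEFAULT_LIMIT = 3  # hypothetical cap on entries kept per first PK


def truncate_rows(table, rows, limit=DEFAULT_LIMIT, whitelist=()):
    """Keep at most `limit` rows for one first PK.

    Assumption: tables listed in `whitelist` are never truncated.
    """
    if table in whitelist:
        return list(rows)
    return list(rows[:limit])
```

For example, `truncate_rows("sku", rows)` keeps only the first three entries scanned for a first PK, while `truncate_rows("sku", rows, whitelist=("sku",))` keeps all of them.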

Filter Window Optimization: Many one-to-many tables can tolerate some latency, but the number of new data entries must be restricted to avoid affecting the offline system and the online BuildService. Therefore, we added a 30-minute deduplication window, which is very effective and has an average deduplication rate of more than X%.
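The effect of the deduplication window can be modeled with a tumbling window that keeps only the latest update per primary key. This is a simplified batch simulation and not the Blink window operator: the function name and the tuple layout are assumptions, and the window type used in production is not stated in the article.

```python
def dedup_window(events, window_seconds=30 * 60):
    """Keep the latest payload per PK within each tumbling window.

    `events` is a list of (timestamp_seconds, pk, payload) sorted by timestamp.
    Returns a list of (window_start, pk, payload).
    """
    results = []
    current_window = None
    latest = {}
    for ts, pk, payload in events:
        window = ts - ts % window_seconds
        if current_window is not None and window != current_window:
            # The previous window is complete: emit one entry per PK.
            results.extend((current_window, k, v) for k, v in latest.items())
            latest = {}
        current_window = window
        latest[pk] = payload  # later updates overwrite earlier ones
    if latest:
        results.extend((current_window, k, v) for k, v in latest.items())
    return results
```

Repeated updates to the same PK inside one window collapse into a single downstream message, which is where the reduction in new data entries comes from. The price is that an update can be delayed by up to one window length.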

After a series of optimizations, the new Alibaba Search architecture not only saves a lot more resources than the previous architecture, but also achieves high throughput for full import and low latency for incremental import. Moreover, it easily handled sudden traffic surges during the Double Eleven Shopping Festival in 2019.

Performance optimization is extremely complex and fine-grained and is also very challenging. To achieve it, you must be familiar with the selected technical tools (Flink or Blink) and your business. Window addition, truncation optimization, the salt addition and scattering of large sellers, and other optimizations can be used only because the business scenario can tolerate the shortcomings of these measures.

In addition to the optimization experience mentioned in this article, we have also made many optimizations to the full and incremental data Jobs and MultiGet at the synchronization layer, which are not detailed here due to space constraints.

The successful migration of Alibaba Search enables the offline search platform to complete the last piece of the puzzle to become a fundamental module of Alibaba Group's Search mid-end and core links.
