By Yeti

In 2018, Microsoft published the paper "FASTER: A Concurrent Key-Value Store with In-Place Updates" at SIGMOD. The paper introduces a highly concurrent key-value (KV) store that achieves a throughput of up to 160 million operations per second (OPS) on a single server, which exceeds that of other pure in-memory data structures. FASTER also supports data sets larger than memory and is implemented in a novel way. Although FASTER has engineering restrictions that make it difficult to put into production, its ideas are instructive for optimizing KV engines and are worth studying.

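FASTER consists of the following three parts:

1) An Epoch framework for lazy synchronization between threads.

2) A concurrent, latch-free hash index.

3) HybridLog, a log-structured allocator that spans memory and disk.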

However, FASTER has some limitations. It supports only point lookups, not range queries, and it is designed for update-intensive scenarios. Because write-ahead logging (WAL) slows updates, FASTER does not write a WAL. As a result, some recent data may be lost after recovery.

FASTER supports three types of operations: read, blind update, and read-modify-write (RMW). An RMW atomically updates a value based on its current contents, which allows partial updates of an entry, for example, updating only one field of the value. FASTER is a point-operation system that achieves a throughput of more than a hundred million operations per second in memory. Even when the amount of data exceeds the memory capacity, FASTER still performs well, thanks to innovations in its design and implementation.

First, to support a scalable threading model, FASTER extends the standard epoch-based synchronization mechanism with trigger actions, which allow global changes to be synchronized lazily across all threads. This Epoch framework simplifies FASTER's concurrency design.

Second, FASTER uses a concurrent, latch-free, and cache-friendly hash index. Combined with a pure in-memory allocator, the index forms an in-memory KV store whose performance and scalability exceed those of other popular pure in-memory structures.

Finally, FASTER introduces HybridLog. Log structuring is a well-known way to store larger-than-memory data and to support failure recovery in the manner of a WAL: every update is appended to the log, following the read-copy-update policy. However, this mode limits throughput and scalability, and in-place updates are the key to high performance. Therefore, FASTER proposes HybridLog, which combines in-place updates in memory with an append-only log. With HybridLog, hot data is updated in place, while cold data follows the read-copy-update policy: it is first copied to the hot data area and is updated in place from then on.

By following the principle of being fast in most cases, FASTER has been refined in the following aspects:

1) FASTER implements concurrent latch-free hash indexing to provide fast point look-up for entries.

2) FASTER selects the appropriate time to perform time-consuming operations, such as hash index scaling, checkpoint, and eviction.

3) FASTER is able to perform in-place updates most of the time.

FASTER performs much better in memory-intensive scenarios than other pure in-memory systems. It also performs well when the data volume exceeds the memory capacity and when the hot data set changes.

Epoch-based protection is not new. Silo, Masstree, and Bw-Tree already use it for resource recycling. FASTER generalizes Epoch into a framework that it uses for memory-safe garbage collection, hash index resizing, circular buffer maintenance in the log-structured allocator, page flushing, page boundary maintenance, and checkpointing.

The epoch system maintains a shared atomic counter E, called the current epoch, which any thread can increment. Each thread T keeps a local copy of E, denoted Et. Threads refresh their local epoch values regularly, and the local epoch of every thread is stored in a shared epoch table. If the local epochs of all threads are greater than an epoch c, then c is considered secure (safe). FASTER maintains an additional global variable Es that records the largest secure epoch. For any thread T, Es < Et ≤ E always holds.

Trigger actions let the framework execute an arbitrary global action once an epoch becomes secure. When a thread increments the current epoch from c to c+1, it can attach an action, which is triggered when epoch c becomes secure.

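The following four operations are supported for a thread T:

1) Acquire: reserves an entry for T in the epoch table and sets Et to E.

2) Refresh: updates Et to E, updates Es to the current largest secure epoch, and runs any trigger actions that have become ready.

3) BumpEpoch(action): increments E from c to c+1 and registers the action to run once epoch c is secure.

4) Release: removes the entry for T from the epoch table.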

With trigger actions, the Epoch framework simplifies lazy synchronization in parallel systems. A typical example is calling a function active-now once a shared variable status has become active for every thread. A thread sets status to active and registers active-now as the trigger action when it bumps the epoch. Other threads do not necessarily see the status change immediately, but each is guaranteed to see it the next time it refreshes its epoch. Therefore, active-now is called only after all threads have seen the status change.
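The active-now example can be modeled with a minimal, single-process sketch. It is only an illustration of the bookkeeping, not FASTER's implementation: the class name `EpochManager`, the method names, and the `drain_list` field are hypothetical, real threads and atomics are left out, and draining on release is a choice made for this sketch.

```python
class EpochManager:
    """Single-process model of an epoch table with trigger actions."""

    def __init__(self):
        self.current = 1      # E: the global current epoch
        self.safe = 0         # Es: the largest secure epoch
        self.table = {}       # epoch table: thread id -> Et
        self.drain_list = []  # pending (epoch, action) pairs

    def acquire(self, tid):
        # Reserve a table entry for the thread and set Et to E.
        self.table[tid] = self.current

    def release(self, tid):
        # Remove the thread's entry. Draining here is an assumption of this
        # sketch so that actions are not stranded when the last thread leaves.
        del self.table[tid]
        self._drain()

    def refresh(self, tid):
        # Set Et to E, recompute Es, and run actions that became ready.
        self.table[tid] = self.current
        self._drain()

    def bump_epoch(self, action):
        # Move E from c to c+1 and run `action` once epoch c is secure.
        self.drain_list.append((self.current, action))
        self.current += 1

    def _drain(self):
        # An epoch c is secure when every registered thread has Et > c.
        oldest = min(self.table.values(), default=self.current)
        self.safe = oldest - 1
        ready = [a for e, a in self.drain_list if e <= self.safe]
        self.drain_list = [(e, a) for e, a in self.drain_list if e > self.safe]
        for action in ready:
            action()


calls = []
status = {"value": "inactive"}
epochs = EpochManager()
epochs.acquire("T1")
epochs.acquire("T2")

status["value"] = "active"                       # change the shared variable
epochs.bump_epoch(lambda: calls.append("active-now"))
epochs.refresh("T1")                             # T2 has not refreshed yet
pending_after_t1 = list(calls)                   # action has not run
epochs.refresh("T2")                             # now epoch 1 is secure
```

After T1 refreshes, the action is still pending because T2 may not have seen the change. It runs only once T2 refreshes as well.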

FASTER's high-performance hash index is concurrent, latch-free, scalable, and resizable. Unlike a normal hash table, it does not store keys or values. Instead, each index entry stores the physical or logical address of a set of entries. The physical address is used in pure in-memory storage, and the logical address is used in hybrid storage.

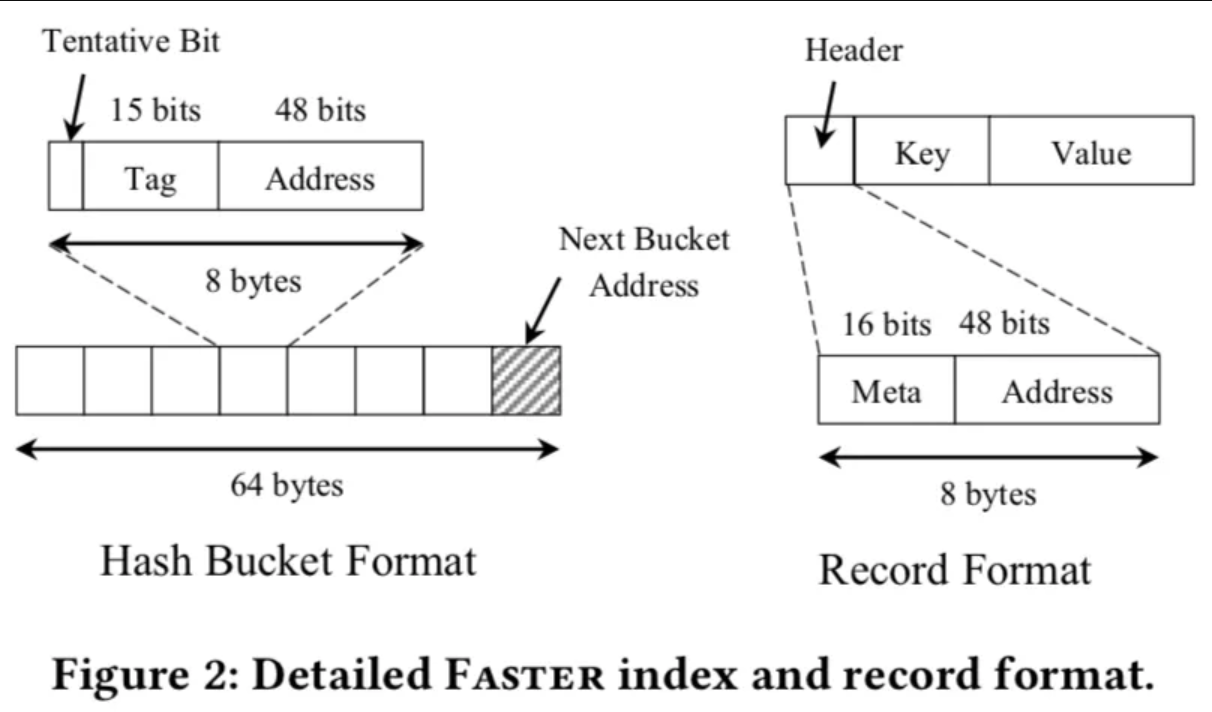

In the above design, assume a 64-bit system with 64-byte cache lines. The index has 2^k hash buckets, and each bucket is 64 bytes, the size of one cache line. A bucket contains seven 8-byte entries and one 8-byte pointer to the next bucket (7 × 8 + 8 = 64 bytes). Because each entry is 8 bytes, it can be read and updated with 64-bit atomic operations.

A physical address usually needs fewer than 64 bits on a 64-bit machine. Intel machines use 48-bit pointers, so only 48 bits of an entry are used to store the address. Another 15 bits hold a tag, which provides additional hash bits beyond the bucket offset. The one remaining bit is the tentative bit, which is used by the two-stage algorithm during the insert operation.
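The 48 + 15 + 1 bit split can be illustrated with a short packing sketch. The bit positions (address in the low bits, tentative bit on top) and the way the hash is split into a bucket offset (low k bits) and a tag (next 15 bits) are assumptions made for this example. The function names are hypothetical.

```python
ADDRESS_BITS = 48
TAG_BITS = 15
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1
TAG_MASK = (1 << TAG_BITS) - 1
TENTATIVE_SHIFT = ADDRESS_BITS + TAG_BITS  # bit 63


def pack_entry(address, tag, tentative=False):
    """Pack address, tag, and tentative bit into one 64-bit word."""
    if not 0 <= address <= ADDRESS_MASK:
        raise ValueError("address does not fit in 48 bits")
    if not 0 <= tag <= TAG_MASK:
        raise ValueError("tag does not fit in 15 bits")
    return (int(tentative) << TENTATIVE_SHIFT) | (tag << ADDRESS_BITS) | address


def unpack_entry(word):
    """Return (address, tag, tentative) from a packed 64-bit word."""
    address = word & ADDRESS_MASK
    tag = (word >> ADDRESS_BITS) & TAG_MASK
    tentative = bool(word >> TENTATIVE_SHIFT)
    return address, tag, tentative


def split_hash(hash_value, k):
    """Split a key hash into (bucket offset, tag) for an index of 2^k buckets."""
    offset = hash_value & ((1 << k) - 1)
    tag = (hash_value >> k) & TAG_MASK
    return offset, tag


word = pack_entry(0x123456789ABC, 0x7FFF, tentative=True)
fields = unpack_entry(word)
```

Because the whole entry fits in one 64-bit word, a single compare-and-swap can replace the address, tag, and tentative bit together.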

The hash index maintains the invariant that each (offset, tag) pair corresponds to a unique index entry. The address in that entry points to a set of entries, which is quite different from a normal hash table that stores keys. Because the index itself stores no keys, collisions between keys that share an offset and a tag are resolved by comparing the keys stored in the entries themselves.

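The hash index supports the following operations:

1) Find: locates the index entry whose tag matches in the bucket at the key's offset.

2) Delete: uses compare-and-swap to clear the matching index entry.

3) Insert: claims an empty index entry in the bucket for a new tag, which requires care when several threads insert concurrently.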

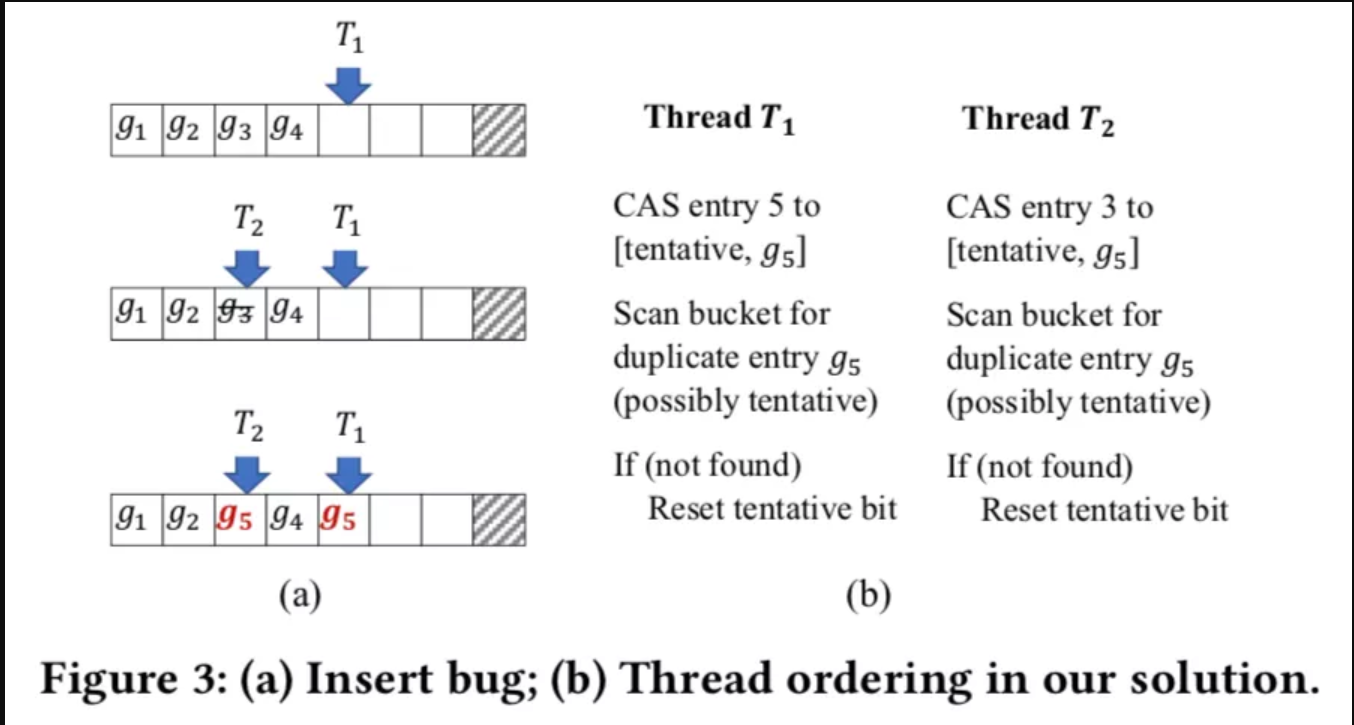

When two threads insert the same tag concurrently, each may pick a different empty entry in the bucket, which leaves two index entries for one tag. The root cause of this problem is that the threads select their entries independently and modify them directly. Locking the bucket would resolve this, but that solution is expensive. FASTER instead uses a latch-free two-stage method with the help of the tentative bit. Specifically, the thread finds an empty entry and writes the new entry with the tentative bit set. An entry with the tentative bit set is invisible to read and write operations. Then, the thread scans the bucket again to check whether another entry with the same tag exists. If so, it removes its own entry, backs off, and retries. If not, it clears the tentative bit to finish the insert operation. Figure 3(b) shows this process.
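The two-stage insert can be sketched as follows. This is a simplified model under stated assumptions: Python has no hardware compare-and-swap, so a lock inside `cas` stands in for the atomic instruction, entries are plain `(tag, address, tentative)` tuples rather than packed words, and overflow buckets are omitted. The names `Bucket`, `find`, and `try_insert` are hypothetical.

```python
import threading

SLOTS_PER_BUCKET = 7
EMPTY = None


class Bucket:
    def __init__(self):
        self.slots = [EMPTY] * SLOTS_PER_BUCKET
        self._lock = threading.Lock()  # emulates one atomic CAS, nothing more

    def cas(self, i, expected, new):
        """Compare-and-swap on slot i; returns True if the swap happened."""
        with self._lock:
            if self.slots[i] == expected:
                self.slots[i] = new
                return True
            return False


def find(bucket, tag):
    """Return the slot holding a visible (non-tentative) entry for tag."""
    for i, entry in enumerate(bucket.slots):
        if entry is not EMPTY and entry[0] == tag and not entry[2]:
            return i
    return None


def try_insert(bucket, tag, address):
    """Return 'inserted', 'exists', 'retry', or 'full'."""
    if find(bucket, tag) is not None:
        return "exists"
    tentative = (tag, address, True)
    # Stage 1: claim an empty slot with the tentative bit set.
    for i in range(SLOTS_PER_BUCKET):
        if bucket.slots[i] is EMPTY and bucket.cas(i, EMPTY, tentative):
            break
    else:
        return "full"  # a real index would chain an overflow bucket here
    # Stage 2: rescan for any other entry with the same tag.
    for j, entry in enumerate(bucket.slots):
        if j != i and entry is not EMPTY and entry[0] == tag:
            bucket.cas(i, tentative, EMPTY)  # back off and let the caller retry
            return "retry"
    bucket.cas(i, tentative, (tag, address, False))  # publish the entry
    return "inserted"


bucket = Bucket()
first = try_insert(bucket, 5, 100)
second = try_insert(bucket, 5, 200)

# Simulate a race: another thread already holds a tentative entry for tag 9.
raced = Bucket()
raced.slots[3] = (9, 111, True)
outcome = try_insert(raced, 9, 222)
```

In the simulated race, the inserting thread sees the other tentative entry during the rescan, clears its own slot, and reports that it should retry, so the bucket never ends up with two visible entries for one tag.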

The paper first introduces the pure in-memory and pure log-structured implementations respectively and then combines the two to form HybridLog.

The entry format is shown in Figure 2. An entry consists of an eight-byte header, a key, and a value. The key and value are either fixed length or variable length. The header is divided into a 16-bit meta and a 48-bit address. The meta uses a small number of bits to store the information required by the log-structured allocator, whereas the address is used to maintain the linked list of entries.

In the pure in-memory implementation, entries are stored only in the memory. An underlying allocator, like jemalloc, is used to allocate memory resources, and a hash index is used to store physical addresses. In this implementation, read, update, insert, and delete operations are supported.

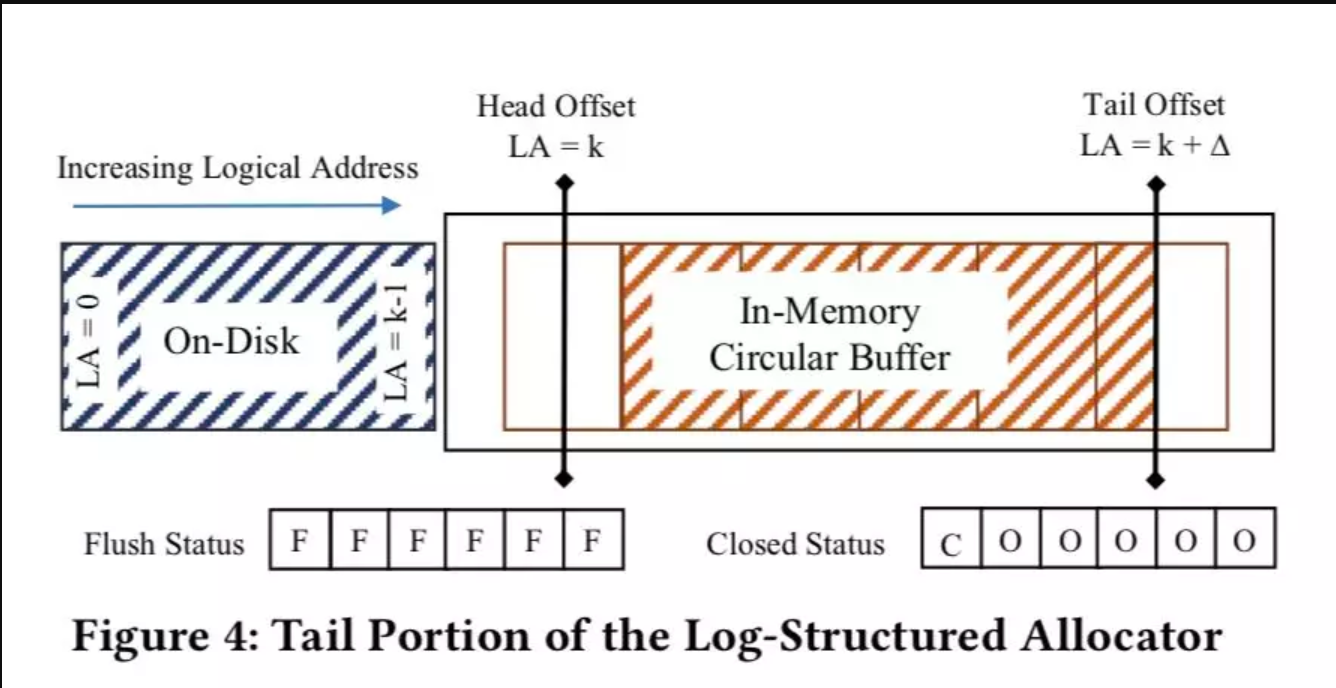

In the pure log-structured implementation, memory and disk are unified under one logical address space, and entries are written by appending to the log. This implementation supports data volumes larger than memory, but its throughput does not exceed 20 million operations per second (OPS) and does not scale with the number of threads. It maintains two offsets: the head offset, which is the smallest logical address held in memory, and the tail offset, which is the next free address. Memory is always allocated at the tail offset. The part of the log between the head offset and the tail offset resides in memory in a circular buffer, which is an array of fixed-size frames that each correspond to one physical page.
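The relationship between logical addresses, pages, and circular buffer frames can be sketched as below. The page size (4 KiB), the buffer size (4 frames), and all names are assumptions for illustration. A real allocator would advance the tail with an atomic fetch-and-add and would flush a page before its frame is reused.

```python
PAGE_BITS = 12                 # assumed 4 KiB pages
PAGE_SIZE = 1 << PAGE_BITS
BUFFER_PAGES = 4               # assumed number of in-memory frames


def locate(logical_address):
    """Return (page number, offset in page, circular buffer frame)."""
    page = logical_address >> PAGE_BITS
    offset = logical_address & (PAGE_SIZE - 1)
    frame = page % BUFFER_PAGES
    return page, offset, frame


class LogAllocator:
    def __init__(self):
        self.head = 0  # smallest logical address still in memory
        self.tail = 0  # next free logical address

    def allocate(self, size):
        """Reserve `size` bytes at the tail and return their logical address."""
        if size > PAGE_SIZE:
            raise ValueError("entry larger than a page")
        offset = self.tail & (PAGE_SIZE - 1)
        if offset + size > PAGE_SIZE:
            # The entry does not fit: skip to the start of the next page.
            self.tail = ((self.tail >> PAGE_BITS) + 1) << PAGE_BITS
        address = self.tail
        self.tail += size
        # Keep at most BUFFER_PAGES pages in memory by advancing the head.
        tail_page = (self.tail - 1) >> PAGE_BITS
        min_head_page = tail_page - BUFFER_PAGES + 1
        self.head = max(self.head, min_head_page << PAGE_BITS)
        return address


log = LogAllocator()
a0 = log.allocate(100)    # starts on page 0
a1 = log.allocate(4000)   # does not fit in page 0, moves to page 1
for _ in range(3):
    last = log.allocate(PAGE_SIZE)
```

Once the tail reaches page 4, page 0 has to leave memory, and page 4 reuses the frame that page 0 occupied.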

To flush entries to the disk without locks, two status arrays are introduced. The flush-status array tracks whether a page is being flushed to the disk, and the closed-status array determines whether a page can be evicted. A page is evicted only after it has been flushed to the disk. When the tail offset moves into a new page, a trigger action of the epoch mechanism issues an asynchronous I/O request to flush the previous page to the disk. The action runs only when the epoch is secure, which ensures that all threads have finished writing to that page. When the flush completes, the flush-status array is updated accordingly.

As the tail offset increases, the oldest page at the head must be removed from memory, which is called eviction. To do so, the system needs to ensure that no thread is still accessing the page. Traditional databases usually pin a page with a latch while it is being accessed. To achieve high performance, FASTER manages eviction with the epoch mechanism instead.

A blind update simply appends a new entry to the log. A delete sets a flag bit in the entry header, and flagged entries are reclaimed during resource recycling. The read and RMW operations are similar to their in-memory counterparts, except that an RMW appends a new entry rather than updating in place. Logical addresses are also handled differently from physical addresses. If a logical address is greater than the head offset, the target data is in memory. Otherwise, an asynchronous read request is submitted to the disk.

The log-structured solution can handle larger-than-memory data, but its append-only nature has side effects. First, each update involves atomically incrementing the tail offset, copying data, and atomically updating the logical address in the hash index. In addition, under update-intensive workloads, the log grows quickly and I/O soon becomes a bottleneck. By contrast, under such workloads, in-place updates have the following advantages:

1) Frequently accessed entries are placed in a higher-level cache.

2) Access paths for the key values of different hash buckets do not conflict.

3) Partial update of large values avoids copying the entire entry.

4) Most updates do not need to modify the hash index.

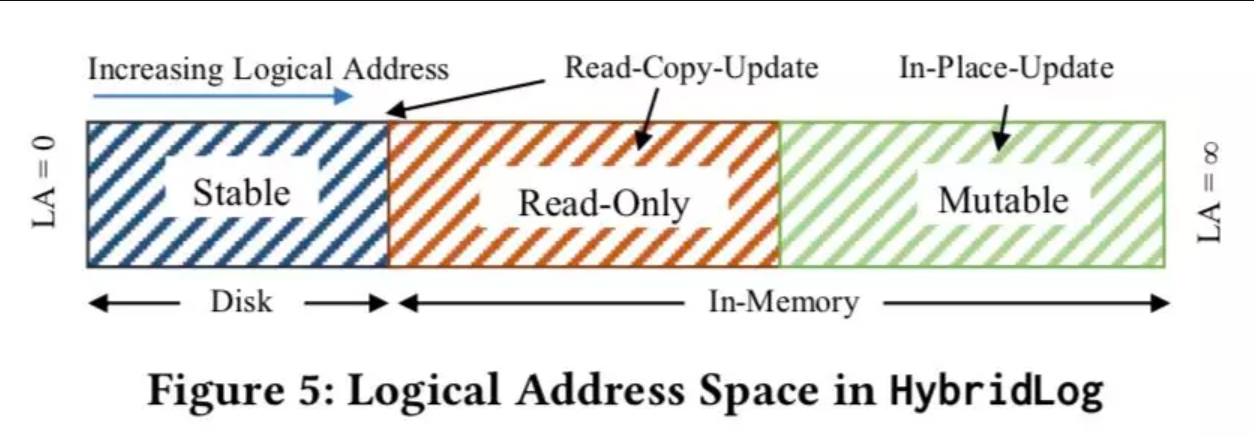

HybridLog divides the log into three regions: the stable region on the disk, the read-only region, and the mutable region. HybridLog unifies the three regions with one set of logical addresses. Among them, the read-only and mutable regions are in the memory, and only the mutable region is updated in-place and stores hot data. To update data in the read-only region, a copy of the original data will be created in the mutable region, and then, an in-place update is performed on the copied data.

As the tail offset increases, the data at the head of the mutable region becomes read-only and is later flushed to the disk. This makes the hybrid solution well suited to update-intensive scenarios. Compared with the log-structured solution, HybridLog adds a read-only offset, which is maintained in the same way as the head and tail offsets. The read-only offset separates the read-only region from the mutable region, which can be updated in place.
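How the read-only offset decides between an in-place update and a read-copy-update can be shown with a toy model. It is a sketch under strong simplifications: the log is a Python list whose indices act as logical addresses, the mutable region is a fixed number of the newest entries, and the stable region, flushing, and concurrency are all omitted. The class `MiniHybridLog` and its members are hypothetical names.

```python
class MiniHybridLog:
    def __init__(self, mutable_entries=2):
        self.log = []        # list index plays the role of the logical address
        self.index = {}      # key -> address of the latest version
        self.mutable_entries = mutable_entries
        self.in_place = 0    # number of in-place updates
        self.copies = 0      # number of appends (inserts and copy-updates)

    @property
    def read_only_offset(self):
        # Addresses at or above this offset are in the mutable region.
        return max(0, len(self.log) - self.mutable_entries)

    def rmw_add(self, key, delta):
        """Read-modify-write: add delta to the counter stored under key."""
        address = self.index.get(key)
        if address is not None and address >= self.read_only_offset:
            self.log[address][1] += delta      # hot entry: update in place
            self.in_place += 1
        else:
            old = self.log[address][1] if address is not None else 0
            self.log.append([key, old + delta])  # cold entry: copy to the tail
            self.index[key] = len(self.log) - 1
            self.copies += 1

    def read(self, key):
        return self.log[self.index[key]][1]


store = MiniHybridLog(mutable_entries=2)
store.rmw_add("a", 1)   # insert at the tail
store.rmw_add("a", 1)   # still mutable: in place
store.rmw_add("b", 1)
store.rmw_add("c", 1)   # "a" has now drifted into the read-only region
store.rmw_add("a", 1)   # copied to the tail with the new value
store.rmw_add("a", 1)   # mutable again: in place
```

The key `a` is updated in place while it stays near the tail, is copied once after it falls behind the read-only offset, and is then updated in place again. The old version remains in the log at its original address.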

The in-memory part of HybridLog acts as a cache, so performance depends on how effectively it retains hot data. Many caching protocols have been proposed for databases and for virtual memory management in operating systems, such as First-In-First-Out (FIFO), CLOCK, Least Recently Used (LRU), and extended versions of LRU. All of these except FIFO require fine-grained statistics to work well. FASTER avoids this overhead. Its protocol behaves much like second-chance FIFO, because an entry that has reached the read-only region is copied back to the mutable region when it is updated again.

How data is distributed in FASTER depends on the access pattern. Over time, hot data gradually concentrates in memory. The split of memory between the mutable and read-only regions is therefore quite important. A larger mutable region allows more in-place updates and better in-memory performance, but cold data in it takes longer to be evicted. A larger read-only region causes more costly read-copy-update operations, which copy data to the mutable region and accelerate log growth. The paper reports that, in its experiments, a 9:1 ratio of mutable to read-only memory gives good performance.

FASTER considers update performance as the first priority. Hence, FASTER does not write WAL, since this affects update performance. As a consequence, data in the memory will be lost if the process fails.

However, it is possible to restore the process to a consistent state. For example, suppose one thread submits two update requests, r1 and r2, in that order. The possible states after recovery are 1) neither, 2) only r1, and 3) both r1 and r2. In other words, r2 never survives without r1.
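The r1 and r2 example amounts to saying that the recovered updates of one thread always form a prefix of the updates it issued. A small sketch, with hypothetical function names, makes the rule explicit:

```python
def consistent_recovery_states(issued):
    """All prefixes of the requests one thread issued, in order."""
    return [issued[:n] for n in range(len(issued) + 1)]


def is_consistent(recovered, issued):
    """True if `recovered` is a prefix of `issued`."""
    return recovered == issued[:len(recovered)]


states = consistent_recovery_states(["r1", "r2"])
```

A recovered state that contains r2 but not r1 is not a prefix, so it is rejected.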

The paper compares FASTER with two high-performance pure in-memory systems (Masstree and Intel TBB hash map) and two leading KV stores (RocksDB and Redis).

For a single thread, under both the uniform and Zipf distributions, FASTER performs best, TBB comes second, and RocksDB performs worst.

When 256 threads are used, FASTER reaches 110 million OPS under the uniform distribution, and TBB comes close. Under the Zipf distribution, FASTER keeps hot data in the mutable region and achieves a throughput of 160 million OPS, which significantly outperforms the other systems.

In the scalability test, FASTER scales very well on both one CPU and multiple CPUs. Masstree also scales well but has much lower performance. TBB scales well on one CPU, but its performance degrades when running on multiple CPUs. For more details about the test, see the paper.
