As a senior technical expert at Alibaba Group, I have been working with data for 11 years. I have been engaged in the development of big data frameworks such as Apache Hadoop, Pig, Tez, Spark, and Livy, and have developed several big data applications. My work in this area includes writing MapReduce jobs for ETL, using Hive for ad hoc queries, and adapting Tableau for data visualization. Drawing on that experience and knowledge, I would like to talk about the current state of big data and some future trends as far as I can see them.

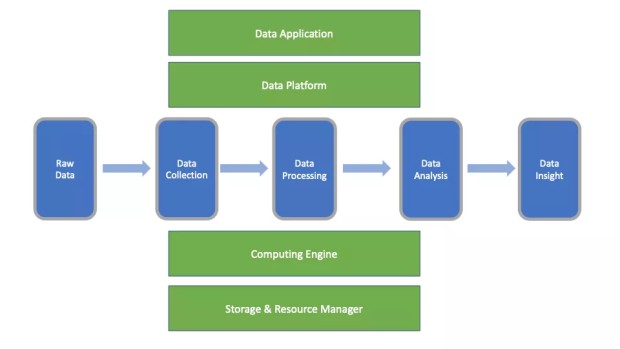

First, we need to be clear about what exactly "big data" is. The concept of big data has been around for more than 10 years, but there has never been a precise definition, and perhaps none is required. Data engineers see big data from a technical and system perspective, whereas data analysts see it from a product perspective. As I see it, however, big data cannot be reduced to a single technology or product; rather, it is a comprehensive, complex discipline surrounding data. I look at big data from two main aspects: the data pipeline (the horizontal axis in the following figure) and the technology stack (the vertical axis in the following figure).

Social media has always emphasized, even sensationalized, the "big" in big data. I don't think this reflects what big data actually is, and I don't really like the term "big data." I'd prefer to simply say "data," because the essence of big data lies in the concept and application of data.

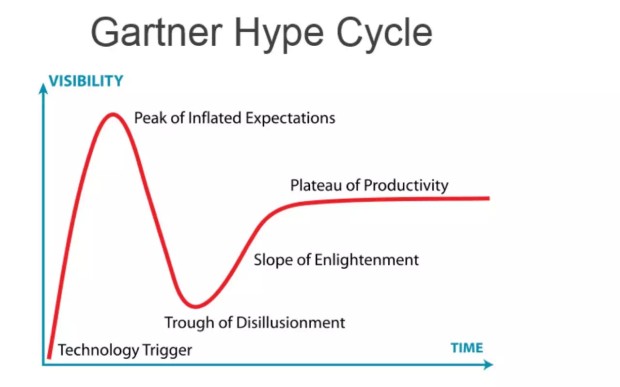

Having defined big data, we can now look at where it sits on the technology maturity curve. Historically, each emerging technology has gone through its own lifecycle, typically characterized by the technology maturity curve shown below.

People tend to be optimistic about an emerging technology at its inception, holding high expectations that it will bring great changes to society. As a result, the technology gains popularity quickly, but it then reaches a peak, at which point people begin to realize that it is not as revolutionary as originally envisioned, and their interest wanes. Sentiment about the technology then falls into the trough of disillusionment. After a certain period of time, however, people start to understand how the technology can benefit them and begin to apply it productively, and the technology gains mainstream adoption.

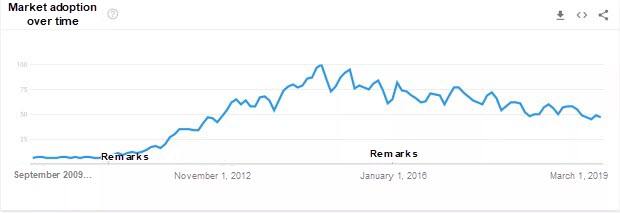

Having passed through the peak of inflated expectations and the trough of disillusionment, big data is now in a stage of steady development. The Google Trends curve for "big data" supports this: big data began to attract widespread attention around 2009, peaked around 2015, and has slowly declined since. Of course, this curve does not fit the technology maturity curve above perfectly. One likely reason lies in what this data represents: search volume measures attention rather than maturity, and a downward shift in the actual technology curve may even lead to an increase in searches for the technology on Google and other search engines.

Source: Google Trends

Now I would like to share my thoughts on the likely future trends of big data.

As mentioned earlier, big data has gone through the peak of inflated expectations and the trough of disillusionment, and it is now moving steadily forward in its development and mainstream use. With this conclusion in mind, I am confident in making the following predictions about the future development of big data:

Data streams from upstream systems will continue to grow in volume, driven especially by the development and maturity of IoT technology and the upcoming rollout of 5G. In the near future, the amount of data will keep multiplying at a rapid pace, and this growth is the principal driving force behind the continuous development of big data.

Downstream data consumption still has a lot of untapped potential. Even though big data is no longer discussed as much as artificial intelligence, machine learning, and blockchain, and may receive even less of the spotlight in the future, it will continue to play an important and even fundamental role in technology. It is fair to say that as long as there is data, big data will never go out of style. I think that within the lifetime of most people, we will witness the continuous development of big data.

The biggest challenge for big data in the past was data volume. That is why everyone calls it "big data." However, after several years of hard work and of putting big data applications into practice across the industry, we have overcome this hurdle. In the next few years, the bigger challenge will be data velocity and real-time availability. The real-time availability of big data is about implementing an end-to-end real-time data pipeline, rather than simply transmitting or processing data in real time. Any delay along the way will affect the real-time performance of the entire system. The challenges facing the real-time availability of big data can be summarized in the following aspects:

Streaming technologies such as Apache Kafka and Flink have laid a solid technical foundation for real-time computing. I think more good products will emerge in the future for both real-time data visualization and online machine learning models. Once the real-time availability of big data reaches a more useful level, more valuable data will be generated on the data consumption side, enabling more efficient closed-loop data management and promoting the sound development of the entire data pipeline.
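To make the end-to-end point concrete, here is a minimal Python sketch. It assumes a purely sequential pipeline in which each stage must finish before the next begins, so the total latency is simply the sum of the stage latencies. The stage names and latency figures are hypothetical, chosen only for illustration, not measurements from any real system. The sketch shows that speeding up the stream-processing stage barely changes the total while a single hourly batch step remains, whereas replacing that batch step does.

```python
from typing import Dict

# Hypothetical per-stage latencies in seconds for an illustrative pipeline.
# These values are made up for the example; they are not measurements.
pipeline: Dict[str, float] = {
    "ingest": 0.05,             # e.g. writing events to a message queue
    "stream_compute": 0.2,      # e.g. a streaming job
    "warehouse_load": 3600.0,   # e.g. an hourly batch load
    "dashboard_refresh": 1.0,   # e.g. a BI dashboard polling interval
}


def end_to_end_latency(stages: Dict[str, float]) -> float:
    """Total latency of a sequential pipeline: the sum of its stage latencies."""
    return sum(stages.values())


def bottleneck(stages: Dict[str, float]) -> str:
    """Name of the slowest stage, which dominates the end-to-end latency."""
    return max(stages, key=lambda name: stages[name])


# Making the already-fast streaming stage 20x faster barely helps...
faster_compute = {**pipeline, "stream_compute": 0.01}
# ...while replacing the batch stage with a near-real-time load changes everything.
faster_load = {**pipeline, "warehouse_load": 2.0}

for label, stages in [("baseline", pipeline),
                      ("faster stream compute", faster_compute),
                      ("near-real-time load", faster_load)]:
    print(f"{label}: {end_to_end_latency(stages):.2f} s "
          f"(bottleneck: {bottleneck(stages)})")
```

Real pipelines often overlap stages or process records in parallel, so a simple sum is an upper-bound simplification, but it captures why a single slow link limits the real-time performance of the whole system.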

In my opinion, the eventual move of most industry IT infrastructure to the cloud is inevitable, be it to a public cloud, private cloud, or hybrid cloud. Private and hybrid clouds are part of the picture because it is impossible to deploy all big data facilities to a public cloud: each enterprise has a different business scope and different requirements for data security. Nevertheless, I am sure that migrating to the cloud is the wave of the future. At present, major cloud service providers offer a variety of big data products to meet various user needs, including PaaS-oriented EMR systems and SaaS-oriented data visualization products.

The migration of big data infrastructure to the cloud also has an impact on big data technologies and products. The frameworks and products in the big data world will shift toward cloud-native designs, and we can expect to see the following trends:

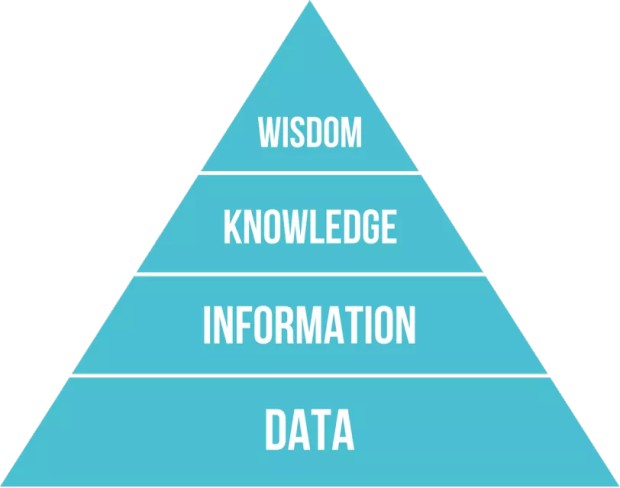

In my opinion, another thing we will see is the end-to-end integration of big data products. End-to-end integration means providing a complete solution rather than simply stacking several different big data components on top of each other. Big data products such as Hadoop have come under fire for their steep learning curves and high custom development costs, and end-to-end integration aims to solve this problem. What users need are not technologies such as Hadoop, Spark, and Flink, but products built on these technologies that can solve specific business problems. I strongly agree with Cloudera's Edge to AI approach. The value of big data lies not so much in the raw data itself as in the information and knowledge extracted from it and the impact this has on the business. The following figure shows the knowledge hierarchy, sometimes referred to as the DIKW pyramid. We need to help data climb this pyramid so that its value can be realized.

Source: Wikipedia

Big data technologies are designed to process and refine raw data continuously. As you rise to each higher layer of the pyramid, the amount of data gets smaller but the impact on the business becomes greater. To extract wisdom from data, the data has to pass through a long pipeline. Without a complete system to ensure the efficient operation of the entire pipeline, it is difficult to guarantee that valuable insights can be extracted from the data. Therefore, end-to-end integration of big data products will be another major trend in the future.

In the preceding paragraphs, I talked about the development of big data toward end-to-end integration. So what is the current state of this long data pipeline, and what can we expect in the future?

As far as I can tell, the innovation and development of big data technologies will shift to downstream data consumption and application terminals in the future. For over a decade, big data development has concentrated mainly on the underlying frameworks. Hadoop was the first big data framework to gain significant traction in the open-source community, followed by the popular computing engines Apache Spark and Flink, the message middleware Kafka, and the resource scheduler Kubernetes. A series of excellent products have emerged in various segments. In short, the underlying frameworks have laid the foundation for big data processing. The next step is to use these technologies to provide enterprises with products that deliver a better user experience and solve actual business problems. In other words, the focus of big data research will shift from underlying frameworks to upper-layer applications. Previous big data innovations were geared toward IaaS and PaaS, while in the future we will see more SaaS-oriented big data products and innovations.

This trend is evident from the recent acquisitions made by some international corporations.

Big data products for end users will be the future focus of companies in the big data field, and I believe that future innovations in this field will center on these products. In the next five years, there will be at least one company like Looker, but it will be hard for another computing engine like Spark to emerge.

Anyone who has studied big data will be amazed at the overwhelming volume of information and knowledge to deal with in the big data world, especially when it comes to the underlying technologies. After years of competition, many remarkable products have risen to the top, while others have slowly withered away. For example, Spark has clearly emerged as the market leader for big data processing. The traditional MapReduce model lives on mainly in legacy systems, and new MapReduce applications are unlikely to be developed. Flink is currently the unrivaled option for low-latency stream processing, while the original Storm system is being phased out. Similarly, Kafka has become the de facto standard in the message middleware field. Few new technologies and frameworks will appear in the underlying big data ecosystem in the future. Rather, in each segment, the strongest technology will survive and become more mature and entrenched in the market. More innovation will happen in upper-layer applications and end-to-end integration. That is, I believe that in the future we will witness more innovation and development in upper-layer big data applications, such as BI and AI products and big data applications for vertical industries.

The big data world includes not only well-known open-source products such as Hadoop, Spark, and Flink, but also many outstanding closed-source products, such as AWS Redshift and Alibaba Cloud MaxCompute. Although these products are not as popular among developers as open-source products, they are nonetheless widely accepted. Being open source is not the only criterion, because enterprises have to consider various factors when choosing a big data product, such as whether the product is stable and secure, whether a commercial company supports it, and whether it can be integrated with existing systems. Closed-source products tend to be tailored to these sorts of enterprise-level requirements.

In recent years, open-source products have been greatly affected by the rise of the public cloud. Public cloud vendors let users enjoy the results of open-source projects without paying the commercial companies behind them, and they have taken away a large portion of those companies' market share. As a result, many such companies have begun to change their strategies, and some have even modified the licenses of their open-source products. However, the chance of public cloud providers squeezing these commercial companies out is slim, because squeezing them out would mean squeezing out the biggest technological innovators behind open-source products, and with them the open-source products themselves. I believe that open-source communities and public cloud providers will eventually strike a balance. Open source will remain the best way to achieve a good developer experience, while some first-class closed-source products will also hold a certain market share.

In the end, I would like to summarize a few key points in this article:

Zhang Jianfeng is an Apache member and Apache Pig committer. A former employee of Hortonworks, Jianfeng currently serves as a senior technical expert at Alibaba's Computing Platform Department and as a project management consultant for the Apache Tez, Livy, and Zeppelin open-source projects. He began working with big data and the open-source community long ago, with the aspiration to contribute to big data and data science in the open-source community.
