By Digoal

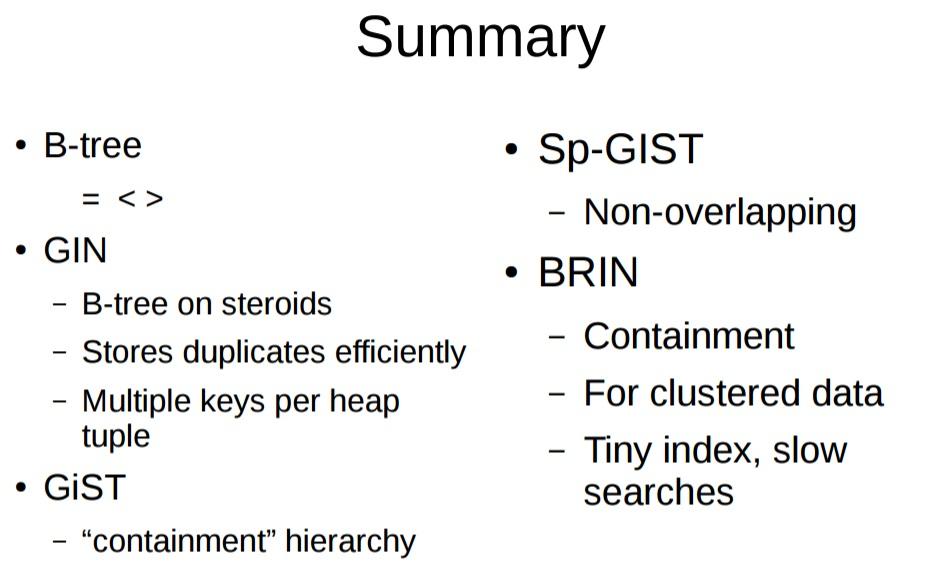

A fuzzy query is a common yet difficult scenario in a database such as PostgreSQL. You can use inverted or B-tree indexes for fuzzy prefix queries such as '%xxx', or B-tree indexes for fuzzy suffix queries such as 'xxx%'. Generally, a B-tree index supports queries including >, <, =, <=, >=, and sorting. Currently, most databases support B-tree indexes.

However, many databases do not provide a way to optimize fuzzy prefix and suffix queries like '%xxxx%' and regular expressions with fuzzy prefix and suffix (~ '.ab? cd[e-f]{1,10}-0.'). In such cases, you can only perform a full-table scan to process each record separately.

A common brute-force acceleration method is to enable parallel acceleration, for example, CPU/GPU/FPGA-based parallel acceleration. When you are processing large amounts of data, use the LLVM-based acceleration in PostgreSQL to significantly reduce CPU usage. However, this article is not about brute-force acceleration. Instead, it talks about index-based acceleration, which is more scientific and desirable in today's world that advocates high efficiency and effectiveness and more suitable for OLTP systems. The flexibility of PostgreSQL makes it possible to perform fuzzy prefix and suffix query and regexp query by using database indexes. This support is due to the open index interfaces and data types in PostgreSQL. For example, PostgreSQL supports GIN, GiST, RUM, and customized indexes. You can consider them a unique feature of PostgreSQL because this feature is currently not supported in any other database.

Currently, you can use three indexes for fuzzy query optimization. If you don't know which index is more suitable, read the principles of GIN, GiST, and RUM described in this article to find an answer.

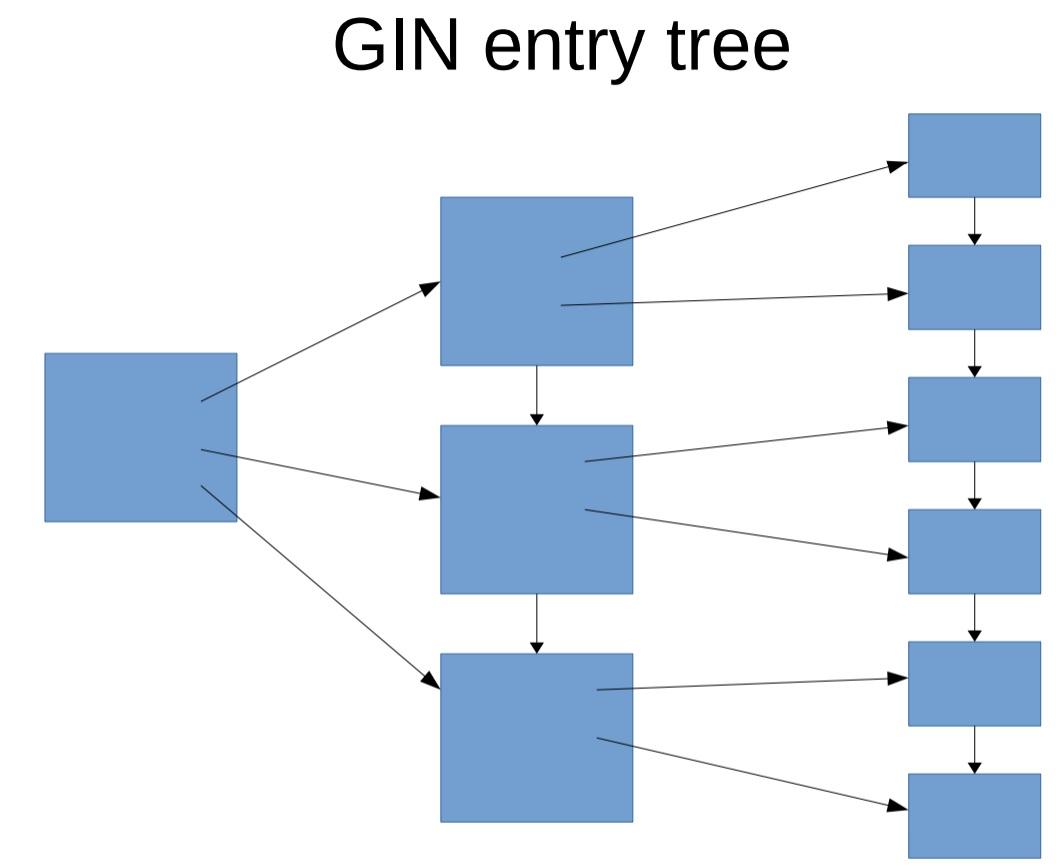

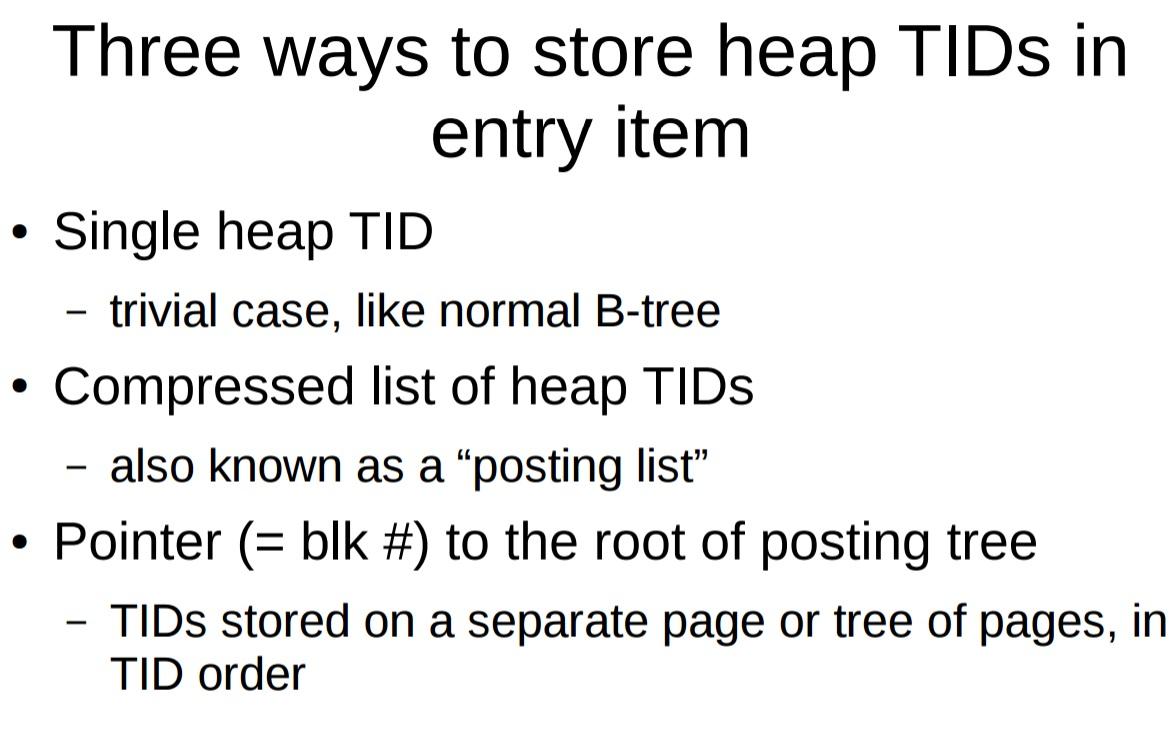

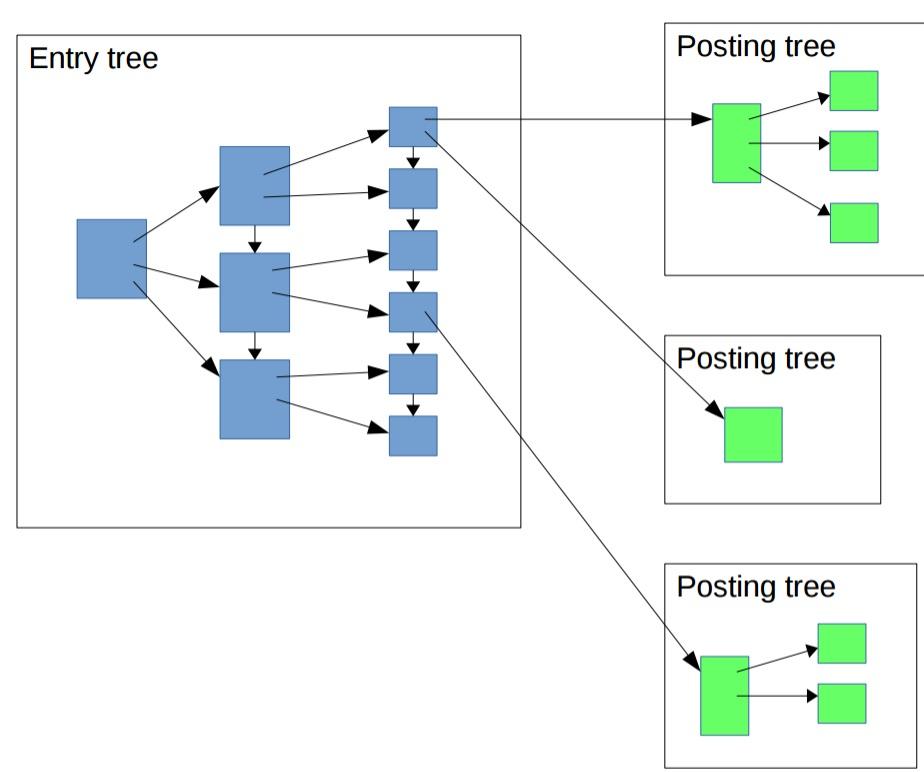

A GIN index extracts values from a column (for example, array or full-text search) and stores them to a tree structure (similar to a B+tree; with the value and the row IDs). In the case of high-frequency values, row IDs are usually stored on a separate page to reduce the depth of the tree.

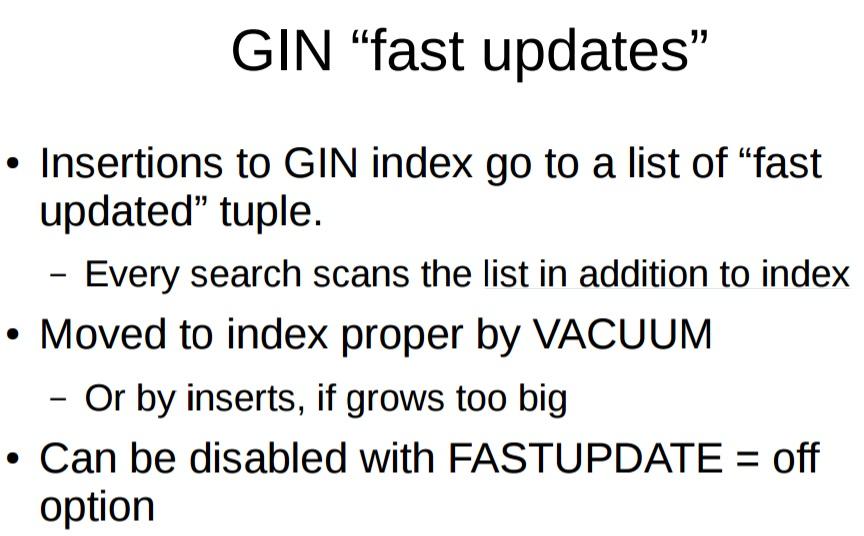

As GIN index stores elements, updating or inserting an entry may involve many elements. Therefore, GIN may require changes in multiple items. To improve the insertion, update, and deletion performance, PostgreSQL supports buffer similar to index-organized tables in MySQL, allowing values to be written into a buffer first and then merging into the tree.

Querying using GIN has one additional advantage over index-organized tables in MySQL, as here query does not block merging and writing. This is because a query operation does not have to wait until data in the buffer is merged into the tree. Instead, the query operation is directly performed on the buffer. (If the buffer is too large, query speed may be affected.)

You can use a proper parameter to control the buffer size. GIN will automatically merge a buffer when it grows to a certain extent. Or VACUUM will merge buffers.

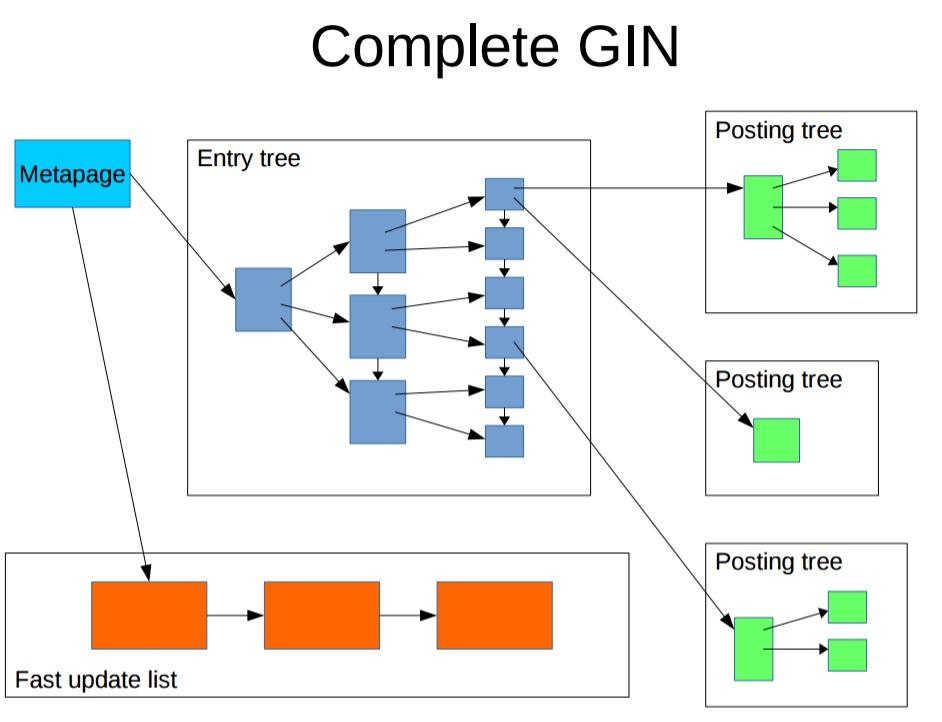

The Following diagram illustrates a complete GIN index:

1. To improve the update speed, FASTER UPDATE is used. When the buffer grows to a certain size (you can limit the size yourself), the query speed may be relatively slow. It is recommended that you set a proper buffer size based on the balance between insertion and query.

2. It only supports bitmap queries. Therefore, all row IDs are obtained first and sorted, and then data retrieval is performed. This can reduce random heap page scans. However, sorting may consume lots of resources and take a long time in case there are many large rows (for example, a certain element is contained in each row). In this case, it takes a relatively long time to obtain the first result after the query starts, and you have to wait until the sorting ends even if you use LIMIT.

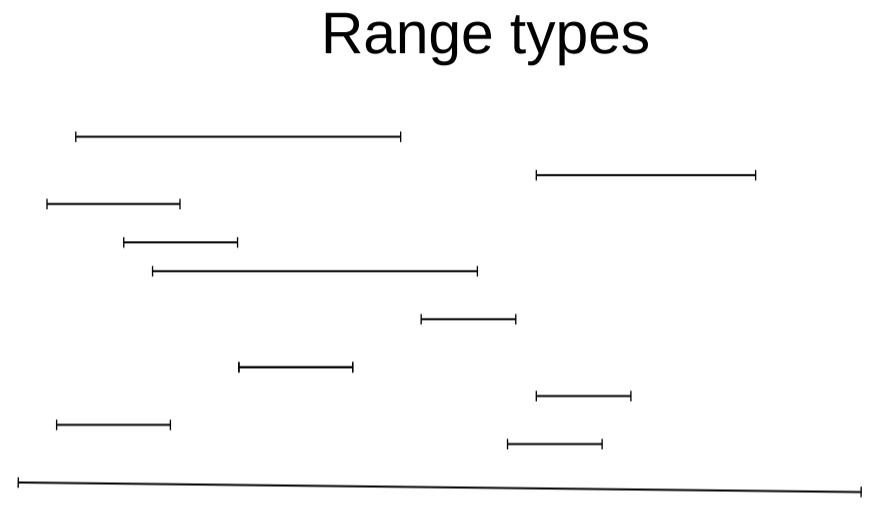

Generalized Search Tree (GiST) is used to solve data problems that are difficult to solve by using B-tree or GIN. For example, GiST helps to judge the intersection or inclusion relationship between ranges, the point and plane intersection in geographical location and search for geographical points near a certain point.

However, GiST can implement much more than these features. Take the range type for example. Each line segment in the following figure represents a stored range.

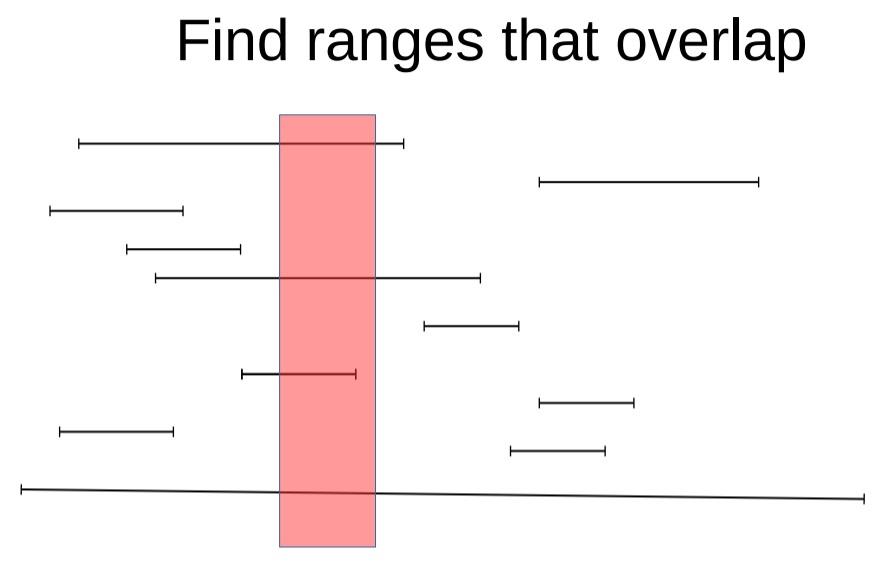

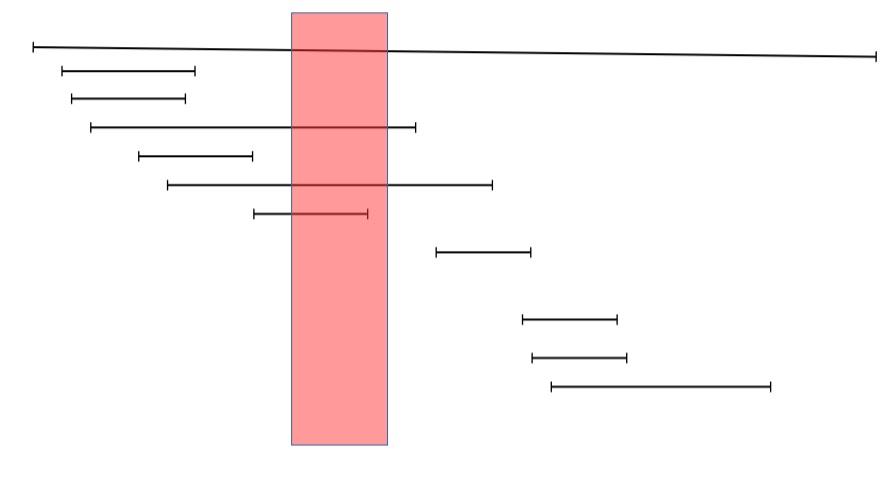

Find ranges that overlap.

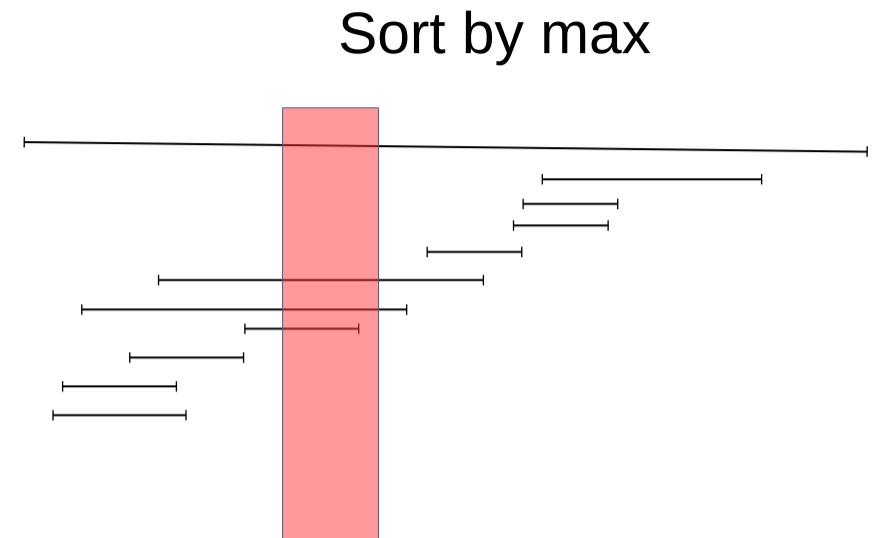

Sort by the minimum range value (leftmost value) (Ignore the red box).

Sort by the maximum range value (rightmost value) (Ignore the red box).

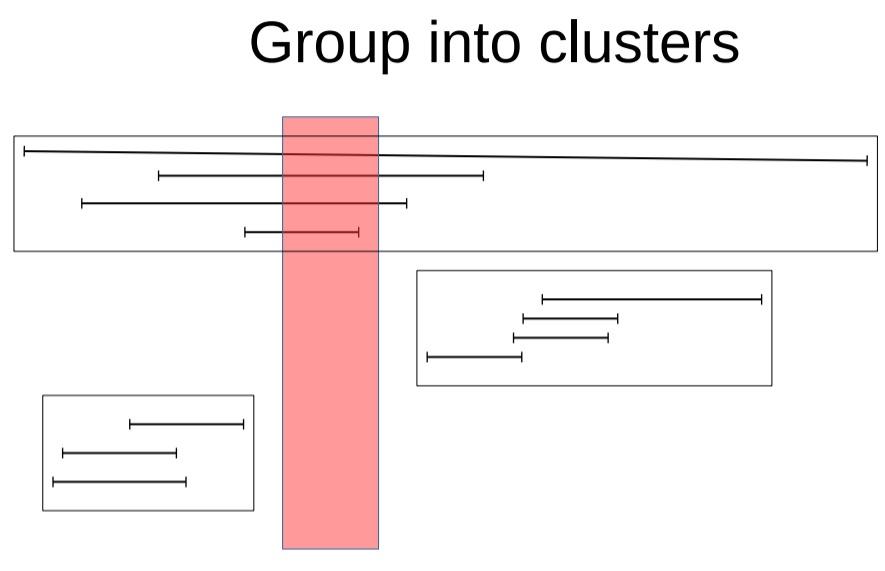

First, line segments are clustered into different groups (similar to what K-means clustering does) (Ignore the red box).

Clustered data represents the information included in a single index page of the corresponding GiST (multi-level clustering is allowed, for example, the two-level structure shown later).

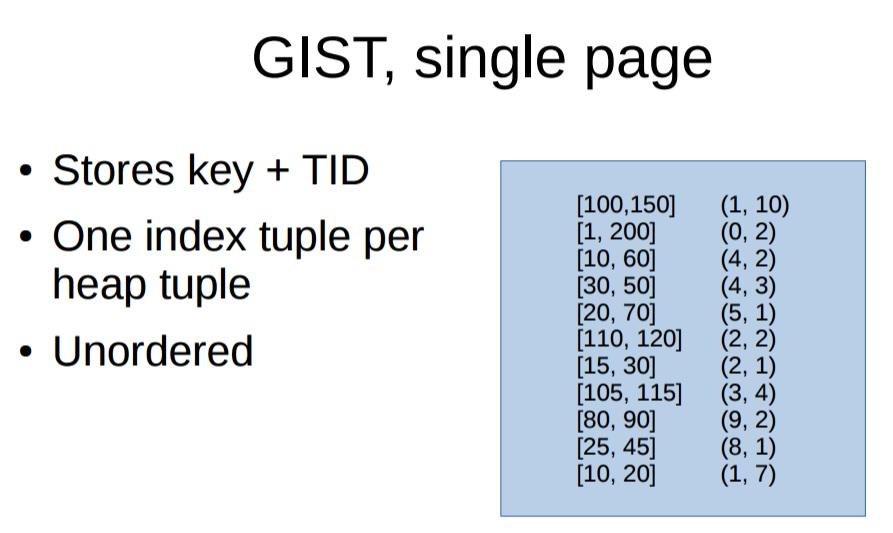

A single GiST index page looks like this:

1. Key + row ID (one-to-one correspondence between indexes and records)

2. Stored in the index without being ordered

In the blue box below, values in the left-side column are keys, and values in the right-side column are row IDs (heap page number, record ID).

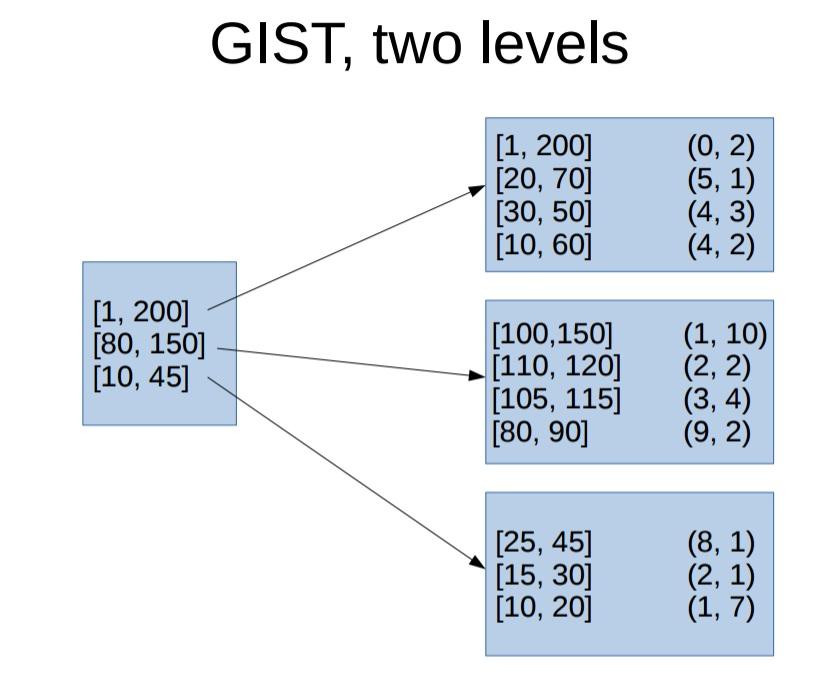

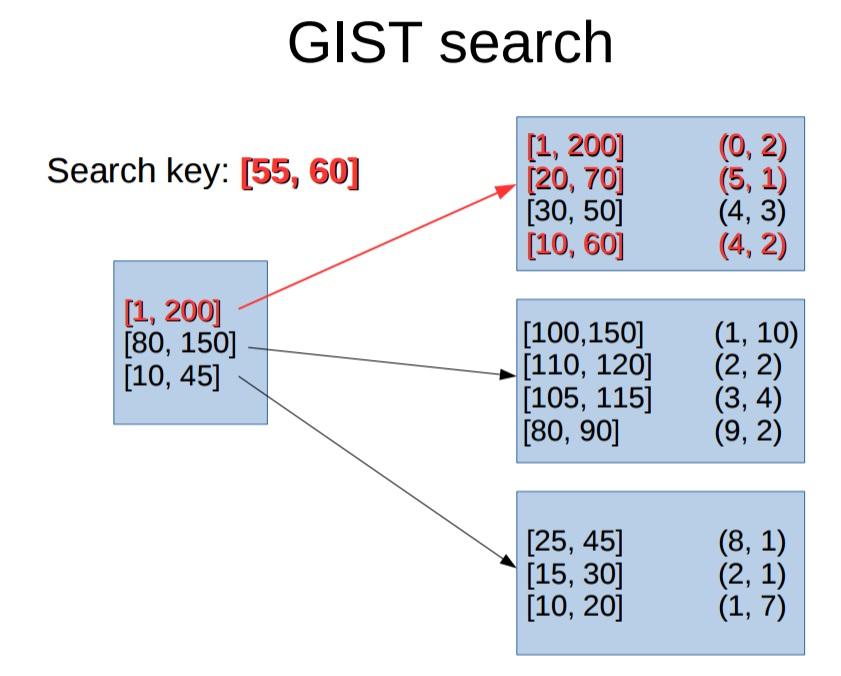

The following figure shows the two levels of the GiST index. The upper level is the total range obtained from individual index pages at the lower level.

The following figure shows how to search for keys within the range [55,60].

The core of GiST is clustering. After clustering, key and heap row IDs in a single cluster are put into a separate index page. The clustering range is the level-1 structure and stored in the GiST entry for easy retrieval.

Since the core of GiST is clustering, the GiST performance is closely related to clustering algorithms. PostgreSQL leaves this interface to its users. If you want to implement a GiST index when you define a data type yourself, you need to think carefully about how you want to perform clustering on the specific data types.

GiST indexes for built-in types like range and geometry are already provided. You only need to create GiST indexes for types that you want to add, for example, the type for storing human body structure information, photos, or X-ray images. Your clustering design in the GiST index determines how quickly you can search for your customized data types.

Performance depends on how well the userdefined

Picksplit and Choose functions can

group keys

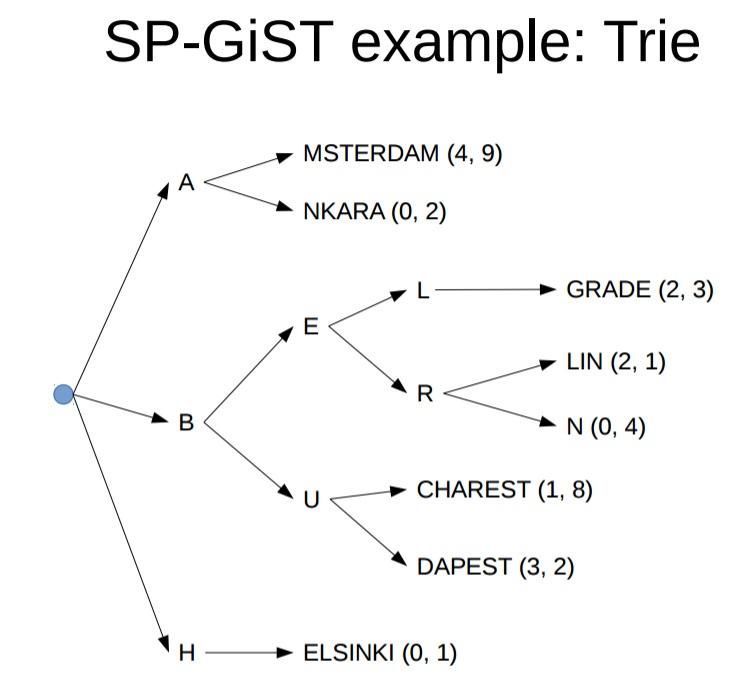

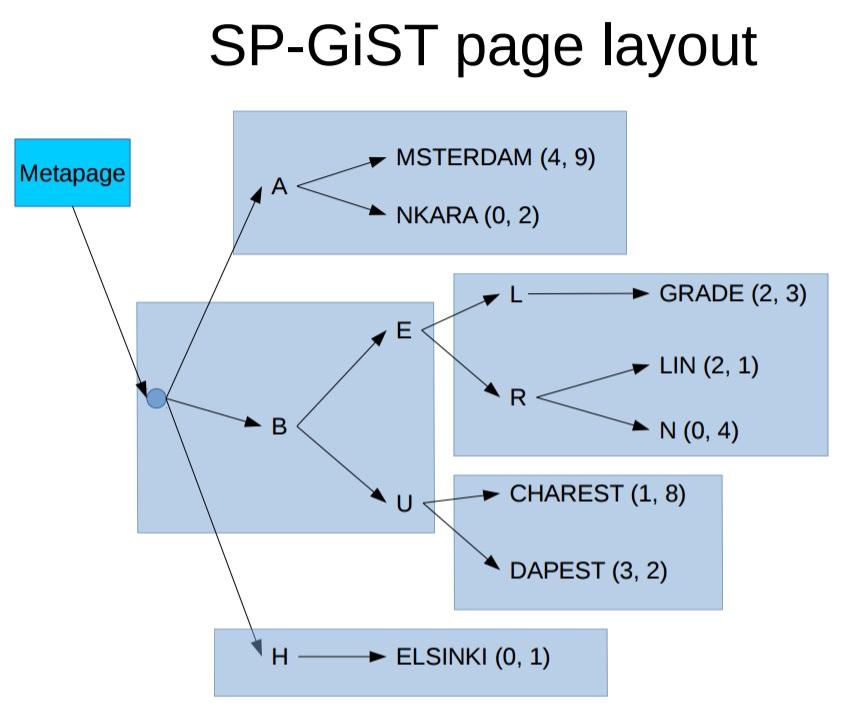

Space-Partitioned GIST (SP-GiST) is an extension of GiST and has the following characteristics:

(1) Nodes do not overlap. However, In GiST indexes, nodes may overlap. Data is just grouped into different clusters in GiST, and nodes (on different index pages) can have overlapping content.

(2) Index depth is changeable.

(3) Each physical index page corresponds to multiple nodes.

SP-GiST supports the following search types:

1. Kd-tree, points only; ( because shapes might overlap)

2. prefix tree for text

Similar to the application scenarios mentioned above for GiST.

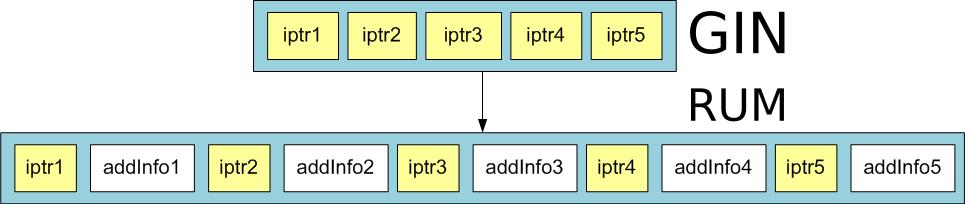

RUM references the implementation of GIN and improves upon the following disadvantages of using GIN for full-text searches

1. Slow Ranking: GIN does not store lexeme positions for a full-text search, so it cannot support index-level ranking. You can obtain the information by using CPU to perform operations after scanning the heap pages. It needs position information about lexeme for ranking. GIN index doesn't store positions of lexemes. Hence, after the index scan, you need an additional heap scan to retrieve lexeme positions.

2. Slow Phrase Search With GIN Index: Similarly, since GIN does not store lexeme position information, it cannot support phrase search at the index level. For example, search for 'speed' <1> 'passion' or 'China:100' is not supported at the index level. This problem relates to the previous problem as it needs position information to perform a phrase search.

3. Slow Ordering by Timestamp: Since GIN only stores tsvector tokens and does not contain any additional field information (such as full-text search + index filed and double-field index), some special features or service expansion features require heap page scans or CPU processing. GIN index can't store some related information in index with lexemes. Thus, it is necessary to perform additional heap scan.

RUM improves the GIN access method and adds additional information to indexes (for example, token positions) to support the aforementioned query examples. RUM also supports double-field indexes, for example, tsvector + timestamp. RUM solves these problems by storing additional information in the posting tree. For example, positional information of lexemes or timestamps.

You can get an idea of RUM by the following picture.

Currently, RUM also encounters a problem. Compared to GIN, it takes longer to create an index and change data. Though, this is already in the RUM to-do list and will be the focus of subsequent improvements.

Another drawback of RUM is that it has slower build and insert time compared to GIN. The main reasons for this include the need to store additional information besides keys and the use of generic WAL.

Currently, PostgreSQL supports the following index types:

Open interfaces also allow you to customize indexes. To learn how to customize indexes, see the implementation of the Bloom index.

The previous section describes index types supported in PostgreSQL. Also, you can use B-tree for fuzzy prefix and suffix queries.

Further, use indexes to accelerate the fuzzy prefix or suffix query and regexp query.

Now let's compare the performance of GIN, GiST, and RUM in case of different input data and decide which index type is more suitable in a specific scenario.

To obtain valid test results, create multiple indexes on a column and use pg_hint_plan to select a suitable index.

PostgreSQL allows you to create multiple indexes on a column. For example, in the following test, we will create GIN, GiST, and RUM indexes.

We will not discuss this section in this blog.

To test that RUM supports a fuzzy query, convert a string to the full-text search type. Simultaneously, convert the input to the tsquery type as the search requires tsquery input.

Find the ASCII code of the escape character .

select chr(i) from generate_series(1,255) as t(i); Convert the string to a tsvector with position tags.

create or replace function string_to_tsvector(v text) returns tsvector as $$

declare

x int := 1;

res text := '';

i text;

begin

for i in select regexp_split_to_table(v,'')

loop

res := res||' '||chr(92)||i||':'||x;

x := x+1;

end loop;

return res::tsvector;

end;

$$ language plpgsql strict immutable; Next, convert the string to a tsquery with position tags (the <-> symbol is used to provide adjacency information).

create or replace function string_to_tsquery(v text) returns tsquery as $$

declare

x int := 1;

res text := '';

i text;

begin

for i in select regexp_split_to_table(v,'')

loop

if x>1 then

res := res||' <-> '||chr(92)||i; -- 这一句<->符号是用来带上相邻信息的

else

res := chr(92)||i;

end if;

x := x+1;

end loop;

return res::tsquery;

end;

$$ language plpgsql strict immutable; You need three plugins namely, pg_trgm, pg_hint_plan, and rum. This article doesn't cover the steps to install plugins.

postgres=# \dx

List of installed extensions

Name | Version | Schema | Description

--------------+---------+------------+-------------------------------------------------------------------

dblink | 1.2 | public | connect to other PostgreSQL databases from within a database

pg_hint_plan | 1.1.3 | hint_plan |

pg_trgm | 1.3 | public | text similarity measurement and index searching based on trigrams

plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language

rum | 1.0 | public | RUM index access method

(5 rows) The information is stored in a table where you will perform the fuzzy query test.

create table test(id int , info text); Write 1 million rows of test data.

insert into test select id, md5(random()::text) from generate_series(1,1000000) t(id);

CREATE INDEX trgm_idx1 ON test USING GIN (info gin_trgm_ops);

耗时 19秒

占用空间 102MB

CREATE INDEX trgm_idx2 ON test USING GiST (info gist_trgm_ops);

耗时 31秒

占用空间 177MB

CREATE INDEX rum_idx1 ON test USING rum ( string_to_tsvector(info) rum_tsvector_ops);

耗时 133秒

占用空间 86MB

The tsvector after the conversion looks like this.

create table test(id int , c1 text, c2 tsvector);

insert into test select id, md5(rn), string_to_tsvector(md5(rn)) from (select random()::text as rn, id from generate_series(1,1000000) t(id)) t;

select * from test limit 10;

postgres=# select * from test limit 10;

id | c1 | c2

----+----------------------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------

1 | f09f48ac93fbb69169f63973a516dcd1 | '0':2 '1':16,27,32 '3':10,21,24 '4':5 '5':26 '6':14,17,20,28 '7':23 '8':6 '9':3,9,15,18,22 'a':7,25 'b':12,13 'c':8,30 'd':29,31 'f':1,4,11,19

2 | 7554a9e3b95c7ff01a54587f914ea9af | '0':16 '1':17,26 '3':8 '4':4,20,27 '5':2,3,11,19,21 '7':1,13,23 '8':22 '9':6,10,25,30 'a':5,18,29,31 'b':9 'c':12 'e':7,28 'f':14,15,24,32

3 | aa21991b6507275b86e43f2ec67ffc57 | '0':11 '1':4,7 '2':3,13,23 '3':21 '4':20 '5':10,15,31 '6':9,18,26 '7':12,14,27,32 '8':17 '9':5,6 'a':1,2 'b':8,16 'c':25,30 'e':19,24 'f':22,28,29

4 | c5e0ff17b63ab43c1b17d7a8c457c341 | '0':4 '1':7,17,19,32 '3':11,15,30 '4':14,26,31 '5':2,27 '6':10 '7':8,20,22,28 '8':24 'a':12,23 'b':9,13,18 'c':1,16,25,29 'd':21 'e':3 'f':5,6

5 | 70b83685fc0a4cbaf08423f686845977 | '0':2,11,18 '2':21 '3':5,22 '4':13,20,28 '5':8,29 '6':6,24,26 '7':1,31,32 '8':4,7,19,25,27 '9':30 'a':12,16 'b':3,15 'c':10,14 'f':9,17,23

6 | 98761ca17c8665787a11c1819a93cf44 | '1':5,8,19,20,22,24 '3':28 '4':31,32 '5':14 '6':4,12,13 '7':3,9,15,17 '8':2,11,16,23 '9':1,25,27 'a':7,18,26 'c':6,10,21,29 'f':30

7 | 9e432254f5de26ddd881709ac49f435b | '0':22 '1':20 '2':5,6,13 '3':4,30 '4':3,8,26,29 '5':7,10,31 '6':14 '7':21 '8':18,19 '9':1,23,27 'a':24 'b':32 'c':25 'd':11,15,16,17 'e':2,12 'f':9,28

8 | 383a710642cfe6ff9d65a587e3158371 | '0':7 '1':6,27,32 '2':10 '3':1,3,26,30 '4':9 '5':20,22,28 '6':8,14,19 '7':5,24,31 '8':2,23,29 '9':17 'a':4,21 'c':11 'd':18 'e':13,25 'f':12,15,16

9 | 9aa45e3355c7d0564e990466e06b9a49 | '0':14,21,26 '3':7,8 '4':4,17,22,31 '5':5,9,10,15 '6':16,23,24,27 '7':12 '9':1,19,20,29,32 'a':2,3,30 'b':28 'c':11 'd':13 'e':6,18,25

10 | f61a4af88c1e9f08af6597d9f93c25f4 | '0':15 '1':3,11 '2':29 '3':27 '4':5,32 '5':20,30 '6':2,19 '7':22 '8':8,9,16 '9':13,21,24,26 'a':4,6,17 'c':10,28 'd':23 'e':12 'f':1,7,14,18,25,31

(10 rows)

In this case, the intermediate result set is large (the matching accuracy is low and lots of records meet the criteria) and the returned result set is also large. Also, LIMIT is not applied in this test case.

postgres=# set pg_hint_plan.debug_print=on;

postgres=# set client_min_messages ='log';

postgres=# /*+ BitmapScan(test trgm_idx1) */ select count(*) from test where info ~ 'a';

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

count

--------

873555

(1 row)

Time: 3426.308 ms

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ BitmapScan(test trgm_idx1) */ select * from test where info ~ 'a';

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------------------------

Bitmap Heap Scan on public.test (cost=28878.04..47944.37 rows=858586 width=37) (actual time=2393.340..3438.434 rows=873555 loops=1)

Output: id, info

Recheck Cond: (test.info ~ 'a'::text)

Rows Removed by Index Recheck: 126445

Heap Blocks: exact=8334

Buffers: shared hit=21335

-> Bitmap Index Scan on trgm_idx1 (cost=0.00..28663.39 rows=858586 width=0) (actual time=2391.618..2391.618 rows=1000000 loops=1)

Index Cond: (test.info ~ 'a'::text)

Buffers: shared hit=13001

Planning time: 0.464 ms

Execution time: 3513.761 ms

(11 rows)

postgres=# /*+ IndexScan(test trgm_idx2) */ select count(*) from test where info ~ 'a';

LOG: available indexes for IndexScan(test): trgm_idx2

LOG: pg_hint_plan:

used hint:

IndexScan(test trgm_idx2)

not used hint:

duplication hint:

error hint:

count

--------

873555

(1 row)

Time: 1692.881 ms

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ IndexScan(test trgm_idx2) */ select * from test where info ~ 'a';

LOG: available indexes for IndexScan(test): trgm_idx2

LOG: pg_hint_plan:

used hint:

IndexScan(test trgm_idx2)

not used hint:

duplication hint:

error hint:

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------------------

Index Scan using trgm_idx2 on public.test (cost=0.41..42825.67 rows=858586 width=37) (actual time=0.328..1719.398 rows=873555 loops=1)

Output: id, info

Index Cond: (test.info ~ 'a'::text)

Rows Removed by Index Recheck: 126445

Buffers: shared hit=962577

Planning time: 0.353 ms

Execution time: 1798.426 ms

(7 rows)

postgres=# /*+ IndexScan(test rum_idx1) */ select count(*) from test where string_to_tsvector(info) @@ string_to_tsquery('a');

LOG: pg_hint_plan:

no hint

count

--------

873555

(1 row)

Time: 623.930 ms

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ IndexScan(test rum_idx1) */ select * from test where string_to_tsvector(info) @@ string_to_tsquery('a');

LOG: pg_hint_plan:

used hint:

not used hint:

IndexScan(test rum_idx1)

duplication hint:

error hint:

LOG: pg_hint_plan:

used hint:

not used hint:

IndexScan(test rum_idx1)

duplication hint:

error hint:

LOG: pg_hint_plan:

used hint:

not used hint:

IndexScan(test rum_idx1)

duplication hint:

error hint:

LOG: available indexes for IndexScan(test): rum_idx1

LOG: pg_hint_plan:

used hint:

IndexScan(test rum_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

--------------------------------------------------------------------------------------------------------------------------------------

Index Scan using rum_idx1 on public.test (cost=4.00..3940.50 rows=5000 width=37) (actual time=303.718..606.763 rows=873555 loops=1)

Output: id, info

Index Cond: (string_to_tsvector(test.info) @@ '''a'''::tsquery)

Buffers: shared hit=9027, temp read=1315 written=1315

Planning time: 1.165 ms

Execution time: 674.612 ms

(6 rows)

Here, the intermediate result set is large (the matching accuracy is low and lots of records meet the criteria) and the returned result set is small. LIMIT is applied in this test case.

If it works, paging is also very effective.

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ BitmapScan(test trgm_idx1) */ select * from test where info ~ 'a' limit 100;

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------------------------------

Limit (cost=28878.04..28880.26 rows=100 width=37) (actual time=2404.025..2404.156 rows=100 loops=1)

Output: id, info

Buffers: shared hit=13002

-> Bitmap Heap Scan on public.test (cost=28878.04..47944.37 rows=858586 width=37) (actual time=2404.023..2404.139 rows=100 loops=1)

Output: id, info

Recheck Cond: (test.info ~ 'a'::text)

Rows Removed by Index Recheck: 10

Heap Blocks: exact=1

Buffers: shared hit=13002

-> Bitmap Index Scan on trgm_idx1 (cost=0.00..28663.39 rows=858586 width=0) (actual time=2402.336..2402.336 rows=1000000 loops=1)

Index Cond: (test.info ~ 'a'::text)

Buffers: shared hit=13001

Planning time: 0.437 ms

Execution time: 2404.330 ms

(14 rows)

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ IndexScan(test trgm_idx2) */ select * from test where info ~ 'a' limit 100;

LOG: available indexes for IndexScan(test): trgm_idx2

LOG: pg_hint_plan:

used hint:

IndexScan(test trgm_idx2)

not used hint:

duplication hint:

error hint:

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------------------

Limit (cost=0.41..5.40 rows=100 width=37) (actual time=0.304..0.610 rows=100 loops=1)

Output: id, info

Buffers: shared hit=125

-> Index Scan using trgm_idx2 on public.test (cost=0.41..42825.67 rows=858586 width=37) (actual time=0.301..0.593 rows=100 loops=1)

Output: id, info

Index Cond: (test.info ~ 'a'::text)

Rows Removed by Index Recheck: 12

Buffers: shared hit=125

Planning time: 0.359 ms

Execution time: 0.680 ms

(10 rows)

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ IndexScan(test rum_idx1) */ select * from test where string_to_tsvector(info) @@ string_to_tsquery('a') limit 100;

LOG: pg_hint_plan:

no hint

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------------------

Limit (cost=4.00..82.73 rows=100 width=37) (actual time=299.327..299.392 rows=100 loops=1)

Output: id, info

Buffers: shared hit=693, temp read=2 written=1315

-> Index Scan using rum_idx1 on public.test (cost=4.00..3940.50 rows=5000 width=37) (actual time=299.323..299.371 rows=100 loops=1)

Output: id, info

Index Cond: (string_to_tsvector(test.info) @@ '''a'''::tsquery)

Buffers: shared hit=693, temp read=2 written=1315

Planning time: 0.353 ms

Execution time: 303.170 ms

(9 rows)

In this case, the intermediate result is small (the matching accuracy is high).

postgres=# select * from test where info ~ '2e9a2c';

id | info

-------+----------------------------------

31 | e5e51acb3802e9a2c6318f6f2554ee1e

47924 | b1562e9a2cced50c2ea8629d03b64416

(2 rows)

Time: 295.222 ms

postgres=# /*+ BitmapScan(test trgm_idx1) */ select count(*) from test where info ~ '2e9a2c';

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

count

-------

2

(1 row)

Time: 3.835 ms

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ BitmapScan(test trgm_idx1) */ select * from test where info ~ '2e9a2c';

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------

Bitmap Heap Scan on public.test (cost=20.77..122.03 rows=100 width=37) (actual time=2.488..2.494 rows=2 loops=1)

Output: id, info

Recheck Cond: (test.info ~ '2e9a2c'::text)

Heap Blocks: exact=2

Buffers: shared hit=26

-> Bitmap Index Scan on trgm_idx1 (cost=0.00..20.75 rows=100 width=0) (actual time=2.467..2.467 rows=2 loops=1)

Index Cond: (test.info ~ '2e9a2c'::text)

Buffers: shared hit=24

Planning time: 0.549 ms

Execution time: 2.543 ms

(10 rows)

postgres=# /*+ IndexScan(test trgm_idx2) */ select count(*) from test where info ~ '2e9a2c';

LOG: available indexes for IndexScan(test): trgm_idx2

LOG: pg_hint_plan:

used hint:

IndexScan(test trgm_idx2)

not used hint:

duplication hint:

error hint:

count

-------

2

(1 row)

Time: 296.805 ms

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ IndexScan(test trgm_idx2) */ select * from test where info ~ '2e9a2c';

LOG: available indexes for IndexScan(test): trgm_idx2

LOG: pg_hint_plan:

used hint:

IndexScan(test trgm_idx2)

not used hint:

duplication hint:

error hint:

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------

Index Scan using trgm_idx2 on public.test (cost=0.41..105.16 rows=100 width=37) (actual time=55.568..293.998 rows=2 loops=1)

Output: id, info

Index Cond: (test.info ~ '2e9a2c'::text)

Buffers: shared hit=15888

Planning time: 0.423 ms

Execution time: 294.103 ms

(6 rows)

Time: 295.444 ms

postgres=# /*+ IndexScan(test rum_idx1) */ select count(*) from test where string_to_tsvector(info) @@ string_to_tsquery('2e9a2c');

LOG: pg_hint_plan:

no hint

count

-------

2

(1 row)

Time: 891.093 ms

The following content shows the complete execution plan. Pay attention to the number of rows evaluated and the evaluation cost, as it is later used to decide which index is better.

postgres=# explain (analyze,verbose,timing,costs,buffers) /*+ IndexScan(test rum_idx1) */ select * from test where string_to_tsvector(info) @@ string_to_tsquery('2e9a2c');

LOG: available indexes for IndexScan(test): rum_idx1

LOG: pg_hint_plan:

used hint:

IndexScan(test rum_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------

Index Scan using rum_idx1 on public.test (cost=20.00..22.01 rows=1 width=37) (actual time=833.852..833.856 rows=2 loops=1)

Output: id, info

Index Cond: (string_to_tsvector(test.info) @@ '''2'' <-> ''e'' <-> ''9'' <-> ''a'' <-> ''2'' <-> ''c'''::tsquery)

Buffers: shared hit=5027

Planning time: 0.432 ms

Execution time: 864.190 ms

(6 rows)

alter table test alter column SET STATISTICS 1000;

vacuum analyze test;

explain $QUERY;

If LIMIT is not applied, choose the number of the rows on the top-level node. If LIMIT is applied, choose the number of rows on the second node. For more complicated queries, for example, a query that has multiple criteria, it is better to find corresponding nodes by using hints to find indexes.

Consider the following example:

postgres=# explain /*+ BitmapScan(test trgm_idx1) */ select * from test where info ~ '2e9a2c';

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

---------------------------------------------------------------------------

Bitmap Heap Scan on test (cost=20.77..122.02 rows=100 width=37)

Recheck Cond: (info ~ '2e9a2c'::text)

-> Bitmap Index Scan on trgm_idx1 (cost=0.00..20.75 rows=100 width=0)

Index Cond: (info ~ '2e9a2c'::text)

(4 rows)

postgres=# explain /*+ BitmapScan(test trgm_idx1) */ select * from test where info ~ '2e9';

LOG: available indexes for BitmapScan(test): trgm_idx1

LOG: pg_hint_plan:

used hint:

BitmapScan(test trgm_idx1)

not used hint:

duplication hint:

error hint:

QUERY PLAN

----------------------------------------------------------------------------

Bitmap Heap Scan on test (cost=59.30..5079.87 rows=7006 width=37)

Recheck Cond: (info ~ '2e9'::text)

-> Bitmap Index Scan on trgm_idx1 (cost=0.00..57.54 rows=7006 width=0)

Index Cond: (info ~ '2e9'::text)

(4 rows)

On the basis of the number of intermediate results and aforementioned suggestions, choose a proper hint and start executing your query.

Build your own PostgreSQL solution on Alibaba Cloud with ApsaraDB for RDS PostgreSQL.

Efficiently Implementing Full-table and Full-field Fuzzy Search in Milliseconds with PostgreSQL

Alibaba Clouder - December 11, 2017

digoal - September 12, 2019

digoal - September 12, 2019

digoal - October 22, 2018

digoal - December 11, 2019

digoal - December 23, 2020

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal