This is a tutorial on how to run the open-source project Apache Airflow on Alibaba Cloud with ApsaraDB (Alibaba Cloud Database). We also show a simple data migration task deployed and run in Airflow to migrate data between two databases.

You can access the tutorial artifact, including the deployment script (Terraform), related source code, sample data, and instruction guidance from the Github project.

Please refer to this link for more tutorials related to Alibaba Cloud Database.

Apache Airflow is a platform created by the community to author, schedule, and monitor workflows programmatically.

Airflow requires a database. If you are experimenting and learning Airflow, you can stick with the default SQLite option or single node PostgreSQL built-in Docker edition. We will show the steps of deployment working with Alibaba Cloud Database to enhance the database's high availability behind the Apache Airflow.

Airflow supports PostgreSQL and MySQL. You can use one of the following databases on Alibaba Cloud:

In this tutorial, we will show the case of using RDS PostgreSQL high availability edition to replace the single node PostgreSQL built-in Docker edition for more stable production purposes.

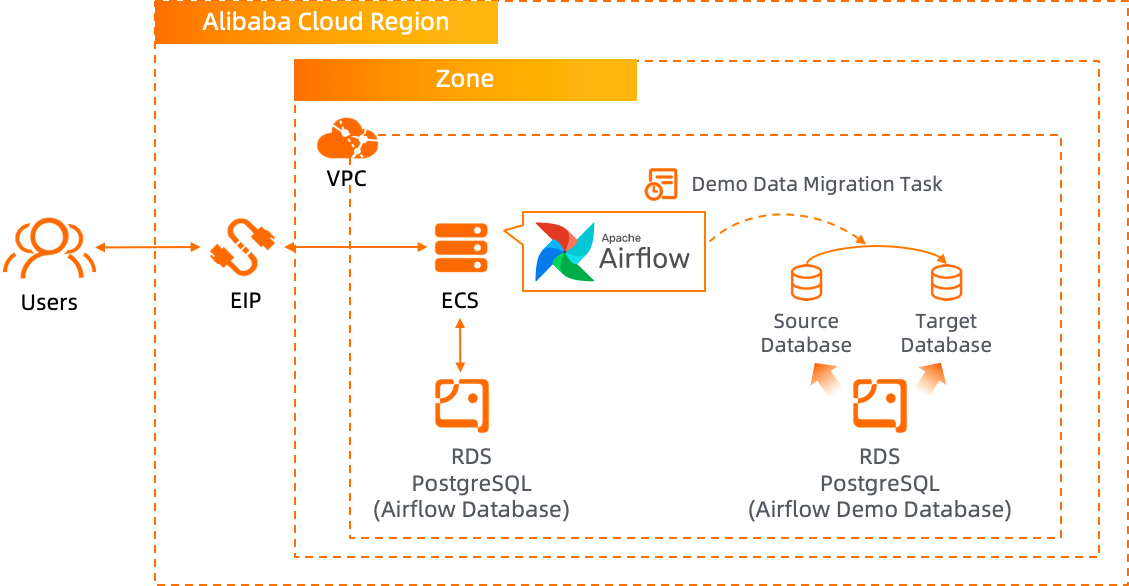

Deployment Architecture:

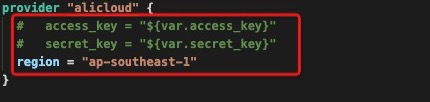

Run the terraform script to initialize the resources. (In this tutorial, we use RDS PostgreSQL as backend database of Airflow and another RDS PostgreSQL as the demo database showing the data migration via Airflow task, so ECS and 2 RDS PostgreSQL instances are included in the Terraform script.) Please specify the necessary information and region to deploy:

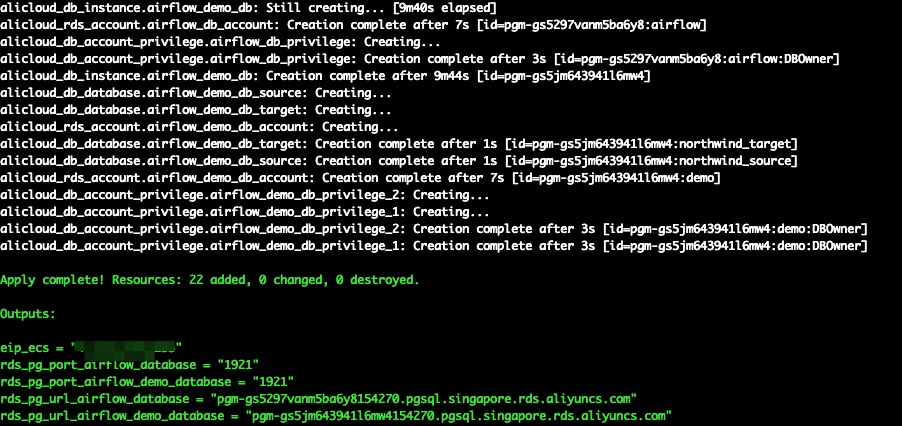

After the Terraform script execution is finished, the ECS and RDS PostgreSQL instances information are listed below:

rds_pg_url_airflow_database: The connection URL of the backend database for Airflowrds_pg_url_airflow_demo_database: The connection URL of the demo database using AirflowThe database port for RDS PostgreSQL is 1921 by default.

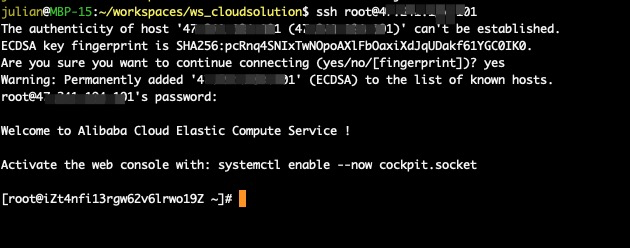

Please log on to ECS with ECS EIP:

ssh root@<ECS_EIP>

Download and execute the script setup.sh via the following commands to setup Airflow on ECS:

cd ~

wget https://raw.githubusercontent.com/alibabacloud-howto/opensource_with_apsaradb/main/apache-airflow/setup.sh

sh setup.sh

cd ~/airflow

mkdir ./dags ./logs ./plugins

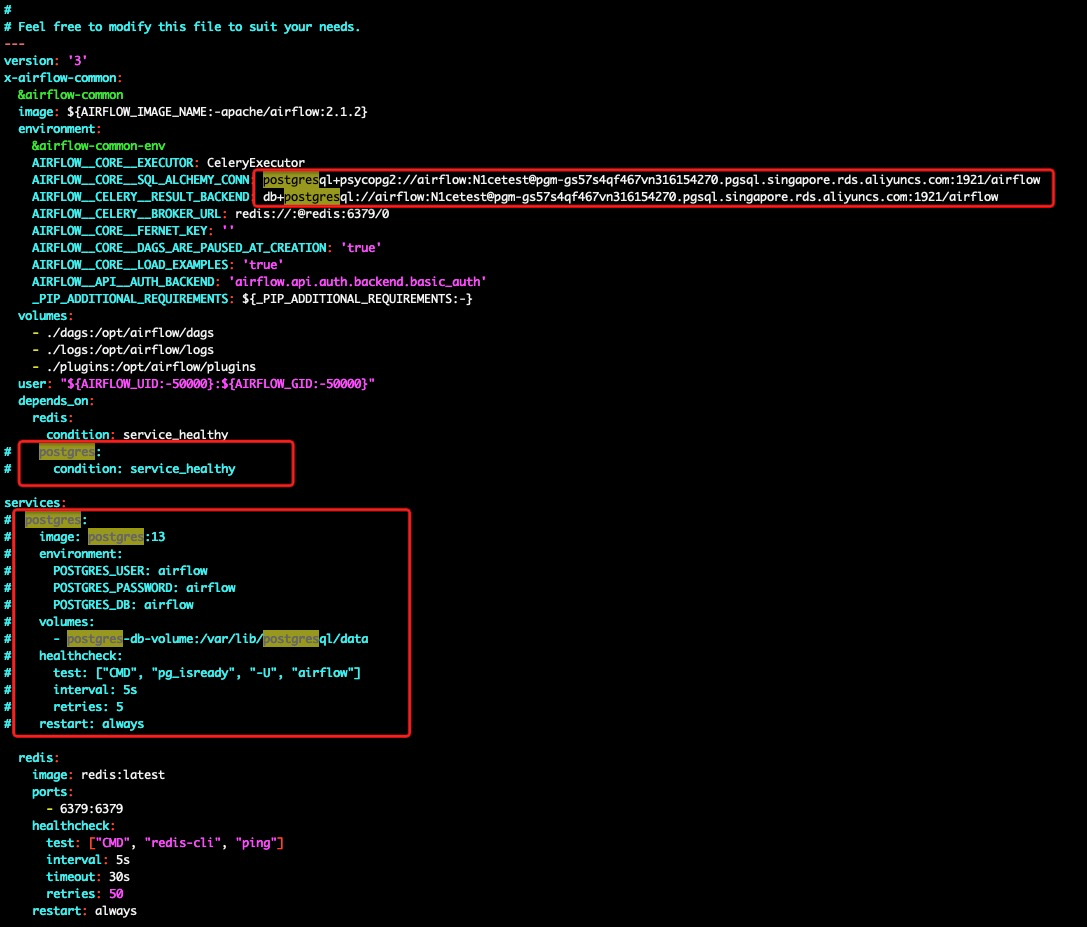

echo -e "AIRFLOW_UID=$(id -u)\nAIRFLOW_GID=0" > .envEdit the downloaded docker-compose.yaml file to set the backend database as the RDS PostgreSQL:

cd ~/airflow

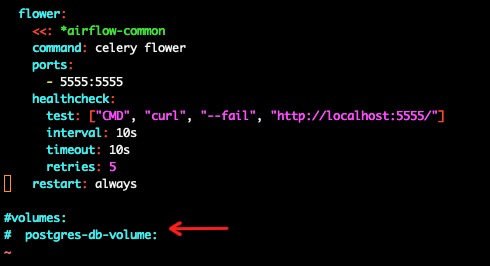

vim docker-compose.yamlUse the connection string of rds_pg_url_airflow_database in Step 1. Comment on the part related to postgres:

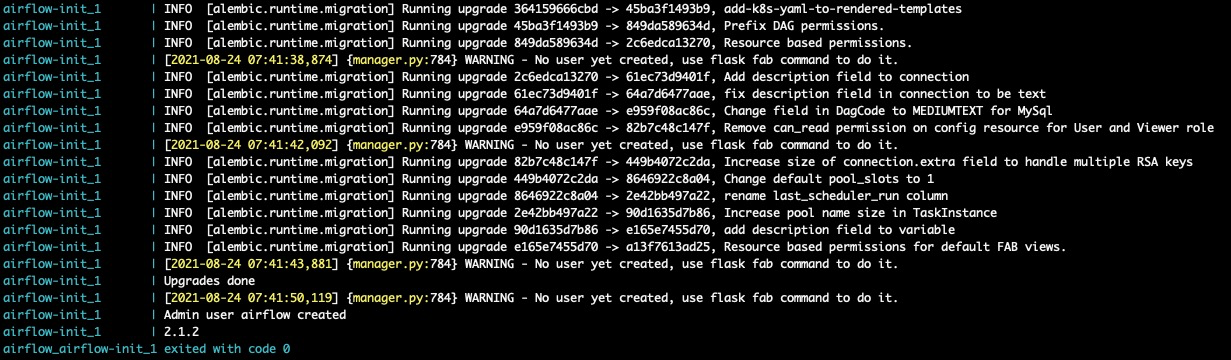

Execute the following command to initialize Airflow docker:

docker-compose up airflow-init

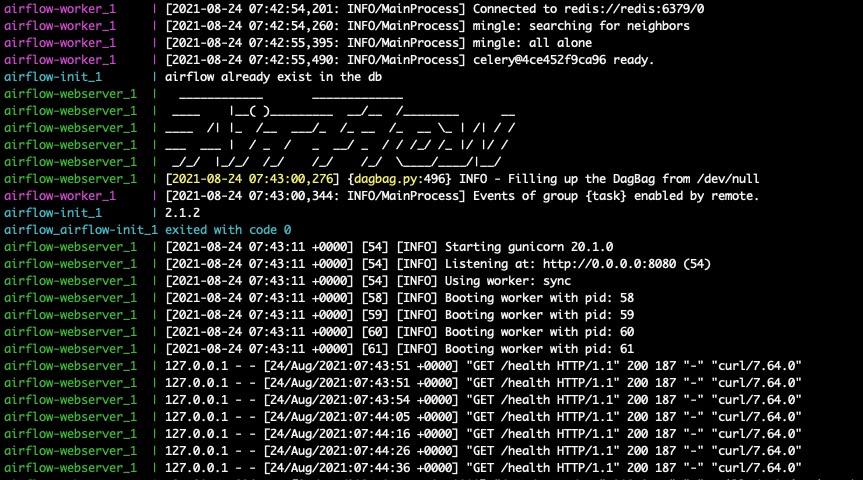

Execute the following command to start Airflow:

docker-compose up

Now, Airflow has started successfully. Please visit the following URL (replace <ECS_EIP> with the EIP of the ECS) to access the Airflow web console:

http://<ECS_EIP>:8080The default account has the login airflow and the password airflow:

Next, let's move on to the first data migration task on Airflow.

Please log on to ECS with ECS EIP in another terminal window:

ssh root@<ECS_EIP>Download and setup the PostgreSQL client to communicate with the demo database:

cd ~

wget http://mirror.centos.org/centos/8/AppStream/x86_64/os/Packages/compat-openssl10-1.0.2o-3.el8.x86_64.rpm

rpm -i compat-openssl10-1.0.2o-3.el8.x86_64.rpm

wget http://docs-aliyun.cn-hangzhou.oss.aliyun-inc.com/assets/attach/181125/cn_zh/1598426198114/adbpg_client_package.el7.x86_64.tar.gz

tar -xzvf adbpg_client_package.el7.x86_64.tar.gzFetch the database DDL and DML SQL files:

cd ~/airflow

wget https://raw.githubusercontent.com/alibabacloud-howto/opensource_with_apsaradb/main/apache-airflow/northwind_ddl.sql

wget https://raw.githubusercontent.com/alibabacloud-howto/opensource_with_apsaradb/main/apache-airflow/northwind_data_source.sql

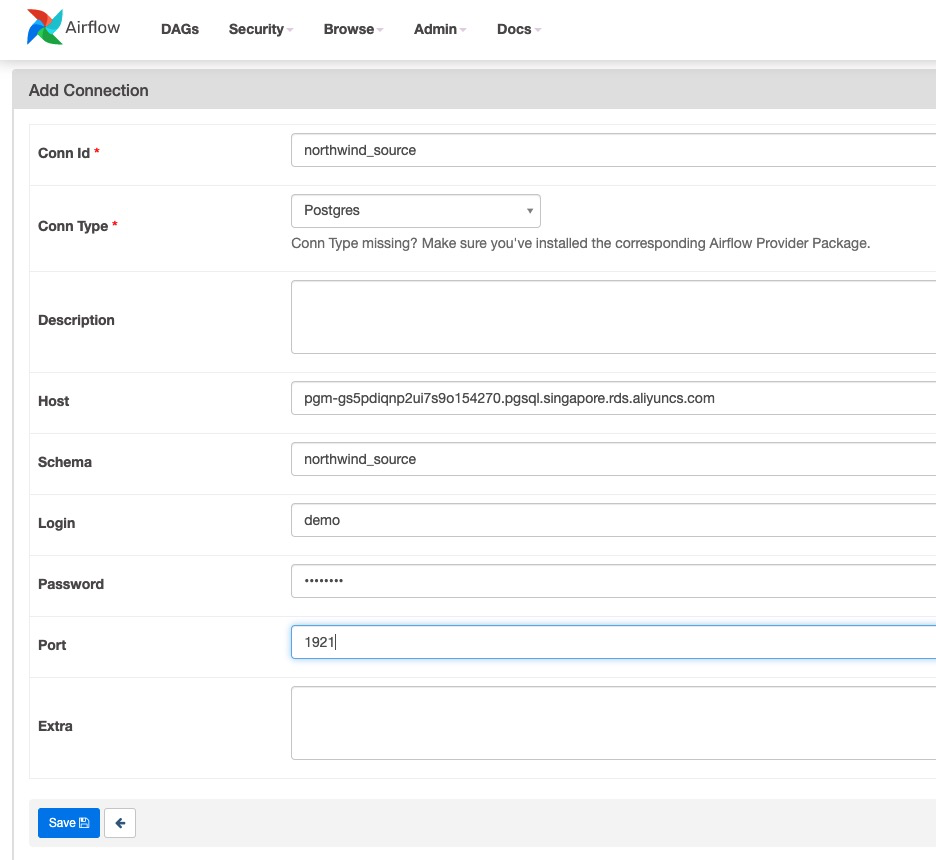

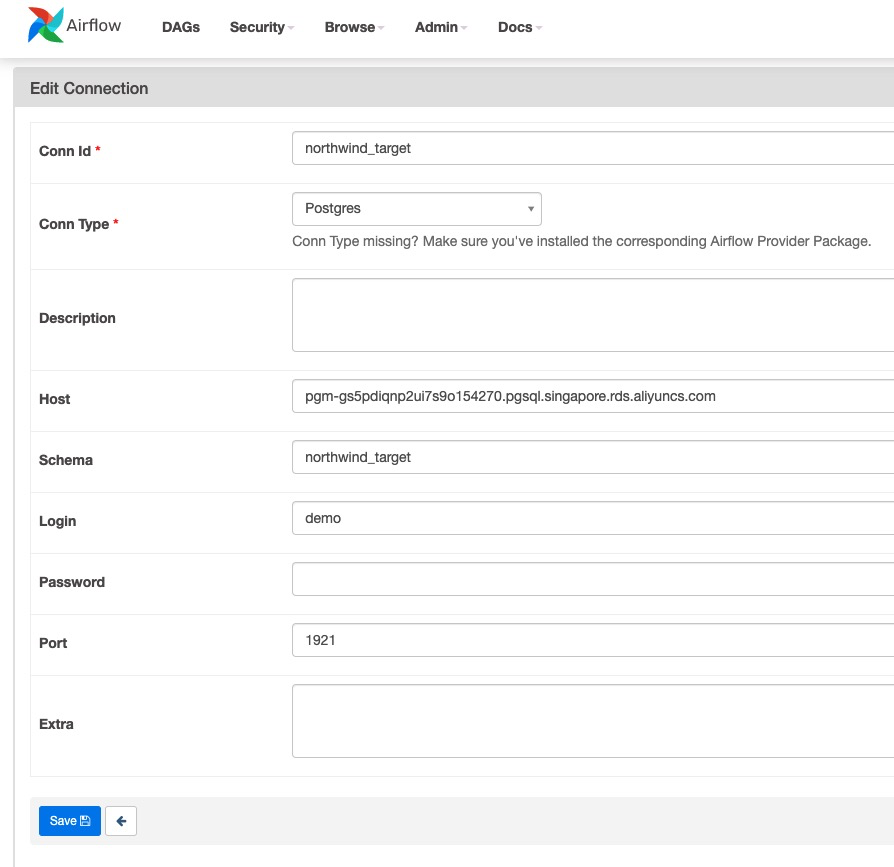

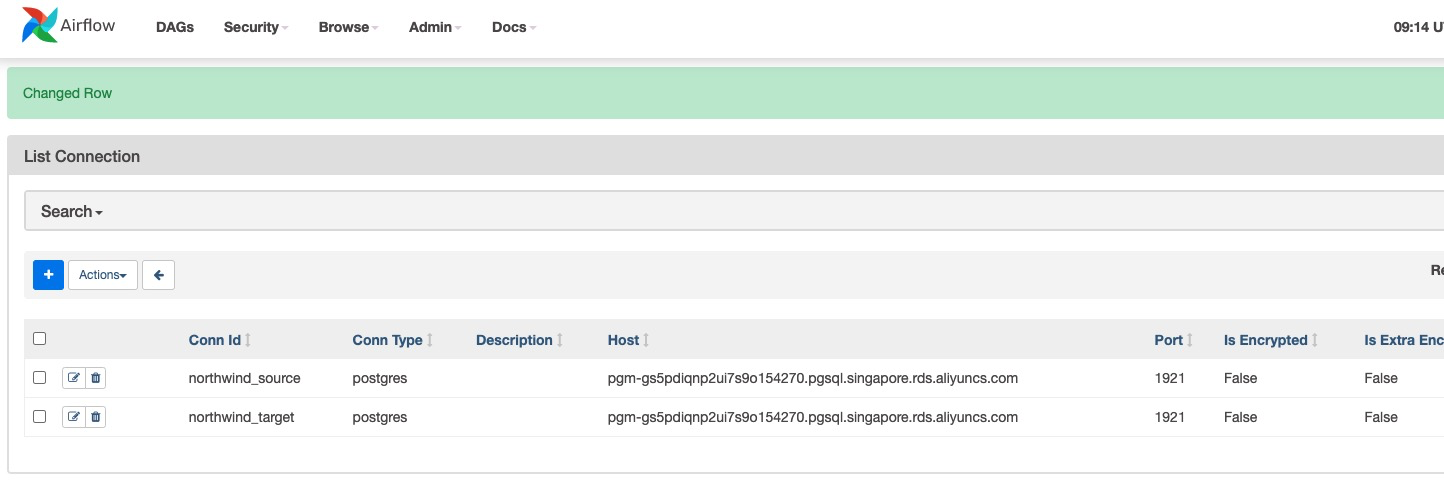

wget https://raw.githubusercontent.com/alibabacloud-howto/opensource_with_apsaradb/main/apache-airflow/northwind_data_target.sqlThere are two databases (source: northwind_source and target: northwind_target) working as the source and target in this data migration demo.

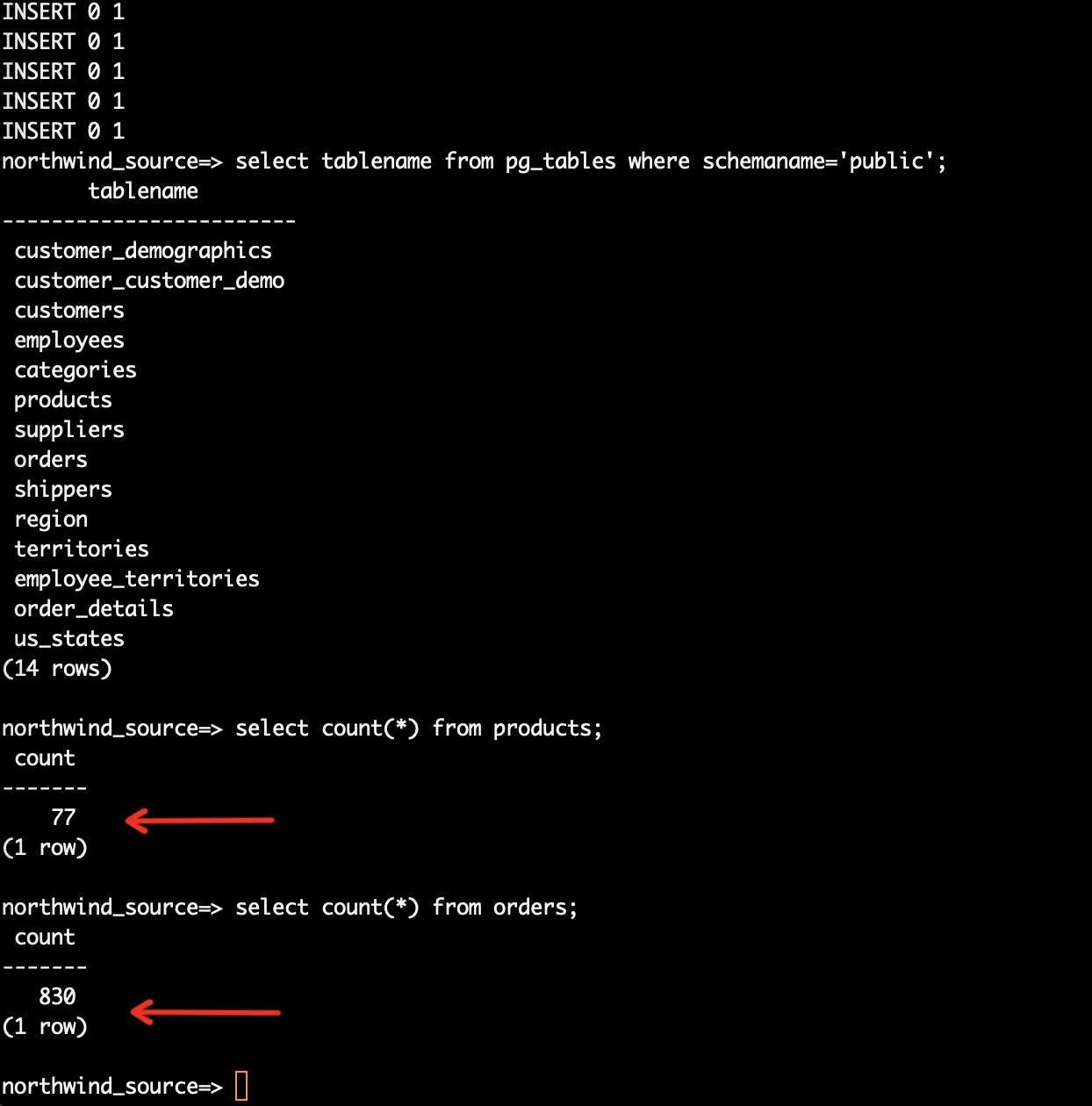

Connect to the demo source database northwind_source, create the tables (northwind_ddl.sql), and load the sample data (northwind_data_source.sql).

Replace <rds_pg_url_airflow_demo_database> with the demo RDS PostgreSQL connection string.

We've set up the demo database account as username demo and password N1cetest.

Execute the following commands for the source database:

cd ~/adbpg_client_package/bin

./psql -h<rds_pg_url_airflow_demo_database> -p1921 -Udemo northwind_source

\i ~/airflow/northwind_ddl.sql

\i ~/airflow/northwind_data_source.sql

select tablename from pg_tables where schemaname='public';

select count(*) from products;

select count(*) from orders;

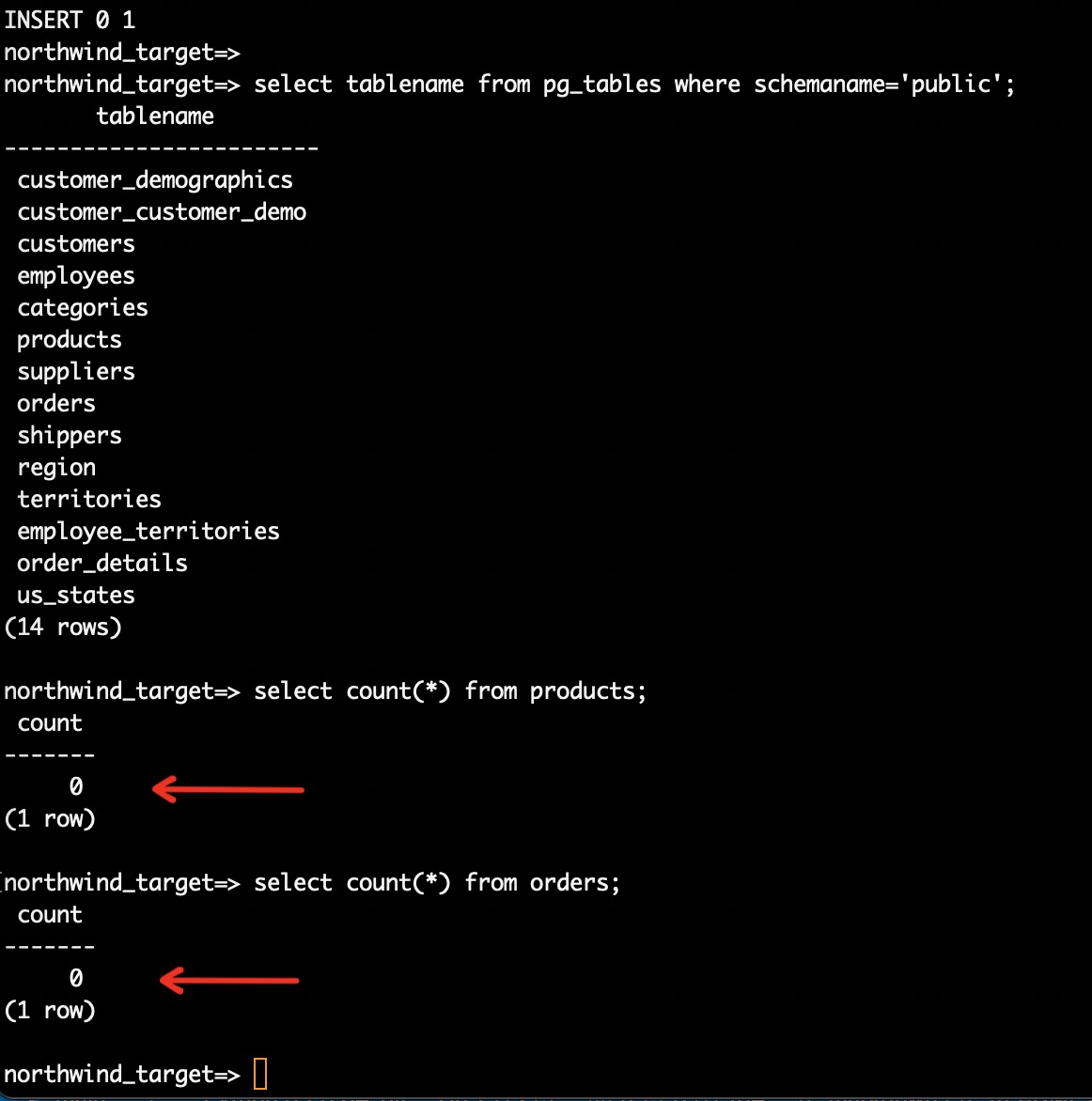

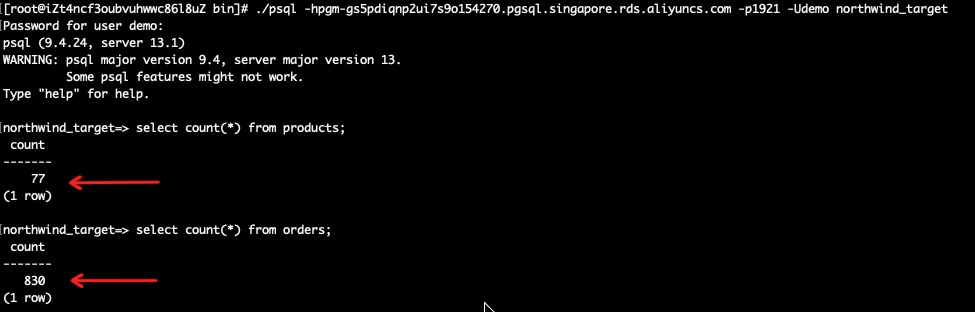

Execute the following commands for the target database:

./psql -h<rds_pg_url_airflow_demo_database> -p1921 -Udemo northwind_target

\i ~/airflow/northwind_ddl.sql

\i ~/airflow/northwind_data_target.sql

select tablename from pg_tables where schemaname='public';

select count(*) from products;

select count(*) from orders;

We can see that the tables' products and orders in the target database are empty. Later we will use the migration task running in Airflow to migrate data from the source database to the target database.

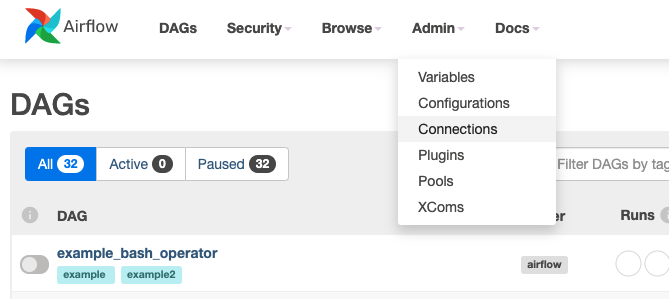

First, go to the Airflow web console (Admin :arrow_right: Connections) to add database connections to the source and target databases, respectively.

Download and deploy (put it into the dags directory) the migration task python script into Airflow:

cd ~/airflow/dags

wget https://raw.githubusercontent.com/alibabacloud-howto/opensource_with_apsaradb/main/apache-airflow/northwind_migration.py

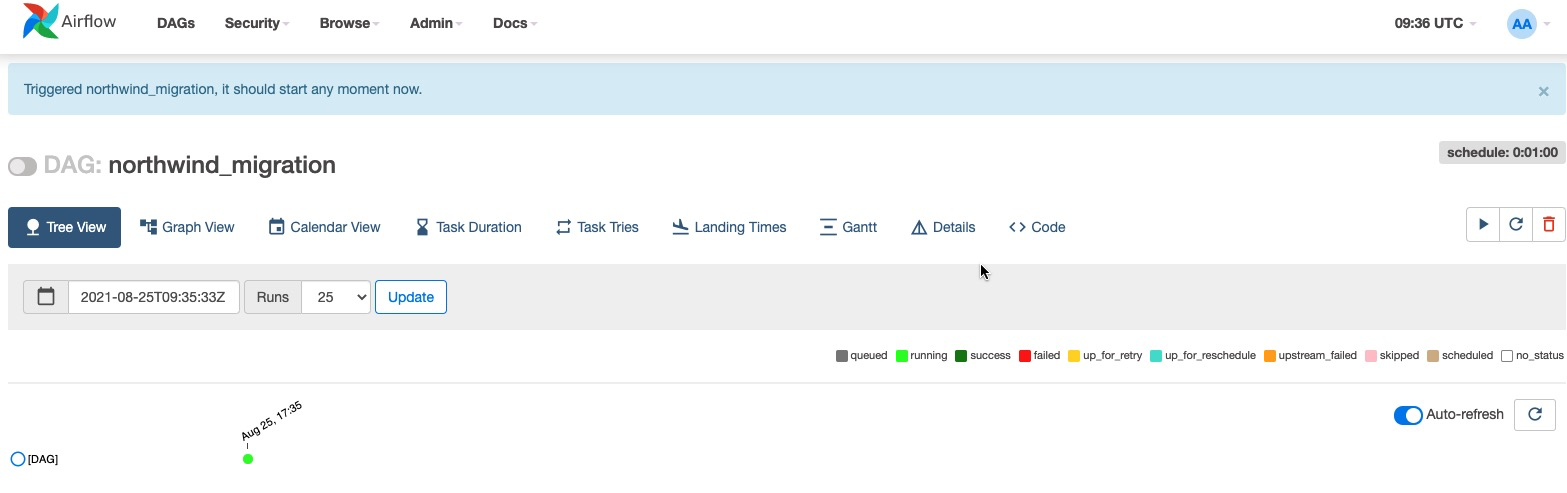

The DAG task in this demo finds the new product_id and order_id's in database northwind_source and then updates the same product and order tables in database northwind_target with the rows greater than that maximum id. The job is scheduled to run every minute starting on today's date (when you run this demo, please update accordingly.)

The demo airflow DAG python script originated from here. We've done some modifications.

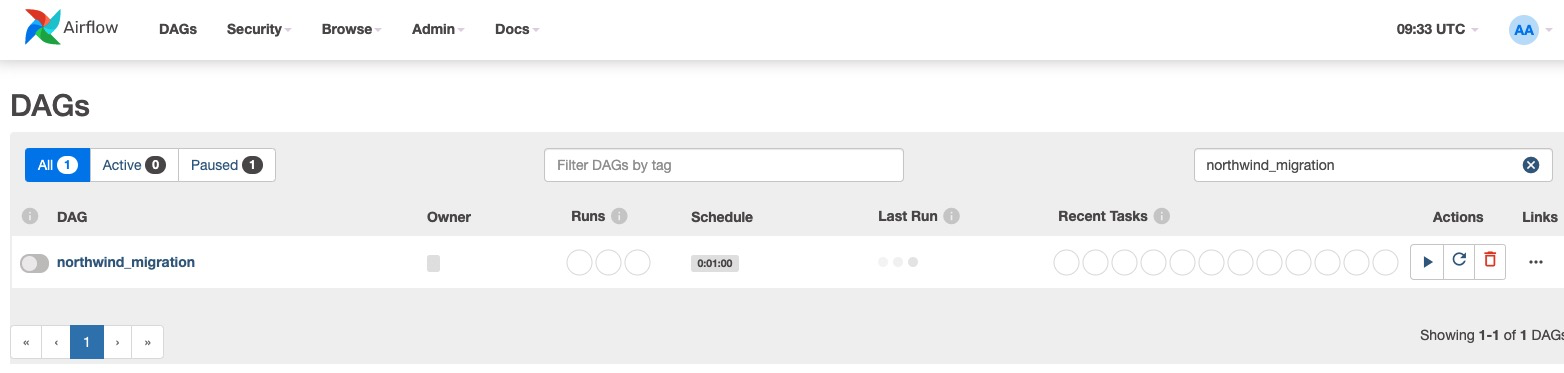

If the task loaded successfully, the DAG task will be shown on the web console.

Since the migration task is running continuously, we can go to the target database and check the migrated data.

Rose Bowl Games Scoreboard Powered by Redis & MySQL - Provisioning Resources with Terraform

Alibaba Clouder - November 15, 2018

andy.zh - November 7, 2019

Alibaba EMR - October 12, 2021

Apache Flink Community China - September 27, 2020

Apache Flink Community - April 25, 2025

JDP - June 17, 2022

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB