By Wang Zhe, Product Manager of DataWorks

This article is a part of the One-stop Big Data Development and Governance DataWorks Use Collection.

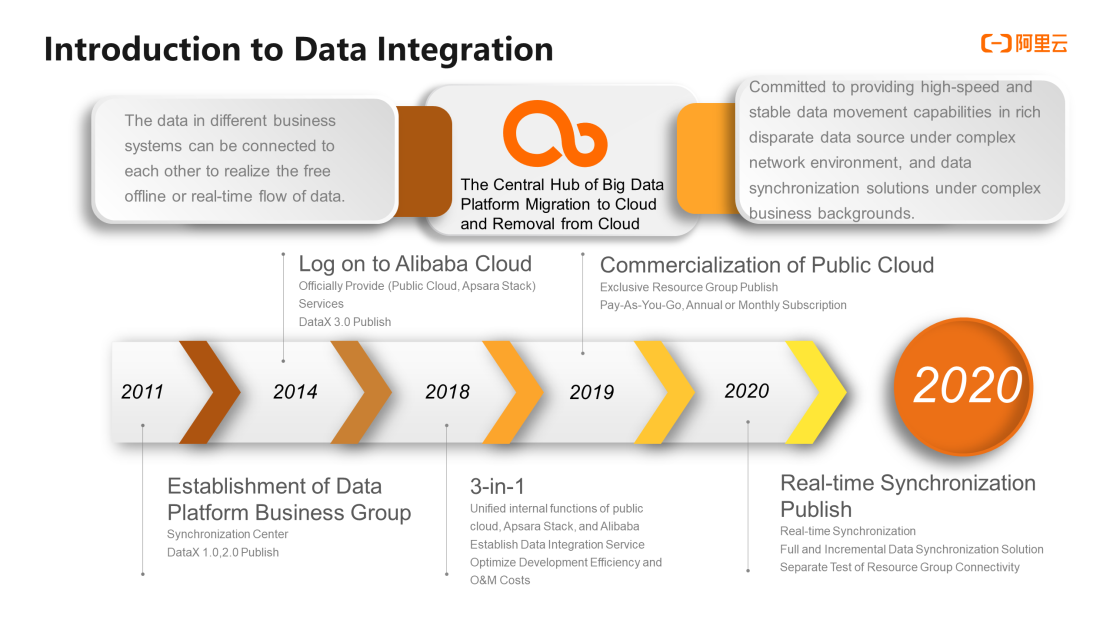

Data Integration is the central hub through which a big data platform moves data into and out of the cloud. Its main job is to connect the data held in different business systems, let that data flow freely in offline or real-time mode, and use network solutions to migrate data to the cloud or export it from the cloud.

DataX 1.0 and 2.0 were released in 2011, and DataX 3.0 was officially released in 2014. In 2018, the three versions were merged, unifying the public cloud, Apsara Stack, and Alibaba-internal functions into a single Data Integration service that improves development efficiency and reduces O&M costs. In 2019, exclusive resource groups were released, and Data Integration was formally commercialized. In 2020, real-time synchronization was released, together with the full and incremental synchronization solution and standalone connectivity testing for resource groups.

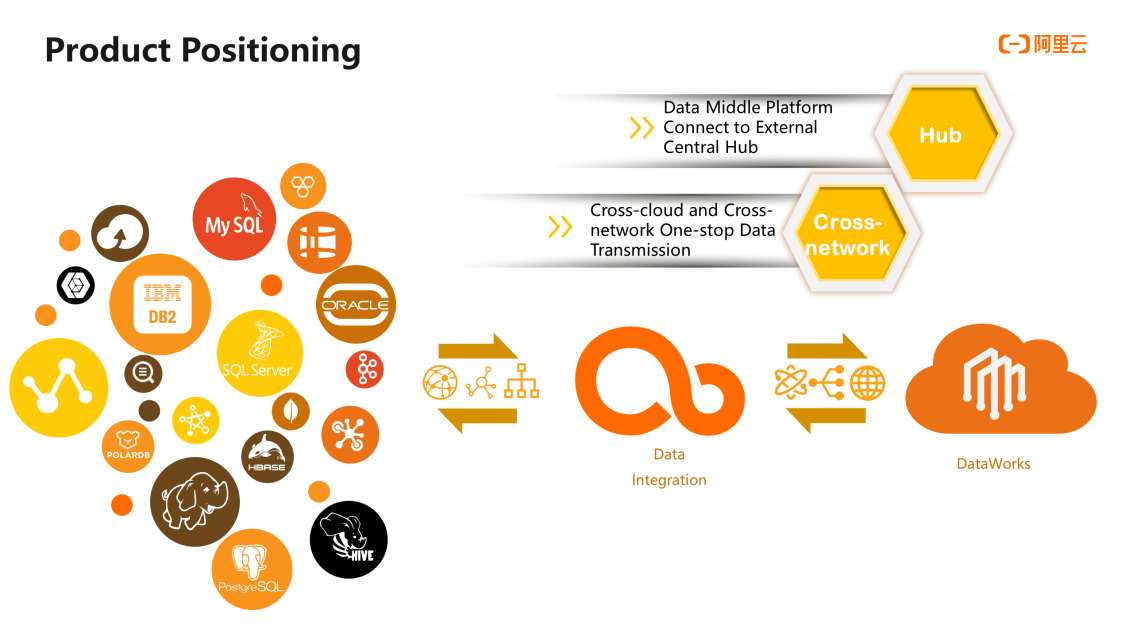

Data Integration has two major functions. First, it acts as the hub through which the data middle platform connects to external systems, and it carries the data flow among the connected cloud systems. Second, it is a one-stop data transmission service across clouds and networks: all data can be accessed through Data Integration.

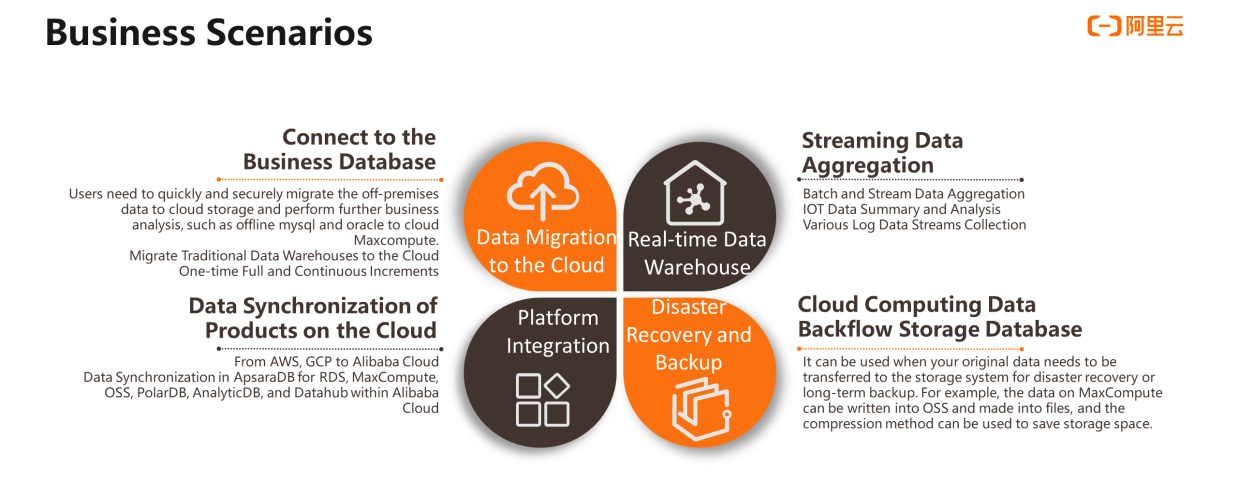

Business Scenario 1 – Migrate to the Cloud: Use it when you need to move data quickly and securely to cloud storage for further business analysis, such as from on-premises MySQL or Oracle to MaxCompute, Hologres, or E-MapReduce in the cloud. Cloud migration supports a one-time full load or continuous incremental loads, in offline or real-time mode.

Business Scenario 2 – Real-Time Data Warehouses: It supports the aggregation of batch or streaming data into real-time warehouses. It can also be used for data aggregation and analysis related to IoT and the collection and analysis of log data streams.

Business Scenario 3 – Disaster Recovery Backup: Use it when your original data needs to be transferred to a storage system for disaster recovery or long-term backup. For example, data in MaxCompute can be written to OSS as files and compressed to save storage space. When needed, the OSS files can be restored to the original tables or other forms.

Business Scenario 4 – Platform Convergence: You can synchronize data with products on third-party clouds, such as AWS and GCP. Data inside Alibaba Cloud can also be synchronized among products such as ApsaraDB for RDS, MaxCompute, OSS, PolarDB, AnalyticDB, and Datahub.

Coverage of Regions and Industries: In terms of geographical coverage, eight regions have been opened in China, including the Hong Kong area, and eleven regions have been opened internationally, which nearly matches the regional coverage of Alibaba Cloud itself. In terms of industry distribution, it covers common fields, including government, finance, insurance, energy, power, manufacturing, Internet, and retail, and it has extensive experience across their business scenarios.

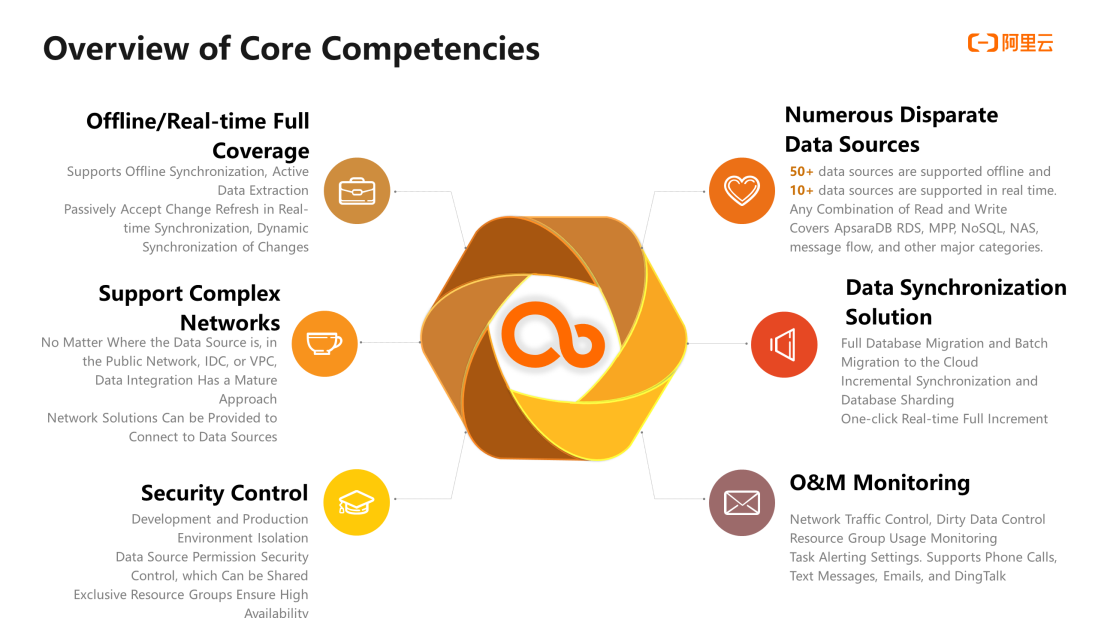

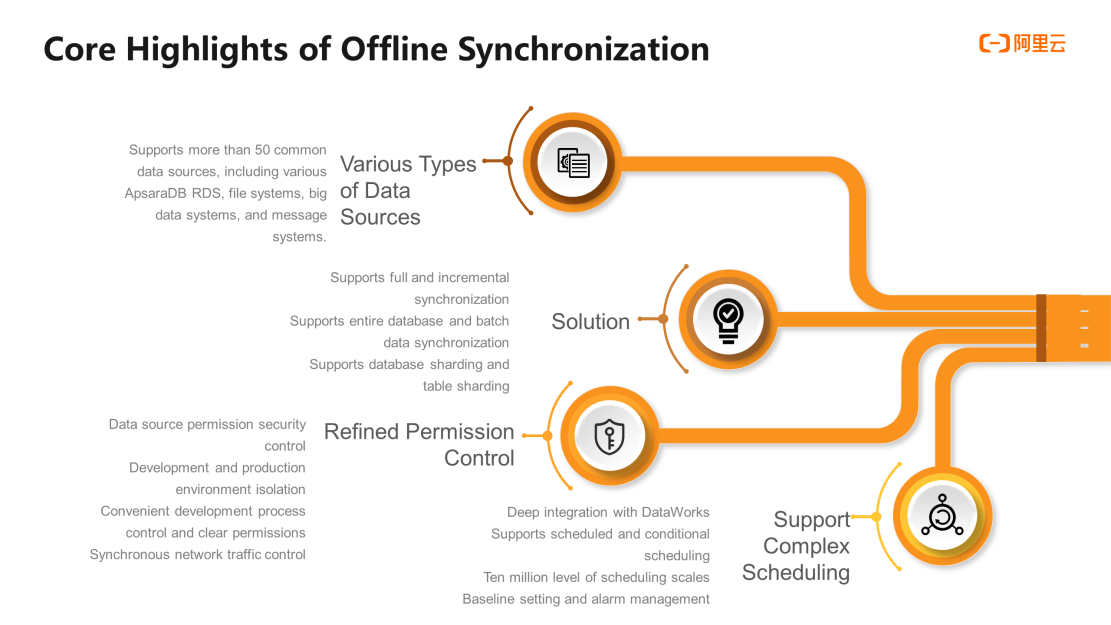

The first core capability is full coverage of offline and real-time scenarios. Since 2020, Data Integration has supported real-time data synchronization, so it covers almost all data flow scenarios: offline data synchronization, active data extraction, passive real-time data reception, detection of data changes, and real-time synchronization.

The second core capability is support for a wide range of disparate data sources: more than 50 for offline synchronization and more than 10 for real-time synchronization. Readers and writers can be combined freely, and the supported sources cover ApsaraDB RDS, MPP, NoSQL, NAS, and message streams.

The third core capability is support for complex networks. Wherever a data source is located, whether on a public network, in an IDC, or in a VPC, Data Integration has a mature solution for connecting to it.

The fourth core capability is ready-made synchronization solutions, including full database migration, batch cloud migration, incremental synchronization, synchronization of sharded databases and tables, and one-click real-time full and incremental synchronization. These have been productized and need only a simple configuration.

The fifth core capability is security control. Development and production environments can be isolated for both tasks and data sources. Data source permissions are controlled, and a data source can be shared with specific people or projects, which allows fine-grained access control.

The sixth core capability is O&M monitoring. It covers network traffic control, dirty data control, and resource group usage monitoring, and it supports task alerts by phone call, SMS message, email, and DingTalk.

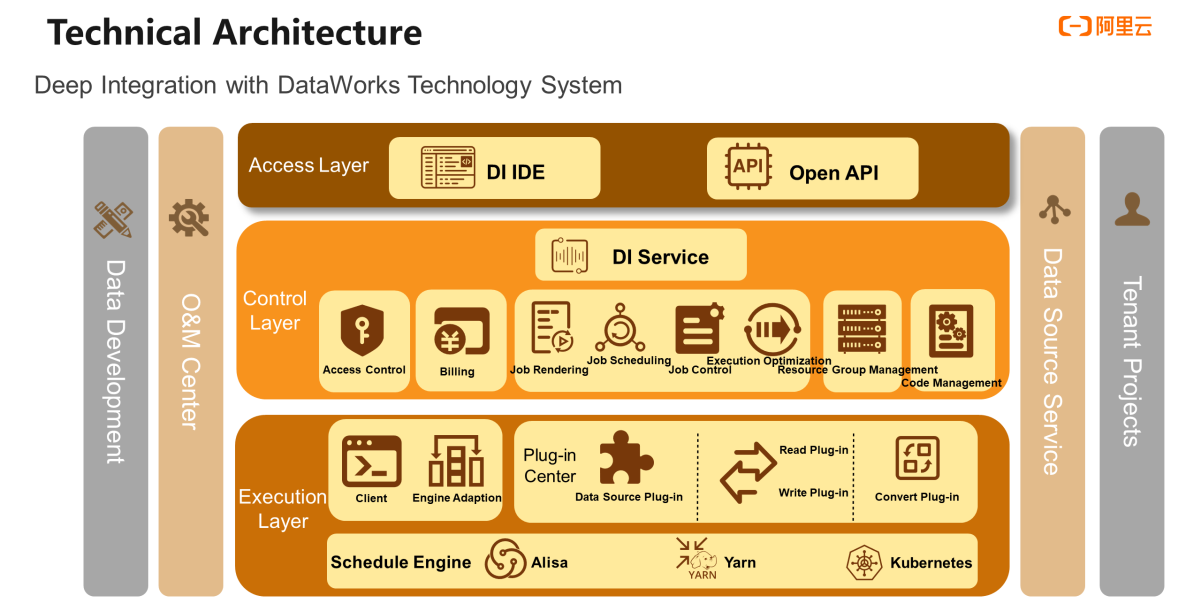

Data Integration is deeply integrated with DataWorks.

The bottom layer is the execution layer. It can connect to the Alisa engine of DataWorks, and it can also connect directly to the Yarn engine and Kubernetes. Above it sits a center that manages the data source plug-ins, which can be extended to add read and write support for more kinds of data.

At the control layer, you can perform rendering, scheduling, control, execution, and resource group management for jobs. The control layer also reports detailed metering and billing information and is deeply integrated with the permission control of DataWorks. These tasks rely on DataWorks for code management.

The access layer is further up. Visual development is provided through the IDE of DataWorks. Development through OpenAPI is also supported: besides using the DataWorks interface, you can call OpenAPI to package Data Integration capabilities into your own functions or products. In this way, upstream and downstream systems are connected to the capabilities of the major DataWorks modules.

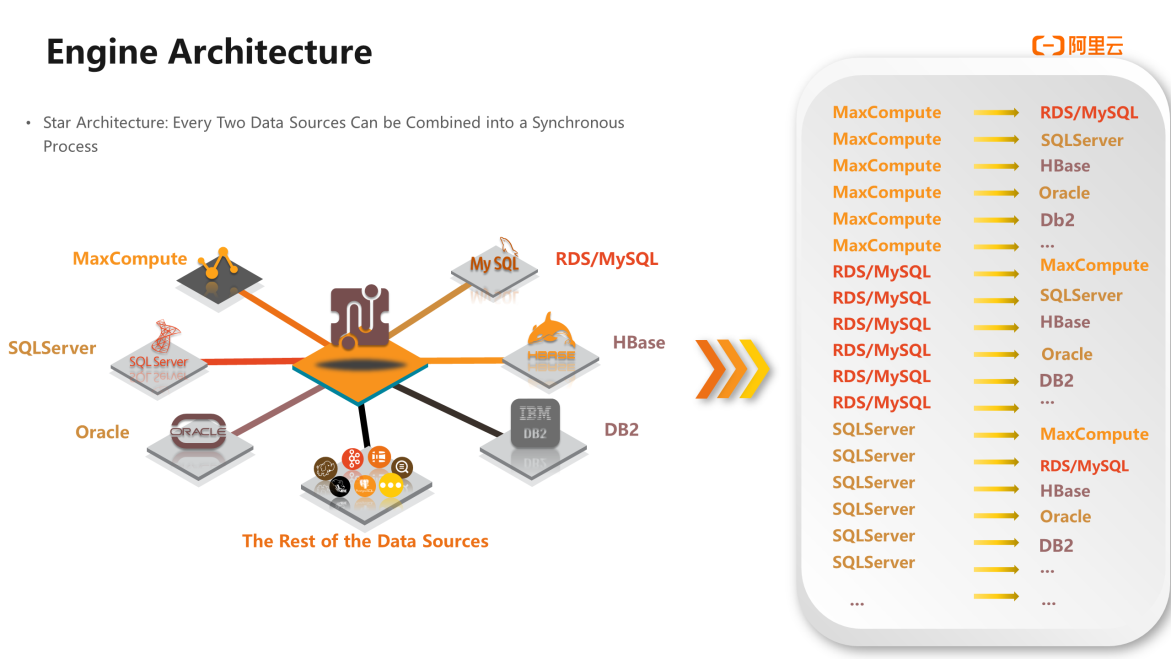

Data Integration adopts a new engine architecture. Once a data source is connected to the engine, it can form a one-to-one or many-to-one synchronization process with any other connected data source. Any two data sources can be paired, so each additional data source adds dozens of new possible combinations. This is what makes data processes scalable.
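The pairing idea can be illustrated with a small sketch. It assumes a hypothetical registry in which every data source contributes one read function and one write function; the names `register`, `sync`, and `combinations` are made up for this example and are not part of Data Integration or DataX.

```python
from itertools import product
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]

# Hypothetical plug-in registries: source name -> read / write function.
readers: Dict[str, Callable[[], Iterable[Record]]] = {}
writers: Dict[str, Callable[[Iterable[Record]], int]] = {}


def register(name: str, rows: List[Record]) -> None:
    """Register an in-memory stand-in for a data source as reader and writer."""
    def read() -> Iterable[Record]:
        return list(rows)  # copy, so reading never observes concurrent writes

    def write(records: Iterable[Record]) -> int:
        batch = list(records)
        rows.extend(batch)
        return len(batch)

    readers[name] = read
    writers[name] = write


def sync(source: str, target: str) -> int:
    """The engine sees only the two interfaces, never the concrete source."""
    return writers[target](readers[source]())


def combinations() -> int:
    """Count ordered source -> target pairs between distinct sources."""
    return sum(1 for s, t in product(readers, writers) if s != t)


store_a: List[Record] = [{"id": 1}, {"id": 2}]
store_b: List[Record] = []
register("source_a", store_a)
register("source_b", store_b)
before = combinations()
register("source_c", [])  # one more plug-in pairs with every existing one
after = combinations()
copied = sync("source_a", "source_b")
print(before, after, copied)
```

With n registered sources there are n × (n − 1) ordered pairs, so adding one source to a catalog of about 50 adds roughly 100 new pairs in this toy model.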

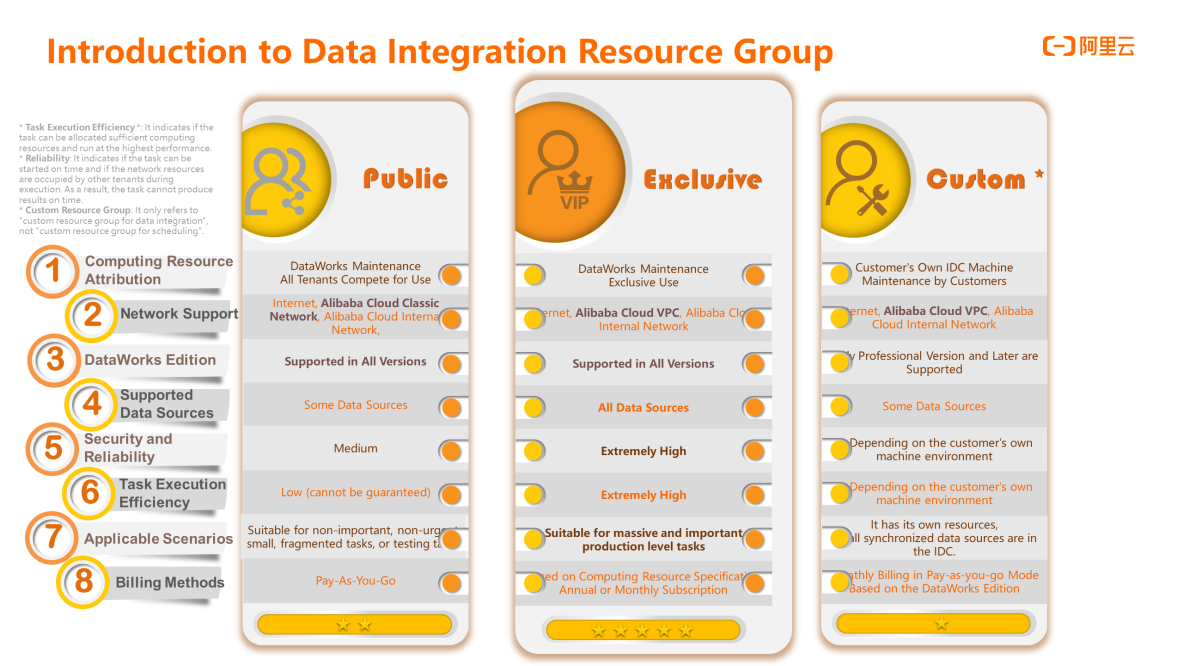

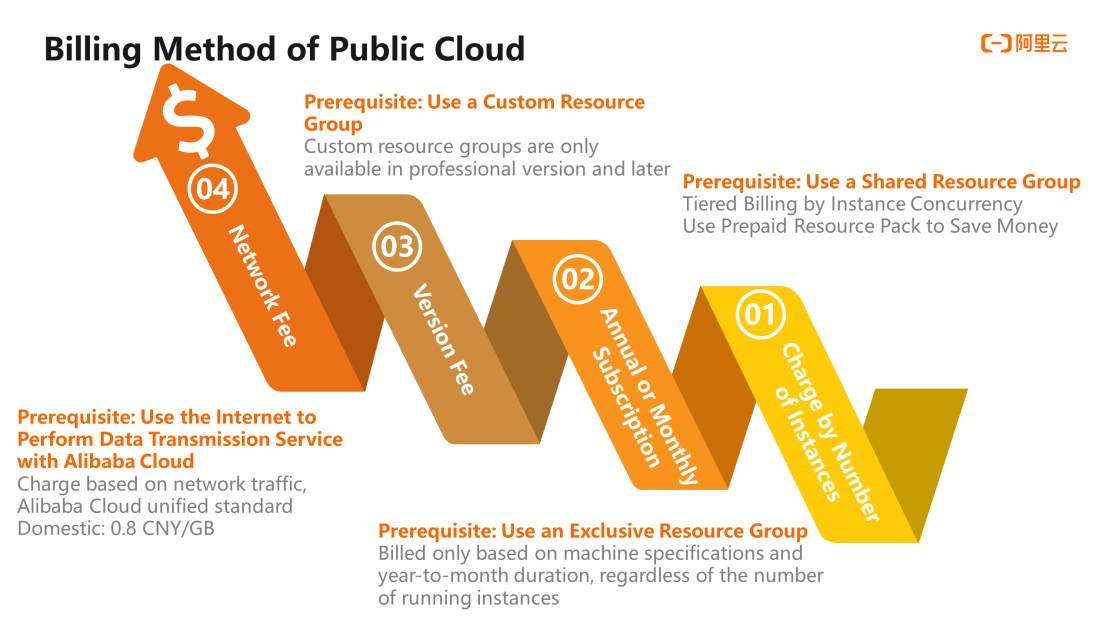

Data Integration offers three types of resource groups: exclusive resource groups, shared resource groups (also called public resource groups), and custom resource groups. They differ in eight dimensions.

The first is the ownership of computing resources. The computing resources of shared resource groups are shared by all tenants and are maintained by DataWorks. Exclusive resource groups are also maintained by DataWorks but are dedicated to a single user. Custom resource groups run on your own IDC machines, and you maintain them yourself.

The second is network support. Shared resource groups support the Internet, the Alibaba Cloud classic network, and the Alibaba Cloud internal network but do not support Alibaba Cloud VPCs. Exclusive resource groups and custom resource groups support the Internet, Alibaba Cloud VPCs, and the Alibaba Cloud internal network but do not support the Alibaba Cloud classic network.

The third is the DataWorks version. Shared resource groups and exclusive resource groups are supported in all versions. Custom resource groups are supported only in the professional version or later.

The fourth is data source support. Shared resource groups and custom resource groups support some data sources. Exclusive resource groups support all data sources.

The fifth is security and reliability. Shared resource groups are shared among all users, so users that preempt more resources cause delays and other problems for users that preempt fewer. Their security and reliability are therefore low. Exclusive resource groups have high security and reliability. For custom resource groups, both depend on the user's machine environment.

The sixth is the efficiency of task execution. Shared resource groups have low task execution efficiency because of resource preemption, and performance is not guaranteed. The task execution efficiency of exclusive resource groups is high. For custom resource groups, it depends on the user's machine environment.

The seventh is the applicable scenarios. Shared resource groups are suitable for scattered tasks that are neither important nor urgent, or for testing tasks. Exclusive resource groups are suitable for large numbers of important production-level tasks. Custom resource groups suit users who already have their own machines or whose data sources are all inside an IDC. For IDC-to-IDC transfers, using custom resources keeps costs low.

The eighth is the billing method. Shared resource groups are charged on a pay-as-you-go basis. Exclusive resource groups are available on an annual or monthly subscription basis and come in different computing resource specifications. For custom resource groups, you are charged only for the professional version of DataWorks.

In summary, we recommend using exclusive resource groups.

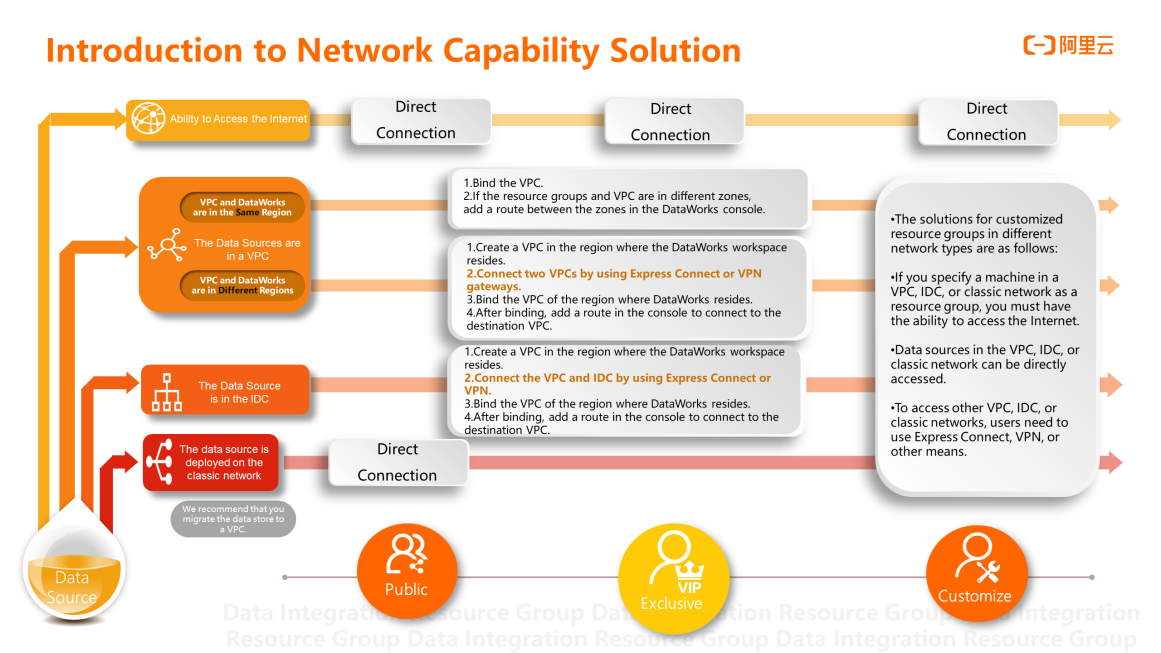

Different resource groups have different network solutions and capabilities, and there are usually four categories of situations.

1. The data source can access the public network. All three types of resource groups can connect to such data sources over the network and synchronize data.

2. The data source is in a VPC. Shared resource groups cannot connect to it, so you can only use exclusive or custom resource groups. This is the most common case. There are two situations:

3. The data source is in an IDC. To connect through an exclusive resource group, create a VPC in the region where DataWorks resides and bind it to the resource group. Then, use Express Connect or a VPN to connect that VPC to the IDC. Finally, add a route in the console to reach the destination VPC.

4. The data source is in the classic network. This situation is relatively rare, and we recommend moving the data source to a VPC, although corresponding solutions are available. Currently, only shared resource groups can directly connect to the classic network.

Custom resource groups are more flexible because the machines are in the user's own environment, but you may run into more varied situations. For example, a machine in a VPC, IDC, or classic network must be able to access the Internet before it can be set up as a resource group. To reach a data source in another VPC, IDC, or classic network, you need to set up your own Express Connect, VPN, or other connection.

In summary, we recommend using exclusive resource groups. The interface is easy to operate, and the solutions are abundant.
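The network rules described above can be written down as a small lookup. The following is only a sketch of the matrix as stated in this article; the labels (`"shared"`, `"vpc"`, and so on) and the function `usable_groups` are illustrative names, not a DataWorks API, and real connectivity also depends on routes, whitelists, and Express Connect or VPN setup.

```python
from typing import Dict, FrozenSet, List

# Network types each resource group type can reach directly, per the text.
SUPPORTED: Dict[str, FrozenSet[str]] = {
    "shared": frozenset({"internet", "classic", "internal"}),
    "exclusive": frozenset({"internet", "vpc", "internal"}),
    "custom": frozenset({"internet", "vpc", "internal"}),
}


def usable_groups(network: str) -> List[str]:
    """Return the resource group types that can reach the given network type."""
    known = set().union(*SUPPORTED.values())
    if network not in known:
        raise ValueError(f"unknown network type: {network!r}")
    return [group for group, nets in SUPPORTED.items() if network in nets]


for net in ("internet", "vpc", "classic"):
    print(net, usable_groups(net))
```

An IDC data source is not a separate row here: as described above, it is reached through a VPC that is connected to the IDC, or from a custom resource group that runs inside the IDC.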

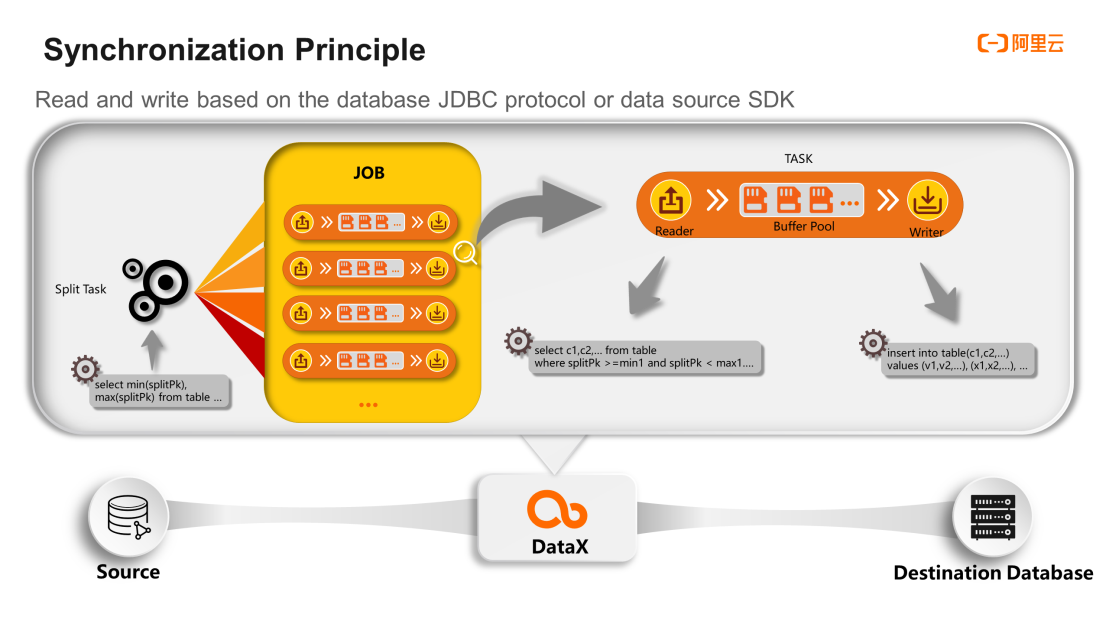

Offline synchronization from the source to the destination is carried out by the Data Integration engine. The engine pulls data from the source through the JDBC protocol of the database or the SDK of the data source and splits the job into multiple tasks, so data can be read concurrently to speed up synchronization. Each task has its own read and write subthreads. The reader extracts its slice of the source data and puts it into a cache. The writer then takes the data from the cache and writes it to the destination by calling the JDBC or SDK interface.
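The split, concurrent read, cache, and write steps can be sketched as follows. This is a minimal illustration, not the engine's implementation: the source table is an in-memory dictionary, the cache is a bounded `queue.Queue`, and the names `split_ranges`, `start_task`, and `run_job` are assumptions made for the example.

```python
import queue
import threading
from typing import Dict, List, Tuple

# Stand-in for a source table keyed by an integer primary key (assumption).
SOURCE: Dict[int, str] = {i: f"row-{i}" for i in range(10)}


def split_ranges(min_id: int, max_id: int, parts: int) -> List[Tuple[int, int]]:
    """Split the inclusive key range into at most `parts` half-open slices."""
    total = max_id - min_id + 1
    if total <= 0 or parts <= 0:
        return []
    size = -(-total // parts)  # ceiling division
    return [(lo, min(lo + size, max_id + 1))
            for lo in range(min_id, max_id + 1, size)]


def start_task(lo: int, hi: int, dest: Dict[int, str],
               lock: threading.Lock) -> List[threading.Thread]:
    """Start one reader thread and one writer thread for the slice [lo, hi)."""
    buf: queue.Queue = queue.Queue(maxsize=4)  # bounded cache between the two
    done = object()                            # sentinel: reader has finished

    def read() -> None:
        for key in range(lo, hi):
            buf.put((key, SOURCE[key]))
        buf.put(done)

    def write() -> None:
        while True:
            item = buf.get()
            if item is done:
                break
            key, value = item
            with lock:
                dest[key] = value

    threads = [threading.Thread(target=read), threading.Thread(target=write)]
    for thread in threads:
        thread.start()
    return threads


def run_job(parts: int = 3) -> Dict[int, str]:
    """Split the job, run all tasks concurrently, and wait for completion."""
    dest: Dict[int, str] = {}
    lock = threading.Lock()
    threads: List[threading.Thread] = []
    for lo, hi in split_ranges(min(SOURCE), max(SOURCE), parts):
        threads.extend(start_task(lo, hi, dest, lock))
    for thread in threads:
        thread.join()
    return dest


result = run_job()
print(result == SOURCE)
```

The bounded queue is the design point worth noting: when the writer is slower than the reader, the reader blocks instead of loading the whole slice into memory.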

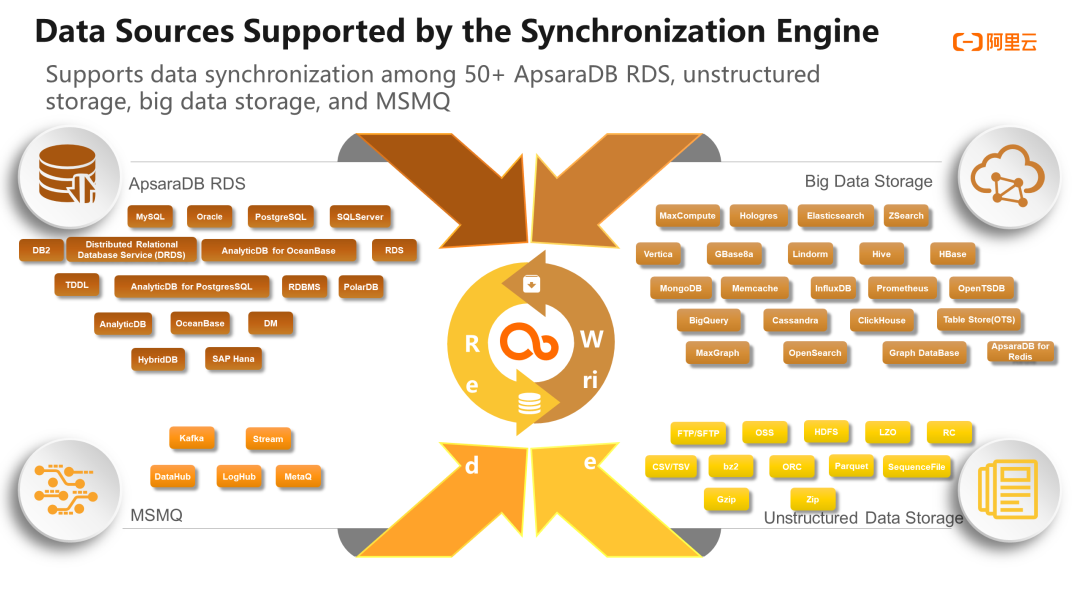

Offline synchronization supports more than 50 data sources, including ApsaraDB RDS, unstructured storage, big data storage, and message queues. Any reader can be paired with any writer, so these data sources can be combined into a variety of synchronization processes to match production needs.

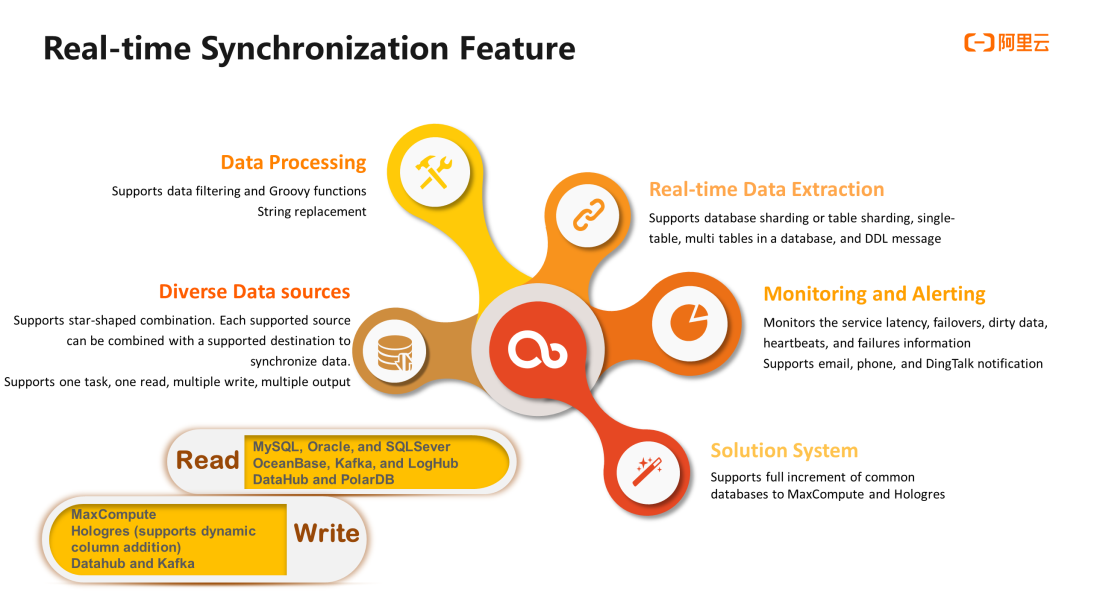

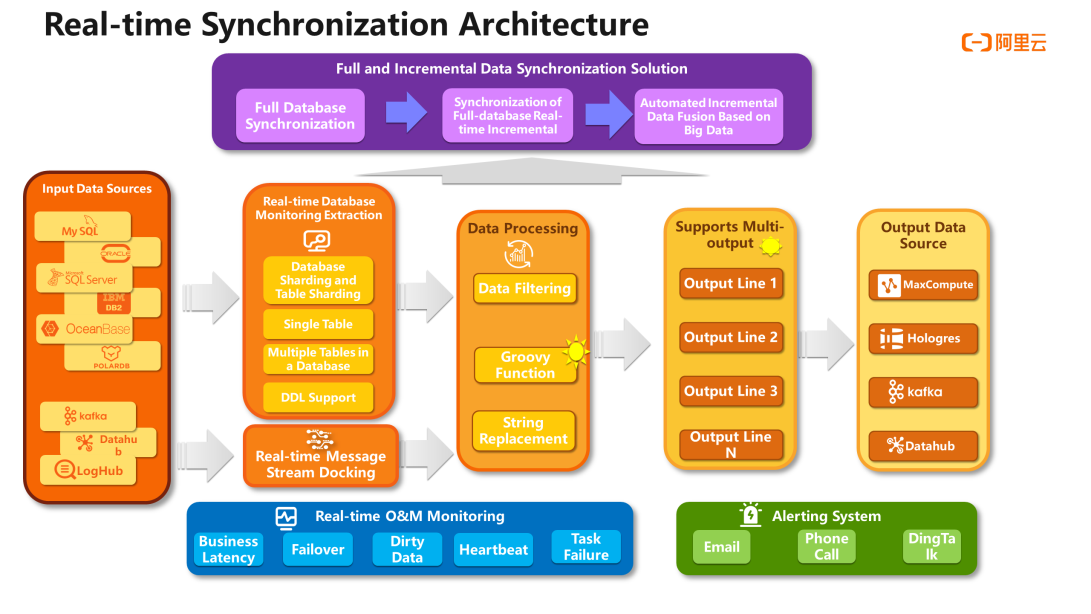

Real-time synchronization has five major features:

First, input data is read in real time from the source: sharded databases and tables, a single table or multiple tables in a database, DDL messages, or a real-time message stream. Next, the data is processed, which includes data filtering, Groovy functions, and string replacement. Finally, multiple outputs are supported: data read once from one source can be written to several destinations at the same time.
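The read, process, and multi-output stages can be sketched as a tiny pipeline. It is only an illustration under simplifying assumptions: records are plain dictionaries, destinations are Python lists, and `keep_if`, `replace_in`, and `run_pipeline` are hypothetical helpers rather than Data Integration components.

```python
from typing import Callable, Dict, Iterable, List, Optional

Record = Dict[str, str]
Transform = Callable[[Record], Optional[Record]]


def keep_if(field: str, value: str) -> Transform:
    """Data filtering: drop records whose field does not equal the value."""
    def step(rec: Record) -> Optional[Record]:
        return rec if rec.get(field) == value else None
    return step


def replace_in(field: str, old: str, new: str) -> Transform:
    """String replacement on one field; the input record is left untouched."""
    def step(rec: Record) -> Optional[Record]:
        out = dict(rec)
        if field in out:
            out[field] = out[field].replace(old, new)
        return out
    return step


def run_pipeline(stream: Iterable[Record], transforms: List[Transform],
                 sinks: List[List[Record]]) -> None:
    """Read each record once, transform it, and write it to every sink."""
    for rec in stream:
        current: Optional[Record] = rec
        for transform in transforms:
            current = transform(current)
            if current is None:
                break  # filtered out; skip the remaining steps
        if current is None:
            continue
        for sink in sinks:
            sink.append(dict(current))  # each destination gets its own copy


events = [
    {"op": "insert", "name": "a_b"},
    {"op": "delete", "name": "c_d"},
    {"op": "insert", "name": "e_f"},
]
sink_a: List[Record] = []
sink_b: List[Record] = []
run_pipeline(events, [keep_if("op", "insert"), replace_in("name", "_", "-")],
             [sink_a, sink_b])
print(sink_a)
```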

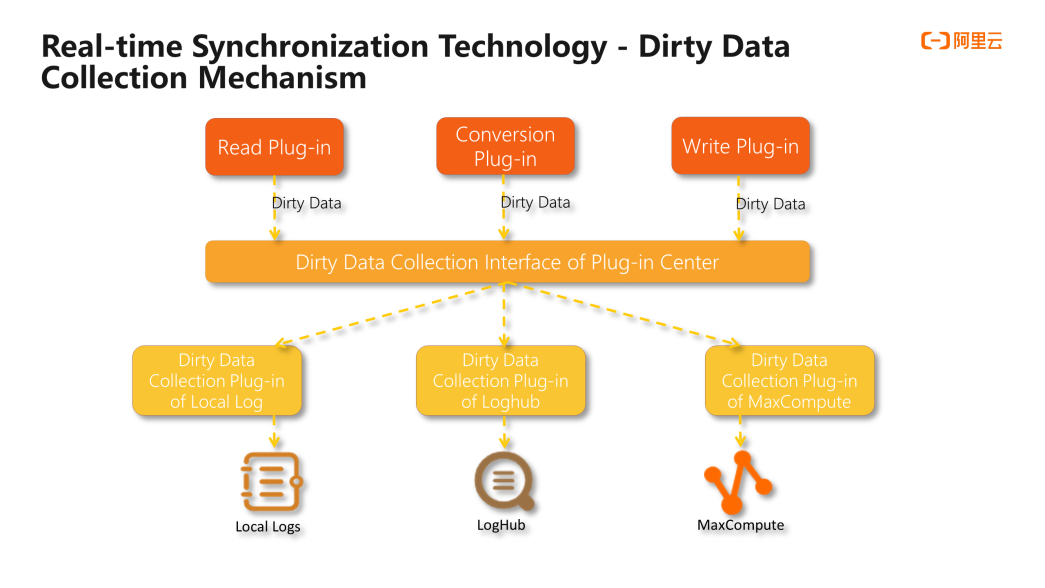

Dirty data can be collected in a unified manner and then written to a destination configured in the interface, such as local logs, Loghub, or MaxCompute.
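One way to picture unified dirty data collection is a loader that sets aside every record it cannot convert instead of failing on the first one. The sketch below assumes a made-up two-column schema and a `max_dirty` threshold; the threshold, the `DirtyLimitExceeded` exception, and the list used as the collection place are illustrative assumptions, not product behavior.

```python
from typing import Dict, List, Tuple


class DirtyLimitExceeded(Exception):
    """Raised when more dirty records are seen than the job tolerates."""


def load_rows(rows: List[Dict[str, str]],
              dirty_sink: List[Tuple[Dict[str, str], str]],
              max_dirty: int) -> List[Dict[str, object]]:
    """Convert rows to the target schema; collect rows that fail conversion."""
    clean: List[Dict[str, object]] = []
    for row in rows:
        try:
            clean.append({"id": int(row["id"]), "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError) as exc:
            # Keep the raw record and the reason so it can be inspected later.
            dirty_sink.append((row, type(exc).__name__))
            if len(dirty_sink) > max_dirty:
                raise DirtyLimitExceeded(f"{len(dirty_sink)} dirty records")
    return clean


rows = [
    {"id": "1", "amount": "9.5"},
    {"id": "x", "amount": "1"},   # id is not an integer
    {"amount": "2"},              # id is missing
]
dirty: List[Tuple[Dict[str, str], str]] = []
clean = load_rows(rows, dirty, max_dirty=5)
print(clean, [reason for _, reason in dirty])
```

In a real job the collection place would be a log, Loghub, or a MaxCompute table, as the text lists.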

Data from MySQL, Oracle, Datahub, Loghub, and Kafka can be synchronized in real time to Datahub, Kafka, and other destinations. You can complete development by dragging and dropping. Simple data filtering, replacement, and custom processing with Groovy are also supported.

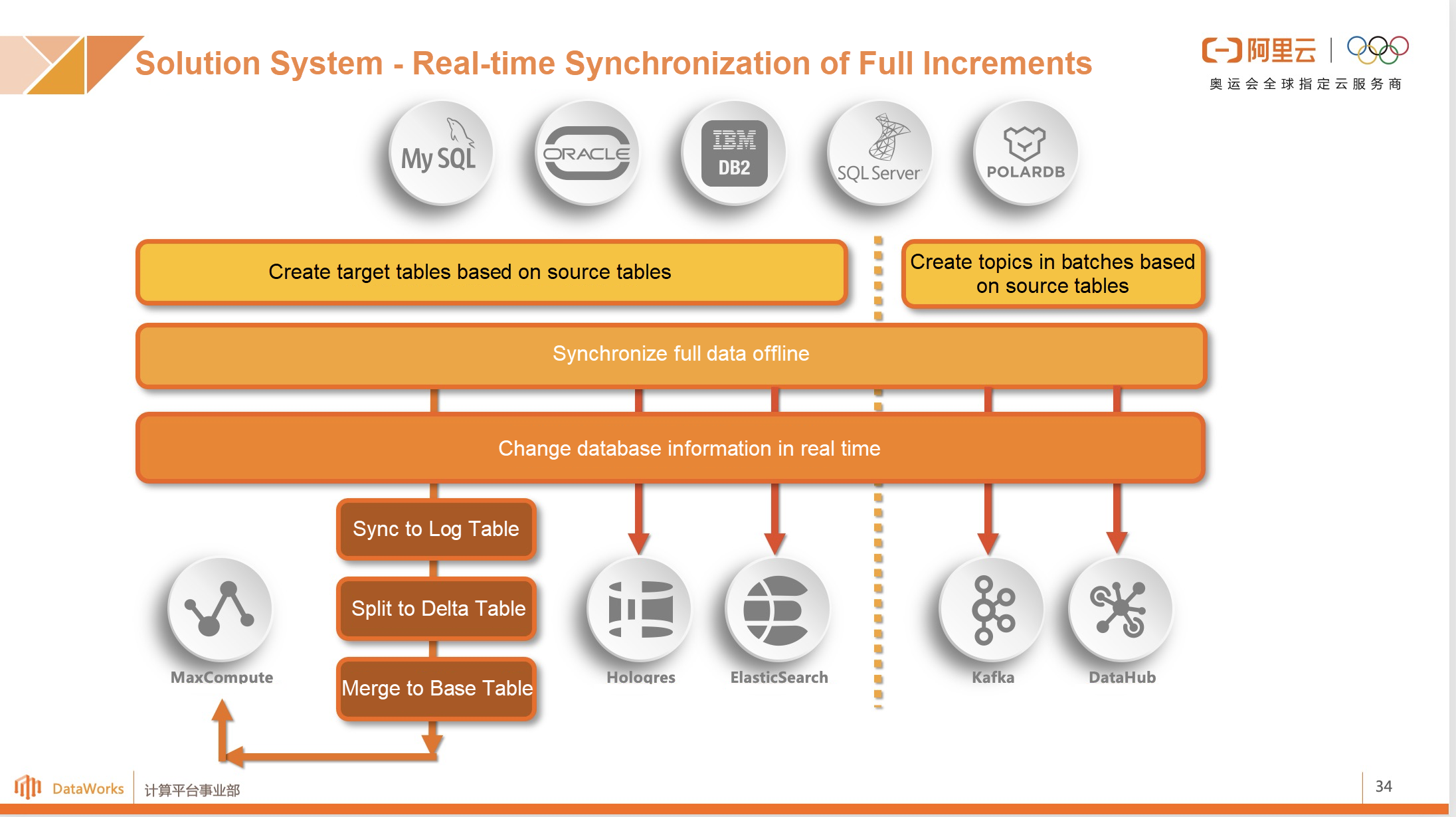

A solution is not the configuration or synchronization of a single task but a packaged usage scenario. It automatically creates and starts the required tasks for you and integrates these steps into one process.

Full database migration synchronizes an entire database. You can synchronize all tables in an ApsaraDB RDS database to MaxCompute in one operation, which improves efficiency and reduces usage costs.

Batch cloud migration allows users to upload multiple data sources to MaxCompute at the same time. Users can configure rules, such as table name conversion, field name conversion, field type conversion, destination table new fields, destination table field assignment, data filtering, and destination table name prefixes to meet the needs of business scenarios.
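The rule types listed above can be illustrated with a small planner that derives a destination table definition from a source one. Everything here is hypothetical: the `MigrationRules` fields, the `plan_table` function, and the type mappings are example values chosen for the sketch, not the product's rule format.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Column = Tuple[str, str]  # (column name, column type)


@dataclass
class MigrationRules:
    table_prefix: str = ""                          # destination table name prefix
    table_rename: Tuple[str, str] = ("", "")        # (regex pattern, replacement)
    field_rename: Dict[str, str] = field(default_factory=dict)
    type_map: Dict[str, str] = field(default_factory=dict)  # keyed by lowercase type
    extra_fields: List[Column] = field(default_factory=list)  # new destination fields


def plan_table(name: str, columns: List[Column],
               rules: MigrationRules) -> Tuple[str, List[Column]]:
    """Apply the rules to one source table and return the destination layout."""
    pattern, replacement = rules.table_rename
    renamed = re.sub(pattern, replacement, name) if pattern else name
    dest_columns = [
        (rules.field_rename.get(col, col), rules.type_map.get(typ.lower(), typ))
        for col, typ in columns
    ]
    dest_columns.extend(rules.extra_fields)
    return rules.table_prefix + renamed, dest_columns


rules = MigrationRules(
    table_prefix="ods_",
    table_rename=(r"^t_", ""),
    field_rename={"ts": "event_time"},
    type_map={"varchar": "string", "int": "bigint"},  # illustrative mapping only
    extra_fields=[("load_date", "string")],
)
dest_name, dest_columns = plan_table(
    "t_orders", [("id", "INT"), ("ts", "DATETIME"), ("note", "VARCHAR")], rules
)
print(dest_name, dest_columns)
```

Because the rules are data rather than code, the same rule set can be applied to every table of every source in the batch.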

With this solution, you can configure an existing database with little effort and complete both the full migration of its existing data and the real-time synchronization of subsequent increments.
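The combination of a full load followed by incremental changes can be sketched as below. It assumes that every change carries a monotonically increasing position and that the position at which the full snapshot was taken is known, so changes already contained in the snapshot can be skipped. The tuple layout and the name `full_then_incremental` are assumptions for this example.

```python
from typing import Dict, Iterable, Optional, Tuple

# (position, operation, key, value); operation is "upsert" or "delete".
Change = Tuple[int, str, int, Optional[str]]


def full_then_incremental(snapshot: Dict[int, str], snapshot_pos: int,
                          changes: Iterable[Change]) -> Dict[int, str]:
    """Load the full snapshot, then replay only the changes made after it."""
    target = dict(snapshot)              # step 1: full migration
    for pos, op, key, value in changes:  # step 2: incremental replay, in order
        if pos <= snapshot_pos:
            continue                     # already reflected in the snapshot
        if op == "delete":
            target.pop(key, None)
        elif value is not None:
            target[key] = value
    return target


snapshot = {1: "a", 2: "b"}              # state of the source at position 2
changes = [
    (1, "upsert", 1, "a"),
    (2, "upsert", 2, "b"),
    (3, "upsert", 2, "B"),
    (4, "delete", 1, None),
    (5, "upsert", 3, "c"),
]
final_state = full_then_incremental(snapshot, 2, changes)
print(final_state)
```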

The following situations are supported:

The public cloud billing method is related to resource groups and is based on four major points:
