By Mujiao

Data creation can be used in many interesting scenarios. In general, data comes first before creating data-based applications. But if the application is applied to another scenario, there is no way to demonstrate the function without data.

Generally, the more realistic the data, the better it is for functional testing and demonstration scenarios. However, to adhere to international data privacy and security requirements, data masking is typically required. Data masking is complicated as obscuring too much data can make important data unavailable, and obscuring too little data may risk potential data leakages. Because of this, it is usually safer to just simulate data by ourselves.

Personally, there are two scenarios that I generally encounter that requires data generation. First is when a colleague or partner who wants to consult a method of SQL to process data, but there is no data. Second is when you need to build a proof of concept (POC) without providing real simulation data. In both cases, you need to simulate data by yourself.

For data simulation of a single business scenario, a single table is usually enough. However, to simulate a certain business scenario or a POC test scenario, multiple interrelated tables in a business system must be simulated.

In general, there will be some user requirements, with a clear description of the business scenario. There are also some other requirements in the table, such as the number of records, row storage, field generation rules, value range of a field, enumeration value of a field. A small amount of real data may also be given.

Creating a separate table for data may be very simple. For example, in our daily test, a function is used to test the JOIN logic of a SQL statement. However, it will be very complex, because creating a table can also be equivalent to creating a business system.

The business system is just an increase in the number of tables compared to a single table. Since there is a primary-foreign key relationship between the tables of the business system, it is necessary to build the code table (dimension table) first and then create the business table (fact table).

The method of simulating data is divided into two stages. In the first stage, a small table is created to generate a code table, while in the second stage, the Cartesian product is used to quickly multiply the required data volume. Here, the columns can be filled with data values generated with random functions.

The simplest way to create data in MaxCompute is to use the insert into values statement, which is also my most commonly used method. Earlier versions, before this statement was not supported, used the union all statement. If you don't want to actually write the data, use the from values and with expressions.

Example 1: Use insert … values to insert data into a specified partition.

The following statement shows an example:

-- Create a partitioned table named srcp.

create table if not exists srcp (key string,value bigint) partitioned by (p string);

-- Add a partition to the srcp.

alter table srcp add if not exists partition (p='abc');

-- Insert data into the abc partition of the srcp.

insert into table srcp partition (p='abc') values ('a',1),('b',2),('c',3);

-- Query data from the srcp.

select * from srcp where p='abc';

-- The following result is returned.

+------------+------------+------------+

| key | value | p |

+------------+------------+------------+

| a | 1 | abc |

| b | 2 | abc |

| c | 3 | abc |

+------------+------------+------------+

Example 2: Use values table to insert data into a partitioned table.

The following statement shows as below:

-- Create a partitioned table named srcp.

create table if not exists srcp (key string,value bigint) partitioned by (p string);

-- Insert data into the srcp.

insert into table srcp partition (p) select concat(a,b), length(a)+length(b),'20170102' from values ('d',4),('e',5),('f',6) t(a,b);

-- Query data from the srcp.

select * from srcp where p='20170102';

-- The following result is returned.

+------------+------------+------------+

| key | value | p |

+------------+------------+------------+

| d4 | 2 | 20170102 |

| e5 | 2 | 20170102 |

| f6 | 2 | 20170102 |

+------------+------------+------------+Values (…), (…) t(a, b) defines that a table named t with columns named a and b. The data types are STRING and BIGINT. column types must be derived from the values list.

Example 3: Use methods of from value or union all to create a constant table.

The following statement shows as below:

with t as (select 1 c union all select 2 c) select * from t;

-- The preceding statement is equivalent to the following statement:

select * from values (1), (2) t(c);

-- The following result is returned.

+------------+

| c |

+------------+

| 1 |

| 2 |

+------------+The above examples are from: https://www.alibabacloud.com/help/doc-detail/73778.htm

It is well known that the writing of the Cartesian product can only be used under MAPJOIN prompts. Therefore, the small constant table created in step 1 can use MAPJOIN.

The statement shows as below:

-- 1 Create a constant table. The ordered number I use here is convenient for using where to get the specified number of records and multiply the Cartesian product.

create table za1 as

select c0 from values

(1),(2),(3),(4),(5),(6),(7),(8),(9),(10),(11),(12),(13),(14),(15)

,(16),(17),(18),(19),(20),(21),(22),(23),(24),(25),(26),(27),(28),(29),(30)

,(31),(32),(33),(34),(35),(36),(37),(38),(39),(40),(41),(42),(43),(44),(45)

,(46),(47),(48),(49),(50),(51),(52),(53),(54),(55),(56),(57),(58),(59),(60)

,(61),(62),(63)

t(c0);

--------------------------

-- The following statement uses a shell loop to build which is better to control the number of rows, and will produce 50 rows of data

select transform('for i in `seq 1 50`; do echo $i; done') using 'sh' as (data);

-- The transform method is available at the following URL

https://help.aliyun.com/document_detail/73719.html?spm=5176.21213303.J_6028563670.7.35a03eda0iPiEQ&scm=20140722.S_help%40%40%E6%96%87%E6%A1%A3%40%4073719.S_hot.ID_73719-RL_TRANSF%20M-OR_s%2Bhelpproduct-V_1-P0_0

--------------------------

-- 2 Use the constant table to associate multiple times to construct the required number of records [Use the calculator to estimate how many times of N is sufficient]

create table zb1 as

select *

from(

-- 10*63*63=39690

select /*+mapjoin(t2,t3)*/

1000000 + row_number() over(partition by 1)-1 as c0

from za1 t1 -- 63

join za1 t2 -- 63

join(select c0 from za1 limit 10)t3 -- 10

)t

;

--3 The table created in step 2 reaches tens of thousands of records. The number of table records created by using this table can easily reach hundreds of millions of records.Data can be essentially divided into two types, sequential values and enumerated values. The sequence value, an ordered array, is implemented with the row_number() function, which is mainly defined as the primary key in this scenario. The enumerated value is just a handful of code values, such as values, amounts, codes, that is distributed among the records, which is mainly filled with random functions. Other circumstances, which I have not yet encountered, will not be described.

The following statement shows as below:

-- 1 Ordered values. In this example, the generated data is an ordered sequence from 1,000,000 to 1,036,689 that can be used as a business primary and foreign key

select /*+mapjoin(t2,t3)*/

1000000 + row_number() over(partition by 1)-1 as c0

from za1 t1 -- 63

join za1 t2 -- 63

join(select c0 from za1 limit 10)t3 -- 10

;

-- 2 Random values/fixed values. In this example, column c2 will generate a relatively uniform value from 1 to 1,000

-- The random number generated by the random function is a floating point value and must be converted to bigint

select /*+mapjoin(t2,t3)*/

1000000 + row_number() over(partition by 1)-1 as c0

,1617120000 as c1

,cast(round(rand()*999,0) as bigint)+1 as c2

from za1 t1 -- 63

join za1 t2 -- 63

join(select c0 from za1 limit 10)t3 -- 10

;Generally, data types can be divided into four types, the unique value of the primary key, enumerated values represented by the string, value, date and time. In the preceding example, all enumeration values are numeric values. The only difference is that the enumeration value is numeric instead of text without data and time. So if it is really necessary, how to implement it is an issue.

Time can be created as unixtime, which can be converted to a number. To associate text enumeration values, you can create a code table, and then create a business table. Generally, a business system also stores code values, rather than a long string.

The following statement shows as below:

-- Use the code table to convert text

with za as (

select * from values

(1),(2),(3),(4),(5),(6),(7),(8),(9),(10),(11),(12),(13),(14),(15)

,(16),(17),(18),(19),(20),(21),(22),(23),(24),(25),(26),(27),(28),(29),(30)

,(31),(32),(33),(34),(35),(36),(37),(38),(39),(40),(41),(42),(43),(44),(45)

,(46),(47),(48),(49),(50),(51),(52),(53),(54),(55),(56),(57),(58),(59),(60)

,(61),(62),(63)

t(c0)

)

,ta as (

select * from values ('zhangsan',4),('lisi',5),('wangmazi',6) t(a,b))

select k,a,b,c

from(

select 100 + row_number() over(partition by 1)-1 as k

,cast(round(rand()*3,0) as bigint)+3 as c

from za -- 63

limit 3

)tb join ta on ta.b=tb.c

;

Return:

k a b c

101 lisi 5 5

102 wangmazi 6 6

103 zhangsan 4 4

-- Use unixtimetamp to convert data and time

with za as (

select * from values

(1),(2),(3),(4),(5),(6),(7),(8),(9),(10),(11),(12),(13),(14),(15)

,(16),(17),(18),(19),(20),(21),(22),(23),(24),(25),(26),(27),(28),(29),(30)

,(31),(32),(33),(34),(35),(36),(37),(38),(39),(40),(41),(42),(43),(44),(45)

,(46),(47),(48),(49),(50),(51),(52),(53),(54),(55),(56),(57),(58),(59),(60)

,(61),(62),(63)

t(c0)

)

select k

,from_unixtime(1617120000) as t

,from_unixtime(1617120000

+3600000 * c ) -- hour

as b

,c

from(

select 100 + row_number() over(partition by 1)-1 as k

,cast(round(rand()*3,0) as bigint)+3 as c

from za -- 63

limit 3

)tb

;

Return:

k t b c

100 2021-03-31 00:00:00 2021-03-31 03:00:00 3

101 2021-03-31 00:00:00 2021-03-31 05:00:00 5

102 2021-03-31 00:00:00 2021-03-31 06:00:00 6In a POC project in the telecommunications industry some time ago, the customer first gave 80 lines of real data, requiring to create more than a billion data, and giving some very special data requirements.

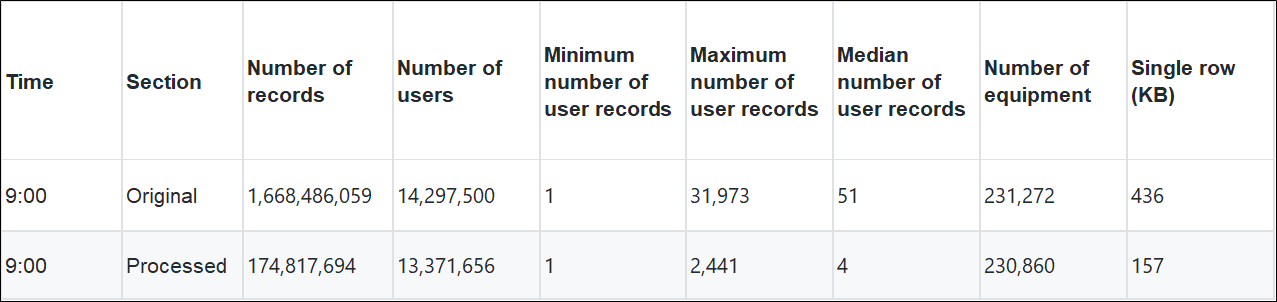

Requirements for raw data and data characteristics after processing according to the customer's requirements

The following table shows more details:

According to the above requirements, the first step is to analyze the business requirements. The original data has 61 columns, but the columns actually involved in data calculation are only 10 columns. Therefore, to build the original table, you only need to create the 10 columns, and then associate the column of the original 61 columns with one row.

Analyze the original data structure to select the columns to be calculated:

create table if not exists t_log10 (

imei_tac int comment ' User equipment ID1'

,phone7 int comment ' User equipment ID2'

,imsi string comment ' User equipment ID3'

,msisdn string comment ' User equipment ID4'

,tac int comment ' Telecom equipment ID1'

,cell_id int comment ' Telecom equipment ID2'

,Procedure_Type int comment ' Business type'

,Procedure_Start_Time bigint comment ' Business start time, unixtimestamp'

,Procedure_status int comment ' Business status, fixed value: 1'

,country_code int comment ' Country code, fixed value -406' )

partitioned by (hh string);In the telecom industry, this business scenario describes the registration of users' mobile devices with base station devices of telecom operators. This business calculation uses 10 fields. Among these fields, five are related to user equipment dimensions, namely user equipment ID (1-4) and country code. Two are related to telecom equipment dimensions, namely telecom equipment ID (1-2), and three are related to the occurrence of business between the user equipment and telecommunication equipment, namely, business type, business status, and business start time.

Therefore, after analyzing requirements, I think it needs to build a dimension table of user equipment and telecom base station equipment first, and then create a telecom business fact table based on these dimension tables.

Step 1: Create a telecom base station dimension (code) table:

drop table if exists t_tac_lacid;

create table if not exists t_tac_lacid (id bigint,tac bigint,lacid bigint);

insert overwrite table t_tac_lacid

select /*+mapjoin(t2)*/

row_number() over(partition by 1)+100000 as rn

,t1.c0+6001 as tac

,t2.c0+1201 as lacid

from (select row_number() over(partition by 1)-1 as c0 from zb1 limit 2300)t1

join (select row_number() over(partition by 1)-1 as c0 from zb1 limit 100)t2

;

-- 230000The result set of a specific number of records selected by the created zb1 is multiplied with a Cartesian product by the specified number of records. As the two IDs are to be built out as unique primary keys, the row_number window function is used here. When building the primary key, the 100000+ is used to build the ID with a fixed length.

Step 2: Create a user device dimension (code) table.

drop table if exists t_user;

create table t_user (imei_tac bigint,phone7 bigint,imsi string ,msisdn string);

insert overwrite table t_user

select

rn as imei_tac

,cast(substr(to_char(rn),2,7) as bigint)+1000000 as phone7

,substr(MD5(rn), 1,10) as imsi

,substr(MD5(rn),11,10) as msisdn

from(

select /*+mapjoin(t2,t3,t4)*/

row_number() over(partition by 1)+10000000 as rn

from za1 t1

join za1 t2

join za1 t3

join (select c0 from za1 limit 58) t4

-- limit 100

)t;

-- 14502726

-- 63*63*63*58 = 14502726In this example, a seemingly realistic number of records is built by a 4-time use of table za1. However, in reality, it makes no difference if it is a few entries away when creating the data. We use the row_number window function to create the business primary key and use MD5 truncation to construct different primary key styles. Then the random function is used to create the base station information. In fact, the base station information is also calculated. The purpose of such special processing is mainly to create the final result table.

The last step is to build the result table. We have not considered the median, extreme value and the result after processing, therefore, the final implementation is more complicated. We will not post it here since it is too long. The method for meeting special requirements is the user segment.

Prompts are needed to improve performance as the original tables used to create the data are very small and the Map stage generally has only 1 worker. Therefore, the data block input in the Map stage must be small, and the resources of Map and Reduce must be large.

set odps.sql.mapper.cpu=200;

set odps.sql.mapper.memory=8192;

set odps.sql.mapper.split.size=4;

set odps.sql.reducer.cpu=200;

set odps.sql.reducer.memory=8192;Data creation scenarios are mostly simple, while there are special complications like the above. The complex business mainly tests the ability of data processing, and the process of using basic tables to generate complex tables as well as of building the relational model of the relational database. To build a single data table, it is necessary to analyze the dimensions and facts of the business first, then build the dimensions, and use them to build the facts.

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - August 27, 2021

Alibaba Cloud MaxCompute - June 23, 2022

Alibaba Cloud MaxCompute - September 18, 2018

Alibaba Cloud Community - October 17, 2025

Alibaba Cloud MaxCompute - January 15, 2019

Alibaba Cloud MaxCompute - November 15, 2021

137 posts | 21 followers

Follow MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn MoreMore Posts by Alibaba Cloud MaxCompute