1. An ECS instance has been created. For more information, see Create an instance by using the wizard. In this tutorial, the ECS instance is configured as follows:

• Instance type: ecs.g6.large

• Operating system: CentOS 7.7 64-bit public image

• Network type: Virtual Private Cloud (VPC)

• IP address: a public IP address is assigned

Note: The commands in this tutorial apply to the configuration above. If your operating system or software versions differ, adjust the commands accordingly.

2. The ECS instance has been added to a security group whose rules allow inbound traffic on ports 8088 and 50070. Hadoop uses these ports for its web UIs: 8088 for the YARN ResourceManager and 50070 for the HDFS NameNode. For more information, see Add a security group rule.

Hadoop is an open-source Apache framework, written in Java, for storing and processing large datasets across clusters of computers. It lets users develop distributed programs without handling the low-level details of the underlying cluster. The Hadoop Distributed File System (HDFS) and MapReduce are its core components.

HDFS is a distributed file system that enables distributed storage and retrieval of application data.

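MapReduce is a distributed computing framework that distributes computing jobs across the servers in a Hadoop cluster. Each job is split into map tasks and reduce tasks, which are then scheduled for distributed processing. In Hadoop 1.x, the JobTracker performs this scheduling. In Hadoop 2.x, which this tutorial installs, resource management and scheduling are handled by YARN (the ResourceManager and NodeManager daemons).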

For more information, visit the Apache Hadoop website.
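The following shell sketch makes the map and reduce phases more concrete. It mimics a MapReduce word count on a single machine with standard text tools:

* The map step emits one word (key) per line.
* `sort` plays the role of the shuffle that groups identical keys.
* `uniq -c` acts as the reducer.

This is only an analogy that assumes a small local input. Hadoop runs the equivalent phases in parallel across the cluster and does not use these commands. The function name `wordcount` is hypothetical.

```shell
#!/usr/bin/env bash
# Illustration only: a single-machine analogy of a MapReduce word count.

wordcount() {
  # Map:     split the text into one lowercase word per line (each word is a key).
  # Shuffle: sort brings identical keys next to each other.
  # Reduce:  uniq -c counts each group of identical keys.
  # Finally, print "word count", most frequent first, ties in alphabetical order.
  tr -cs '[:alpha:]' '\n' | tr '[:upper:]' '[:lower:]' | grep -v '^$' \
    | sort | uniq -c \
    | awk '{ print $2, $1 }' | sort -k2,2nr -k1,1
}

printf 'the quick fox\nThe lazy dog\nthe end\n' | wordcount
# the 3
# dog 1
# end 1
# fox 1
# lazy 1
# quick 1
```

The sort-then-count structure mirrors why Hadoop shuffles map output by key: a reducer can only aggregate a key once all of that key's values have been brought together.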

Perform the following steps to build a Hadoop pseudo-distributed environment on the ECS instance:

1. Connect to the created ECS instance. For more information, see Connection methods.

2. Run the following command to download the JDK 1.8 installation package:

wget https://download.java.net/openjdk/jdk8u41/ri/openjdk-8u41-b04-linux-x64-14_jan_2020.tar.gz

3. Run the following command to decompress the downloaded JDK 1.8 installation package:

tar -zxvf openjdk-8u41-b04-linux-x64-14_jan_2020.tar.gz

4. Run the following command to move the extracted JDK folder to /usr and rename it. In this example, the folder is renamed java8. You can use a different name based on your business requirements.

mv java-se-8u41-ri/ /usr/java8

5. Run the following commands to configure the Java environment variables. If you renamed the JDK folder to something other than java8, replace java8 in the following commands with the actual folder name:

echo 'export JAVA_HOME=/usr/java8' >> /etc/profile

echo 'export PATH=$PATH:$JAVA_HOME/bin' >> /etc/profile

source /etc/profile

6. Run the following command to check whether Java is installed:

java -version

A command output similar to the following one indicates that JDK 1.8 is installed:

openjdk version "1.8.0_41"

OpenJDK Runtime Environment (build 1.8.0_41-b04)

OpenJDK 64-Bit Server VM (build 25.40-b25, mixed mode)

1. Run the following command to download the Hadoop installation package:

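wget https://mirrors.bfsu.edu.cn/apache/hadoop/common/hadoop-2.10.1/hadoop-2.10.1.tar.gz

If this mirror no longer hosts Hadoop 2.10.1, download the same package from the Apache archive at https://archive.apache.org/dist/hadoop/common/hadoop-2.10.1/hadoop-2.10.1.tar.gz.

2. Run the following commands to decompress the Hadoop installation package to the /opt/hadoop path: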

tar -zxvf hadoop-2.10.1.tar.gz -C /opt/

mv /opt/hadoop-2.10.1 /opt/hadoop

3. Run the following commands to configure the Hadoop environment variables:

echo 'export HADOOP_HOME=/opt/hadoop/' >> /etc/profile

echo 'export PATH=$PATH:$HADOOP_HOME/bin' >> /etc/profile

echo 'export PATH=$PATH:$HADOOP_HOME/sbin' >> /etc/profile

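source /etc/profile

4. Run the following commands to set JAVA_HOME in the yarn-env.sh and hadoop-env.sh configuration files. The Hadoop start scripts launch daemons over SSH, and the variables in /etc/profile may not be loaded in that session, so JAVA_HOME must also be set in these files: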

echo "export JAVA_HOME=/usr/java8" >> /opt/hadoop/etc/hadoop/yarn-env.sh

echo "export JAVA_HOME=/usr/java8" >> /opt/hadoop/etc/hadoop/hadoop-env.sh

5. Run the following command to check whether Hadoop is installed:

hadoop version

A command output similar to the following one indicates that Hadoop is installed:

Hadoop 2.10.1

Subversion https://github.com/apache/hadoop -r 1827467c9a56f133025f28557bfc2c562d78e816

Compiled by centos on 2020-09-14T13:17Z

Compiled with protoc 2.5.0

From source with checksum 3114edef868f1f3824e7d0f68be03650

This command was run using /opt/hadoop/share/hadoop/common/hadoop-common-2.10.1.jar

1. Modify the Hadoop configuration file core-site.xml.

a) Run the following command to open the file in vim:

vim /opt/hadoop/etc/hadoop/core-site.xml

b) Press the i key to enter insert mode.

c) Between the <configuration> and </configuration> tags, add the following content:

<property>

<name>hadoop.tmp.dir</name>

<value>file:/opt/hadoop/tmp</value>

<description>location to store temporary files</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

d) Press the Esc key to exit insert mode, and then enter :wq to save and close the file.

2. Modify the Hadoop configuration file hdfs-site.xml.

a) Run the following command to open the file in vim:

vim /opt/hadoop/etc/hadoop/hdfs-site.xml

b) Press the i key to enter insert mode.

c) Between the <configuration> and </configuration> tags, add the following content:

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/hadoop/tmp/dfs/data</value>

</property>

d) Press the Esc key to exit insert mode, and then enter :wq to save and close the file.
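If you prefer not to edit the files interactively, you can write the two configuration changes above with shell heredocs. This sketch assumes the target files contain only the default empty configuration element, as in a fresh Hadoop 2.10.1 installation. It overwrites both files completely, so back up any existing customizations first. The function name `write_hadoop_conf` and its optional directory argument are hypothetical, added here for illustration and testing.

```shell
#!/usr/bin/env bash
# Sketch (assumption): replace core-site.xml and hdfs-site.xml with the
# properties from the steps above. Any existing content in both files is lost.

write_hadoop_conf() {
  # Hypothetical optional argument: target directory (defaults to this tutorial's path).
  local conf_dir="${1:-/opt/hadoop/etc/hadoop}"
  mkdir -p "$conf_dir" || return 1

  # The quoted 'EOF' stops the shell from expanding anything inside the XML.
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/opt/hadoop/tmp</value>
    <description>location to store temporary files</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/opt/hadoop/tmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/opt/hadoop/tmp/dfs/data</value>
  </property>
</configuration>
EOF
}
```

On the ECS instance, calling `write_hadoop_conf` with no argument writes to /opt/hadoop/etc/hadoop and yields the same properties as the vim steps. A non-interactive version is easier to repeat when you rebuild the instance.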

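1. Hadoop's start scripts use SSH to start daemons, even on a single host, so configure passwordless SSH login to localhost. Run the following command to create a public key and a private key. Press Enter at each prompt to accept the default key location and an empty passphrase: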

ssh-keygen -t rsa

A command output similar to the following one indicates that the public and private keys are created:

[root@iZbp1chrrv37a2kts7sydsZ ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:gjWO5mgARst+O5VUaTnGs+LxVhfmCJnQwKfEBTro2oQ root@iZbp1chrrv37a2kts7s****

The key's randomart image is:

+---[RSA 2048]----+

| . o+Bo= |

|o o .+.# o |

|.= o..B = + . |

|=. oO.o o o |

|Eo..=o* S . |

|.+.+o. + |

|. +o. . |

| . . |

| |

+----[SHA256]-----+

2. Run the following commands to add the public key to the authorized_keys file:

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

1. Run the following command to initialize (format) the NameNode:

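hadoop namenode -format

Hadoop 2.x prints a deprecation warning for this form of the command. hdfs namenode -format is the equivalent non-deprecated command.

2. Run the following commands in sequence to start Hadoop: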

start-dfs.shAt the prompts that appear, enter yes, as shown in the following figure.

start-yarn.shA command output similar to the following one is returned.

[root@iZbp1chrrv37a2kts7s**** .ssh]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/logs/yarn-root-resourcemanager-iZbp1chrrv37a2kts7sydsZ.out

localhost: starting nodemanager, logging to /opt/hadoop/logs/yarn-root-nodemanager-iZbp1chrrv37a2kts7sydsZ.out3. Run the following command to view the processes that are started:

jpsThe following processes are started:

[root@iZbp1chrrv37a2kts7s**** .ssh]# jps

11620DataNode

11493NameNode

11782SecondaryNameNode

11942ResourceManager

12344Jps

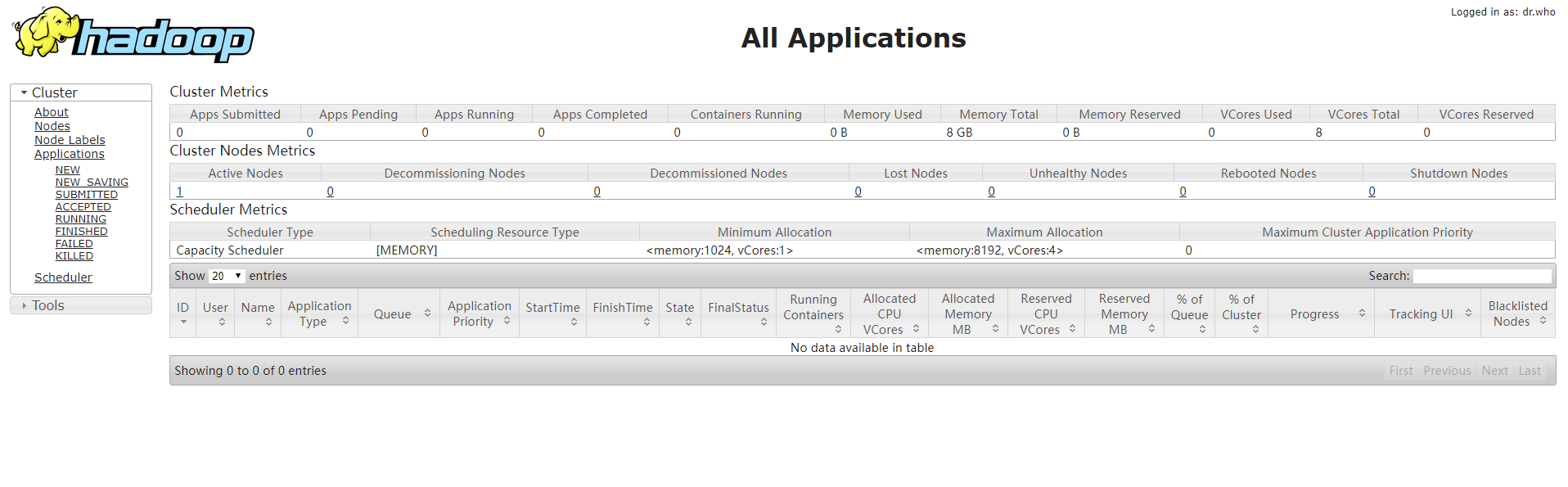

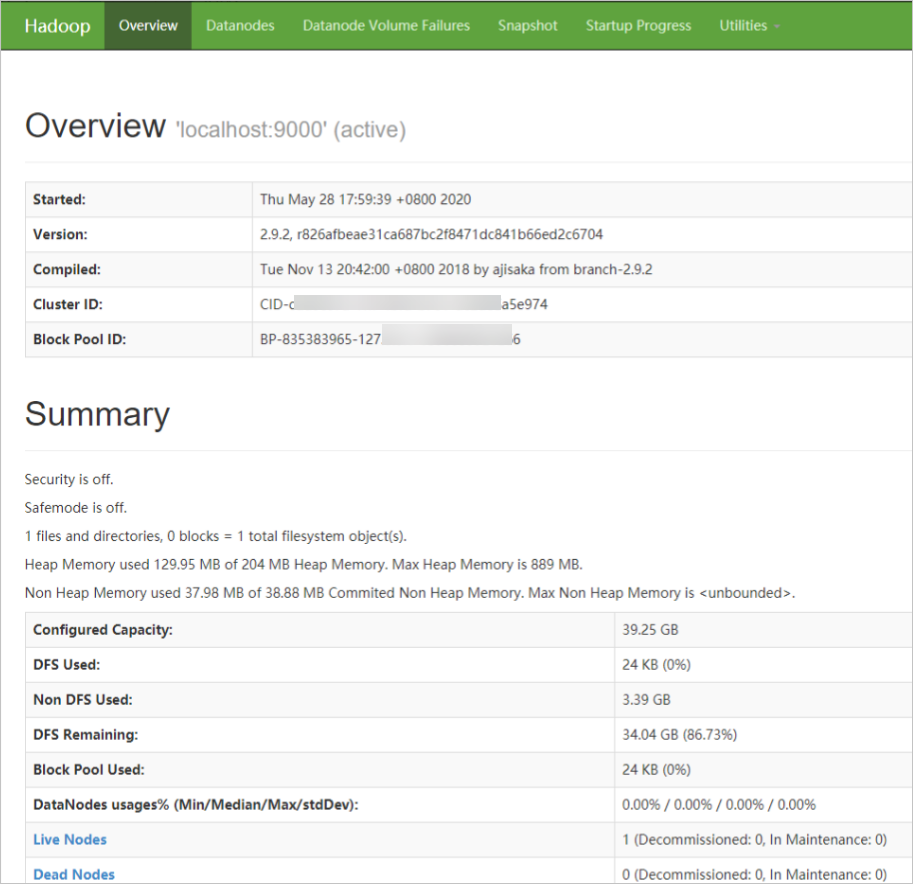

12047NodeManager4. Use a browser to access http://:8088 and http://:50070. If the Hadoop pseudo-distributed environment is built, the page shown in the following figure is displayed.

Note: Make sure that security group rules of the ECS instance allow inbound traffic to ports 8088 and 50070 used by Hadoop. Otherwise, the Hadoop pseudo-distributed environment cannot be accessed. For more information, see Add a security group rule.
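The jps check described above can be scripted. The following sketch reports any of the five daemons expected in this pseudo-distributed setup that are not running, and it returns a non-zero status if one is missing. It assumes jps output in its usual "<pid> <class name>" form, one process per line. The function name `check_hadoop_daemons` is hypothetical. It compares the whole class name rather than a substring, so SecondaryNameNode is not mistaken for NameNode.

```shell
#!/usr/bin/env bash
# Sketch: read jps-style lines ("<pid> <class name>") from stdin and check for
# the five daemons of a Hadoop 2.x pseudo-distributed setup.
# Returns 0 if all are present, 1 otherwise; missing names are printed to stderr.

check_hadoop_daemons() {
  local jps_out daemon missing=0
  jps_out="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the whole second field so "NameNode" does not match "SecondaryNameNode".
    if ! printf '%s\n' "$jps_out" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the ECS instance, after start-dfs.sh and start-yarn.sh:
#   jps | check_hadoop_daemons && echo "All Hadoop daemons are running."
```

If a daemon is reported missing, check its log file in /opt/hadoop/logs for the cause before you troubleshoot the security group rules.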
