The content of this article is from a speech entitled "The Way to Quickly Build Low-Cost and High-Elasticity Cloud Applications" by Gao Qingrui (Alibaba Cloud Elastic Computing Technology Expert). This article is divided into four parts:

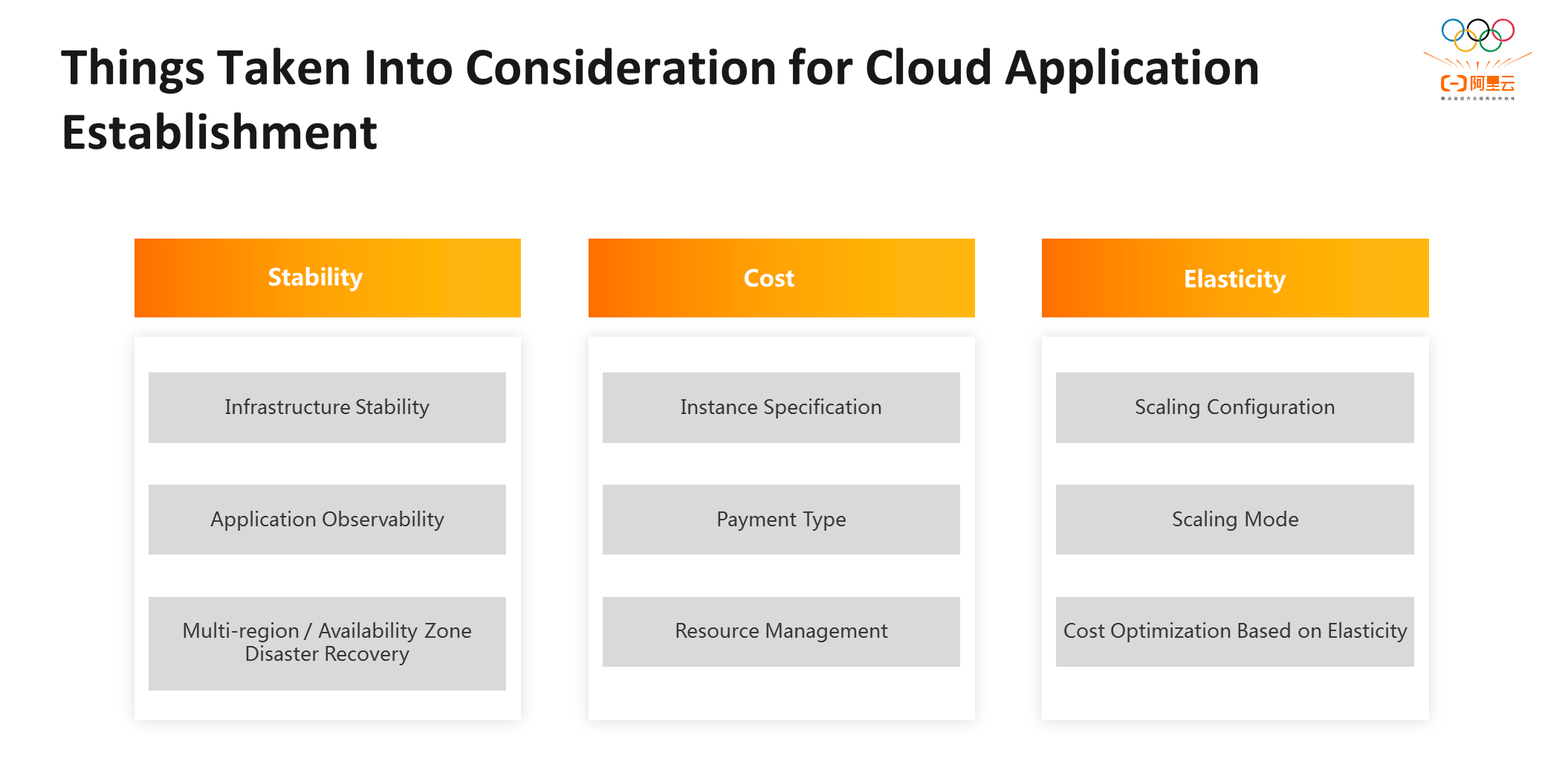

There are three main factors to consider when building an application on the cloud: stability, cost, and elasticity. Stability covers infrastructure stability, application stability, and application observability.

Users need to choose a cloud platform with stable infrastructure, because stable instance operations let them recover quickly from failures. For application observability, users can rely on the monitoring data and monitoring services of the cloud platform to keep their applications stable.

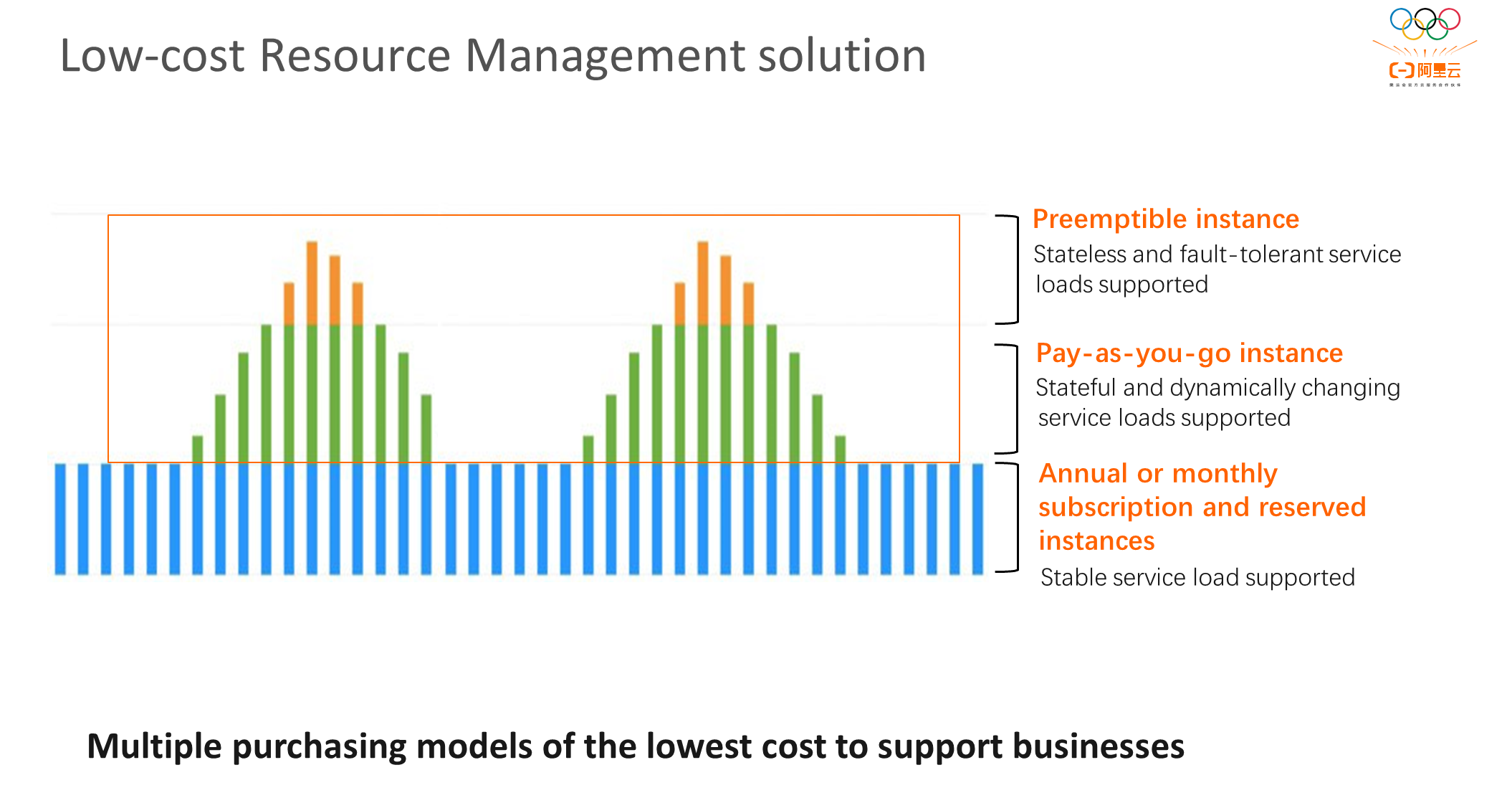

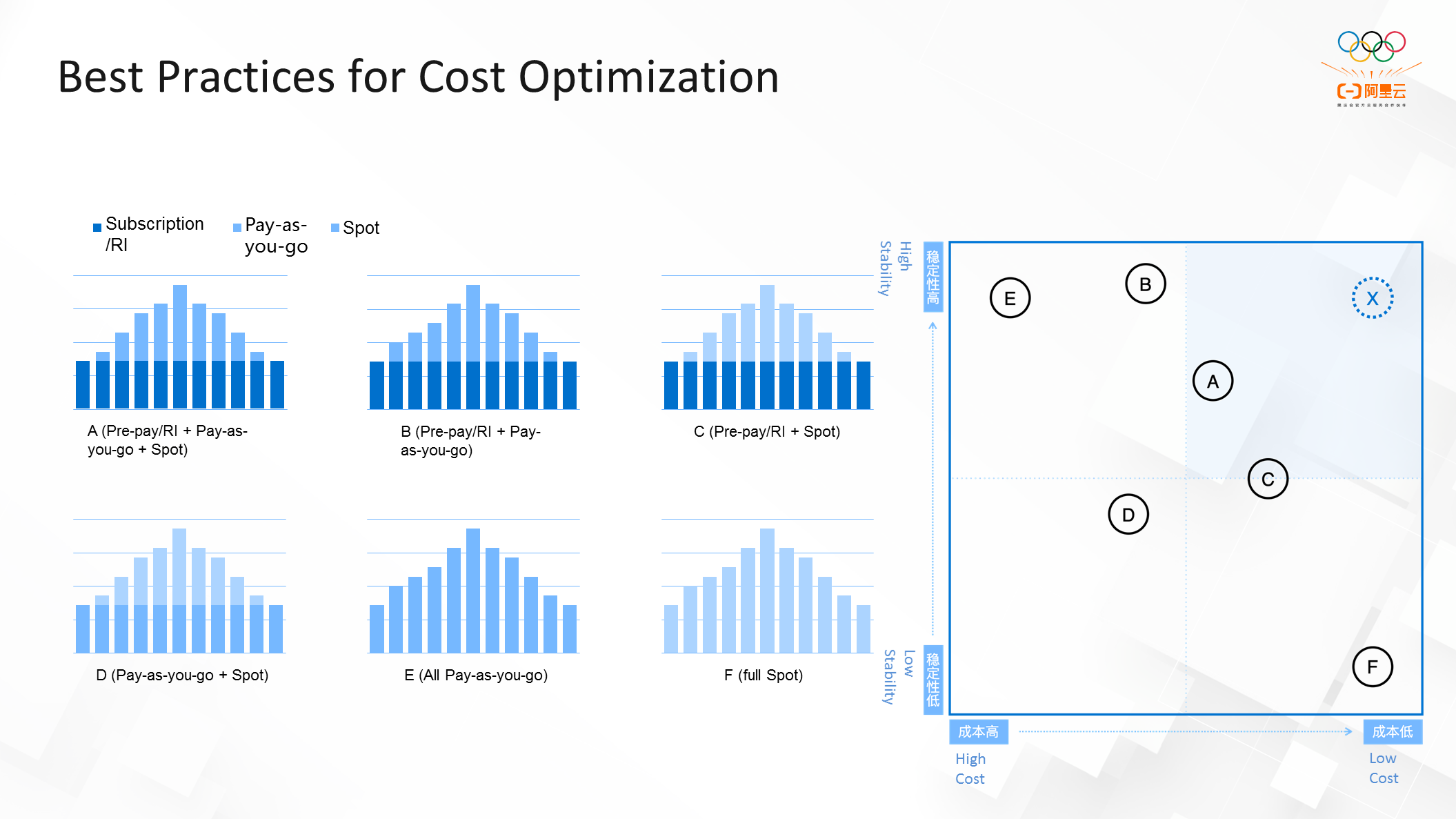

Cost mainly depends on instance specifications, payment types, and resource management. The payment type has a particularly large effect: the same instance can cost significantly different amounts under different payment types.

Stability and cost conflict with each other: to make services more stable, users add more resources and machines, which raises costs. Even then, stability drops when an application faces a sudden load spike. Elasticity effectively resolves this conflict between stability and cost.

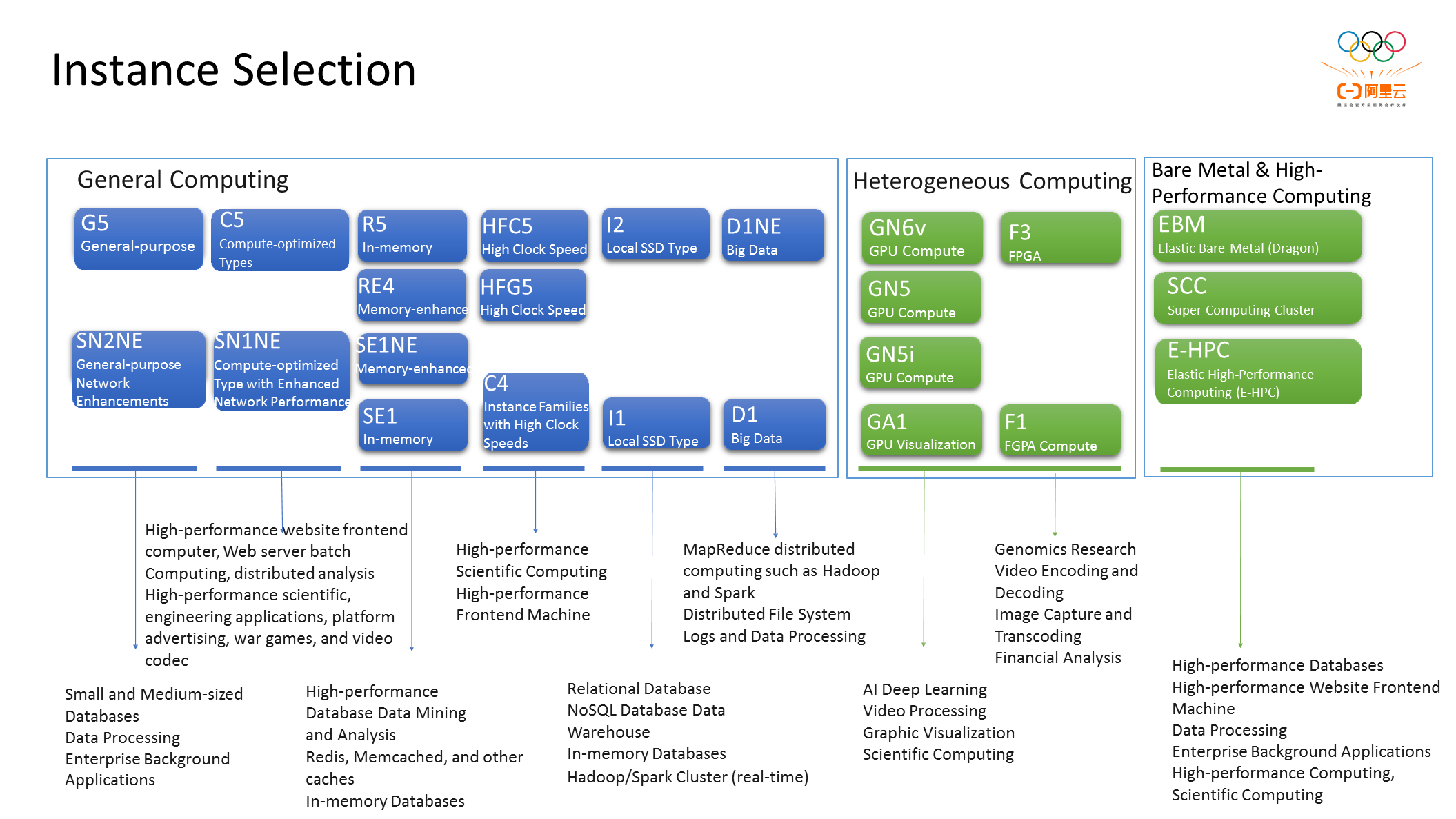

Alibaba Cloud provides three types of instances: general computing, heterogeneous computing, and bare metal and high-performance computing. Users need to select different instances based on specific application features.

If the application is an in-memory database, users can choose a memory-optimized instance to avoid mismatched and wasted resources. Bare metal and heterogeneous computing instances suit scenarios with higher resource requirements, such as machine learning.

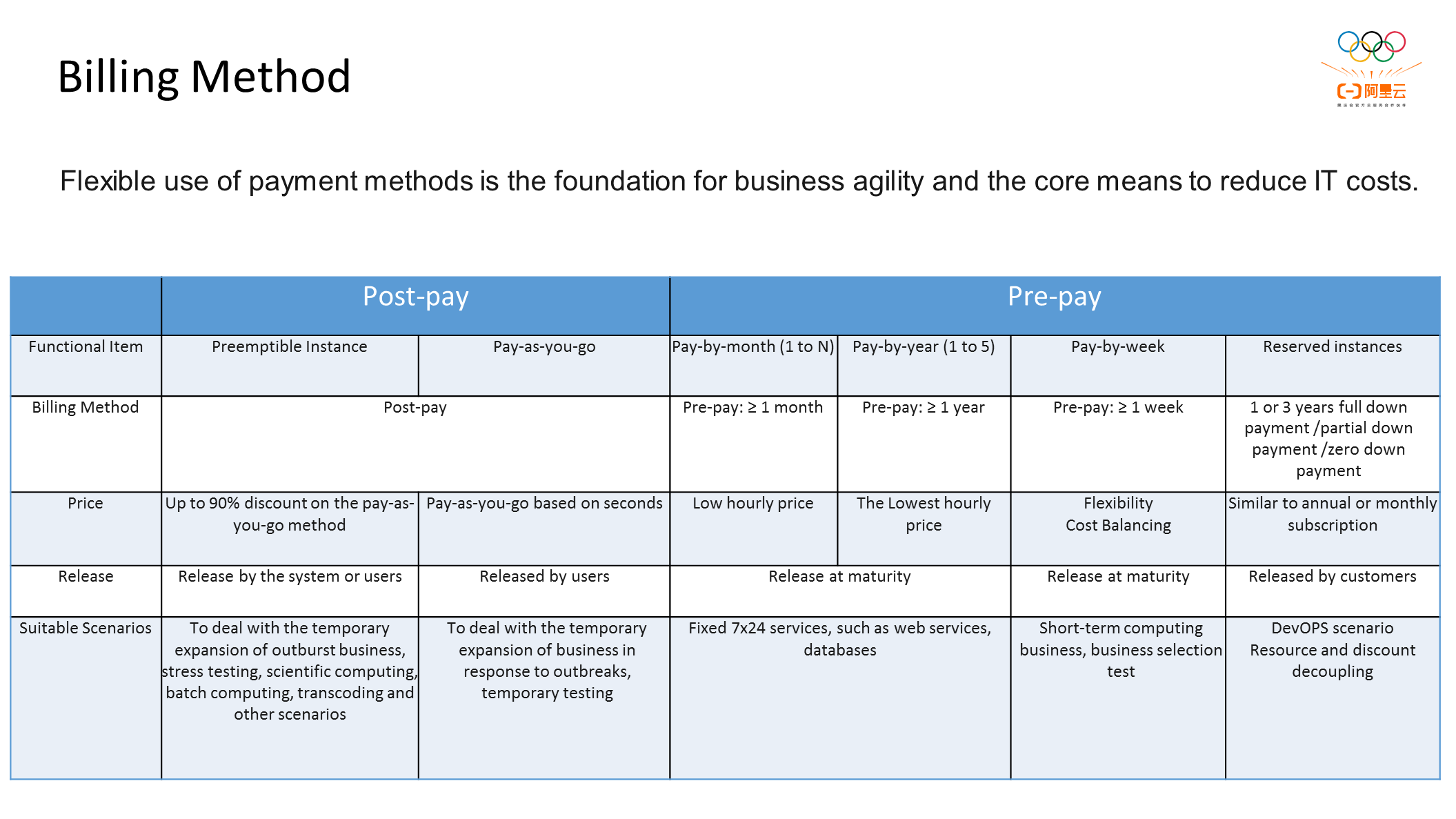

Alibaba Cloud offers two payment methods: post-pay and prepay.

As shown in the preceding figure, users cannot freely release instances that are prepaid by month or year (subscription), whereas they can release post-paid (pay-as-you-go) instances at any time. Reserved instances let users get a discount while still being billed in the pay-as-you-go manner.
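To make the cost difference between payment types concrete, the sketch below compares one instance billed by monthly subscription with the same instance billed pay-as-you-go. The prices and function names are hypothetical placeholders, not Alibaba Cloud list prices; the point is only the break-even arithmetic.

```python
# Hypothetical prices for one instance type (real prices vary by region and spec).
SUBSCRIPTION_PER_MONTH = 100.0   # prepaid monthly price (hypothetical)
PAY_AS_YOU_GO_PER_HOUR = 0.25    # post-paid hourly price (hypothetical)


def pay_as_you_go_cost(hours_used: float) -> float:
    """Post-paid: pay only for the hours the instance exists before it is released."""
    return hours_used * PAY_AS_YOU_GO_PER_HOUR


def cheaper_option(hours_used: float) -> str:
    """Return the cheaper billing method for the given monthly usage."""
    if pay_as_you_go_cost(hours_used) < SUBSCRIPTION_PER_MONTH:
        return "pay-as-you-go"
    return "subscription"


def break_even_hours() -> float:
    """Monthly usage above which the subscription becomes the cheaper option."""
    return SUBSCRIPTION_PER_MONTH / PAY_AS_YOU_GO_PER_HOUR


if __name__ == "__main__":
    print(break_even_hours())        # 400.0
    print(cheaper_option(8 * 30))    # runs 8 hours a day -> pay-as-you-go
    print(cheaper_option(24 * 30))   # always on -> subscription
```

With these made-up numbers, an instance that runs fewer than 400 hours a month is cheaper on pay-as-you-go, while an always-on instance is cheaper as a subscription.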

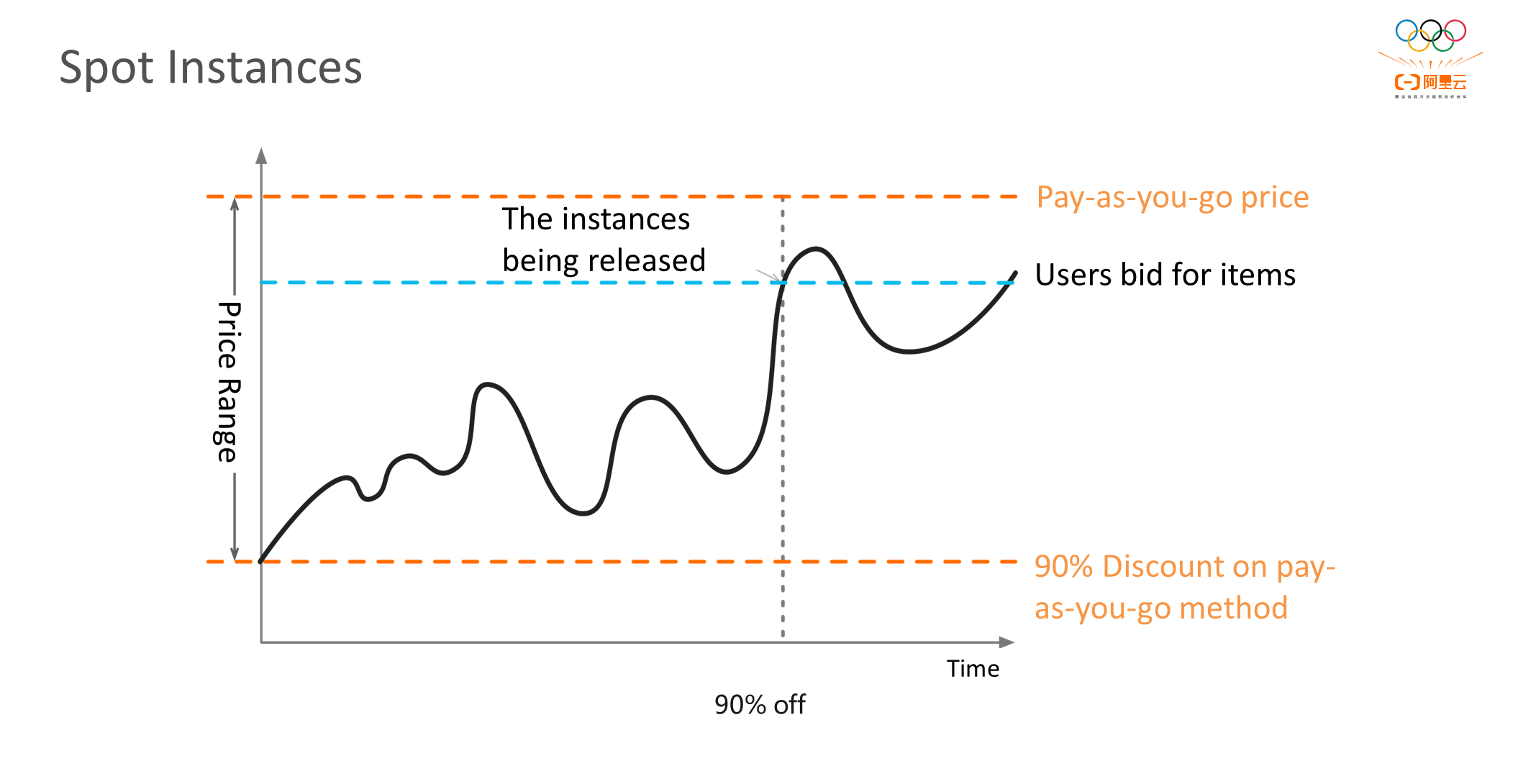

The price of a Spot Instance changes dynamically, and users bid a price based on these changes.

As long as the user's bid stays above the Spot Instance's market price, the instance keeps running. If the market price rises above the user's bid, the system releases the instance. Spot Instances are cheaper than pay-as-you-go instances, but because the system can reclaim them, their stability is relatively poor.
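The bid mechanism can be illustrated with a small simulation. This is a simplified model under stated assumptions: the market price is sampled once per hour, the instance is reclaimed in the first hour the price exceeds the bid, and each running hour is charged at the market price. Real Spot Instances may also be reclaimed for reasons this model ignores, and the function names are hypothetical.

```python
from typing import Sequence


def hours_until_reclaimed(bid: float, market_prices: Sequence[float]) -> int:
    """Count the consecutive hours a Spot Instance keeps running.

    Simplified model (assumption): the instance survives while the hourly
    market price is at or below the bid, and is reclaimed in the first hour
    the market price exceeds the bid.
    """
    for hour, price in enumerate(market_prices):
        if price > bid:
            return hour  # reclaimed at the start of this hour
    return len(market_prices)  # survived the whole observed window


def spot_cost(bid: float, market_prices: Sequence[float]) -> float:
    """Total charge, assuming each running hour is billed at the market price."""
    hours = hours_until_reclaimed(bid, market_prices)
    return sum(market_prices[:hours])


if __name__ == "__main__":
    prices = [0.05, 0.06, 0.09, 0.04]  # hypothetical hourly market prices
    print(hours_until_reclaimed(0.08, prices))  # 2: price 0.09 exceeds the bid
    print(hours_until_reclaimed(0.10, prices))  # 4: the bid always covers the price
```

A higher bid buys more uptime, but the instance is never guaranteed to survive, which is why Spot Instances are described as cheaper but less stable.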

The application load is mainly divided into three phases:

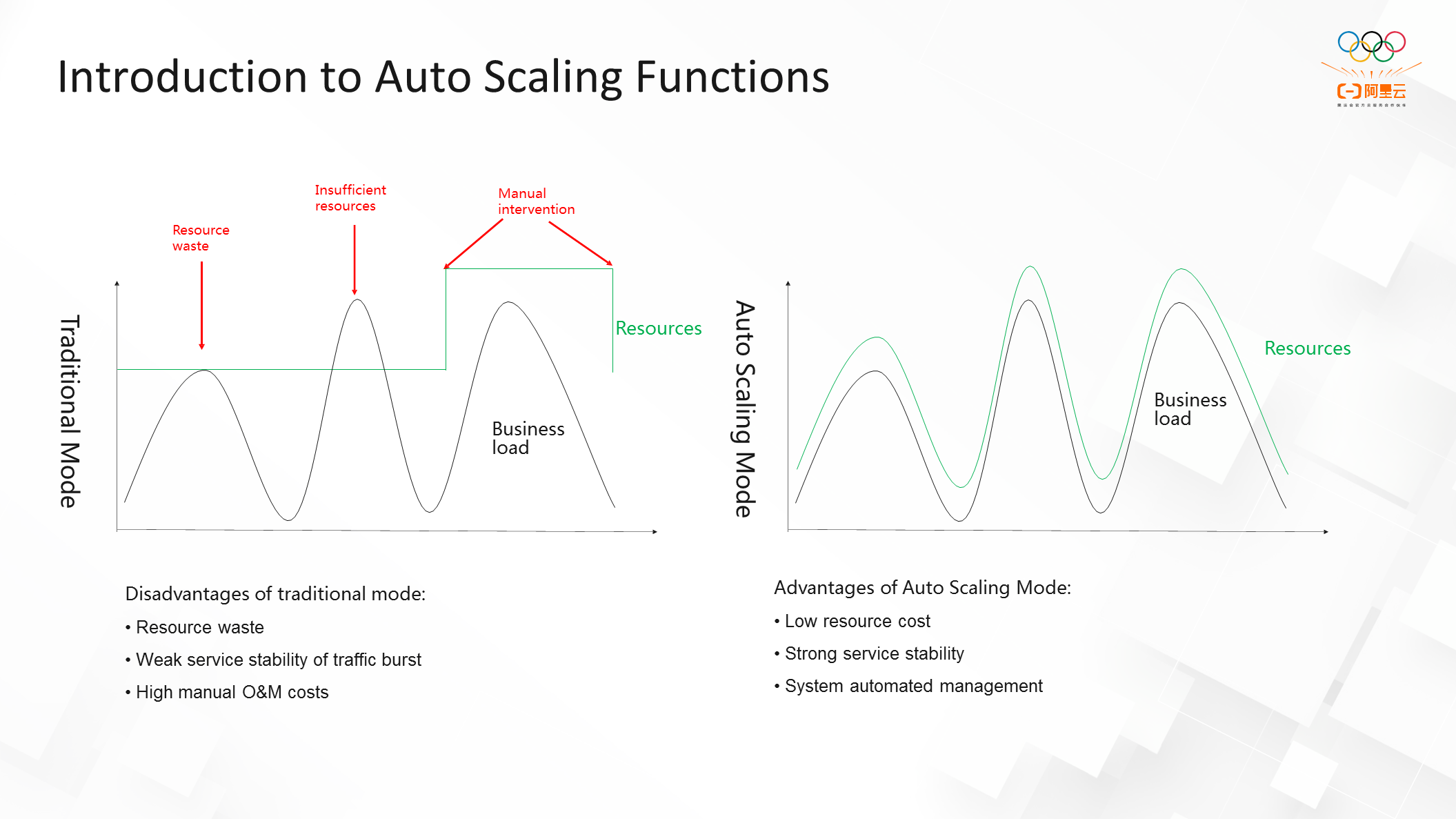

In the preceding figure, the left panel shows traditional O&M of business resources, and the right panel shows the basic behavior of auto scaling. In the left panel, the black line indicates the dynamically changing business load, and the green curve indicates the deployed resources. Resources are deployed through manual intervention, so users hold a fixed amount of resources for a long time.

When traffic reaches the first point, the business load grows to its peak, and the deployed resources are increased to cover the peak traffic. After that, the resource volume stays the same: no scale-in is performed during off-peak hours, so resources remain deployed for the maximum traffic. When the business load later surges beyond that level, the existing resources cannot meet the demand, and business stability suffers.

The traditional manual intervention mode has three disadvantages: resource waste, service instability, and high manual O&M costs.

In the auto scaling panel of the preceding figure, the black curve again indicates the business load, and the green curve indicates the resource volume. When the business load bursts, elastic scale-out increases the resources to keep the service stable. When the business load drops, elastic scale-in releases resources, reducing costs while keeping the service stable.

Compared with the traditional method, auto scaling has lower resource costs and no obvious resource waste. It also keeps services more stable, even when traffic increases sharply. In addition, automated management without manual intervention reduces labor costs.
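The comparison between fixed resources and auto scaling can be quantified with a toy model. The sketch below assumes a hypothetical per-instance capacity of 100 requests per second, a made-up hourly load curve, and an idealized auto scaler that matches the load every hour with no delay; it counts idle instance-hours (waste) and hours with too little capacity (instability).

```python
import math
from typing import Sequence, Tuple

# Hypothetical: one instance can serve 100 requests per second.
CAPACITY_PER_INSTANCE = 100


def instances_needed(demand: float) -> int:
    """Smallest number of instances that can serve `demand` requests per second."""
    return math.ceil(demand / CAPACITY_PER_INSTANCE)


def evaluate(load: Sequence[float], capacity: Sequence[int]) -> Tuple[int, int]:
    """Return (idle instance-hours, hours with insufficient capacity)."""
    idle_hours = 0
    short_hours = 0
    for demand, deployed in zip(load, capacity):
        needed = instances_needed(demand)
        if deployed >= needed:
            idle_hours += deployed - needed
        else:
            short_hours += 1
    return idle_hours, short_hours


# Hypothetical hourly load (requests per second); the last hour is an unexpected surge.
hourly_load = [120, 300, 650, 400, 150, 900]

# Traditional mode: a fixed fleet sized for the earlier peak of 650 req/s (7 instances).
fixed = [instances_needed(650)] * len(hourly_load)

# Idealized auto scaling: capacity follows the load every hour (no scaling delay).
auto = [instances_needed(d) for d in hourly_load]

print("fixed:", evaluate(hourly_load, fixed), "instance-hours:", sum(fixed))
print("auto: ", evaluate(hourly_load, auto), "instance-hours:", sum(auto))
```

In this toy model the fixed fleet wastes 17 instance-hours, still falls short during the surge, and uses 42 instance-hours in total, while the idealized auto scaler uses 27 instance-hours with no waste and no shortfall. Real auto scaling reacts with some delay, so its results would be somewhat worse than this ideal.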

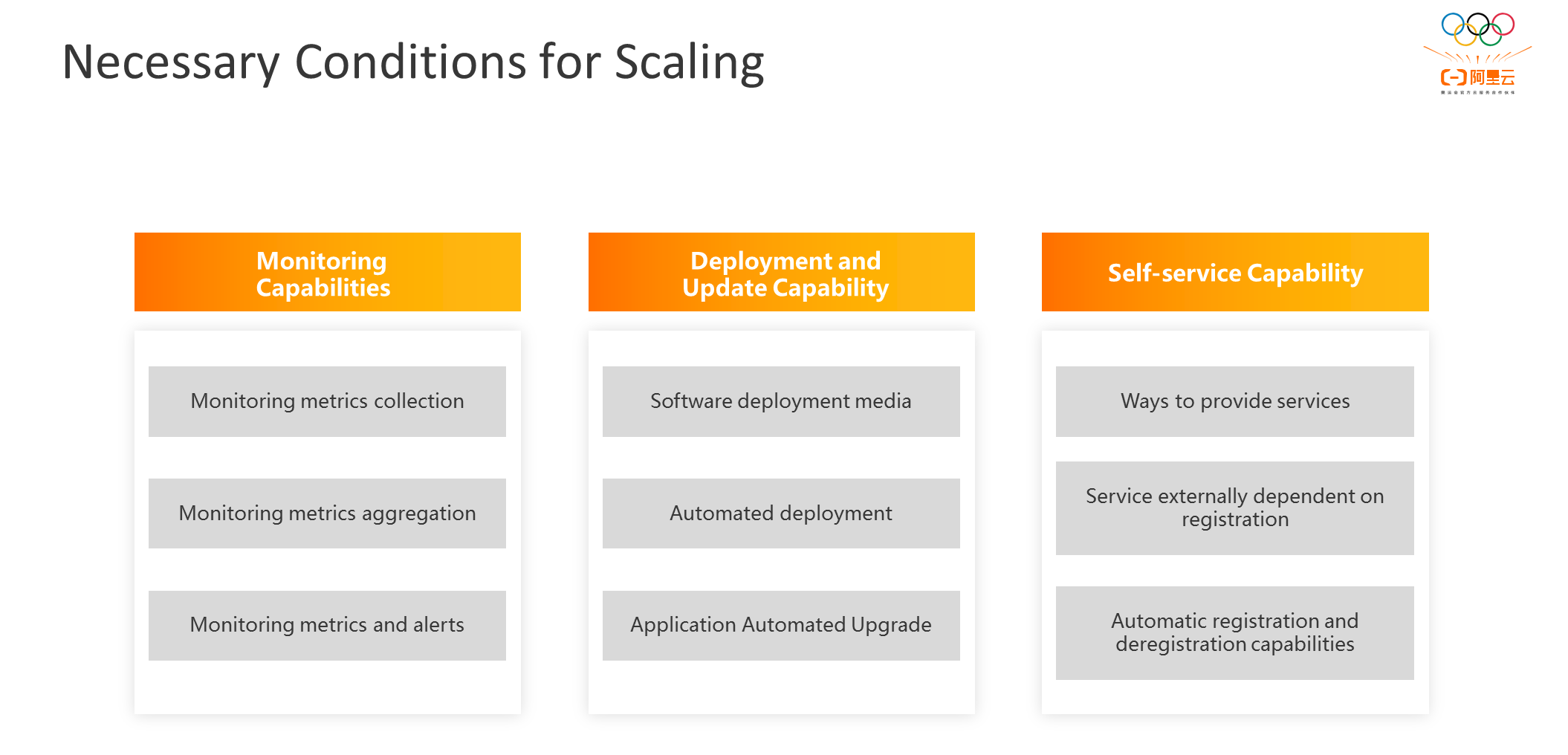

The preceding figure shows the prerequisites for auto scaling. Not every application can enable auto scaling right away: it needs three capabilities, namely monitoring, deployment and update, and self-service.

Monitoring capability includes metric collection, metric aggregation, and alerting. Collection must capture the business load, such as CPU or QPS metrics. Alerting means that an event is triggered when a condition is met; for example, when CPU usage exceeds 50%, an event is triggered to perform elastic scale-out.
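A CPU threshold alert that triggers scale-out can be sketched as follows. The 50% threshold comes from the example in the text; requiring several consecutive breaches before firing is an assumption added to avoid reacting to a single noisy sample, and `should_scale_out` is a hypothetical name, not a CloudMonitor API.

```python
from typing import Sequence


def should_scale_out(cpu_samples: Sequence[float], threshold: float = 50.0,
                     consecutive: int = 3) -> bool:
    """Return True when the last `consecutive` CPU samples all exceed `threshold`.

    `consecutive` is a hypothetical debounce parameter: requiring several
    breaches in a row prevents one short spike from triggering a scale-out.
    """
    if len(cpu_samples) < consecutive:
        return False  # not enough data to decide yet
    return all(sample > threshold for sample in cpu_samples[-consecutive:])


if __name__ == "__main__":
    print(should_scale_out([40, 55, 60, 70]))  # True: last three samples exceed 50%
    print(should_scale_out([55, 60, 45]))      # False: the latest sample is below 50%
```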

Metrics must be aggregated per application. For example, if the private cloud contains 100 machines and only 20 of them run the application, only those 20 machines should be included when aggregating the application's metrics, for instance by averaging their CPU usage.
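Per-application aggregation amounts to filtering by application before averaging. The sketch below builds a hypothetical inventory of 100 machines, 20 of which run an application called `order-service`; the inventory layout and field names are illustrative assumptions.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical inventory: 100 machines, the first 20 run "order-service".
machines: List[Dict] = [
    {"id": f"m-{i}", "app": "order-service" if i < 20 else "other", "cpu": 30.0 + i}
    for i in range(100)
]


def app_average_cpu(machines: List[Dict], app: str) -> float:
    """Average CPU usage over only the machines that belong to `app`."""
    cpus = [m["cpu"] for m in machines if m["app"] == app]
    if not cpus:
        raise ValueError(f"no machines found for application {app!r}")
    return mean(cpus)


if __name__ == "__main__":
    print(app_average_cpu(machines, "order-service"))  # 39.5 (20 machines only)
    print(mean(m["cpu"] for m in machines))            # 79.5 (all 100 machines, misleading)
```

Averaging over all 100 machines would report a load that has nothing to do with the application being scaled, which is why the aggregation must be scoped to the application.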

The deployment and update capability required during auto scaling involves three core metrics:

For self-service capability, users need to determine whether a newly scaled-out application instance can provide external services normally.

For example, when a scaled-out instance starts, it starts a web service, but that service is not yet attached to the corresponding load balancer. In this case, users need to evaluate whether their services are self-service capable: whether external access depends on registration, and whether the application instance can register and deregister itself automatically.
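Automatic registration and deregistration can be sketched as two lifecycle hooks. The `LoadBalancer` class here is a hypothetical in-memory stand-in, not an Alibaba Cloud SDK; a real implementation would call the load balancer's own API. The design choice shown is to register only after a health check passes, and to deregister before the instance is released.

```python
from typing import Callable, Set


class LoadBalancer:
    """Hypothetical in-memory stand-in for a load balancer's backend list."""

    def __init__(self) -> None:
        self.backends: Set[str] = set()

    def register(self, instance_id: str) -> None:
        self.backends.add(instance_id)

    def deregister(self, instance_id: str) -> None:
        self.backends.discard(instance_id)


def on_instance_started(lb: LoadBalancer, instance_id: str,
                        health_check: Callable[[], bool]) -> bool:
    """Attach a scaled-out instance only after its web service passes a health check."""
    if health_check():
        lb.register(instance_id)
        return True
    return False  # not ready: keep it out of rotation


def on_instance_stopping(lb: LoadBalancer, instance_id: str) -> None:
    """Detach the instance before scale-in releases it, so no traffic is routed to it."""
    lb.deregister(instance_id)


if __name__ == "__main__":
    lb = LoadBalancer()
    on_instance_started(lb, "i-1", health_check=lambda: True)
    on_instance_started(lb, "i-2", health_check=lambda: False)
    print(sorted(lb.backends))  # ['i-1']
```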

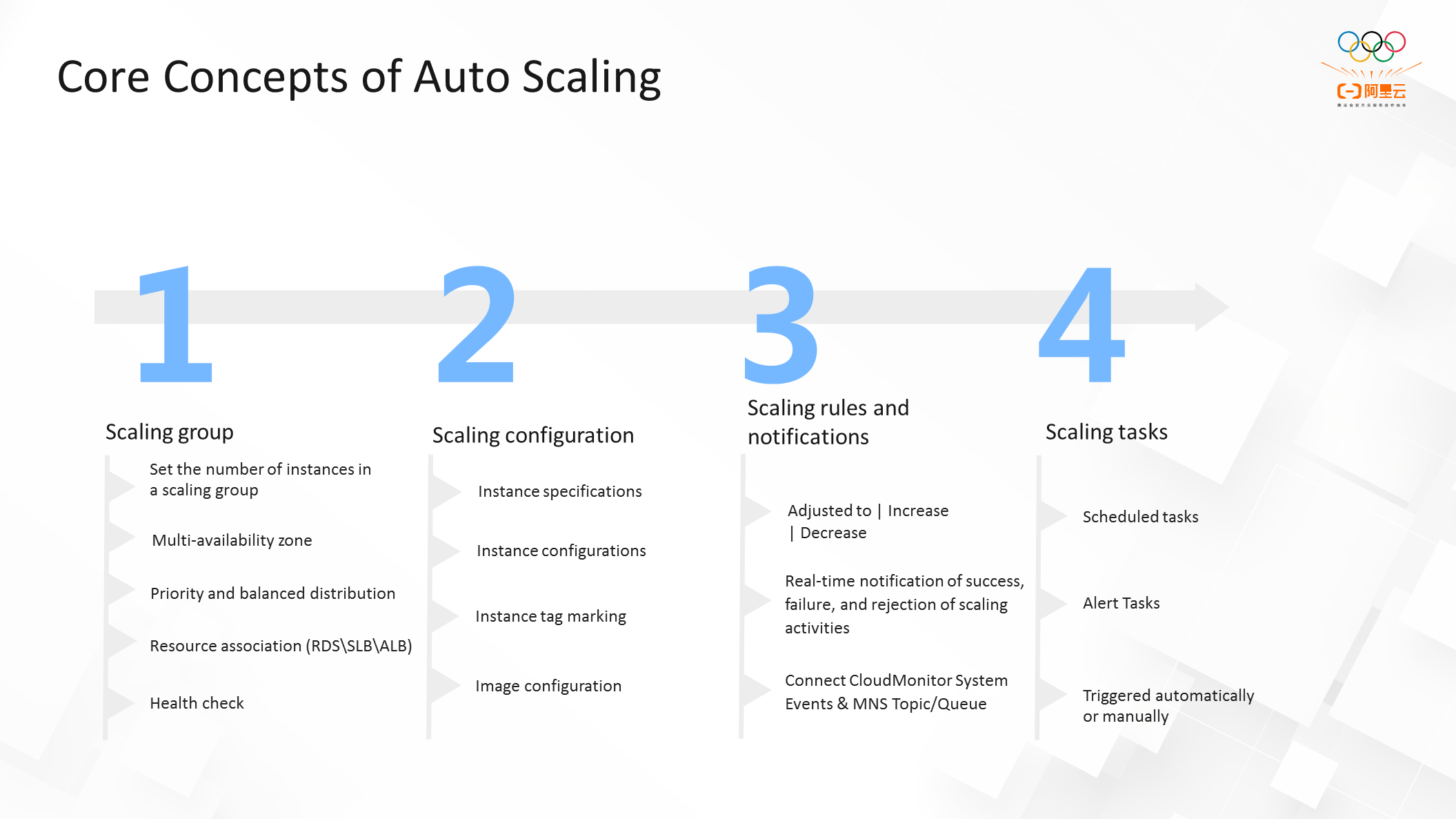

Alibaba Cloud Elastic Scaling Service (ESS) lets us group a batch of machines into a scaling group, and then collect metrics from and deploy instances within that group.

A scaling configuration covers two main aspects. For instance specifications, users configure the parameters used to create scaled-out instances, and they can add tags to the instances if they need to manage them.

For images, users who run containers can specify the image for their application. This ensures that scaled-out instances meet users' requirements and can provide services as soon as they start.

For scaling rules and notifications, Alibaba Cloud can notify users whether a triggered scaling activity succeeded or failed, and users can also receive these notifications in real time. Auto scaling is integrated with CloudMonitor system events and with MNS topics and queues.

There are three types of scaling tasks: scheduled tasks, alert tasks, and automatic/manual triggers.

Scheduled tasks suit workloads with obvious time patterns between peak and off-peak hours: users can scale out on a schedule before the peak period and scale in on a schedule after it.
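A scheduled task boils down to a time-of-day table of desired capacities. The schedule below (scale out at 08:00 before the morning peak, scale in at 22:00) and the function name are hypothetical; in ESS the equivalent is configured as scheduled tasks rather than written as code.

```python
from datetime import datetime, time
from typing import List, Tuple

# Hypothetical daily schedule, sorted by start time: (start time, desired instances).
SCHEDULE: List[Tuple[time, int]] = [
    (time(0, 0), 2),    # overnight off-peak
    (time(8, 0), 10),   # scale out before the daytime peak
    (time(22, 0), 4),   # scale in after the evening peak
]


def desired_capacity(now: datetime) -> int:
    """Return the capacity of the latest schedule entry that has already started today."""
    current = SCHEDULE[0][1]
    for start, capacity in SCHEDULE:
        if now.time() >= start:
            current = capacity
    return current


if __name__ == "__main__":
    print(desired_capacity(datetime(2024, 1, 5, 7, 59)))   # 2
    print(desired_capacity(datetime(2024, 1, 5, 13, 0)))   # 10
    print(desired_capacity(datetime(2024, 1, 5, 23, 30)))  # 4
```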

Alert tasks scale out dynamically based on CPU or QPS monitoring metrics, and automatic or manual triggers can be used to perform dynamic scale-in.

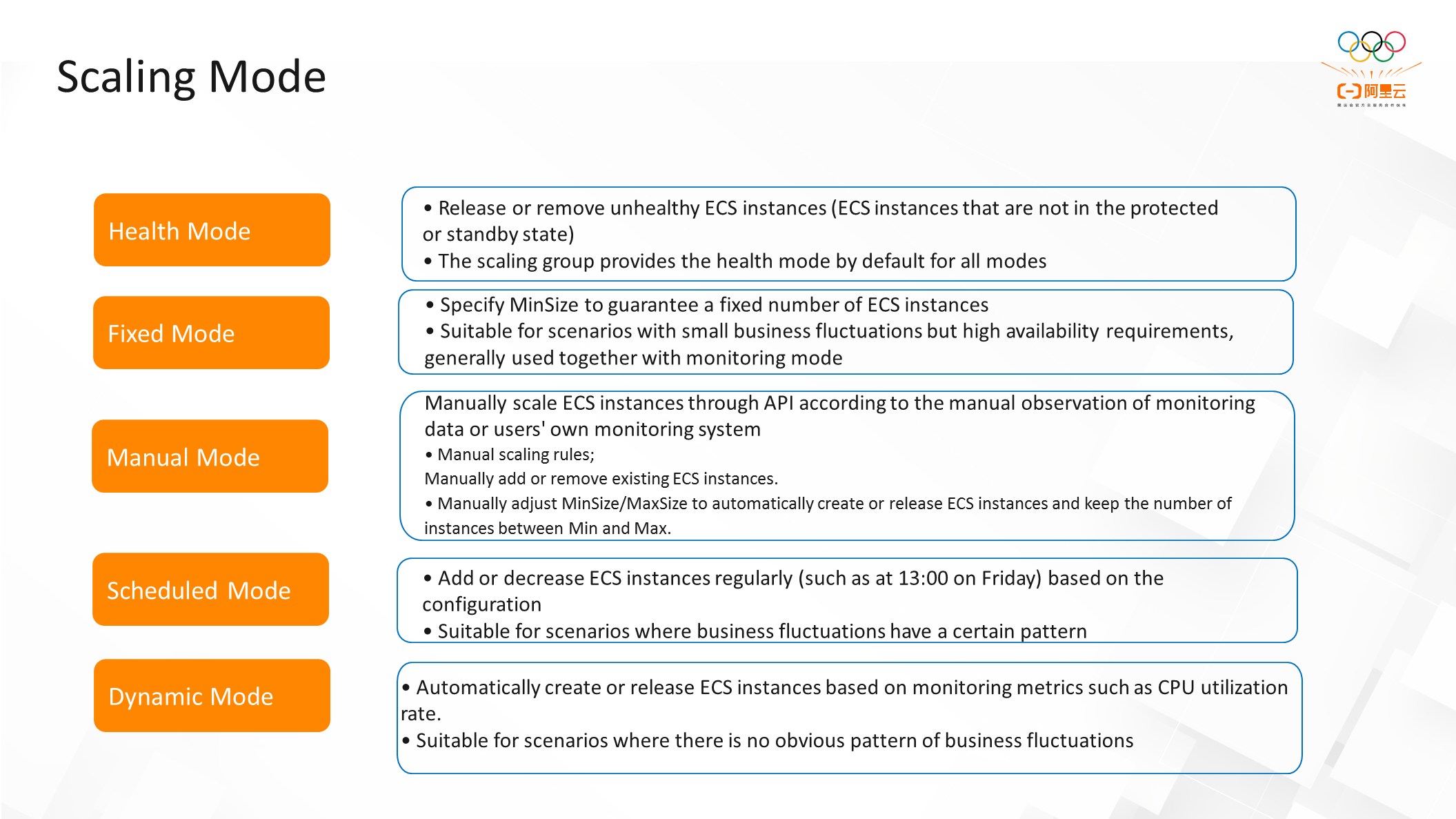

As shown in the preceding figure, in health mode, the scaling group releases or removes unhealthy ECS instances. The scaling group provides this capability by default in all modes.

In the fixed scaling mode, a fixed number of ECS instances are guaranteed by specifying MinSize, which is suitable for scenarios with little business fluctuation but high availability requirements, and is generally used together with monitoring mode.
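Health mode and fixed mode work together: unhealthy instances are removed, and MinSize guarantees that the group is topped back up. The sketch below models one maintenance pass; the function name, the `instances` mapping, and the replacement-ID generator are hypothetical.

```python
from itertools import count
from typing import Dict, Iterator, List


def health_pass(instances: Dict[str, bool], min_size: int,
                new_ids: Iterator[str]) -> List[str]:
    """One maintenance pass over a scaling group (hypothetical model).

    1. Health mode: drop every instance whose health status is False.
    2. Fixed mode: if fewer than `min_size` healthy instances remain,
       create replacements (IDs drawn from `new_ids`) until MinSize is met.
    """
    healthy = [iid for iid, ok in instances.items() if ok]
    shortfall = max(0, min_size - len(healthy))
    return healthy + [next(new_ids) for _ in range(shortfall)]


if __name__ == "__main__":
    new_ids = (f"i-new-{n}" for n in count(1))
    group = {"i-a": True, "i-b": False, "i-c": True}
    print(health_pass(group, min_size=3, new_ids=new_ids))  # ['i-a', 'i-c', 'i-new-1']
```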

In manual scaling mode, users execute scaling rules manually or through API calls, based on monitoring data they observe themselves or from their own monitoring system. After MinSize or MaxSize is manually adjusted, ECS instances are automatically created or released to keep the number of instances between MinSize and MaxSize.
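Keeping the instance count between MinSize and MaxSize is a clamp operation. The sketch below computes how many instances must be created (positive result) or released (negative result) after the bounds change; `reconcile` is a hypothetical helper, not an ESS API.

```python
def reconcile(current: int, min_size: int, max_size: int) -> int:
    """Return how many instances to create (positive) or release (negative)
    so that the group size falls within [min_size, max_size]."""
    if min_size > max_size:
        raise ValueError("min_size must not exceed max_size")
    target = min(max(current, min_size), max_size)
    return target - current


if __name__ == "__main__":
    print(reconcile(5, 2, 8))    # 0: already within bounds
    print(reconcile(1, 3, 8))    # 2: MinSize raised to 3, create two instances
    print(reconcile(10, 2, 6))   # -4: MaxSize lowered to 6, release four instances
```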

In scheduled scaling mode, ECS instances are added or removed based on the specified time (such as 13:00 on Friday). It is suitable for scenarios where business fluctuations have a certain pattern.

In dynamic scaling mode, ECS instances are automatically created or released based on the load reported by monitoring metrics. It suits scenarios where business fluctuations follow no obvious pattern. For example, when the CPU usage of a single instance exceeds 50%, instances can be added to keep the service stable and reduce the load on each instance.

When an event triggers a scale-in or scale-out, how much should the group scale?

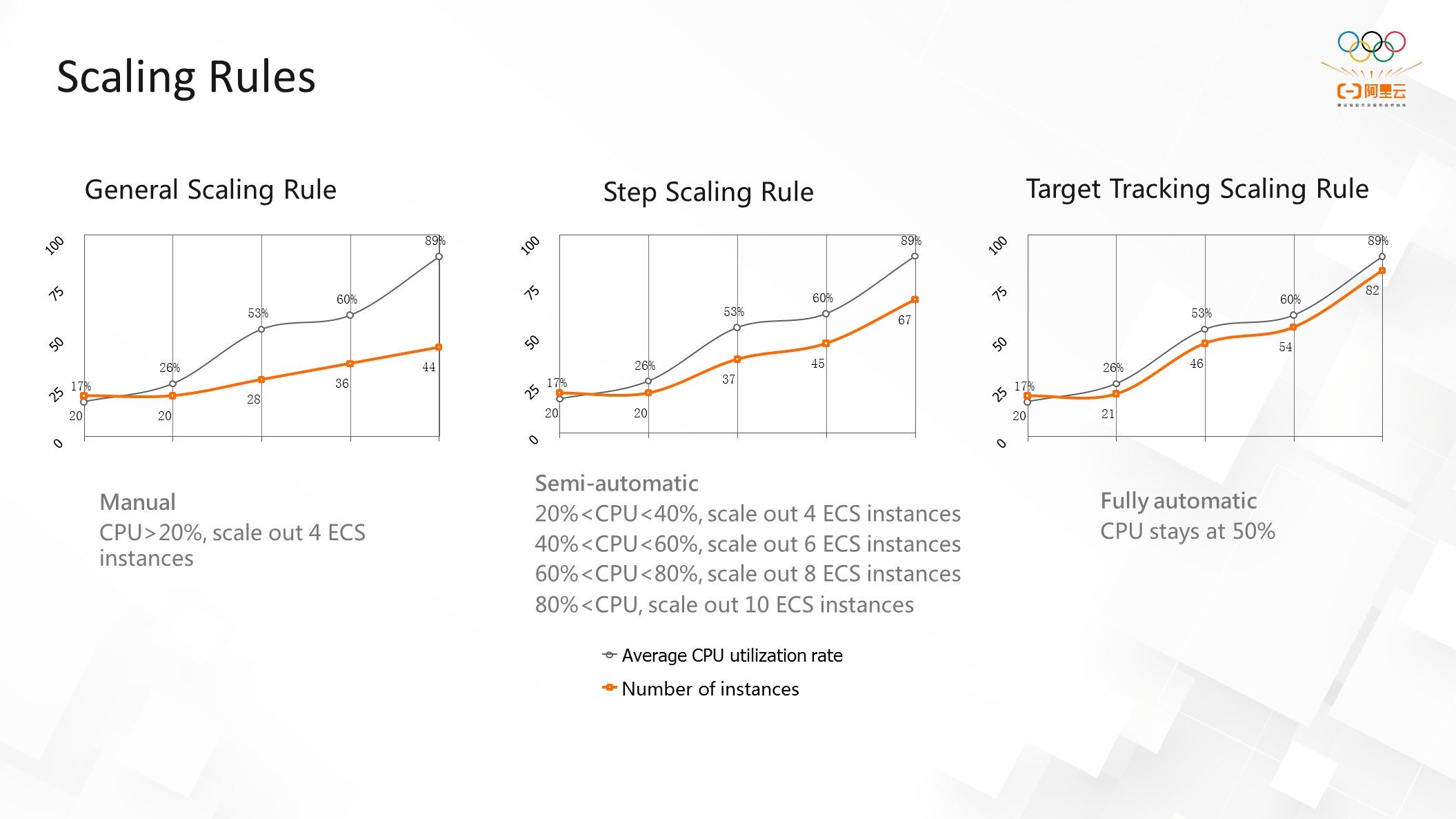

With the general scaling rule shown in the preceding figure, assume that four instances are added whenever CPU usage exceeds 20%. If four instances are not enough for the business load, the machine load keeps rising and the rule keeps firing, adding four instances at a time.
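The weakness of a fixed-increment rule shows up in a short simulation. Using the text's example (add four instances whenever average CPU exceeds 20%), the sketch assumes a constant total load spread evenly across instances, expressed in units of one instance's CPU percentage, and evaluates the rule once per period. The function name and load model are assumptions for illustration.

```python
from typing import List


def simulate_simple_rule(total_cpu: float, instances: int,
                         threshold: float = 20.0, step: int = 4,
                         max_rounds: int = 10) -> List[int]:
    """Apply "if average CPU > threshold, add `step` instances" once per period.

    `total_cpu` is the aggregate load in units of one instance's CPU percentage
    (300.0 equals three instances running at 100%); it is assumed constant and
    evenly spread. Returns the instance count after each period.
    """
    history = [instances]
    for _ in range(max_rounds):
        average = total_cpu / instances
        if average <= threshold:
            break
        instances += step
        history.append(instances)
    return history


if __name__ == "__main__":
    print(simulate_simple_rule(300.0, 2))  # [2, 6, 10, 14, 18]
```

It takes four evaluation periods to reach a stable size, and the service stays overloaded the whole time, which is the situation that step and target tracking rules are designed to improve.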

The step scaling rule defines the trigger threshold for scale-out, and different thresholds trigger different scale-out actions.
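A step rule maps ranges of the metric to different adjustments. The thresholds and adjustment sizes below are hypothetical examples, not ESS defaults; each step applies from its lower bound up to the next step's bound.

```python
from typing import List, Tuple

# Hypothetical steps, sorted ascending: (lower bound of average CPU %, instances to add).
STEPS: List[Tuple[float, int]] = [
    (50.0, 2),
    (70.0, 5),
    (90.0, 10),
]


def step_adjustment(cpu: float) -> int:
    """Return how many instances to add for the given average CPU usage."""
    add = 0
    for lower_bound, instances in STEPS:
        if cpu >= lower_bound:
            add = instances  # a higher threshold overrides the lower ones
    return add


if __name__ == "__main__":
    for cpu in (45.0, 60.0, 75.0, 95.0):
        print(cpu, step_adjustment(cpu))  # 0, 2, 5, 10 instances respectively
```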

A target tracking scaling rule keeps a metric at a target value, for example CPU usage at 50%. If the service load surges, the system calculates how many machines are needed at the next point (for example, more than 20 additional machines) and scales out all of them at once, so it can quickly cope with traffic bursts.
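The capacity calculation behind target tracking can be approximated with a proportional formula: if the total load stays constant and is spread evenly, the required instance count is the current count multiplied by the ratio of the current metric to the target. This formula is a common approximation used here for illustration; the talk does not describe ESS's exact calculation.

```python
import math


def target_tracking_capacity(current_instances: int, current_cpu: float,
                             target_cpu: float = 50.0) -> int:
    """Estimate the instance count that brings average CPU back to `target_cpu`.

    Assumes the total load stays the same and is spread evenly across
    instances, so capacity scales in proportion to current_cpu / target_cpu.
    """
    if current_instances <= 0 or target_cpu <= 0:
        raise ValueError("instances and target must be positive")
    return math.ceil(current_instances * current_cpu / target_cpu)


if __name__ == "__main__":
    desired = target_tracking_capacity(25, 92.0)
    print(desired, "instances, adding", desired - 25)  # 46 instances, adding 21
```

Unlike the simple rule, the whole adjustment (here 21 instances) is made in a single step, and the same formula also yields scale-in when the metric falls below the target.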

Next, let's learn how to select different scaling modes for different application scenarios.

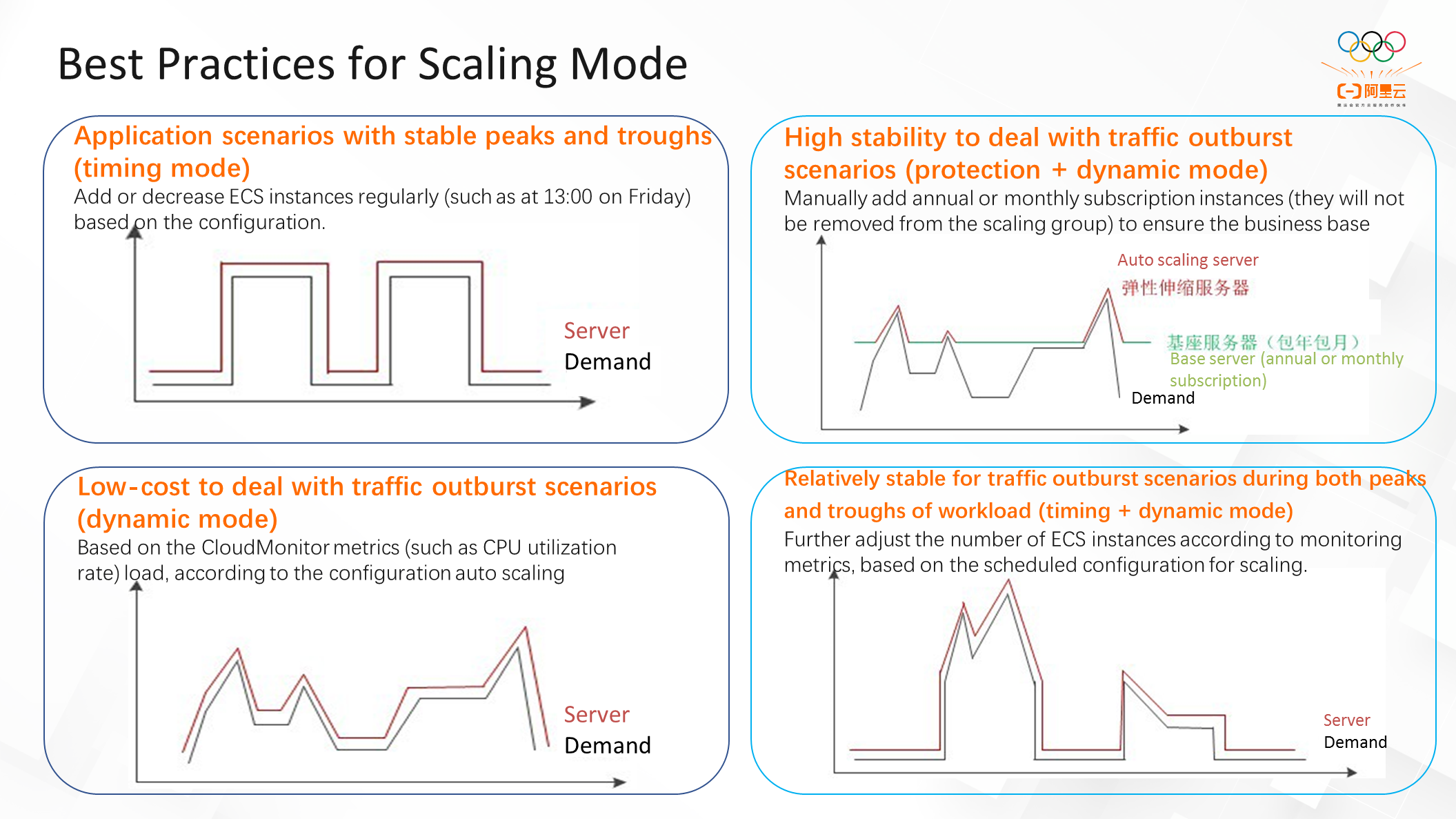

In scenarios where the peak and off-peak hours are relatively fixed, users can use the scheduled mode to increase or decrease ECS instances regularly based on their configurations (such as 13:00 on Friday).

For applications that require high stability and face traffic bursts, users can combine the protection and dynamic modes: manually add monthly or yearly subscription instances to cover the business baseline, and when the business load increases suddenly, let dynamic scaling add resources so that the service remains stable even under burst traffic.

If cost is the main concern, users can use pay-as-you-go instances with dynamic scaling policies, so that auto scaling follows the configured CloudMonitor metric load (such as CPU utilization).

For burst scenarios on top of relatively stable load peaks and troughs, users can combine scheduled and dynamic scaling, adjusting the number of ECS instances according to dynamic monitoring metrics in addition to the scheduled scaling configuration.

Once an application supports auto scaling, what is the best mix of instances for scaling out? As shown in the preceding figure, use monthly or yearly subscription instances as the base to ensure service stability. When the service peak comes, scale out with pay-as-you-go instances, which are more stable than Spot Instances. This combination keeps costs relatively low and stability high.
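The base-plus-peak strategy can be sketched as a simple planning calculation: cover the minimum hourly demand with subscription instances and every hour's excess with pay-as-you-go instances. The prices, the demand curve, and the function name are hypothetical; Spot Instances are left out because the talk recommends pay-as-you-go for the peak on stability grounds.

```python
from typing import Dict, Sequence

# Hypothetical hourly prices per instance (not real Alibaba Cloud prices).
SUBSCRIPTION_HOURLY_EQUIV = 0.125  # subscription price spread over each hour
PAY_AS_YOU_GO_HOURLY = 0.25


def plan_fleet(hourly_demand: Sequence[int]) -> Dict[str, float]:
    """Cover the minimum demand with subscription instances (the base) and the
    rest of each hour's demand with pay-as-you-go instances (the peak)."""
    base = min(hourly_demand)
    subscription_cost = base * len(hourly_demand) * SUBSCRIPTION_HOURLY_EQUIV
    payg_hours = sum(d - base for d in hourly_demand)
    return {
        "base_instances": base,
        "pay_as_you_go_instance_hours": payg_hours,
        "total_cost": subscription_cost + payg_hours * PAY_AS_YOU_GO_HOURLY,
    }


if __name__ == "__main__":
    demand = [4, 4, 6, 10, 8, 4]  # hypothetical instances needed per hour
    print(plan_fleet(demand))
    print("all pay-as-you-go:", sum(demand) * PAY_AS_YOU_GO_HOURLY)
```

With these made-up numbers, the mixed fleet costs 6.0 versus 9.0 for an all pay-as-you-go fleet, while the subscription base still guarantees the baseline capacity.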

Q1: How can we reduce costs when using three nodes to build a Kubernetes cluster?

A1: If you use only three nodes to build a Kubernetes cluster and need to cut costs further, choose nodes with lower-end specifications and prefer Spot Instances as the billing method.

Q2: How can we find the balance between high availability and low cost?

A2: Weigh application stability against cost based on your application's characteristics, and evaluate the trade-off against your business requirements.

Q3: How do we respond to business changes that result in elastic demand for resources?

A3: Configure some simple alert rules. We recommend using Alibaba Cloud elastic products, because building your own auto scaling system is very costly.
