By Jiachun

Multiple participants reach complete agreement on one thing: one conclusion for one thing.

The conclusion on which agreement has been reached cannot be overturned.

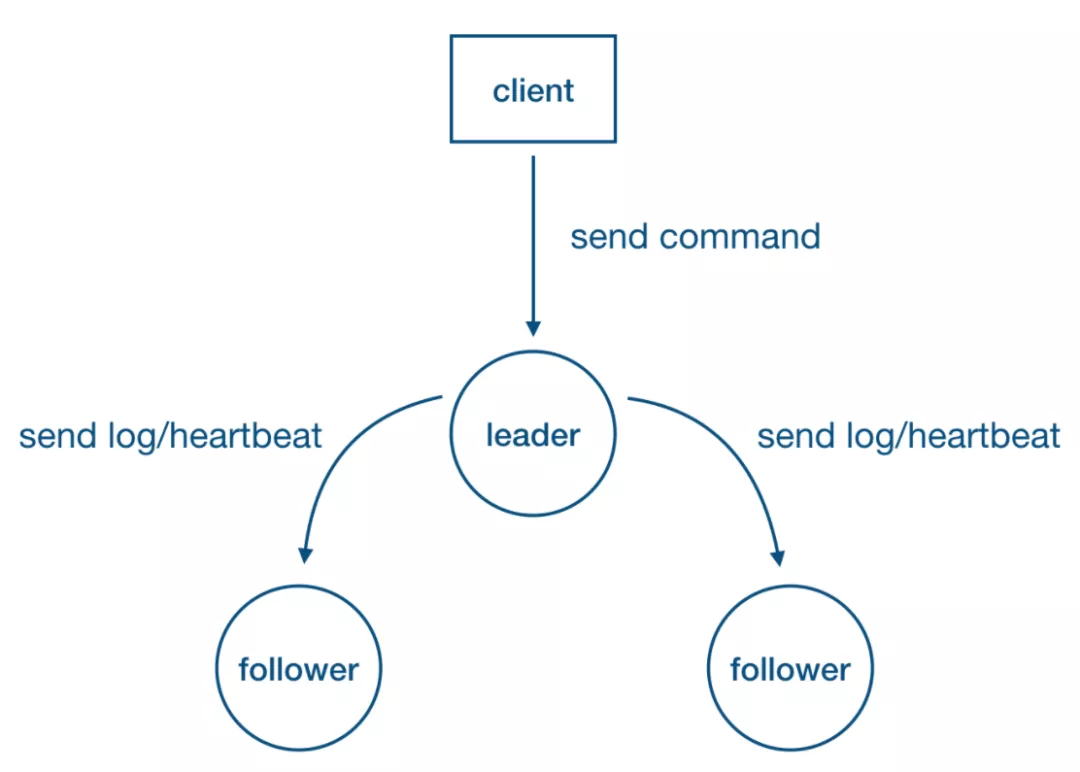

A leader must keep sending heartbeats all the time.

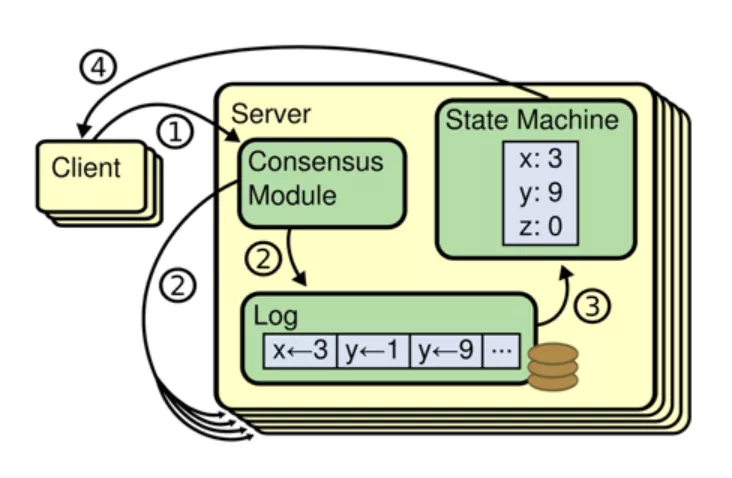

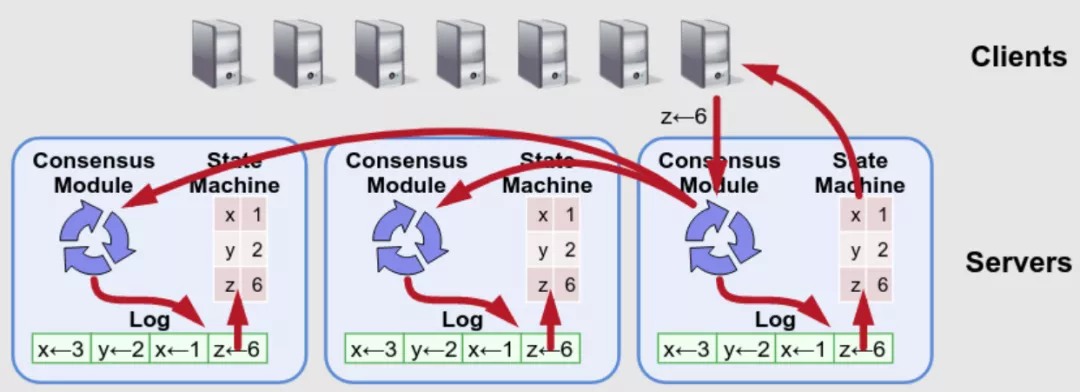

For an infinitely growing sequence a[1, 2, 3...], if the value of a[i] satisfies distributed consensus for every integer i, the system meets the requirements of a consensus state machine.

All real systems run continuously, so agreeing on a single value is not enough. To keep all replicas consistent, a real system usually converts each operation into a write-ahead log (WAL) entry. All replicas then keep their WAL consistent, and each replica performs the operations in the WAL in sequence. This ensures that the final state is consistent.
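As a minimal sketch of this idea, the following Java snippet models the WAL as an ordered list of operations. The `Put` and `KvReplica` names are hypothetical and exist only for illustration. Two replicas that apply the same log in the same order reach the same state, even when they apply it at different speeds.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical WAL entry: a single key-value write. */
final class Put {
    final String key;
    final String value;

    Put(String key, String value) {
        this.key = key;
        this.value = value;
    }
}

/** Hypothetical replica that applies WAL entries strictly in log order. */
final class KvReplica {
    private final Map<String, String> state = new HashMap<>();
    private int applied = 0; // number of WAL entries applied so far

    /** Applies the entries at positions [applied, upTo) of the log, in order. */
    void applyUpTo(List<Put> wal, int upTo) {
        while (applied < upTo) {
            Put op = wal.get(applied++);
            state.put(op.key, op.value);
        }
    }

    /** Returns a copy of the current state for comparison. */
    Map<String, String> snapshot() {
        return new HashMap<>(state);
    }
}
```

A replica that lags behind simply has a smaller `applied` position. Once it catches up to the same log position, its state matches the others.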

Timeout Driver: Heartbeat / Election Timeout

Random Timeout Value: Reduces the probability that votes are divided due to election conflict

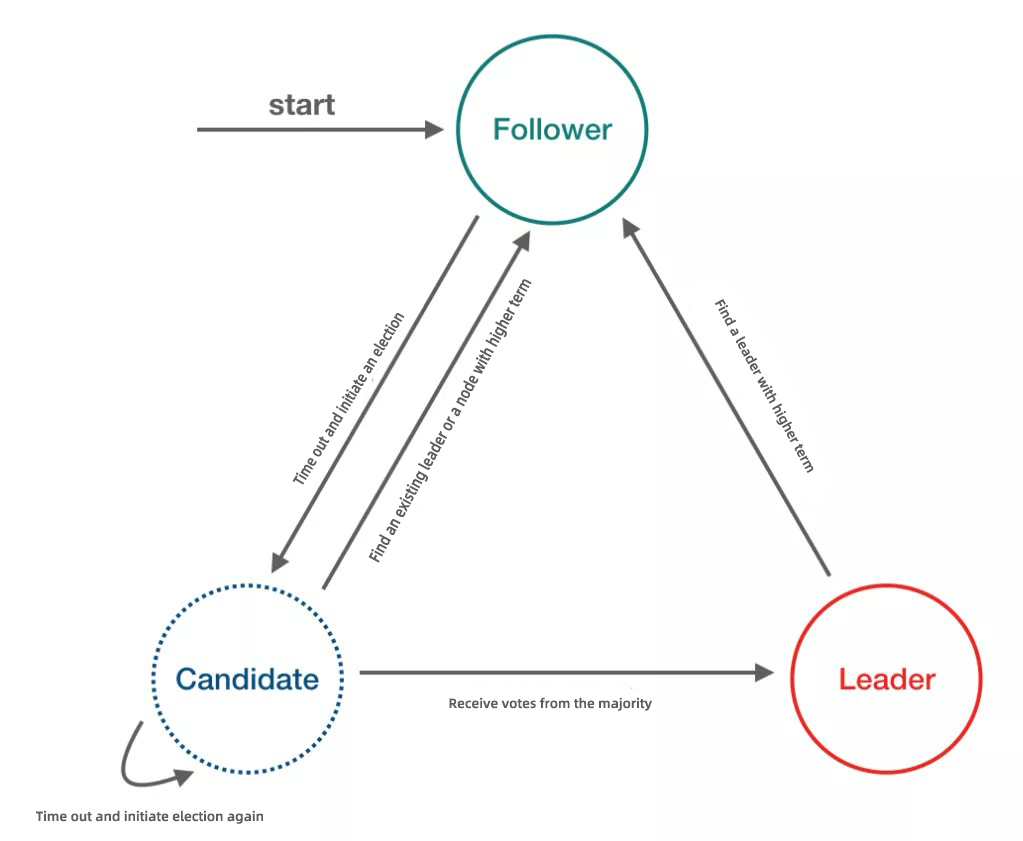

Election Process: Follower → Candidate (triggered by election timeout)

Election Action:

RequestVote RPC

Selection Principle of New Leader (Maximum Commitment Principle)

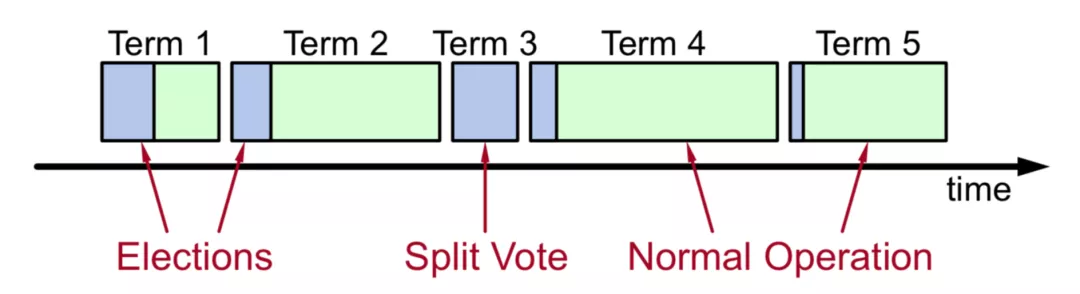

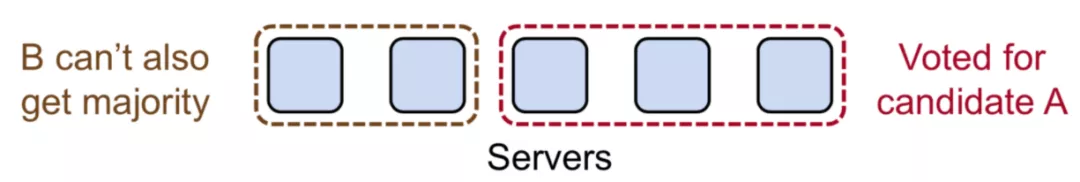

Safety: In one term, at most one leader can be elected. If no leader is elected, a new election starts in the next term.
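The reason at most one leader can win a term is that each node grants at most one vote per term and a candidate needs a strict majority. The sketch below illustrates only that rule. `Voter` and `Election` are hypothetical names, and the log comparison required by the Maximum Commitment Principle is deliberately left out.

```java
import java.util.List;

/** Hypothetical voter state; the log up-to-date check is intentionally omitted. */
final class Voter {
    private long currentTerm = 0;
    private String votedFor = null; // candidate voted for in currentTerm, if any

    /** Grants a vote at most once per term. */
    synchronized boolean requestVote(long term, String candidateId) {
        if (term < currentTerm) {
            return false; // stale candidate
        }
        if (term > currentTerm) {
            currentTerm = term; // a newer term resets the vote
            votedFor = null;
        }
        if (votedFor == null || votedFor.equals(candidateId)) {
            votedFor = candidateId;
            return true;
        }
        return false; // already voted for someone else in this term
    }
}

final class Election {
    /** Returns true if the candidate collects votes from a strict majority. */
    static boolean wins(long term, String candidateId, List<Voter> voters) {
        int granted = 0;
        for (Voter voter : voters) {
            if (voter.requestVote(term, candidateId)) {
                granted++;
            }
        }
        return granted > voters.size() / 2;
    }
}
```

Because two majorities of the same cluster always overlap in at least one node, and that node votes only once per term, two candidates cannot both win the same term.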

Several time parameters affecting the election success rate in Raft:

Time for Triggering a Random Leader Election: Random(ET, 2ET), where ET is the election timeout
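A randomized timeout in the range Random(ET, 2ET) could be computed as in the sketch below. `ElectionTimer` is a hypothetical helper, not a SOFAJRaft class, and treating the upper bound as exclusive is an assumption.

```java
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical helper that picks a randomized election timeout in [ET, 2ET). */
final class ElectionTimer {
    private final long electionTimeoutMs; // ET

    ElectionTimer(long electionTimeoutMs) {
        if (electionTimeoutMs <= 0) {
            throw new IllegalArgumentException("election timeout must be positive");
        }
        this.electionTimeoutMs = electionTimeoutMs;
    }

    /** Returns a fresh random timeout; each node draws its own value. */
    long nextTimeoutMs() {
        return ThreadLocalRandom.current().nextLong(electionTimeoutMs, 2 * electionTimeoutMs);
    }
}
```

Since each follower draws its own value, followers rarely time out at the same instant, which lowers the chance of a split vote.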

Raft Log Format

(TermId, LogIndex, LogValue)

(TermId and LogIndex) can determine a unique log.

Key Points of Log Replication

Validity:

logIndex must be the same across different nodes.

Log Validity Check in Followers

(prevTermId or prevLogIndex) of the previous log.

Log Recovery in Followers

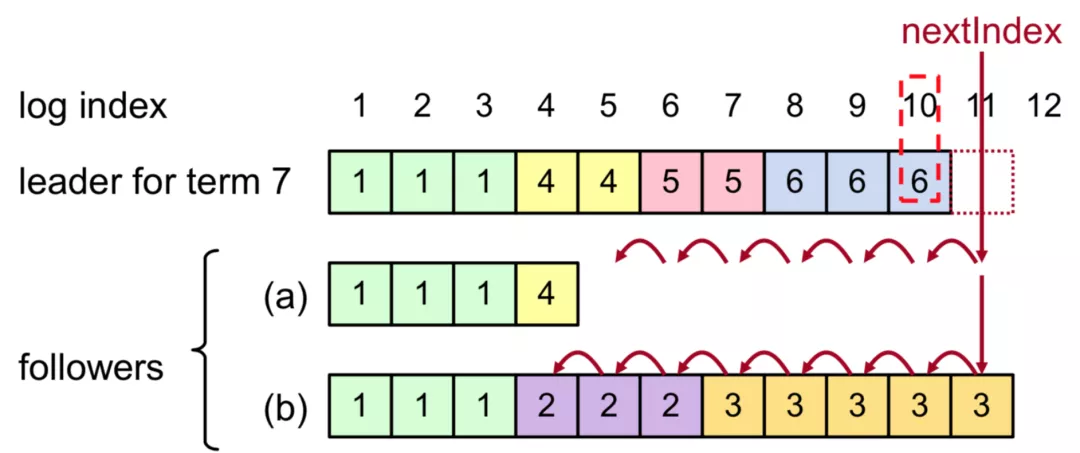

The leader decrements nextIndex and resends AppendEntries until the follower's logs are consistent with those of the leader log.
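The validity check and the recovery loop can be sketched together. This is a simplified in-memory model under stated assumptions: `LogEntry`, `FollowerLog`, and `LeaderReplicator` are hypothetical names, log indexes start at 1, and network calls are replaced by direct method calls.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical log entry identified by (term, index). */
final class LogEntry {
    final long term;
    final long index;
    final String value;

    LogEntry(long term, long index, String value) {
        this.term = term;
        this.index = index;
        this.value = value;
    }
}

/** Hypothetical follower log; the entry with index i is stored at position i - 1. */
final class FollowerLog {
    private final List<LogEntry> entries = new ArrayList<>();

    /** Rejects the request unless the follower holds the entry (prevTerm, prevLogIndex). */
    boolean appendEntries(long prevLogIndex, long prevTerm, List<LogEntry> newEntries) {
        if (prevLogIndex > 0) {
            if (prevLogIndex > entries.size()) {
                return false; // previous entry is missing
            }
            if (entries.get((int) prevLogIndex - 1).term != prevTerm) {
                return false; // previous entry conflicts
            }
        }
        for (LogEntry e : newEntries) {
            int pos = (int) e.index - 1;
            if (pos < entries.size()) {
                if (entries.get(pos).term == e.term) {
                    continue; // already present
                }
                entries.subList(pos, entries.size()).clear(); // drop the conflicting suffix
            }
            entries.add(e);
        }
        return true;
    }

    long lastIndex() {
        return entries.size();
    }

    long termAt(long index) {
        return entries.get((int) index - 1).term;
    }
}

final class LeaderReplicator {
    /** Backs nextIndex off one step at a time until the follower accepts; returns that nextIndex. */
    static long recover(List<LogEntry> leaderLog, FollowerLog follower) {
        long nextIndex = leaderLog.size() + 1;
        while (true) {
            long prevLogIndex = nextIndex - 1;
            long prevTerm = prevLogIndex == 0 ? 0 : leaderLog.get((int) prevLogIndex - 1).term;
            List<LogEntry> toSend = leaderLog.subList((int) prevLogIndex, leaderLog.size());
            if (follower.appendEntries(prevLogIndex, prevTerm, toSend)) {
                return nextIndex;
            }
            nextIndex--; // a request with prevLogIndex == 0 is always accepted, so this terminates
        }
    }
}
```

Stepping back one index per round trip is the simplest strategy. A real implementation may skip back faster, for example by a whole term at a time.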

CommitIndex (TermId and LogIndex)

commitIndex is the latest log location that has reached a consensus among the majority and can be applied to the state machine.

commitIndex received from the leader. All the logs smaller than or equal to the commitIndex can be applied to the state machine.

CommitIndex Promotion

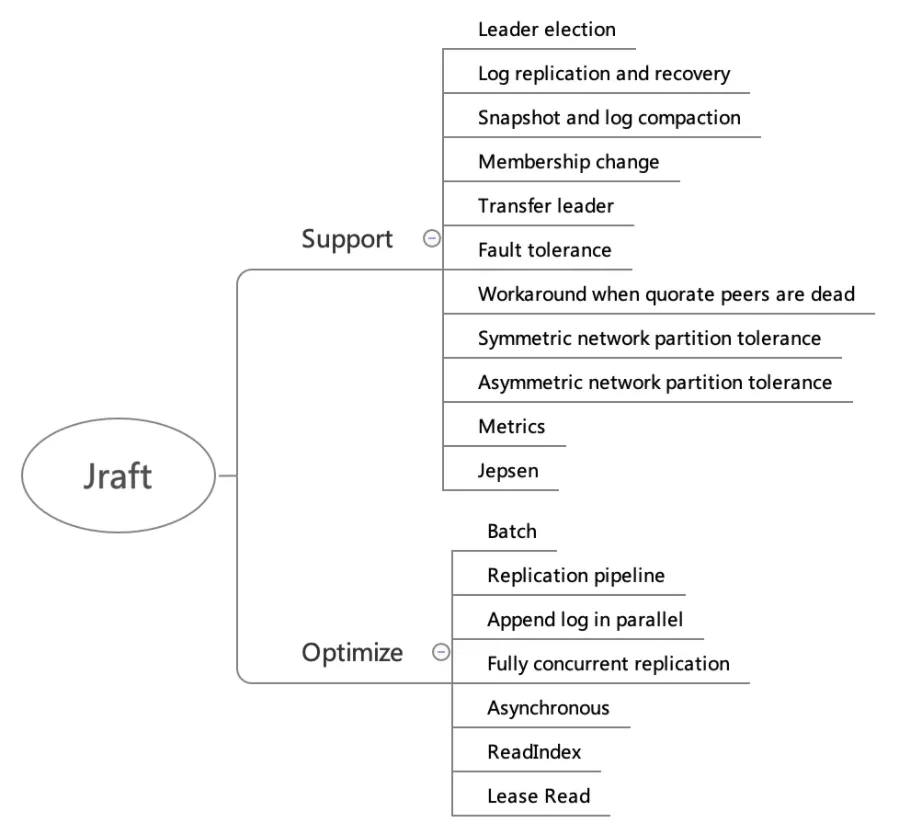
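One common way for a leader to find the highest index replicated on a majority is to sort the per-node replication progress and pick the majority position. The sketch below assumes a hypothetical `matchIndex` array that includes the leader itself. It leaves out the additional Raft rule that only entries from the leader's current term are committed by counting replicas.

```java
import java.util.Arrays;

/** Hypothetical helper that derives commitIndex from replication progress. */
final class CommitIndexCalculator {
    /**
     * @param matchIndex highest log index known to be stored on each node, leader included
     * @return the highest index stored on a strict majority of nodes
     */
    static long majorityMatchIndex(long[] matchIndex) {
        long[] sorted = matchIndex.clone();
        Arrays.sort(sorted); // ascending
        int majority = sorted.length / 2 + 1;
        // The element at this position is matched or exceeded by `majority` nodes.
        return sorted[sorted.length - majority];
    }
}
```

For a three-node cluster with progress 5, 3, and 4, the result is 4: two of the three nodes hold every entry up to index 4.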

commitIndex.

AppendEntries and update the local commitIndex. Finally, all the logs smaller than or equal to the commitIndex are applied to the state machine.

(currentTerm, logEntries[], prevTerm, prevLogIndex, commitTerm, commitLogIndex)

currentTerm and logEntries[]: This is the log information. For efficiency, there are usually multiple logs.

prevTerm and prevLogIndex: Log validity check

commitTerm and commitLogIndex: The latest log commitment point (commitIndex)

SOFAJRaft is a Raft implementation library written purely in Java. All Raft functions are rewritten in Java, with some improvements and optimizations.

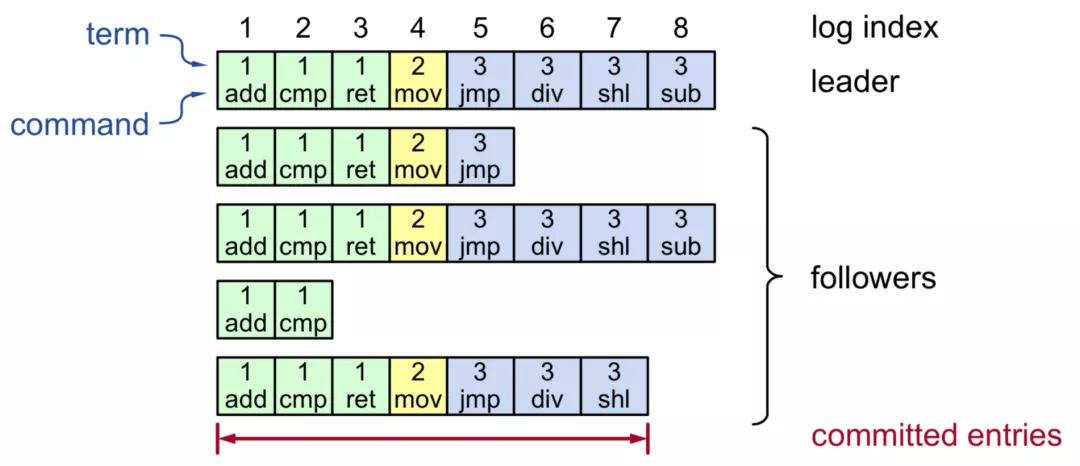

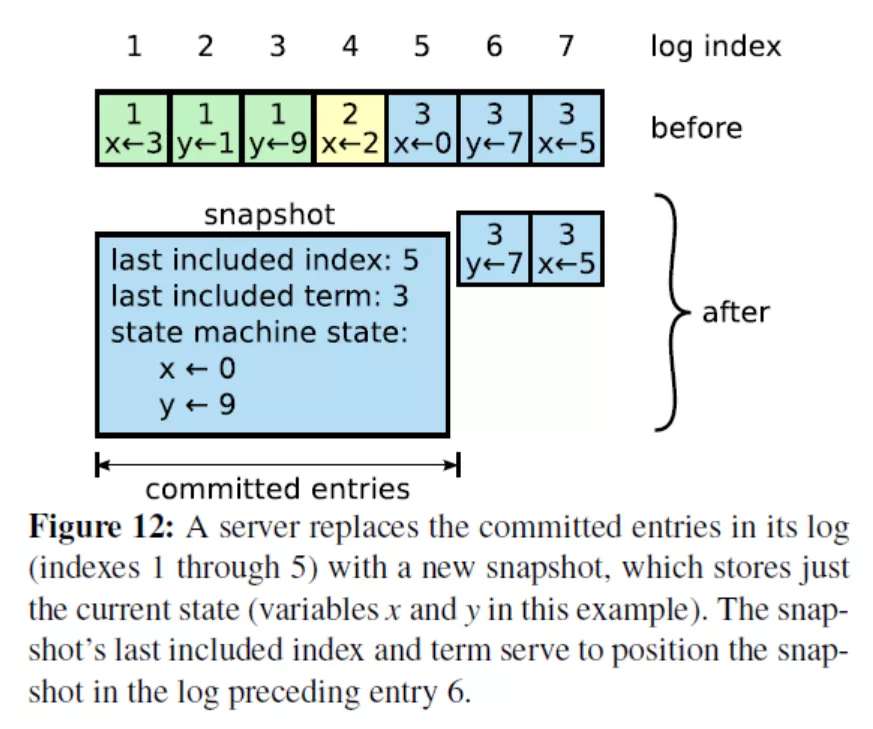

Log Replication and Recovery: Log recovery ensures that the committed data is not lost. Log recovery includes two aspects:

InstallSnapshot.

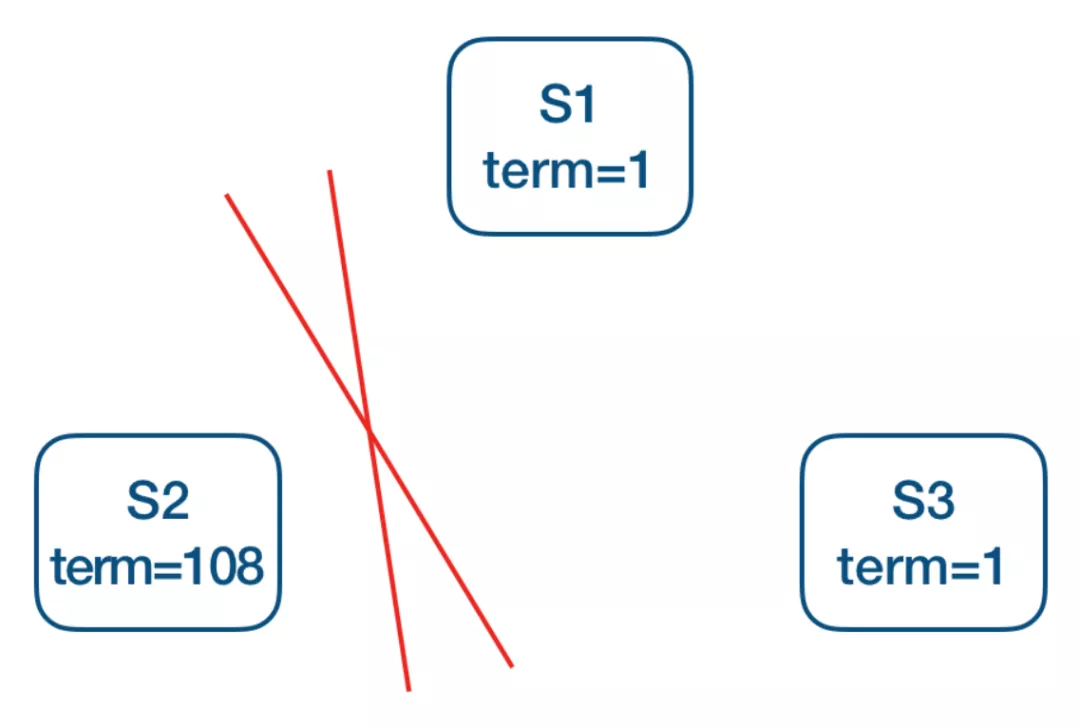

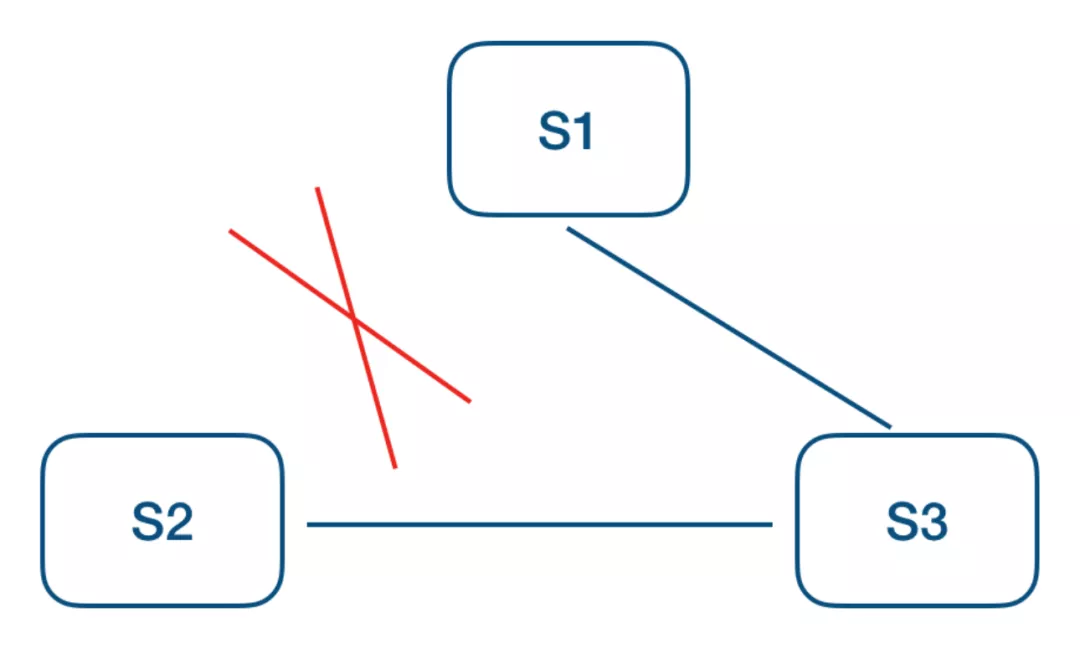

pre-vote(currentTerm + 1, lastLogIndex, lastLogTerm) is performed before the request-vote. Only after a majority agrees does S2 change its status to candidate and initiate the real request-vote. Therefore, the pre-vote of a partitioned node will not succeed, and the cluster will not be left unable to provide services for a period of time.

As shown in the preceding figure, S1 is the current leader, and S2 keeps timing out and triggering leader election. S3 raises its term, which interrupts the current lease, and then rejects updates from the leader. An extra check can be added here: each follower maintains a timestamp that records when it last received data updates (including heartbeats) from the leader. A request-vote request is accepted only after the election timeout has been exceeded.
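The timestamp check can be sketched as follows. `VoteGate` is a hypothetical name, and timestamps are passed in explicitly so that the logic stays independent of any particular clock. A real implementation would likely read a monotonic clock.

```java
/** Hypothetical follower-side guard that ignores request-vote while the leader is alive. */
final class VoteGate {
    private final long electionTimeoutMs;
    private long lastLeaderContactMs;

    VoteGate(long electionTimeoutMs, long nowMs) {
        this.electionTimeoutMs = electionTimeoutMs;
        this.lastLeaderContactMs = nowMs;
    }

    /** Called whenever a log update or heartbeat arrives from the leader. */
    void onLeaderMessage(long nowMs) {
        lastLeaderContactMs = nowMs;
    }

    /** A request-vote is considered only after the election timeout has been exceeded. */
    boolean mayAcceptRequestVote(long nowMs) {
        return nowMs - lastLeaderContactMs > electionTimeoutMs;
    }
}
```

With this guard in place, a node that keeps timing out cannot force healthy followers to abandon a leader they have heard from recently.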

Fault Tolerance: The faults of the minority do not affect the overall system availability:

reset_peers command can recreate the cluster quickly to ensure the availability of the cluster.

Jepsen: In addition to unit tests, SOFAJRaft uses Jepsen, a distributed verification and fault injection testing framework, to simulate many situations, all of which have been verified:

Batch: The entire pipeline in SOFAJRaft runs in batch mode and consumes in batches by relying on the MPSC model in Disruptor, including but not limited to:

ReadIndex: This optimizes the performance of reads that would otherwise go through the Raft log. For each read, only the commitIndex is recorded. Then, heartbeats are sent to all peers to confirm the leader's identity. Once the leader's identity is confirmed and the applied index is greater than or equal to commitIndex, the result of the client read can be returned. Followers can also provide linearly consistent reading based on ReadIndex. However, they must obtain commitIndex from the leader, which means one more round of RPC.

Lease Read: A lease guarantees the leader's identity, which removes the need for readIndex to confirm the leader's identity each time through the heartbeat and provides better performance. However, maintaining leases using the clock is not completely safe. The default configuration in SOFAJRaft is readIndex because readIndex performance is good enough.

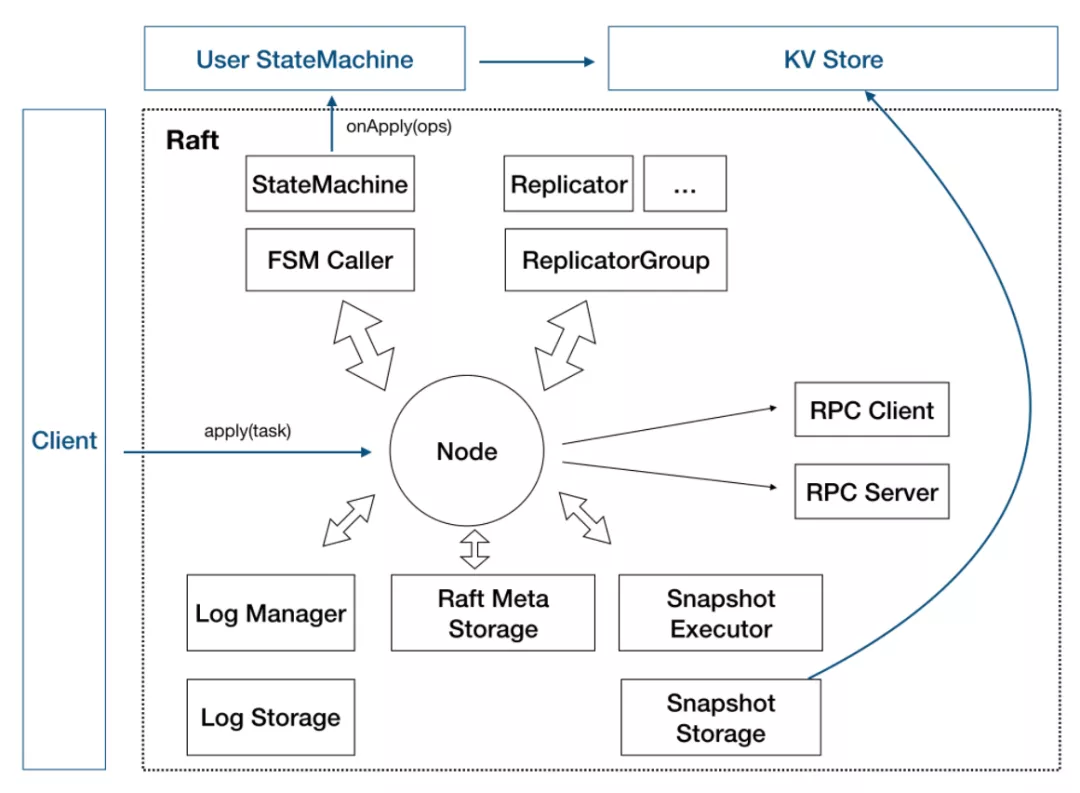

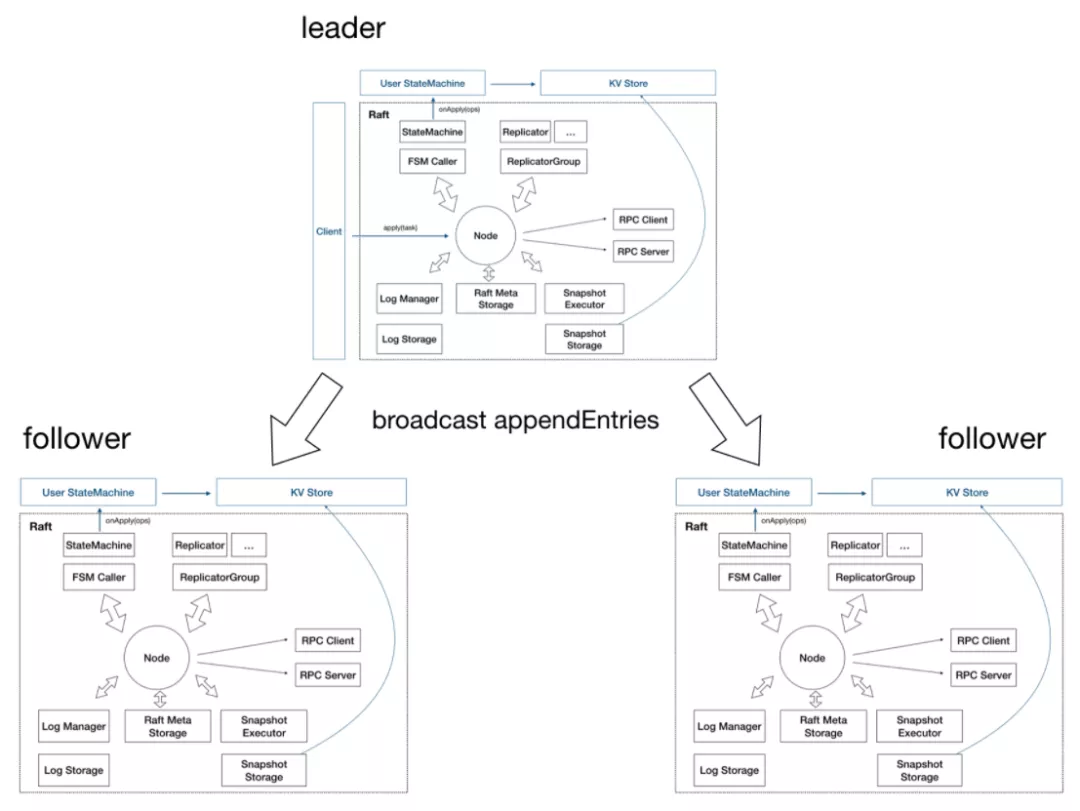

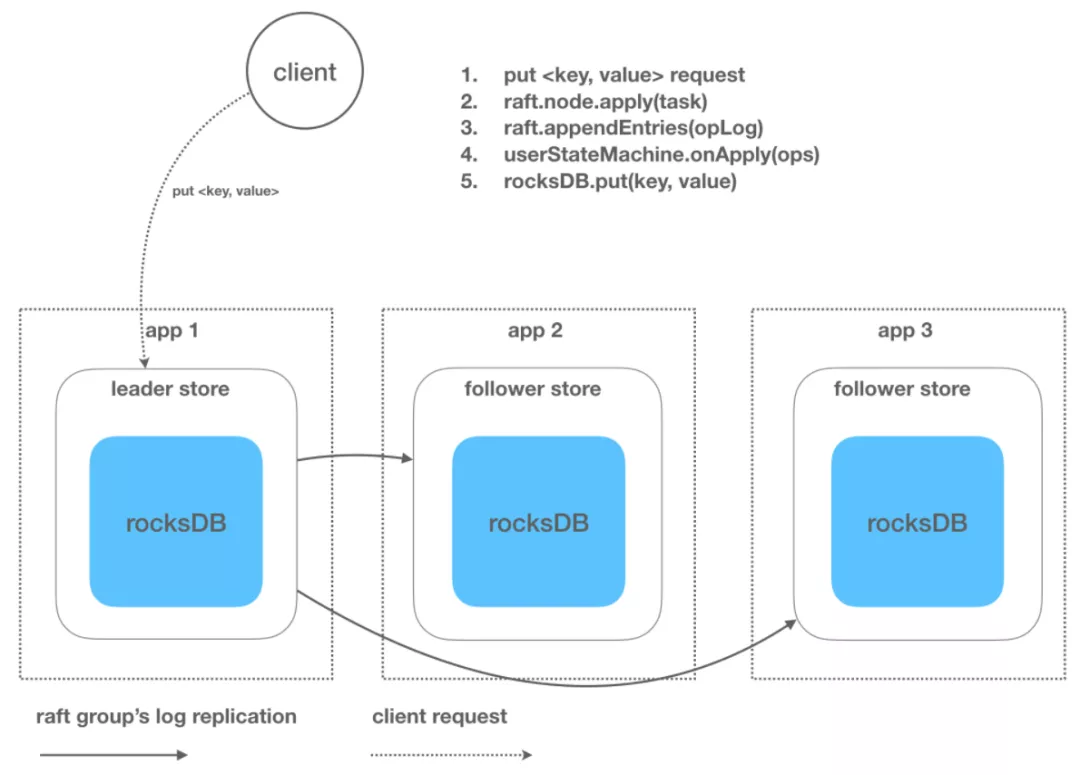

apply(task), submits new tasks to the state machine replication cluster composed by the Raft group. Then, tasks are applied to service state machines.

Storage

LogStorage is the implementation of storage. LogManager is responsible for calling the underlying storage and caching, submitting in batches, checking, and optimizing the calls.

SnapshotStorage is the implementation of snapshot storage. SnapshotExecutor is used to manage the storage, remote installation, and replication of snapshots.

State Machines

StateMachine: It implements the core logic of the user. The core is the onApply(Iterator) method. Applications submit logs to service state machines using Node#apply(task).

FSMCaller: It encapsulates calls for state transition of service StateMachine and writes of logs. It is used to implement a finite state machine and perform necessary checks, merged request submission, and concurrent processing.

Replication

AppendEntries calls in Raft, including the state check through the heartbeat.

ReplicatorGroup: It is used to manage all replicators in a single Raft group. It checks and grants the necessary permissions.

RPC module for network communication between nodes

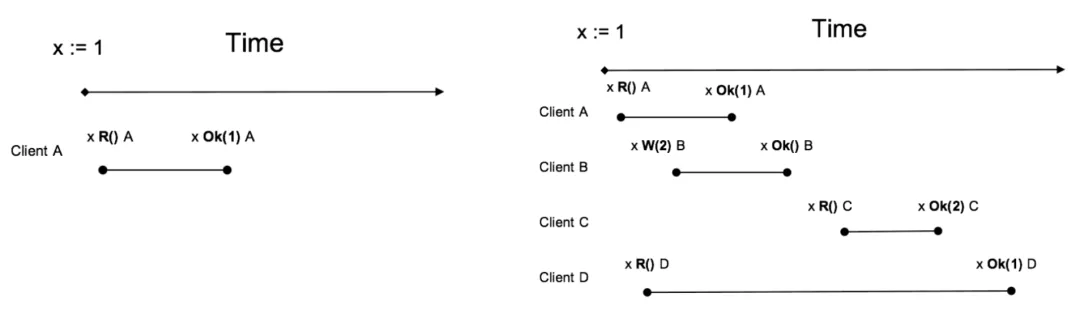

What Is Linearly Consistent Reading?

Here is a simple example of linearly consistent reading. If we write a value at t1, then any read after t1 is guaranteed to return this value and can never return the old value from before t1. Think about the volatile keyword in Java. Simply speaking, linearly consistent reading implements volatile semantics in a distributed system.

Clients A, B, C, and D in the preceding figure all conform to linearly consistent reading. Among them, D seems to be a stale read, but it is not. The request of D spans three stages, and the read may take effect at any point within them, so reading either 1 or 2 is acceptable.

Note: The following discussion is based on the major premise that the implementation of the service state machine must meet linearly consistent reading. In other words, it must also have Java volatile semantics.

1) To put it simply, can we read data directly from the current leader?

How can we determine whether the current leader is the real leader (network partition)?

2) The simplest implementation method is to apply the Raft protocol to the read request.

What's the Problem?

3) ReadIndex Read

This is an optimization solution mentioned in the Raft paper. Specifically:

ReadIndex

ReadIndex so that Linearizable Read can be provided safely. We don't have to worry about whether the leader has changed by the time of reading. Think about it: why can't the read request be performed before the apply index reaches ReadIndex?
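The ReadIndex flow described in this article (record commitIndex, confirm leadership through a heartbeat, wait until the apply index reaches ReadIndex, then read) can be sketched as below. The `Leader` interface and `InMemoryLeader` class are hypothetical stand-ins and not the SOFAJRaft API. In particular, `applyNext()` drives the state machine forward here, whereas a real node would wait for its apply thread.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class ReadIndexSketch {
    /** Hypothetical, minimal view of a leader; not the SOFAJRaft API. */
    interface Leader {
        long commitIndex();
        boolean confirmLeadershipByHeartbeat(); // true if a majority acknowledged
        long appliedIndex();
        void applyNext(); // advances the state machine by one committed entry
        String read(String key);
    }

    static String linearizableRead(Leader leader, String key) {
        long readIndex = leader.commitIndex(); // Step 1: record commitIndex as ReadIndex
        if (!leader.confirmLeadershipByHeartbeat()) { // Step 2: confirm leader identity
            throw new IllegalStateException("leadership could not be confirmed");
        }
        while (leader.appliedIndex() < readIndex) { // Step 3: catch up to ReadIndex
            leader.applyNext();
        }
        return leader.read(key); // Step 4: serve the read from the state machine
    }
}

/** In-memory stand-in used only to exercise the sketch. */
final class InMemoryLeader implements ReadIndexSketch.Leader {
    private final List<String[]> committedLog; // each entry is {key, value}
    private final Map<String, String> stateMachine = new HashMap<>();
    private final boolean stillLeader;
    private int applied = 0;

    InMemoryLeader(List<String[]> committedLog, boolean stillLeader) {
        this.committedLog = committedLog;
        this.stillLeader = stillLeader;
    }

    @Override public long commitIndex() { return committedLog.size(); }
    @Override public boolean confirmLeadershipByHeartbeat() { return stillLeader; }
    @Override public long appliedIndex() { return applied; }
    @Override public void applyNext() {
        String[] kv = committedLog.get(applied++);
        stateMachine.put(kv[0], kv[1]);
    }
    @Override public String read(String key) { return stateMachine.get(key); }
}
```

Reading before Step 3 completes could return a value older than a write that was already committed when the read arrived, which would violate linearizability.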

With ReadIndex, it is also easy to provide linearly consistent reading on the follower nodes:

ReadIndex from the leader.

ReadIndex to followers.

ReadIndex. What is the problem? Is it a slow node?

ReadIndex Summary:

Compared with going through the Raft log, ReadIndex saves the disk overhead of reading data and can improve throughput significantly. In three-replica mode, combined with the batch + pipeline ack + full asynchronization of SOFAJRaft, the read throughput of the leader is close to the upper limit of RPC.

4) Lease Read

Lease read is similar to ReadIndex but goes one step further: it skips not only the log but also the network interaction. It can improve the read throughput and reduce the latency significantly.

The leader selects a lease period smaller than the election timeout (preferably an order of magnitude smaller), and no election will take place during the lease period. This ensures that the leader does not change, so Step 2 of ReadIndex can be skipped, which reduces the latency. As we can see, the correctness of lease read depends closely on time, so the clock implementation is very important. If clock drift is severe, this mechanism will go wrong.
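A lease validity check might look like the sketch below. `LeaderLease` is a hypothetical name, and the sketch assumes that the lease is renewed whenever a majority acknowledges a heartbeat. Timestamps are passed in explicitly, and a real implementation would likely take them from a monotonic clock.

```java
/** Hypothetical leader-side lease that is shorter than the election timeout. */
final class LeaderLease {
    private final long leaseMs;
    private long leaseStartMs;
    private boolean granted = false;

    LeaderLease(long electionTimeoutMs, long leaseMs) {
        if (leaseMs <= 0 || leaseMs >= electionTimeoutMs) {
            throw new IllegalArgumentException("lease must be positive and shorter than the election timeout");
        }
        this.leaseMs = leaseMs;
    }

    /** Assumption: called when a majority acknowledges a heartbeat sent at sentAtMs. */
    void onMajorityHeartbeatAck(long sentAtMs) {
        leaseStartMs = sentAtMs;
        granted = true;
    }

    /** While the lease is valid, the heartbeat step of ReadIndex can be skipped. */
    boolean isValid(long nowMs) {
        return granted && nowMs - leaseStartMs < leaseMs;
    }
}
```

Starting the lease at the time the heartbeat was sent, rather than when the acknowledgements arrived, is the conservative choice because it never overestimates how long followers will hold off an election.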

Implementation:

ReadIndex (confirming leader identity by heartbeat) can be ignored.

ReadIndex, which can provide Linearizable Read safely.

5) Go Further: Wait Free

So far, lease has dropped Step 2 of ReadIndex (heartbeat) and can go further, omitting Step 3.

What is the essence of the previous implementation solutions? The state machine of the current node must reach a state that is the same as or newer than the state at the moment of the "read".

Then, a stricter constraint is that the state machine of the current node is up-to-date at the current time.

Here is the question: can we guarantee that the leader's state machine is up-to-date?

The Wait Free mechanism will minimize the read latency. SOFAJRaft has not implemented the wait free optimization yet, but it is already in the plans.

Initiate a linearly consistent read request in SOFAJRaft:

    // A KV store that serves linearly consistent reads.
    public void readFromQuorum(String key, AsyncContext asyncContext) {
        // The request ID is passed in as the request context.
        byte[] reqContext = new byte[4];
        Bits.putInt(reqContext, 0, requestId.incrementAndGet());
        // Call the readIndex method and wait for the callback to execute.
        this.node.readIndex(reqContext, new ReadIndexClosure() {

            @Override
            public void run(Status status, long index, byte[] reqCtx) {
                if (status.isOk()) {
                    try {
                        // The ReadIndexClosure callback succeeded. Read the latest data from the state machine and return it.
                        // If the state machine implementation is versioned, read the data based on the passed-in log index.
                        asyncContext.sendResponse(new ValueCommand(fsm.getValue(key)));
                    } catch (KeyNotFoundException e) {
                        asyncContext.sendResponse(GetCommandProcessor.createKeyNotFoundResponse());
                    }
                } else {
                    // In specific cases, for example during an election, the read request fails.
                    asyncContext.sendResponse(new BooleanCommand(false, status.getErrorMsg()));
                }
            }
        });
    }

So far, we have not seen anything special about SOFAJRaft as a library, because ZooKeeper and etcd can also do what SOFAJRaft can. So, is using SOFAJRaft necessary?

Next, I will introduce a more complex SOFAJRaft-based practice to show that SOFAJRaft leaves plenty of room for imagination and extension.

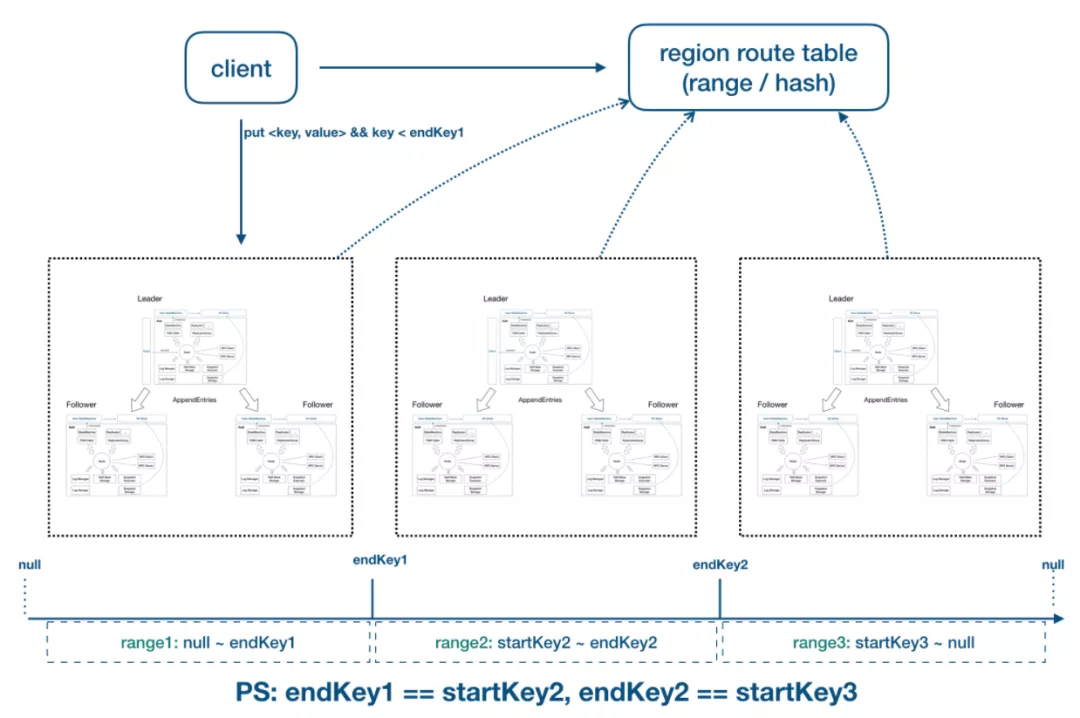

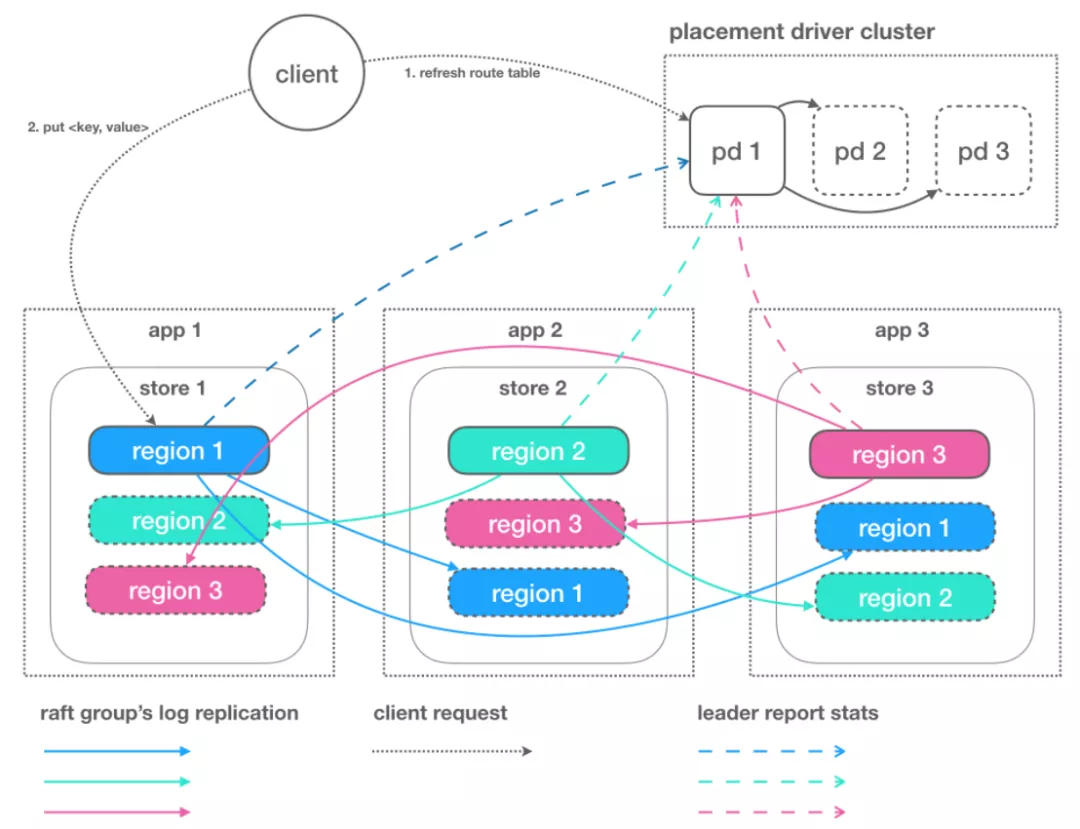

clusterId.

[startKey, endKey) that supports automatic splitting and automatic copy migration based on metrics, such as request traffic, load, and data volume.