by Aaron Berliano Handoko, Solution Architect Alibaba Cloud Indonesia

Welcome to Big Data Cloud Fighters, where we recap the intensive, hands-on experience of mastering the vast and complex world of big data. Over the course of this bootcamp, participants delved deep into the core principles and cutting-edge technologies that drive data-driven decision-making in today's digital era. From foundational concepts to advanced applications, the bootcamp equipped attendees with the skills and knowledge needed to navigate and leverage big data effectively.

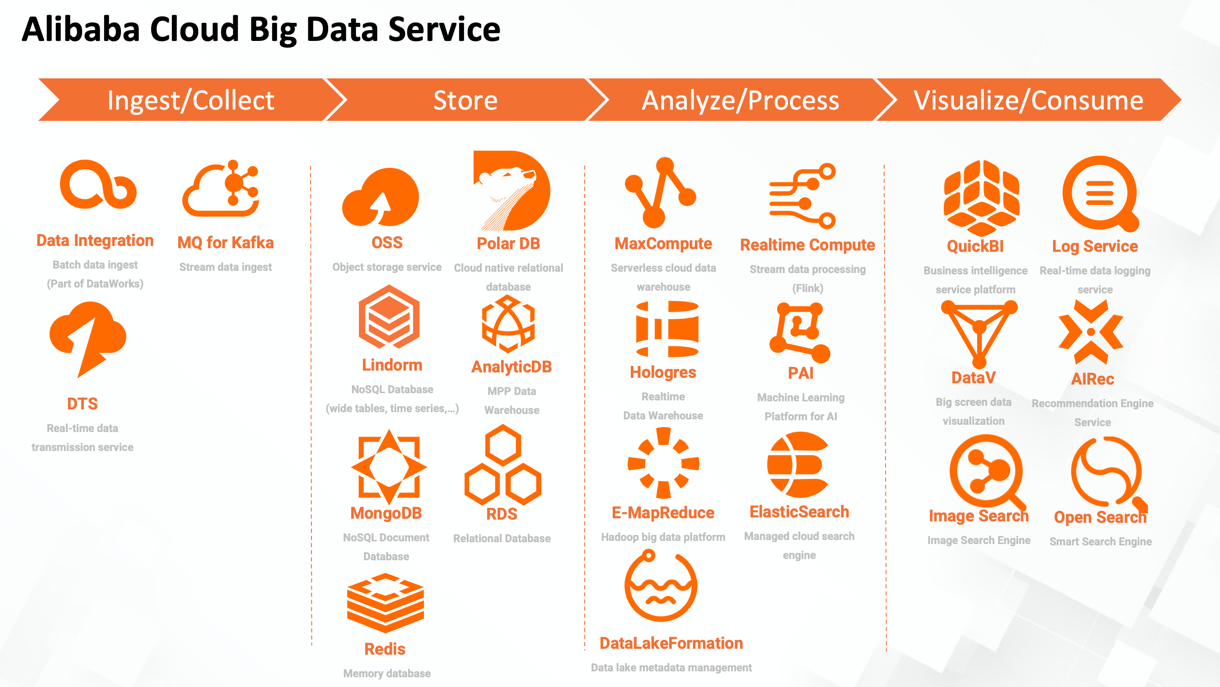

Our journey began with an exploration of the fundamental aspects of big data, including data collection, storage, and processing. Participants gained insights into various data architectures and learned to harness the power of distributed systems such as Hadoop and Spark. Common pain points customers face when adopting big data technology are scalability, availability, and data governance. In this bootcamp, participants learned that the cloud is essential when developing big data pipelines. With the help of Alibaba Cloud's Big Data products, customers in Indonesia are able to make use of their data and fulfill their business requirements. Below is Alibaba Cloud's Big Data product portfolio.

If you have any questions, please contact one of our solution architects (SAs).

a) Batch Requirements

Batch processing in the context of big data involves grouping large datasets into smaller, manageable chunks and processing them together to improve efficiency and performance. This is common practice for handling massive volumes of data that cannot be processed in a single operation due to resource constraints. An example scenario that suits a batch job is collecting data from stores nationwide.
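To make the chunking idea concrete, here is a minimal Python sketch that is not tied to any Alibaba Cloud product. It totals an amount per store while holding only one chunk of records in memory at a time. The record shape `(store_id, amount)`, the helper names `chunked` and `batch_total_sales`, and the sample store IDs are all hypothetical.

```python
from collections import defaultdict
from itertools import islice
from typing import Iterable, Iterator

# Hypothetical record shape for illustration: (store_id, amount)
Record = tuple[str, float]


def chunked(records: Iterable[Record], size: int) -> Iterator[list[Record]]:
    """Yield successive lists of at most `size` records."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def batch_total_sales(records: Iterable[Record], chunk_size: int = 1000) -> dict[str, float]:
    """Aggregate the total amount per store, processing one chunk at a time."""
    totals: dict[str, float] = defaultdict(float)
    for chunk in chunked(records, chunk_size):
        # Only the current chunk and the running totals are kept in memory.
        for store_id, amount in chunk:
            totals[store_id] += amount
    return dict(totals)


if __name__ == "__main__":
    daily_records = [("jakarta-01", 120.0), ("bandung-02", 80.5), ("jakarta-01", 30.0)]
    print(batch_total_sales(daily_records, chunk_size=2))
    # {'jakarta-01': 150.0, 'bandung-02': 80.5}
```

In a real pipeline the records would come from storage such as a data warehouse table rather than an in-memory list, but the pattern of bounded chunks plus running aggregates is the same.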

For batch processing, we can use MaxCompute as a serverless data warehouse that can handle petabytes of data, Hologres as a query accelerator for MaxCompute, and DataWorks as the layer for data orchestration, data scheduling, and data governance.
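Orchestration and scheduling boil down to running each task only after its upstream tasks have finished. The sketch below illustrates that dependency ordering with Python's standard `graphlib` module; it is not how DataWorks is configured (DataWorks has its own node and scheduling settings), and the task names in `PIPELINE` are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical nightly batch pipeline: each task maps to the tasks it depends on.
PIPELINE = {
    "ingest_store_sales": set(),
    "ingest_store_inventory": set(),
    "clean_sales": {"ingest_store_sales"},
    "daily_sales_report": {"clean_sales", "ingest_store_inventory"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its upstream tasks."""
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    for task in execution_order(PIPELINE):
        print("run", task)
```

A scheduler adds timing (for example, "run daily at 02:00"), retries, and monitoring on top, but the dependency graph is what guarantees that a report never reads half-loaded data.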

b) Realtime Requirements

Real-time processing in the context of big data involves the continuous ingestion, processing, and analysis of data as it is generated. Unlike batch processing, which handles data in large, predefined chunks, real-time processing handles data streams in a near-instantaneous manner, enabling timely insights and actions. An example scenario that requires real-time processing is package tracking in a logistics company.
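For the package-tracking scenario, the key difference from batch is that each event is handled the moment it arrives. The minimal Python sketch below keeps the latest known status per package and emits an update for every newer event, skipping late events that are older than what is already known. The `TrackingEvent` schema and the status strings are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class TrackingEvent:
    # Hypothetical event schema for illustration only.
    package_id: str
    status: str
    timestamp: int  # epoch seconds


def track_packages(events: Iterable[TrackingEvent]) -> Iterator[tuple[str, str]]:
    """Process events one at a time; yield (package_id, status) for each newer event."""
    latest: dict[str, TrackingEvent] = {}
    for event in events:
        current = latest.get(event.package_id)
        # Skip out-of-order events that are not newer than the stored state.
        if current is not None and event.timestamp <= current.timestamp:
            continue
        latest[event.package_id] = event
        yield event.package_id, event.status


if __name__ == "__main__":
    stream = [
        TrackingEvent("PKG-1", "PICKED_UP", 100),
        TrackingEvent("PKG-1", "IN_TRANSIT", 200),
        TrackingEvent("PKG-1", "PICKED_UP", 150),  # late event: skipped
        TrackingEvent("PKG-2", "PICKED_UP", 120),
    ]
    for update in track_packages(stream):
        print(update)
```

Because `track_packages` is a generator, it works the same on an unbounded stream as on this short list: updates are produced as events flow in, not after the input ends.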

The Alibaba Cloud technology stack we can use includes Kafka as the message queue, managed Flink for real-time data processing, and Hologres or AnalyticDB as the real-time data warehouse.
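The roles in this stack can be pictured with a toy, single-process Python sketch: a standard-library `queue.Queue` stands in for a Kafka topic, a loop stands in for a Flink job counting statuses per fixed 60-second (tumbling) window, and a dict stands in for a real-time warehouse table. None of this uses the real Kafka, Flink, or Hologres APIs; the window size, message shape `(status, timestamp)`, and the `None` end-of-stream sentinel are assumptions for the demo.

```python
import queue
from collections import Counter

WINDOW_SECONDS = 60  # hypothetical tumbling-window size


def window_start(ts: int, size: int = WINDOW_SECONDS) -> int:
    """Map an event timestamp to the start of its fixed, non-overlapping window."""
    return ts - (ts % size)


def run_pipeline(source: queue.Queue) -> dict[int, Counter]:
    """Consume (status, timestamp) messages until a None sentinel arrives.

    `source` stands in for a Kafka topic, this loop for a Flink job, and the
    returned dict for a table in a real-time warehouse.
    """
    sink: dict[int, Counter] = {}
    while True:
        message = source.get()
        if message is None:  # sentinel: end of this demo stream
            break
        status, ts = message
        # Update the count for the message's window as soon as it arrives.
        sink.setdefault(window_start(ts), Counter())[status] += 1
    return sink


if __name__ == "__main__":
    topic: queue.Queue = queue.Queue()
    for msg in [("IN_TRANSIT", 5), ("DELIVERED", 30), ("IN_TRANSIT", 65), None]:
        topic.put(msg)
    print(run_pipeline(topic))
```

A production Flink job would additionally handle late data with watermarks, checkpoint its state, and write results to the warehouse continuously; the sketch only shows how messages flow from queue to processor to table.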

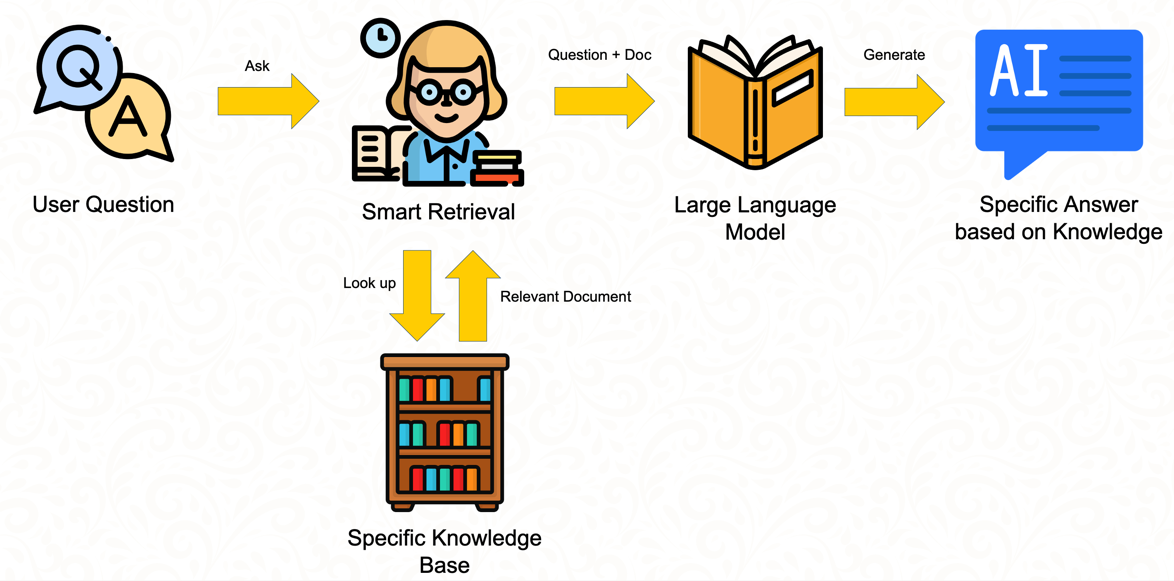

What if we want a Large Language Model (LLM) to answer strictly based on the knowledge that the company provides? There are two options. The first is fine-tuning (retraining the model with custom data), which requires a huge amount of computing power. The second option is Retrieval Augmented Generation (RAG).

With RAG, we don't need an enormous amount of computing power, because the model is not retrained: we only need enough GPU capacity to run the Large Language Model.

Here is how the magic of RAG works:

Imagine you are going to a library to find an answer for your physics homework. It would take a lot of effort to scan through every book in the library one by one. The easiest and fastest way to find the right source is to ask the librarian to direct you to the book with the most relevant information. Once you have the book you need, you can look for the answers to your questions in it.

An analogy for how RAG works

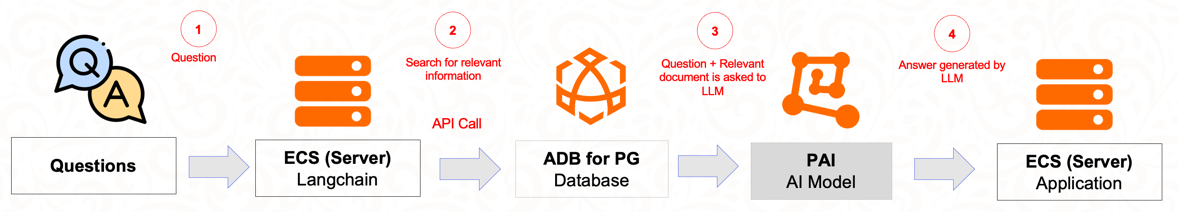

In RAG, the librarian is the smart retriever (LangChain), while the specific knowledge base is the customer's custom knowledge stored in a vector database. LangChain searches the vector database for the information most relevant to the user's question. Once that information is found, we send both the user's question and the relevant documents to the LLM so that it can generate a specific answer based on that knowledge.
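The retrieval step can be illustrated without any external services. The Python sketch below is a toy stand-in, not LangChain's API: `embed` turns text into a bag-of-words count instead of calling a real embedding model, `ToyVectorStore` plays the role of the vector database, and `search` ranks documents by cosine similarity to the question and returns the top `k`. All names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self, documents: list[str]) -> None:
        # Store each document next to its precomputed vector.
        self.items = [(doc, embed(doc)) for doc in documents]

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore([
        "Our warehouse in Jakarta operates from 8 AM to 5 PM.",
        "Refunds are processed within 7 business days.",
        "Packages can be tracked using the tracking number on the receipt.",
    ])
    print(store.search("How long do refunds take?", k=1))
    # ['Refunds are processed within 7 business days.']
```

Real embedding models capture meaning rather than exact word overlap (so "money back" could still match "refunds"), but the ranking-by-similarity step works the same way.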

TIPS: To reduce hallucination in the answer, we can use prompt engineering to add specific instructions for the LLM. For example: “Answer this question strictly based on the information provided below!”
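A prompt template is one simple way to apply this tip consistently. The minimal Python sketch below wraps the retrieved documents and the user's question in the instruction quoted above. The extra "say that you don't know" sentence, the function name `build_grounded_prompt`, and the section labels are assumptions, not a fixed format required by any LLM.

```python
def build_grounded_prompt(question: str, context_docs: list[str]) -> str:
    """Combine retrieved documents and the user question into one grounded prompt."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer this question strictly based on the information provided below! "
        # Assumed extra instruction: give the model an explicit way out.
        "If the answer is not in the information, say that you don't know.\n\n"
        f"Information:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_grounded_prompt(
        "How long do refunds take?",
        ["Refunds are processed within 7 business days."],
    ))
```

The resulting string is what gets sent to the LLM. Giving the model an explicit "I don't know" option tends to help, but no instruction fully eliminates hallucination.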

In this bootcamp, we used LabEx materials for the hands-on training.
