Recently I found it very slow to perform a sorting task in a log table. After the problem was finally solved, I summarized some experience in the index and MySQL execution process. However, I still have five unsolved problems and I hope that you can help me solve these problems.

These problems are related to the following:

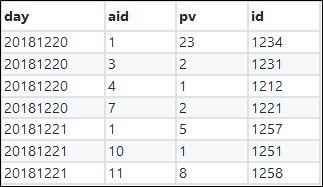

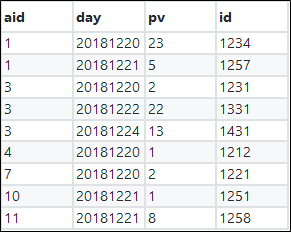

List the top 10 visited articles of this month and week respectively. The log table is as follows.

CREATE TABLE `article_rank` (

`id` int(11) unsigned NOT NULL AUTO_INCREMENT,

`aid` int(11) unsigned NOT NULL,

`pv` int(11) unsigned NOT NULL DEFAULT '1',

`day` int(11) NOT NULL COMMENT '日期 例如 20171016',

PRIMARY KEY (`id`),

KEY `idx_day_aid_pv` (`day`,`aid`,`pv`),

KEY `idx_aid_day_pv` (`aid`,`day`,`pv`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8To clearly verify the guesses, install a debugger for MySQL and enable slow query log collection to count scanned rows.

Edit the configuration file and add the following under the [mysqld] module:

slow_query_log=1

slow_query_log_file=xxx

long_query_time=0

log_queries_not_using_indexes=1Assume that the following SQL statement is used to query the top 10 articles by views during the five days from 2018-12-20 to 2018-12-24. Let's first use explain to see the analysis result.

mysql> explain select aid,sum(pv) as num from article_rank where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+--------+----------+-----------------------------------------------------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+--------+----------+-----------------------------------------------------------+

| 1 | SIMPLE | article_rank | NULL | range | idx_day_aid_pv,idx_aid_day_pv | idx_day_aid_pv | 4 | NULL | 404607 | 100.00 | Using where; Using index; Using temporary; Using filesort |

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+--------+----------+-----------------------------------------------------------+By default, the index idx_day_aid_pv is used. The Extra shows that the covering index is used when we use idx_day_aid_pv. However, a temporary table will be required and this involves sorting.

Let's check the information recorded in the slow query log.

# Time: 2019-03-17T03:02:27.984091Z

# User@Host: root[root] @ localhost [] Id: 6

# Query_time: 56.959484 Lock_time: 0.000195 Rows_sent: 10 Rows_examined: 1337315

SET timestamp=1552791747;

select aid,sum(pv) as num from article_rank where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;We will query two data items: the number of matching rows and the number of rows after the group by operation.

mysql> select count(*) from article_rank where day>=20181220 and day<=20181224;

+----------+

| count(*) |

+----------+

| 785102 |

+----------+

mysql> select count(distinct aid) from article_rank where day>=20181220 and day<=20181224;

+---------------------+

| count(distinct aid) |

+---------------------+

| 552203 |

+---------------------+The result indicates that the value of Rows_examined in the slow query log is the total of the matching rows (785,102), the number of rows after group by (552,203), and the limit value.

To explain the preceding finding, we need to understand how this SQL statement runs.

For ease of understanding, I first simulate a small portion of the data corresponding to the idx_day_aid_pv index according to the indexing rule.

Because the leftmost column of the index idx_day_aid_pv is day, we need to iterate rows during the period from 20181220 to 20181224 to obtain the pv total from 20181220 to 20181224.

# 开启 optimizer_trace

set optimizer_trace='enabled=on';

# 执行 sql

select aid,sum(pv) as num from article_rank where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;

# 查看 trace 信息

select trace from `information_schema`.`optimizer_trace`\G;The following shows a fraction of the final execution result.

{

"join_execution": {

"select#": 1,

"steps": [

{

"creating_tmp_table": {

"tmp_table_info": {

"table": "intermediate_tmp_table",

"row_length": 20,

"key_length": 4,

"unique_constraint": false,

"location": "memory (heap)",

"row_limit_estimate": 838860

}

}

},

{

"converting_tmp_table_to_ondisk": {

"cause": "memory_table_size_exceeded",

"tmp_table_info": {

"table": "intermediate_tmp_table",

"row_length": 20,

"key_length": 4,

"unique_constraint": false,

"location": "disk (InnoDB)",

"record_format": "fixed"

}

}

},

{

"filesort_information": [

{

"direction": "desc",

"table": "intermediate_tmp_table",

"field": "num"

}

],

"filesort_priority_queue_optimization": {

"limit": 10,

"rows_estimate": 1057,

"row_size": 36,

"memory_available": 262144,

"chosen": true

},

"filesort_execution": [

],

"filesort_summary": {

"rows": 11,

"examined_rows": 552203,

"number_of_tmp_files": 0,

"sort_buffer_size": 488,

"sort_mode": "<sort_key, additional_fields>"

}

}

]

}

}After the debugging with gdb, we find that the fields in the temporary table are aid and num.

Breakpoint 1, trace_tmp_table (trace=0x7eff94003088, table=0x7eff94937200) at /root/newdb/mysql-server/sql/sql_tmp_table.cc:2306

warning: Source file is more recent than executable.

2306 trace_tmp.add("row_length",table->s->reclength).

(gdb) p table->s->reclength

$1 = 20

(gdb) p table->s->fields

$2 = 2

(gdb) p (*(table->field+0))->field_name

$3 = 0x7eff94010b0c "aid"

(gdb) p (*(table->field+1))->field_name

$4 = 0x7eff94007518 "num"

(gdb) p (*(table->field+0))->row_pack_length()

$5 = 4

(gdb) p (*(table->field+1))->row_pack_length()

$6 = 15

(gdb) p (*(table->field+0))->type()

$7 = MYSQL_TYPE_LONG

(gdb) p (*(table->field+1))->type()

$8 = MYSQL_TYPE_NEWDECIMAL

(gdb)The preceding information shows the filed types: The aid field is of type MYSQL_TYPE_LONG and occupies 4 bytes; the num field is of type MYSQL_TYPE_NEWDECIMAL and occupies 15 bytes.

The SUM() and AVG() functions return a DECIMAL value for exact-value arguments (integer or DECIMAL), and a DOUBLE value for approximate-value arguments (FLOAT or DOUBLE). (Before MySQL 5.0.3, SUM() and AVG() return DOUBLE for all numeric arguments.)

According to the preceding information, the total length of the two fields is 19. However, the tmp_table_info.reclength shown in optimizer_trace is 20. Other experiments also indicate that the length of table->s->reclength is the total length of all the fields in table->field plus 1.

memory on the heap to store data processed by group by and find that the memory is insufficient.aid and num (sum(pv) as num).idx_day_aid_pv into the temporary table. The insert operation follows this rule: If the aid field does not exist, insert the data row directly; if that field already exists, add the pv values to num.idx_day_aid_pv from 20181220 to 20181224 and perform Step 3.num in the temporary table in the precedence queue.Here I also want to show the steps to perform precedence queue sorting:

# Query_time: 4.406927 Lock_time: 0.000200 Rows_sent: 10 Rows_examined: 1337315

SET timestamp=1552791804;

select aid,sum(pv) as num from article_rank force index(idx_aid_day_pv) where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;Why does it take 12 times less time to scan the same number of rows (1,337,315 rows)?

For ease of understanding, I also simulate a small portion of the data corresponding to the idx_aid_day_pv index according to the indexing rule.

A temporary table is not required for group by. One reason why this solution shows much higher performance than SQL1 is that aid values in idx_aid_day_pv are definitely ordered. Therefore, a temporary table is not required for group by. A temporary table is only required during sorting. How can we verify this? We simply need to use the two methods and compare the results.

Performance for using idx_day_aid_pv:

mysql> explain select aid,sum(pv) as num from article_rank force index(idx_day_aid_pv) where day>=20181220 and day<=20181224 group by aid order by null limit 10;

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+--------+----------+-------------------------------------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+--------+----------+-------------------------------------------+

| 1 | SIMPLE | article_rank | NULL | range | idx_day_aid_pv,idx_aid_day_pv | idx_day_aid_pv | 4 | NULL | 404607 | 100.00 | Using where; Using index; Using temporary |

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+--------+----------+-------------------------------------------+Note that I use order by null to disallow the sorting of results obtained after the group by operation. If order by null is not added, the preceding SQL statement will have Using filesort

Performance for using idx_aid_day_pv:

mysql> explain select aid,sum(pv) as num from article_rank force index(idx_aid_day_pv) where day>=20181220 and day<=20181224 group by aid order by null limit 10;

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+------+----------+--------------------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+------+----------+--------------------------+

| 1 | SIMPLE | article_rank | NULL | index | idx_day_aid_pv,idx_aid_day_pv | idx_aid_day_pv | 12 | NULL | 10 | 11.11 | Using where; Using index |

+----+-------------+--------------+------------+-------+-------------------------------+----------------+---------+------+------+----------+--------------------------+View optimizer trace information

# 开启optimizer_trace

set optimizer_trace='enabled=on';

# 执行 sql

select aid,sum(pv) as num from article_rank force index(idx_aid_day_pv) where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;

# 查看 trace 信息

select trace from `information_schema`.`optimizer_trace`\G;The following shows a fraction of the final execution result.

{

"join_execution": {

"select#": 1,

"steps": [

{

"creating_tmp_table": {

"tmp_table_info": {

"table": "intermediate_tmp_table",

"row_length": 20,

"key_length": 0,

"unique_constraint": false,

"location": "memory (heap)",

"row_limit_estimate": 838860

}

}

},

{

"filesort_information": [

{

"direction": "desc",

"table": "intermediate_tmp_table",

"field": "num"

}

],

"filesort_priority_queue_optimization": {

"limit": 10,

"rows_estimate": 552213,

"row_size": 24,

"memory_available": 262144,

"chosen": true

},

"filesort_execution": [

],

"filesort_summary": {

"rows": 11,

"examined_rows": 552203,

"number_of_tmp_files": 0,

"sort_buffer_size": 352,

"sort_mode": "<sort_key, rowid>"

}

}

]

}

}The execution process is as follows:

aid and num (sum(pv) as num).idx_aid_day_pv and see if it matches the criterion. If the day field value is not in the range (20181220 - 20181224), read the next row; if the day filed value is in the range, accumulate the pv values (this operation is not to be performed in the temporary table).idx_aid_day_pv index. If the aid value is consistent with that shown in Step 1 and meets the criterion, accumulate the pv values (this operation is not to be performed in the temporary table). If the aid value is not consistent, write the preceding result set into the temporary table.idx_aid_day_pv index are scanned.num in the temporary table in the precedence queue.rowids and return the result set.Here I also want to show the steps to perform precedence queue sorting:

num and rowid values as the 10 elements of a Min heap (that is, the smallest num value is the value of the root).I have found that if I add a row with the day value of 20181219, the scanning index also reads that row, although it does not meet the criterion. Because in this test all the data is within the period of the five days from 20181220 to 20181224, the scanned rows happen to be all the data rows in the table.

What if the table stores data within the period of 10 days or 365 days instead of these 5 days? Is this solution still feasible? Let's first simulate data for 10 days by extending the closing date range by another 5 days. The number of rows remains unchanged (785,102 rows).

drop procedure if exists idata;

delimiter ;;

create procedure idata()

begin

declare i int;

declare aid int;

declare pv int;

declare post_day int;

set i=1;

while(i<=785102)do

set aid = round(rand()*500000);

set pv = round(rand()*100);

set post_day = 20181225 + i%5;

insert into article_rank (`aid`,`pv`,`day`) values(aid, pv, post_day);

set i=i+1;

end while;

end;;

delimiter ;

call idata();

# Query_time: 9.151270 Lock_time: 0.000508 Rows_sent: 10 Rows_examined: 2122417

SET timestamp=1552889936;

select aid,sum(pv) as num from article_rank force index(idx_aid_day_pv) where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;The number of scanned rows is 2,122,417 because the scanning index need to iterate the entire index (the number of index rows is the number of all the table rows) and I have just inserted another 785,102 rows.

The result shows that when the data volume is doubled, the query time is also doubled. Therefore, this optimization method is not stable.

The default space of a temporary table is 16 MB.

mysql> show global variables like '%table_size';

+---------------------+----------+

| Variable_name | Value |

+---------------------+----------+

| max_heap_table_size | 16777216 |

| tmp_table_size | 16777216 |

+---------------------+----------+max_heap_table_size: This variable sets the maximum size to which user-created MEMORY tables are permitted to grow. The value of the variable is used to calculate MEMORY table MAX_ROWS values. Setting this variable has no effect on any existing MEMORY table, unless the table is re-created with a statement such as CREATE TABLE or altered with ALTER TABLE or TRUNCATE TABLE. A server restart also sets the maximum size of existing MEMORY tables to the global max_heap_table_size value.

tmp_table_size: The maximum size of internal in-memory temporary tables. This variable does not apply to user-created MEMORY tables.The actual limit is determined from whichever of the values of tmp_table_size and max_heap_table_size is smaller. If an in-memory temporary table exceeds the limit, MySQL automatically converts it to an on-disk temporary table. The internal_tmp_disk_storage_engine option defines the storage engine used for on-disk temporary tables.

That is to say, the maximum size of the temporary table is 16 MB and max_heap_table_size is also limited by tmp_table_size.

Now let's change the temporary table size to 32 MB and run the original SQL query.

set tmp_table_size=33554432;

set max_heap_table_size=33554432;

# Query_time: 5.910553 Lock_time: 0.000210 Rows_sent: 10 Rows_examined: 1337315

SET timestamp=1552803869;

select aid,sum(pv) as num from article_rank where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;Due to the large number of query results, we specify in the optimizer that the temporary table uses the disk storage directly.

# Query_time: 6.144315 Lock_time: 0.000183 Rows_sent: 10 Rows_examined: 2122417

SET timestamp=1552802804;

select SQL_BIG_RESULT aid,sum(pv) as num from article_rank where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;The number of scanned rows is the number of all the matching rows (785,102) multiplied by 2 plus the number of all the rows after group by (552,203) and the limit value.

By the way, I also want to mention that if this solution is adopted, the query time basically remains unchanged when the data volume is doubled. The reason is that the number of scanned rows remains unchanged. In this test, the query time is 6.197484 seconds.

Solution 1 does not provide stable performance. When the data rows in the entire table is the same as the number of rows in the query range and the temporary table size limit is not reached, this solution provides the best performance. The higher the ratio of the query data to the total data volume of the entire table, the lower the optimization.

Solution 2 requires the adjustment to the temporary table memory size and is feasible. However, when the database is larger than 32MB, this solution requires further increase in the temporary table size.

Solution 3 directly uses a disk to store the temporary table. Although one additional scan on the matching rows is required, the whole response time is increased by only 0.1s compared with Solution 2. In this example, the data volume is relatively small and therefore I think this time gap is acceptable.

The final conclusion is that Solution 3 is the most appropriate for this scenario.

# SQL1

select aid,sum(pv) as num from article_rank where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;

# SQL2

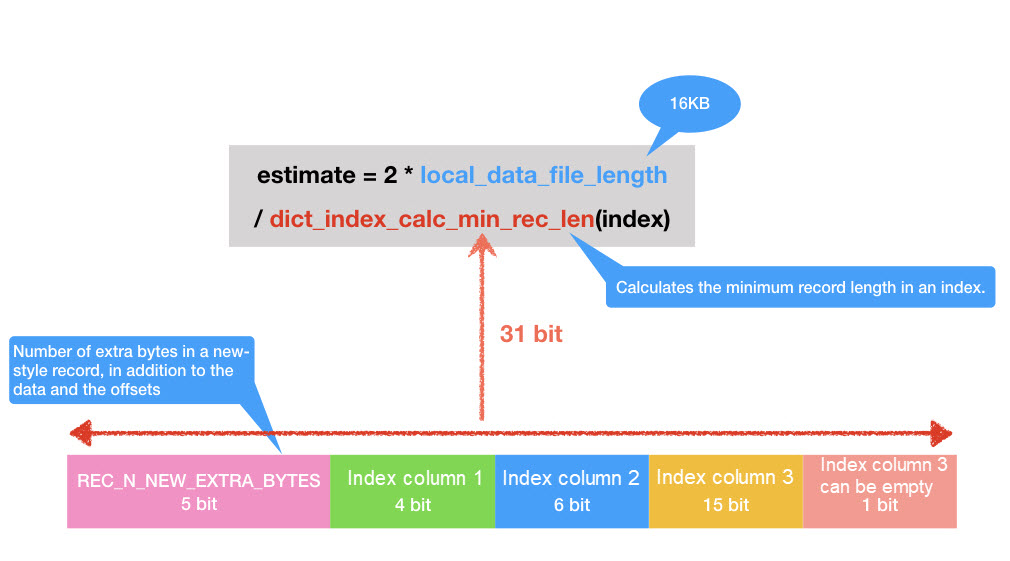

select aid,sum(pv) as num from article_rank force index(idx_aid_day_pv) where day>=20181220 and day<=20181224 group by aid order by num desc limit 10;group by operation is 552,203 for both SQL1 and SQL2, why does SQL1 have the problem of insufficient memory? What are details behind this problem?creating_tmp_table.tmp_table_info.row_limit_estimate in trace shows 838,860; this calculation is based on the temporary table size limit (16 MB). Because one row occupies 20 bytes, the total number of rows that can be included is 838860 (16777216/20). However, we actually need to put 785,102 rows into the temporary table. Why?SQL_BIG_RESULT for optimization? Why does writing into the temporary table in the memory alone lead to a 10× performance difference?filesort_priority_queue_optimization.rows_estimate. I see the calculation rule in gdb, as shown in the following figure.

2 posts | 1 followers

Followdigoal - September 17, 2019

ApsaraDB - November 26, 2025

ApsaraDB - December 27, 2023

ApsaraDB - July 8, 2021

Alibaba Cloud Native Community - February 13, 2026

ApsaraDB - July 4, 2022

2 posts | 1 followers

Follow Backup and Archive Solution

Backup and Archive Solution

Alibaba Cloud provides products and services to help you properly plan and execute data backup, massive data archiving, and storage-level disaster recovery.

Learn More Drive and Photo Service

Drive and Photo Service

A low-code, high-availability, and secure platform for enterprise file management and application

Learn More Architecture and Structure Design

Architecture and Structure Design

Customized infrastructure to ensure high availability, scalability and high-performance

Learn More Sensitive Data Discovery and Protection

Sensitive Data Discovery and Protection

SDDP automatically discovers sensitive data in a large amount of user-authorized data, and detects, records, and analyzes sensitive data consumption activities.

Learn MoreMore Posts by zhoumengkang