By digoal

Both the cloud disk itself and the test conditions strongly affect the results of an ESSD stress test. This article shows how to configure the test conditions so that a multi-core instance running many concurrent jobs can reach one million IOPS.

The examples in this article use /dev/vdb as the ESSD device name. See the ESSD documentation for more details. If a file system is involved, use a file name under that file system instead.

Note: Testing raw disks gives accurate block storage performance figures, but it can destroy the file system structure on the disk. Before the test, create snapshots to back up your data. For more information, see Create a snapshot. To prevent data loss, we recommend that you test block storage performance only on a new ECS instance that holds no data.
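Because a raw-disk test overwrites whatever is on the device, it can help to confirm that the target is not mounted before starting. The following is a minimal sketch and not part of the original procedure: the function name `is_mounted` and its optional second argument (a mounts table to read, defaulting to /proc/mounts) are assumptions made for illustration.

```shell
# Return 0 if the given device appears as a mount source in the mounts table.
# $1: device path, for example /dev/vdb
# $2: mounts table to read (hypothetical parameter; defaults to /proc/mounts)
is_mounted() {
    dev=$1
    mounts=${2:-/proc/mounts}
    # The first field of each line in /proc/mounts is the mount source.
    awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$mounts"
}

# Example guard before a raw-disk test:
if is_mounted /dev/vdb; then
    echo "/dev/vdb is mounted; refusing to run a raw-disk test." >&2
fi
```

The check only matches the exact device path, so a mounted partition such as /dev/vdb1 is not detected. It does not replace taking a snapshot first.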

1) Run the following commands in sequence to install libaio and FIO.

yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

sudo yum install libaio -y

sudo yum install libaio-devel -y

sudo yum install fio -y

2) Switch the path.

cd /tmp

3) Create the test100w.sh script.

vi test100w.sh

4) Paste the following snippet of code to the test100w.sh script:

The snippet assumes that there are nine ESSDs attached as vd[b-j].

function RunFio
{
    numjobs=$1   # Number of test threads on the instance, such as 8 in the example
    iodepth=$2   # Maximum number of I/Os issued at the same time, such as 64 in the example
    bs=$3        # Block size of a single I/O, such as 4K in the example
    rw=$4        # Read/write pattern of the test, such as randwrite in the example
    filename=$5  # Name of the test file, such as /data01/test in the example
    ioengine=$6  # I/O engine: libaio, sync, and so on; see man fio
    direct=$7    # Whether to bypass the page cache; see man fio
    nr_cpus=$(grep -c "processor" /proc/cpuinfo)
    if [ $nr_cpus -lt $numjobs ];then
        echo "Numjobs is more than cpu cores, exit!"
        # return instead of exit, because the script is sourced with ". ./test100w.sh"
        return 1
    fi
    let nu=$numjobs+1
    cpulist=""
    # Collect the i-th CPU of every hardware queue of vdb, one column at a time
    for ((i=1;i<10;i++))
    do
        list=`cat /sys/block/vdb/mq/*/cpu_list | awk '{if(i<=NF) print $i;}' i="$i" | tr -d ',' | tr '\n' ','`
        if [ -z "$list" ];then
            break
        fi
        cpulist=${cpulist}${list}
    done
    # Take numjobs CPUs from the list, starting at the second entry
    spincpu=`echo $cpulist | cut -d ',' -f 2-${nu}`
    echo $spincpu
    fio --ioengine=${ioengine} --runtime=60s --numjobs=${numjobs} --iodepth=${iodepth} --bs=${bs} --rw=${rw} --filename=${filename} --time_based=1 --direct=${direct} --name=test --group_reporting --cpus_allowed=$spincpu --cpus_allowed_policy=split
}

# Set the queue rq_affinity of the ESSD block devices, assuming nine ESSDs attached as vd[b-j]
for dev in vdb vdc vdd vde vdf vdg vdh vdi vdj
do
    echo 2 > /sys/block/$dev/queue/rq_affinity
done

sleep 5

RunFio $1 $2 $3 $4 $5 $6 $7

# RunFio 16 64 8k randwrite /data01/test libaio 1 The test100w.sh script varies in different test environments. Modify the script according to the actual conditions in your situation.

/dev/vdb in RunFio 10 64 4k randwrite /dev/vdb./dev/vdb in the example if the data loss on cloud storage does not affect businesses. Otherwise, set filename = [detailed file path], such as /mnt/test.image in the example.. ./test100w.sh 16 64 8k randwrite /data01/test libaio 1

. ./test100w.sh 16 64 8k randread /data01/test libaio 1

. ./test100w.sh 16 64 8k write /data01/test libaio 1

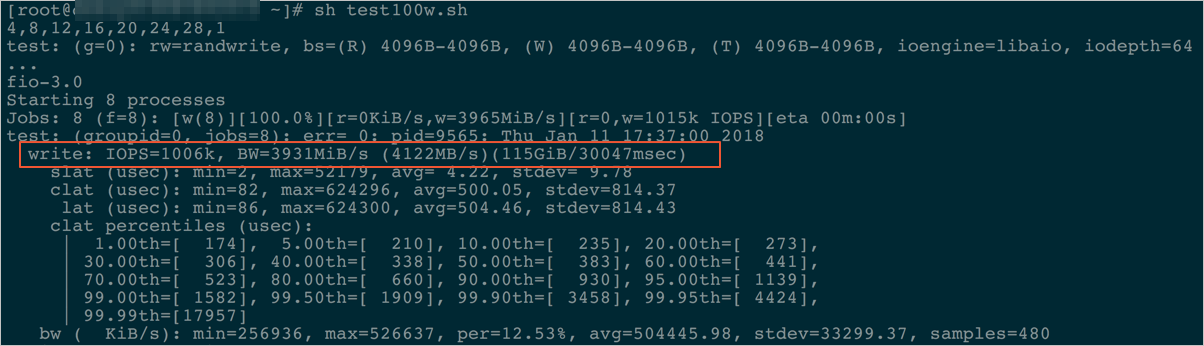

. ./test100w.sh 16 64 8k read /data01/test libaio 1 The ESSD performance test is complete when IOPS=*** appears.

write: IOPS=131k, BW=1024MiB/s (1074MB/s)(29.0GiB/30003msec)
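As a quick sanity check on a result line like the one above, the reported bandwidth should be roughly the IOPS multiplied by the block size. The sketch below assumes the sample line came from an 8k run like the commands in this article; the function name `bw_mib` is hypothetical, and the integer arithmetic rounds down.

```shell
# Approximate bandwidth in MiB/s from IOPS and block size in KiB.
# Integer arithmetic, so the result is rounded down.
bw_mib() {
    iops=$1
    bs_kib=$2
    echo $(( iops * bs_kib / 1024 ))
}

# The sample line reports IOPS=131k; with an 8 KiB block size this prints 1023,
# which is close to the reported BW=1024MiB/s.
bw_mib 131000 8
```

By the same arithmetic, one million IOPS at a 4 KiB block size corresponds to about 3906 MiB/s (`bw_mib 1000000 4`).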

The following command modifies the system parameter rq_affinity of the block device to 2.

echo 2 > /sys/block/vdb/queue/rq_affinity

| rq_affinity Value | Description |
|---|---|
| 1 | When the block device receives an I/O completion event, the completion is sent back for processing to the group that contains the vCPU which issued the I/O. With many concurrent threads, I/O completions may all be handled on the same vCPU, which creates a bottleneck and keeps performance from improving. |
| 2 | When the block device receives an I/O completion event, the completion is processed on the vCPU that originally issued the I/O. With many concurrent threads, this lets every vCPU contribute fully. |
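To confirm which value each disk currently uses, the setting can be read back from sysfs. This is a minimal sketch rather than part of the original script: the function name `show_rq_affinity` and its optional argument (the sysfs block directory, defaulting to /sys/block) are assumptions made for illustration.

```shell
# Print "<device> <rq_affinity>" for every vd* block device.
# $1: sysfs block directory (hypothetical parameter; defaults to /sys/block)
show_rq_affinity() {
    root=${1:-/sys/block}
    for f in "$root"/vd*/queue/rq_affinity; do
        [ -r "$f" ] || continue        # skip when the glob matched nothing
        dev=${f#"$root"/}              # strip the leading directory
        dev=${dev%%/*}                 # keep only the device name
        printf '%s %s\n' "$dev" "$(cat "$f")"
    done
}

show_rq_affinity
```

On an instance without vd* devices, the function prints nothing.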

The following command binds jobs to different CPU Cores:

fio -ioengine=libaio -runtime=30s -numjobs=${numjobs} -iodepth=${iodepth} -bs=${bs} -rw=${rw} -filename=${filename} -time_based=1 -direct=1 -name=test -group_reporting -cpus_allowed=$spincpu -cpus_allowed_policy=split

Note:

In normal mode, a device has only one Request-Queue, which becomes a performance bottleneck when multiple threads process I/O concurrently. In Multi-Queue mode, a device has multiple Request-Queues to process I/O, which makes full use of the backend storage. For example, with four I/O threads, bind each of the four threads to a CPU core that corresponds to a different Request-Queue. This way, the threads take full advantage of Multi-Queue mode and storage performance improves.

| Parameter | Description | Example |
|---|---|---|
| numjobs | Number of I/O threads. | 10 |
| /dev/vdb | The device name of the ESSD. | /dev/vdb |
| cpus_allowed_policy | FIO provides the parameters cpus_allowed_policy and cpus_allowed to bind vCPUs. | split |
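The note on Multi-Queue mode says that each I/O thread should be bound to a CPU core behind a different Request-Queue. One simple way to build such a list is to take the first CPU from each queue's cpu_list file. This is a simplified sketch, not the selection logic used in test100w.sh: the function name `first_cpu_per_queue` and its optional second argument (the sysfs block directory) are hypothetical, and it assumes cpu_list contains entries separated by commas and spaces, such as "0, 4".

```shell
# Build a comma-separated CPU list with the first CPU of each hardware queue.
# $1: device name, for example vdb
# $2: sysfs block directory (hypothetical parameter; defaults to /sys/block)
first_cpu_per_queue() {
    dev=$1
    root=${2:-/sys/block}
    list=""
    for f in "$root/$dev"/mq/*/cpu_list; do
        [ -r "$f" ] || continue        # skip when the glob matched nothing
        # Keep the first entry of the file and drop its trailing comma.
        cpu=$(awk '{ gsub(",", "", $1); print $1; exit }' "$f")
        [ -n "$cpu" ] || continue
        list="${list:+$list,}$cpu"
    done
    echo "$list"
}

# Prints an empty line when vdb has no mq directory.
first_cpu_per_queue vdb
```

The result could then be passed to fio as the value of cpus_allowed, together with cpus_allowed_policy=split, in place of $spincpu.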

The fio command above runs several jobs, each bound to a CPU core that corresponds to a different Queue_Id. To view the cpu_core_id bound to each Queue_Id:

Run ls /sys/block/vd*/mq/ to view the Queue_Id values of the cloud disks whose device names match vd*.

Run cat /sys/block/vd*/mq/*/cpu_list to view the cpu_core_id values bound to each queue of the cloud disks whose device names match vd*.
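The plain cat output does not show which queue each line belongs to. The sketch below prints the device and Queue_Id next to each CPU list. The function name `show_queue_cpus` and its optional argument (the sysfs block directory, defaulting to /sys/block) are assumptions made for illustration.

```shell
# Print "<device> queue <id>: <cpu list>" for every vd* block device.
# $1: sysfs block directory (hypothetical parameter; defaults to /sys/block)
show_queue_cpus() {
    root=${1:-/sys/block}
    for f in "$root"/vd*/mq/*/cpu_list; do
        [ -r "$f" ] || continue    # skip when the glob matched nothing
        rel=${f#"$root"/}          # for example vdb/mq/0/cpu_list
        dev=${rel%%/*}             # device name, for example vdb
        q=${rel#*/mq/}             # for example 0/cpu_list
        q=${q%%/*}                 # Queue_Id, for example 0
        printf '%s queue %s: %s\n' "$dev" "$q" "$(cat "$f")"
    done
}

show_queue_cpus
```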

Read previous post: How to Use FIO to Test the IO Performance of ECS Local SSD and ESSD (Part 1)
