
In March 2022, at the Alibaba Cloud Global Data Lake Summit, Alibaba Cloud unveiled Data Lake 3.0, an upgrade covering three aspects: Data Lake Management, Data Lake Storage, and Data Lake Compute. At the 2022 Apsara Conference in November, Alibaba Cloud upgraded the storage capabilities of the data lake again.

A data lake is a unified storage platform that stores various types of data in a centralized manner, provides elastic capacity and throughput, covers a wide range of data sources, and lets multiple computing, processing, and analysis engines access data directly for workloads such as data analysis, machine learning, data access, and management. It also supports fine-grained authorization and auditing.

More and more enterprises choose data lakes for data storage and management, and the application scenarios of data lakes keep expanding. Enterprises in every industry are building data lakes on the cloud. Workloads that began with simple analysis have grown to include Internet search and promotion, in-depth analysis, and, over the past two years, large-scale AI training, all built on the data lake architecture.

The cloud data lakes of many Alibaba Cloud customers now exceed 100 PB. This suggests that data analysis architectures built on data lakes will keep gaining ground. Why is this architecture so popular?

Alex Chen (a researcher at Alibaba Group and Senior Product Director of Alibaba Cloud Intelligence) believes that because enterprises generate data all the time, this data must be analyzed to unlock its value. Data analysis can be divided into real-time analysis and exploratory analysis. Real-time analysis uses known data to answer known questions. Exploratory analysis uses known data to answer unknown questions, so the data must be stored in advance, which inevitably increases storage costs.

To reduce storage costs, Alibaba Cloud adopted an architecture that separates storage from computing, so each can scale independently. Customers can ingest data into the data lake and scale out computing engines on demand. This decoupling delivers better cost-effectiveness. Alibaba Cloud Object Storage Service (OSS) serves as the unified storage layer of the data lake and connects to various business applications as well as computing and analysis platforms.

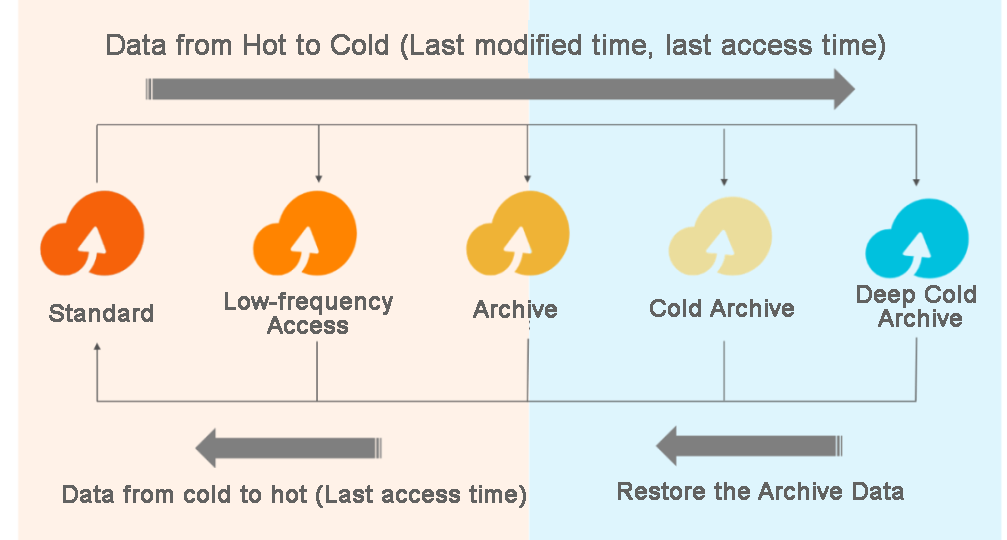

At the 2022 Apsara Conference, Alibaba Cloud Storage officially released the Deep Cold Archive storage class of OSS. OSS also supports lifecycle rules based on last access time, which let the server automatically identify hot and cold data by when each object was last accessed and store it in the appropriate tier. Even when a bucket contains many objects, you can manage the lifecycle of each object and file according to its last modification time or last access time.
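To make the idea of access-time-based tiering concrete, here is a minimal sketch of the decision such a rule encodes: the longer an object goes unread, the colder the storage class it moves to. The storage class names mirror OSS's classes, but the day thresholds, the `pick_storage_class` and `plan_transitions` helpers, and the assumption that every transition is allowed under an access-time rule are all hypothetical; the OSS lifecycle documentation defines which transitions are actually supported.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds: (minimum idle days, target storage class).
# Real lifecycle rules are configured per bucket; these numbers are illustrative only.
TIERS = [
    (0, "Standard"),
    (30, "IA"),
    (90, "Archive"),
    (180, "ColdArchive"),
    (360, "DeepColdArchive"),
]


def pick_storage_class(last_access: datetime, now: datetime) -> str:
    """Return the coldest tier whose idle-day threshold the object has reached."""
    idle_days = (now - last_access).days
    chosen = TIERS[0][1]
    for threshold, storage_class in TIERS:
        if idle_days >= threshold:
            chosen = storage_class
    return chosen


def plan_transitions(objects: dict[str, datetime], now: datetime) -> dict[str, str]:
    """Map each object key to the storage class its last access time implies."""
    return {key: pick_storage_class(ts, now) for key, ts in objects.items()}
```

For example, with `now = datetime(2022, 11, 1)`, an object last read two days earlier stays in `Standard`, while one idle for 120 days is planned for `Archive`. Because the decision is made per object, a single bucket can hold hot and cold data side by side.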

Archive and Cold Archive objects in OSS must be restored before they can be read. Restoring an Archive object takes several minutes, and restoring a Cold Archive object takes several hours, depending on the restoration priority. This delay is a real pain point for some users.

To address this, OSS introduces Direct Reading, which lets users read Archive and Cold Archive objects directly without restoring them first. Combined with data lifecycle management policies and OSS Deep Cold Archive, this reduces costs and improves efficiency, cutting overall data lake costs by 95%.
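The difference between the restore-then-read path and Direct Reading can be summarized as a small decision function. This is a conceptual sketch only, not an OSS API: `read_plan` and its parameters are hypothetical, the set of classes eligible for direct reads follows the article's description (Archive and Cold Archive), and treating Deep Cold Archive as still requiring a restore is an assumption.

```python
from enum import Enum


class StorageClass(Enum):
    STANDARD = "Standard"
    IA = "IA"
    ARCHIVE = "Archive"
    COLD_ARCHIVE = "ColdArchive"
    DEEP_COLD_ARCHIVE = "DeepColdArchive"


# Classes whose objects must normally be restored before they can be read.
RESTORE_REQUIRED = {
    StorageClass.ARCHIVE,
    StorageClass.COLD_ARCHIVE,
    StorageClass.DEEP_COLD_ARCHIVE,
}

# Assumption based on the article: Direct Reading covers Archive and Cold Archive.
DIRECT_READ_CLASSES = {StorageClass.ARCHIVE, StorageClass.COLD_ARCHIVE}


def read_plan(storage_class: StorageClass, restored: bool, direct_read_enabled: bool) -> str:
    """Return "read" if the object can be read now, else "restore_then_read"."""
    if storage_class not in RESTORE_REQUIRED or restored:
        return "read"
    if direct_read_enabled and storage_class in DIRECT_READ_CLASSES:
        return "read"
    return "restore_then_read"
```

Without Direct Reading, every unrestored cold object sends the caller down the slow path, which is the minutes-to-hours wait described above.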

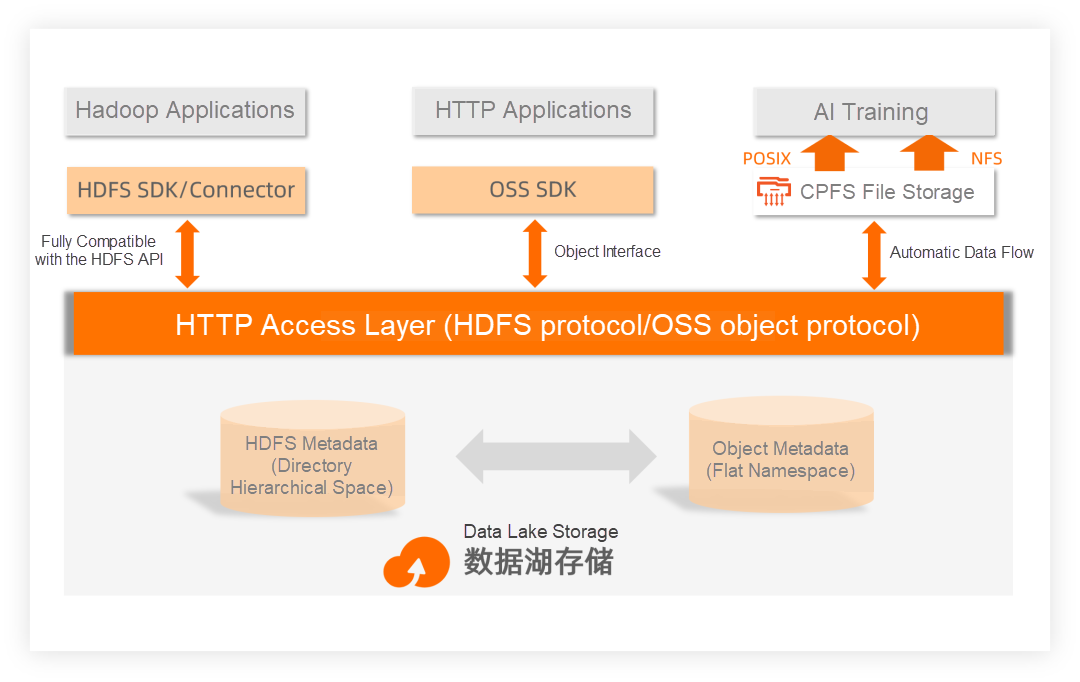

With the development of AI, IoT, and cloud-native technologies, demand for unstructured data processing is growing, and cloud object storage is increasingly used as unified storage. The Hadoop ecosystem has gradually shifted from HDFS to cloud object storage (such as S3 and OSS) as the unified storage layer of the data lake. Data lake architecture has now entered the 3.0 era. In terms of storage, it is centered on OSS and provides multi-protocol compatibility and unified metadata management. In terms of management, it offers one-stop construction and management of lake storage and computing, enabling intelligent lake construction and management.

Yaxiong Peng (a Senior Product Expert at Alibaba Cloud Intelligence) pointed out that data lake architecture 3.0 is fully compatible with HDFS, so users can migrate self-built HDFS to the data lake architecture without building their own metadata management clusters. Cloud-native data lake storage also supports multi-protocol access and unified management of various types of metadata. This integrates HDFS seamlessly with the object storage layer, enables efficient and unified data flow, management, and use across multiple ecosystems, and helps users accelerate business innovation. Read/write throughput of 100 Gbps/PB further improves data processing efficiency.

Data analysis engines keep evolving, and in AI and autonomous driving scenarios, the same data must be shared by multiple applications. As the unified storage base for data lakes on the cloud, OSS provides low-cost, reliable storage for massive data. Cloud Parallel File Storage (CPFS) is deeply integrated with OSS. For high-performance workloads such as inference and simulation, CPFS can quickly access and analyze data in OSS, moving data on demand and loading it lazily at the block level (Lazyload).
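Block-level lazy loading means a file's contents are not copied up front; each fixed-size block is fetched from the backing store the first time a read touches it, then served from a local cache. The sketch below illustrates that general technique and is not how CPFS implements it: `LazyBlockFile` is a hypothetical class, and `fetch_block` stands in for whatever ranged read against OSS a real system would issue.

```python
from typing import Callable, Dict


class LazyBlockFile:
    """A read-only file whose blocks are fetched from a backing store on first access."""

    def __init__(self, size: int, block_size: int, fetch_block: Callable[[int], bytes]):
        self.size = size
        self.block_size = block_size
        self._fetch_block = fetch_block  # hypothetical stand-in for a ranged object read
        self._cache: Dict[int, bytes] = {}
        self.fetch_count = 0  # number of blocks pulled from the backing store so far

    def _block(self, index: int) -> bytes:
        # Fetch the block only if it has never been read before.
        if index not in self._cache:
            self._cache[index] = self._fetch_block(index)
            self.fetch_count += 1
        return self._cache[index]

    def read(self, offset: int, length: int) -> bytes:
        """Read up to `length` bytes at `offset`, touching only the blocks it overlaps."""
        end = min(offset + length, self.size)
        if offset >= end:
            return b""
        out = bytearray()
        first = offset // self.block_size
        last = (end - 1) // self.block_size
        for index in range(first, last + 1):
            block = self._block(index)
            block_start = index * self.block_size
            lo = max(offset, block_start) - block_start
            hi = min(end, block_start + len(block)) - block_start
            out += block[lo:hi]
        return bytes(out)
```

A read of 100 bytes at offset 150 with 100-byte blocks fetches only blocks 1 and 2; a later read inside that range is served from the cache with no further fetches. This is why lazy loading lets compute start on large datasets without waiting for a full copy.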

In addition, CPFS lets you mount and access a file system through the POSIX client or the NFS client, which makes it easy to work with large numbers of small files.
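Once a file system is mounted, applications can use ordinary file APIs instead of object storage calls. As a minimal sketch, the function below walks a directory tree with the Python standard library and tallies small files; the mount point (for example `/mnt/cpfs`) and the 64 KiB size cutoff are hypothetical values chosen for illustration.

```python
import os


def summarize_small_files(root: str, max_bytes: int = 64 * 1024) -> tuple[int, int]:
    """Return (count, total_bytes) of files under `root` no larger than `max_bytes`.

    `root` would be a mount point such as "/mnt/cpfs" (hypothetical path);
    the code itself only relies on standard POSIX file access.
    """
    count = 0
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            size = os.path.getsize(os.path.join(dirpath, name))
            if size <= max_bytes:
                count += 1
                total += size
    return count, total
```

Because the mounted file system behaves like a local directory, the same code runs unchanged against a local path, which is the main appeal of POSIX or NFS access for small-file workloads.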

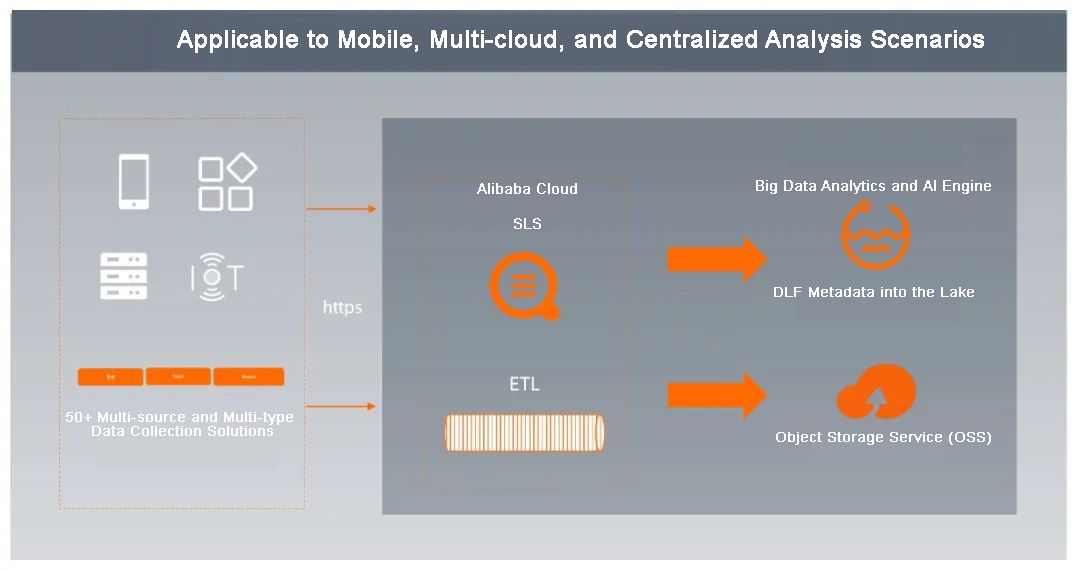

As cloud computing booms, more IT infrastructure is moving to the cloud, and data is moving away from enterprise data centers. According to statistics, 80% of data is generated outside the data center. Enterprises can transmit this data to their data centers or to the cloud through RESTful APIs, HTTP, or VPN.

When building an enterprise data lake, you can first use Data Lake Formation (DLF) to ingest data into the lake and manage its metadata, and use Simple Log Service (SLS) to deliver data from around the world to OSS in the data lake in real time. You can then take full advantage of OSS to tier hot and cold data, so that the overall data lake solution reduces costs and improves efficiency.

Cloud data centers and on-premises data centers need not only a unified namespace but also the ability to exchange data, which simplifies data management. Once data flows freely between them, computing power can be shifted from on-premises to the cloud at any time and allocated on demand. Of course, this is only possible when data from traditional applications and emerging ones (such as IoT, big data, and AI) can be integrated. Seamless cloud migration through a hybrid cloud IT architecture has become a new trend for enterprise applications. Hybrid cloud storage bridges on-premises data centers and public clouds and has become an indispensable part of the overall data lake solution.

A data lake is a future-oriented big data architecture. The data lakes with the broadest prospects are those that integrate files and objects, tier hot and cold data intelligently, and connect cloud data centers with on-premises data centers. Alibaba Cloud Data Lake 3.0 has been adopted in industries at the technological frontier, such as the Internet, finance, education, and gaming, and is widely used in scenarios with massive data, such as artificial intelligence, IoT, and autonomous driving. In the future, Alibaba Cloud hopes to work with partners to bring cloud-native data lakes to more industries and help more enterprises achieve digital innovation.
