By Zhang Lei, Senior Technical Expert at Alibaba Cloud Container Platform, CNCF Ambassador, Senior Member, and Maintainer of Kubernetes

This year, developers around the world have embraced containers for software testing and production releases, and container-based build and delivery has become routine. "Cloud-native" technology and application governance in the multi-cloud era are now everyday topics, and the "sidecar" container pattern has become a default design choice. Now that the cloud is common infrastructure, it is normal to treat containers as a basic dependency of modern software. We use containers as naturally as we use Eclipse to write Java code every day.

However, just two years ago, the container ecosystem was embroiled in fierce debates around Docker, and the prospects of container services seemed completely uncertain. At that time, many public cloud providers in China did not offer a formal Kubernetes service, and managing the entire software lifecycle on the cloud with containers was cutting-edge exploration. Who could have imagined that, within just two years, containers would become part of engineers' daily work?

As container technology has grown more popular, the past two years have witnessed a major shift in the way modern software is delivered.

Container virtualization technology dates back to the end of the 1970s. In 1979, Bell Laboratories was carrying out the final development and testing work for the release of the Unix V7 (Version 7 Unix) operating system.

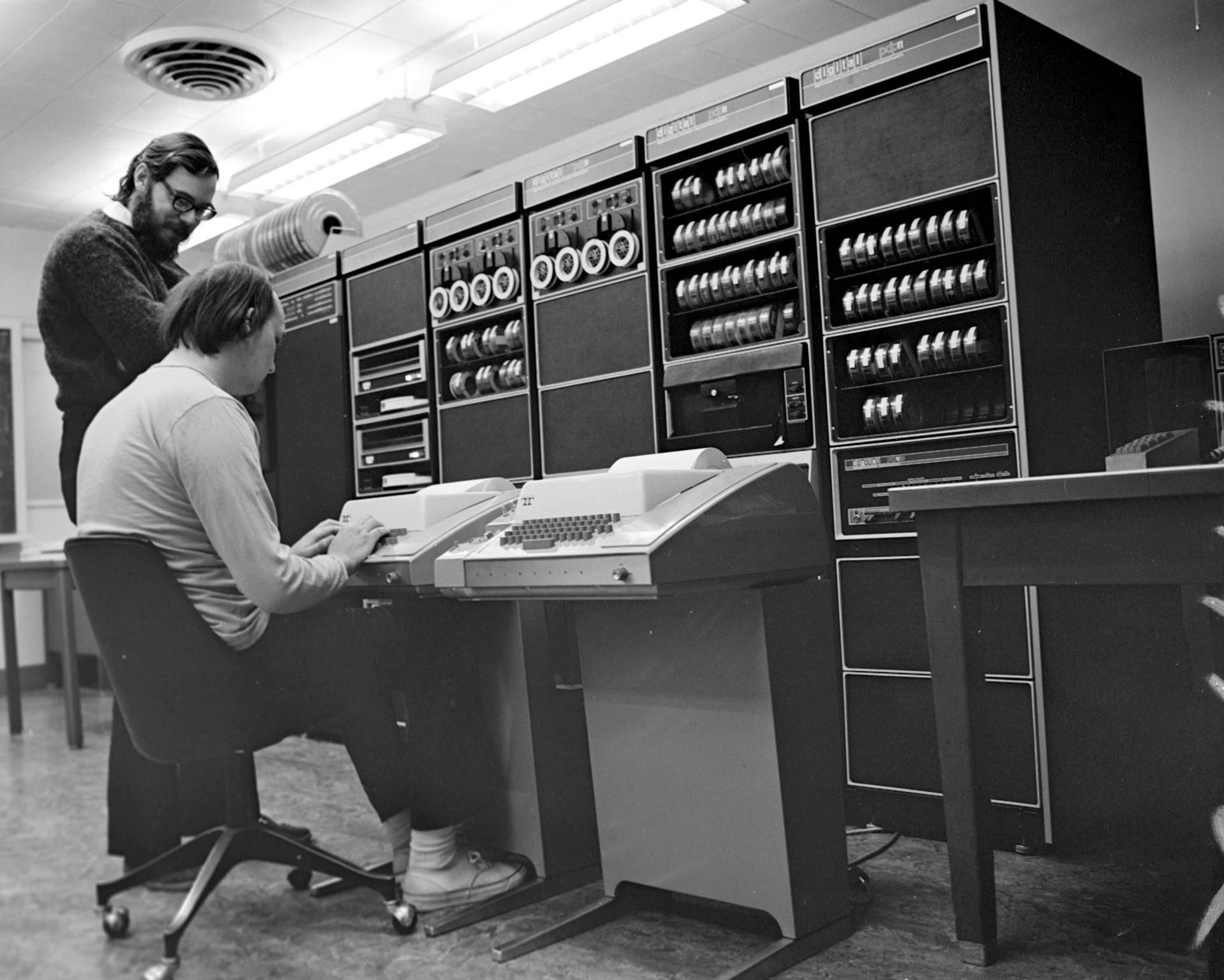

Ken Thompson (sitting) and Dennis Ritchie at a PDP-11 ©wikipedia

At that time, Unix was still an internal project of Bell Laboratories. It ran on a huge PDP-series machine that looked like a piece of audio equipment, covered in dials and controls. Even for Bell Laboratories, developing and maintaining a project as large as Unix was not easy during the last phase of the software crisis. What made things even harder was that the C language itself was still being developed alongside Unix.

During the development of Unix V7, the efficiency of building and testing was one of the most difficult problems. The reason is obvious: when a piece of system software is compiled and installed, the entire test environment is "polluted". Before the next build, installation, and test, the test environment has to be rebuilt and reconfigured. Today, with cloud computing, we can reproduce an entire cluster by using methods such as virtual machines (VMs). However, quickly destroying and rebuilding infrastructure was pure fantasy at a time when a 64 KB memory chip cost 419 dollars.

To improve efficiency, the engineers at Bell Laboratories came up with the idea of creating an isolated environment for software builds and tests within the existing OS. Specifically, they wanted to know whether a simple call could change an application's view of the file system so that a given directory appears to it as the root directory. If that turned out to be feasible, all the dependencies required to run a piece of software would be ready simply by placing the file-system portion of an entire OS into that directory.

More importantly, this capability would indirectly give developers the ability to quickly destroy and reproduce application infrastructure: they would no longer have to install and configure dependencies after the environment is built, because all the dependencies required to run the application would already be prepared as a directory tree under the OS. Developers would only need to switch the application's root directory to that directory when building and testing it.

Thus, the chroot (change root) system call was born.

As the name implies, chroot changes the root directory of the calling process and its children to a new location in the file system, so that the process can no longer access the "world above" that location. This isolated environment earned a vivid name: the chroot jail.
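The effect of chroot on path lookup can be sketched in a few lines. The Python model below is an illustration only, not how the kernel implements chroot: it maps a path seen by a jailed process onto the host directory that serves as its root, and shows why `..` cannot climb out of the jail. The function name and the directories `/srv/jail` and `/srv/v7-build` are hypothetical. The real system call is chroot(2), exposed in Python as `os.chroot`; it requires root privileges and is normally followed by a `chdir("/")`.

```python
import posixpath


def resolve_in_jail(jail_root: str, path: str, cwd: str = "/") -> str:
    """Return the host path that `path` refers to for a process chrooted at `jail_root`.

    Toy model of chroot's path confinement only: it ignores symlinks, mount
    points, and file descriptors opened before the chroot call.
    """
    # A relative path is interpreted against the jailed process's working directory.
    if not path.startswith("/"):
        path = posixpath.join(cwd, path)
    # normpath drops ".." components that would climb above "/", which mirrors how
    # a jailed process experiences its new root: "/.." is still "/".
    inside = posixpath.normpath(path)
    # Re-anchor the jail-relative path under the real directory on the host.
    return posixpath.normpath(posixpath.join(jail_root, inside.lstrip("/")))


if __name__ == "__main__":
    jail = "/srv/v7-build"
    print(resolve_in_jail(jail, "/bin/cc"))                   # /srv/v7-build/bin/cc
    print(resolve_in_jail(jail, "../../etc/passwd", "/usr"))  # /srv/v7-build/etc/passwd
```

However many `..` components a jailed process uses, the resolved path never leaves `jail_root`, which is exactly the "upper world" the process has lost access to.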

It is worth mentioning that Unix V7, the release that gave birth to chroot, was a landmark internal release of Unix by Bell Laboratories. At the end of that year, AT&T formally commercialized Unix and allowed it to be used externally, paving the way for Unix to become a classic operating system.

The birth of chroot opened the door to process isolation for the first time.

As more and more users adopted this mechanism, chroot became an important tool for configuring development and test environments and managing application dependencies. The concept of a "jail", describing an isolated process environment, also inspired further advances in this field.

In 2000, the "jail" command was released in FreeBSD, another member of the Unix family.

This marked the general availability of the FreeBSD jail environment. Compared with the chroot jail, FreeBSD jails extend the concept of "isolation" to the complete view of a process: each jail gets its own isolated process environment and user accounts (including its own, confined root user), as well as its own IP address. So, to be exact, although chroot is the origin of process environment isolation, it was the FreeBSD jail mechanism that actually turned jailed processes into sandboxes. Crucially, this sandbox is implemented through OS-level isolation and restrictions rather than hardware virtualization. However, neither FreeBSD jails (on Unix) nor the later Solaris Containers (on Solaris) played a significant role in a wider range of software delivery scenarios. In the era of jails, process-sandbox technology remained niche and known only to a small community, because the concept of the cloud had not yet been popularized.

In fact, during the years when jails were gaining attention, several similar sandbox technologies were also developed on the fast-growing Linux platform, such as Linux VServer and OpenVZ (neither of which was merged into the mainline kernel). However, like jails, these technologies remained known only to a small community. This shows once again how much the process sandbox was held back by the absence of the cloud. And we cannot talk about the cloud without mentioning the leader in the infrastructure field: Google.

Google's influence in the field of infrastructure, the core component behind cloud computing, is widely recognized in the industry. Whether through its three groundbreaking papers (on GFS, MapReduce, and Bigtable) or through internal infrastructure projects such as Borg and Omega that led the industry for years, Google plays an important role. However, in today's cloud computing market, AWS, which launched only shortly before Google's cloud offering, is undoubtedly the leader. Considering their experience with GAE, many people may find this conclusion controversial.

Google App Engine (GAE) left an indelible impression on several generations of Chinese engineers. However, even loyal GAE users may not know that GAE was actually the core cloud product with which Google planned to compete against AWS at the time. In fact, GAE was closer to an early, simplified form of Serverless than to a PaaS. In 2008, when most people did not understand what cloud computing was at all, it was difficult for a product like GAE to win enterprise users.

What we are going to talk about here is not Google's cloud strategy, but why Google technically sees application hosting services like GAE as cloud computing services.

One important reason, which many people may already know, is that Google's infrastructure stack is a container stack rather than a virtual machine stack. More importantly, within Google's system, a container is no longer just a sandbox for isolated processes but a way to encapsulate the application itself, thanks to the unique application orchestration and management capabilities of the Borg project. Built on this lightweight application encapsulation, Google's infrastructure is naturally an application-centered hosting and programming framework. This is why many ex-Googlers joke that they do not know how to write code without Borg. It is now easy to understand why this architecture, when mapped to external cloud services, became a PaaS/Serverless product like GAE.

The large-scale adoption and maturity of container-based infrastructure at Google date back to the period between 2004 and 2007. One of the important milestones in this process was the release of Process Container.

The goals of Process Container were quite straightforward and consistent with those of the sandbox technologies mentioned above: to provide processes with OS-level resource limits, priority control, resource accounting, and process control, much as virtualization technology does. These capabilities were basic requirements of Google's internal infrastructure and formed the prototype of Google's container technology. After Google engineers released Process Container in 2006, it was merged into the mainline Linux kernel the following year.

Because the term "container" already had other meanings in the Linux kernel, Process Container was renamed Cgroups (control groups). The emergence and maturity of Cgroups marked a fresh interpretation and implementation of the "container" concept in Linux. This time, the advocate of container technology was Google, a pioneer that used containers at scale to build its world-class infrastructure.
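To make Cgroups-style resource restriction concrete, here is a minimal sketch against today's cgroup v2 interface rather than the original Process Container or cgroup v1 layout. It assumes a Linux host with the unified hierarchy mounted at /sys/fs/cgroup and the memory, cpu, and pids controllers enabled for the parent group. `cgroup_v2_limits` and `apply_limits` are hypothetical helpers; `memory.max`, `cpu.max`, `pids.max`, and `cgroup.procs` are real cgroup v2 interface files.

```python
from __future__ import annotations

from pathlib import Path


def cgroup_v2_limits(memory_bytes: int, cpu_quota_us: int,
                     cpu_period_us: int = 100_000,
                     max_pids: int | None = None) -> dict[str, str]:
    """Translate resource limits into the contents of cgroup v2 interface files."""
    if memory_bytes <= 0 or cpu_quota_us <= 0 or cpu_period_us <= 0:
        raise ValueError("limits must be positive")
    settings = {
        # Hard memory ceiling in bytes; if usage cannot be reclaimed below it,
        # the OOM killer is invoked for this group.
        "memory.max": str(memory_bytes),
        # "$QUOTA $PERIOD": the group may use QUOTA microseconds of CPU per PERIOD.
        "cpu.max": f"{cpu_quota_us} {cpu_period_us}",
    }
    if max_pids is not None:
        # Upper bound on the number of processes/threads in the group.
        settings["pids.max"] = str(max_pids)
    return settings


def apply_limits(group: Path, settings: dict[str, str], pid: int) -> None:
    """Create a cgroup, write its limits, then move `pid` into it.

    Needs root on a cgroup v2 host, and the controllers must be enabled in the
    parent's cgroup.subtree_control for the interface files to exist.
    """
    group.mkdir(exist_ok=True)
    for name, value in settings.items():
        (group / name).write_text(value)
    # Writing a PID to cgroup.procs migrates that process into the group.
    (group / "cgroup.procs").write_text(str(pid))
```

For example, `apply_limits(Path("/sys/fs/cgroup/demo"), cgroup_v2_limits(256 * 2**20, 50_000), os.getpid())` would cap the current process at 256 MiB of memory and half a CPU, provided the assumptions above hold. Splitting the pure translation from the file writes keeps the limit logic testable without privileges.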

In 2008, by combining the resource management of Cgroups with the view isolation of Linux namespaces, Linux Containers (LXC), a complete container technology built on the Linux kernel, came into being. Although LXC offered users capabilities similar to those of earlier sandbox technologies such as jails and OpenVZ, it found itself in a far better position than its predecessors, because Linux was rapidly gaining share in the commercial server market.
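The other half of LXC, namespaces, changes what a process can see. A simple way to observe this on Linux is the /proc/<pid>/ns directory: per namespaces(7), each entry is a symbolic link whose target names the namespace type and an inode number, and two processes that show the same value for an entry share that namespace (strictly, the device ID should match too; this sketch compares inode numbers only). The helpers below are hypothetical; they parse those links and report which namespaces differ between two processes, for example a shell on the host and a process inside a container.

```python
from __future__ import annotations

import os
import re

# Link targets look like "uts:[4026531838]": namespace type, then inode number.
_NS_LINK = re.compile(r"^(?P<kind>\w+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a /proc/<pid>/ns/* link target into (namespace type, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link target: {target!r}")
    return match["kind"], int(match["inode"])


def read_namespaces(pid: int | str = "self") -> dict[str, int]:
    """Return {entry name: inode} for a process.

    Linux only; reading another user's process requires extra privileges.
    """
    ns_dir = f"/proc/{pid}/ns"
    result: dict[str, int] = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # skip entries we cannot read or do not recognize
        result[entry] = inode
    return result


def isolated_kinds(a: dict[str, int], b: dict[str, int]) -> set[str]:
    """Entries (e.g. "pid", "net", "mnt") where the two processes are in different namespaces."""
    return {name for name in a.keys() & b.keys() if a[name] != b[name]}
```

Comparing `read_namespaces()` for a host shell with the result for a containerized process typically shows different `mnt`, `pid`, `net`, `uts`, and `ipc` entries, which is the "view isolation" half of LXC; the Cgroups half limits how much of the machine that view may consume.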

Since 2008, industry giants such as AWS and Microsoft have made continuous efforts in the public cloud market, soon giving rise to an emerging industry called PaaS.

Because these incumbents enjoyed first-mover advantages and high technical barriers in the IaaS layer, more and more technology companies, including those affected by the public cloud and later entrants to cloud computing, began to consider how to build new technologies and business value on top of IaaS while avoiding the wrong path that GAE had taken. In this context, a group of open-source, platform-level projects emerged that implemented the abstract "PaaS" concept for the first time.

These PaaS projects were positioned as application hosting services. Unlike hosted public cloud services such as GAE, these open PaaS projects aimed to build an application management ecosystem completely independent of the IaaS layer. The goal was to capture the higher-level entry point to the cloud, and even to every data center, by taking advantage of the fact that PaaS is closer to developers. This meant that PaaS projects had to be able to encapsulate applications submitted by users and quickly deploy them to the underlying infrastructure without depending on IaaS-layer virtualization. The open-source, neutral, lightweight, and agile Linux container naturally became the best choice for hosting and deploying applications in PaaS.

After acquiring SpringSource (the company behind the Spring Framework) in 2009, VMware gave the name of a Java PaaS project within SpringSource to its own internal PaaS project, which was later open-sourced in 2011. This project is Cloud Foundry. Created in 2009, Cloud Foundry was the first project to define PaaS clearly and completely.

Cloud Foundry introduced many important concepts to the cloud computing industry for the first time, and they have since become widely accepted. These include "a PaaS project manages, orchestrates, and schedules applications directly, so that users focus on business logic rather than infrastructure" and "a PaaS project encapsulates and starts applications by using container technology". It is worth mentioning that Cloud Foundry used Warden to start and operate containers. Warden was initially a wrapper around LXC and was later re-architected to work directly with Cgroups and Linux namespaces.

These increasingly popular PaaS projects shared the goal of GAE, which Google had released earlier: developers should focus on business logic, which has the highest value, rather than on the underlying infrastructure (for example, virtual machines). This idea only became truly convincing once the popularity of the cloud made more and more people aware of the high complexity and cost of managing infrastructure. In this blueprint, the Linux container broke out of the limits of the process sandbox and began to serve as an application container. At this new stage, a container became equivalent to an application, eventually allowing platform-layer systems to manage the full application lifecycle.

If things had continued this way, container technology and cloud computing would have evolved toward PaaS and an "application-centered" model. That is likely what would have happened, had the company Docker never been founded.

Unless you witnessed these changes firsthand, you may find it hard to believe that PaaS, and even the entire cloud computing industry, was completely transformed by a startup's release of an open-source project in 2013. Yet the release of this project is indeed a microcosm of the transformation of the entire cloud computing industry over the past five years.

There is no need to elaborate on the release of the Docker project and its relationship with PaaS. Calling it a "dimensionality reduction attack" says enough: it abruptly ended what had been fierce debates in the industry.

When Docker was first released, it was nothing more than a user of LXC, and the way it created and used application containers was essentially no different from Warden. What really transformed PaaS, as we now know, was the Docker project's killer feature: container images.

How applications are encapsulated is not something developers care about, so a PaaS project can take over that job. How applications are packaged, however, matters to every developer. To address this, Cloud Foundry provides Buildpacks, which wrap an application's executable (for example, a WAR package) together with configuration information and built-in start and stop scripts that Cloud Foundry recognizes.

Docker, by contrast, uses a container image to package the entire environment an application needs to run (namely, the OS file system). This approach solved the consistency problem that had long plagued PaaS users. Building a Docker image once and running it anywhere is far smarter than building a Buildpack, which cannot even guarantee a consistent development and test environment.

More importantly, Docker introduced layers into container images. Building, pushing, and updating images layer by layer (each layer created by a commit) clearly borrows from Git, and it brings the same advantage that GitHub does: thanks to the image registry Docker Hub, building a Docker image is no longer a tedious chore but a way for you and your software to take part in global software distribution.
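The layer idea can be illustrated with a toy model. In the sketch below, which is not the OCI image format, each layer is a dict from path to file content, with `None` standing in for a deletion (real images use `.wh.`-prefixed whiteout files). Layers are content-addressed with SHA-256, the merged view applies them bottom to top the way a union filesystem does, and a push only uploads digests the registry does not already have. All function names are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
from typing import Dict, List, Optional, Set

# Toy layer: path -> file content, or None to mark the path as deleted in this layer.
Layer = Dict[str, Optional[str]]


def layer_digest(layer: Layer) -> str:
    """Content-address a layer: identical content always yields the same digest."""
    canonical = json.dumps(layer, sort_keys=True).encode("utf-8")
    return "sha256:" + hashlib.sha256(canonical).hexdigest()


def merged_view(layers: List[Layer]) -> Dict[str, str]:
    """Stack layers from bottom to top, as a union filesystem presents an image."""
    view: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)  # a deletion in an upper layer hides the lower file
            else:
                view[path] = content  # an upper layer shadows the same path below it
    return view


def layers_to_push(layers: List[Layer], registry_digests: Set[str]) -> List[str]:
    """Return the digests the registry is missing; shared base layers are skipped."""
    return [d for d in map(layer_digest, layers) if d not in registry_digests]
```

Two application images built on the same base layer share that layer's digest, so pushing the second image uploads only its application layer. This reuse, much like Git objects shared across commits, is what keeps layered builds and distribution cheap.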

At this point, you may realize that Docker actually solved a much bigger problem at a higher level: how software should be delivered. Once the software delivery method is defined clearly and completely, building a software hosting platform such as a PaaS becomes simple. This is the fundamental reason why Docker said several times that it was just standing on the shoulders of giants. Without the many useful technologies developed and refined over the previous decade, such as the Linux container, using a single open-source project to define and unify the software delivery process would have been a daydream.

Today, container images are the de facto standard for modern software delivery and distribution. However, Docker has not gained a corresponding leadership position in this field. The reason is apparent: after its huge success with container technology, Docker made some mistakes in the later "orchestration war". In fact, Docker solved the most critical technical problem of "application delivery" with the clever innovation of the container image. However, container technology is not a silver bullet for the higher-level problem of how to define and manage applications. In the "application" domain, which is closely tied to developers, complexity and flexibility are unavoidable requirements, while container technology naturally demands that applications be microservice-oriented and follow the "single responsibility" principle. This is very difficult for the vast majority of real enterprise users, and these users happen to be the key to the cloud computing industry.

Compared with Docker's application definition method, which centers on a "single container", the Kubernetes project put forward a complete set of container design patterns and corresponding controller models. This clarified how to build a container-centered application delivery and development paradigm that truly connects with developers. Docker, Mesosphere, and the Kubernetes project had different understandings and top-level designs for the "application" layer, and this difference was the core of the so-called "orchestration war".

By the end of 2017, the experience Google had accumulated over the previous decade in building the world's most advanced containerized infrastructure had helped the Kubernetes project win a key leadership position, and it pushed CNCF, an organization and ecosystem centered on the keyword "Cloud-native", to its peak.

Most interestingly, the "soul" that Google poured into the Kubernetes project is neither the large-scale scheduling and resource management capabilities that Borg/Omega accumulated over the years nor the "three papers", which represented technology other companies could not catch up with at the time. The design in Kubernetes that best reflects Google's container philosophy is the application orchestration and management capability derived from the Borg/Omega system.

Kubernetes is known as an API-heavy project, but more precisely it is an API-centered project. The container design patterns, the controller model, and the extremely complex implementation and extension mechanisms of the apiserver only make sense in light of this declarative API. Behind these seemingly complex designs and implementations lies a single purpose: enabling users to get the maximum value from containers and the cloud when managing applications.

For this purpose, Kubernetes groups containers together and uses the Pod concept to simulate the behavior of a process group. For the same purpose, Kubernetes insists on using the declarative API plus the controller model to orchestrate applications, and on using the creation and update (PATCH) of API resource objects to drive the continuous operation of the entire system. More specifically, with Pods and container design patterns, the infrastructure can interact with and respond to applications (rather than containers), directly connecting the cloud and the application. With the declarative API, the application can be truly decoupled from the details and logic of the cloud, such as lower-level resources, scheduling, orchestration, networking, and storage. We can call these designs "Cloud-native application management ideas", and they are the key to letting developers focus on business logic and make the most of the cloud.
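The declarative API plus controller model boils down to a loop that compares the state the user declared with the state actually observed, and acts only on the difference. The sketch below is a deliberately simplified, hypothetical controller; the real Deployment controller works through ReplicaSets, rolling updates, and the API server. Changing a `DesiredState` field plays the role of creating or PATCHing an API object.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DesiredState:
    """What the user declares, analogous to an API object's spec."""
    image: str
    replicas: int


@dataclass
class Cluster:
    """What actually exists: pod name -> image it runs."""
    pods: Dict[str, str] = field(default_factory=dict)
    next_id: int = 0


def reconcile(desired: DesiredState, cluster: Cluster, prefix: str = "web") -> List[str]:
    """One controller pass: move observed state toward desired state, return the actions taken."""
    actions: List[str] = []
    # 1. Remove pods whose image no longer matches the declared one.
    for name, image in list(cluster.pods.items()):
        if image != desired.image:
            del cluster.pods[name]
            actions.append(f"delete {name}")
    # 2. Scale down if there are more pods than declared.
    while len(cluster.pods) > desired.replicas:
        name = sorted(cluster.pods)[-1]
        del cluster.pods[name]
        actions.append(f"delete {name}")
    # 3. Scale up until the declared replica count is reached.
    while len(cluster.pods) < desired.replicas:
        name = f"{prefix}-{cluster.next_id}"
        cluster.next_id += 1
        cluster.pods[name] = desired.image
        actions.append(f"create {name}")
    return actions
```

Calling `reconcile` repeatedly is safe: once the observed state matches the declaration it returns an empty list, which is why a controller can simply run in a loop and react to every change. Replacing all outdated pods at once is a simplification; a real rollout would replace them gradually.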

What the Kubernetes project has been working on, therefore, is a further answer to the perennial question of "application delivery". This time, however, Kubernetes is trying to clearly define what an "application" is in the cloud era, rather than delivering a single container or container image. Here, an application is an organic combination of a group of containers, plus a description of the network and storage it needs to run. The YAML file that describes such an application is stored in etcd through the API server, and the controller model then continuously drives the state of the entire infrastructure toward the state declared by the user. This is the core working principle of Kubernetes.
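As an illustration of an "application" in this sense, here is a hypothetical Deployment built as a Python dict, to stay in the same language as the other sketches; `kubectl apply -f` accepts JSON as well as YAML. Its Pod template combines an application container with a made-up log-shipping sidecar that share an `emptyDir` volume, and it declares the port the application listens on. The field names follow the `apps/v1` Deployment API; the names, images, port, and paths are placeholders.

```python
import json


def web_app_manifest(name: str, image: str, replicas: int = 2) -> dict:
    """Build a Deployment whose Pods pair an app container with a log-shipping sidecar."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Storage: a scratch volume shared by both containers for the Pod's lifetime.
                    "volumes": [{"name": "logs", "emptyDir": {}}],
                    "containers": [
                        {
                            "name": "app",
                            "image": image,
                            # Network: the port the application listens on.
                            "ports": [{"containerPort": 8080}],
                            "volumeMounts": [{"name": "logs", "mountPath": "/var/log/app"}],
                        },
                        {
                            # Hypothetical sidecar image, used only for illustration.
                            "name": "log-shipper",
                            "image": "example.com/log-shipper:latest",
                            "volumeMounts": [
                                {"name": "logs", "mountPath": "/logs", "readOnly": True}
                            ],
                        },
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # Pipe the output to `kubectl apply -f -` to submit it to a cluster.
    print(json.dumps(web_app_manifest("web", "nginx:1.25"), indent=2))
```

Once submitted, this object is the declared state that controllers such as the one sketched for the controller model keep reconciling against.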

Now let us come back to 2019, when software delivery is being redefined by Kubernetes and containers.

At this point, the Kubernetes project continues to push the definition, management, and delivery of applications to new heights. We have seen some problems and shortcomings in the existing model, especially in how the declarative API can better match the user experience. Kubernetes still has a long way to go here, but it is moving forward fast.

Meanwhile, the entire cloud computing ecosystem is rethinking the "story" of PaaS. Cloud Run, released at Google Cloud Next 2019, has effectively announced that GAE has risen from the ashes, this time on top of the standard APIs of Kubernetes and Knative. Another typical example is that more and more applications are abstracted into functions in a more "extreme" way, so that they are hosted entirely in an infrastructure-independent environment (FaaS). If the container fully encapsulates the application's environment and thus returns control of application delivery to developers, then the function separates the application from its environment and hands control of application delivery to the FaaS platform. It is not hard to see that cloud computing, after Docker disrupted its path toward PaaS, has begun to converge on PaaS again with a brand-new, container-based idea. But this time, PaaS may go by the name Serverless.

We also see that the boundaries between clouds are being rapidly smoothed away by technology and open source. More and more software and frameworks are no longer designed to bind directly to a particular cloud. After all, you cannot ease users' worries about business competition, nor can you stop more and more users from deploying Kubernetes in every cloud and data center around the world. We often compare the cloud to water, electricity, and coal, and advise developers not to worry about "power generation" or "coal burning". In fact, developers not only do not care about these things, they may not even know where the water, electricity, and coal come from. In the cloud world of the future, developers will deliver their applications anywhere in the world without any difference, probably as naturally as we now plug a computer into any socket in the room. This is why more and more developers are discussing "Cloud-native".

We cannot foresee the future, but the evolution of code and technology tells us that future software will inevitably be built on the cloud. This is where the ongoing self-revolution around the essential question of "software delivery" ultimately leads, and it is the core assumption behind the "Cloud-native" concept. So-called "Cloud-native" actually defines the optimal path for applications to make maximum use of cloud capabilities and realize the value of the cloud. Along this path, "Cloud-native" is meaningless without its carrier, the application. Container technology is one of the important means of implementing this concept and continuing the revolution in software delivery.

As for the Kubernetes project, it is indeed the core and key to the implementation of the entire "Cloud-native" concept. But more importantly, in this technological revolution on software, Kubernetes does not need to work its way into the PaaS field. It will become a "highway" connecting the cloud and the application to quickly deliver applications to any place in the world in a standard and efficient way. The delivery destination here can be either an end-user or PaaS/Serverless, thus creating a more diversified application hosting ecosystem. The value of the cloud will definitely return to the application itself.

Driven by the cloud, many enterprises have begun to evolve their business and technology toward "Cloud-native". The hardest challenge in this process is how to migrate, deliver, and continuously release applications and software on Kubernetes. In this wave of technological change, "Cloud-native Application Delivery" has become one of the most popular technical keywords in the 2019 cloud computing market.

Alibaba began putting the Cloud-native technology system into practice with containers in 2011. With no examples in the industry to learn from, Alibaba gradually built a containerized infrastructure architecture that serves the entire Alibaba Group and is comparable to those of the world's top technology companies. Drawing on its experience managing clusters at the scale of tens of thousands, the Alibaba Container Platform Team has explored and summarized four methods to make delivery more intelligent and standardized:

1. Everything on Kubernetes: use K8S to manage everything, including K8S itself.
2. Application release and rollback policy: applications are released in phases according to rules, and this includes releasing the kubelet itself.
3. The simulated environment and the production environment are separated by image segmentation.
4. Enough effort is invested in monitoring to make Kubernetes more transparent, so that problems are discovered, prevented, and solved as early as possible.
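Phased release with rollback can be sketched as follows. This is a hypothetical illustration of the general technique, not Alibaba's internal release system: instances are upgraded in cumulative batches (the percentages are made up), a health check runs after each batch, and a failed check rolls back everything upgraded so far. `upgrade`, `healthy`, and `rollback` are placeholder callbacks that a real system would wire to its deployment and monitoring tooling.

```python
from __future__ import annotations

from typing import Callable, List


def plan_batches(total: int, percents: List[int]) -> List[int]:
    """Turn cumulative rollout percentages into batch sizes (integer math, rounded up)."""
    if total <= 0:
        return []
    # Cumulative targets: ceil(total * p / 100), so a 1% canary still covers one instance.
    targets = {min(total, -(-total * p // 100)) for p in percents if p > 0}
    targets.add(total)  # the last batch always completes the rollout
    sizes: List[int] = []
    done = 0
    for target in sorted(targets):
        sizes.append(target - done)
        done = target
    return sizes


def rollout(instances: List[str], percents: List[int],
            upgrade: Callable[[str], None],
            healthy: Callable[[], bool],
            rollback: Callable[[List[str]], None]) -> bool:
    """Upgrade instances batch by batch; on a failed health check, roll back and stop."""
    done: List[str] = []
    for size in plan_batches(len(instances), percents):
        batch = instances[len(done):len(done) + size]
        for instance in batch:
            upgrade(instance)
        done.extend(batch)
        if not healthy():
            rollback(done)  # revert every instance touched so far
            return False
    return True
```

For 200 instances and cumulative targets of 1%, 10%, and 50%, `plan_batches` yields batches of 2, 18, 80, and 100. Checking health between batches is what turns monitoring into an automatic brake on a bad release.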

Recently, the Alibaba Cloud Container Platform Team announced two community projects: Cloud Native App Hub (available only in Chinese) and OpenKruise. Cloud Native App Hub is a Kubernetes application management center for all developers, and OpenKruise is a set of open-source Kubernetes automation tools distilled from the world's top-tier Internet scenarios.

Cloud Native App Hub provides a domestic mirror site for Helm applications, making it easy for developers in China to obtain cloud-native application resources. It also promotes a standardized application packaging format and delivers applications to a K8S cluster with one click, which greatly simplifies delivering cloud-native applications to multiple clusters. The OpenKruise (Kruise) project aims to become a "Cloud Native Application Automation Engine" that solves many O&M pain points in large-scale application scenarios. It is derived from years of best practices in large-scale application deployment, release, and management across the Alibaba Economy. It addresses the automation of applications on Kubernetes along several dimensions, including deployment, upgrades, elastic scaling, QoS adjustment, health checks, migration, and repair.

As a next step, the Alibaba Cloud Container Platform Team will build on these projects and continue to work with the entire ecosystem to promote a series of standards, such as cloud-native application definitions, K8S CRD and Operator programming paradigms, and enhanced K8S automation plug-ins, so that cloud-native applications can be delivered, updated, and deployed rapidly in large-scale, multi-cluster scenarios.

We look forward to working together with you to welcome the arrival of the Cloud-Native era!

Zhang worked for Hyper and Microsoft Research (MSR) and is currently working on Kubernetes and related upstream and downstream work.
