By Luotian

With the rapid growth of the Internet industry, the number of users has increased significantly, and the resulting concurrent requests place immense pressure on systems.

Software systems strive for three goals: high performance, high concurrency, and high availability. The three are distinct but related, and each draws on a broad body of skills and knowledge, too much to cover comprehensively in one article. This article therefore focuses on high concurrency.

High concurrency poses challenges to systems such as performance degradation, resource contention, and stability issues.

High concurrency refers to the ability of a system or application to receive a large number of concurrent requests in the same period. Specifically, a system in a high-concurrency environment needs to handle a large number of requests simultaneously without significant performance degradation or increased response latency.

High-concurrency scenarios are common on popular websites, e-commerce platforms, and social media applications. For instance, on e-commerce platforms, large numbers of users browse, search for products, and place orders at the same time. Similarly, on social media platforms, many users post, like, and comment concurrently. These situations require the system to handle a substantial number of requests efficiently while maintaining performance, availability, and user experience.

• Decreased system performance and increased latency

• Resource contention and exhaustion

• Challenges to system stability and availability

Three strategies are commonly used to handle high concurrency:

• Caching: relieve system load and improve response speed.

• Rate limiting: control the number of concurrent requests and protect the system from overload.

• Degradation: guarantee the stability of core features by dropping non-critical functions or simplifying processing.

In website and app development, caching is indispensable for improving access speed and reducing pressure on the database. Under high concurrency its role is even more pronounced: it not only reduces database load effectively but also improves system stability and performance, giving users a better experience.

The principle of caching is to look for the data in the cache first. If the data is in the cache, it is returned directly to the user. If it is not, the data is read from the slower backing store and then put into the cache.
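
The read path described above can be sketched in a few lines of Python. This is only an illustration: `SlowStore`, `get_with_cache`, and the sample key are hypothetical names, and a plain dictionary stands in for a real cache.

```python
class SlowStore:
    """Hypothetical stand-in for a slow backing store such as a database."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.reads = 0  # counts how often the slow path is taken

    def load(self, key):
        self.reads += 1
        return self.rows.get(key)


def get_with_cache(key, cache, store):
    """Return the value for key, consulting the cache before the slow store."""
    if key in cache:             # cache hit: return immediately
        return cache[key]
    value = store.load(key)      # cache miss: read from the slow store
    if value is not None:
        cache[key] = value       # populate the cache for later requests
    return value


store = SlowStore({"sku-1": "Laptop"})
cache = {}
first = get_with_cache("sku-1", cache, store)   # miss, goes to the store
second = get_with_cache("sku-1", cache, store)  # hit, served from the cache
```

After the two calls, `store.reads` is 1: only the first request reached the slow store.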

Browser caching refers to storing resources in a webpage such as HTML, CSS, JavaScript, and images in users' browsers so that the same resource can be directly obtained from the local cache when subsequent requests are made, instead of downloading it from the server again.

Browser caching is suitable for static web pages whose content rarely changes and for static resources. In these scenarios, browser caching can significantly improve website performance and user experience, and reduce server load.

With browser caching, you control the cache behavior by setting the Expires and Cache-Control fields in the response header.
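
As a rough illustration of those two fields, the sketch below builds them for a response that may be cached for one hour. The helper name `cache_headers`, the `public` directive, and the one-hour lifetime are assumptions chosen for the example, not values taken from the article.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def cache_headers(max_age_seconds, now=None):
    """Build Cache-Control and Expires header values for a cacheable response."""
    now = now or datetime.now(timezone.utc)
    expires = now + timedelta(seconds=max_age_seconds)
    return {
        # Relative lifetime in seconds
        "Cache-Control": f"public, max-age={max_age_seconds}",
        # Absolute expiry time in HTTP date format (always GMT)
        "Expires": format_datetime(expires, usegmt=True),
    }


# A fixed timestamp is used so the result is reproducible.
headers = cache_headers(3600, now=datetime(2024, 1, 1, tzinfo=timezone.utc))
```

When both fields are present, HTTP/1.1 caches give `Cache-Control: max-age` precedence over `Expires`.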

Note

Browser caching is intended for data that is not time-sensitive, such as product frames, seller ratings, comments, and advertising copy. Cached data has an expiration time and is controlled by the response header. Data with strict real-time requirements is not suitable for browser caching.

Client-side caching refers to storing data on the client, such as in the browser or a mobile app, to improve access speed and reduce server requests.

During a big sales promotion, to keep traffic from overwhelming the server, you can send assets such as JS, CSS, and images to the client in advance for caching, so that they are not requested again during the promotion. In addition, some background data or style files can be stored in the client-side cache so that the app keeps working normally if the server or the network fails.

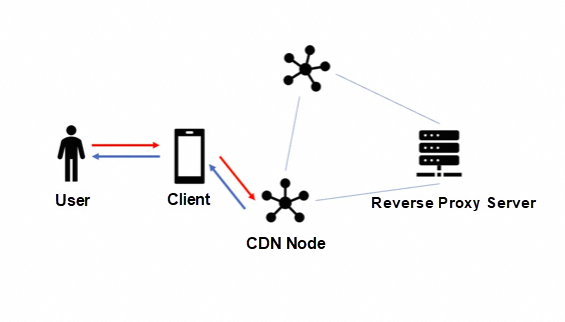

A Content Delivery Network (CDN) is a distributed network built on a bearer network. It consists of edge node servers distributed in different regions.

CDN caching is usually used to store static page data, event pages, and images. It has two caching mechanisms: the push mechanism, which actively pushes data to the CDN nodes, and the pull mechanism, which pulls data from the source server and stores it on the CDN nodes at first access.

CDN caching improves website access speed and is suitable for scenarios where traffic is heavy, access is slow, and data changes infrequently.

Common CDN providers include Cloudflare, Akamai, Fastly, and AWS CloudFront. They operate globally distributed networks to accelerate content delivery and improve performance, and they provide consoles and APIs for configuring caching rules, managing cached content, and refreshing and updating the cache.
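
The difference between the two mechanisms can be shown with a toy model. `EdgeNode` is a hypothetical class, and the origin is just a dictionary. Real CDNs expose these operations through their own consoles and APIs.

```python
class EdgeNode:
    """Hypothetical CDN edge node holding cached copies of origin content."""

    def __init__(self, origin):
        self.origin = origin        # dict standing in for the source server
        self.cache = {}
        self.origin_fetches = 0     # how many times the origin was contacted

    def push(self, path, content):
        """Push mechanism: content is placed on the node ahead of any request."""
        self.cache[path] = content

    def get(self, path):
        """Pull mechanism: on first access, fetch from the origin and keep a copy."""
        if path not in self.cache:
            self.origin_fetches += 1
            self.cache[path] = self.origin[path]
        return self.cache[path]


origin = {"/sale.html": "<h1>Sale</h1>", "/logo.png": "PNG-BYTES"}
edge = EdgeNode(origin)
edge.push("/sale.html", origin["/sale.html"])  # pre-warmed before the event
page = edge.get("/sale.html")   # served from the node, no origin fetch
logo = edge.get("/logo.png")    # first access pulls from the origin
logo_again = edge.get("/logo.png")  # second access is served from the node
```

In this run the origin is contacted once, for the first request of `/logo.png`.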

Reverse proxy caching refers to storing the response to requests in the reverse proxy server to improve service performance and user experience. It stores frequently requested static content in the proxy server, and when a user requests the same content, the proxy server returns the cached response directly without requesting the source server again.

This method is suitable for scenarios where accessing external services is slow but the data changes infrequently.

Local caching refers to storing data or resources in client storage media, such as hard disks, memory, or databases. It can be temporary, valid only while the application is running, or persistent, remaining valid across application sessions.

Local caching is suitable for scenarios where data is frequently accessed, or where offline access, reduced bandwidth consumption, and a better user experience are required.

Local caching is generally divided into disk caching, CPU caching, and application caching.
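
As one example of application caching held in process memory (the temporary kind, lost when the application exits), Python's standard `functools.lru_cache` decorator can memoize a function's results. The function `product_name` and the `calls` counter are hypothetical and exist only to make the effect visible.

```python
from functools import lru_cache

calls = {"count": 0}  # tracks how often the underlying function really runs


@lru_cache(maxsize=128)  # keep at most 128 recent results in memory
def product_name(product_id):
    """Pretend this is an expensive lookup."""
    calls["count"] += 1
    return f"product-{product_id}"


product_name(7)  # computed and stored
product_name(7)  # served from the in-process cache
info = product_name.cache_info()
```

Here `info.hits` and `info.misses` are both 1, and the function body ran only once.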

Distributed caching refers to a cache solution that spreads cached data across multiple servers.

Distributed caching is suitable for scenarios that require high-concurrency reads, data sharing, and collaborative processing, that need elasticity and scalability, and that aim to reduce the number of backend requests.
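
To illustrate how data can be spread across servers, the sketch below assigns each key to a node by hashing it. This is a simplification under stated assumptions: `ShardedCache` and the node names are hypothetical, and each node is an in-memory dictionary rather than a real cache server.

```python
import hashlib


def node_for(key, nodes):
    """Pick a node for a key by hashing the key (simple modulo sharding)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


class ShardedCache:
    """Hypothetical client that spreads keys over several cache nodes."""

    def __init__(self, node_names):
        self.nodes = list(node_names)
        # Each node is modeled as its own dictionary.
        self.storage = {name: {} for name in self.nodes}

    def set(self, key, value):
        self.storage[node_for(key, self.nodes)][key] = value

    def get(self, key):
        return self.storage[node_for(key, self.nodes)].get(key)


cache = ShardedCache(["cache-a", "cache-b", "cache-c"])
for i in range(100):
    cache.set(f"user:{i}", i)
total_stored = sum(len(node) for node in cache.storage.values())
```

Each key lives on exactly one node, so `total_stored` equals the number of keys written. Modulo sharding remaps most keys when the node count changes, which is why consistent hashing is a common alternative in practice.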

Keywords: Data missing from both the cache and the database, concurrent access

Cache penetration refers to the situation where the requested data exists in neither the cache nor the database, so every request for it misses the cache and goes on to query the database for a record that does not exist. A large number of such requests may bring the database down.
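
One commonly used mitigation, which the text above does not describe and is shown here only as an assumption, is to cache a placeholder for keys the database does not have. Repeated requests for a missing record then stop at the cache. `Database`, `lookup`, and `_MISSING` are hypothetical names.

```python
_MISSING = object()  # placeholder meaning "the database has no such record"


class Database:
    """Hypothetical database that counts the queries it receives."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.queries = 0

    def find(self, key):
        self.queries += 1
        return self.rows.get(key)


def lookup(key, cache, db):
    cached = cache.get(key)
    if cached is _MISSING:       # known-missing key: do not touch the database
        return None
    if cached is not None:       # ordinary cache hit
        return cached
    value = db.find(key)
    # Cache the placeholder when the record does not exist.
    cache[key] = _MISSING if value is None else value
    return value


db = Database({"item:1": "Phone"})
cache = {}
results = [lookup("item:-1", cache, db) for _ in range(3)]
```

All three lookups return `None`, but `db.queries` is 1. In a real system the placeholder would normally be given a short expiration time so that records created later become visible.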

Keywords: Expiration of a single hot key, concurrent access

Cache breakdown refers to the situation where hot data exists in the database but not in the cache, for example because its key has just expired. When a large number of requests access that data at the same time, they all miss the cache and reach the database, which may bring it down.
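
A frequently used countermeasure, offered here as an assumption rather than as the article's prescription, is to let only one request rebuild the hot key while the others wait. The sketch uses a single lock and a second check inside it. `HotKeyLoader` and `load_from_db` are hypothetical names.

```python
import threading
import time


class HotKeyLoader:
    """Rebuilds a missing cache entry once, even under concurrent access."""

    def __init__(self, load_from_db):
        self.load_from_db = load_from_db
        self.cache = {}
        self.lock = threading.Lock()

    def get(self, key):
        value = self.cache.get(key)
        if value is not None:
            return value
        with self.lock:                      # only one thread rebuilds
            value = self.cache.get(key)      # re-check after acquiring the lock
            if value is None:
                value = self.load_from_db(key)
                self.cache[key] = value
            return value


db_calls = []


def load_from_db(key):
    db_calls.append(key)   # record each database access
    time.sleep(0.01)       # simulate a slow query
    return f"value-for-{key}"


loader = HotKeyLoader(load_from_db)
results = []
threads = [
    threading.Thread(target=lambda: results.append(loader.get("hot-item")))
    for _ in range(20)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Twenty concurrent requests produce a single database call. A single process-wide lock keeps the example short. A per-key lock, or a distributed lock when several application servers share the cache, would reduce contention.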

Keywords: Expiration of batch keys, concurrent access

Cache avalanche refers to the simultaneous expiration of a large number of cache keys, which sends a large number of requests to the database and may eventually bring it down.

Cache consistency refers to data consistency between the cache and the DB. Various means are needed to keep the cache consistent with the DB, or at least eventually consistent with it.
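
One widely used way to avoid simultaneous expiration, shown here as an assumption since the text above does not name a remedy, is to add a random offset to each key's expiration time. The function name and the numbers are illustrative.

```python
import random


def ttl_with_jitter(base_seconds, jitter_seconds, rng=random):
    """Return the base expiration time plus a random offset in [0, jitter_seconds]."""
    return base_seconds + rng.randint(0, jitter_seconds)


rng = random.Random(42)  # seeded so the example is reproducible
ttls = [ttl_with_jitter(600, 120, rng) for _ in range(1000)]
```

Every value falls between 600 and 720 seconds, so keys written in the same batch expire at different moments instead of all at once.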

The cache consistency issue can be addressed at different layers:

1. Database Layer:

• At the database layer, transactions can be used to ensure data consistency. By placing read and write operations in the same transaction, you can ensure that data updates and queries are consistent.

• You can use database triggers or stored procedures to actively trigger a cache update whenever data is updated, so that the cache and the database stay consistent.

2. Cache Layer:

• At the cache layer, cache update policies can maintain consistency between the cache and the database: scheduled tasks update the cache regularly, or an asynchronous message queue (MQ) updates it when the data changes.

• You can use mutexes or distributed locks to ensure the atomicity of read and write operations on the cache and avoid data conflicts.

• You can set an appropriate expiration time for cached data to prevent inconsistency caused by cached data remaining stale for a long time.

3. Application Layer:

• At the application layer, a read-write splitting strategy can be adopted to distribute read requests and write requests to different nodes. Read requests obtain data directly from the cache while write requests update the database and the cache to maintain data consistency.

• You can use cache middleware or cache components to automatically update the cache and reduce the complexity of manually maintaining the cache.

4. Monitoring and Alerting:

• You can establish a monitoring and alerting mechanism. By monitoring indicators such as the states of the cache layer and database layer, and data consistency, exceptions can be detected promptly and alerts can be triggered so that problems are dealt with.

Used together, the strategies at these layers can effectively address the cache consistency issue and ensure data consistency and system stability. They complement one another to form a complete cache consistency solution.

Caching brings many benefits, and nearly every user request is served with the help of multiple caches. However, caching also brings great challenges, such as the issues mentioned above: cache penetration, cache breakdown, cache avalanche, and cache consistency.
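
Several of the measures listed above can be combined in one small sketch: writes update the database and the cache under a lock, and cached entries carry an expiration time that bounds how long stale data can survive. `ConsistentStore`, its injectable `clock`, and the sample key are hypothetical, and dictionaries stand in for the real database and cache.

```python
import threading
import time


class ConsistentStore:
    """Toy store whose writes update the DB and the cache together."""

    def __init__(self, ttl_seconds=60.0, clock=time.monotonic):
        self.db = {}
        self.cache = {}            # key -> (value, expires_at)
        self.ttl = ttl_seconds
        self.clock = clock         # injectable so expiry can be demonstrated
        self.lock = threading.Lock()

    def write(self, key, value):
        with self.lock:            # DB and cache change as one step
            self.db[key] = value
            self.cache[key] = (value, self.clock() + self.ttl)

    def read(self, key):
        with self.lock:
            entry = self.cache.get(key)
            if entry is not None and entry[1] > self.clock():
                return entry[0]    # fresh cache entry
            value = self.db.get(key)   # missing or expired: reload from the DB
            if value is not None:
                self.cache[key] = (value, self.clock() + self.ttl)
            return value


now = [0.0]
store = ConsistentStore(ttl_seconds=30.0, clock=lambda: now[0])
store.write("price:1", 100)
store.db["price:1"] = 90        # out-of-band change that bypasses the cache
stale = store.read("price:1")   # still the cached value
now[0] = 31.0                   # the cached entry has now expired
fresh = store.read("price:1")   # reloaded from the database
```

The first read returns the stale value 100. After the entry expires, the second read returns 90, so the expiration time limits how long an inconsistency can last.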

In addition, some other cache issues might also be involved, such as cache skew, cache blocking, cache slow query, cache primary-secondary consistency, cache high availability, cache fault discovery and recovery, cluster scaling, and large keys and hot keys.

| Cache type | Introduction | Solution/tool | Advantages | Disadvantages | Scenarios |
|---|---|---|---|---|---|
| Browser caching | It is a cache of the storage in users' devices to store static resources and page content. | It controls caching behavior by setting caching-related fields in HTTP headers. | • It provides quick response to avoid frequent access to the server or network. • It reduces network bandwidth consumption and improves website performance. | • The cached data may not be up-to-date, and the design of cache consistency and update mechanism needs to be considered. • The cache hit rate is limited by the choice of cache capacity and caching policy. | • Caching of static resources. • Reducing the network bandwidth consumption. |
| Client-side caching | It is a cache of application storage in users' devices to store data, computation results, or other business-related content. | It uses Web APIs such as local storage, SessionStorage, LocalStorage, or IndexedDB to store and read data. | • It reduces backend load and improves system performance. • It provides quick response to avoid frequent access to the server or network. | • The cached data may not be up-to-date, and the design of cache consistency and update mechanism needs to be considered. • The cache hit rate is limited by the choice of cache capacity and caching policy. | • Frequently accessed data or computing results. • Relieving the backend load. |
| CDN caching | It is a cache of content delivery network to store and accelerate the distribution of static resources. | It deploys static resources to the CDN server and configures the CDN caching policy. Users' requests will be forwarded to the nearest CDN node to accelerate content distribution and access. | • It accelerates access to static resources and improves user experience. • It reduces the source server load and improves system scalability. | • It is only suitable for caching static resources. Dynamic content cannot be cached. • It involves the complexity of CDN configuration and management. | • Distribution of static resources and access to them. • Accelerating the loading of static resources and access to them. |
| Reverse proxy caching | It is a cache located at the frontend server to store and accelerate access to dynamic content and static resources. | It configures the reverse proxy server and sets up a caching policy to forward user requests to the cache server, reduce the load on the backend server, and accelerate content access. | • It accelerates the access to content and improves user experience. • It reduces the load on the source server and improves system scalability. | • It is only suitable for specific Web servers and APPs. | • Caching of dynamic content and static resources, and acceleration of access to them. • Relieving the load on the backend server. |
| Local caching | It is a cache of an application in users' devices to store data and resources to improve the performance and response speed of APPs. | It uses a cache library or framework such as localStorage, sessionStorage, and Workbox to implement the local caching function. | • It improves the performance and response speed of APPs. • It reduces dependence on remote resources and improves offline experience. | • The capacity of local caching is limited by the storage space of users' devices. | • Frequently accessed data or resources. • Performance improvement and faster response of the application. |
| Distributed caching | It is a cache used in distributed systems to store and share data. It is typically deployed on multiple servers. It provides high concurrent read and write capabilities and scalability of data access. It is commonly used in large-scale applications and systems. | It uses distributed caching systems such as Redis and Memcached to store and access cached data. | • It provides high concurrent read and write capabilities and scalability of data storage. | • Additional server resources are required to deploy and manage the distributed caching system. • Cache consistency and data synchronization issues need to be considered. | • High concurrent read/write capabilities and data storage scalability. • Caching and data sharing of large-scale applications or systems. |

The table above compares browser caching, client-side caching, CDN caching, reverse proxy caching, local caching, and distributed caching side by side, covering their introduction, solutions/tools, advantages and disadvantages, and applicable scenarios. Based on your specific needs and system architecture, you can select the appropriate cache type and solution to improve system performance, reduce server load, improve user experience, and ensure data consistency.

Continue reading in Part 2 of this article: Three Strategies of High Concurrency Architecture Design - Part 2: Rate Limiting and Degradation

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
