By Zhenyu

MaxCompute (formerly known as ODPS) is a leading distributed big data processing platform developed by Alibaba Cloud. It is widely utilized, especially within the Alibaba Group, and supports the core businesses of multiple business units (BUs). ODPS V2.0, continuously optimizing performance, aims to enhance the user experience and expression capabilities of SQL, as well as improve the productivity of ODPS developers.

Building upon the SQL engine of ODPS V2.0, MaxCompute simplifies SQL compilation and enhances the language's expressiveness. We present a series of articles titled Unleash the Power of MaxCompute to explore the capabilities of MaxCompute (ODPS V2.0).

In the previous article, I introduced Complex Types Functions of MaxCompute. Starting from this article, I will introduce the improvements of MaxCompute in the SQL DML.

• Scenario 1

I needed to write a replicated SQL query that involved retrieving data from multiple tables. Some tables required joining, while others required union operations. Additionally, I had to join intermediate data and output multiple tables. Eventually, the SQL became nested with multiple levels of subqueries, making it difficult to comprehend. The same query was repeated in different subqueries. To simplify maintenance, I split the complex statement into multiple statements. However, I discovered that each statement had to be submitted separately and queued. Furthermore, the intermediate results had to be written to unnecessary temporary tables and read out again in the subsequent statements, significantly slowing down the process.

• Scenario 2

While developing a new project, we needed to prepare basic data for a small data table. However, there was no INSERT ... VALUES statement available. As a result, it was not possible to maintain the data along with the DDL that created the table. We had to resort to using other scripts and the ODPS command line to prepare the required data.

• Scenario 3

When testing a newly developed UDF, simply writing SELECT myudf('123'); would result in an error. An additional step was required to create a dual table with a single row of data, which was quite cumbersome. Similarly, when testing a UDAF, multiple rows of data had to be prepared in the test table. Each time we tested different inputs, we either had to modify the table contents or create a new table. It would be ideal to have a way to test the UDF with different data combinations without the need to create a separate table.

• Scenario 4

During the migration of an ETL system from Oracle, I encountered challenges with statements such as WHERE EXISTS (SELECT...) and WHERE IN (SELECT...). It became apparent that the support for these semi-connected statements in ODPS was incomplete. As a workaround, I had to manually convert these statements into regular joins and apply filters once again.

MaxCompute utilizes an SQL engine based on ODPS V2.0, which significantly expands DML capabilities and improves usability and compatibility to address the aforementioned problems.

MaxCompute supports the SQL standard CTE. It can improve the readability and execution efficiency of SQL statements.

This article uses MaxCompute Studio as an example. First, please install MaxCompute Studio, connect to a MaxCompute project, and create a new MaxCompute script file, as follows.

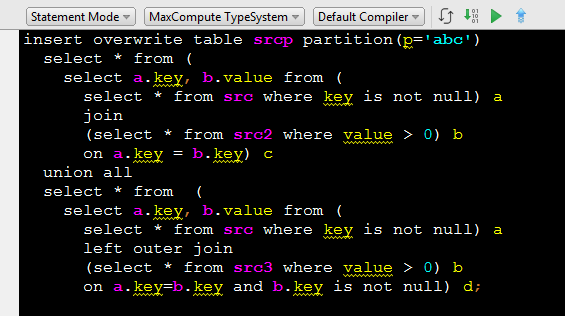

As you can see, both sides of the top-level Union consist of one Join each, with the left table of the Join being the same query. Unfortunately, using subqueries in this manner leads to code repetition.

Rewrite the preceding statement using CTE.

As you can see, the subquery corresponding to a only needs to be written once and reused later. Multiple subqueries can be specified in the WITH clause of CTE and reused repeatedly in the entire statement like using variables. In addition to reuse, there is no need to nest again and again.

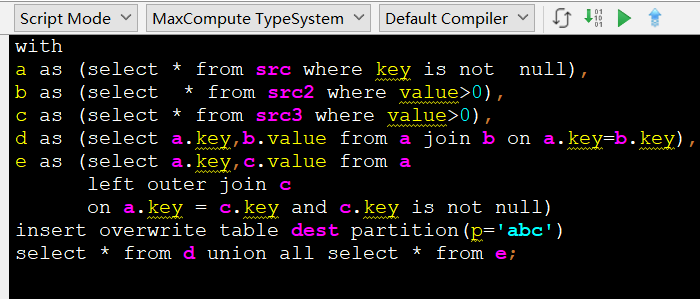

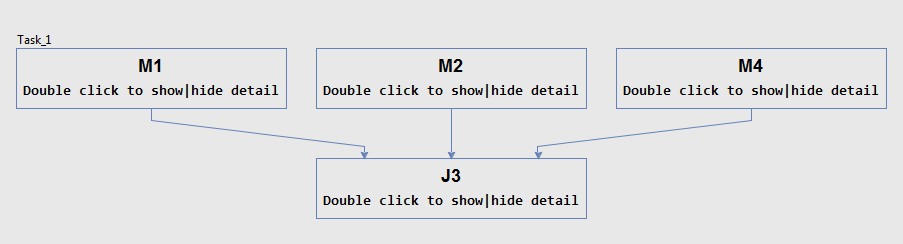

Compile this script, the execution plan is as follows:

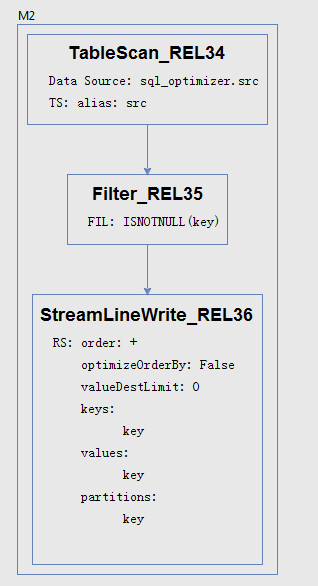

Among them, M1, M2, and M4 distributed tasks correspond to three input tables respectively. Double-click M2 to see the specific DAG executed (double-click again in DAG to return), which is as follows:

You can see the DAG that is filtered after the src is read. The src is read and filtered only once in the execution plan. [1]

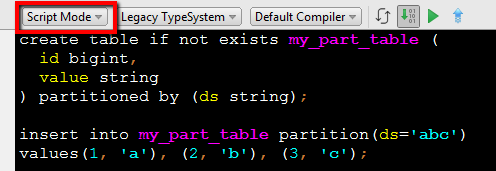

Create a new file as follows:

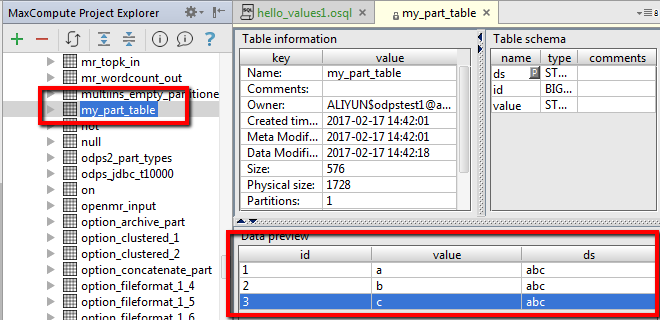

After execution, you can find the newly created table in the MaxCompute Project Explorer and see that the data in values has been inserted into the table, as follows:

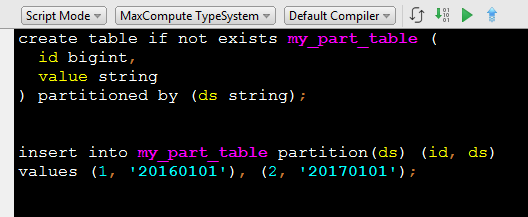

Sometimes, there are many columns in a table. When preparing data, if you want to insert only some columns of data, you can use the insert my_part_table feature.

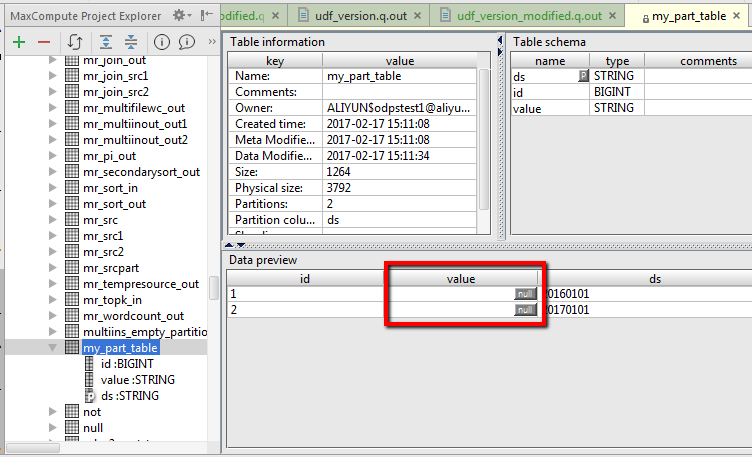

After execution, the destination table is found in the MaxCompute Project Explorer, and the data in the values has been inserted as follows:

For columns that are not specified in values, you can see that the default value is NULL. The insert my_part_table feature is not necessarily used with VALUES but can also be used with INSERT INTO ... SELECT...

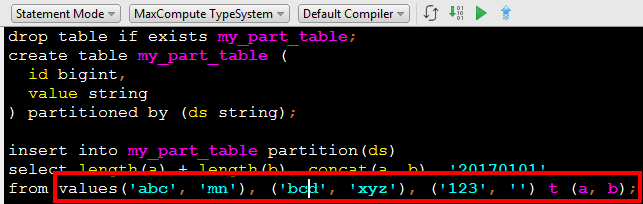

INSERT... VALUES... has a limit. That is, values must be constants. However, sometimes you want to perform some simple operations on the inserted data. In this case, you can use the VALUES TABLE feature of MaxCompute as follows:

The VALUES (...), (...) t (a, b) is equivalent to defining a table named t with columns a and b. The type is (a string, b string), and the type is derived from the VALUES table. In this way, when no physical table is prepared, a multi-row table with arbitrary data can be simulated, and arbitrary operations can be performed.

In fact, the VALUES table is not limited to use in INSERT statements. Any DML statement can be used.

There is also a special form of the VALUES table.

select abs(-1), length('abc'), getdate();In other words, you can directly execute the SELECT statement without writing the from statement, as long as the SELECT expression table does not use any upstream table data. In this case, the underlying implementation is to select data from an anonymous VALUES table that contains only one row and no columns. In this way, if you want to test some functions, such as your own UDFs, you no longer need to manually create DUAL tables.

MaxCompute supports the SEMI JOIN statement. For the SEMI JOIN statement, the right table does not appear in the result set and is only used to filter data in the left table. Supported syntax includes LEFT SEMI JOIN, LEFT ANTI JOIN, (NOT) IN SUBQUERY, and (NOT) EXISTS.

The data in the left table is returned. When the JOIN condition is met, that is, the ID of a row in mytable1 appears in all IDs in mytable2, this row is retained in the result set.

For example:

SELECT * from mytable1 a LEFT SEMI JOIN mytable2 b on a.id=b.id;Only the data in mytable1 is returned as long as the ID of mytable1 appears in the ID of mytable2.

The data in the left table is returned. If the JOIN condition is not met, that is, the ID of a row in mytable1 does not appear in all IDs in mytable2, this row is retained in the result set.

For example:

SELECT * from mytable1 a LEFT ANTI JOIN mytable2 b on a.id=b.id;Only the data in mytable1 is returned as long as the ID of mytable1 does not appear in the ID of mytable2.

IN SUBQUERY is similar to LEFT SEMI JOIN.

For example:

SELECT * from mytable1 where id in (select id from mytable2);is equivalent to

SELECT * from mytable1 a LEFT SEMI JOIN mytable2 b on a.id=b.id;The original ODPS also supports IN SUBQUERY but does not support the correlated condition. But MaxCompute supports it.

For example:

SELECT * from mytable1 where id in (select id from mytable2 where value = mytable1.value);The where value = mytable1.value in the subquery is a correlated condition. The original ODPS will report an error for this expression that not only references the source table in the subquery but also references the outer query source table. MaxCompute supports this usage. Such filter conditions actually form part of the ON condition in SEMI JOIN.

NOT IN SUBQUERY is similar to LEFT ANTI JOIN but with one significant difference.

For example:

SELECT * from mytable1 where id not in (select id from mytable2);If none of the IDs in mytable2 are NULL, this statement is equivalent to:

SELECT * from mytable1 a LEFT ANTI JOIN mytable2 b on a.id=b.id;However, if mytable2 contains any columns that are NULL, the NOT IN expression is NULL. As a result, the WHERE condition is not satisfied and no data is returned. This is different from the LEFT ANTI JOIN.

The original ODPS also supports [NOT] IN SUBQUERY not as a JOIN condition. For example, if it appears in a non-WHERE statement, or if it is in a WHERE statement, it cannot be converted to a JOIN condition. MaxCompute still supports this usage. But in this case, it cannot be converted to SEMI JOIN, and a separate job must be implemented to run SUBQUERY. So, the correlated condition is not supported.

For example:

SELECT * from mytable1 where id in (select id from mytable2) OR value > 0;As the WHERE contains OR, it cannot be converted to SEMI JOIN. A separate job is enabled to execute subqueries.

In addition, when dealing with partition tables, there will be special treatment.

SELECT * from sales_detail where ds in (select dt from sales_date);If the ds is a partition key column, the select dt from sales_date will enable a separate job to execute the subquery without converting it to SEMIJOIN. The executed results will be compared with ds one by one. The partitions whose ds values are not in the returned results in the sales_detail will not be read to ensure that partition pruning is still valid.

If at least one row of data is contained in SUBQUERY, TRUE is returned. Otherwise, FALSE is returned. It works in the opposite when it comes to NOT EXISTS. MaxCompute supports only subqueries that contain correlated WHERE conditions. EXISTS SUBQUERY/NOT EXISTS SUBQUERY is implemented by converting to LEFT SEMI JOIN or LEFT ANTI JOIN

For example:

SELECT * from mytable1 where exists (select * from mytable2 where id = mytable1.id);`is equivalent to

SELECT * from mytable1 a LEFT SEMI JOIN mytable2 b on a.id=b.id;while

SELECT * from mytable1 where not exists (select * from mytable2 where id = mytable1.id);`is equivalent to

SELECT * from mytable1 a LEFT ANTI JOIN mytable2 b on a.id=b.id;• MaxCompute supports UNION [DISTINCT], where DISTINCT is ignored.

SELECT * FROM src1 UNION SELECT * FROM src2;The effect of execution is equivalent to

SELECT DISTINCT * FROM (SELECT * FROM src1 UNION ALL SELECT * FROM src2) t;SELECT * FROM table1, table2 WHERE table1.id = table2.id;The effect of execution is equivalent to

SELECT * FROM table1 JOIN table2 ON table1.id = table2.id;This feature is mainly to facilitate migration from other database systems. For credit purchases, we still recommend that you use JOIN to clearly indicate your intention.

In a complete query statement, for example,

SELECT key, max(value) FROM src t WHERE value > 0 GROUP BY key HAVING sum(value) > 100 ORDER BY key LIMIT 100;In fact, the logical execution sequence is FROM -> WHERE -> GROUY BY -> HAVING -> SELECT -> ORDER BY -> LIMIT. The former is the input of the latter, which is actually different from the standard writing sequence. Many confusing problems are caused by this. For example, in ORDER BY, you can only reference the columns generated in the SELECT list instead of accessing the columns in the FROM source table. HAVING can access group by key and aggregate functions. If the GROUP BY clause is used in the SELECT statement, you can access only the GROUP KEY and aggregate functions, instead of the columns in the FROM source table.

MaxCompute supports writing query statements in the order in which they are executed. For example, the preceding statement can be written as

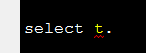

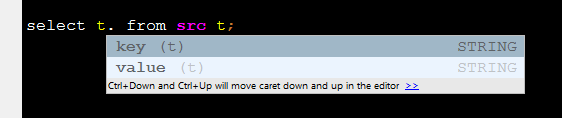

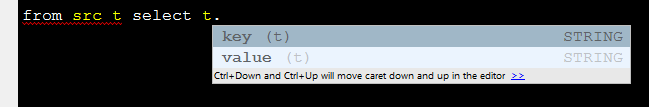

FROM src t WHERE value > 0 GROUP BY key HAVING sum(value) > 100 SELECT key, max(value) ORDER BY key LIMIT 100;If the writing order is the same as the execution order, it is not easy to confuse. This has an additional benefit. When you write SQL statements in MaxCompute Studio, you can use intelligent prompts. If you write the SELECT statement before, MaxCompute Studio cannot know which columns may be accessed because the FROM statement has not been written. As a result, the prompt cannot jump out.

For example:

You need to write FROM first, and then back to write the SELECT list. Only in this way can the prompt jump out.

For example:

If you use the above FROM statement to write, the prompt can jump out according to the context.

For example:

ODPS V1.0 does not support top-level UNION. ODPS V2.0 can support the top-level UNION.

For example,

SELECT * FROM src UNION ALL SELECT * FROM src;In most DBMS systems, such as MySQL and Hive. If the CLUSTER BY, DISTRIBUTE BY, SORT BY, ORDER BY, or LIMIT clause is included after UNION, it applies to the results of all previous UNION operations, rather than the last route of UNION. ODPS V2.0 also uses this behavior when setting odps.sql.type.system.odps2 to true;.

For example:

set odps.sql.type.system.odps2=true;

SELECT explode(array(1, 3)) AS (a) UNION ALL SELECT explode(array(0, 2, 4)) AS (a) ORDER BY a LIMIT 3;Return:

| a |

| 0 |

| 1 |

| 2 |

MaxCompute significantly enhances support for DML statements, catering to your usability, compatibility, and performance requirements. SQL experts will notice that most of the mentioned features align with standard SQL functionality. MaxCompute will continue to improve compatibility with standard SQL and commonly used industry products.

Additionally, MaxCompute offers a specialized script mode and parameterized view to handle extensive data processing in complex business scenarios. We will delve into these features in the next article.

• [1]: Whether subqueries are merged or split is determined by the cost-based optimizer (CBO) of ODPS V2.0. The way SQL is written, whether using CTEs or subqueries, doesn't guarantee that the physical execution plan will be merged or split.

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - January 29, 2024

Alibaba Cloud MaxCompute - January 29, 2024

Alibaba Cloud MaxCompute - January 29, 2024

Alibaba Cloud MaxCompute - February 4, 2024

Alibaba Cloud MaxCompute - February 7, 2024

Alibaba Cloud MaxCompute - May 5, 2019

137 posts | 21 followers

Follow MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn MoreMore Posts by Alibaba Cloud MaxCompute