As enterprises continue to grow in scale and data volume, the value of data is becoming increasingly important. The frequent and complex changes and updates in data require prompt capture and processing. To meet this need, the Change Data Capture (CDC) technology for databases has emerged. However, from the perspective of the Extract, Transform, Load (ETL) architecture, CDC only fulfills the data extraction capability.

To achieve a complete ETL architecture and enable efficient and real-time data integration, processing, and synchronization, Alibaba Cloud Function Compute (FC) is deeply integrated with CDC technology. This integration allows enterprises to build a comprehensive ETL architecture for data extraction, transformation, and loading. By using CDC as an event-driven data source and triggering the execution of Serverless functions based on data changes, real-time data processing and synchronization can be achieved. This significantly enhances the accuracy and efficiency of business decisions and analysis.

The following step-by-step explanation illustrates how FC + CDC adapts to services that implement the ETL model. Beginning with the ETL model, let's delve into the process.

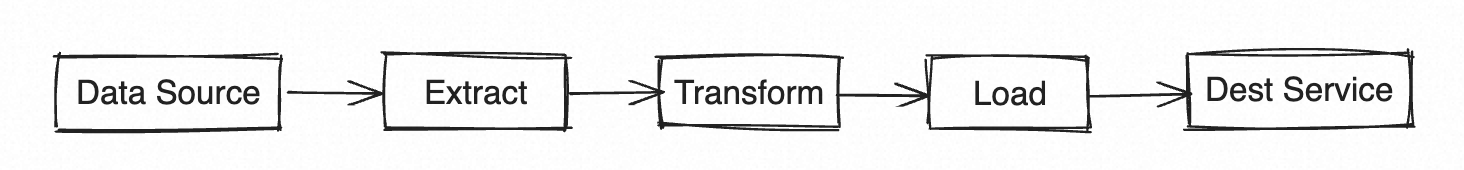

In the field of big data, a system architecture that handles data forwarding and processing can be abstracted into an ETL model. The ETL model consists of three main steps: extraction, transformation, and loading.

This architecture is widely applied to help enterprises manage and utilize data for data-driven decision-making and business transformation.

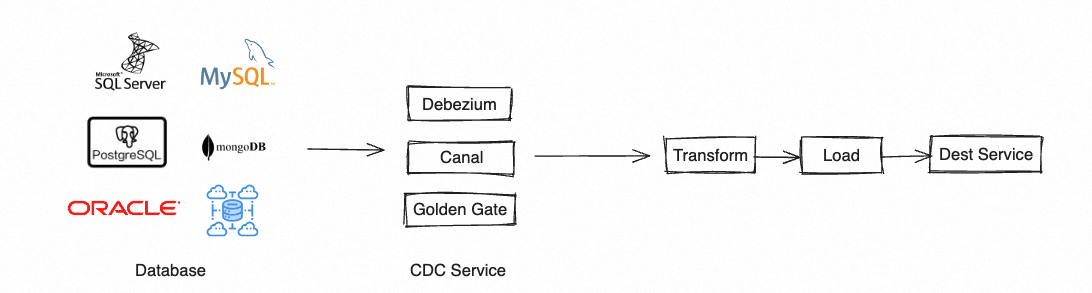

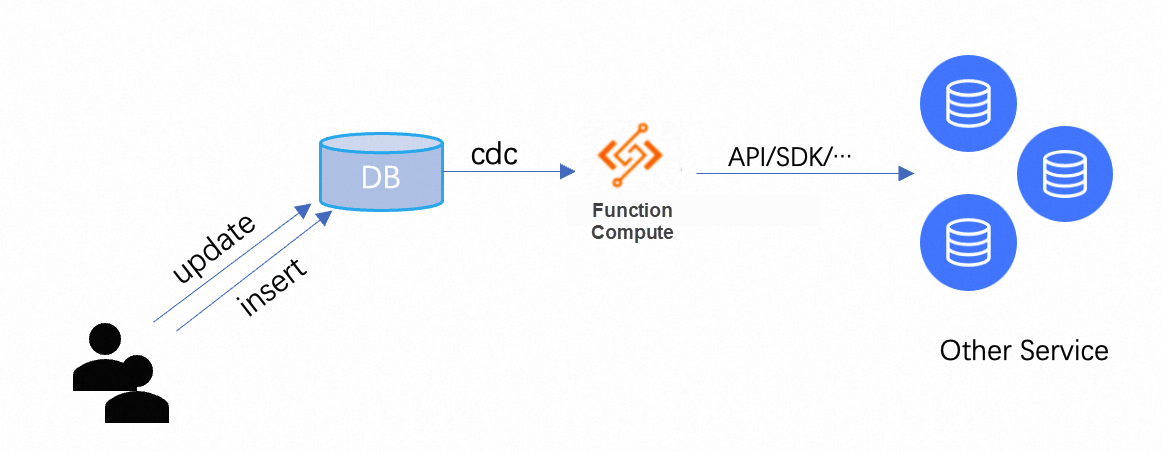

CDC and Extract (E) are two concepts in data processing. CDC aims to capture changed data in a database, while Extract focuses on extracting specific data sets from a data source. However, when it comes to the business itself, both are aimed at obtaining the data required by the business from the data source. Therefore, the combination of CDC and ETL is an inevitable result. This combination can build a more complete and efficient data processing flow, enabling real-time incremental data extraction and processing. Compared to traditional batch extraction methods, CDC captures data changes in a more timely manner, resulting in more real-time and accurate data in the target system.

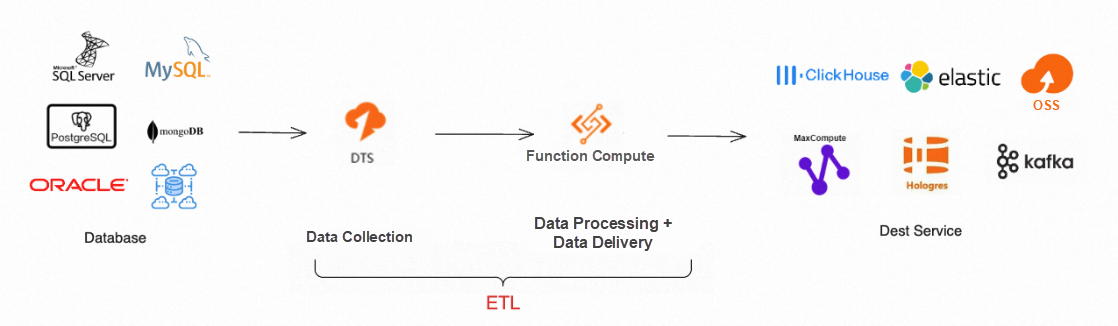

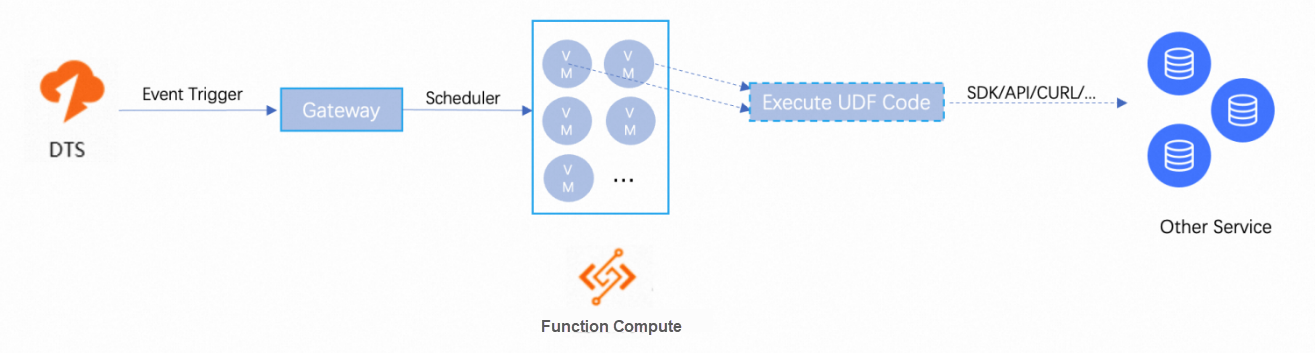

In the Alibaba Cloud database product system, Data Transmission Service (DTS) plays the role of CDC. As a real-time data stream transmission service, DTS captures changes in upstream databases and pushes these changes to downstream services. When the downstream service is Function Compute, you can leverage its custom code capability to customize data processing (T) and delivery (L). As shown in the diagram below, the deep integration of FC and DTS builds a complete ETL model, providing assistance in the rapid construction of business systems.

To better understand the operation mode of the aforementioned DTS + FC architecture, let's analyze the internal details.

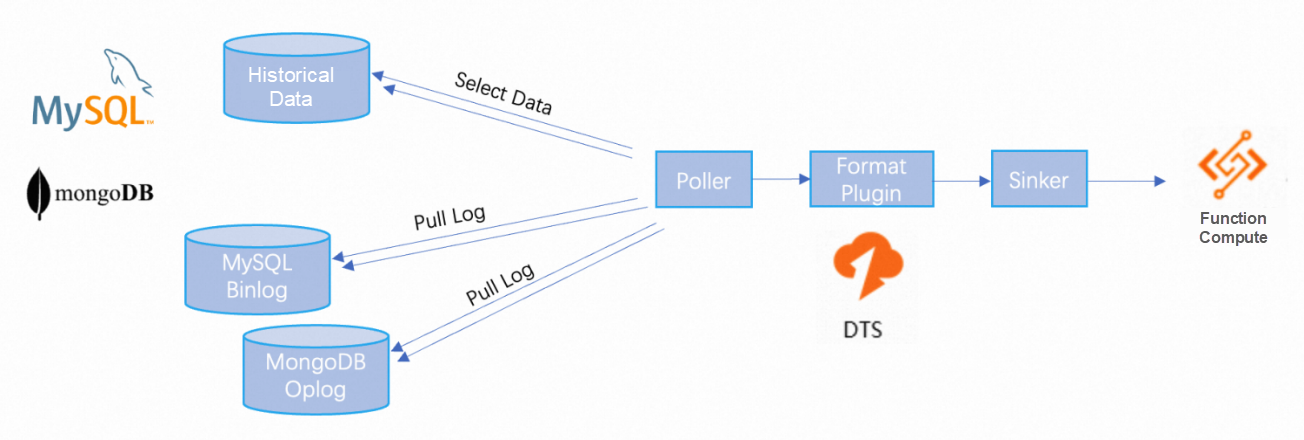

DTS provides comprehensive capabilities in data collection and transmission services. The DTS system can be divided into the following three modules:

1. Poller: This module obtains data from rich upstream database services. Specific features are as follows:

2. Format Plugin: This module formats the obtained data into the Canal Jaon format. A standardized format can facilitate the reuse of the data parsing logic in different data sources.

3. Sinker: This module pushes the formatted data to the downstream FC;

The close integration between FC and DTS ensures that FC can receive database data collected by DTS. Data processing and delivery are achieved through user-defined code. The specific features are as follows:

As shown in the figure above, you only need to focus on the business logic of data processing and delivery and complete the implementation using simple code snippets. The FC backend automatically scales compute nodes to execute the code. You don't have to worry about tasks such as system infrastructure construction, resource operation and maintenance, scaling, monitoring, and alerting, which greatly improves development efficiency. As a Serverless application, FC supports pay-as-you-go billing to avoid resource inefficiency caused by long-term reservation of machine resources.

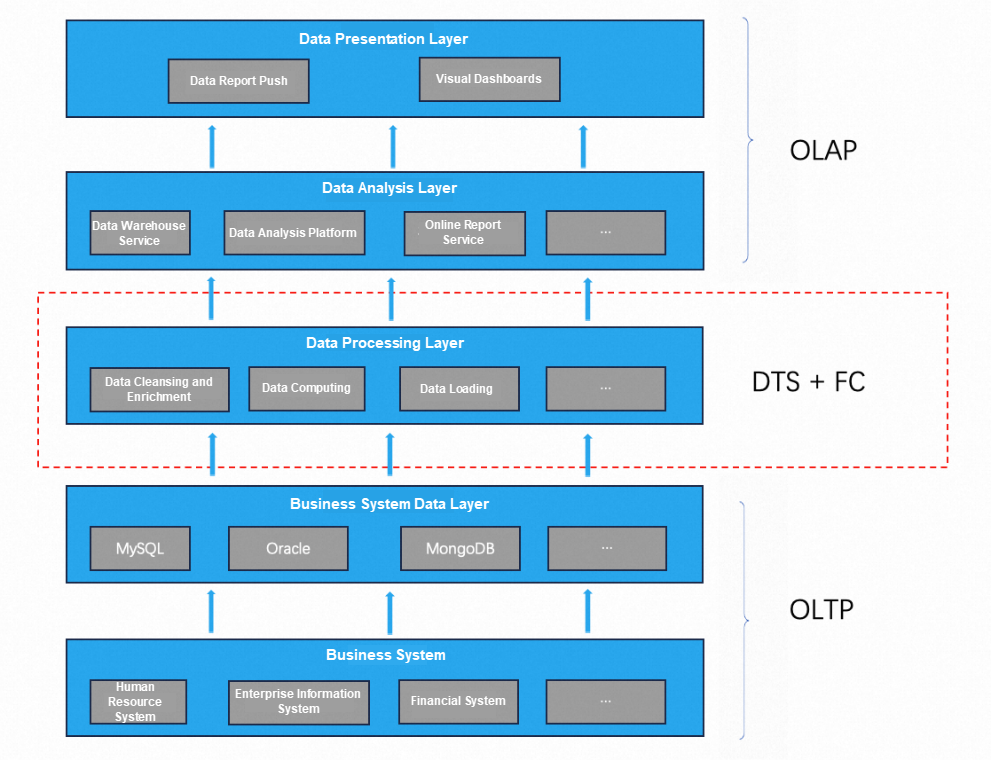

• OLTP: Online transaction processing. It operates in transaction units and needs to support highly concurrent writing and data consistency. Common services include relational databases such as MySQL or PostgreSQL, order processing systems, and customer relationship management systems.

• OLAP: nline analysis and processing. It is often used to extract, aggregate, and analyze information from large amounts of data to meet data analysis and decision support needs. OLAP systems are usually query-based and can perform complex data queries and analysis. Common services include AnalyticDB, ClickHouse, and Power BI.

From the above description, it can be concluded that OLTP and OLAP are two different data processing services that meet different business requirements. OLTP systems are suitable for processing real-time transactions and business operations, while OLAP systems are suitable for analyzing and supporting decisions based on large amounts of data. In practice, the data sources of OLAP are different OLTP databases. Therefore, OLAP itself does not generate data, but extracts data through ETL from OLTP data to OLAP databases or data warehouses for integration and cleansing to achieve analyzable data standards. DTS + FC can connect these two types of services and establish data paths.

• Event: In a business system, an event refers to an important and meaningful occurrence or state change in the system or business. Events can be triggered internally or input externally and are usually related to workflows, data changes, or user actions.

• Event-driven: Event-driven architecture is a system design paradigm in which events are the core components of the system. In this architecture, the various components of the system communicate and cooperate by subscribing to and responding to events, achieving a loosely coupled and scalable system architecture.

CDC is used to capture data changes in databases. It is often used as an event to drive the execution of subsequent processes. Common scenarios include:

• Subscription and publishing system: CDC can be part of the subscription and publishing system to publish data changes in the database as events to relevant subscribers. This can be used to implement an event-driven system architecture based on the publish-subscribe pattern.

• Data verification: CDC can push the changed data in the database to FC. Customized data verification to check the rationality and compliance of data is very important in systems such as finance and financial orders.

• Data audit: CDC can push the changed data in the database to FC and persist it to any third-party service through FC for data audit and data traceability.

• Change notification: When certain key data changes, specific notifications are sent in ways such as email, DingTalk, SMS, and phone calls.

The combination of CDC and Function Compute allows for real-time data processing and response, while reducing reliance on infrastructure and management. In practical applications, CDC can be used as an event-driven data source, where data changes serve as events to trigger the execution of Serverless functions. This enables real-time data processing and distribution. Additionally, the elastic scaling capability of Function Compute allows for the dynamic allocation of computing resources based on actual loads. In conclusion, the integration of DTS and Function Compute provides enterprises with a more efficient, flexible, and reliable solution for data processing.

CloudFlow: Streamlined Development Makes It Easier to Build Applications on the Cloud

99 posts | 7 followers

FollowAlibaba Cloud Community - December 21, 2021

Alibaba Clouder - June 23, 2020

Alibaba Clouder - June 16, 2020

Alibaba Cloud Serverless - February 28, 2023

Alibaba Clouder - September 6, 2019

Alibaba Cloud Serverless - April 21, 2022

99 posts | 7 followers

Follow Function Compute

Function Compute

Alibaba Cloud Function Compute is a fully-managed event-driven compute service. It allows you to focus on writing and uploading code without the need to manage infrastructure such as servers.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Lindorm

Lindorm

Lindorm is an elastic cloud-native database service that supports multiple data models. It is capable of processing various types of data and is compatible with multiple database engine, such as Apache HBase®, Apache Cassandra®, and OpenTSDB.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn MoreMore Posts by Alibaba Cloud Serverless