By Liao Xiangli

In the past, NetEase Cloud Music applied its audio and video technologies mostly to processing data in its music library. Drawing on their experience in serving audio and video algorithms, the NetEase Cloud Music Music Library Team and the Audio and Video Algorithm Team worked together to build a centralized audio and video algorithm processing platform. This article explains how NetEase Cloud Music uses Serverless technology to optimize this platform.

This article is split into three parts:

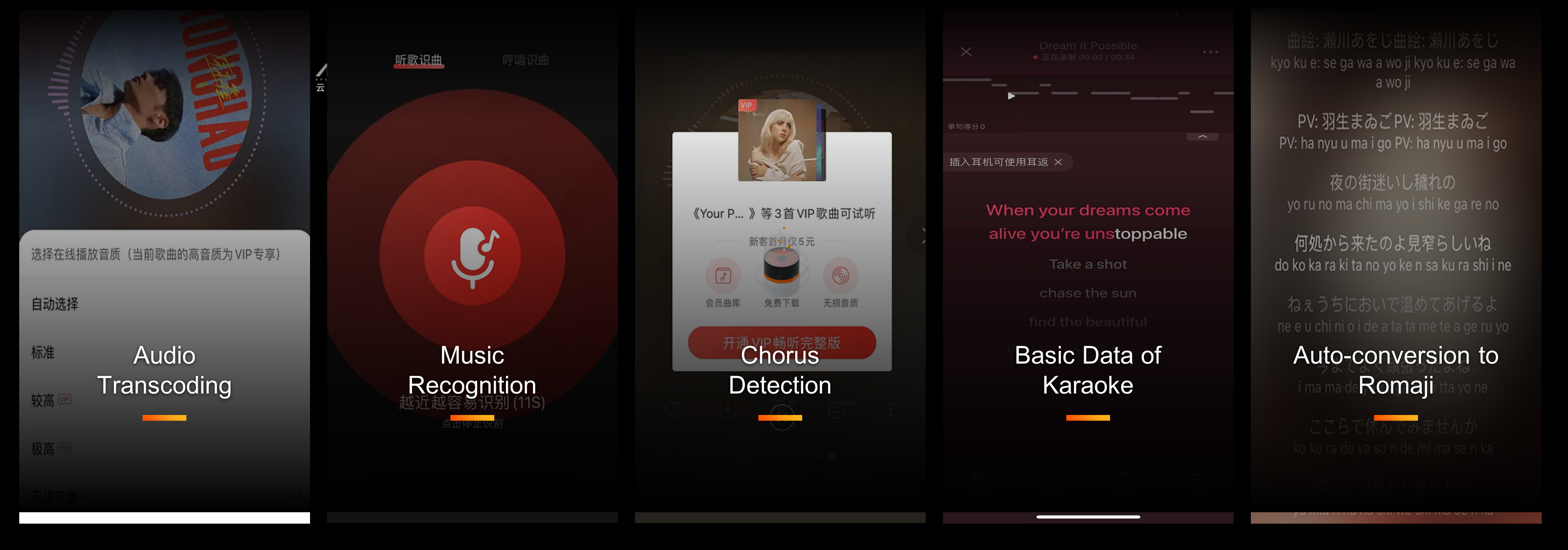

As a music-based company, NetEase Cloud Music uses audio and video technology widely across its business scenarios. Here are five common scenarios:

As these scenarios show, audio and video technology is used in many different scenarios and plays an important role in each of them.

Audio and video technology falls into three categories: analysis and understanding, processing, and creation and production. Some of it runs on the client side in the form of SDKs. Most of it, however, is provided as general audio and video capabilities through services, built with algorithm engineering and deployed and managed on backend clusters. This service-side part is the focus of this article.

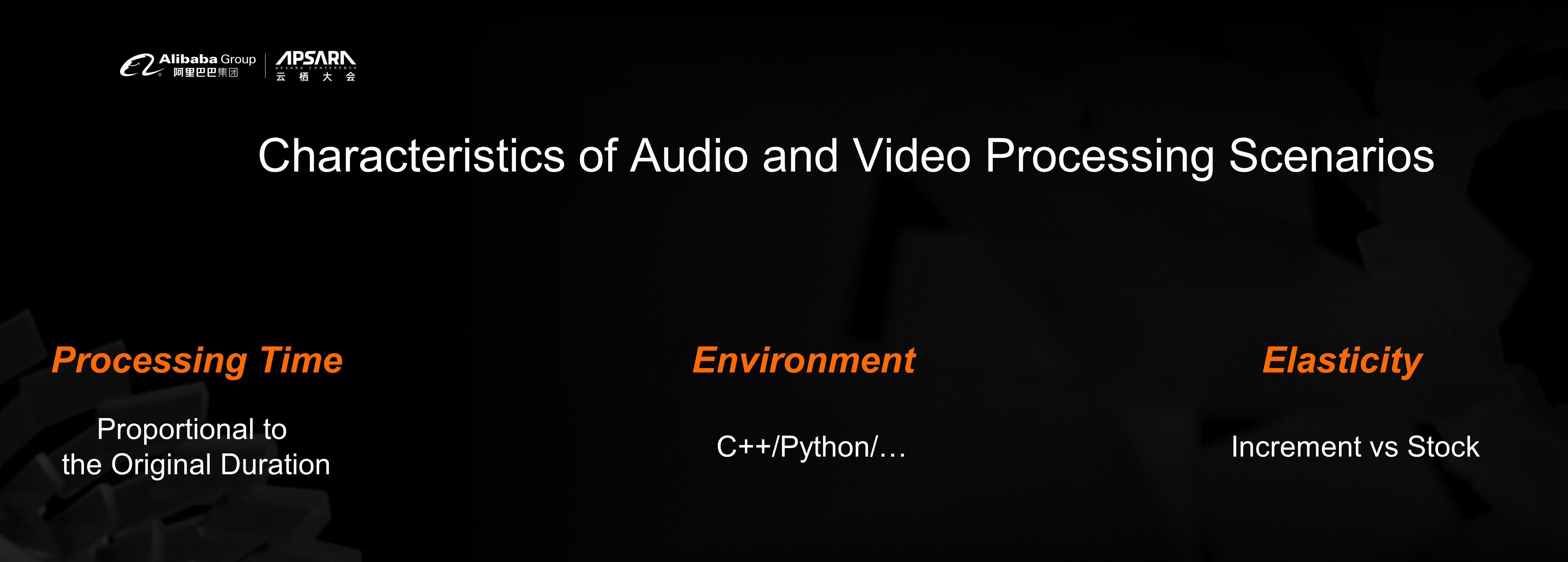

When deploying audio and video algorithms as services, we need to understand the characteristics of each algorithm, such as its deployment environment, its execution time, and whether it supports concurrent processing. As more algorithms went into production, we summarized the following rules:

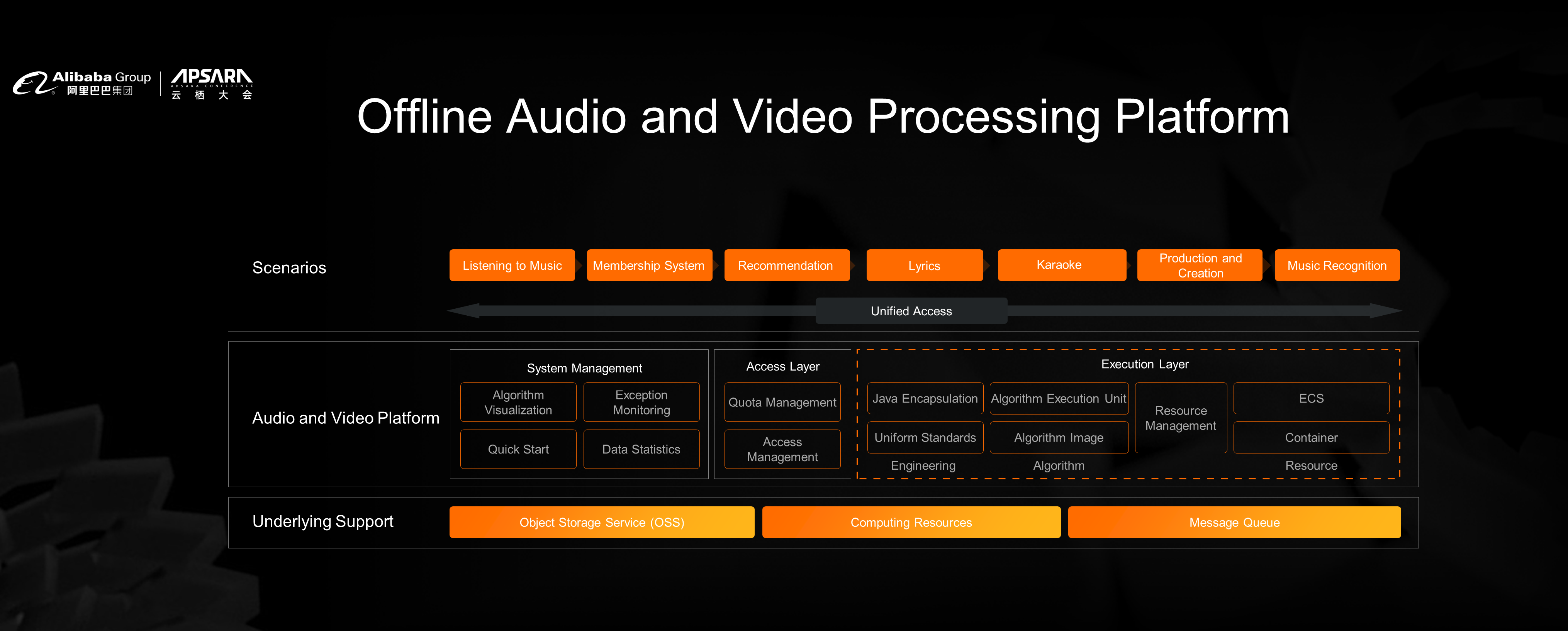

Based on our engineering experience and the characteristics of audio and video processing algorithms, the overall architecture of the NetEase Cloud Music audio and video processing platform is as follows:

We designed the parts common to all audio and video algorithm processing in a unified way, including visualization, monitoring, quick start, and processing statistics. Resource allocation follows a unified, configurable management model, so the common parts of the system can be abstracted and reused as much as possible.
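To make the idea of a configurable resource model concrete, here is a minimal Python sketch. It assumes each algorithm is described by the characteristics listed earlier (deployment environment, execution time, concurrency support) and sizes a worker pool from an expected task rate. `AlgorithmProfile`, `ResourceConfig`, and `plan_resources` are hypothetical names for illustration, not the platform's actual code.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AlgorithmProfile:
    """Hypothetical per-algorithm descriptor; field names are illustrative only."""
    name: str
    runtime_image: str        # deployment environment, e.g. a container image reference
    avg_exec_seconds: float   # typical execution time of one task
    max_concurrency: int      # tasks one worker can safely run at once (1 = no concurrency)
    memory_mb: int            # memory each worker needs


@dataclass(frozen=True)
class ResourceConfig:
    workers: int
    per_worker_concurrency: int
    memory_mb: int


def plan_resources(profile: AlgorithmProfile, tasks_per_second: float) -> ResourceConfig:
    """Size a worker pool whose throughput covers the expected task rate."""
    if profile.avg_exec_seconds <= 0 or profile.max_concurrency < 1:
        raise ValueError("execution time must be positive and concurrency at least 1")
    # One worker completes max_concurrency tasks every avg_exec_seconds.
    per_worker_tps = profile.max_concurrency / profile.avg_exec_seconds
    workers = max(1, math.ceil(tasks_per_second / per_worker_tps))
    return ResourceConfig(workers, profile.max_concurrency, profile.memory_mb)
```

For example, an algorithm that takes 2 seconds per task and tolerates 4 concurrent tasks delivers 2 tasks per second per worker, so a target of 10 tasks per second yields 5 workers.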

The most critical part of the platform is the design of the execution unit and its interactions with other systems, which is also the focus of this article. NetEase Cloud Music solves many integration and deployment efficiency issues through unified integration standards and image-based delivery. As for resources, because we continuously add new algorithms and run both stock processing (the existing catalog) and incremental processing (newly added content), we relied on applying for and recycling hosts in our internal private cloud, together with containerization, before migrating to the cloud.

To better describe how the execution unit operates, the following figure shows it in more detail.

The execution unit is decoupled from other systems through message queues. Within the execution unit, we adapt to algorithms with different concurrency capabilities by controlling the consumption concurrency of the message queue, and we try to limit the execution unit's work to algorithm computation alone. As a result, the system can scale out at the smallest possible granularity.
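The following Python sketch illustrates the pattern: an execution unit consumes from a queue with a fixed number of workers, so a single concurrency setting can match what a given algorithm tolerates. An in-process `asyncio.Queue` stands in for the real message queue, and `run_execution_unit` is a hypothetical name; a production consumer would keep polling the broker and handle acknowledgements and retries.

```python
import asyncio
from typing import Awaitable, Callable, List


async def run_execution_unit(
    queue: "asyncio.Queue[str]",
    algorithm: Callable[[str], Awaitable[str]],
    concurrency: int,
) -> List[str]:
    """Drain `queue` with at most `concurrency` algorithm calls in flight."""
    results: List[str] = []

    async def worker() -> None:
        while True:
            try:
                task = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # a real consumer would keep polling the message broker
            try:
                # The execution unit does nothing but run the algorithm.
                results.append(await algorithm(task))
            finally:
                queue.task_done()

    # Starting `concurrency` workers caps how many tasks are processed at once.
    await asyncio.gather(*(worker() for _ in range(concurrency)))
    return results
```

Setting `concurrency=1` suits algorithms that cannot run concurrently; scaling out then means adding more execution units, each of which does only algorithm computation.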

Using this model, we have put more than 60 audio and video algorithms into production. Over the past year, service-oriented algorithms accounted for half of them, and these algorithms serve more than 100 of our business scenarios. However, more complex algorithms and business scenarios place higher demands on service efficiency, O&M deployment, and elasticity. Before migrating to the cloud, we used more than 1,000 ECS instances and physical machines of various specifications.

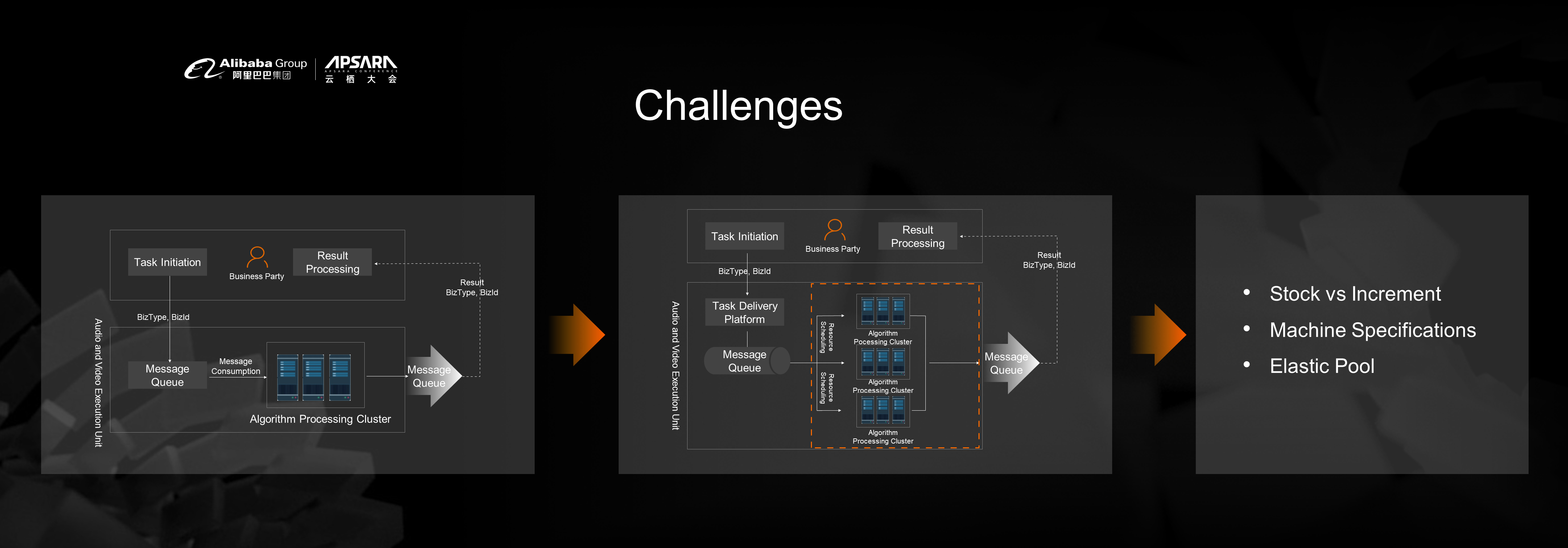

As business scenarios and algorithms grew more complex, we adopted many methods to simplify the integration between internal business scenarios and algorithms. However, with more algorithms handling a mix of stock and incremental processing, business scenarios whose traffic varies widely in scale, and multiple scenarios reusing the same algorithm, we ended up spending more time managing machine resources than on development.

This also prompted us to start thinking about more ways to solve the problems we encountered. There are three pain points:

First, as the gap between stock and incremental processing widens and more new algorithms go live, we spend more and more time coordinating resources between stock and incremental workloads. Second, as algorithms become more complex, we have to watch machine specifications and overall utilization whenever we apply for or purchase machines. Finally, we want stock processing to run faster, with enough resources available when it runs, so that when processing large volumes of audio and video data we can shorten the period during which stock results lag behind incremental ones. In short, we want large-scale elastic resources so that audio and video algorithm services do not have to worry about machine management.
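A back-of-the-envelope model shows why elastic resources shorten the catch-up window: if processing is parallel and each item takes roughly constant time, the time to drain a backlog scales inversely with concurrency. The function below and the figures in the note after it are illustrative assumptions, not measurements from NetEase Cloud Music.

```python
def catch_up_hours(backlog_items: int, seconds_per_item: float, concurrency: int) -> float:
    """Estimate hours to drain a stock backlog, ignoring scheduling and startup overhead."""
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    # Total work divided evenly across parallel workers, converted to hours.
    return backlog_items * seconds_per_item / concurrency / 3600
```

For example, a hypothetical backlog of 1,000,000 items at 3.6 seconds each takes 10 hours at a concurrency of 100, but only 1 hour at a concurrency of 1,000.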

However, a real transformation must consider not only the final service capacity but also the ROI. Specifically:

These requirements match the definition of Serverless: you can build and run highly elastic applications without managing servers. Based on these considerations, we chose Function Compute (FC) on the public cloud. FC maps naturally onto our current computing execution process and also fits our future plans to orchestrate algorithms. Next, I will focus on Function Compute.

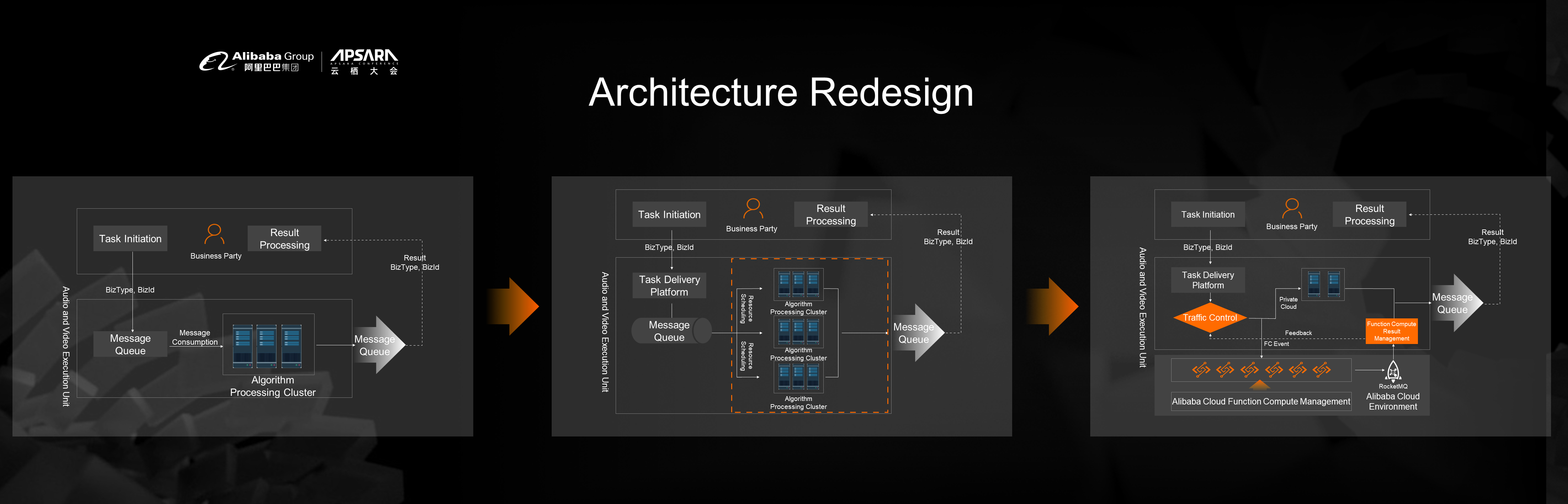

We spent a week on a quick FC trial. However, a complete, highly reliable architecture requires more considerations. The system's external inputs and outputs must remain unchanged, and the system must have traffic control capability. In addition, processing can be downgraded to the private cloud in special circumstances to keep the system highly reliable. The architectural transformation is shown in the following figure:
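One way to combine traffic control with private-cloud fallback is a token bucket in front of the FC call, as in this Python sketch. The bucket caps the rate of requests sent to FC; requests over the limit, or requests whose FC call fails, are routed to the private cloud instead. `TokenBucket`, `dispatch`, `run_on_fc`, and `run_on_private_cloud` are hypothetical placeholders, not FC SDK calls, and the article does not say which traffic-control algorithm NetEase Cloud Music actually uses.

```python
import time
from typing import Callable


class TokenBucket:
    """Refills `rate` tokens per second, holding at most `capacity` tokens."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Add tokens for the elapsed time, without exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def dispatch(task: str, bucket: TokenBucket,
             run_on_fc: Callable[[str], str],
             run_on_private_cloud: Callable[[str], str]) -> str:
    """Send a task to FC while under the rate limit; otherwise, or on failure, fall back."""
    if bucket.try_acquire():
        try:
            return run_on_fc(task)
        except Exception:
            pass  # FC call failed: degrade to the private cloud below (log this in practice)
    return run_on_private_cloud(task)
```

Because the caller only sees `dispatch`, the system's external inputs and outputs stay the same regardless of where a task actually runs.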

The core of the transformation was adapting NetEase Cloud Music's development environment to Function Compute, with a focus on deployment, monitoring, and hybrid cloud. For deployment, we made full use of Function Compute's support for CI/CD and image-based deployment to pull images automatically. For monitoring, we use the cloud-side monitoring and alerting features and convert their data into the parameters of our existing internal monitoring system, so development and O&M processes stay consistent. For hybrid cloud, we designed the code to remain compatible with deployment in both environments. With these changes, we completed the Serverless transformation of our audio and video processing platform.
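The monitoring conversion can be pictured as a small adapter that renames cloud-side metrics and tags them with their origin. In this Python sketch, the input field names (`metricName`, `value`, `timestamp`, `functionName`), the metric-name mapping, and the `InternalMetric` format are all hypothetical; the article does not describe the real formats of the Function Compute monitoring data or of NetEase's internal system.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class InternalMetric:
    """Hypothetical record format of an in-house monitoring system."""
    service: str
    metric: str
    value: float
    timestamp_ms: int
    tags: Dict[str, str]


# Hypothetical mapping from cloud-side metric names to in-house metric names.
METRIC_NAME_MAP = {
    "Invocations": "algo.invoke.count",
    "Errors": "algo.invoke.error",
    "AvgDuration": "algo.invoke.latency_ms",
}


def convert(cloud_points: List[dict], service: str) -> List[InternalMetric]:
    """Translate cloud metric points into in-house records, skipping untracked metrics."""
    out: List[InternalMetric] = []
    for p in cloud_points:
        name = METRIC_NAME_MAP.get(p["metricName"])
        if name is None:
            continue  # metric not tracked by the internal system
        out.append(InternalMetric(
            service=service,
            metric=name,
            value=float(p["value"]),
            timestamp_ms=int(p["timestamp"]),
            tags={"platform": "fc", "function": p.get("functionName", "unknown")},
        ))
    return out
```

Tagging every record with `platform` lets the same dashboards and alerts cover both FC and private-cloud workloads.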

The Function Compute (FC) billing policy shows that three major factors determine the final cost: the memory specifications, the number of invocations, and outbound internet traffic. The technical architecture requires us to pay more attention to the first two, but outbound traffic is also costly, so it is a focus for us as well.
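These cost factors can be combined into a simplified estimate. This Python sketch assumes the compute charge is memory multiplied by execution time (GB-seconds), plus a per-invocation charge and a per-GB outbound traffic charge. The unit prices are placeholders to be replaced with the current Function Compute price list, and free tiers and other billing details are ignored.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Prices:
    """Placeholder unit prices; substitute the current Function Compute price list."""
    per_gb_second: float
    per_million_invocations: float
    per_gb_outbound: float


def estimate_cost(memory_gb: float, seconds_per_call: float, calls: int,
                  outbound_gb: float, prices: Prices) -> Dict[str, float]:
    """Simplified cost breakdown; ignores free tiers, vCPU/GPU charges, and rounding rules."""
    compute = memory_gb * seconds_per_call * calls * prices.per_gb_second
    invocations = calls / 1_000_000 * prices.per_million_invocations
    traffic = outbound_gb * prices.per_gb_outbound
    return {
        "compute": compute,
        "invocations": invocations,
        "traffic": traffic,
        "total": compute + invocations + traffic,
    }
```

Returning a breakdown rather than a single total makes it easy to see which factor dominates for a given algorithm, which is the basis for choosing which algorithms to migrate first.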

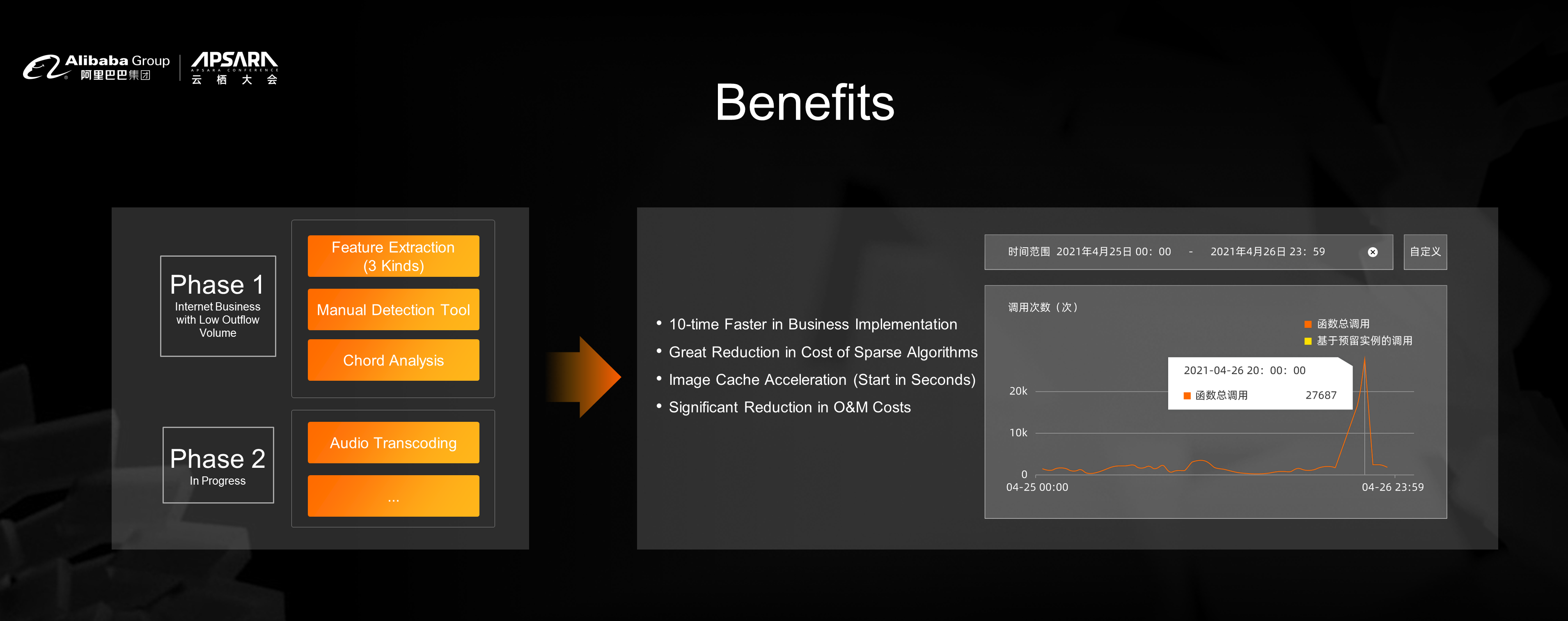

Given the cost characteristics of Function Compute (FC), and because our storage system still runs on NetEase's private cloud, in the first stage we selected audio and video algorithms that generate little outbound internet traffic. Audio feature extraction is a good example: the audio goes in, and the output is only a 256-dimensional array, which is far smaller than the audio itself. As a result, the outbound internet traffic cost is small.
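The arithmetic behind this choice is easy to check. The sketch below compares a 256-dimensional feature vector, assuming 4-byte float32 values, with a hypothetical 4-minute track encoded at a constant 320 kbps; the value type, track length, and bitrate are assumptions for illustration.

```python
def feature_bytes(dims: int, bytes_per_value: int = 4) -> int:
    """Size of a dense feature vector; 4 bytes per value assumes float32."""
    return dims * bytes_per_value


def audio_bytes(duration_s: float, bitrate_kbps: float) -> int:
    """Approximate size of constant-bitrate audio, ignoring container overhead."""
    return int(duration_s * bitrate_kbps * 1000 / 8)


result = feature_bytes(256)     # feature vector returned from FC
source = audio_bytes(240, 320)  # hypothetical 4-minute, 320 kbps input track
ratio = source / result
```

Under these assumptions the track is 9,600,000 bytes while the feature vector is 1,024 bytes, about 9,375 times smaller, so the result sent back from FC adds very little outbound traffic.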

In the first stage of introducing Function Compute, feature extraction algorithms achieved a tenfold improvement. Sparse algorithms, meaning algorithms with low daily utilization, achieved large cost savings. In addition, Function Compute's image cache acceleration sped up node startup, so all services can be started within seconds. Together, these efforts greatly reduced the O&M burden of running algorithms, allowing us to focus more on algorithms and business.

The figure on the right above shows the operation of one of the NetEase Cloud Music algorithms. As you can see, its load varies elastically over a wide range, and Function Compute meets this requirement.

In the future, we hope to use Serverless technology to further reduce our investment in O&M and to solve the problem of outbound internet traffic from storage, so that audio and video algorithms can be adopted naturally in more scenarios. Second, as algorithms grow more complex, their use of computing resources also becomes more complex, and we hope to optimize the computing process with GPU instances. Finally, NetEase Cloud Music has more and more real-time audio and video processing scenarios, which likewise show clear peak and trough fluctuations. We hope to build up more experience with Serverless services and apply it to the development of real-time audio and video technology at NetEase Cloud Music.

Liao Xiangli is the Head of Research and Development of the NetEase Cloud Music Library. He joined NetEase Cloud in 2015.
