During the 2021 Double 11 Global Shopping Festival, Mobile Taobao rolled out automatic modeling and automatic design technologies that meet consumers' personalized needs at scale and let them place virtual goods in a house. Users no longer need to rely on pictures and videos to view goods. Instead, they can display the designated goods virtually in a real house. Based on the designated goods and a real house type (floor plan), AI design produces a matching design for the whole house and presents the overall effect to consumers. Everyone can realize their dream of having a private designer.

3D Model Room: Consumers can view the goods' display effect in different scenarios.


Place Virtual Goods in a House: Consumers can put the virtual goods in their real house to see how it looks.


Image-based 3D reconstruction uses Structure from Motion (SfM) for sparse reconstruction and Multi-View Stereo (MVS) for dense reconstruction, followed by surface reconstruction, texture mapping, and material mapping. The result is an explicit 3D representation of the object, including its shape, texture, and material, which can support various 3D applications. The core assumption is that when the camera photographs an object from various angles, the same point on the object's surface has a consistent color in every picture. However, this assumption does not strictly hold because the captured color is affected by lighting and object material. NeRF was the most popular algorithm in 3D modeling in 2020. NeRF neural rendering breaks through this assumption and allows the same object to look different from different angles. NeRF proposes a new 3D representation, which uses a neural network to implicitly represent the shape, texture, and material of an object. Through end-to-end training, it can render the object from various angles with high fidelity. However, NeRF is still at an early stage. Problems with training speed, inference speed, modeling robustness, and the lack of an explicit 3D representation have seriously limited its application. Many researchers worldwide have conducted in-depth research and exploration based on this technology.

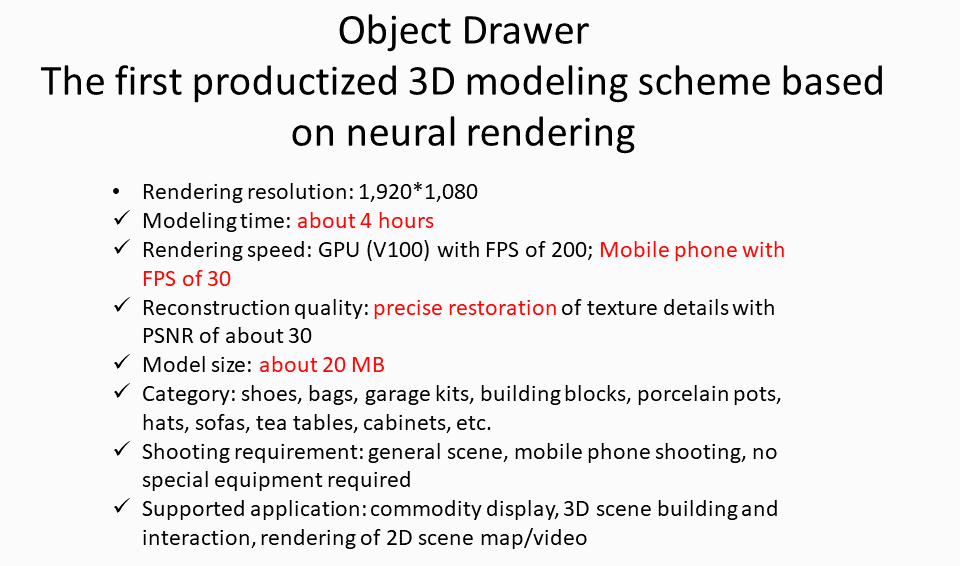

Alibaba TaoXi Technology released the Object Drawer product, which increases the inference speed of NeRF by 10,000 times and the training speed by more than ten times. It also addresses problems that hold back neural rendering applications, such as light migration, 3D layout, and high-frequency texture, and it improves modeling fidelity, bringing neural rendering up to practical standards. This technology has been released to some Taobao and Tmall merchants for product modeling.

This article is divided into three parts: The first part analyzes the traditional 3D reconstruction technology and NeRF neural rendering technology, the second part introduces the Object Drawer modeling scheme, and the third part introduces the Object Drawer application scheme.

The overall process of traditional 3D reconstruction technology is listed below:

sparse reconstruction → dense reconstruction → mesh reconstruction → texture mapping → material mapping

The algorithm for sparse reconstruction is Structure from Motion (SfM). First, it extracts image feature points from each picture. Then, it establishes the matching relationship between feature points in different images. Next, it estimates the camera pose of each picture from these matches. Enough feature points can be extracted from objects with rich textures. For weakly textured objects, we can place strongly textured references around them to add feature points. Therefore, camera pose estimation is generally accurate, and the algorithm can currently reach a positioning error of less than 1 mm.

The algorithm for dense reconstruction is Multi-View Stereo (MVS). Its function is to obtain the 3D spatial position of each pixel based on the camera positions and sparse 3D points obtained by sparse reconstruction in the previous step, thereby achieving dense reconstruction. Since the color of a single pixel is not distinctive enough, the traditional method selects a pixel block (patch) around the pixel and describes it with hand-crafted features. MVSNet, proposed in 2018, introduced deep learning to solve the MVS problem. For a series of hypothesized depths, the mapping of pixels between different images can be obtained. From the color deviation under each mapping, a cost volume can be constructed to represent the matching error at different depths. Finally, the depth with the smallest matching error is selected as the final depth value of the pixel. Because it uses neural network features, the reconstruction accuracy of MVSNet is much higher than that of traditional methods. However, like traditional MVS, it still relies on patch features to find pixel matches between different images. In weakly textured areas, the image features of adjacent pixels are very close, so the matching is error-prone. These errors show up in the depth map, and the final dense point cloud will have a large error.
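The "smallest matching error wins" step can be illustrated with a minimal NumPy sketch. It is not MVSNet: it assumes a rectified two-view setup so that each depth hypothesis becomes a horizontal pixel shift, and it compares raw intensities instead of learned features. The function name and arguments are hypothetical.

```python
import numpy as np

def winner_take_all_disparity(ref, src, max_disp):
    """Pick, per pixel, the shift hypothesis with the smallest matching error.

    ref, src: 2D grayscale images (H, W) from a rectified pair (an assumption
    made here so that each depth hypothesis becomes a horizontal shift).
    """
    h, w = ref.shape
    # cost[d, y, x] = matching error of pixel (y, x) under hypothesis d
    cost = np.full((max_disp + 1, h, w), np.inf)
    for d in range(max_disp + 1):
        # Pixel x in ref is compared with pixel x - d in src.
        cost[d, :, d:] = np.abs(ref[:, d:] - src[:, : w - d])
    # Winner-take-all: index of the lowest-cost hypothesis for every pixel.
    return np.argmin(cost, axis=0), cost
```

On a richly textured image, the correct shift has a clearly lower cost than the others. On a constant (textureless) image, every hypothesis has the same cost, which is the ambiguity in weakly textured areas described above.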

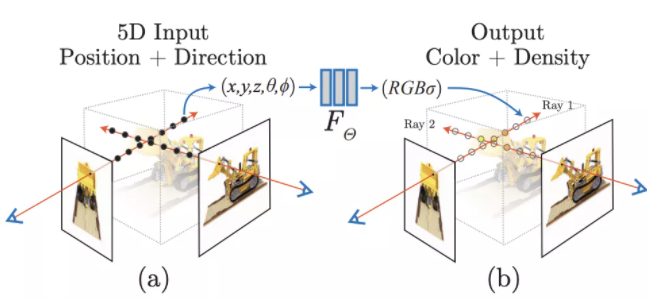

A major breakthrough in 3D modeling in 2020 was NeRF. NeRF is a neural rendering method that implicitly represents the shape, texture, and material of a 3D model with a neural network and can render an image of the model from any angle. The details of the algorithm are listed below:

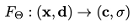

The NeRF network is a function. The input is a 5D coordinate consisting of the coordinate of a point in space, x=(x,y,z), and the camera viewing direction, d=(θ,φ). The output is the color c=(r,g,b) and the density σ. The expression is $F_\Theta: (\mathbf{x}, \mathbf{d}) \rightarrow (\mathbf{c}, \sigma)$. These are the specific details. First, x is input into the MLP network to output σ and intermediate features. Then, the intermediate features and the viewing direction d are input into a fully connected layer to obtain the RGB values. In other words, the voxel density depends only on the spatial coordinate x, while the output color depends on both the spatial coordinate and the viewing direction.
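The two-branch structure described above can be sketched as a toy NumPy network. This is only an illustration under simplifying assumptions: the weights are random rather than trained, the layer sizes are arbitrary, positional encoding is omitted, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN = 16  # toy width; the real network is much larger

# Randomly initialised weights stand in for trained parameters.
W_x = rng.normal(size=(3, HIDDEN))
W_sigma = rng.normal(size=(HIDDEN, 1))
W_feat = rng.normal(size=(HIDDEN, HIDDEN))
W_rgb = 0.1 * rng.normal(size=(HIDDEN + 3, 3))

def direction_vector(theta, phi):
    """Convert viewing angles (theta, phi) to a unit direction vector."""
    return np.array([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)])

def toy_nerf(x, theta, phi):
    """Map a 5D input (x, d) to (rgb, sigma) with the two-branch layout."""
    h = np.maximum(x @ W_x, 0.0)                 # position-only trunk (ReLU)
    sigma = np.maximum(h @ W_sigma, 0.0)[0]      # density: depends on x only
    feat = h @ W_feat                            # intermediate features
    d = direction_vector(theta, phi)
    logits = np.concatenate([feat, d]) @ W_rgb   # colour branch sees x and d
    rgb = 1.0 / (1.0 + np.exp(-logits))          # sigmoid keeps rgb in [0, 1]
    return rgb, sigma
```

Calling `toy_nerf` twice with the same `x` but different angles returns the same `sigma` and different `rgb` values, which mirrors the statement that density depends on position only while color also depends on the viewing direction.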

Volume rendering first uses the camera pose to obtain, for each pixel in the 2D image, the ray that passes through the object space. The color value of the pixel is then obtained by integrating the colors of all the continuous spatial points along the ray. In practice, NeRF approximates this integral by sampling. Specifically, n points are sampled from each ray passing through the object space, and the n points are integrated according to the following formula to obtain the color value of one pixel of the 2D image:

$$\hat{C}(\mathbf{r}) = \sum_{i=1}^{n} T_i \left(1 - e^{-\sigma_i \delta_i}\right) \mathbf{c}_i, \qquad T_i = \exp\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)$$

Here, $\sigma_i$ and $\mathbf{c}_i$ are the density and color of the i-th sample, and $\delta_i$ is the distance between adjacent samples. Repeat the process above for each pixel to obtain a complete 2D image. NeRF uses hierarchical sampling, which is divided into coarse sampling and fine sampling. Coarse sampling randomly samples 64 points along the ray, and fine sampling samples another 128 points according to the probability distribution given by the coarse sampling.
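The per-ray integration can be sketched in a few lines of NumPy. This is a minimal illustration of the standard discrete volume rendering sum for a single ray, not Object Drawer's implementation. The function name is hypothetical, and treating the last interval as very long is a common convention assumed here.

```python
import numpy as np

def composite_ray(sigmas, colors, t_vals):
    """Approximate the colour integral along one ray with n samples.

    sigmas: (n,) densities, colors: (n, 3) RGB, t_vals: (n,) sorted distances.
    """
    # Distance between adjacent samples; the last interval is treated as very long.
    deltas = np.append(np.diff(t_vals), 1e10)
    alphas = 1.0 - np.exp(-sigmas * deltas)          # opacity of each segment
    # Transmittance: fraction of light that reaches sample i unabsorbed.
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    return weights @ colors, weights
```

The returned `weights` are also what the fine sampling stage can use as a probability distribution: samples with large weights lie near the surface, so more fine samples are drawn there.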

During NeRF training, the loss is the sum of the errors between the real pixel color and the colors rendered from the coarse samples and from the fine samples.

First, NeRF uses a neural network to represent the 3D model. The shape, texture, material, and other information of the 3D model are implicitly stored in the neural network. This representation bypasses solving directly for the high-precision shape and material of the 3D model and instead obtains high-precision rendering results directly. Rendering is the most common use of 3D models. Second, NeRF proposes a new neural rendering technique. The rendering process is differentiable and can be trained end-to-end, which allows NeRF to accurately reproduce how the 3D model looks from various angles. Third, NeRF allows the appearance of an object to vary with the viewing angle, which goes beyond the color consistency assumption of the traditional MVS method. This improves the robustness of 3D modeling and makes NeRF applicable to various weakly textured objects.

Although NeRF implicitly represents the 3D model, some methods can also be used to explicitly extract the NeRF model and perform visual analysis. Our analysis results are listed below:

First, the surface of the 3D model obtained by NeRF is rough, but this does not affect the rendering effect. NeRF does not intersect the ray with the object surface at a single point. Instead, it uses the probability over a series of points along the ray. This reduces the requirements for the fineness of the model shape and relies on the overall rendering process to get the color right. Second, the 3D model obtained by NeRF is often flawed. Weakly textured areas are difficult for traditional methods, and the model generated by NeRF also often has dents and other defects in these areas. However, these dents have limited influence on the rendering results. Third, the shape of the NeRF model converges quickly. After only two to four epochs of training, the model shape is already close to the final result, while the color requires dozens of epochs.

Object Drawer is deeply optimized based on NeRF and proposes a complete product solution. It solves the following core problems:

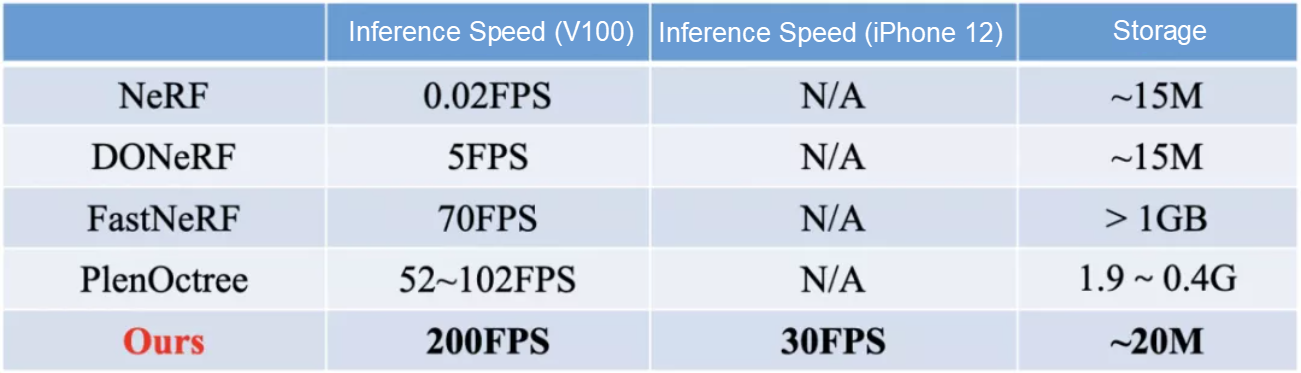

The inference speed of NeRF is 50 s/frame for images with a resolution of 1,920*1,080, while the practical requirement is more than 30 frames/s (about 1/30 s per frame), a gap of 1,500 times. We identified several reasons for the slow speed of NeRF. First, there are few effective pixels. Less than 1/3 of the pixels in the generated 2D image are effective, so obtaining the effective pixels quickly can improve inference speed. Second, there are few effective voxels. 192 points are sampled on each ray, but only the points near the surface have a relatively large density σ. The other points do not need to be inferred. Third, network inference is slow. A 12-layer fully connected network must be evaluated to obtain the color and density of one voxel. If this can be optimized, inference can be accelerated substantially. Trading space for time, as in FastNeRF, PlenOctree, and other methods, is one way to optimize. However, if we only store the NeRF output results, the storage space becomes too large, reaching 200 MB to 1 GB. Such a large model is not practical. We analyze it from three aspects:

We need to get effective pixels quickly to support neural rendering. To do so, we extract a mesh model in advance based on the density σ of the NeRF model. The mesh model and the neural network then represent the 3D model together. The mesh model supports rasterized rendering, which finds the effective 2D pixels quickly. Rendering only the effective pixels improves the rendering speed significantly.

A large number of points in space are not near the surface of the object, and the efficiency of random sampling is very low. The surface information of the object can be obtained quickly using the mesh model. This way, sampling is only carried out near the surface. By doing so, the sampling efficiency can be improved significantly. We can carry out fine sampling directly, which can achieve the same effect with only 32 points.
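Sampling only near the surface can be sketched as follows. The sketch assumes the surface depth along the ray is already known (for example, from a rasterized depth buffer of the mesh model). The band half-width and the function name are illustrative assumptions, not values from Object Drawer.

```python
import numpy as np

def sample_near_surface(surface_depth, n_samples=32, half_width=0.05, rng=None):
    """Draw sorted sample depths in a thin band around a known surface hit.

    surface_depth: distance along the ray to the mesh surface.
    half_width: illustrative half-size of the sampling band.
    """
    rng = np.random.default_rng() if rng is None else rng
    near = max(surface_depth - half_width, 0.0)
    far = surface_depth + half_width
    # Stratified sampling: one uniform draw inside each of n equal bins.
    edges = np.linspace(near, far, n_samples + 1)
    return edges[:-1] + rng.random(n_samples) * np.diff(edges)
```

All 32 samples fall inside the band around the surface, so none of them are spent on empty space far from the object.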

The 12-layer neural network used for model representation contains a large number of redundant nodes. There are two main methods of network acceleration. One is network pruning, and the other is model distillation. Our experiments show that network pruning is superior to model distillation for NeRF network optimization. With L1 regularization, we can prune 80% of the nodes, reducing the network scale to 1/5 of the original while keeping the effect unchanged.
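The pruning step can be sketched for a single hidden layer. The sketch assumes the layer was already trained with an L1 penalty, so that unneeded units have near-zero weights. Training itself is not shown, and the function name and the importance score are illustrative choices rather than the authors' exact procedure.

```python
import numpy as np

def prune_hidden_units(w_in, b, w_out, keep_ratio=0.2):
    """Drop the hidden units with the smallest L1 weight norm.

    w_in: (n_in, n_hidden), b: (n_hidden,), w_out: (n_hidden, n_out).
    Returns the reduced matrices and the indices of the kept units.
    """
    n_hidden = w_in.shape[1]
    n_keep = max(1, int(round(n_hidden * keep_ratio)))
    # L1 regularisation pushes the weights of unneeded units towards zero,
    # so a unit's L1 norm is used here as its importance score.
    importance = np.abs(w_in).sum(axis=0) + np.abs(w_out).sum(axis=1)
    keep = np.sort(np.argsort(importance)[-n_keep:])
    return w_in[:, keep], b[keep], w_out[keep, :], keep
```

With `keep_ratio=0.2`, the layer keeps 1/5 of its nodes, matching the 80% reduction mentioned above. If the removed units really had zero weights and biases, the pruned layer computes exactly the same output as the original.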

The roughly 300-fold speedup from the optimizations above is still far from enough. Building on it, our latest method accelerates the network by a further 30 times or more, for a total speedup of about 10,000 times. The latest work is being written up and is expected to be published in the next few months.


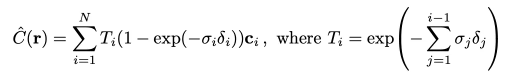

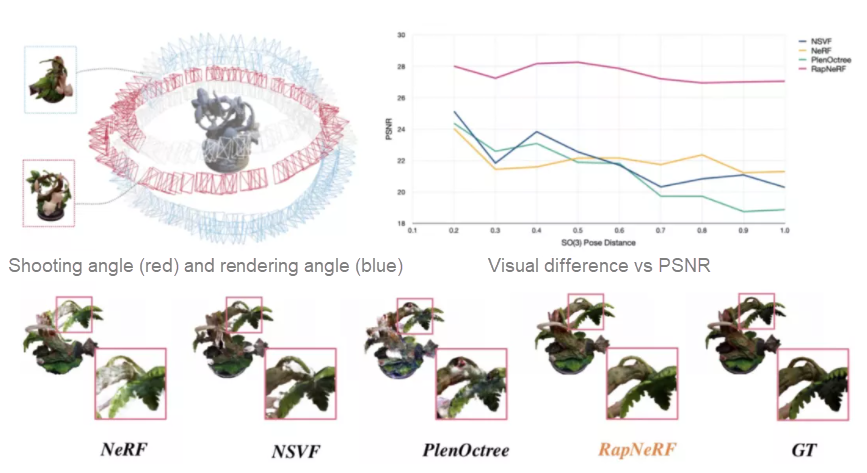

The Performance of NeRF (left) and Object Drawer (right) under Novel View Extrapolation

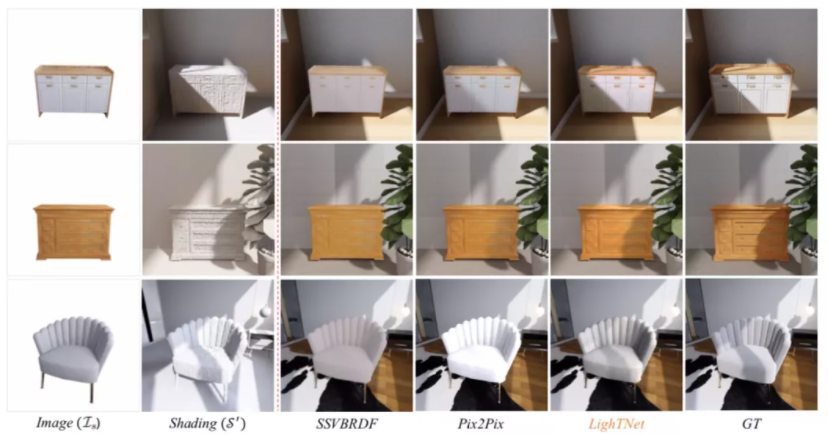

Viewing angle robustness is one of the key problems in neural rendering. The captured images cannot cover all viewing angles. When the output viewing angle differs from the shooting viewing angles, neural rendering needs good generalization capabilities to generate images from the new viewing angle. Object Drawer proposes RapNeRF technology, which uses random viewing angle enhancement and average viewing angle embedding to improve viewing angle robustness. Experiments show that as the viewing angle difference increases, the PSNR of NeRF and other models drops significantly, while the PSNR of Object Drawer remains virtually unchanged. Case analysis shows that even when the viewing angle difference is very large, Object Drawer still produces high-definition images, which solves this problem well.
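For readers unfamiliar with the metric used in this comparison, PSNR can be computed as in the minimal sketch below. It assumes images stored as arrays with values in [0, 1], and the function name is hypothetical.

```python
import numpy as np

def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = np.asarray(rendered, dtype=float) - np.asarray(reference, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

A higher PSNR means the rendered view is closer to the reference photograph. For example, a uniform error of 0.1 on every pixel gives a PSNR of 20 dB.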

A Result Comparison of Some Methods Regarding the Novel View Extrapolation Problem

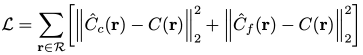

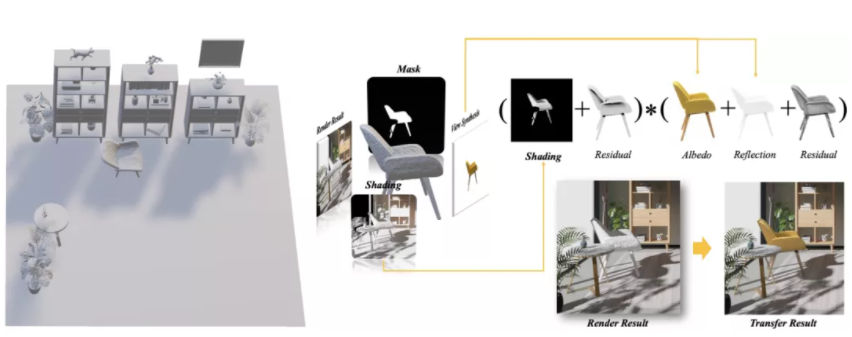

Object Drawer (LighTNet) Light Migration Solution

3D models need to support various 3D applications, including 3D layout, rendering, and 3D interaction. Usually, neural rendering can only render objects or scenes from any angle, which limits its application substantially. Object Drawer presents a solution that integrates the implicit representation with a 3D mesh model. Specifically, it extracts the explicit spatial geometry of the model from the implicit representation and builds a mesh model. Then, through methods such as texture mapping and material recognition, it forms a 3D coarse model that can be used in existing rendering engines. The 3D coarse model and the neural network represent a commodity together. On the one hand, the 3D coarse model can be imported into graphics tools directly, in the same way that standard high-precision CAD models are used in practical applications such as 3D scene design. On the other hand, neural rendering can produce high-definition rendered images of the object from any viewing angle. We propose LighTNet to carry over the lighting effects that physical rendering produces on the 3D coarse model, such as reflection and shadow. It transfers these realistic lighting effects to the object view generated by neural rendering. LighTNet applies PBR rendering to the 3D coarse model to obtain rough shading, mask, depth, and other information, and takes these together with the neural rendering result as input. Then, it predicts the corresponding shading and a correction for the neural rendering. The corrected shading and the neural rendering are combined through intrinsic fusion to obtain a neural rendering result with lighting effects. Next, the coarse-model projection in the physical rendering result is replaced with this high-definition composite image with lighting effects, which produces a realistic scene rendering.

The experimental data shows that the new method can transfer shadow effects under various complex light source conditions and preserve their details. The visual effects are far superior to other alternatives. The average PSNR on the 3D-FRONT test data set is 30.17.
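The final fusion and replacement steps can be sketched as below. This is not LighTNet itself: the shading map is taken as a given input rather than predicted by a network, intrinsic fusion is assumed to be a per-pixel multiplication (the usual intrinsic image model), and all names are hypothetical.

```python
import numpy as np

def fuse_and_composite(neural_rgb, shading, mask, scene_rgb):
    """Relight a neural rendering and paste it into a PBR scene render.

    neural_rgb: (H, W, 3) object view from neural rendering, values in [0, 1].
    shading:    (H, W) shading map (1.0 = unchanged, <1 = shadowed).
    mask:       (H, W) 1 where the coarse model projects, 0 elsewhere.
    scene_rgb:  (H, W, 3) PBR render of the scene with the coarse model.
    """
    # Assumed intrinsic-image model: lit colour = colour * shading, per pixel.
    lit = np.clip(neural_rgb * shading[..., None], 0.0, 1.0)
    m = mask[..., None].astype(float)
    # Replace the coarse-model pixels, keep the rest of the scene untouched.
    return m * lit + (1.0 - m) * scene_rgb
```

Pixels outside the mask keep the PBR scene color, while pixels inside the mask show the neural rendering darkened or brightened by the shading map.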

The Light Migration Modeling Effect

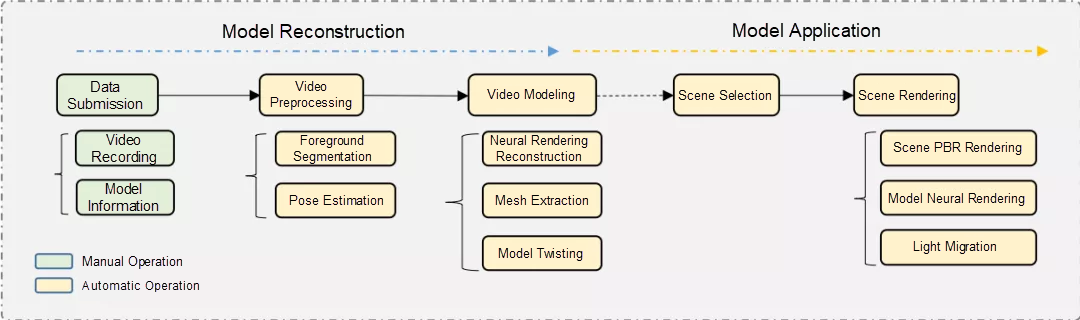

Considering the use cost, user convenience, and reconstruction quality of the 3D modeling service, Object Drawer uses videos that record the object from all angles as input. It also formulates standardized specifications for shooting video in terms of shooting duration, distance, angle, definition, shooting environment, and object proportion. Object Drawer completes model reconstruction through video and supports the application of the reconstructed model to PBR scene rendering.

First, the user manually submits the video and the information (actual size, category, name, etc.) of the object to be reconstructed on the Web modeling platform of Object Drawer. Object Drawer then triggers the model reconstruction task, decodes the video, and verifies the usability of the data automatically. It filters out fragments with poor shooting quality or incomplete objects to ensure the accuracy of reconstruction. Then, the neural rendering reconstruction of the object is completed using the verified image sequence. A mesh is extracted from the reconstructed neural network, and the model is rotated to fix its orientation. Finally, the model is uploaded to the model database, which completes the reconstruction. Compared with traditional modeling procedures, the processing of shapes, textures, and materials is completed in one step during neural rendering reconstruction, with end-to-end optimization and better overall robustness and fidelity.

When the model is applied, Object Drawer adapts the rendering engine to support neural rendering of the model and transfers the PBR scene lighting to the neural rendering of the model, which completes the synthesis of the reconstructed model and the scene.

3D models can be used to build virtual scenes, display goods in those scenes, and even put goods into consumers' real rooms, bringing a brand-new experience. However, building the scene and designing for a real house type rely on a designer. Each design takes a lot of time, energy, and money, which makes it difficult to apply on a large scale in commodity display. Alibaba TaoXi Technology has proposed 3D magic pen, which can carry out house type reconstruction, AI layout, AI combination, and other work automatically. It can also integrate furniture commodity display with consumers' personalized scene requirements automatically, thus enabling design to be applied at scale. More than one million AI designs have been completed and applied in Mobile Taobao and Shejijia. Specific technologies include house type reconstruction, AI layout, and AI combination.

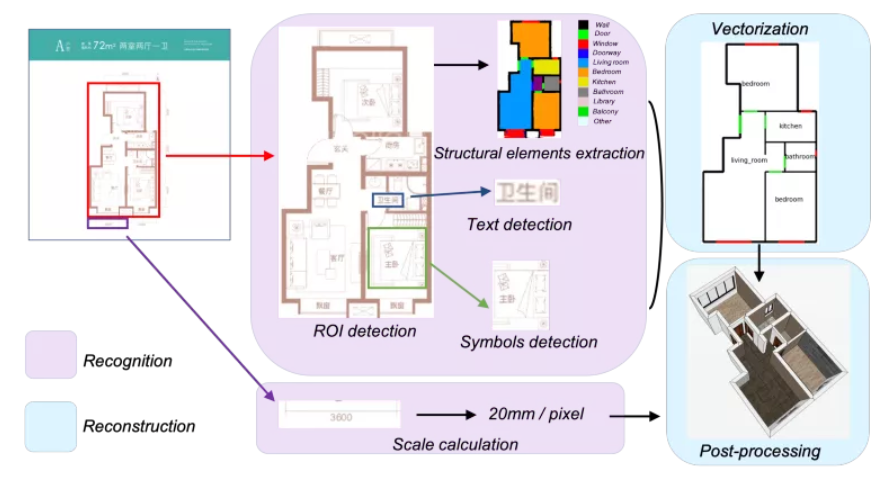

House type reconstruction generates a vector-based 3D house type map from a house type map (floor plan) given as a 2D image. One difficulty is that information such as building elements, scales, and room names needs to be identified, and these pieces of information are correlated. Another difficulty is that the recognition result needs to be vectorized rather than left as a pixel-level segmentation. Current segmentation algorithms can achieve relatively high accuracy. However, once the result is vectorized, noise errors can cause missing or extra walls in the recognized house type map, even for results with a high IoU, so the map cannot be used directly. Our pipeline works as follows. First, we locate the house type area with a detection model. Within the detected area, a segmentation model separates the building elements, and text detection and symbol detection provide accurate room type information. The scale module calculates the endpoints and numbers of the scale line segments. The reconstruction module integrates the elements obtained by detection and segmentation and performs vectorization optimization based on differentiable rendering. Finally, an accurate 3D reconstruction result is obtained by combining this with the scale module information.
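A toy example shows why a high IoU does not guarantee a usable vectorization. The masks below are synthetic and purely illustrative: the prediction differs from the ground truth only by a small hole in one wall, yet that hole breaks the wall into two pieces.

```python
import numpy as np

def iou(pred, target):
    """Intersection over union of two boolean masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    union = np.logical_or(pred, target).sum()
    return np.logical_and(pred, target).sum() / union if union else 1.0

# Toy wall mask: a 64x64 room outline with one interior wall, 2 px thick.
target = np.zeros((64, 64), dtype=bool)
target[:2, :] = target[-2:, :] = target[:, :2] = target[:, -2:] = True
target[:, 31:33] = True

# Prediction identical except for a 6 px hole in the interior wall.
pred = target.copy()
pred[20:26, 31:33] = False

score = iou(pred, target)                      # stays close to 1
wall_is_continuous = bool(pred[:, 31].all())   # False: the wall is broken
```

The IoU stays above 0.95 even though the interior wall is no longer continuous, so a naive vectorization could split or drop that wall.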

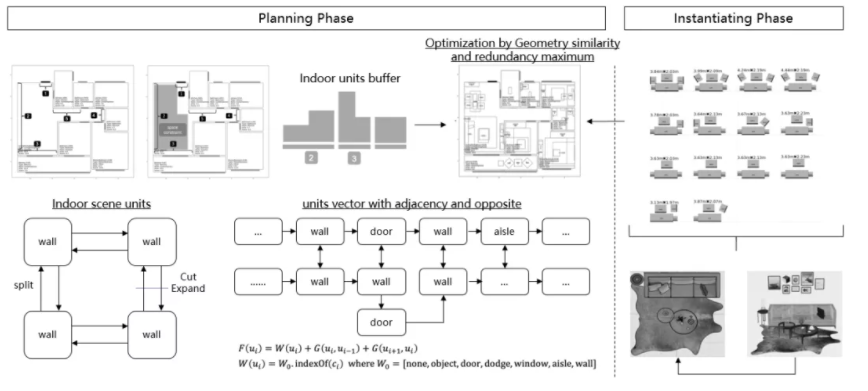

AI layout needs to generate a reasonable design scheme according to the house type map, taking the division of functional areas into consideration. The layout within the functional areas includes interior finish layout, furnishing layout, and accessory layout. AI layout generation can be described as a layout optimization or scene generation problem that places elements of different levels under rigid spatial constraints and diverse semantic requirements. Academia studies it as a kind of scene synthesis. Existing research and frameworks generally follow one of two directions, object-oriented and space-oriented. The former analyzes or generates object-to-object relations (based on relation graphs or semantic constraints), while the latter predicts the position, size, and pose of objects in space based on spatial constraints. The two correspond to the local layout and overall layout modules mentioned above. From the perspective of business implementation, these studies often focus too much on graph-theoretic formulations at the object-oriented level and neglect the generalization capability of space-oriented methods. The house type data used in their experiments is too simple (or relies on overly ideal assumptions) and differs too much from the structural complexity of actual house types. As a result, layouts and scenes built on open house type data suffer from feasibility, accuracy, and richness problems. Most of this work stays at the paper-to-paper stage and does not solve the practical problem.

We transform the original data of the house type and furniture into hierarchical geometric feature descriptions (spatial features and combined features). The space constraints of house types are described using associated components and shared buffers. The furniture space measurement is described using hierarchical combined roles and redundancy. The overall problem is transformed into a hierarchical matching optimization problem between the two, and the overall algorithm is feasible as long as the problem at each layer can be solved. Finally, the AI layout of the real house type is generated automatically.

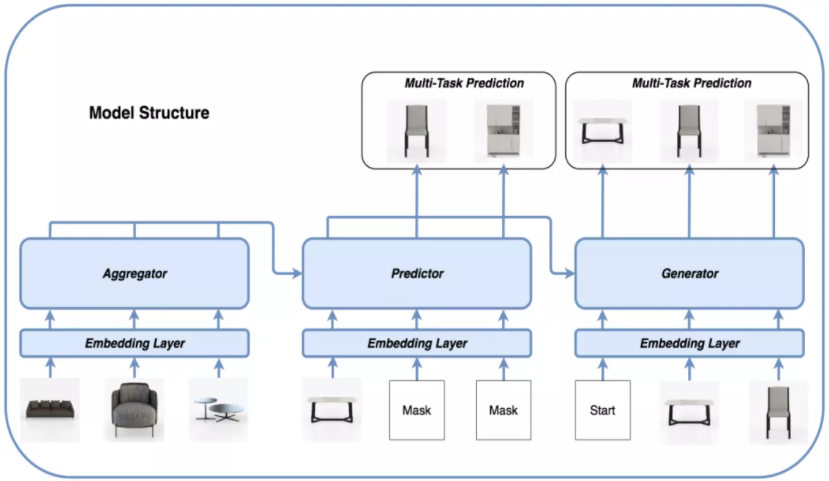

AI furnishing combination provides consumers with a 3D scene-based shopping experience by changing the way they view goods. Previously, consumers could only look at pictures and videos of goods. Now, they can view the actual combination effect in a real scene. The algorithm solves the problem of displaying a single piece of furniture in real scenes. Starting from only a single piece of furniture, it can generate the furnishing effect of the whole house. In other words, it solves the one-to-N problem (generating N matching items from one item) in the home decoration industry. In the combination field, current academic research focuses more on pairwise combination, choosing one out of N, and measuring cross-category combination. The research is mainly concentrated in the field of clothing and rarely involves home decoration. Compared with these problems, the one-to-N problem is one of the more difficult combination problems. The first edition of our furnishing combination model expanded pairwise combination to whole house combination. This method inherently lacks consideration of the overall combination effect of the whole house. As a result, some furniture may clash with the overall layout. On this basis, we revisited the problem of furnishing combination. We use a sequential generation framework instead of pairwise comparison, thus improving the coordination of the overall combination. Although academia has proposed some combination methods based on sequential generation, they are not modeled for the characteristics of furniture combination. They do not consider furniture details, combination consistency, or diversity, and they cannot express the strong and weak combination relationships between different functional areas. Ultimately, their results are unsatisfactory.

We use a transformer-based sequential generation model as the basic framework and transform the combination problem into generating the pieces of furniture one by one. The generation of each piece of furniture considers all the furniture generated earlier, thus improving the coordination of the overall combination. We adopt three cascaded transformer modules. Each module is responsible for a different function and expresses the strong and weak relationships of furniture combination inside and outside different functional areas.
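The one-by-one generation idea, where each new piece is chosen with all earlier pieces in view, can be illustrated with a toy greedy loop. This is not the transformer model: a mean cosine similarity over hypothetical furniture embeddings stands in for the learned scoring, and all names are assumptions.

```python
import numpy as np

def _unit(v):
    """Normalise vectors to unit length along the last axis."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def generate_combination(seed_vec, candidates, n_items):
    """Greedily pick furniture one by one, conditioning on all earlier picks.

    seed_vec: (D,) embedding of the designated item.
    candidates: (N, D) embeddings of the candidate pool (hypothetical data).
    Returns the indices of the chosen candidates in generation order.
    """
    cands = _unit(candidates)
    chosen_vecs = [_unit(seed_vec)]
    chosen_idx = []
    for _ in range(min(n_items, len(cands))):
        # Score each candidate by its mean cosine similarity to everything
        # generated so far (a stand-in for the transformer's learned scoring).
        scores = (cands @ np.stack(chosen_vecs).T).mean(axis=1)
        if chosen_idx:
            scores[chosen_idx] = -np.inf        # never pick an item twice
        best = int(np.argmax(scores))
        chosen_idx.append(best)
        chosen_vecs.append(cands[best])
    return chosen_idx
```

Unlike pairwise matching against the seed item alone, every step here scores candidates against the whole set chosen so far, which is the property the sequential framework relies on for overall coordination.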

Considering the product modeling and application problems, Object Drawer has given preliminary solutions to achieve industry-leading results in terms of inference speed, high-frequency texture restoration, light migration, and viewing angle robustness. It can support large-scale applications in categories such as shoes, furniture, and bags. However, the new model representation and rendering process also bring new challenges:

The model represented by neural network lacks explicit 3D expression, while model editing requires the What You See Is What You Get modification of the 3D model. The current representation requires a new editing paradigm to support the needs of model editing.

Simulation is needed to make the 3D model move. The traditional approach uses mathematical functions to model each physical phenomenon or law and computes the simulation results from them. Existing simulation algorithms were designed for explicit 3D models, so a new simulation paradigm is required for implicit 3D representations. At the same time, rebuilding the large body of existing simulation work from scratch would be a huge workload. We also need to find out how to migrate the existing simulation capability to implicit representations.

Neural rendering provides a good solution for static object modeling, but large-scale application requires more new products to emerge. We have only just started in this regard. The new 3D model representation and the new rendering paradigm have brought new problems and new solutions. We welcome professionals in the industry to get in touch and work together on developing 3D modeling technology.

The team is the basic algorithm team of the Business Machine Intelligence Department, responsible for research and innovation in algorithms for e-commerce platforms such as Taobao and Tmall. The team has published many papers at top international conferences, such as KDD, ICCV, CVPR, NIPS, and AAAI. In 3D machine learning, we explore advanced 2D vision algorithms and the integration of 3D geometry with vision algorithms. We have globally advanced technologies in the 3D modeling and AI design fields and look forward to promoting technological innovation in the home industry.
