Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) is an architecture that augments the capabilities of AI models, including large language models (LLMs) such as Qwen. RAG adds an information retrieval system that supplies the model with relevant contextual data, such as domain-specific data or an organization's internal knowledge base, without the need to retrain the model. This makes RAG a cost-effective way to improve the relevance and accuracy of LLM output.
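The retrieve-then-generate flow described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the knowledge base is three made-up sentences, `retrieve` ranks documents by simple word overlap as a stand-in for a real retrieval system, and `build_prompt` is a hypothetical helper that assembles the text an LLM would receive. The model call itself is left out.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=2):
    """Rank documents by word overlap with the query.

    This is a toy stand-in for a real retrieval system such as a vector database.
    """
    query_terms = Counter(tokenize(query))
    scored = []
    for doc in documents:
        overlap = sum((query_terms & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one term with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, context_docs):
    """Combine the retrieved context and the question into one prompt (hypothetical format)."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


# Made-up internal knowledge base for the example.
KNOWLEDGE_BASE = [
    "Refunds are processed within 14 days of the return request.",
    "Support is available on weekdays from 9:00 to 18:00.",
    "Enterprise plans include a dedicated account manager.",
]

question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)
```

The model is never retrained here. Only the prompt changes, which is why updating the knowledge base is enough to change what the model can answer.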

Alibaba Cloud's vector databases store, manage, and retrieve vector embeddings as high-dimensional data efficiently, which makes them well suited to serve as the information retrieval system of a RAG architecture.
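As a rough illustration of what retrieving vector embeddings means, the sketch below ranks stored vectors by cosine similarity to a query vector. The three-dimensional embeddings, the document IDs, and the `top_k` helper are all made up for the example. Real embeddings have hundreds or thousands of dimensions, and vector databases typically use approximate nearest-neighbor indexes rather than the exhaustive scan shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=2):
    """Return the IDs of the k stored vectors most similar to the query (brute-force scan)."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical embeddings; a real system would get these from an embedding model.
EMBEDDINGS = {
    "doc_pricing": [0.9, 0.1, 0.0],
    "doc_support": [0.1, 0.9, 0.1],
    "doc_security": [0.0, 0.2, 0.9],
}

query_embedding = [0.8, 0.2, 0.1]
nearest = top_k(query_embedding, EMBEDDINGS)
print(nearest)
```

Cosine similarity is one common choice of distance measure. Which metrics a given database supports is product-specific and not covered by this sketch.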

AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL is an online massively parallel processing (MPP) data warehousing service based on the open-source Greenplum database. Built on the MPP architecture, it also provides a vector database feature that helps you store, analyze, and retrieve vector data. AnalyticDB for PostgreSQL is one of the vector databases recommended in the OpenAI Cookbook.

Learn more about the vector database features >

Learn more about the RAG service of AnalyticDB for PostgreSQL >

See how to build a RAG plugin with AnalyticDB for PostgreSQL >

See how to use AnalyticDB for PostgreSQL as a vector database in OpenAI Cookbook >

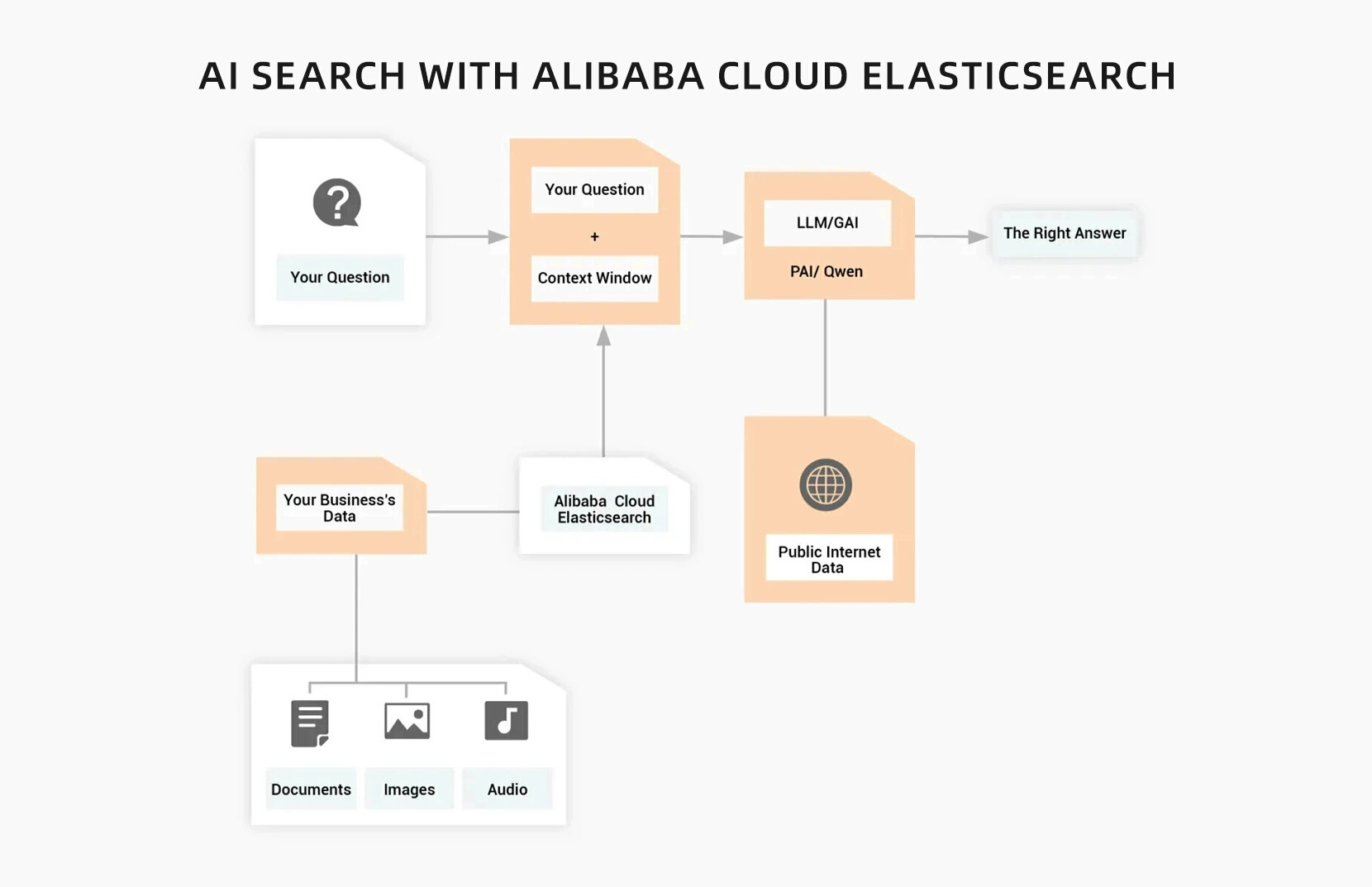

Alibaba Cloud Elasticsearch

Alibaba Cloud Elasticsearch offers twice the cost-efficiency of the open-source Elasticsearch engine. Its toolkit helps you build AI-powered search applications that integrate with large language models, featuring high-performance semantic search even without exact keyword matches, full-text answers to complex questions, and personalized recommendations. It also provides robust access control, security monitoring, and automatic updates for enterprises.

Download "The Complete Guide to Alibaba Cloud Elasticsearch" whitepaper >

Learn more about the new AI search solution with Elasticsearch >

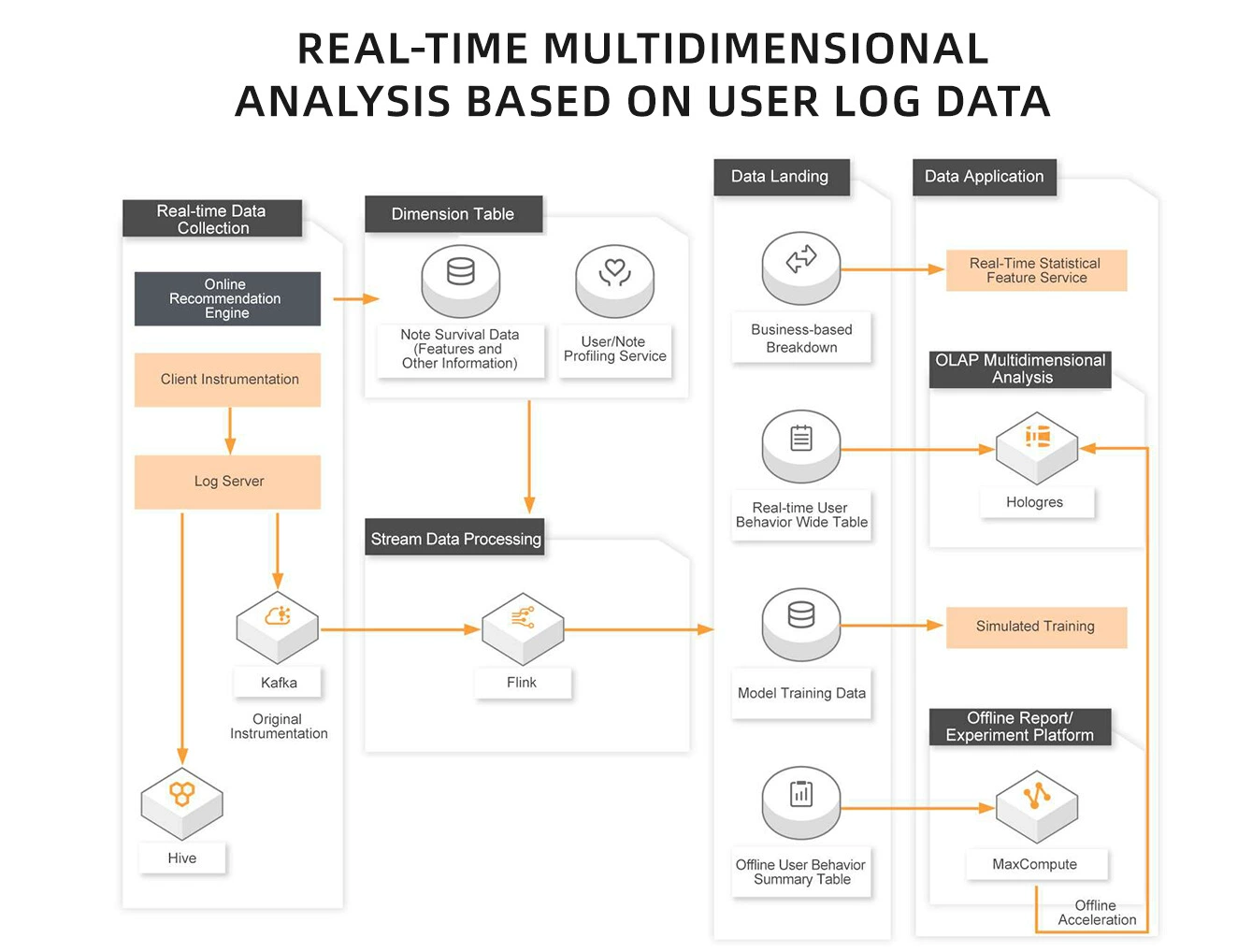

Hologres

Hologres is an all-in-one real-time data warehouse engine that is compatible with PostgreSQL. It supports online analytical processing (OLAP) and ad hoc analysis of petabyte-scale data. It is deeply integrated with MaxCompute, Flink, and DataWorks, and provides a full-stack data warehouse solution that combines online and offline processing. Hologres is also integrated with Platform for AI (PAI), and its built-in vector search engine, Proxima, supports real-time computing of feature data and real-time vector retrieval.

Learn more about "Vector Processing Based on Proxima" >

OpenSearch

OpenSearch is a one-stop platform built on the large-scale distributed search engine developed by Alibaba. OpenSearch Vector Search Edition is designed for vector scenarios and helps enterprises build high-performance, low-cost, and easy-to-use multimodal vector search services for text, images, audio, and video. OpenSearch delivers high performance and reduces costs when you build vector indexes over large amounts of unstructured data and retrieve data based on those indexes.

Implement LLM-based enterprise-specific conversational search >

Implement multimodal search for e-commerce >

Implement multimodal search for education >

Creating an AI-Powered RAG Service With Model Studio

This tutorial provides a step-by-step guide to setting up a RAG service using Alibaba Cloud Model Studio, Compute Nest, and AnalyticDB for PostgreSQL. With Model Studio, you can use generative AI models such as Qwen to develop, deploy, and manage AI applications. This setup keeps data handling secure and efficient within your enterprise while enabling natural language queries over your data.

Related Resources

Blog

Quickly Building a RAG Service on Compute Nest with LLM on PAI-EAS and AnalyticDB for PostgreSQL

This blog describes how to create a RAG service using Compute Nest with LLMs on PAI-EAS, and AnalyticDB for PostgreSQL as the vector database.

Blog

Igniting the AI Revolution - A Journey with Qwen, RAG, and LangChain

This blog explores the AI revolution and explains the concepts of RAG and LangChain.

Blog

Next-Level Conversations: LLM + VectorDB with Alibaba Cloud Is Customizable and Cost-Efficient

This blog explains, with examples, the benefits of combining LLMs with Alibaba Cloud vector databases.