對長時間的語音資料流進行識別,適用於會議演講、ApsaraVideo for Live等長時間不間斷識別的情境。

功能簡介

NUI SDK提供更小的工具包和更完善的狀態管理。為滿足不同使用者需求,NUI SDK既能提供全鏈路的語音能力,同時可做原子能力SDK進行使用,並保持介面的統一。

使用須知

輸入格式:PCM編碼、16bit採樣位元、單聲道(mono)。

音頻採樣率:8000Hz/16000Hz。

設定返回結果:是否返回中間識別結果、在後處理中添加標點、將中文數字轉為阿拉伯數字輸出。

設定多語言識別:在管控台編輯專案中進行模型選擇,詳情請參見模型選擇。

服務地址

訪問類型 | 說明 | URL |

|---|---|---|

外網訪問 | 所有伺服器均可使用外網訪問URL(SDK中預設設定了外網訪問URL)。 | wss://nls-gateway.cn-shanghai.aliyuncs.com/ws/v1 |

上海ECS內網訪問 | 使用阿里雲上海ECS(ECS地區為華東2(上海)),可使用內網訪問URL。 ECS的傳統網路不能訪問AnyTunnel,即不能在內網訪問Voice Messaging Service;如果希望使用AnyTunnel,需要建立專用網路在其內部訪問。 說明

| ws://nls-gateway.cn-shanghai-internal.aliyuncs.com:80/ws/v1 |

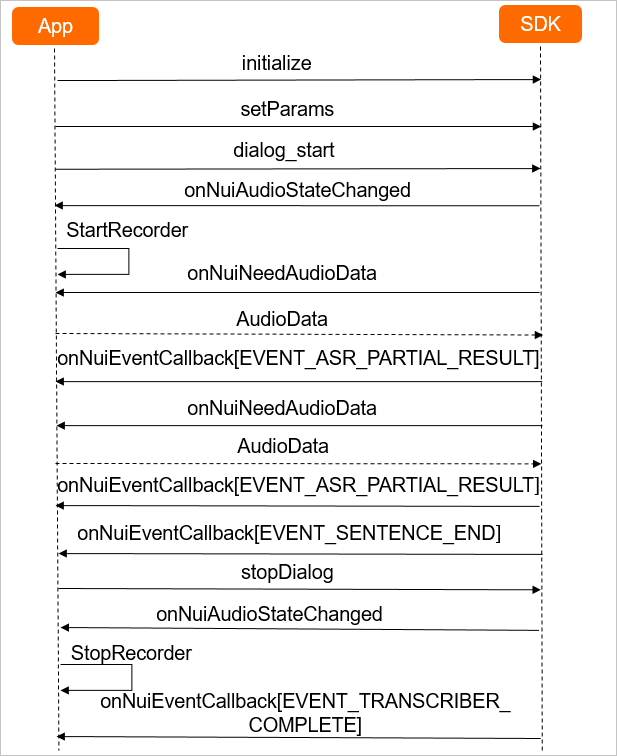

互動流程

所有服務端的響應都會在返回資訊的header包含表示本次識別任務的task_id參數,請記錄該值,如果出現錯誤,請將task_id和錯誤資訊提交到工單。

1. 鑒權和初始化

用戶端在與服務端建立WebSocket串連時,使用Token進行鑒權。關於Token擷取請參見擷取Token。

初始化參數如下。

參數 | 類型 | 是否必選 | 說明 |

|---|---|---|---|

workspace | String | 是 | 工作目錄路徑,SDK從該路徑讀取設定檔。 |

app_key | String | 是 | 管控台建立專案的appkey。 |

token | String | 是 | 請確保該Token可以使用並在有效期間內。 說明 Token可以在初始化時設定,也可通過參數設定進行更新。 |

device_id | String | 是 | 裝置標識,唯一表示一台裝置(如Mac地址/SN/UniquePsuedoID)。 |

debug_path | String | 否 | debug目錄。當初始化SDK時的save_log參數取值為true時,該目錄用於儲存中間音頻檔案。 |

save_wav | String | 否 | 當初始化SDK時的save_log參數取值為true時,該參數生效。表示是否儲存音頻debug,該資料儲存在debug目錄中,需要確保debug_path有效可寫。 |

2. 開始識別

用戶端發起即時語音辨識請求前需要進行參數設定,各參數由SDK中setParams介面以JSON格式設定,該參數設定一次即可。各參數含義如下。

參數 | 類型 | 是否必選 | 說明 |

|---|---|---|---|

appkey | String | 否 | 管控台建立的專案appkey,一般在初始化時設定。 |

token | String | 否 | 如果需要更新,則進行設定。 |

service_type | Int | 是 | 需要請求的Voice Messaging Service類型,即時語音辨識為“4”。 |

direct_ip | String | 否 | 支援用戶端自行DNS解析後傳入IP進行訪問。 |

nls_config | JsonObject | 否 | 訪問Voice Messaging Service相關的參數配置,詳情請參見下表。 |

參數nls_config配置如下。

參數 | 類型 | 是否必選 | 說明 |

|---|---|---|---|

sr_format | String | 否 | 音頻編碼格式,支援OPUS編碼和PCM原始音頻。預設值:OPUS。 說明 如果使用8000Hz採樣率,則只支援PCM格式。 |

sample_rate | Integer | 否 | 音頻採樣率,預設值:16000Hz。根據音頻採樣率在管控台對應專案中配置支援該採樣率及情境的模型。 |

enable_intermediate_result | Boolean | 否 | 是否返回中間識別結果,預設值:False。 |

enable_punctuation_prediction | Boolean | 否 | 是否在後處理中添加標點,預設值:False。 |

enable_inverse_text_normalization | Boolean | 否 | 是否在後處理中執行ITN。設定為true時,中文數字將轉為阿拉伯數字輸出,預設值:False。 說明 不會對詞資訊進行ITN轉換。 |

customization_id | String | 否 | 自學習模型ID。 |

vocabulary_id | String | 否 | 定製泛熱詞ID。 |

max_sentence_silence | Integer | 否 | 語音斷句檢測閾值,靜音時間長度超過該閾值被認為斷句。取值範圍:200ms~2000ms,預設值:800ms。 |

enable_words | Boolean | 否 | 是否開啟返回詞資訊。預設值:False。 |

enable_ignore_sentence_timeout | Boolean | 否 | 是否忽略即時識別中的單句識別逾時。預設值:False。 |

disfluency | Boolean | 否 | 是否對識別文本進行順滑(去除語氣詞、重複說等)。預設值:False。 |

vad_model | String | 否 | 設定服務端的vad模型id,預設無需設定。 |

speech_noise_threshold | float | 否 | 噪音參數閾值,取值範圍:-1~+1。

說明 該參數屬進階參數,調整需謹慎並進行重點測試。 |

3. 發送資料

用戶端迴圈發送語音資料,持續接收識別結果:

EVENT_SENTENCE_START事件表示服務端檢測到了一句話的開始。即時語音辨識服務的智能斷句功能會判斷出一句話的開始與結束,如:

{ "header": { "namespace": "SpeechTranscriber", "name": "SentenceBegin", "status": 20000000, "message_id": "a426f3d4618447519c9d85d1a0d15bf6", "task_id": "5ec521b5aa104e3abccf3d3618222547", "status_text": "Gateway:SUCCESS:Success." }, "payload": { "index": 1, "time": 0 } }header對象參數說明:

參數

類型

說明

namespace

String

訊息所屬的命名空間。

name

String

訊息名稱。SentenceBegin表示一個句子的開始。

status

Integer

狀態代碼。表示請求是否成功,見錯誤碼。

message_id

String

本次訊息的ID,由SDK自動產生。

task_id

String

任務全域唯一ID,請記錄該值,便於排查問題。

status_text

String

狀態訊息。

payload對象參數說明:

參數

類型

說明

index

Integer

句子編號,從1開始遞增。

time

Integer

當前已處理的音頻時間長度,單位:毫秒。

若enable_intermediate_result設定為true,SDK會持續多次通過onNuiEventCallback回調上報EVENT_ASR_PARTIAL_RESULT事件,即中間識別結果,如:

{ "header": { "namespace": "SpeechTranscriber", "name": "TranscriptionResultChanged", "status": 20000000, "message_id": "dc21193fada84380a3b6137875ab9178", "task_id": "5ec521b5aa104e3abccf3d3618222547", "status_text": "Gateway:SUCCESS:Success." }, "payload": { "index": 1, "time": 1835, "result": "北京的天", "confidence": 1.0, "words": [{ "text": "北京", "startTime": 630, "endTime": 930 }, { "text": "的", "startTime": 930, "endTime": 1110 }, { "text": "天", "startTime": 1110, "endTime": 1140 }] } }說明header對象中name參數取值為TranscriptionResultChanged表示句子的中間識別結果。其餘參數說明參見上述表格。

payload對象參數說明:

參數

類型

說明

index

Integer

句子編號,從1開始遞增。

time

Integer

當前已處理的音頻時間長度,單位:毫秒。

result

String

當前句子的識別結果。

words

List< Word >

當前句子的詞資訊,需要將enable_words設定為true。

confidence

Double

當前句子識別結果的信賴度,取值範圍0.0~1.0,值越大表示信賴度越高。

EVENT_SENTENCE_END事件表示服務端檢測到了一句話的結束,並返回該句話的識別結果,如:

{ "header": { "namespace": "SpeechTranscriber", "name": "SentenceEnd", "status": 20000000, "message_id": "c3a9ae4b231649d5ae05d4af36fd1c8a", "task_id": "5ec521b5aa104e3abccf3d3618222547", "status_text": "Gateway:SUCCESS:Success." }, "payload": { "index": 1, "time": 1820, "begin_time": 0, "result": "北京的天氣。", "confidence": 1.0, "words": [{ "text": "北京", "startTime": 630, "endTime": 930 }, { "text": "的", "startTime": 930, "endTime": 1110 }, { "text": "天氣", "startTime": 1110, "endTime": 1380 }] } }說明header對象中name參數取值為SentenceEnd表示句子識別結束。其餘參數說明參見上述表格。

payload對象參數說明:

參數

類型

說明

index

Integer

句子編號,從1開始遞增。

time

Integer

當前已處理的音頻時間長度,單位:毫秒。

begin_time

Integer

當前句子對應的SentenceBegin事件的時間,單位:毫秒。

result

String

當前句子的識別結果。

words

List< Word >

當前句子的詞資訊,需要將enable_words設定為true。

confidence

Double

當前句子識別結果的信賴度,取值範圍0.0~1.0,值越大表示信賴度越高。

其中,Word對象:

參數

類型

說明

text

String

文本。

startTime

Integer

詞開始時間,單位:毫秒。

endTime

Integer

詞結束時間,單位:毫秒。

4. 結束識別

用戶端通知服務端語音資料發送完畢,服務端識別結束後通知用戶端識別完畢。

錯誤碼

即時語音辨識的錯誤碼資訊,請參見錯誤碼。