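EMR Serverless Spark is compatible with spark-submit command-line parameters, which simplifies running tasks. This topic uses an example to show how to develop a Spark Submit task so that you can get started quickly.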

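Prerequisites

A workspace has been created. For details, see Manage workspaces.

Your business application has been developed and packaged as a JAR file.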

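Procedure

Step 1: Develop the JAR package

To help you get familiar with Spark Submit tasks quickly, this quick start provides project files and a test JAR package that you can download directly and use in the later steps.

Click spark-examples_2.12-3.5.2.jar to download the test JAR package.

spark-examples_2.12-3.5.2.jar is a simple example bundled with Spark that computes the value of pi (π). It must be used with the esr-4.x engine version to submit tasks. If you submit tasks with the esr-5.x engine version, download spark-examples_2.13-4.0.1.jar instead to follow the verification in this topic.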
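For orientation, the following is a minimal local sketch of the kind of computation the SparkPi example performs: it samples random points in a square and counts how many fall inside the inscribed unit circle. It is plain Python, not the Spark implementation; the function name `estimate_pi` and the sample count are assumptions made for illustration only.

```python
import random


def estimate_pi(samples: int, seed: int = 0) -> float:
    """Estimate pi by sampling points uniformly in the square [-1, 1] x [-1, 1].

    The fraction of points inside the unit circle approximates pi / 4.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    inside = 0
    for _ in range(samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / samples


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(200_000)}")
```

The Spark version distributes the sampling across executors, so more samples mainly cost more executor time rather than driver time.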

Step 2: Upload the JAR package to OSS

This example uploads spark-examples_2.12-3.5.2.jar. For the upload procedure, see Simple upload.
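After the upload, the task refers to the JAR by its OSS path. The sketch below shows one way to pick the example JAR that matches an engine version and to form that path. The helper names `example_jar_for` and `oss_uri`, the bucket name `my-bucket`, and the exact engine version strings (such as `esr-4.1.0`) are hypothetical; only the esr-4.x and esr-5.x pairing with the two JAR names comes from this topic.

```python
# Pairing of engine series and example JAR, as stated in Step 1.
JAR_BY_ENGINE_PREFIX = {
    "esr-4.": "spark-examples_2.12-3.5.2.jar",
    "esr-5.": "spark-examples_2.13-4.0.1.jar",
}


def example_jar_for(engine_version: str) -> str:
    """Return the example JAR name for an engine version string (format assumed)."""
    for prefix, jar in JAR_BY_ENGINE_PREFIX.items():
        if engine_version.startswith(prefix):
            return jar
    raise ValueError(f"no example JAR known for engine version {engine_version!r}")


def oss_uri(bucket: str, object_key: str) -> str:
    """Build an oss:// URI from a bucket name and an object key."""
    return f"oss://{bucket}/{object_key.lstrip('/')}"


if __name__ == "__main__":
    jar = example_jar_for("esr-4.1.0")  # hypothetical version string
    print(oss_uri("my-bucket", jar))    # hypothetical bucket name
```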

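Step 3: Develop and run the task

On the EMR Serverless Spark page, click Data Development on the left.

On the Development Directory tab, click the icon for creating a task.

Enter a name, select the task type, and then click OK.

In the upper-right corner, select a queue.

For how to add a queue, see Manage resource queues.

In the editor of the task you created, configure the following information, leave the other parameters unconfigured, and then click Run.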

參數

說明

指令碼

填寫您的Spark Submit指令碼。

例如,指令碼內容如下。

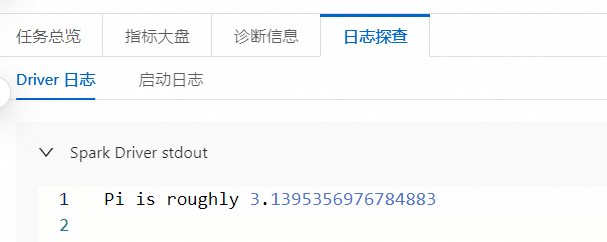

--class org.apache.spark.examples.SparkPi \ --conf spark.executor.memory=2g \ oss://<YourBucket>/spark-examples_2.12-3.5.2.jar在下方的運行記錄地區,單擊任務操作列的日志探查。

在日志探查頁簽,您可以查看相關的日誌資訊。
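The script in this step has a fixed shape: the main class, zero or more `--conf` settings, and then the OSS path of the JAR, joined with backslash line continuations. If you generate such scripts for several tasks, a small helper along the following lines may be convenient. This is a sketch only; `build_submit_script` is a hypothetical name and is not part of EMR Serverless Spark or Spark.

```python
def build_submit_script(main_class: str, jar_uri: str, conf: dict[str, str]) -> str:
    """Assemble Spark Submit script text in the layout used in this topic.

    Each argument goes on its own line; every line except the last ends
    with a backslash continuation.
    """
    lines = [f"--class {main_class}"]
    lines += [f"--conf {key}={value}" for key, value in conf.items()]
    lines.append(jar_uri)  # the application JAR comes last
    return " \\\n".join(lines)


if __name__ == "__main__":
    script = build_submit_script(
        main_class="org.apache.spark.examples.SparkPi",
        jar_uri="oss://<YourBucket>/spark-examples_2.12-3.5.2.jar",
        conf={"spark.executor.memory": "2g"},
    )
    print(script)
```

With the arguments shown, the printed text matches the example script in this step, and you can paste it into the Script field after replacing `<YourBucket>`.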

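Step 4: Publish the task

A published task can be used as the task of a workflow node.

After the task finishes running, click Publish on the right.

In the Publish Task dialog box, you can enter publish information, and then click OK.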

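(Optional) Step 5: View the Spark UI

After the task runs successfully, you can view how it ran on the Spark UI.

In the left-side navigation pane, click Task History.

On the Application page, click Spark UI in the Actions column of the target task.

The Spark UI page opens automatically, where you can view the task details.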

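Related documents

After a task is published, you can use it in workflow scheduling. For details, see Manage workflows. For a complete example of the task orchestration development process, see SparkSQL development quick start.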