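Data Studio is an intelligent lakehouse data development platform that Alibaba built on 15 years of big data experience. It is compatible with multiple Alibaba Cloud compute services and provides intelligent ETL, data catalog management, and cross-engine workflow orchestration. Through personal development environment instances, it supports Python development, Notebook analysis, and Git integration. Data Studio also supports a rich plugin ecosystem. Together these capabilities unify real-time and offline processing, data lakes and data warehouses, and big data and AI, supporting data management across the full "Data+AI" lifecycle.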

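Introduction to Data Studio

Data Studio is an intelligent lakehouse data development platform. It incorporates the big data construction methodology that Alibaba has developed over 15 years. It is deeply integrated with dozens of Alibaba Cloud big data and AI compute services, including MaxCompute, E-MapReduce, Hologres, Flink, and PAI. It provides intelligent ETL development for data warehouses, data lakes, and the OpenLake lakehouse architecture, and supports the following:

Data Catalog: A data catalog with lakehouse metadata management capabilities.

Workflow: A workflow-based development mode that orchestrates real-time and offline data development nodes and AI nodes across dozens of engine types.

Personal development environment: Supports developing and debugging Python nodes and interactive Notebook analysis. It also integrates Git code management and NAS/OSS storage.

Notebook: An intelligent, interactive data development and analysis tool. It supports SQL or Python analysis against multiple data engines, running or debugging code on the fly, and viewing results as visualizations.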

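Enable the New Data Studio

You can enable the new Data Studio in either of the following ways:

When you create a workspace, select the option to use the new Data Studio. For details, see Create a workspace.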

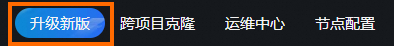

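In the legacy DataStudio, click the Upgrade to New Version button at the top of the DataStudio page. Then follow the on-screen instructions to migrate your data to the new Data Studio.

Regions that support the new Data Studio are China (Hangzhou), China (Shanghai), China (Beijing), China (Zhangjiakou), China (Ulanqab), China (Shenzhen), China (Chengdu), China (Hong Kong), Japan (Tokyo), Singapore, Malaysia (Kuala Lumpur), Indonesia (Jakarta), Thailand (Bangkok), Germany (Frankfurt), UK (London), US (Silicon Valley), and US (Virginia).

If you encounter problems while using the new Data Studio, you can join the dedicated DataWorks Q&A group for upgrading to the new Data Studio.

Data in the new Data Studio and data in the legacy DataStudio are independent of each other. The two are not connected.

Upgrading from the legacy DataStudio to the new Data Studio is irreversible. After a successful upgrade, you cannot roll back to the legacy version. Before you switch, we recommend that you create a workspace with the new Data Studio enabled and use it for testing. Upgrade only after you confirm that the new Data Studio meets your business requirements.

Starting February 19, 2025, the new Data Studio is enabled by default when an Alibaba Cloud account (main account) activates DataWorks and creates a workspace for the first time in a region that supports the new Data Studio. In that case, the legacy DataStudio is no longer available.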

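Go to Data Studio

Go to the DataWorks workspace list page and switch to the target region at the top. Find the target workspace, then click the corresponding entry in the Actions column to go to Data Studio.

This entry is visible only for workspaces that have the new Data Studio enabled. For details, see Enable the New Data Studio.

Data Studio is supported only in Chrome 69 or later on a PC.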

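Main Features of Data Studio

The main features of Data Studio are described below. For background on the terms used, see Appendix: Data Development Concepts.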

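Type | Description |
--- | --- |
Workflow management | DataWorks data development provides a workflow development mode. A workflow is a new development approach that provides a business-oriented, visual DAG development interface for managing complex task projects. Note: In Data Studio, each workspace limits the number of nodes and objects that can be created inside workflows. If the workflows and objects in the current workspace reach the limit, you cannot create more. |
Task development | For the node types that DataWorks supports, see Node development. |
Task scheduling | For more information about scheduling, see Node scheduling configuration. |
Quality control | Provides a standardized task release mechanism and multiple quality control mechanisms that cover a variety of scenarios. |
Other | |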

Data Studio Interface

You can use the Data Studio feature guide to learn about the data development interface and how to use each module.

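Task Development Process

DataWorks data development supports three kinds of tasks for multiple engine types:

- real-time synchronization tasks
- offline scheduled tasks, including offline synchronization tasks and offline processing tasks
- manually triggered tasks

For data synchronization capabilities, see the Data Integration module.

DataWorks workspaces have two modes: standard mode and basic mode. The task development process differs between the two modes. The development process for each mode is illustrated as follows.

Development process in a standard mode workspace

Development process in a basic mode workspace

Basic process: In standard mode, the development process for a scheduled task has five stages: development, debugging, scheduling configuration, release, and operations and maintenance (O&M). For the general task development process, see the new Data Studio process guide.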

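Process control: During development, you can combine several mechanisms to make sure that development work complies with your standards: Data Studio's built-in code review, the preset check items of data governance, and custom validation logic implemented through Open Platform extensions.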
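The process control described above can be pictured as a set of gates that a task must pass before release. The following Python sketch is a hypothetical illustration, not DataWorks code. Each check stands in for one of the mechanisms named above: code review, a governance check item, and a custom extension rule. The `Task` fields and the specific rules are assumptions chosen for the example. Release is blocked if any check reports a problem.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    """Hypothetical task record; these fields are illustrative, not DataWorks fields."""
    name: str
    code: str
    owner: str = ""
    reviewed: bool = False


# A check receives a task and returns a list of problem descriptions (empty = pass).
Check = Callable[[Task], List[str]]


def code_review_check(task: Task) -> List[str]:
    # Stands in for the built-in code review: the change must be approved.
    return [] if task.reviewed else ["code review not approved"]


def governance_check(task: Task) -> List[str]:
    # Stands in for a preset governance check item, e.g. every task needs an owner.
    return [] if task.owner else ["task has no owner"]


def custom_extension_check(task: Task) -> List[str]:
    # Stands in for a custom rule implemented as an extension (hypothetical rule).
    return ["SELECT * is not allowed"] if "select *" in task.code.lower() else []


def release_blockers(task: Task, checks: List[Check]) -> List[str]:
    """Run every check and collect all problems; the task may be released only if none remain."""
    problems: List[str] = []
    for check in checks:
        problems.extend(check(task))
    return problems


CHECKS: List[Check] = [code_review_check, governance_check, custom_extension_check]

if __name__ == "__main__":
    task = Task(name="ods_orders", code="SELECT * FROM orders", owner="alice")
    print(release_blockers(task, CHECKS))
    # ['code review not approved', 'SELECT * is not allowed']
```

The sketch collects every failed check instead of stopping at the first one, so a developer sees all blockers at once. This is a design choice of the example, not a statement about how DataWorks reports check results.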

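Data Development Methods

Data Studio lets you customize the development process in two ways. You can quickly build a data processing flow as a workflow, or you can create individual task nodes manually and then configure the dependencies between them.

For details, see Workflow orchestration.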
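When you create nodes manually, the dependencies you configure determine a valid run order. The following minimal Python sketch assumes that each node simply lists the names of its upstream nodes. The node names are hypothetical, and this is not a DataWorks API. It uses Kahn's algorithm to produce an order in which every node runs after all of its upstream nodes, and it rejects cyclic dependencies, which cannot be scheduled.

```python
from collections import deque
from typing import Dict, List


def run_order(upstreams: Dict[str, List[str]]) -> List[str]:
    """Return an order in which every node comes after all of its upstream nodes.

    `upstreams` maps each node name to the names of the nodes it depends on.
    Raises ValueError for unknown upstream names or a dependency cycle.
    """
    # Count unmet upstream dependencies and record the downstream edges.
    indegree = {node: 0 for node in upstreams}
    downstreams: Dict[str, List[str]] = {node: [] for node in upstreams}
    for node, ups in upstreams.items():
        for up in ups:
            if up not in upstreams:
                raise ValueError(f"{node} depends on unknown node {up}")
            indegree[node] += 1
            downstreams[up].append(node)

    # Start with nodes that have no upstream dependencies.
    ready = deque(node for node, deg in indegree.items() if deg == 0)
    order: List[str] = []
    while ready:
        node = ready.popleft()
        order.append(node)
        # A downstream node becomes ready once all of its upstreams are done.
        for down in downstreams[node]:
            indegree[down] -= 1
            if indegree[down] == 0:
                ready.append(down)

    if len(order) != len(upstreams):
        raise ValueError("dependency cycle detected")
    return order


if __name__ == "__main__":
    # Hypothetical nodes: one sync node feeds two cleaning nodes, which feed a report.
    dag = {
        "sync_orders": [],
        "clean_orders": ["sync_orders"],
        "clean_users": ["sync_orders"],
        "daily_report": ["clean_orders", "clean_users"],
    }
    print(run_order(dag))
    # ['sync_orders', 'clean_orders', 'clean_users', 'daily_report']
```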

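Node Types Supported by Data Studio

Data Studio supports dozens of node types, including Data Integration, MaxCompute, Hologres, EMR, Flink, Python, Notebook, and ADB nodes. Many of these node types support periodic scheduling, so you can choose the nodes that fit your business needs. For the full list of node types that DataWorks supports, see Supported node types.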

Appendix: Data Development Concepts

Task development

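Concept | Description |
--- | --- |
Workflow | A new development approach that provides a business-oriented, visual DAG development interface for managing complex task projects. A workflow can orchestrate dozens of node types, including Data Integration, MaxCompute, Hologres, EMR, Flink, Python, Notebook, and ADB nodes. It supports workflow-level scheduling configuration, and both periodically scheduled workflows and triggered workflows are supported. |
Manual workflow | A collection of tasks, tables, resources, and functions for a specific business need. Tasks in a manual workflow must be triggered manually, whereas tasks in a periodic workflow are triggered automatically on a schedule. |
Task node | The basic execution unit of DataWorks. Data Studio provides many node types: Data Integration nodes for data synchronization; engine compute nodes for data cleansing (for example, ODPS SQL, Hologres SQL, and EMR Hive); and general-purpose nodes that add complex logic around engine compute nodes. Examples of general-purpose nodes are the virtual node, which can organize multiple nodes, and the do-while node, which can run code in a loop. Combining these nodes lets you meet different data processing requirements. |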
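The do-while node in the table above runs its logic in a loop. The following Python sketch illustrates only generic do-while semantics and is not how DataWorks implements the node. The body always runs at least once, and the loop continues while the body reports that another round is needed. The `max_iterations` cap is a hypothetical safeguard, not a documented DataWorks limit. The batch workload in the usage part is also made up for illustration.

```python
from typing import Callable, List


def do_while(body: Callable[[int], bool], max_iterations: int = 100) -> int:
    """Run `body(i)` at least once, repeating while it returns True.

    `max_iterations` is a hypothetical safety cap, not a documented DataWorks limit.
    Returns the number of iterations executed.
    """
    i = 0
    while True:
        keep_going = body(i)  # the body always runs before the condition is checked
        i += 1
        if not keep_going or i >= max_iterations:
            return i


if __name__ == "__main__":
    # Hypothetical workload: process pending batches until none remain.
    pending: List[List[int]] = [[1, 2], [3], [4, 5, 6]]
    processed: List[int] = []

    def process_batch(i: int) -> bool:
        if pending:
            processed.extend(pending.pop(0))
        return bool(pending)  # continue while batches remain

    print(do_while(process_batch))  # 3
    print(processed)  # [1, 2, 3, 4, 5, 6]
```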

Task scheduling

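Concept | Description |
--- | --- |
Dependency | Dependencies define the order in which tasks run. If node B can run only after node A has run, A is an upstream dependency of B; equivalently, B depends on A. In a DAG, a dependency is shown as an arrow between nodes. |
Output name | The name of a task's output. It is a virtual entity that connects an upstream task to a downstream task when you configure dependencies within a single tenant (Alibaba Cloud account). When you make a task upstream or downstream of other tasks, you must configure the dependency by output name, not by node name or node ID. Once the dependency is configured, the task's output name also serves as the input name of its downstream nodes. |
Output table name | We recommend setting the output table name to the table that the current task produces. A correct output table name lets downstream tasks confirm, when they configure dependencies, that the data comes from the expected upstream table. Do not manually modify an output table name that was generated by automatic parsing. The output table name is only an identifier. Changing it does not change the table that the SQL script actually produces, which is determined by the SQL logic. Note: The output name of a node must be globally unique, but the output table name has no such restriction. |
Scheduling resource group | The resource group used to run scheduled tasks. |
Scheduling parameters | Variables in code whose values are assigned dynamically when the code runs on a schedule. Code that runs repeatedly may need information about its runtime environment, such as the date or time. In that case, you can assign values to its variables dynamically based on the scheduling parameters defined by the DataWorks scheduling system. |
Business date | Usually the date directly associated with a business activity, reflecting when the business data actually occurred. This concept is especially important in offline computing. For example, in retail, to calculate the turnover for October 10, 2024 (20241010), you typically start the computation in the early morning of October 11, 2024 (20241011). The result is the turnover for 20241010, so 20241010 is the business date. |
Scheduled time | The time at which a user expects a periodic task to run, accurate to the minute. Important: Many factors affect when a task actually runs, so a task does not necessarily start as soon as its scheduled time arrives. Before a task runs, DataWorks checks three conditions: whether its upstream tasks have run successfully, whether the scheduled time has been reached, and whether scheduling resources are sufficient. The task is triggered only after all of these conditions are met. |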
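The output name row above describes dependencies that are resolved by output name rather than by node name or ID, with output names required to be unique. The following minimal Python sketch models this as a lookup table. The node IDs and output names are hypothetical, and the `project.table` naming style is only an example, not necessarily the format DataWorks uses.

```python
from typing import Dict, List


def build_output_index(outputs: Dict[str, List[str]]) -> Dict[str, str]:
    """Map each output name to the node that declares it.

    `outputs` maps a node ID to its output names. Raises ValueError on a
    duplicate, because output names must be globally unique.
    """
    index: Dict[str, str] = {}
    for node_id, names in outputs.items():
        for name in names:
            if name in index:
                raise ValueError(f"output name {name!r} used by {index[name]} and {node_id}")
            index[name] = node_id
    return index


def resolve_upstreams(inputs: List[str], index: Dict[str, str]) -> List[str]:
    """Turn a node's input names (upstream output names) into upstream node IDs."""
    missing = [name for name in inputs if name not in index]
    if missing:
        raise KeyError(f"no node produces {missing}")
    return [index[name] for name in inputs]


if __name__ == "__main__":
    index = build_output_index({
        "node_101": ["my_project.ods_orders"],
        "node_102": ["my_project.dim_users"],
    })
    # The downstream node refers to upstream outputs, not to node_101 or node_102.
    print(resolve_upstreams(["my_project.ods_orders", "my_project.dim_users"], index))
    # ['node_101', 'node_102']
```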
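The business date and scheduling parameters work together in the common daily offline case. The following Python sketch makes two assumptions. First, it assumes the business date is the calendar day before the day the instance is scheduled to run, as in the 20241010/20241011 example in the table. Second, it uses a hypothetical `${bizdate}` placeholder filled in with Python's `string.Template`. DataWorks's own parameter syntax and substitution rules are documented separately and may differ.

```python
from datetime import datetime, timedelta
from string import Template


def business_date(scheduled_time: datetime) -> str:
    """Return the business date (yyyymmdd) for an instance scheduled at `scheduled_time`.

    Assumption: for a daily offline task, the business date is the day before
    the scheduled run date.
    """
    return (scheduled_time.date() - timedelta(days=1)).strftime("%Y%m%d")


def render_code(code: str, scheduled_time: datetime) -> str:
    """Replace the hypothetical ${bizdate} placeholder with the computed business date."""
    return Template(code).substitute(bizdate=business_date(scheduled_time))


if __name__ == "__main__":
    sql = "SELECT SUM(amount) FROM sales WHERE ds = '${bizdate}';"
    # The instance runs in the early morning of 2024-10-11 ...
    run_at = datetime(2024, 10, 11, 0, 30)
    # ... and computes the turnover for business date 20241010.
    print(render_code(sql, run_at))
    # SELECT SUM(amount) FROM sales WHERE ds = '20241010';
```

Deriving the date from the scheduled time rather than the wall-clock time means a rerun of an old instance still processes its original business date.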
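The scheduled time entry above lists three conditions that must all hold before a task is triggered. The following Python sketch encodes only that rule. The `Instance` fields and the `"SUCCESS"` status string are hypothetical simplifications, and the free-slot count is a stand-in for "scheduling resources are sufficient". None of these are DataWorks data structures.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class Instance:
    """Hypothetical, simplified view of one scheduled task instance."""
    name: str
    scheduled_time: datetime
    upstream_statuses: List[str] = field(default_factory=list)


def ready_to_run(inst: Instance, now: datetime, free_slots: int) -> bool:
    """Return True only when all three trigger conditions are met."""
    upstreams_ok = all(status == "SUCCESS" for status in inst.upstream_statuses)
    time_reached = now >= inst.scheduled_time
    resources_ok = free_slots > 0  # stand-in for sufficient scheduling resources
    return upstreams_ok and time_reached and resources_ok


if __name__ == "__main__":
    inst = Instance("daily_report", datetime(2024, 10, 11, 2, 0), ["SUCCESS", "RUNNING"])
    now = datetime(2024, 10, 11, 2, 5)
    # The scheduled time has passed, but one upstream task is still running.
    print(ready_to_run(inst, now, free_slots=4))  # False
    inst.upstream_statuses = ["SUCCESS", "SUCCESS"]
    print(ready_to_run(inst, now, free_slots=4))  # True
    print(ready_to_run(inst, now, free_slots=0))  # False: no resources available
```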