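SAP HANA High Availability Deployment Guide

Release history

| Version | Revision date | Changes | Release date |
| --- | --- | --- | --- |
| 1.0 | 2018/9/7 | | |
| 2.0 | 2019/4/23 | 1. Optimized the deployment process and document structure 2. Updated all screenshots 3. Added the LVM configuration process for the SAP HANA file systems | 2019/4/23 |
| 2.1 | 2019/7/4 | 1. Updated the deployment architecture 2. Optimized the NAS parameters | 2019/7/4 |
| 2.2 | 2020/10/27 | 1. Updated the SAP HANA certified instance specifications | 2020/10/27 |
| 2.3 | 2020/12/10 | 1. Added operating system and storage configuration content, such as setting the clock source and disabling automatic hostname configuration by DHCP 2. Updated the SAP HANA certified instance specifications | 2020/12/10 |
| 2.4 | 2021/05/28 | | 2021/05/28 |
| 2.5 | 2021/06/08 | 1. Optimized the file system planning and configuration | 2021/06/08 |
| 2.6 | 2021/11/11 | 1. Updated the fence_aliyun sbd algorithm 2. Optimized the deployment of cloud products | 2021/11/11 |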

概要

このドキュメントでは、Alibaba Cloud のゾーン内に SAP HANA 高可用性 (HA) をデプロイする方法について説明します。

このドキュメントは、SAP の標準ドキュメントの代わりとなるものではありません。このドキュメントに記載されているインストール方法とデプロイ方法は、参考情報としてのみ提供されています。デプロイ前に、公式の SAP インストールおよび構成ドキュメントと、このドキュメントで推奨されている SAP ノートをお読みになることをお勧めします。

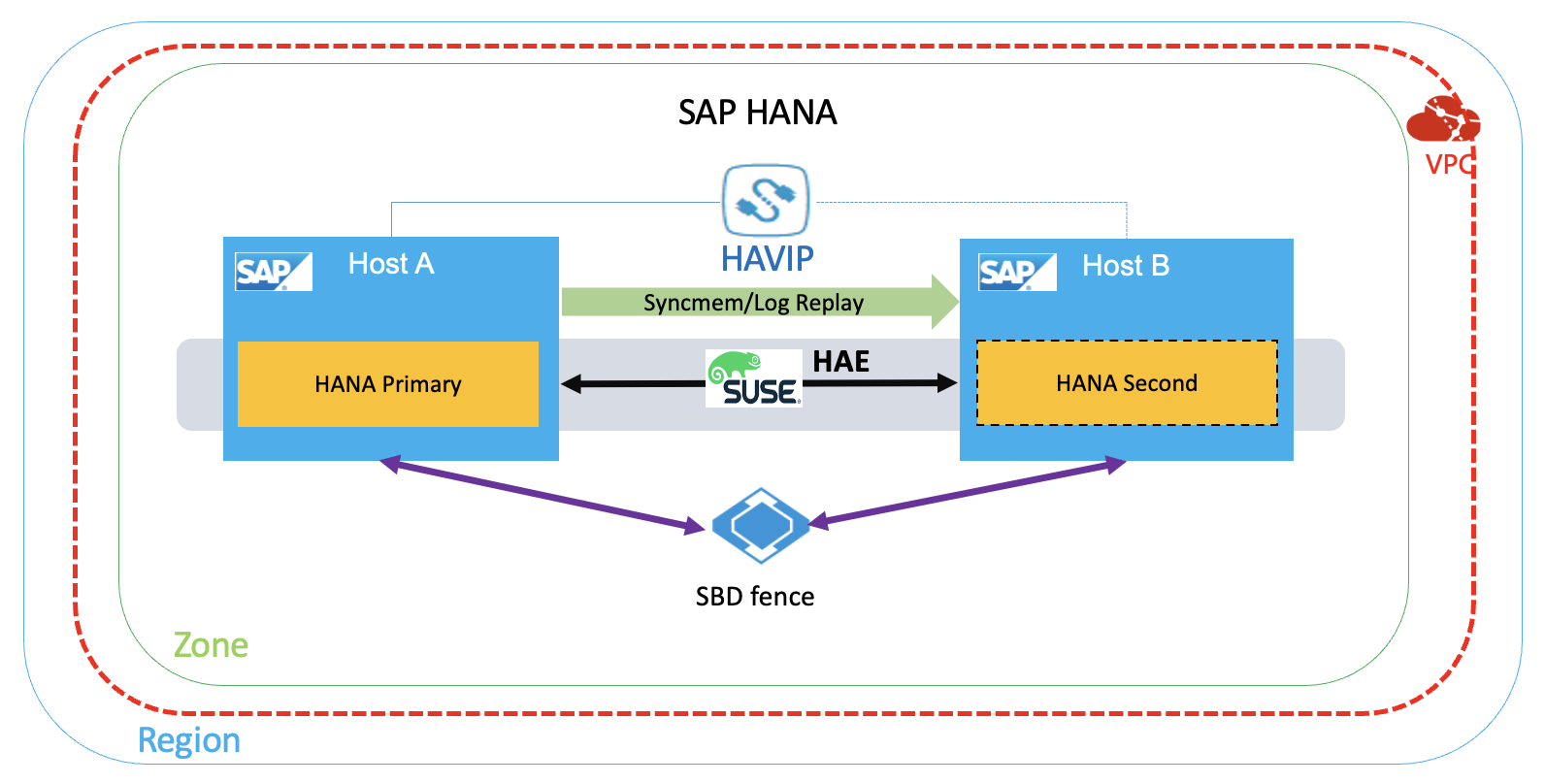

HANA HA アーキテクチャ

このデプロイメントのアーキテクチャは次のとおりです。

Preparation

Installation media

| Access method | How it works | Remarks |
| --- | --- | --- |
| Direct upload | Upload the package to ECS. | |
| OSS + ossutil | Upload the package to OSS, then download it to ECS. | |

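VPC network planning

| Network | Location | Purpose | Assigned subnet |
| --- | --- | --- | --- |
| Service network | China East 2, Zone F | Business traffic and system replication (SR) | 192.168.10.0/24 |
| Heartbeat network | China East 2, Zone F | HA | 192.168.20.0/24 |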

Host planning

| Hostname | Role | Heartbeat address | Service address | Virtual address |
| --- | --- | --- | --- | --- |
| saphana-01 | HANA primary node | 192.168.20.19 | 192.168.10.168 | 192.168.10.12 |
| saphana-02 | HANA secondary node | 192.168.20.20 | 192.168.10.169 | 192.168.10.12 |
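As a quick sanity check of the plan, the sketch below verifies that each planned address falls inside its intended subnet. The helper names `in_subnet_24` and `check_plan` are hypothetical, the prefix comparison only works for /24 networks, and the heartbeat addresses are the ones used in this guide's /etc/hosts example (192.168.20.19 and 192.168.20.20).

```shell
# Hypothetical helper: succeed if IPv4 address $1 lies inside the /24 network $2.
in_subnet_24() {
  ip=$1
  net=${2%/*}                      # strip the "/24" suffix
  [ "${ip%.*}" = "${net%.*}" ]     # compare the first three octets
}

# Read "host heartbeat_ip service_ip" lines from stdin and report mismatches.
check_plan() {
  rc=0
  while read -r host hb svc; do
    in_subnet_24 "$hb" 192.168.20.0/24 || { echo "$host: heartbeat $hb outside 192.168.20.0/24"; rc=1; }
    in_subnet_24 "$svc" 192.168.10.0/24 || { echo "$host: service $svc outside 192.168.10.0/24"; rc=1; }
  done
  return $rc
}

# Planned addresses of the two nodes; prints nothing when every address matches.
check_plan <<'EOF'
saphana-01 192.168.20.19 192.168.10.168
saphana-02 192.168.20.20 192.168.10.169
EOF
```

A check like this is cheap to run before the ENIs are created, when a wrong address is still easy to correct.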

File system planning

The file systems in this example are laid out as follows.

| Property | File system size | Cloud disk capacity * count | Cloud disk type | File system | VG | LVM striping | Mount point |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Data disk | 900G | 300G * 3 | SSD or ESSD | XFS | datavg | Yes | /hana/data |
| Data disk | 400G | 400G * 1 | SSD or ESSD | XFS | logvg | No | /hana/log |
| Data disk | 300G | 300G * 1 | SSD or ESSD | XFS | sharedvg | No | /hana/shared |
| Data disk | 50G | 50G * 1 | Ultra disk | XFS | sapvg | No | /usr/sap |

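Create the VPC

Virtual Private Cloud (VPC) is an isolated network environment built on Alibaba Cloud. VPCs are logically isolated from one another. A VPC is your dedicated private network in the cloud, and you have full control over it, including choosing the IP address range and configuring route tables and gateways. For details and related documentation, see the product documentation.

Log on to the [VPC console] and click Create VPC. Create the VPC, the SAP service subnet, and the heartbeat subnet as planned.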

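Create the SAP HANA ECS instances

Create the ECS instance for the SAP HANA primary node

ECS purchase page

Go to the [ECS product page] and open the purchase page. Select an instance type under SAP HANA and click Buy.

Select a billing method

Select one of the following billing methods: subscription or pay-as-you-go.

Select a region and zone

Select a region and zone. By default, the zone is assigned randomly, but you can choose a zone as needed. For details about selecting regions and zones, see "Regions and zones". In this example, China East 2, Zone A is selected.

Select an instance specification

For the SAP HANA certified instance types available to date, see "SAP HANA certified ECS instances". You can also visit the official SAP website for "Certified and Supported SAP HANA Hardware".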

Select an image

You can select a public, custom, or shared image, or select an image from the marketplace.

Select the SAP HANA image type and version as needed. Click "Select from image marketplace" to open the image marketplace and search for the keyword "sap". This example uses the "SUSE Linux Enterprise Server for SAP 12 SP3" image.

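Configure storage

System disk: required. It is used to install the operating system. You must specify the cloud disk type and capacity of the system disk.

Data disk: optional. If you create cloud disks as data disks, you must specify the cloud disk type, capacity, quantity, and whether to encrypt the disks. You can create an empty cloud disk or create a cloud disk from a snapshot. Up to 16 cloud disks can be configured as data disks.

Adjust the data disk capacity according to the number of HANA instances.

In this example, /hana/data is striped with LVM across three SSDs or ESSDs of the same capacity to meet the SAP HANA performance requirements. /hana/log and /hana/shared each use a single SSD or ESSD, and all file systems are XFS.

For details about SAP HANA storage requirements, see "Sizing SAP HANA".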

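Select a network type

Click Next: [Network and Security Group] to configure the network and security group.

1. Select the network type.

Select the VPC and the business CIDR block as planned.

2. Set the public network bandwidth.

Set an appropriate public network bandwidth as needed.

Select a security group

Select a security group. If you do not create a security group, keep the default security group. For the rules of the default security group, see "Default security group rules".

ENI configuration

The second ENI must be added after the ECS instance has been created successfully.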

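Configure the deployment set

When you create ECS instances in a specified deployment set, the instances in that set are strictly distributed across different physical servers. This keeps the service highly available when an exception such as a hardware failure occurs.

For details about deployment sets, see "Deployment sets".

In this example, the two SAP HANA instances of the HA architecture are added to the deployment set S4HANA_HA. We also recommend that you use this method to manage the ECS instances of SAP ASCS and SCS high availability deployments.

Select an existing deployment set. If no deployment set is available, go to the [ECS console] to create one.

After the deployment set is created, return to the page, select the deployment set, and complete the system configuration and grouping settings.

Create the ECS instance for the SAP HANA secondary node

Follow the steps above to create the ECS instance for the SAP HANA secondary node.

The ECS instances of the SAP HANA primary node and the SAP HANA secondary node must be deployed in the same deployment set.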

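Deploy the cloud resources

Configure the ENIs

An ENI is a virtual network card that can be attached to an ECS instance in a VPC. With ENIs you can build high availability clusters, implement failover at low cost, and achieve fine-grained network management. ENIs are supported in all regions. For details, see "ENI".

Create the ENIs

In this example, create an ENI for each ECS instance as planned.

[1] Log on to the [ECS console]. In the left-side navigation pane, choose [Network & Security > ENI], select the region, and click [Create ENI].

[2] Select the corresponding VPC and vSwitch as planned. After the ENI is created, bind it to the HANA ECS instance.

[3] Log on to the operating system and configure the NIC.

Log on to the SUSE graphical desktop and open the network configuration, or run yast2 network from a terminal. Configure the static IP address and subnet mask of the new ENI as planned, and make sure that the ENI is active. You can run the following command to query the ENI configuration and status.

ip addr sh

If you need to change the internal IP address of the primary NIC, see "Primary private IP address".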
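The status check can also be scripted. The following is a minimal sketch, assuming the one-line-per-address output format of `ip -o -4 addr show`. `has_addr` is a hypothetical helper name, and the address in the usage comment is the heartbeat address of saphana-01 from this guide's /etc/hosts example.

```shell
# Succeed if the IPv4 address given as $1 appears in `ip -o -4 addr show`
# output read from stdin (fields: index, interface, "inet", address/prefix, ...).
has_addr() {
  awk -v want="$1" '
    $3 == "inet" { split($4, a, "/"); if (a[1] == want) found = 1 }
    END { exit !found }
  '
}

# Usage on a node (not executed here):
#   ip -o -4 addr show | has_addr 192.168.20.19 && echo "heartbeat ENI configured"
```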

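Configure the HaVip

A private high-availability virtual IP address (HaVip) is a private IP resource that can be created and released independently. What makes a HaVip distinctive is that an ECS instance can announce the IP address by using ARP. In this deployment, the HaVip serves as the virtual IP address of the cluster and is attached to each node in the cluster.

Create the HaVip

The HaVip is the IP address on the service subnet through which the HANA instance provides its services.

Log on to the [VPC console], choose [High-Availability Virtual IP (HAVIP)], and click [Create HAVIP]. Select a vSwitch in the business CIDR block (primary NIC), and choose automatic or manual IP address assignment as needed.

To apply for this product, contact an Alibaba Cloud solution architect (SA).

Associate the SAP HANA primary and secondary nodes

Go to the management page of the HaVip that you created and bind the two ECS instances in the cluster.

After the initial binding, both ECS instances are in the standby state. The correct status appears only after the SUSE HAE cluster software takes over the resource.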

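Configure fencing

Alibaba Cloud provides two solutions for implementing fencing in SAP system deployments. We recommend Solution 1: shared block storage. If the selected region does not support shared block storage, choose Solution 2: fence_aliyun.

Solution 1: shared block storage

ECS shared block storage is a block-level storage device that multiple ECS instances can read from and write to concurrently. It offers high concurrency, high performance, and high reliability. A single device can be attached to up to 16 ECS instances.

As the SBD device of the HA cluster, select shared block storage in the same region and zone as the ECS instances, and attach it to the ECS instances in the HA cluster.

To apply for this product, contact an Alibaba Cloud solution architect (SA).

[1] Create the shared block storage

Log on to the [ECS console], choose [Storage & Snapshots], click [Shared Block Storage], and create the shared block storage in the same region and zone.

After the shared block storage device is created, return to the [Shared Block Storage console] and attach it to the two ECS instances in the HA cluster.

[2] Configure the shared block storage

Log on to the operating system and check the disk information.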

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 100G 0 disk

└─vda1 253:1 0 100G 0 part /

vdb 253:16 0 500G 0 disk

vdc 253:32 0 500G 0 disk

vdd 253:48 0 500G 0 disk

vde 253:64 0 64G 0 disk

vdf 253:80 0 20G 0 disk

In this example, the disk /dev/vdf is the shared block storage device.

Configure the watchdog (on both cluster nodes)

echo "modprobe softdog" >> /etc/init.d/boot.local

echo "softdog" > /etc/modules-load.d/watchdog.conf

modprobe softdog

# Verify the watchdog configuration

ls -l /dev/watchdog

crw------- 1 root root 10, 130 Apr 23 12:09 /dev/watchdog

lsmod | grep -e wdt -e dog

softdog 16384 0

grep -e wdt -e dog /etc/modules-load.d/watchdog.conf

softdog

Configure SBD (on both cluster nodes)

sbd -d /dev/vdf -4 60 -1 30 create

# Set the SBD parameters

vim /etc/sysconfig/sbd

# Replace the SBD_DEVICE value with the device ID of the shared block storage device

SBD_DEVICE="/dev/vdf"

SBD_STARTMODE="clean"

SBD_OPTS="-W"

Check the SBD status

Check the SBD status on both nodes.

sbd -d /dev/vdf list

Confirm that the SBD status is clear on both SAP HANA nodes.

sbd -d /dev/vdf list

0 saphana-01 clear

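1 saphana-02 clear

Verify the SBD configuration

Confirm that a fenced node is actually taken down. Note that this operation restarts the node.

In this example, log on to the primary node saphana-01.

sbd -d /dev/vdf message saphana-02 reset

If the secondary node saphana-02 restarts normally, the configuration is successful.

After the secondary node restarts, you must manually reset its slot to the clear state.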

sbd -d /dev/vdf list

0 saphana-01 clear

1 saphana-02 reset saphana-01

sbd -d /dev/vdf message saphana-02 clear

sbd -d /dev/vdf list

0 saphana-01 clear

1 saphana-02 clear saphana-01

Solution 2: fence_aliyun

fence_aliyun is an open-source fence agent developed for the Alibaba Cloud platform. It isolates the failed nodes of an SAP system in a high availability environment. By calling Alibaba Cloud APIs, it flexibly schedules and manages Alibaba Cloud resources, which allows SAP systems deployed in the same zone to meet the high availability requirements of core SAP applications.

SUSE Linux Enterprise Server for SAP Applications 12 SP4 and later natively integrate the fence_aliyun component. With these versions you can use it directly for high availability deployments of SAP systems on the Alibaba Cloud public cloud, without any additional download or installation.

[1] Prepare the environment

This example uses an Alibaba Cloud SUSE CSP image to download or update the SUSE components. The image can connect directly to the SUSE SMT update source.

If you use a custom image, see "How to register SLES using the SUSEConnect command line tool" to connect to the official SUSE update repository.

Installing open-source software such as python requires Internet access. Make sure that an EIP is configured for the ECS instance or for a NAT gateway.

Install python and pip

fence_aliyun supports only Python 3.6 and later. Make sure that the minimum version requirement is met.

# Check the Python 3 version.

python3 -V

Python 3.6.15

# Check the version of pip, the Python package manager.

pip -V

pip 21.2.4 from /usr/lib/python3.6/site-packages/pip (python 3.6)

If Python 3 is not installed, or the installed version is earlier than Python 3.6, you must install Python 3.6 or later.
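The minimum-version check can be automated. The following is a minimal sketch that relies on `sort -V` (version sort, available in GNU coreutils, which is assumed here). `version_ge` and `python_ok` are hypothetical helper names.

```shell
# Succeed if version $1 is greater than or equal to version $2.
version_ge() {
  # The smaller of the two versions sorts first; $1 >= $2 when that is $2.
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# $1 is the output of `python3 -V`, for example "Python 3.6.15".
python_ok() {
  version_ge "${1#Python }" 3.6
}

# Usage (not executed here):
#   python_ok "$(python3 -V 2>&1)" || echo "Python 3.6 or later is required"
```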

The following example installs Python 3.6.15.

# Install Python 3.6

wget https://www.python.org/ftp/python/3.6.15/Python-3.6.15.tgz

tar -xf Python-3.6.15.tgz

cd Python-3.6.15

./configure

make && make install

# Verify the installation

python3 -V

# Install pip

curl https://bootstrap.pypa.io/pip/3.6/get-pip.py -o get-pip.py

python3 get-pip.py

# Verify the installation

pip3 -V

[2] Install the aliyun SDK

Make sure that aliyun-python-sdk-core is version 2.13.35 or later and that aliyun-python-sdk-ecs is version 4.24.8 or later.

python3 -m pip install --upgrade pip

pip3 install --upgrade aliyun-python-sdk-core

pip3 install --upgrade aliyun-python-sdk-ecs

# Install the dependency packages (pycurl is built against the libcurl headers)

zypper install libcurl-devel

pip3 install pycurl pexpect

# Verify the installation

pip3 list | grep aliyun-python

aliyun-python-sdk-core 2.13.35

aliyun-python-sdk-core-v3 2.13.32

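aliyun-python-sdk-ecs 4.24.8

[3] Configure the RAM role

fence_aliyun uses a RAM role to obtain the status of cloud resources such as ECS instances, and to start or stop instances.

Log on to the [Alibaba Cloud console] and open the access control policies. On the [Policies] page, click [Create Policy].

In this example, the policy name is SAP-HA-ROLE-POLICY. The policy content is as follows.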

{

"Version": "1",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ecs:StartInstance",

"ecs:StopInstance",

"ecs:RebootInstance",

"ecs:DescribeInstances"

],

"Resource": [

"acs:ecs:*:*:instance/*"

]

}

]

}

Grant the permission policy to the role

Return to the console, choose [Roles], and find AliyunECSAccessingHBRRole. Click [Add Permissions] and add SAP-HA-ROLE-POLICY to the AliyunECSAccessingHBRRole role.

Attach the RAM role to the ECS instances

In the [ECS console], choose [More] and then [Details] for the instance, and select AliyunECSAccessingHBRRole or create the role manually.

[4] Install and configure fence_aliyun

Download the latest version of fence_aliyun

Downloading fence_aliyun requires access to GitHub. Make sure that the network environment of the ECS instance can reach GitHub. If you run into problems, [submit a ticket] for help.

curl https://raw.githubusercontent.com/ClusterLabs/fence-agents/master/agents/aliyun/fence_aliyun.py > /usr/sbin/fence_aliyun

# Set the permissions

chmod 755 /usr/sbin/fence_aliyun

chown root:root /usr/sbin/fence_aliyun

Adapt to the user environment

# Set the interpreter to python3

sed -i "1s|@PYTHON@|$(which python3 2>/dev/null || which python 2>/dev/null)|" /usr/sbin/fence_aliyun

# Set the lib directory of the fence agents

sed -i "s|@FENCEAGENTSLIBDIR@|/usr/share/fence|" /usr/sbin/fence_aliyun

Verify the installation

# Use fence_aliyun to query the running status of an ECS instance

# Syntax:

# fence_aliyun --region [region ID] --ram-role [RAM role] --action status --plug '[ECS instance ID]'

# Example:

fence_aliyun --region cn-beijing --ram-role AliyunECSAccessingHBRRole --action status --plug 'i-xxxxxxxxxxxxxxxxxxxx'

# If the configuration is correct, the instance status is returned. For example:

Status: ON

For details about regions and region IDs, see "Regions and zones".
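Because each node must be able to fence the other, it helps to run the status query against both instances. The following is a minimal sketch that only interprets the `Status: ON` line shown above. `is_status_on` is a hypothetical helper name and the instance IDs in the usage comment are placeholders.

```shell
# Succeed if fence_aliyun status output read from stdin contains the line "Status: ON".
is_status_on() {
  grep -qx 'Status: ON'
}

# Usage (not executed here); replace the placeholder instance IDs with your own:
#   for id in i-xxxxxxxxxxxxxxxxxxxx i-yyyyyyyyyyyyyyyyyyyy; do
#     fence_aliyun --region cn-beijing --ram-role AliyunECSAccessingHBRRole \
#       --action status --plug "$id" | is_status_on \
#       || echo "$id: not running, or the status query failed"
#   done
```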

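Configure the operating system

Configure hostname resolution

On both ECS instances in the HA cluster, configure hostname resolution between the two SAP HANA servers.

In this example, the file /etc/hosts contains the following entries.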

127.0.0.1 localhost

# Service network

192.168.10.168 saphana-01 saphana-01

192.168.10.169 saphana-02 saphana-02

# Heartbeat network

192.168.20.19 hana-ha01 hana-ha01

192.168.20.20 hana-ha02 hana-ha02

Configure passwordless SSH

Passwordless SSH must be configured between the two HANA servers, as follows.

Configure the authentication public key

Run the following commands on the HANA primary node.

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.10.169

Run the following commands on the HANA secondary node.

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.10.168

Verify the configuration

Verify passwordless SSH by logging on from each node to the other over SSH. If neither logon prompts for a password, the configuration is successful.
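The verification can be done non-interactively. The following is a minimal sketch that uses the OpenSSH option `BatchMode=yes`, which makes ssh fail instead of prompting for a password. `check_passwordless` is a hypothetical helper name, and the `SSH` override variable is an assumption of this sketch that exists only to make the function easy to try out.

```shell
# Succeed if a key-based logon to $1 (for example root@192.168.10.169)
# works without any password prompt.
check_passwordless() {
  "${SSH:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Usage (not executed here):
#   On saphana-01: check_passwordless root@192.168.10.169 && echo "passwordless SSH OK"
#   On saphana-02: check_passwordless root@192.168.10.168 && echo "passwordless SSH OK"
```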

Disable automatic hostname configuration by DHCP

Run the following command on both the HANA primary node and the HANA secondary node.

sed -i '/DHCLIENT_SET_HOSTNAME/ c\DHCLIENT_SET_HOSTNAME="no"' /etc/sysconfig/network/dhcp

Disable automatic updates of the /etc/hosts file

sed -i "s/^ - update_etc_hosts/#- update_etc_hosts/" /etc/cloud/cloud.cfg

Set the clock source to tsc

[1] Check the current clock source configuration

cat /sys/devices/system/clocksource/clocksource0/current_clocksource

kvm-clock

[2] Query the available clock sources

cat /sys/devices/system/clocksource/clocksource0/available_clocksource

kvm-clock tsc acpi_pm

[3] Set the clock source to tsc

sudo bash -c 'echo tsc > /sys/devices/system/clocksource/clocksource0/current_clocksource'

If the setting succeeds, the kernel messages show the following information.

dmesg | less

clocksource: Switched to clocksource tsc

[4] Configure tsc as the clock source at system startup

Back up and edit /etc/default/grub, and add clocksource=tsc tsc=reliable to GRUB_CMDLINE_LINUX.

GRUB_CMDLINE_LINUX=" net.ifnames=0 console=tty0 console=ttyS0,115200n8 clocksource=tsc tsc=reliable"

# Generate the grub.cfg file

grub2-mkconfig -o /boot/grub2/grub.cfg

Log on to the [ECS console] and restart the ECS instance for the change to take effect.
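After the restart, it is worth confirming that tsc is both active and persistent. The following is a minimal sketch. `clocksource_is_tsc` and `grub_requests_tsc` are hypothetical helper names, and the optional path arguments exist only so that the helpers can be tried on sample files.

```shell
# Succeed if the active clock source is tsc.
# $1 (optional): directory that contains current_clocksource.
clocksource_is_tsc() {
  dir=${1:-/sys/devices/system/clocksource/clocksource0}
  [ "$(cat "$dir/current_clocksource" 2>/dev/null)" = "tsc" ]
}

# Succeed if GRUB_CMDLINE_LINUX in the grub defaults file requests clocksource=tsc.
# $1 (optional): path of the grub defaults file.
grub_requests_tsc() {
  grep -q '^GRUB_CMDLINE_LINUX=.*clocksource=tsc' "${1:-/etc/default/grub}"
}

# Usage after the restart (not executed here):
#   clocksource_is_tsc && grub_requests_tsc && echo "tsc is active and persistent"
```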

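ECS Metrics Collector for SAP

ECS Metrics Collector is a monitoring agent that SAP systems use on Alibaba Cloud. It collects the virtual machine configuration and the underlying physical resource usage information that are needed for later performance statistics and problem analysis.

You must install the Metrics Collector for every SAP application and database. For details about deploying the monitoring agent, see "ECS Metrics Collector for SAP Deployment Guide".

Partition the SAP HANA file systems

Following the file system plan above, use LVM to manage and configure the cloud disks.

For details about LVM, see "LVM HOWTO".

Create the PVs and VGs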

pvcreate /dev/vdb /dev/vdc /dev/vdd /dev/vde /dev/vdf /dev/vdg

vgcreate datavg /dev/vdb /dev/vdc /dev/vdd

vgcreate logvg /dev/vde

vgcreate sharedvg /dev/vdf

vgcreate sapvg /dev/vdg

Create the LVs

# Create a stripe across the three 300 GB SSDs in datavg

lvcreate -l 100%FREE -n datalv -i 3 -I 256 datavg

lvcreate -l 100%FREE -n loglv logvg

lvcreate -l 100%FREE -n sharedlv sharedvg

lvcreate -l 100%FREE -n usrsaplv sapvg

Create the mount points and format the file systems

mkdir -p /usr/sap /hana/data /hana/log /hana/shared

mkfs.xfs /dev/sapvg/usrsaplv

mkfs.xfs /dev/datavg/datalv

mkfs.xfs /dev/logvg/loglv

mkfs.xfs /dev/sharedvg/sharedlv

Mount the file systems and add them to the startup configuration

vim /etc/fstab

# Add the following entries:

/dev/mapper/datavg-datalv /hana/data xfs defaults 0 0

/dev/mapper/logvg-loglv /hana/log xfs defaults 0 0

/dev/mapper/sharedvg-sharedlv /hana/shared xfs defaults 0 0

/dev/mapper/sapvg-usrsaplv /usr/sap xfs defaults 0 0

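# Mount the file systems

mount -a

Install and configure SAP HANA

Install SAP HANA

The system ID and the instance ID must be the same on the SAP HANA primary node and secondary node. In this example, the SAP HANA system ID is H01 and the instance ID is 00.

For the installation and configuration of SAP HANA, see "SAP HANA Platform".

Configure HANA System Replication

Install and configure SLES Cluster HA

Install SUSE HAE

For details about operating SUSE HAE, see "SUSE product documentation".

Check whether SUSE HAE and SAPHanaSR are installed on the primary node and the secondary node of the SAP HANA database.

This example uses a paid SUSE image. The image is preconfigured to use the SUSE SMT server, which can be used to check and install the components. If you use a custom image or another image, you must purchase a SUSE certified image and register it with the official SUSE SMT server, or configure the zypper repositories manually.

Make sure that the following components are installed correctly.

# Components for SLES 12 for SAP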

zypper in -y patterns-sles-sap_server

zypper in -y patterns-ha-ha_sles sap_suse_cluster_connector saptune fence-agents

# Components for SLES 15 for SAP

zypper in -y patterns-server-enterprise-sap_server

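zypper in -y patterns-ha-ha_sles sap-suse-cluster-connector corosync-qdevice saptune fence-agents

Configure the cluster

Configure Corosync from a VNC session on the SAP HANA instance.

# Open the cluster configuration page

yast2 cluster

[1] Configure the communication channels

For [Channel], select the heartbeat CIDR block. For [Redundant Channel], select the service CIDR block.

Add the [Member Address] entries in the correct order (enter the service addresses for [Redundant Channel]).

[Expected Votes]: 2

[Transport]: Unicast

[2] Configure security

Select [Enable Security Auth] and click [Generate Auth Key File].

[3] Configure Csync2

Add the sync hosts.

Click [Add Suggested Files].

Click [Generate Pre-Shared-Keys].

Click [Turn csync2 ON].

Configure conntrackd: keep the default values and go to the next step.

[4] Configure the service

Make sure that the cluster service does not start automatically at boot.

When the configuration is complete, save it and exit. Copy the Corosync configuration files to the SAP HANA secondary node.

scp -pr /etc/corosync/authkey /etc/corosync/corosync.conf root@saphana-02:/etc/corosync/

[5] Start the cluster

Run the following command on both nodes.

systemctl start pacemaker

[6] View the cluster status

Both nodes are now online. The managed resources are configured later.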

crm_mon -r

Stack: corosync

Current DC: saphana-02 (version 1.1.16-4.8-77ea74d) - partition with quorum

Last updated: Tue Apr 23 11:22:38 2019

Last change: Tue Apr 23 11:22:36 2019 by hacluster via crmd on saphana-02

2 nodes configured

0 resources configured

Online: [ saphana-01 saphana-02 ]

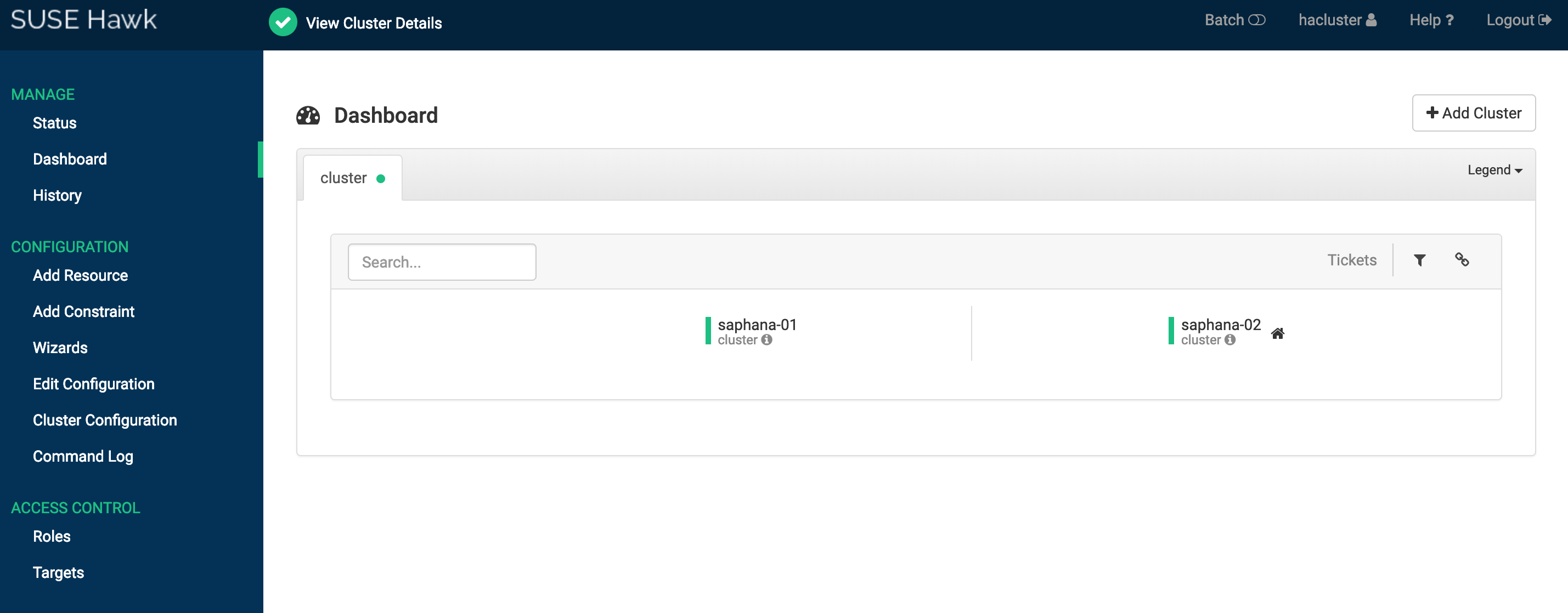

No resources[7] WEB ページグラフィカル構成の起動

(1) 2 つの ECS インスタンスで Hawk2 サービスをアクティブにします。

// hacluster ユーザーのパスワードを設定します。

passwd hacluster

// サービスを再起動して設定を有効にします。

systemctl restart hawk(2) Hawk2 へのアクセス

ブラウザを開き、hawk WEB コンソールにアクセスします

https://[ECS インスタンス IP アドレス]:7630ユーザー名 hacluster とパスワードを入力してログインします。

SAP HANA と SUSE HAE の統合

この例では、SBD フェンスデバイスの 2 つの構成を紹介します。SBD フェンススキームに基づいて、対応する構成スクリプトを選択します。

ソリューション 1:共有ブロックストレージを使用して SBD フェンスを実装する

クラスタ内のノードにログインし、スクリプト内の SID、InstanceNumber、および params ip を SAP システムの値に置き換えます。

この例では、SID:H01、InstanceNumber:00、params ip:192.168.10.12、スクリプトファイル名 HANA_HA_script.txt です

# SAPHanaTopology is the resource agent that monitors and analyzes the HANA landscape and communicates the status between the two nodes
primitive rsc_SAPHanaTopology_HDB ocf:suse:SAPHanaTopology \
    operations $id=rsc_SAPHanaTopology_HDB-operations \
    op monitor interval=10 timeout=600 \
    op start interval=0 timeout=600 \
    op stop interval=0 timeout=300 \
    params SID=H01 InstanceNumber=00

# SAPHana defines the SAP HANA resource that the cluster manages together with the virtual IP
primitive rsc_SAPHana_HDB ocf:suse:SAPHana \
    operations $id=rsc_SAPHana_HDB-operations \
    op start interval=0 timeout=3600 \
    op stop interval=0 timeout=3600 \
    op promote interval=0 timeout=3600 \
    op monitor interval=60 role=Master timeout=700 \
    op monitor interval=61 role=Slave timeout=700 \
    params SID=H01 InstanceNumber=00 PREFER_SITE_TAKEOVER=true DUPLICATE_PRIMARY_TIMEOUT=7200 AUTOMATED_REGISTER=false

# SBD settings
primitive rsc_sbd stonith:external/sbd \
    op monitor interval=20 timeout=15 \
    meta target-role=Started maintenance=false

# VIP resource settings
primitive rsc_vip IPaddr2 \
    operations $id=rsc_vip-operations \
    op monitor interval=10s timeout=20s \
    params ip=192.168.10.12

ms msl_SAPHana_HDB rsc_SAPHana_HDB \
    meta is-managed=true notify=true clone-max=2 clone-node-max=1 target-role=Started interleave=true maintenance=false

clone cln_SAPHanaTopology_HDB rsc_SAPHanaTopology_HDB \
    meta is-managed=true clone-node-max=1 target-role=Started interleave=true maintenance=false

colocation col_saphana_ip_HDB 2000: rsc_vip:Started msl_SAPHana_HDB:Master

order ord_SAPHana_HDB Optional: cln_SAPHanaTopology_HDB msl_SAPHana_HDB

property cib-bootstrap-options: \
    have-watchdog=true \
    cluster-infrastructure=corosync \
    cluster-name=cluster \
    no-quorum-policy=ignore \
    stonith-enabled=true \
    stonith-action=reboot \
    stonith-timeout=150s

rsc_defaults rsc-options: \
    migration-threshold=5000 \
    resource-stickiness=1000

op_defaults op-options: \
    timeout=600 \
    record-pending=true

Solution 2: Implement fencing with the fence_aliyun fence agent

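Log on to a cluster node and create a txt file. Copy the example script from this section and modify the following parameters based on your SAP HANA deployment.

Replace the plug values with the IDs of the two ECS instances in the SAP HANA cluster.

Replace the ram_role value with the RAM role that you configured above.

Replace the region value with the region ID of the ECS instances.

Replace the IP address with the HaVip address of the cluster.

Replace SID and InstanceNumber with the SID and the instance number of the SAP HANA instance.

Replace the location parameters with the hostnames of the SAP HANA instances.

For the mapping between Alibaba Cloud regions and region IDs, see "Regions and zones".

The script file name in this example is HANA_HA_script.txt.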

# plug: ECS instance ID, ram_role: RAM role, region: region ID
primitive res_ALIYUN_STONITH_1 stonith:fence_aliyun \
    op monitor interval=120 timeout=60 \
    params plug=i-xxxxxxxxxxxxxxxxxxxx ram_role=AliyunECSAccessingHBRRole region=cn-beijing \
    meta target-role=Started

primitive res_ALIYUN_STONITH_2 stonith:fence_aliyun \
    op monitor interval=120 timeout=60 \
    params plug=i-xxxxxxxxxxxxxxxxxxxx ram_role=AliyunECSAccessingHBRRole region=cn-beijing \
    meta target-role=Started

# HaVip resource (ip: HaVip address)
primitive rsc_vip IPaddr2 \
    operations $id=rsc_vip-operations \
    op monitor interval=10s timeout=20s \
    params ip=192.168.10.12

# SAPHanaTopology is the resource agent that monitors and analyzes the HANA landscape and communicates the status between the two nodes
# SID: SAP HANA SID, InstanceNumber: SAP HANA instance number
primitive rsc_SAPHanaTopology_HDB ocf:suse:SAPHanaTopology \
    operations $id=rsc_SAPHanaTopology_HDB-operations \
    op monitor interval=10 timeout=600 \
    op start interval=0 timeout=600 \
    op stop interval=0 timeout=300 \
    params SID=H01 InstanceNumber=00

# SAPHana defines the SAP HANA resource that the cluster manages together with the virtual IP
primitive rsc_SAPHana_HDB ocf:suse:SAPHana \
    operations $id=rsc_SAPHana_HDB-operations \
    op start interval=0 timeout=3600 \
    op stop interval=0 timeout=3600 \
    op promote interval=0 timeout=3600 \
    op monitor interval=60 role=Master timeout=700 \
    op monitor interval=61 role=Slave timeout=700 \
    params SID=H01 InstanceNumber=00 PREFER_SITE_TAKEOVER=true DUPLICATE_PRIMARY_TIMEOUT=7200 AUTOMATED_REGISTER=false

ms msl_SAPHana_HDB rsc_SAPHana_HDB \
    meta is-managed=true notify=true clone-max=2 clone-node-max=1 target-role=Started interleave=true maintenance=false

clone cln_SAPHanaTopology_HDB rsc_SAPHanaTopology_HDB \
    meta is-managed=true clone-node-max=1 target-role=Started interleave=true maintenance=false

colocation col_saphana_ip_HDB 2000: rsc_vip:Started msl_SAPHana_HDB:Master

# Location constraints: hana-master and hana-slave are the hostnames of the SAP HANA instances
# STONITH resource 1 fences the primary node, so it must not run on the primary node
location loc_hana-master_stonith_not_on_hana-master res_ALIYUN_STONITH_1 -inf: hana-master

location loc_hana-slave_stonith_not_on_hana-slave res_ALIYUN_STONITH_2 -inf: hana-slave

order ord_SAPHana_HDB Optional: cln_SAPHanaTopology_HDB msl_SAPHana_HDB

property cib-bootstrap-options: \
    have-watchdog=false \
    cluster-infrastructure=corosync \
    cluster-name=cluster \
    stonith-enabled=true \
    stonith-action=off \
    stonith-timeout=150s

rsc_defaults rsc-options: \
    migration-threshold=5000 \
    resource-stickiness=1000

op_defaults op-options: \
    timeout=600

Run the following command as the root user to let SUSE HAE take over the SAP HANA resources.

crm configure load update HANA_HA_script.txtクラスタステータスの確認

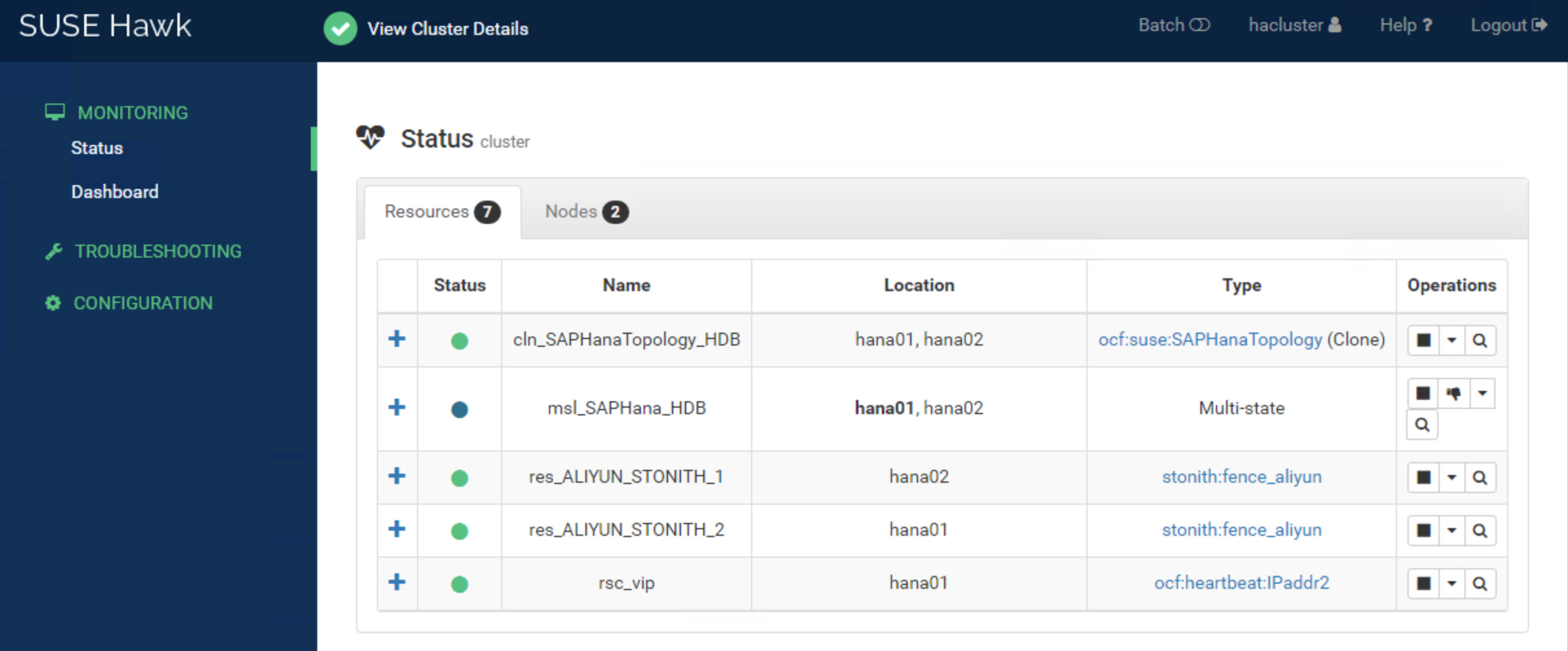

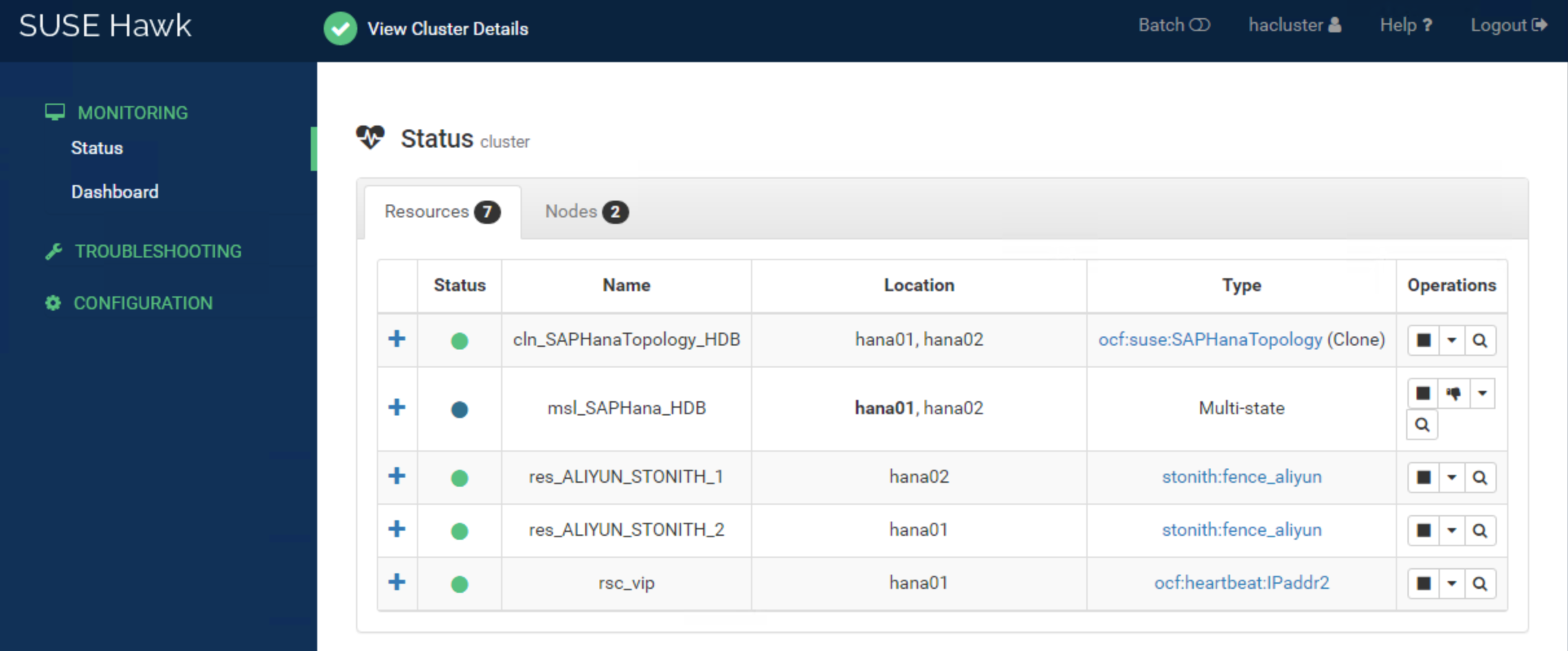

Hawk2 WEB コンソールにログインし、https://[ECS インスタンス IP アドレス]:7630 でサーバーにアクセスします。

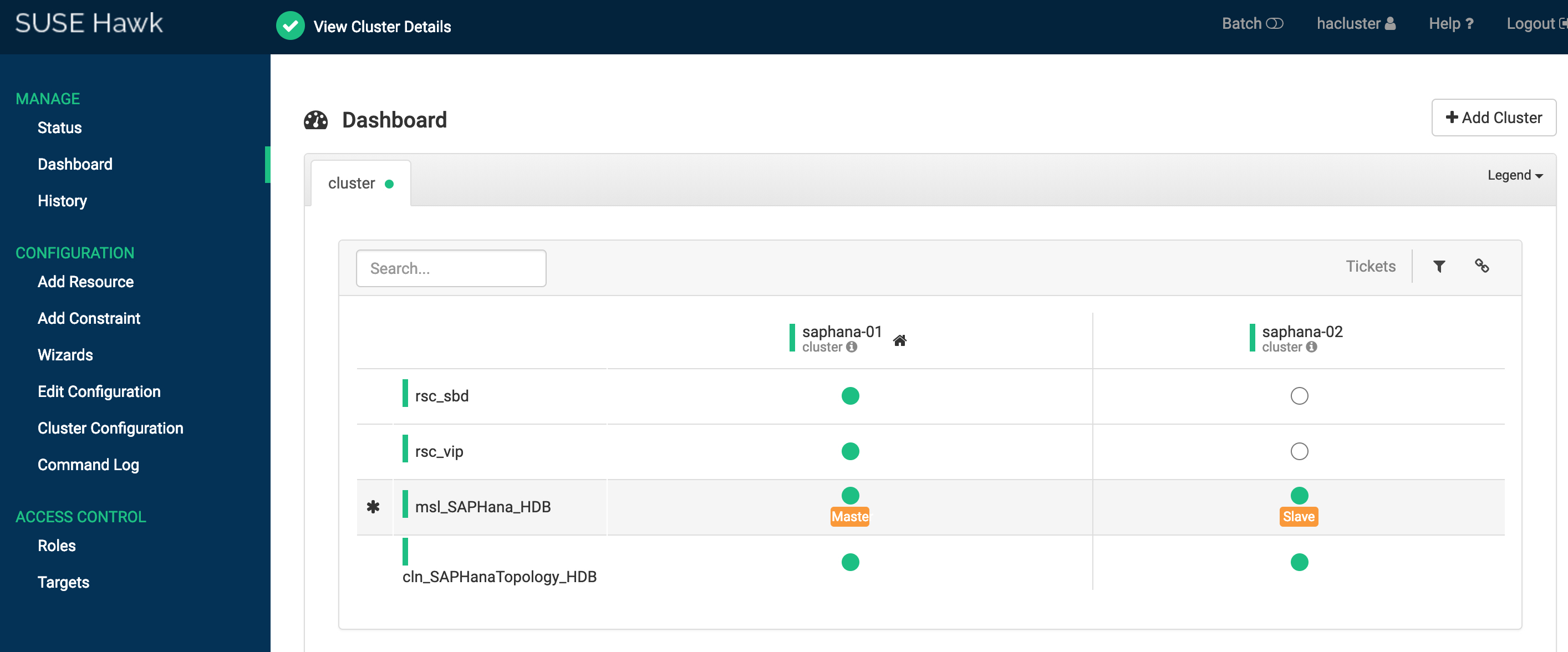

ソリューション 1:SUSE HAE クラスタのステータスとダッシュボードは次のとおりです。

任意のノードにログインして crmsh コマンドを実行し、クラスタステータスを確認することもできます。

crm_mon -r

Stack: corosync

Current DC: saphana-01 (version 1.1.16-4.8-77ea74d) - partition with quorum

Last updated: Wed Apr 24 11:48:38 2019

Last change: Wed Apr 24 11:48:35 2019 by root via crm_attribute on saphana-01

2 nodes configured

6 resources configured

Online: [ saphana-01 saphana-02 ]

Full list of resources:

rsc_sbd (stonith:external/sbd): Started saphana-01

rsc_vip (ocf::heartbeat:IPaddr2): Started saphana-01

Master/Slave Set: msl_SAPHana_HDB [rsc_SAPHana_HDB]

Masters: [ saphana-01 ]

Slaves: [ saphana-02 ]

Clone Set: cln_SAPHanaTopology_HDB [rsc_SAPHanaTopology_HDB]

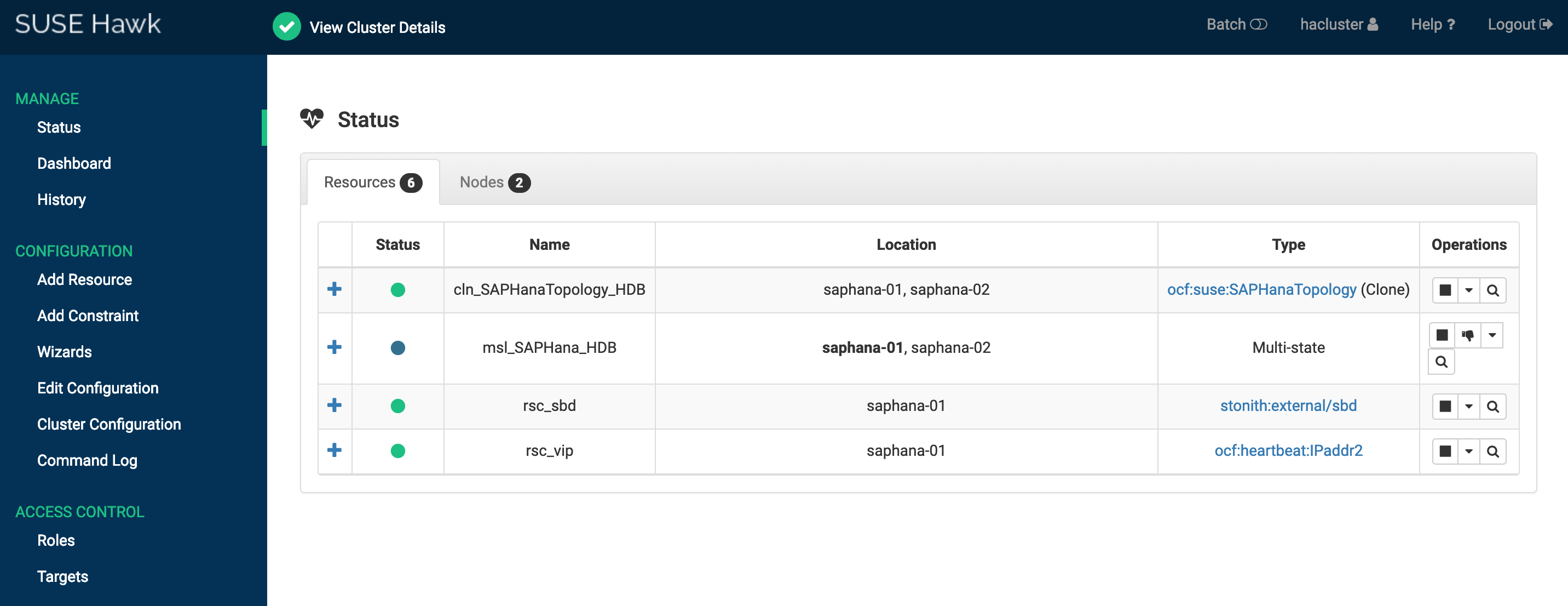

Started: [ saphana-01 saphana-02 ]ソリューション 2:フェンスエージェントの SUSE HAE クラスタのステータスとダッシュボードは次のとおりです。

任意のノードにログインして crmsh コマンドを実行し、クラスタステータスを確認することもできます。

crm_mon -r

Stack: corosync

Current DC: hana02 (version 2.0.1+20190417.13d370ca9-3.21.1-2.0.1+20190417.13d370ca9) - partition with quorum

Last updated: Sat Jan 29 13:14:47 2022

Last change: Sat Jan 29 13:13:44 2022 by root via crm_attribute on hana01

2 nodes configured

7 resources configured

Online: [ hana01 hana02 ]

Full list of resources:

res_ALIYUN_STONITH_1 (stonith:fence_aliyun): Started hana02

res_ALIYUN_STONITH_2 (stonith:fence_aliyun): Started hana01

rsc_vip (ocf::heartbeat:IPaddr2): Started hana01

Clone Set: msl_SAPHana_HDB [rsc_SAPHana_HDB] (promotable)

Masters: [ hana01 ]

Slaves: [ hana02 ]

Clone Set: cln_SAPHanaTopology_HDB [rsc_SAPHanaTopology_HDB]

Started: [ hana01 hana02 ]
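
The same status can be checked from a script. The following is a minimal sketch that parses the `Online:` and `Masters:` lines exactly as they appear in the output above. `online_count` and `has_master` are hypothetical helper names, and newer Pacemaker releases may label promoted nodes differently, in which case the pattern needs adjusting.

```shell
# Print the number of nodes listed on the "Online: [ ... ]" line of
# crm_mon output read from stdin (prints 0 if the line is missing).
online_count() {
  sed -n 's/^Online: \[ \(.*\) \]$/\1/p' | wc -w | tr -d ' '
}

# Succeed if crm_mon output read from stdin reports a promoted (master) node.
has_master() {
  grep -Eq '^[[:space:]]*Masters: \[ [^]]+ \]'
}

# Usage on a cluster node (not executed here); -1 prints the status once and exits:
#   out=$(crm_mon -1 -r)
#   [ "$(printf '%s\n' "$out" | online_count)" = "2" ] \
#     && printf '%s\n' "$out" | has_master \
#     && echo "both nodes online and a master is active"
```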