Alibaba Cloud E-MapReduce (EMR) allows you to store the data of DataServing clusters in OSS-HDFS and the write-ahead logs (WALs) in the Hadoop Distributed File System (HDFS). This topic describes how to use OSS-HDFS as the storage backend of HBase.

Background information

OSS-HDFS is a cloud-native data lake storage service. It provides unified metadata management, is fully compatible with the HDFS API, and supports the Portable Operating System Interface (POSIX). You can use OSS-HDFS to manage data in a variety of data lake computing scenarios in the big data and AI fields. For more information, see Overview.

Limits

This feature is supported only by DataServing clusters of EMR V3.42 or a later minor version and EMR V5.8.0 or a later minor version.
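If you want to check a version string before you create the cluster, a small shell function is enough. This is a sketch, not an EMR tool: the function name is_supported_emr_version is hypothetical, and it assumes that "later minor version" means any 3.x release from 3.42 on and any 5.x release from 5.8 on. Confirm the exact supported versions in the EMR release notes.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit status 0) if an EMR version such as
# 3.42.0 or EMR-5.8.1 meets the minimum versions listed in Limits.
# Assumption: any 3.x release >= 3.42 and any 5.x release >= 5.8 qualifies;
# all other major versions are treated as unsupported.
is_supported_emr_version() {
  ver=${1#EMR-}          # drop an optional "EMR-" prefix
  major=${ver%%.*}       # text before the first dot
  rest=${ver#*.}         # text after the first dot
  minor=${rest%%.*}      # text between the first and second dots
  case "$major$minor" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric parts
  esac
  case "$major" in
    3) [ "$minor" -ge 42 ] ;;
    5) [ "$minor" -ge 8 ] ;;
    *) return 1 ;;
  esac
}

if is_supported_emr_version "EMR-5.8.0"; then
  echo "supported"
fi
```

Only the major and the second version component are compared, because the Limits section is stated in terms of minor versions.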

Procedure

Enable OSS-HDFS and grant access permissions. For more information, see Enable OSS-HDFS and grant access permissions.

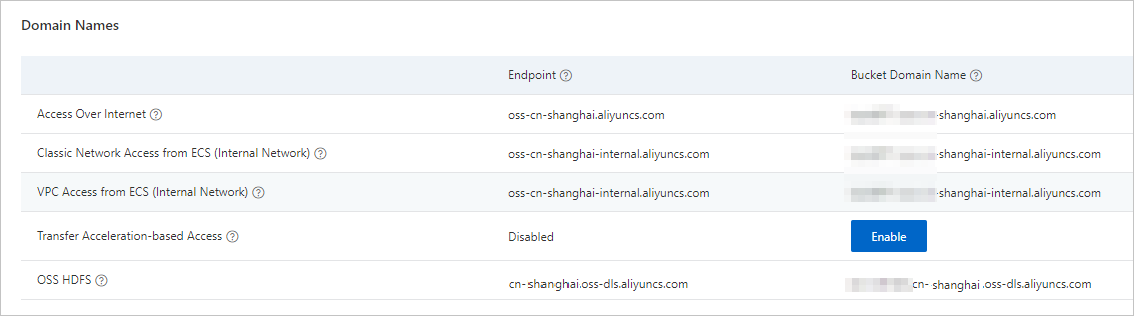

Obtain the endpoint of OSS-HDFS.

On the Overview page of the bucket in the Object Storage Service (OSS) console, copy the OSS-HDFS domain name. You use this domain name in the value of the hbase.rootdir parameter (the Root Directory setting) when you create the cluster.

Use OSS-HDFS.

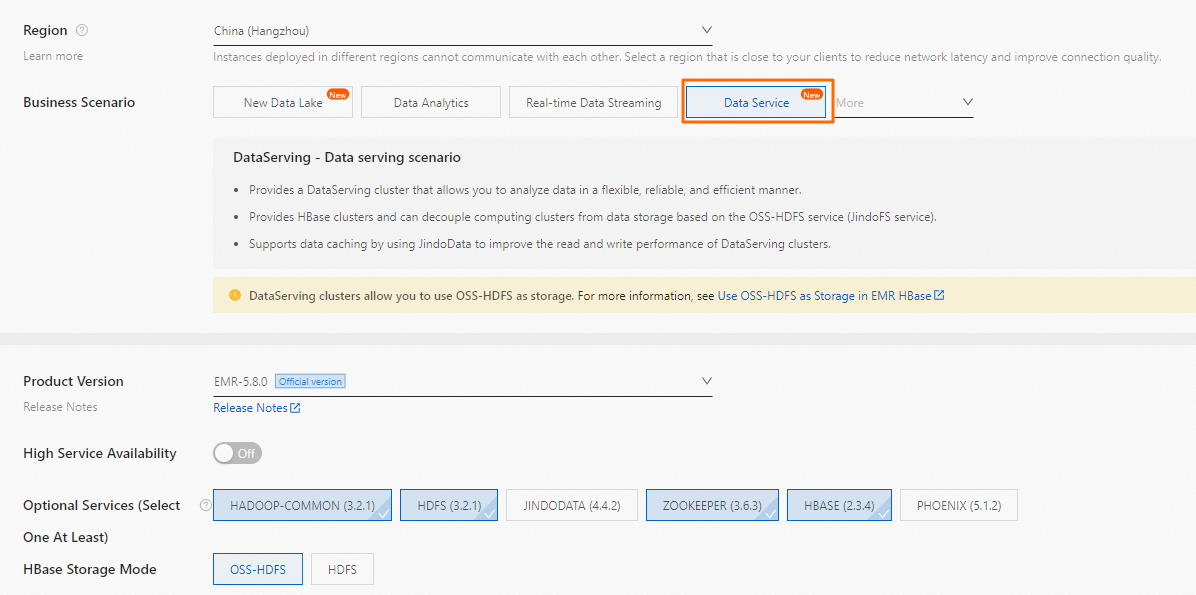

Create a DataServing cluster. For more information, see Create a cluster.

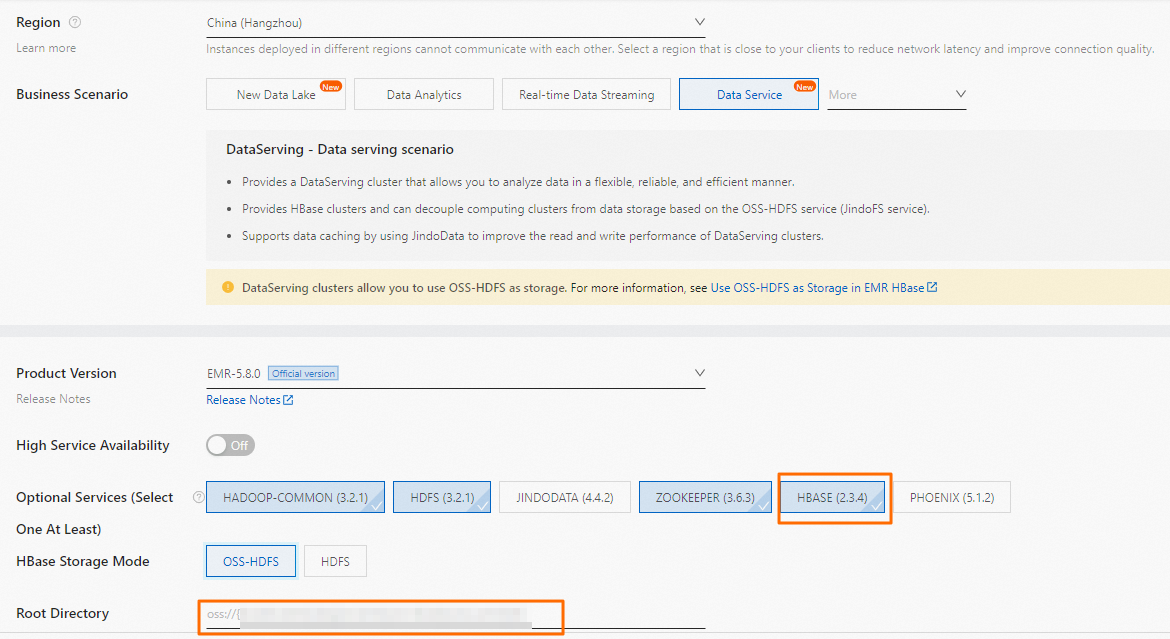

Configure the following parameters when you create the cluster:

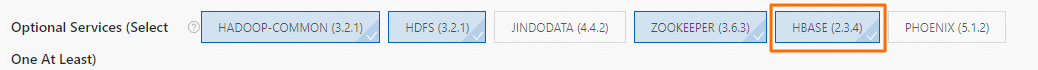

Optional Services (Select One At Least): Select all options except JindoData.

HBase Storage Mode: Select OSS-HDFS.

Root Directory: Enter the directory of an OSS bucket for which OSS-HDFS is enabled. The directory is in the oss://${OSS-HDFS endpoint}/${dir} format. Example: oss://test_bucket.cn-hangzhou.oss-dls.aliyuncs.com/hbase.

Note: When you enter the Root Directory value, replace the placeholders as follows:

Replace ${OSS-HDFS endpoint} with the OSS-HDFS endpoint that you obtained in the "Obtain the endpoint of OSS-HDFS" step.

Replace ${dir} with the root directory of HBase.
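The Root Directory value is plain string concatenation. The following sketch assembles it from a bucket name, a region, and a directory. The function name build_hbase_rootdir is hypothetical, and the <bucket>.<region>.oss-dls.aliyuncs.com endpoint pattern is inferred from the Root Directory example; if the domain name that you copy from the OSS console differs, use the console value.

```shell
#!/bin/sh
# Hypothetical helper: prints oss://${OSS-HDFS endpoint}/${dir}.
# Assumption: the endpoint follows <bucket>.<region>.oss-dls.aliyuncs.com,
# as in the documented example; use the domain name from the console if it differs.
build_hbase_rootdir() {
  bucket=$1
  region=$2
  dir=${3#/}                                      # avoid a double slash after the endpoint
  endpoint="${bucket}.${region}.oss-dls.aliyuncs.com"
  printf 'oss://%s/%s\n' "$endpoint" "$dir"
}

build_hbase_rootdir test_bucket cn-hangzhou hbase
```

With the values from the example, the function prints oss://test_bucket.cn-hangzhou.oss-dls.aliyuncs.com/hbase, which matches the Root Directory example.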

Create an HBase table.

Log on to the DataServing cluster. For more information, see Log on to a cluster.

Run the following command to access HBase Shell:

    hbase shell

Run the following command to create an HBase table named bar that has a column family named f:

    create 'bar','f'

Run the list command to view information about the HBase table. The following information is returned:

    TABLE
    bar
    1 row(s)
    Took 0.0138 seconds
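If you prefer not to type the commands interactively, the create and list steps can also be run from a file. This is a sketch under assumptions: write_create_script is a hypothetical helper, the hbase client is on the PATH of the node that you logged on to, and the installed HBase Shell supports the -n (non-interactive) option that recent HBase versions provide.

```shell
#!/bin/sh
# Hypothetical helper: writes the HBase Shell commands from this step to a file.
# Arguments: table name, column family, output file.
write_create_script() {
  cat > "$3" <<EOF
create '$1','$2'
list
exit
EOF
}

script_file=$(mktemp)
write_create_script bar f "$script_file"

# Assumption: run on a cluster node where the hbase client is on the PATH and
# HBase Shell supports -n (non-interactive mode). Skipped if hbase is missing.
if command -v hbase >/dev/null 2>&1; then
  hbase shell -n "$script_file"
fi
```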

Exit HBase Shell and run the following command to check that the table directory exists in OSS-HDFS.

Syntax:

    hadoop fs -ls oss://${OSS-HDFS endpoint}/${dir}/data/default

Example:

    hadoop fs -ls oss://test_bucket.cn-hangzhou.oss-dls.aliyuncs.com/hbase/data/default

If output similar to the following is returned, the HBase table was created in OSS-HDFS:

    Found 1 items
    drwxrwxrwx - hbase supergroup 0 2022-07-28 14:45 oss://test_bucket.cn-hangzhou.oss-dls.aliyuncs.com/hbase/data/default/bar
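The verification step relies on the HBase directory layout, in which each table is stored under <root directory>/data/<namespace>/<table>. Tables created without a namespace belong to the default namespace, which is why bar appears under data/default. The following sketch builds that path and checks a hadoop fs -ls listing for it. The helper names table_dir and listing_has_dir are hypothetical, and the hadoop call runs only where the Hadoop client is installed.

```shell
#!/bin/sh
# Hypothetical helper: prints <rootdir>/data/<namespace>/<table>.
table_dir() {
  printf '%s/data/%s/%s\n' "${1%/}" "$2" "$3"   # strip one trailing slash from rootdir
}

# Hypothetical helper: reads `hadoop fs -ls` output on stdin and succeeds if
# the last field (the path) of any line equals the path given as $1.
listing_has_dir() {
  awk -v want="$1" '$NF == want { found = 1 } END { exit (found ? 0 : 1) }'
}

rootdir="oss://test_bucket.cn-hangzhou.oss-dls.aliyuncs.com/hbase"
bar_dir=$(table_dir "$rootdir" default bar)

# Runs only where the hadoop client is installed, such as a cluster node.
if command -v hadoop >/dev/null 2>&1; then
  if hadoop fs -ls "${bar_dir%/*}" | listing_has_dir "$bar_dir"; then
    echo "table bar found in OSS-HDFS"
  fi
fi
```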

Optional: Delete the EMR HBase cluster and create a new cluster.

Because the data of the EMR HBase cluster is stored in OSS-HDFS, you can delete the cluster and then create a new cluster that uses the same directory in the bucket for which OSS-HDFS is enabled.

Important: The HBase versions of the deleted cluster and the new cluster must be the same. Otherwise, incompatibility issues may occur and make the new cluster unavailable.

Only one HBase cluster can use a given directory at a time. If multiple HBase clusters write data to the same directory at the same time, their metadata or data may become inconsistent, which makes the clusters unavailable.

Log on to the DataServing cluster. For more information, see Log on to a cluster.

Run the following command to access HBase Shell:

    hbase shell

Run the following command to flush the table. This writes the in-memory data of the table (the MemStore) to HFiles. Repeat the command for each table whose data you want to keep.

    flush 'bar'

Run the following command to disable the table. This prevents new data from being written to it.

    disable 'bar'

Delete the cluster. Then, create a new cluster whose HBase version is the same as that of the deleted cluster, and specify the same root directory for the new cluster to store data.

After the new cluster is created, you can continue to use data stored in OSS-HDFS.
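When several tables must be reused by the new cluster, the flush and disable steps can be generated for all of them and run in one non-interactive HBase Shell session before you delete the old cluster. This is a sketch, assuming that you pass the table names as arguments, that the hbase client is on the PATH, and that your HBase Shell supports -n; write_shutdown_script is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: writes a flush and a disable command for each table.
# Usage: write_shutdown_script <output-file> <table>...
write_shutdown_script() {
  out=$1
  shift
  : > "$out"                                   # create or truncate the file
  for t in "$@"; do
    printf "flush '%s'\n" "$t" >> "$out"       # write MemStore data to HFiles
    printf "disable '%s'\n" "$t" >> "$out"     # stop new writes to the table
  done
  printf 'exit\n' >> "$out"
}

shutdown_script=$(mktemp)
write_shutdown_script "$shutdown_script" bar

# Assumption: hbase client on the PATH and HBase Shell supports -n.
if command -v hbase >/dev/null 2>&1; then
  hbase shell -n "$shutdown_script"
fi
```

Each table is flushed immediately before it is disabled, which keeps the per-table order used in the manual steps.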