Simple Log Service allows you to use Function Compute to transform streaming data. You can configure a Function Compute trigger to detect data updates and invoke a function, which lets you consume and transform the incremental data in a Logstore. You can use template functions or user-defined functions to transform data.

Prerequisites

Simple Log Service must be authorized to invoke functions. You can grant this authorization on the Cloud Resource Access Authorization page.

Function Compute

Simple Log Service

Create a project and Logstores

Create one Simple Log Service project and two Logstores. One Logstore stores the collected logs. Continuous log collection is required because Function Compute is triggered by incremental logs. The other Logstore stores the logs generated by the Simple Log Service trigger.

Important: The Simple Log Service project and the Function Compute service must belong to the same region.
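If you prefer to script these prerequisites instead of using the console, the following is a minimal sketch that assumes the aliyun-log-python-sdk `LogClient` (the same client that the sample function code in this topic uses) and its `create_project` and `create_logstore` methods. The helper name, project name, Logstore names, TTL, and shard count are hypothetical placeholders. The client is passed in as an argument so that the function can be exercised with a stub.

```python
def create_trigger_resources(client, project, source_logstore, trigger_log_logstore,
                             ttl_days=30, shard_count=2):
    """Hypothetical helper: create one project and the two Logstores a trigger needs.

    `client` is assumed to behave like aliyun.log.LogClient. The region of the
    project is determined by the endpoint the client was created with, so use an
    endpoint in the same region as your Function Compute service.
    """
    client.create_project(project, "Project for Function Compute log triggers")
    # Logstore that receives the continuously collected logs (the trigger source).
    client.create_logstore(project, source_logstore, ttl=ttl_days, shard_count=shard_count)
    # Logstore that stores the logs generated by the Simple Log Service trigger.
    client.create_logstore(project, trigger_log_logstore, ttl=ttl_days, shard_count=shard_count)
    return [source_logstore, trigger_log_logstore]
```

For example, `create_trigger_resources(LogClient("cn-hangzhou.log.aliyuncs.com", access_key_id, access_key_secret), "my-fc-project", "function-log", "function-log2")` would create the resources in the China (Hangzhou) region.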

Limits

The number of Simple Log Service triggers that you can associate with a Simple Log Service project cannot exceed five times the number of Logstores in the project.

We recommend that you configure no more than five Simple Log Service triggers for each Logstore. Otherwise, data may not be efficiently shipped to Function Compute.
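The two limits can be checked before you plan your triggers. The following sketch assumes that the per-project limit counts Simple Log Service triggers (up to five times the number of Logstores) and treats the per-Logstore value of five as a recommendation rather than a hard cap. The helper name `check_trigger_limits` is hypothetical.

```python
def check_trigger_limits(logstore_count, triggers_per_logstore):
    """Hypothetical helper: check a planned trigger layout against the limits.

    logstore_count: number of Logstores in the project.
    triggers_per_logstore: mapping of Logstore name -> planned number of triggers.
    Returns a list of warnings; an empty list means the plan is within limits.
    """
    warnings = []
    project_cap = 5 * logstore_count  # assumed hard cap: 5x the number of Logstores
    total = sum(triggers_per_logstore.values())
    if total > project_cap:
        warnings.append(f"project has {total} triggers; the maximum is {project_cap}")
    for name, count in triggers_per_logstore.items():
        if count > 5:  # recommendation, not a hard limit
            warnings.append(f"{name} has {count} triggers; no more than 5 are recommended")
    return warnings
```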

Scenarios

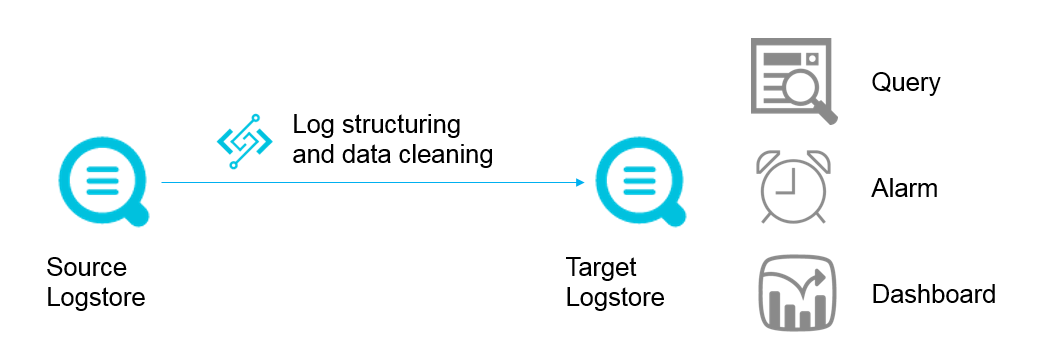

Data cleansing and transformation

You can use Simple Log Service to collect, transform, query, and analyze logs in an efficient manner.

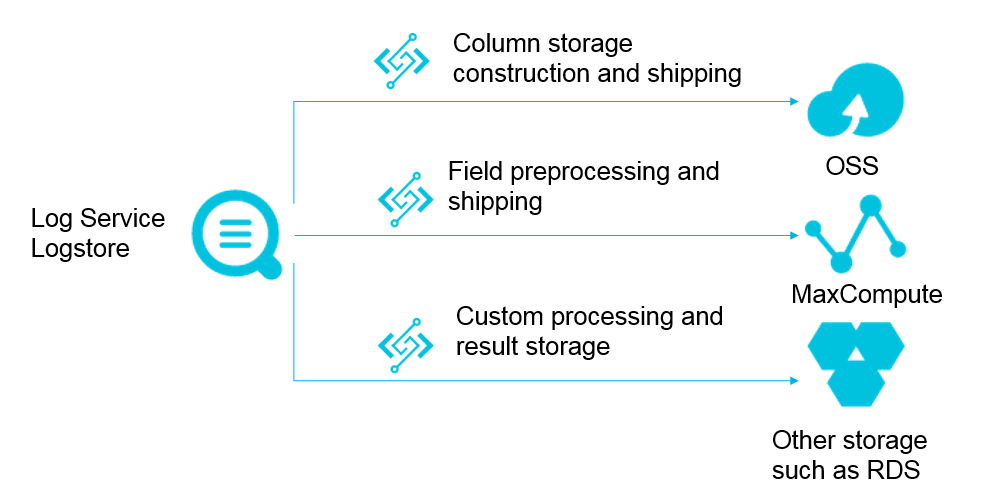

Data shipping

You can use Simple Log Service to ship data to a specified destination. In this scenario, Simple Log Service serves as a data channel for big data services in the cloud.

Data transformation functions

Function types

Template functions

For more information, see aliyun-log-fc-functions.

User-defined functions

The function format depends on the function implementation. For more information, see Create a custom function.

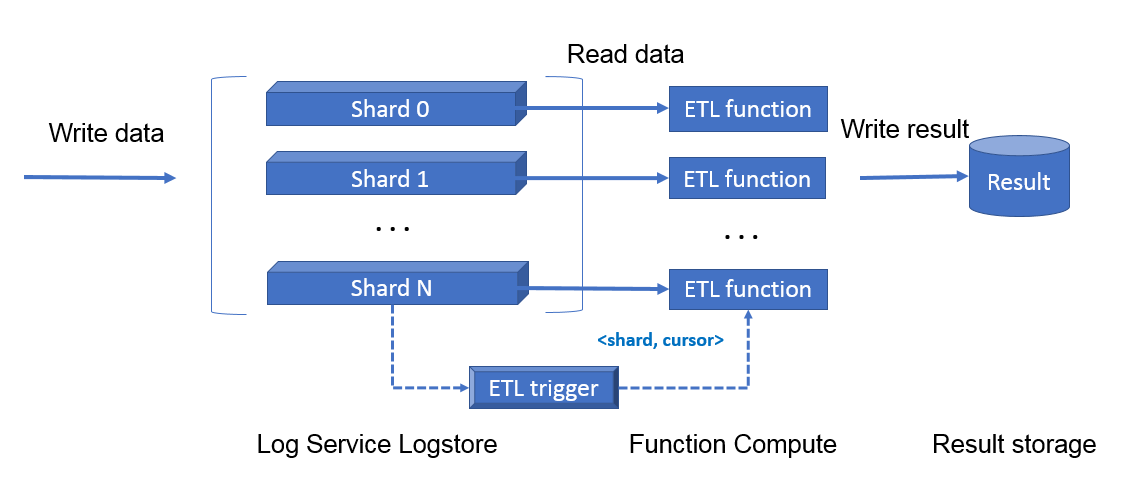

Function calling mechanism

A Function Compute trigger is used to invoke a function. After you create a Function Compute trigger for a Logstore in Simple Log Service, a timer is started to monitor the shards of the Logstore based on the trigger configuration. If new data is written to the Logstore, a three-tuple data record in the <shard_id, begin_cursor, end_cursor> format is generated as a function event, and the function is invoked.

Note: If the storage system is updated while no new data is written to the Logstore, the cursor information may change. As a result, the function may be invoked for each shard even though there is no data to transform. In this case, the function can use the cursor information to pull data from the shard. If no data is returned, the invocation performed no transformation and can be ignored. For more information, see Create a custom function.
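The note above implies that a function should tolerate invocations whose cursor range contains no data. The following sketch consumes one <shard_id, begin_cursor, end_cursor> three-tuple and reports how many log groups it read; a result of 0 corresponds to an invocation that can be ignored. The `pull` callable is a hypothetical stand-in for a call such as `LogClient.pull_logs` followed by `get_loggroup_count()` and `get_next_cursor()`, injected so that the loop can run without a Simple Log Service connection.

```python
from typing import Callable, Tuple

# Hypothetical pull signature: (shard_id, cursor, end_cursor) -> (log_group_count, next_cursor).
PullFn = Callable[[int, str, str], Tuple[int, str]]


def consume_range(shard_id: int, begin_cursor: str, end_cursor: str, pull: PullFn) -> int:
    """Read the [begin_cursor, end_cursor) range of one shard.

    Returns the total number of log groups read. A result of 0 means the
    invocation carried no new data and can be ignored.
    """
    total = 0
    cursor = begin_cursor
    while True:
        count, next_cursor = pull(shard_id, cursor, end_cursor)
        if count == 0:
            # No more log groups in the range (or none at all): stop reading.
            break
        total += count
        cursor = next_cursor
    return total
```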

A Function Compute trigger invokes a function on a timer. For example, if the call interval of a Function Compute trigger for a Logstore is set to 60 seconds and data is continuously written to Shard 0, the function is invoked every 60 seconds to transform the data in the cursor range of the previous 60 seconds.
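To make the timing concrete, the following is a simplified model, not the service's actual scheduler, of how a timer with a fixed interval groups writes to a single shard into invocations. It assumes that the timer starts at `timer_start` and fires exactly every `interval` seconds, and it ignores the empty invocations described in the preceding note. The function name is hypothetical.

```python
def invocation_windows(write_times, timer_start, interval=60):
    """Group write timestamps (seconds, all >= timer_start) into fixed timer windows.

    Returns (window_start, window_end, writes) tuples for windows that received data.
    In this model, the invocation for a window happens at window_end and processes
    the cursor range written during [window_start, window_end).
    """
    counts = {}
    for t in write_times:
        tick = (t - timer_start) // interval  # index of the window containing this write
        counts[tick] = counts.get(tick, 0) + 1
    return [
        (timer_start + k * interval, timer_start + (k + 1) * interval, n)
        for k, n in sorted(counts.items())
    ]
```

With writes at 0, 10, 59, 60, and 150 seconds and a 60-second interval, the model yields three invocations: one covering the first three writes and one each for the writes at 60 and 150 seconds.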

Step 1: Create a Simple Log Service trigger

Log on to the Function Compute console. In the left-side navigation pane, click Functions.

In the top navigation bar, select a region. On the Functions page, click the function that you want to manage.

On the function details page, click the Configurations tab. In the left-side navigation pane, click Triggers. Then, click Create Trigger.

In the Create Trigger panel, configure parameters and click OK.

| Parameter | Description | Example |
| --- | --- | --- |
| Trigger Type | The type of the trigger. Select Log Service. | Log Service |
| Name | The name of the trigger. | log_trigger |
| Version or Alias | The version or alias of the trigger. Default value: LATEST. If you want to create a trigger for another version or alias, select a version or alias in the upper-right corner of the function details page. For more information about versions and aliases, see Manage versions and Manage aliases. | LATEST |
| Log Service Project | The name of an existing Simple Log Service project. | aliyun-fc-cn-hangzhou-2238f0df-a742-524f-9f90-976ba457**** |
| Logstore | The name of an existing Logstore. The trigger created in this example subscribes to data in the Logstore and sends the data to Function Compute at regular intervals for custom processing. | function-log |
| Trigger Interval | The interval at which Simple Log Service invokes the function. Valid values: 3 to 600. Unit: seconds. Default value: 60. | 60 |
| Retries | The maximum number of retries that are allowed for each invocation. Valid values: 0 to 100. Default value: 3. Note: An invocation is considered successful if status=200 is returned and the value of the X-Fc-Error-Type parameter in the response header is neither UnhandledInvocationError nor HandledInvocationError. In all other cases, the invocation fails. For more information about X-Fc-Error-Type, see Response parameters. If an invocation fails, the system retries it up to the number of times specified by this parameter. If the invocation still fails after the maximum number of retries is reached, the system continues to retry the request in exponential backoff mode with increasing intervals. | 3 |
| Trigger Log | The Logstore in which you want to store the logs that are generated when Simple Log Service invokes the function. | function-log2 |
| Invocation Parameters | The invocation parameters. You can configure custom parameters in this editor. The custom parameters are passed to the function as the value of the parameter field of the event that invokes the function. The value must be a string in the JSON format. By default, this parameter is empty. | N/A |
| Role Name | Select AliyunLogETLRole. Note: After you configure the preceding parameters, click OK. If this is the first time that you create a trigger of this type, click Authorize Now in the dialog box that appears. | AliyunLogETLRole |
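The documented value ranges and the success rule in the Retries note can be expressed as a small pre-flight check. This is a sketch under stated assumptions: the helper names are hypothetical, the response headers are modeled as a plain dict with exact key casing (real HTTP headers are case-insensitive), and the check does not call any Function Compute API.

```python
import json

# Ranges documented in the trigger parameter table.
INTERVAL_RANGE = (3, 600)  # seconds
RETRIES_RANGE = (0, 100)
# Error types that mark an invocation as failed, per the Retries note.
FAILURE_ERROR_TYPES = {"UnhandledInvocationError", "HandledInvocationError"}


def validate_trigger_settings(interval=60, retries=3, invocation_parameters=""):
    """Return a list of problems with the proposed trigger settings (empty if valid)."""
    problems = []
    if not INTERVAL_RANGE[0] <= interval <= INTERVAL_RANGE[1]:
        problems.append("Trigger Interval must be between 3 and 600 seconds")
    if not RETRIES_RANGE[0] <= retries <= RETRIES_RANGE[1]:
        problems.append("Retries must be between 0 and 100")
    if invocation_parameters:
        try:
            json.loads(invocation_parameters)
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            problems.append("Invocation Parameters must be a JSON string")
    return problems


def is_successful_trigger(status, headers):
    """Success rule from the Retries note: HTTP 200 and no invocation error type."""
    return status == 200 and headers.get("X-Fc-Error-Type") not in FAILURE_ERROR_TYPES
```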

After the trigger is created, it is displayed on the Triggers tab. To modify or delete a trigger, see Trigger management.

Step 2: Configure the input parameter of the function

On the Code tab of the function details page, click the icon next to Test Function and select Configure Test Parameters from the drop-down list. In the Configure Test Parameters panel, click the Create New Test Event or Modify Existing Test Event tab, enter the event name and event content, and then click OK.

An event is used to invoke a function in Function Compute. The parameters of the event are used as the input parameters of the function. The following example shows the format of the event content:

```json
{
  "parameter": {},
  "source": {
    "endpoint": "http://cn-hangzhou-intranet.log.aliyuncs.com",
    "projectName": "aliyun-fc-cn-hangzhou-2238f0df-a742-524f-9f90-976ba457****",
    "logstoreName": "function-log",
    "shardId": 0,
    "beginCursor": "MTUyOTQ4MDIwOTY1NTk3ODQ2Mw==",
    "endCursor": "MTUyOTQ4MDIwOTY1NTk3ODQ2NA=="
  },
  "jobName": "1f7043ced683de1a4e3d8d70b5a412843d81****",
  "taskId": "c2691505-38da-4d1b-998a-f1d4bb8c****",
  "cursorTime": 1529486425
}
```

| Parameter | Description | Example |
| --- | --- | --- |
| parameter | The value of the Invocation Parameters parameter that you configure when you create the trigger. | N/A |
| source | The log block information that you want the function to read from Simple Log Service. endpoint: the endpoint of the Alibaba Cloud region in which the Simple Log Service project resides. projectName: the name of the Simple Log Service project. logstoreName: the name of the Logstore. shardId: the ID of a specific shard in the Logstore. beginCursor: the offset from which data consumption starts. endCursor: the offset at which data consumption ends. | {"endpoint": "http://cn-hangzhou-intranet.log.aliyuncs.com", "projectName": "aliyun-fc-cn-hangzhou-2238f0df-a742-524f-9f90-976ba457****", "logstoreName": "function-log", "shardId": 0, "beginCursor": "MTUyOTQ4MDIwOTY1NTk3ODQ2Mw==", "endCursor": "MTUyOTQ4MDIwOTY1NTk3ODQ2NA=="} |
| jobName | The name of an ETL job in Simple Log Service. Each Simple Log Service trigger corresponds to an ETL job in Simple Log Service. | 1f7043ced683de1a4e3d8d70b5a412843d81**** |
| taskId | For an ETL job, the taskId parameter specifies the identifier of a function invocation. | c2691505-38da-4d1b-998a-f1d4bb8c**** |
| cursorTime | The UNIX timestamp of the time when the last log arrives at Simple Log Service. Unit: seconds. | 1529486425 |
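Inside a function, it can be convenient to turn this event into typed objects instead of indexing nested dictionaries. The following sketch uses only the standard library; the class and function names are hypothetical, and the field mapping follows the table above.

```python
import json
from dataclasses import dataclass


@dataclass
class Source:
    endpoint: str
    project_name: str
    logstore_name: str
    shard_id: int
    begin_cursor: str
    end_cursor: str


@dataclass
class TriggerEvent:
    parameter: dict
    source: Source
    job_name: str
    task_id: str
    cursor_time: int  # UNIX timestamp, in seconds


def parse_trigger_event(raw):
    """Parse a Simple Log Service trigger event (bytes or str) into typed objects."""
    if isinstance(raw, bytes):
        raw = raw.decode("utf-8")
    obj = json.loads(raw)
    s = obj["source"]
    source = Source(
        endpoint=s["endpoint"],
        project_name=s["projectName"],
        logstore_name=s["logstoreName"],
        shard_id=s["shardId"],
        begin_cursor=s["beginCursor"],
        end_cursor=s["endCursor"],
    )
    return TriggerEvent(
        parameter=obj.get("parameter") or {},
        source=source,
        job_name=obj["jobName"],
        task_id=obj["taskId"],
        cursor_time=obj["cursorTime"],
    )
```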

Step 3: Write and test function code

After you create the Simple Log Service trigger, you can write and test function code to verify that it works as expected. The function is invoked when Simple Log Service collects incremental logs. In this example, the function then reads the corresponding logs and writes them to the function logs.

On the function details page, click the Code tab, enter function code in the code editor, and then click Deploy.

In this example, the function code is written in Python. The following sample code shows how to read the logs that triggered the invocation from the source Logstore. The code obtains the AccessKey ID, AccessKey secret, and security token from context.credentials.

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""
The sample code is used to implement the following features:
* Parse Simple Log Service events from the event parameter.
* Initialize the Simple Log Service client based on the preceding information.
* Pull real-time log data from the source Logstore.
"""
import json
import logging

from aliyun.log import LogClient

logger = logging.getLogger()


def handler(event, context):
    # Obtain the temporary AccessKey pair and security token from context.credentials.
    print("The content in context entity is: \n")
    print(context)
    creds = context.credentials
    access_key_id = creds.access_key_id
    access_key_secret = creds.access_key_secret
    security_token = creds.security_token

    # The event arrives as bytes; decode it and parse the JSON into a dict.
    event_obj = json.loads(event.decode())
    print("The content in event entity is: \n")
    print(event_obj)

    # Obtain the project name, Logstore name, endpoint, begin cursor, end cursor,
    # and shard ID from event.source.
    source = event_obj['source']
    log_project = source['projectName']
    log_store = source['logstoreName']
    endpoint = source['endpoint']
    begin_cursor = source['beginCursor']
    end_cursor = source['endCursor']
    shard_id = source['shardId']

    # Initialize the Simple Log Service client.
    client = LogClient(endpoint=endpoint, accessKeyId=access_key_id,
                       accessKey=access_key_secret, securityToken=security_token)

    # Read logs from the source Logstore within the cursor range [begin_cursor, end_cursor).
    # In this example, the range contains all the logs that triggered the invocation.
    while True:
        response = client.pull_logs(project_name=log_project, logstore_name=log_store,
                                    shard_id=shard_id, cursor=begin_cursor, count=100,
                                    end_cursor=end_cursor, compress=False)
        log_group_cnt = response.get_loggroup_count()
        if log_group_cnt == 0:
            break
        logger.info("get %d log group from %s" % (log_group_cnt, log_store))
        logger.info(response.get_loggroup_list())
        begin_cursor = response.get_next_cursor()

    return 'success'
```

Click Test Function.

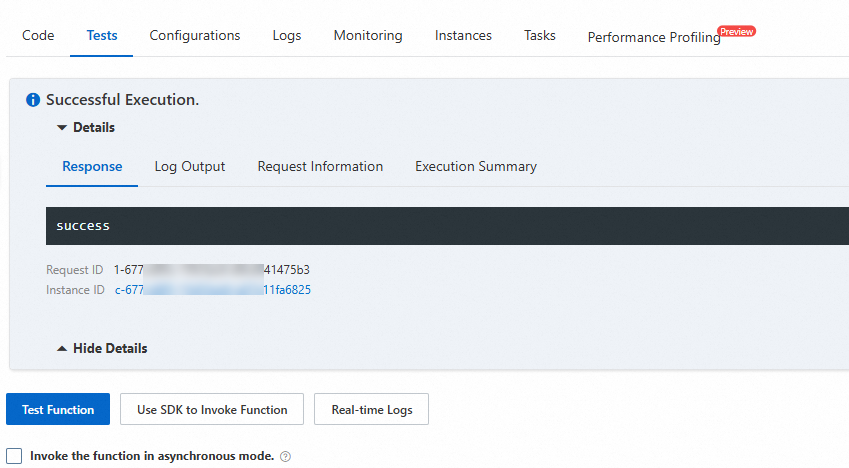

After the function is executed, you can view the results on the Code tab.

(Optional) Step 4: Test the function by using a simulated event

On the Code tab of the function details page, click the icon next to Test Function and select Configure Test Parameters from the drop-down list. In the Configure Test Parameters panel, click the Create New Test Event or Modify Existing Test Event tab, enter the event name and event content, and then click OK. When you create a test event, select Simple Log Service as the event template. For more information about how to configure test data, see event.

After you configure the simulated event, click Test Function.

After the function is executed, you can view the result on the Tests tab.
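If you want to exercise a handler locally before you configure a test event in the console, you can build an event with the same shape as the Simple Log Service template. The helper below is a hypothetical sketch; the jobName and taskId values are placeholders, because Function Compute generates these fields for real invocations.

```python
import json


def make_test_event(project, logstore, shard_id, begin_cursor, end_cursor,
                    endpoint="http://cn-hangzhou-intranet.log.aliyuncs.com",
                    parameter=None, cursor_time=0):
    """Build a simulated Simple Log Service trigger event, encoded as bytes."""
    event = {
        "parameter": parameter if parameter is not None else {},
        "source": {
            "endpoint": endpoint,
            "projectName": project,
            "logstoreName": logstore,
            "shardId": shard_id,
            "beginCursor": begin_cursor,
            "endCursor": end_cursor,
        },
        # Placeholders: real values are generated by Function Compute.
        "jobName": "local-test-job",
        "taskId": "local-test-task",
        "cursorTime": cursor_time,
    }
    return json.dumps(event).encode("utf-8")
```

The result is a bytes payload of the kind that the sample handler decodes with event.decode(); a local call would still need a context object that exposes credentials.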

Input parameter description

event

When the Simple Log Service trigger fires, it sends the event data to the runtime. The runtime then passes this data to the function's event input parameter in JSON format. The JSON format is as follows:

```json
{
  "parameter": {},
  "source": {
    "endpoint": "http://cn-hangzhou-intranet.log.aliyuncs.com",
    "projectName": "fc-test-project",
    "logstoreName": "fc-test-logstore",
    "shardId": 0,
    "beginCursor": "MTUyOTQ4MDIwOTY1NTk3ODQ2Mw==",
    "endCursor": "MTUyOTQ4MDIwOTY1NTk3ODQ2NA=="
  },
  "jobName": "1f7043ced683de1a4e3d8d70b5a412843d81****",
  "taskId": "c2691505-38da-4d1b-998a-f1d4bb8c****",
  "cursorTime": 1529486425
}
```

The following table describes the parameters.

| Parameter | Description |
| --- | --- |
| parameter | The value of the invocation parameters that you entered when you configured the trigger. |
| source | The log block information that you want the function to read. endpoint: the endpoint of the Alibaba Cloud region in which the log project resides. projectName: the name of the log project. logstoreName: the name of the Logstore that the function consumes; the trigger periodically sends data from this Logstore to the function for custom processing. shardId: a specific shard in the Logstore. beginCursor: the position where data consumption starts. endCursor: the position where data consumption stops. Note: When you debug the function, you can call the GetCursor - Query cursor by time API to obtain beginCursor and endCursor, and construct a function event for testing based on the preceding example. |
| jobName | The name of the Simple Log Service ETL job. The Simple Log Service trigger configured for the function corresponds to a Simple Log Service ETL job. This parameter is automatically generated by Function Compute. You do not need to configure it. |
| taskId | For an ETL job, taskId is a deterministic function invocation identifier. This parameter is automatically generated by Function Compute. You do not need to configure it. |
| cursorTime | The UNIX timestamp of the time when the last log arrives at Simple Log Service. Unit: seconds. |
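The GetCursor approach for building a test event can be sketched with the Python SDK. This assumes an aliyun.log.LogClient-style client whose get_cursor(project, logstore, shard_id, start_time) method wraps the GetCursor API and returns a response with a get_cursor() method. The helper name and the choice of a [from_time, to_time) window are illustrative.

```python
def cursors_for_time_range(client, project, logstore, shard_id, from_time, to_time):
    """Hypothetical helper: return (begin_cursor, end_cursor) for one shard.

    from_time and to_time are UNIX timestamps in seconds. `client` is assumed to
    behave like aliyun.log.LogClient, whose get_cursor() queries a cursor by time.
    """
    begin = client.get_cursor(project, logstore, shard_id, from_time).get_cursor()
    end = client.get_cursor(project, logstore, shard_id, to_time).get_cursor()
    return begin, end
```

The two cursors can then be placed in the beginCursor and endCursor fields of a test event that follows the preceding JSON example.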

context

When Function Compute executes your function, it passes a context object to the function's context input parameter. This object contains information about the invocation, service, function, and execution environment. This topic describes how to obtain key information through context.credentials. For more information about other fields, see Context.
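As in the sample function code, the credentials in context.credentials include a security token, which must be passed along with the AccessKey pair. The following hypothetical helper only maps the event source and context.credentials to the keyword arguments accepted by aliyun.log.LogClient; it does not create the client itself.

```python
def log_client_kwargs(source, credentials):
    """Hypothetical helper: build LogClient keyword arguments.

    source: the "source" object of the trigger event (a dict).
    credentials: an object with access_key_id, access_key_secret, and
    security_token attributes, such as context.credentials.
    """
    return {
        "endpoint": source["endpoint"],
        "accessKeyId": credentials.access_key_id,
        "accessKey": credentials.access_key_secret,
        # The security token is required because the credentials are temporary.
        "securityToken": credentials.security_token,
    }
```

In a handler, this would be used as LogClient(**log_client_kwargs(event_obj['source'], context.credentials)).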

What to do next

Query trigger logs

You can create indexes for your Logstore in which trigger logs are stored and view the execution results of the trigger. For more information, see Create indexes.

View the operational logs of the function

You can use a CLI to view the detailed information about function calls. For more information, see View function invocation logs.

FAQ

If new logs are generated but your Simple Log Service trigger does not trigger function execution, see What do I do if a trigger cannot trigger function execution?

Each shard of a Logstore triggers the function separately when new data is written to it. Therefore, the trigger frequency that you observe for a Logstore is the total number of invocations across all of its shards. In addition, if the trigger is delayed, it catches up on the backlog, which may shorten the interval between invocations. For more information, see Why is the execution frequency of a Simple Log Service trigger higher than expected?
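As a rough estimate, assuming that every shard receives data continuously and no catch-up occurs, the expected number of invocations for a Logstore is the number of active shards multiplied by the number of trigger intervals in the period. The helper name is hypothetical.

```python
def expected_invocations(active_shards, interval_seconds, period_seconds=3600):
    """Estimate steady-state invocations for a Logstore over a period.

    Each active shard is triggered independently once per interval, so the
    Logstore-level count is the per-shard count multiplied by the number of
    shards. Catch-up after a delay can push the real number higher.
    """
    return active_shards * (period_seconds // interval_seconds)
```

For example, a Logstore with 4 active shards and a 60-second interval yields about 240 invocations per hour, not 60.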