Serverless ApsaraDB RDS for PostgreSQL instances scale in real time to match changing business requirements. You can scale computing resources quickly and independently to adapt to fluctuating workloads, which prevents inefficient use of resources and reduces O&M costs. This topic describes the features and architecture of serverless RDS instances and how to use them.

Introduction

ApsaraDB RDS for PostgreSQL Serverless instances provide real-time elasticity for CPU and memory resources. They are a new type of ApsaraDB RDS for PostgreSQL offering that is based on a cloud disk architecture. These instances provide vertical resource isolation for network resources, namespaces, and storage, and also offer pay-as-you-go billing for compute resources. They are cost-effective, easy to use, and flexible. This lets you quickly and independently scale compute resources up or down to handle fluctuating business workloads. This rapid response to business changes helps optimize costs and improve enterprise efficiency.

A serverless RDS instance is billed based on RDS Capacity Units (RCUs). The performance of an RCU is equivalent to the performance of an RDS instance that provides up to 1 core and 2 GB of memory. A serverless RDS instance automatically adjusts the number of RCUs within the range that you specify based on your workloads.
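To make the RCU definition concrete, the following minimal Python sketch converts an RCU count into the upper bound of compute resources it represents and clamps a requested value to a configured range. The per-RCU figures (up to 1 core and 2 GB of memory) come from the text above. The names `RcuRange`, `clamp`, and `capacity_upper_bound` are hypothetical and are not part of any ApsaraDB RDS API, and the rule that the lower limit must be greater than zero is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

CORES_PER_RCU = 1      # from the text: one RCU provides up to 1 core
MEMORY_GB_PER_RCU = 2  # from the text: one RCU provides up to 2 GB of memory


@dataclass(frozen=True)
class RcuRange:
    """Hypothetical container for the RCU scaling range that you specify."""
    min_rcu: float
    max_rcu: float

    def __post_init__(self) -> None:
        # Assumption for this sketch: the lower limit is positive and
        # does not exceed the upper limit.
        if not 0 < self.min_rcu <= self.max_rcu:
            raise ValueError("require 0 < min_rcu <= max_rcu")

    def clamp(self, requested_rcu: float) -> float:
        """Keep a requested RCU count inside the configured range."""
        return max(self.min_rcu, min(self.max_rcu, requested_rcu))


def capacity_upper_bound(rcu: float) -> Tuple[float, float]:
    """Return the (cores, memory in GB) upper bound for an RCU count."""
    return rcu * CORES_PER_RCU, rcu * MEMORY_GB_PER_RCU


if __name__ == "__main__":
    rcu_range = RcuRange(min_rcu=0.5, max_rcu=8)
    effective = rcu_range.clamp(12)  # a request above the range is capped
    print(effective, capacity_upper_bound(effective))
```

In this sketch, a workload that would need 12 RCUs is capped at the upper limit of the range, which mirrors the statement that the instance adjusts RCUs only within the range that you specify.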

The maximum number of connections to a serverless RDS instance is fixed at 2,400. This value cannot be modified and does not vary based on the number of RCUs.

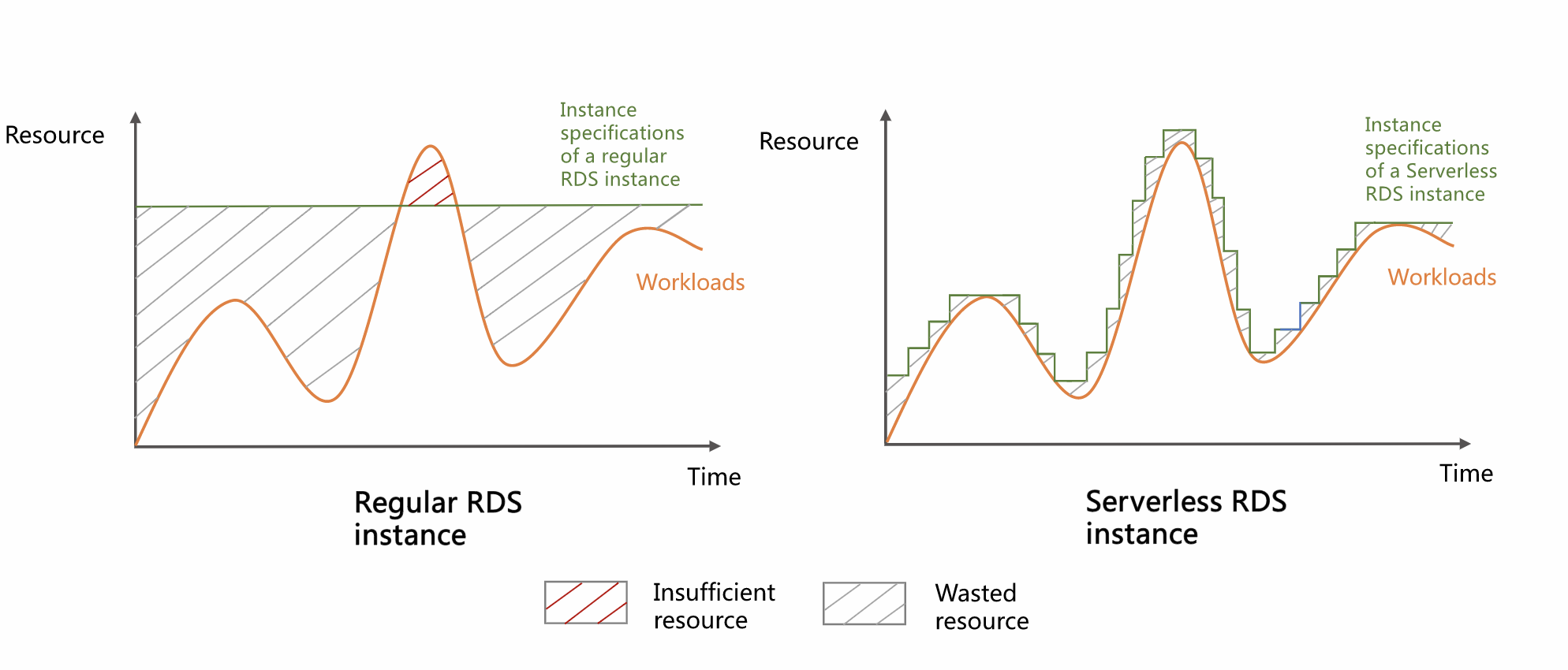

The following figure shows how resource usage and specifications change for regular instances and Serverless instances in a scenario with fluctuating workloads:

Referring to the preceding figure, we can obtain the following conclusions:

Regular RDS instance: Low resource utilization during off-peak hours translates into wasted costs, while insufficient resources during peak hours affect service performance.

Serverless RDS instance:

Its compute resources adjust to business demands in real time. This minimizes resource waste, improves resource utilization, and reduces resource costs.

Resources are scaled to match the workload requirements during peak hours, which ensures performance and service stability.

You are charged based on the actual volume of resources that are used to run your workloads. This significantly reduces costs.

No human intervention is required. This improves O&M efficiency and reduces costs for O&M administrators and developers.

The automatic start and stop feature is supported for the serverless RDS instance. If no connections are established to the serverless RDS instance, the instance is automatically suspended to release computing resources and reduce costs. If a connection is established to the serverless RDS instance, the instance automatically resumes.

The serverless RDS instance supports auto scaling and is optimized for high-throughput write operations and high-concurrency processing operations. This makes the instance suitable for scenarios that involve large amounts of data and large traffic fluctuations.

Benefits

Low cost: Serverless RDS instances do not rely on other infrastructure and services and can provide stable and efficient data storage and access services. This deployment model is ideal for startups and scenarios in which you want to run workloads immediately after resources are created. You are charged only for the resources that you use based on the pay-as-you-go billing method.

Large storage capacity: You can purchase a storage capacity of up to 32 TB for a serverless RDS instance. The system automatically expands the storage capacity based on the data volume of the RDS instance. This effectively prevents your services from being adversely affected by insufficient storage.

Auto scaling of computing resources: The computing resources that are required for read and write operations can be automatically scaled without human intervention. This greatly reduces O&M costs and risks of human errors.

Fully managed and maintenance-free services: Serverless RDS instances are fully managed by Alibaba Cloud, allowing you to focus on developing your applications instead of O&M operations, such as system deployment, scaling, and alert handling. The O&M operations are performed in the background and are transparent at the service layer.

Scenarios

Scenarios that require infrequent access to underlying databases, such as databases in development and testing environments.

Software as a service (SaaS) scenarios, such as website building of small and medium-sized enterprises

Individual developers

Educational scenarios, such as teaching and student experiments

Scenarios that handle inconsistent and unpredictable workloads, such as IoT and edge computing

Scenarios that require fully managed or maintenance-free services

Scenarios in which services are changing or unpredictable

Scenarios in which intermittent scheduled tasks are involved

Billing rules

Usage

Change the scaling range of RCUs

You can adjust the scaling range of RCUs as needed. You can specify the upper and lower limits of RCUs to optimize resource configurations.

Configure scheduled tasks to adjust the number of RCUs for a serverless RDS instance

If you have strict stability requirements for a specific period of time, you can configure scheduled tasks to adjust the number of RCUs for the serverless RDS instance and ensure stability during that period.

Change the scaling policy of a serverless RDS instance

The default scaling policy of a serverless RDS instance is Do Not Execute Forcefully, which helps prevent potential service interruptions. If you require higher performance rather than continuous availability, you can manually change the scaling policy to Execute Forcefully.

Configure the automatic start and stop feature

If no connections are established to a serverless RDS instance within 10 minutes after you enable the automatic start and stop feature, the instance is automatically suspended. In this case, no RCUs are used, and no computing fees are generated. If a connection is established to the serverless RDS instance, the serverless RDS instance automatically resumes, and computing fees are generated.
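The effect of automatic suspension on compute usage can be sketched as follows. This is a simplified model, not the actual billing implementation: it assumes one sample per minute, treats the instance as suspended once more than 10 consecutive minutes have passed without connections, and counts zero RCUs while suspended. The function name `billable_rcu_minutes` and the sample format are hypothetical.

```python
from typing import Iterable, Tuple

IDLE_TIMEOUT_MINUTES = 10  # from the text: suspended after 10 minutes without connections


def billable_rcu_minutes(
    samples: Iterable[Tuple[int, float]],
    idle_timeout_minutes: int = IDLE_TIMEOUT_MINUTES,
) -> float:
    """Sum RCU usage over per-minute samples of (open_connections, rcu).

    Assumption for this sketch: the minutes that lead up to the idle
    timeout still consume RCUs, and every idle minute after the timeout
    consumes none until a connection appears again.
    """
    idle_minutes = 0
    total = 0.0
    for connections, rcu in samples:
        if connections > 0:
            idle_minutes = 0   # a connection resumes the instance
        else:
            idle_minutes += 1  # one more minute without connections
        suspended = idle_minutes > idle_timeout_minutes
        if not suspended:
            total += rcu
    return total


if __name__ == "__main__":
    timeline = (
        [(3, 1.0)] * 5     # 5 active minutes at 1 RCU
        + [(0, 1.0)] * 30  # 30 idle minutes: 10 counted, then suspended
        + [(2, 2.0)] * 5   # connection returns, 5 minutes at 2 RCUs
    )
    print(billable_rcu_minutes(timeline))
```

With this timeline, only the first 10 idle minutes count toward usage. The remaining 20 idle minutes consume no RCUs, which corresponds to the period in which no computing fees are generated.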

FAQ

Q: Why do I receive Cloud Monitor alerts about the CPU or memory of my Serverless instance?

A: This usually occurs because of pre-configured global alert rules. If an alert rule for metrics such as CPU or memory already exists for all ApsaraDB RDS for PostgreSQL instances (or for a specific resource group), a newly created Serverless instance automatically inherits these rules. Although a Serverless instance can scale automatically, the system still triggers and sends an alert notification when its resource usage briefly reaches the alert threshold.

To manage these alerts, log on to the Cloud Monitor console and use the advanced filter feature to view and manage the alert rules that are enabled for the target Serverless instance.

Q: Why does my instance fail to scale out (increase RCUs) in time under high load, causing the service to become unresponsive?

A: An instance may fail to scale out (increase RCUs) in time under high load for two main reasons:

RCU limit reached: The instance's compute resources have reached the configured maximum RCU value. In this case, evaluate your peak business requirements and increase the maximum RCU limit as needed.

Scaling latency cannot match sudden traffic spikes: The elastic scaling mechanism for a serverless instance requires time to respond. A scale-out is typically triggered when CPU or memory usage exceeds 80%, and the process takes about 5 seconds. If service traffic surges dramatically in a short period (for example, less than 5 seconds), the instance may exhaust its resources and become unresponsive before the scale-out can be triggered and completed. For business scenarios with sudden, ultra-high concurrency, we recommend using regular instances with pre-provisioned, fixed resources to ensure service stability.

Q: Why does the instance not automatically scale in (decrease RCUs) after the business load decreases?

A: A Serverless instance scales in (decreases RCUs) only when both of the following conditions are met: CPU usage is below 50% and memory usage is below 50%.

A common reason for not scaling in is that the database's page cache occupies a large amount of memory. Even after the business load decreases, PostgreSQL may keep data in memory to optimize future query performance. This can keep memory usage above 50%, which prevents the scale-in conditions from being met. To trigger a scale-in immediately, you can restart the instance to force the release of the page cache and reduce memory usage.
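The thresholds described in the preceding answers can be combined into a small decision function. The following Python sketch is an illustration only and does not reproduce the service's internal logic: it uses the stated thresholds (scale out when CPU or memory usage exceeds 80%, scale in only when both are below 50%) and the configured RCU range. The names `Action` and `decide` are hypothetical.

```python
from enum import Enum

SCALE_OUT_THRESHOLD = 80.0  # from the text: CPU or memory usage above 80%
SCALE_IN_THRESHOLD = 50.0   # from the text: CPU and memory usage both below 50%


class Action(Enum):
    SCALE_OUT = "scale_out"
    SCALE_IN = "scale_in"
    HOLD = "hold"


def decide(cpu_pct: float, mem_pct: float,
           current_rcu: float, min_rcu: float, max_rcu: float) -> Action:
    """Pick a scaling action from usage percentages and the RCU range."""
    if cpu_pct > SCALE_OUT_THRESHOLD or mem_pct > SCALE_OUT_THRESHOLD:
        # At the configured maximum, no further scale-out is possible.
        return Action.SCALE_OUT if current_rcu < max_rcu else Action.HOLD
    if cpu_pct < SCALE_IN_THRESHOLD and mem_pct < SCALE_IN_THRESHOLD:
        # At the configured minimum, no further scale-in is possible.
        return Action.SCALE_IN if current_rcu > min_rcu else Action.HOLD
    return Action.HOLD


if __name__ == "__main__":
    # Low CPU but memory held above 50% by cached data: no scale-in.
    print(decide(cpu_pct=20, mem_pct=65, current_rcu=2, min_rcu=1, max_rcu=4))
    # High CPU at the maximum RCU value: the limit must be raised first.
    print(decide(cpu_pct=85, mem_pct=40, current_rcu=4, min_rcu=1, max_rcu=4))
```

The first call models the page cache case: CPU usage is low, but memory usage stays above 50%, so the scale-in condition is not met. The second call models an instance that has already reached its maximum RCU value.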

Q: How do I start an instance that is paused and has no connections?

A: In the console, disable the automatic start and stop feature for the instance. The instance then starts automatically.