This topic describes how you are billed for Deep Learning Containers (DLC) of Platform for AI (PAI).

Note

The pricing information in this topic is only for reference. You can view the actual prices in your billing statements.

Billable resources

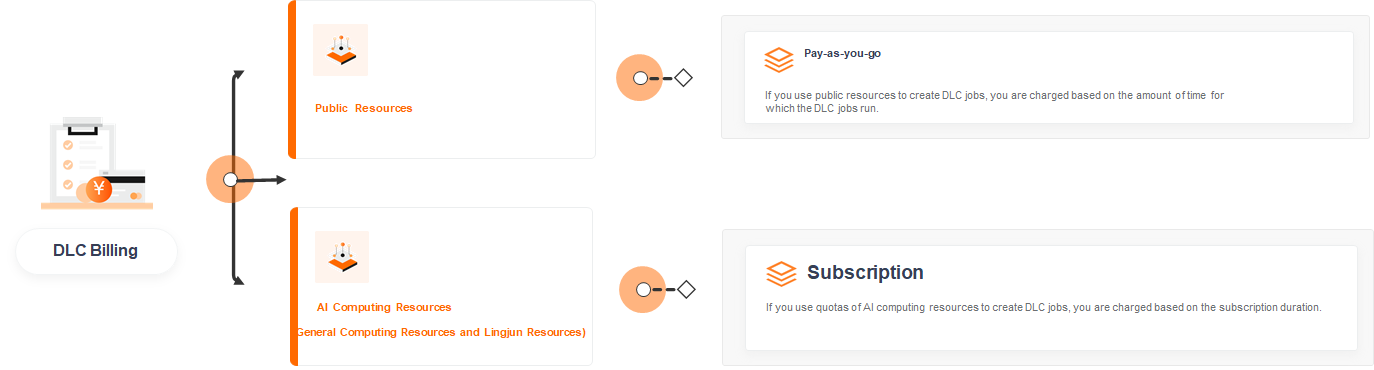

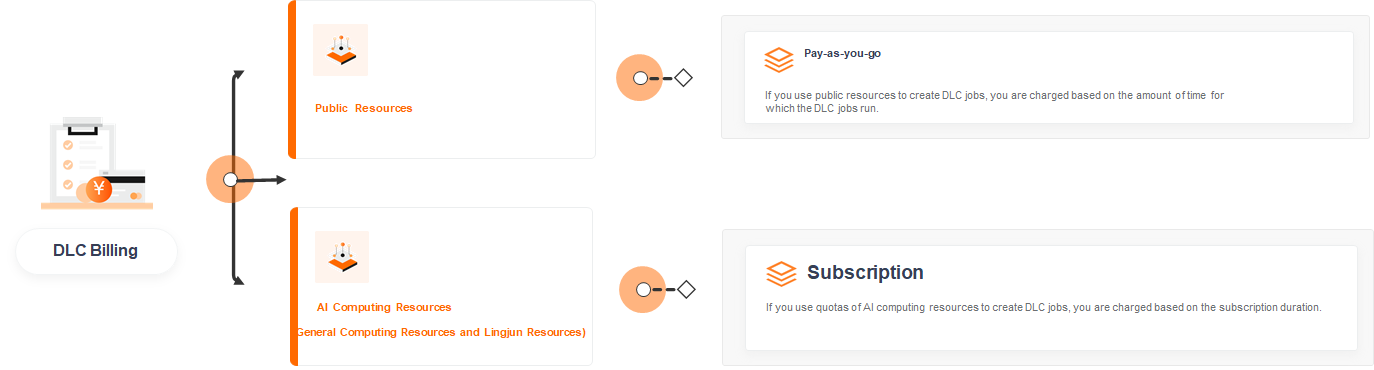

The following figure shows the billable resources of DLC.

Billing methods

The following table describes the billing methods of DLC.

Billing method | Billable resource | Billable item | Billing rule | How to stop billing |

Pay-as-you-go | Public resources | The amount of time for which DLC jobs run by using public resources. | If you run DLC jobs by using public resources, you are charged based on the usage duration. | |

Subscription | AI computing resources (general computing resources and Lingjun resources) | For more information, see Billing of AI computing resources. | For more information, see Billing of AI computing resources. | N/A |

Public resources

Pay-as-you-go

To use the pay-as-you-go billing method, you must use public resources to create DLC jobs.

Resource | Billing formula | Unit price | Billing duration | Scaling | Usage notes |

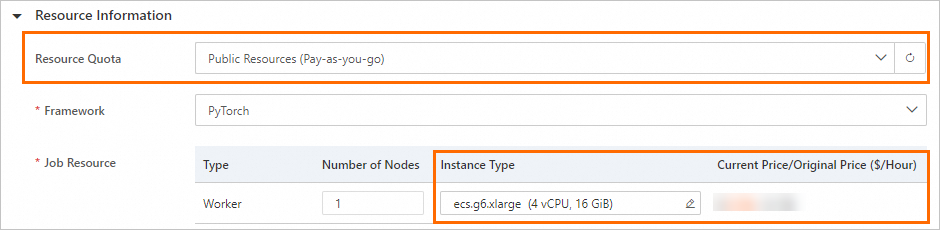

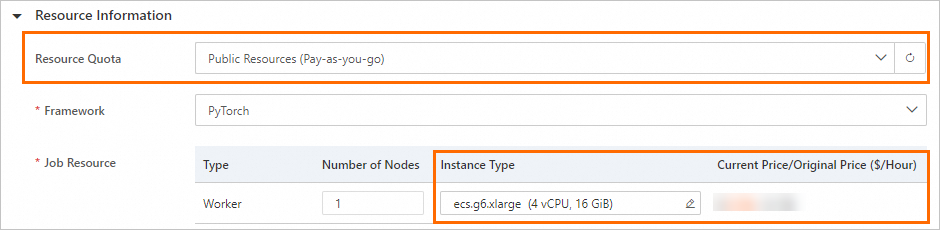

Public resources | Bill amount = Number of nodes × (Unit price/60) × Usage duration (minutes)

| The unit prices may vary based on the instance type and region. To view the unit prices, go to the Create Job page in the PAI console. For more information, see Submit a job in the console. In the Resource Information section, set the Source parameter to Public Resources and select an instance type. You can view the unit price of the instance in the Current Price/Original Price ($/Hour) column. | Running duration of DLC jobs. | N/A | None |
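The billing formula for public resources can be sketched in a few lines of Python. This is an illustration only, not an official pricing tool. The function name `dlc_public_bill` and the sample price of 1.20 per hour are hypothetical. The sketch assumes that the unit price is an hourly price, as suggested by the ($/Hour) column in the console. It does not round the result, because this topic does not specify a rounding rule.

```python
from decimal import Decimal


def dlc_public_bill(nodes: int, unit_price_per_hour: str, minutes: int) -> Decimal:
    """Estimate a pay-as-you-go bill for a DLC job on public resources.

    Implements: Bill amount = Number of nodes x (Unit price / 60) x Usage duration (minutes).
    The unit price is assumed to be an hourly price (hypothetical input,
    read from the console for the chosen instance type and region).
    """
    if nodes < 0 or minutes < 0:
        raise ValueError("nodes and minutes must be non-negative")
    price = Decimal(unit_price_per_hour)
    # Multiply first and divide last so that exact inputs stay exact where possible.
    return nodes * price * minutes / 60


if __name__ == "__main__":
    # Hypothetical example: 2 nodes, 1.20 per hour per node, job runs for 90 minutes.
    print(dlc_public_bill(nodes=2, unit_price_per_hour="1.20", minutes=90))
```

With these hypothetical inputs, the estimate is 2 × (1.20/60) × 90 = 3.6. The actual amount depends on the unit price shown in the console and on how the billing statement rounds the result.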

AI computing resources

Subscription

AI computing resources include general computing resources and Lingjun resources. AI computing resources support only the subscription billing method. You can add resource quotas for purchased AI computing resources and use the quotas to create DLC jobs. For more information, see Billing of AI computing resources.

Billing examples

Important

The following example is only for reference. You can view the actual prices in the console or on the buy page.

Public resources

AI computing resources

For billing examples of AI computing resources, see Billing of AI computing resources.

Appendix: Public resource group instance types

The following table describes some of the instance types that can be used to create DLC jobs in the public resource group. For a complete list of the supported instance types, go to the DLC page in the PAI console and click Create Job. You can view the supported instance types in the Resource Information section. For more information, see Submit a job in the console. The supported instance types may vary based on the region.

Instance type | Specification | GPU |

ecs.g6.xlarge | 4 vCPUs + 16 GB memory | None |

ecs.c6.large | 2 vCPUs + 4 GB memory | None |

ecs.g6.large | 2 vCPUs + 8 GB memory | None |

ecs.g6.2xlarge | 8 vCPUs + 32 GB memory | None |

ecs.g6.4xlarge | 16 vCPUs + 64 GB memory | None |

ecs.g6.8xlarge | 32 vCPUs + 128 GB memory | None |

ecs.r7.large | 2 vCPUs + 16 GB memory | None |

ecs.r7.xlarge | 4 vCPUs + 32 GB memory | None |

ecs.r7.2xlarge | 8 vCPUs + 64 GB memory | None |

ecs.r7.4xlarge | 16 vCPUs + 128 GB memory | None |

ecs.r7.6xlarge | 24 vCPUs + 192 GB memory | None |

ecs.r7.8xlarge | 32 vCPUs + 256 GB memory | None |

ecs.r7.16xlarge | 64 vCPUs + 512 GB memory | None |

ecs.g5.xlarge | 4 vCPUs + 16 GB memory | None |

ecs.g7.xlarge | 4 vCPUs + 16 GB memory | None |

ecs.g7.2xlarge | 8 vCPUs + 32 GB memory | None |

ecs.g5.2xlarge | 8 vCPUs + 32 GB memory | None |

ecs.g6.3xlarge | 12 vCPUs + 48 GB memory | None |

ecs.g7.3xlarge | 12 vCPUs + 48 GB memory | None |

ecs.g7.4xlarge | 16 vCPUs + 64 GB memory | None |

ecs.r7.3xlarge | 12 vCPUs + 96 GB memory | None |

ecs.c6e.8xlarge | 32 vCPUs + 64 GB memory | None |

ecs.g6.6xlarge | 24 vCPUs + 96 GB memory | None |

ecs.g7.6xlarge | 24 vCPUs + 96 GB memory | None |

ecs.g5.4xlarge | 16 vCPUs + 64 GB memory | None |

ecs.hfc6.8xlarge | 32 vCPUs + 64 GB memory | None |

ecs.g7.8xlarge | 32 vCPUs + 128 GB memory | None |

ecs.hfc6.10xlarge | 40 vCPUs + 96 GB memory | None |

ecs.g6.13xlarge | 52 vCPUs + 192 GB memory | None |

ecs.g5.8xlarge | 32 vCPUs + 128 GB memory | None |

ecs.hfc6.16xlarge | 64 vCPUs + 128 GB memory | None |

ecs.g7.16xlarge | 64 vCPUs + 256 GB memory | None |

ecs.hfc6.20xlarge | 80 vCPUs + 192 GB memory | None |

ecs.g6.26xlarge | 104 vCPUs + 384 GB memory | None |

ecs.g5.16xlarge | 64 vCPUs + 256 GB memory | None |

ecs.r5.8xlarge | 32 vCPUs + 256 GB memory | None |

ecs.re6.13xlarge | 52 vCPUs + 768 GB memory | None |

ecs.re6.26xlarge | 104 vCPUs + 1,536 GB memory | None |

ecs.re6.52xlarge | 208 vCPUs + 3,072 GB memory | None |

ecs.g7.32xlarge | 128 vCPUs + 512 GB memory | None |

ecs.gn7i-c8g1.2xlarge | 8 vCPUs + 30 GB memory | 1 × NVIDIA A10 |

ecs.gn6v-c8g1.2xlarge | 8 vCPUs + 32 GB memory | 1 × NVIDIA V100 |

ecs.gn6e-c12g1.24xlarge | 96 vCPUs + 736 GB memory | 8 × NVIDIA V100 |

ecs.gn6v-c8g1.16xlarge | 64 vCPUs + 256 GB memory | 8 × NVIDIA V100 |

ecs.gn6v-c10g1.20xlarge | 82 vCPUs + 336 GB memory | 8 × NVIDIA V100 |

ecs.gn6e-c12g1.12xlarge | 48 vCPUs + 338 GB memory | 4 × NVIDIA V100 |

ecs.gn6v-c8g1.8xlarge | 32 vCPUs + 128 GB memory | 4 × NVIDIA V100 |

ecs.gn6i-c24g1.24xlarge | 96 vCPUs + 372 GB memory | 4 × NVIDIA T4 |

ecs.gn5-c8g1.4xlarge | 16 vCPUs + 120 GB memory | 2 × NVIDIA P100 |

ecs.gn7i-c32g1.16xlarge | 64 vCPUs + 376 GB memory | 2 × NVIDIA A10 |

ecs.gn6i-c24g1.12xlarge | 48 vCPUs + 186 GB memory | 2 × NVIDIA T4 |

ecs.gn6e-c12g1.3xlarge | 12 vCPUs + 92 GB memory | 1 × NVIDIA V100 |

ecs.gn5-c4g1.xlarge | 4 vCPUs + 30 GB memory | 1 × NVIDIA P100 |

ecs.gn5-c8g1.2xlarge | 8 vCPUs + 60 GB memory | 1 × NVIDIA P100 |

ecs.gn5-c28g1.7xlarge | 28 vCPUs + 112 GB memory | 1 × NVIDIA P100 |

ecs.gn6i-c4g1.xlarge | 4 vCPUs + 15 GB memory | 1 × NVIDIA T4 |

ecs.gn6i-c8g1.2xlarge | 8 vCPUs + 31 GB memory | 1 × NVIDIA T4 |

ecs.gn6i-c16g1.4xlarge | 16 vCPUs + 62 GB memory | 1 × NVIDIA T4 |

ecs.gn6i-c24g1.6xlarge | 24 vCPUs + 93 GB memory | 1 × NVIDIA T4 |

ecs.gn7i-c32g1.8xlarge | 32 vCPUs + 188 GB memory | 1 × NVIDIA A10 |

ecs.gn7e-c16g1.4xlarge | 16 vCPUs + 125 GB memory | 1 × GU50 |

ecs.gn7-c12g1.3xlarge | 12 vCPUs + 95 GB memory | 1 × GU50 |

ecs.gn7i-c16g1.4xlarge | 16 vCPUs + 60 GB memory | 1 × NVIDIA A10 |

ecs.gn7-c13g1.26xlarge | 104 vCPUs + 760 GB memory | 8 × GU50 |

ecs.ebmgn7e.32xlarge | 128 vCPUs + 1,024 GB memory | 8 × GU50 |

ecs.gn7i-c32g1.32xlarge | 128 vCPUs + 752 GB memory | 4 × NVIDIA A10 |

ecs.gn7-c13g1.13xlarge | 52 vCPUs + 380 GB memory | 4 × GU50 |

ecs.gn7s-c32g1.32xlarge | 128 vCPUs + 1,000 GB memory | 4 × NVIDIA A30 |

ecs.gn7s-c56g1.14xlarge | 56 vCPUs + 440 GB memory | 1 × NVIDIA A30 |

ecs.gn7s-c48g1.12xlarge | 48 vCPUs + 380 GB memory | 1 × NVIDIA A30 |

ecs.gn7s-c16g1.4xlarge | 16 vCPUs + 120 GB memory | 1 × NVIDIA A30 |

ecs.gn7s-c8g1.2xlarge | 8 vCPUs + 60 GB memory | 1 × NVIDIA A30 |

ecs.gn7s-c32g1.8xlarge | 32 vCPUs + 250 GB memory | 1 × NVIDIA A30 |