Optimized prompts allow large language models (LLMs) to generate results that are more consistent with your expectations. OpenSearch LLM-Based Conversational Search Edition supports custom prompt templates. You can create dedicated prompt templates based on your business scenarios. This topic describes how to create and manage custom prompt templates.

Create a prompt template

The system provides a default prompt template. If the default prompt template is used, the LLM answers questions by using only the context information. The LLM does not use public content to answer questions if no results are found in the context.

To create a prompt template, perform the following steps:

1. On the details page of an OpenSearch LLM-Based Conversational Search Edition instance, choose Configuration Center > Prompt Management.

2. On the Prompt Management page, click Create Template.

3. In the Create Prompt Template panel, configure the Prompt Template Name, Template Overview, and message parameters by following the on-screen instructions, and click OK.

Parameters

Prompt Template Name: required. The name of the prompt template. The name is unique and must be 1 to 30 characters in length. It must start with a letter and can contain letters, digits, and underscores (_).

Template Overview: optional. The description of the prompt template. The template description must be 1 to 30 characters in length and can contain underscores (_) and hyphens (-). It must start with a letter or digit.
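The naming rule for Prompt Template Name can be checked before you submit the form. The following is a minimal sketch, not the console's actual validation logic. The function name is hypothetical, and it assumes that "letters" means ASCII letters, which the rule does not state. The uniqueness requirement is not covered because it depends on the templates that already exist in the instance.

```python
import re

# Assumption: "letters" means ASCII letters only.
# One leading letter plus up to 29 more characters gives a length of 1 to 30.
_TEMPLATE_NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,29}")


def is_valid_template_name(name: str) -> bool:
    """Return True if name starts with a letter, contains only letters,
    digits, and underscores, and is 1 to 30 characters in length."""
    return _TEMPLATE_NAME_RE.fullmatch(name) is not None
```

For example, under these assumptions a name such as faq_v2 passes, whereas 2faq (starts with a digit) and my-template (contains a hyphen) do not.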

message: the list of messages in the form of role-content pairs. You can click the plus sign (+) to add more role-content pairs. Valid values of the role field include system, user, and assistant. The content field is required. The value of this field cannot contain <# or [#. The value can be up to 1,024 characters in length.

system: the system message, which has the highest priority and is used to instruct the LLM to respond based on the preset features, specifications, or roles. System messages are optional. If you specify a system message, it must be placed at the beginning of the message list.

user and assistant: the user and model messages, which alternate to simulate a real conversation. The message list must end with a user message.
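The message rules above can be sketched as a small pre-submission check. This is an illustration under stated assumptions, not the service's own validation: the function name and the dictionary layout of a message are hypothetical, and the alternation of user and assistant messages is treated as a strict rule even though the description only says that the messages alternate.

```python
MAX_CONTENT_LENGTH = 1024            # maximum length of the content field
FORBIDDEN_SEQUENCES = ("<#", "[#")   # sequences that content cannot contain
VALID_ROLES = ("system", "user", "assistant")


def validate_messages(messages):
    """Return a list of rule violations; an empty list means no violation.

    Each message is assumed to be a dict such as
    {"role": "user", "content": "..."} (hypothetical layout).
    """
    if not messages:
        return ["the message list is empty"]

    errors = []
    for i, msg in enumerate(messages):
        role = msg.get("role")
        content = msg.get("content") or ""
        if role not in VALID_ROLES:
            errors.append(f"message {i}: invalid role {role!r}")
        if role == "system" and i != 0:
            errors.append(f"message {i}: a system message must come first")
        if not content:
            errors.append(f"message {i}: content is required")
        if len(content) > MAX_CONTENT_LENGTH:
            errors.append(f"message {i}: content exceeds {MAX_CONTENT_LENGTH} characters")
        if any(seq in content for seq in FORBIDDEN_SEQUENCES):
            errors.append(f"message {i}: content contains <# or [#")

    # Assumption: user and assistant messages must strictly alternate.
    turns = [m.get("role") for m in messages if m.get("role") in ("user", "assistant")]
    if any(prev == cur for prev, cur in zip(turns, turns[1:])):
        errors.append("user and assistant messages must alternate")

    if messages[-1].get("role") != "user":
        errors.append("the message list must end with a user message")
    return errors
```

A list that consists of one system message followed by one user message returns an empty list. Placing the system message last reports both the ordering violation and the missing final user message.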

Custom variable: You can specify custom variables in the ${parameters.Variable name} format in the prompt. The variable name must be 1 to 64 characters in length and can contain letters, digits, and underscores (_). When you specify a custom variable, you can specify a default value for the variable in the following format:

${(parameters.name)!}: This variable has no default value.

${(parameters.name)!"LLM"}: The default value is LLM.

Note: You can use variables to pass values to the final prompt that is sent to the LLM. If you specify a variable, you must pass the variable value by calling an operation. Configure the variable based on the specified syntax. A variable cannot have the same name as one of the following built-in variables:

{question}: the original question that you enter.

{docs}: the content that is generated by merging documents.
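To make the default-value formats concrete, the following sketch resolves custom variables in a template string locally. It is not the service's rendering engine. The function name is hypothetical, and two behaviors are assumptions: a variable without a default value resolves to an empty string when no value is passed, and variable names use ASCII letters. The syntax resembles the default-value operator (!) of the FreeMarker template language, but whether the service uses FreeMarker is not stated here.

```python
import re

BUILT_IN_VARIABLES = {"question", "docs"}

# Matches ${(parameters.name)!} and ${(parameters.name)!"default"}.
# Group 1 is the variable name; group 2 is the default value, if any.
_CUSTOM_VAR_RE = re.compile(
    r'\$\{\(parameters\.([A-Za-z0-9_]{1,64})\)!(?:"([^"]*)")?\}'
)


def render_custom_variables(template: str, parameters: dict) -> str:
    """Replace custom variables with passed values or their defaults."""

    def replace(match):
        name, default = match.group(1), match.group(2)
        if name in BUILT_IN_VARIABLES:
            raise ValueError(f"{name!r} conflicts with a built-in variable")
        if name in parameters:
            return str(parameters[name])
        # Assumption: no passed value and no default yields an empty string.
        return default if default is not None else ""

    return _CUSTOM_VAR_RE.sub(replace, template)
```

With no value passed, ${(parameters.name)!"LLM"} resolves to LLM in this sketch. Passing a value for name replaces the default. Text that does not match the two formats, such as the built-in variables, is left unchanged.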

Sample message configurations

After you create a prompt template, find the prompt template on the Prompt Management page and click Q&A Test in the Actions column to go to the Q&A Test page. By default, the knowledge base contains the knowledge related to OpenSearch LLM-Based Conversational Search Edition.

Default prompt template

role: system
content: You are a helpful assistant.

role: user
content:

The context contains multiple independent documents, each of which is placed between the <article> and </article> tags. Context:

"${docs}"

Answer the question in a detailed and organized manner based on the preceding context. Make sure that the question is adequately answered based on the context. If the information provided by the context is insufficient to answer the question, return the following message: I cannot answer this question based on the known information. Do not use content that is not included in the context to generate answers. Make sure that each statement in the answer is supported by the corresponding content in the context. Answer the question in English.

Question: "${question}"

Q&A test:

Question: 1+1=

Answer: I cannot answer this question based on the known information. The context information does not contain mathematical operation-related content. Therefore, I cannot provide an answer to the 1+1= question based on the available information.

Question: Provide a brief introduction to OpenSearch LLM-Based Conversational Search Edition.

Answer: OpenSearch LLM-Based Conversational Search Edition is an intelligent OpenSearch service provided by Alibaba Cloud. Based on the built-in LLMs, OpenSearch LLM-Based Conversational Search Edition provides dedicated conversational search services for enterprises. It can help quickly build a conversational search system and automatically generate conversational search results in various formats such as reference images and reference links. The conversational search service is intelligent and of high quality.

Description:

Knowledge that is not contained in the knowledge base: The LLM does not use public content to answer the question if no results are found in the context. The LLM answers the question based on the known information to ensure the reliability and authenticity of the answer.

Knowledge that is contained in the knowledge base: The LLM provides an accurate and reliable answer based on the known information.
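The built-in variables in the default template can be illustrated with a local sketch that fills ${docs} and ${question} by using the Python standard library class string.Template, which happens to accept the same ${name} placeholder syntax. This is not how the service builds the final prompt. The function names are hypothetical, the template text is shortened, and the way documents are merged (each wrapped in <article> tags and joined with line breaks) is an assumption based on the wording of the template.

```python
from string import Template

# Shortened version of the default user message; the full instruction
# text is omitted here for brevity.
USER_TEMPLATE = Template(
    "The context contains multiple independent documents, each of which is "
    "placed between the <article> and </article> tags. Context:\n"
    '"${docs}"\n'
    "Answer the question in a detailed and organized manner based on the "
    "preceding context.\n"
    'Question: "${question}"'
)


def merge_docs(docs):
    """Assumption: merging wraps each document in <article> tags."""
    return "\n".join(f"<article>{doc}</article>" for doc in docs)


def build_user_message(question, docs):
    """Fill the built-in variables of the user message."""
    return USER_TEMPLATE.substitute(docs=merge_docs(docs), question=question)
```

Calling build_user_message("1+1=", ["first document", "second document"]) returns the user message with both documents placed between <article> tags and the question inserted at the end. Note that string.Template does not understand the ${(parameters.name)!"default"} format, so this sketch applies only to templates that contain built-in variables.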

Custom prompt template that allows the LLM to answer questions based on public content if no results are found in the context

role: system
content: You are a helpful assistant.

role: user
content:

The context contains multiple independent documents, each of which is placed between the <article> and </article> tags. Context:

"${docs}"

You have a large amount of knowledge, including knowledge related to mathematical calculation. Answer the following question accurately.

Question: "${question}"

Q&A test:

Question: 1+1=

Answer: 1+1=2.

Question: Provide a brief introduction to OpenSearch LLM-Based Conversational Search Edition.

Answer: OpenSearch LLM-Based Conversational Search Edition is an intelligent OpenSearch service provided by Alibaba Cloud. Based on the built-in LLMs, OpenSearch LLM-Based Conversational Search Edition provides dedicated conversational search services for enterprises. It can help quickly build a conversational search system and automatically generate conversational search results in various formats such as reference images and reference links. The conversational search service is intelligent and of high quality.

Description:

Knowledge that is not contained in the knowledge base: The LLM uses public content to answer the question if no results are found in the context.

Knowledge that is contained in the knowledge base: The LLM provides an accurate and reliable answer based on the known information.

Custom prompt template that contains custom variables in the

${(parameters. Variable name)!"Variable Value"}formatrole

content

Q&A test

Description

system

You are a helpful assistant.

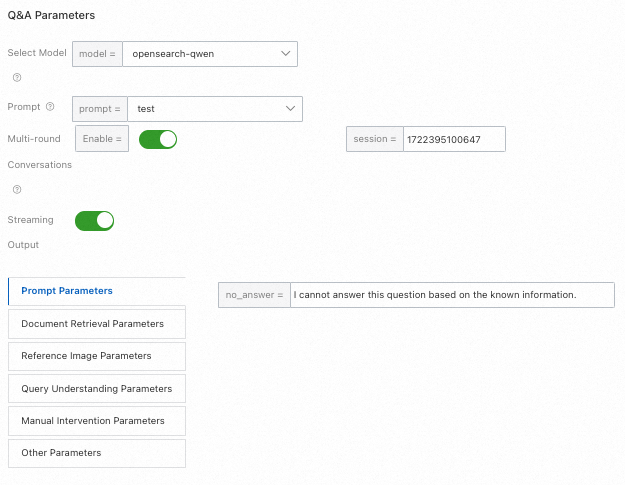

Model: opensearch-qwen

On the Q&A Test page, you can view the custom variables on the Prompt Parameters tab.

Question: 1+1=

Answer: I cannot answer this question based on the known information. The context information does not contain content related to mathematical calculation. It provides only an introduction to OpenSearch LLM-Based Conversational Search Edition and other Alibaba Cloud services and describes some services and products. No information related to mathematical operation is provided.

You can modify variable values in the console.

Question: 1+1=

Answer: Sorry, I cannot answer the question about mathematical calculation based on the known information. This is because the context does not contain information related to mathematical operation. If you need to resolve mathematical questions, we recommend that you provide relevant mathematical information or use a dedicated mathematical calculation tool.

The custom variable no_answer is used. If the information is insufficient to answer the question, the LLM returns the default value of the no_answer variable: I cannot answer this question based on the known information. You can modify the variable value. In this example, the value is changed to Sorry.

user

The context contains multiple independent documents, each of which is placed between the <article> and </article> tags. Context:

"${docs}"

Answer the question in a detailed and organized manner based on the preceding context. Make sure that the question is adequately answered based on the context. If the information provided by the context is insufficient to answer the question, return the following message: ${(parameters.no_answer)!"I cannot answer this question based on the known information"}. Do not use content that is not included in the context to generate answers. Make sure that each statement in the answer is supported by the corresponding content in the context. Answer the question in English.

Question: "${question}"

Manage prompt templates

You can manage all prompt templates on the Prompt Management page. You can view, modify, and delete prompt templates. If you click Q&A Test in the Actions column of a prompt template, the Q&A Test page appears. You can use the prompt template to perform a Q&A test.

The default prompt template cannot be modified.

You can modify the configurations of a prompt template except for the template name.