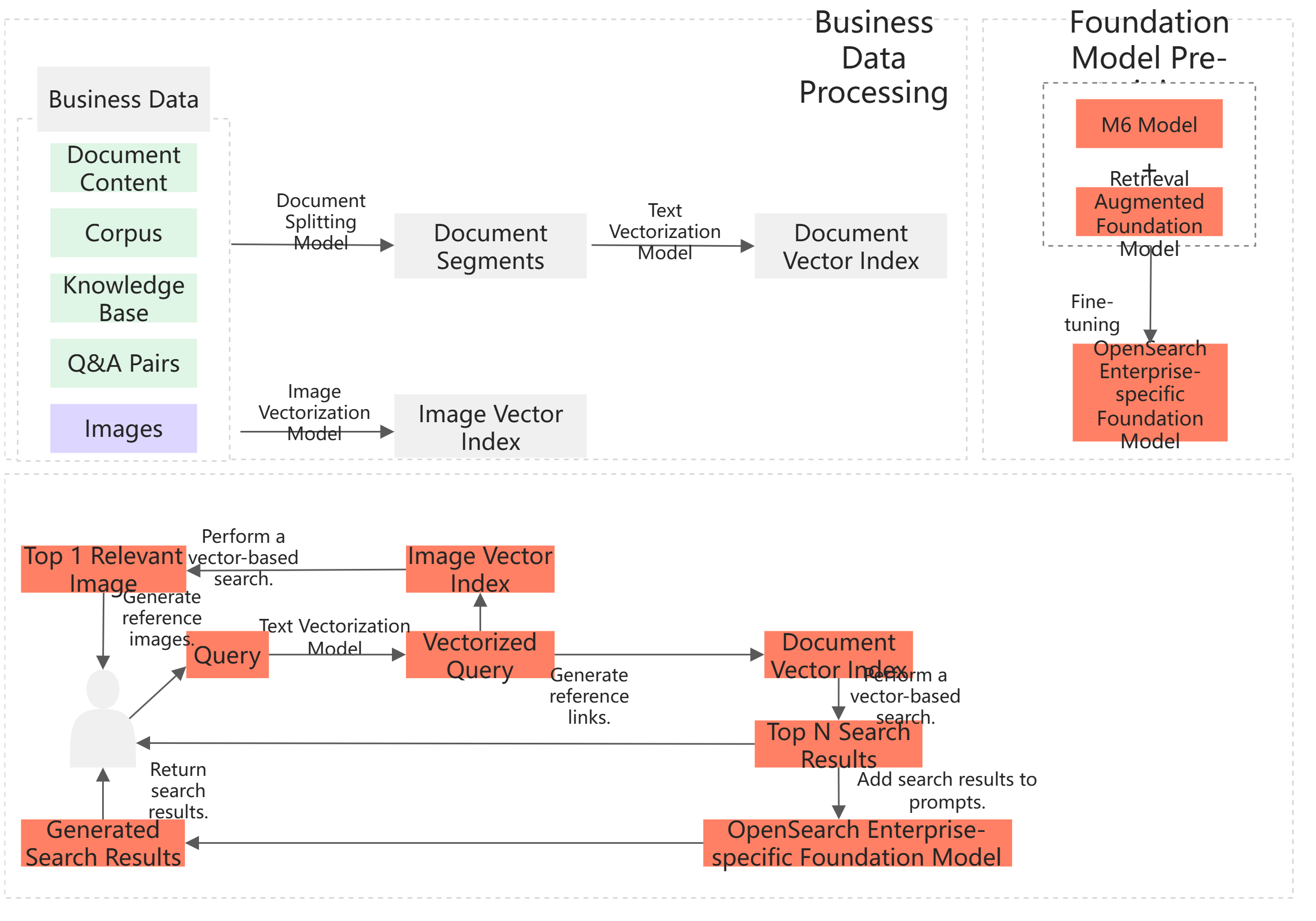

The OpenSearch LLM-Based Conversational Search Edition integrates unstructured data processing, vector models, text and vector retrieval, and large language models (LLMs) into an out-of-the-box Retrieval-Augmented Generation (RAG) solution. It supports the rapid import of diverse data formats to build a multimodal search service whose answers can include dialogue, links, and images, helping developers quickly set up a RAG system.

Overview

The LLM-Based Conversational Search Edition is designed for industry search scenarios and provides enterprise-specific Q&A search services. Its built-in LLM lets you quickly set up a Q&A search system that automatically generates answers, reference images, reference links, and other content from your own business data, delivering a more intelligent, higher-quality Q&A search service.
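To make the retrieve-then-generate flow described above concrete, here is a minimal sketch in Python. It is not the service's API: the `DOCS` list, the toy bag-of-words `embed` function, and the `example.com` links are all illustrative assumptions standing in for the managed vector model, index, and business data. The sketch stops at prompt assembly, where a real system would call the LLM.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; in the managed service this data
# would be uploaded through the console rather than defined in code.
DOCS = [
    {"text": "Refunds are processed within 7 days of the return request.",
     "link": "https://example.com/refund-policy"},
    {"text": "Standard shipping takes 3 to 5 business days.",
     "link": "https://example.com/shipping"},
]

def embed(text):
    """Toy bag-of-words 'embedding' standing in for a real vector model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(question, docs, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d["text"])), reverse=True)
    return ranked[:top_k]

def build_prompt(question, hits):
    """Assemble the grounded prompt, keeping reference links with each passage."""
    context = "\n".join(f"- {d['text']} (source: {d['link']})" for d in hits)
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

question = "How long do refunds take?"
hits = retrieve(question, DOCS)
prompt = build_prompt(question, hits)
```

Keeping the source link next to each retrieved passage is what allows the generated answer to return reference links alongside the text.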

Service architecture

Features

Multimodal RAG: Supports image content understanding and can build a multimodal knowledge base using OCR and LLMs, providing diverse output results.

RAG performance evaluation: Supports end-to-end performance evaluation, so you can compare RAG results across different models and parameter configurations and select the best one.

Rich model capabilities and customized model training: Provides built-in vector models, reranking models, and LLMs, and supports training exclusive models based on business data.

Real-time data update: Supports real-time construction of incremental vector indexes and real-time data synchronization.

Table Q&A: Supports table Q&A based on NL2SQL, enabling conversational Q&A over enterprise structured databases.

Zero deployment and fully managed without maintenance: Fully managed cloud-based MaaS service, requiring no deployment or maintenance.

Benefits

One-stop quick access: The built-in end-to-end RAG pipeline lets you build a RAG system within minutes by uploading business data through the console.

Better RAG results: Built-in vector models, reranking models, and LLMs, whose capabilities have topped industry rankings multiple times, deliver over 95% RAG accuracy.

Flexible fine-tuning methods: Supports various tuning methods such as custom prompts, parameter modification, search sorting, and customized model training, with a built-in end-to-end model for evaluating RAG results.

Comprehensive related features: Supports multimodal content understanding, structured and unstructured data parsing, multi-round conversations, streaming output, intent recognition, agents, and other RAG-related features.

Convenient access methods: Supports zero-code quick access from ecosystems such as DingTalk robots and Lark, as well as flexible access through APIs and SDKs. You can also use an API key to connect the service to various open-source LLM application development frameworks.

Enterprise-level capability improvement: Supports enterprise-level document permission isolation and real-time incremental data updates.

Version selection

The OpenSearch LLM-Based Conversational Search Edition is available in two versions: Standard Edition and Professional Edition. The following table describes the features of the two versions and the differences between them.

| Comparison item | Standard Edition | Professional Edition |
| --- | --- | --- |
| Customized model training | Not supported. | Supports supervised fine-tuning (SFT) based on your own business data. |
| LLM selection | Supports Qwen series, open-source models, and external models. | Supports Qwen series, open-source models, external models, and customized models. |
| Limits | Maximum 10 QPS. | No throttling, as long as the purchased GPU resources can support the inference requests. |
| Computing resource billing | Pay-as-you-go based on the computing resources consumed during invocation. | Billed according to the purchased GPU specifications, with no additional computing resource fees. |
| Scenarios | Suitable for general intelligent customer service, enterprise knowledge bases, and E-commerce shopping guides, among others. | Suitable for intelligent customer service, enterprise knowledge bases, E-commerce shopping guides, and similar scenarios where the business data is specialized and requires training an exclusive LLM. |
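Because the Standard Edition is capped at 10 QPS, a client that sends bursts of requests may want to pace itself. The following Python sketch shows one way to do that with a sliding one-second window. The `QpsLimiter` class is a hypothetical helper, not part of any OpenSearch SDK, and how the service itself responds when the limit is exceeded is not covered here.

```python
import time
from collections import deque

class QpsLimiter:
    """Sliding-window limiter: at most `max_qps` calls in any 1-second window.

    `clock` and `sleep` are injectable so the limiter can be tested
    without real waiting.
    """

    def __init__(self, max_qps=10, clock=time.monotonic, sleep=time.sleep):
        self.max_qps = max_qps
        self.clock = clock
        self.sleep = sleep
        self.sent = deque()  # timestamps of requests inside the current window

    def acquire(self):
        """Block until another request may be sent, then record it."""
        while True:
            now = self.clock()
            # Drop timestamps that have left the 1-second window.
            while self.sent and now - self.sent[0] >= 1.0:
                self.sent.popleft()
            if len(self.sent) < self.max_qps:
                self.sent.append(now)
                return
            # Window is full: wait until the oldest request expires.
            self.sleep(1.0 - (now - self.sent[0]))

# Assumed usage: call limiter.acquire() immediately before each request.
limiter = QpsLimiter(max_qps=10)
```

Calling `limiter.acquire()` before every request keeps a single client process under the cap. Multiple processes sharing one instance quota would need a shared limiter instead.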

Scenarios

Intelligent customer service:

Provide intelligent pre-sales and after-sales customer service in apps, miniapps, and websites. Identify user intent from different inputs and provide the corresponding support and answers.

Supports fixed Q&A pairs based on manual intervention.

Supports the return of multimodal content such as images and videos.

Supports NL2SQL-based database queries for user orders, logistics information, and other data.

Enterprise knowledge base:

Build an enterprise knowledge base on internal portal websites and in chat software to provide knowledge support and quick navigation entry points for employees and users.

Supports enterprise-level document permission isolation.

Supports real-time data updates and index construction.

Supports the parsing and understanding of various unstructured data.
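As an illustration of what document permission isolation means for retrieval, the Python sketch below filters candidate documents by the groups of the requesting user before they reach the LLM. The `Doc` type and its `allowed_groups` field are hypothetical. The service's actual permission model and configuration are not described in this topic.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Doc:
    title: str
    allowed_groups: frozenset  # hypothetical ACL attached when the document is imported

def visible_docs(candidates, user_groups):
    """Keep only documents that share at least one group with the user."""
    groups = frozenset(user_groups)
    return [d for d in candidates if d.allowed_groups & groups]

candidates = [
    Doc("Payroll handbook", frozenset({"hr"})),
    Doc("VPN setup guide", frozenset({"hr", "engineering"})),
]

# An engineer sees only the documents shared with the engineering group.
engineer_view = visible_docs(candidates, {"engineering"})
```

Applying the filter before generation, rather than on the final answer, prevents restricted content from entering the prompt at all.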

E-commerce shopping guide:

Add intelligent shopping guide capabilities to existing search boxes or shopping guide customer service in E-commerce and retail apps and websites. Use the conversation context to intelligently recommend related products.

Supports the understanding of multimodal content such as images, which can include product images and other information.

Supports returning the link to the product search for quick access to the target product.

Supports adjusting product search sorting based on operational needs.

Content and community summary:

In content and community apps and websites, add intelligent summarization capabilities to the existing search box based on internal content data. In response to user dialogue, generate strategies and references by summarizing website content.

Supports the rapid import of webpage and website content.

Supports the understanding of multimodal content such as images based on OCR and LLMs.

Supports returning reference links to original documents.

Table Q&A:

For structured data in industry scenarios such as business and finance, use NL2SQL capabilities to quickly find related content, then use an LLM to summarize and return the relevant information.

Supports custom table structures.

Supports automatic synchronization of data from MaxCompute data sources.

Supports information extraction, summarization, and aggregation based on NL2SQL.
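The table Q&A flow can be pictured as three steps: translate the question into SQL, run the query, and summarize the rows. The Python sketch below uses the standard `sqlite3` module with an in-memory table. The `nl2sql_stub` function is a hard-coded placeholder for the LLM translation step, and the `sales` table and its columns are made up for illustration.

```python
import sqlite3

def nl2sql_stub(question):
    """Placeholder for the LLM step that turns a question into SQL.

    Hard-coded here; a real system would generate the statement from
    the question and the table schema.
    """
    return ("SELECT region, SUM(amount) AS total "
            "FROM sales GROUP BY region ORDER BY total DESC")

def answer(question, conn):
    """Run the generated SQL and summarize the top row in plain language."""
    rows = conn.execute(nl2sql_stub(question)).fetchall()
    top_region, top_total = rows[0]
    return f"{top_region} has the highest sales total ({top_total:g})."

# Hypothetical structured data standing in for an enterprise table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("East", 120.0), ("West", 80.0), ("East", 30.0)],
)

result = answer("Which region sold the most?", conn)
```

In this sketch the aggregation happens in the database and only the final wording is left to the summarization step, which keeps numeric results exact.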