The Assistant API is designed to help developers easily build large language model (LLM) applications, such as personal assistants, smart shopping guides, and meeting assistants. Compared with the text generation API, the Assistant API has built-in capabilities for conversation management and tool calling, which reduces development difficulty and cost.

What is an assistant

An assistant is an AI conversational agent with the following characteristics:

Support for multiple models: An assistant can be configured with various foundation models and enhanced with system instructions that tailor the personality and abilities of the model.

Tool calling: An assistant can use a variety of tools, including official Model Studio tools such as the Python code interpreter, and custom tools through function calling.

Conversation management: An assistant manages conversations through thread objects, which store the message history and truncate it when it exceeds the context length of the model. A thread is created once, and messages are appended to it as the user responds.
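The truncation behavior described above can be pictured with a small in-memory model. The sketch below is hypothetical and does not call the Assistant API: the Thread class, its append and context_window methods, and the use of word counts as a stand-in for model tokens are all assumptions for illustration. It keeps the full history and, when building the context for the model, drops the oldest messages first; the service's actual truncation strategy may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str     # e.g. "user" or "assistant"
    content: str


@dataclass
class Thread:
    """Hypothetical in-memory thread: stores history and trims it to a context budget."""
    max_context_tokens: int
    messages: list[Message] = field(default_factory=list)

    def append(self, role: str, content: str) -> None:
        # The thread is created once; each new turn is appended to it.
        self.messages.append(Message(role, content))

    @staticmethod
    def _count_tokens(message: Message) -> int:
        # Assumption: whitespace-separated words stand in for real model tokens.
        return len(message.content.split())

    def context_window(self) -> list[Message]:
        """Return the newest messages whose combined size fits the context budget."""
        window: list[Message] = []
        used = 0
        # Walk from newest to oldest so the oldest messages are dropped first.
        for message in reversed(self.messages):
            cost = self._count_tokens(message)
            if used + cost > self.max_context_tokens:
                break
            window.append(message)
            used += cost
        window.reverse()  # restore chronological order
        return window
```

With max_context_tokens=7, appending "draw a cat" (3 words), "here is a cat" (4), and "make it orange" (3) leaves only the last two messages in the window, while all three remain stored in thread.messages.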

To try an assistant without any programming, create an agent application in the console. Alternatively, follow Get started with Assistant API for a step-by-step guide to creating an assistant with the Assistant API and integrating it with your existing business code.

Agent applications and assistants are both LLM applications, but they differ in features and in how they are managed (created, read, updated, and deleted):

Agent application: Designed to be no-code or low-code. Can be managed only in the console.

Assistant: Designed to be pure-code. Can be managed only through the Assistant API.

Differences from the text generation API

The primary element of the text generation API is the message; messages are generated by models such as Qwen-Plus and Qwen-Max. This lightweight API requires you to manage conversation state, tool definitions, knowledge base retrieval, and code execution yourself to build even a basic application.

On top of the text generation API, the Assistant API introduces the following core elements:

Message objects: Encapsulate the role and content of conversation information, similar to messages in the text generation API.

Assistant objects: Encapsulate a foundational model, default instructions, and tools.

Thread objects: Represent the state of the current conversation.

Run objects: Execute the assistant on a thread, including generating text responses and calling tools.
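To make the relationships among these four objects concrete, here is a hypothetical data model in Python. It is not the SDK's object schema: the field names, the ID prefixes, the tool-config dictionary shape, and the "created" status are illustrative assumptions. An assistant bundles a model, default instructions, and tools; a thread holds messages; a run links one assistant to one thread.

```python
from dataclasses import dataclass, field
from itertools import count

_id_counter = count(1)


def new_id(prefix: str) -> str:
    # Hypothetical ID scheme for illustration; real IDs are assigned by the service.
    return f"{prefix}_{next(_id_counter)}"


@dataclass
class Message:
    role: str     # who is speaking, e.g. "user" or "assistant"
    content: str  # the text of the message


@dataclass
class Assistant:
    model: str         # foundation model, e.g. "qwen-max"
    instructions: str  # default system instructions
    tools: list[dict] = field(default_factory=list)  # assumed tool-config shape
    id: str = field(default_factory=lambda: new_id("asst"))


@dataclass
class Thread:
    messages: list[Message] = field(default_factory=list)  # conversation history
    id: str = field(default_factory=lambda: new_id("thread"))


@dataclass
class Run:
    assistant_id: str        # which assistant executes...
    thread_id: str           # ...on which conversation
    status: str = "created"  # hypothetical status value
    id: str = field(default_factory=lambda: new_id("run"))


# Wire the objects together for one drawing request.
assistant = Assistant(
    model="qwen-max",
    instructions="You are a drawing assistant.",
    tools=[{"type": "text_to_image"}],
)
thread = Thread()
thread.messages.append(Message(role="user", content="Draw a cat."))
run = Run(assistant_id=assistant.id, thread_id=thread.id)
```

Keeping the assistant and the thread as separate objects lets one assistant configuration serve many conversations, with each run naming the pair it operates on.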

The following sections describe how these elements work together to form an assistant.

Interaction method

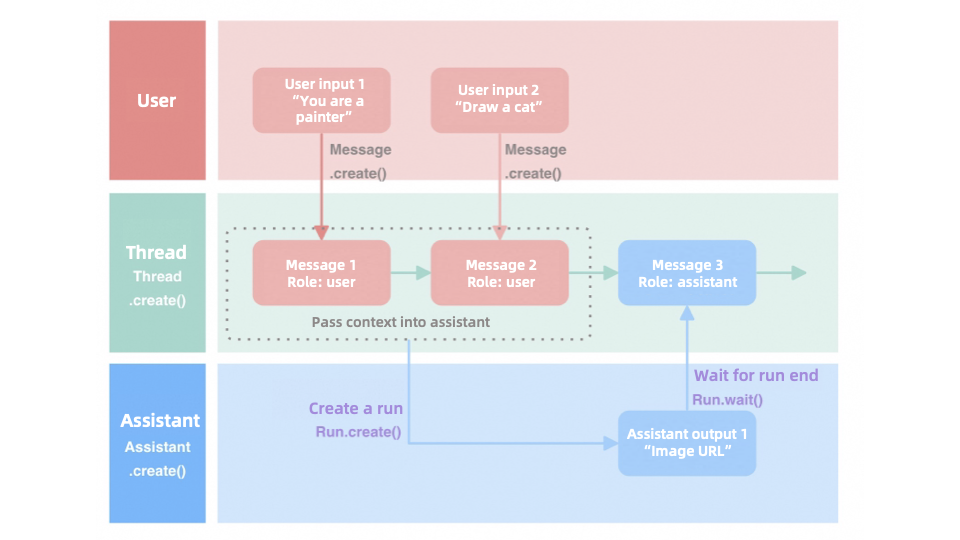

The Assistant API uses the thread mechanism to ensure messages are processed sequentially, preserving the continuity of the conversation. The process is as follows:

Create a message: The user creates a message instance with the Message.create() method and assigns it to a specific thread, so that it stays associated with the conversation context.

Initialize a runtime environment: The Run.create() method initializes the runtime environment of the assistant object, setting up the configuration needed to process the messages.

Wait for the results: The wait() method blocks until the assistant object finishes processing and returns results, which keeps the program synchronized and the data in order.

Consider a simple drawing assistant as an example:

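For a drawing assistant, the create, run, and wait sequence above might look like the following sketch. It runs entirely in memory and does not call the real SDK: create_message, create_run, and wait are hypothetical stand-ins for Message.create(), Run.create(), and wait(), and fake_drawing_assistant returns a placeholder string instead of calling an image model. A background worker plays the role of the service so that wait() actually blocks until the run completes.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass, field

# A single background worker stands in for the remote service (assumption).
_executor = ThreadPoolExecutor(max_workers=1)


@dataclass
class Thread:
    messages: list[dict] = field(default_factory=list)


@dataclass
class Run:
    thread: Thread
    future: Future


def create_message(thread: Thread, content: str) -> dict:
    """Hypothetical stand-in for Message.create(): attach a user message to a thread."""
    message = {"role": "user", "content": content}
    thread.messages.append(message)
    return message


def fake_drawing_assistant(thread: Thread) -> dict:
    """Hypothetical assistant: returns a placeholder instead of a real image."""
    prompt = thread.messages[-1]["content"]
    reply = {"role": "assistant", "content": f"[image placeholder for: {prompt}]"}
    thread.messages.append(reply)
    return reply


def create_run(thread: Thread) -> Run:
    """Hypothetical stand-in for Run.create(): start processing the thread asynchronously."""
    return Run(thread=thread, future=_executor.submit(fake_drawing_assistant, thread))


def wait(run: Run, timeout: float = 10.0) -> dict:
    """Hypothetical stand-in for wait(): block until the run finishes, then return its result."""
    return run.future.result(timeout=timeout)


thread = Thread()
create_message(thread, "Draw a cat sitting on a windowsill")
run = create_run(thread)
result = wait(run)
print(result["content"])  # [image placeholder for: Draw a cat sitting on a windowsill]
```

Because the run executes asynchronously, reading the thread immediately after create_run could miss the reply; calling wait first guarantees that the assistant's message has been appended.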

Model support

To confirm whether a model works with the Assistant API, test it and check the actual execution results. For more information about the models, see Models and pricing.

Tool support

To confirm whether a plug-in works with the Assistant API, test it and check the actual execution results. For more information about plug-ins, see Plug-in.

| Tool | Identifier | Description |
|---|---|---|
| Python code interpreter | code_interpreter | Executes Python code; suited to programming tasks, mathematics, and data analysis. |
| Image generation | text_to_image | Generates images from text descriptions, adding visual output to responses. |
| Custom plug-in | ${plugin_id} | Integrates custom business interfaces to extend the assistant's capabilities. |
| Function calling | function | Calls functions that you define and run on your own (on-premises) devices, independent of external network services. |
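The identifiers in the table are what an assistant's tool configuration refers to. The sketch below assumes a list of dictionaries keyed by "type", and assumes that for function calling the model names a function and passes JSON-encoded arguments, which your own code then executes locally; check the API references for the exact request and response formats. The get_store_hours function, its store data, and handle_function_call are hypothetical, and a custom plug-in entry is omitted because its ${plugin_id} is specific to your account.

```python
import json
from typing import Callable

# Assumed tool-config shape, using the identifiers from the table above.
tools = [
    {"type": "code_interpreter"},
    {"type": "text_to_image"},
    {
        "type": "function",
        "function": {
            "name": "get_store_hours",  # hypothetical local function
            "description": "Return the opening hours of a store.",
            "parameters": {
                "type": "object",
                "properties": {"store_id": {"type": "string"}},
                "required": ["store_id"],
            },
        },
    },
]


def get_store_hours(store_id: str) -> str:
    # Runs on your own machine; no external service is involved.
    hours = {"sh-01": "09:00-21:00"}  # hypothetical data
    return hours.get(store_id, "unknown")


# Map the function names the model may request to local implementations.
LOCAL_FUNCTIONS: dict[str, Callable[..., str]] = {"get_store_hours": get_store_hours}


def handle_function_call(name: str, arguments_json: str) -> str:
    """Dispatch a model-requested function call to local code and return its output."""
    if name not in LOCAL_FUNCTIONS:
        raise ValueError(f"unknown function: {name}")
    arguments = json.loads(arguments_json)
    return LOCAL_FUNCTIONS[name](**arguments)


print(handle_function_call("get_store_hours", '{"store_id": "sh-01"}'))  # 09:00-21:00
```

Rejecting unknown names keeps the model from triggering arbitrary local code; only functions registered in LOCAL_FUNCTIONS can run.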

Get started

To test the LLMs or get started with the Assistant API, see the following resources:

Playground: Test the inference capabilities of LLMs to find the most suitable one for your assistant.

Get started: The basic usage and examples of the Assistant API to help you get started with your assistant.

API references: Detailed explanations of the parameters to help you resolve development challenges.