Qwen-VL answers questions based on the images or videos you provide. It supports single or multiple image inputs and is suitable for various tasks, such as image captioning, visual question answering, and object detection.

Supported regions: Singapore, Virginia, and Beijing. API keys are region-specific, so use the key issued for the region you call.
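Because both the API key and the endpoint depend on the region, it can help to keep the mapping in one place. The following is a minimal sketch: the endpoint URLs are the ones used in the examples on this page, while the `BASE_URLS` table, the region keys, and the `base_url_for` helper are hypothetical names introduced only for illustration.

```python
# Endpoint URLs are copied from the examples on this page.
# The table layout, region keys, and helper name are assumptions.
BASE_URLS = {
    "singapore": {
        "openai": "https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
        "dashscope": "https://dashscope-intl.aliyuncs.com/api/v1",
    },
    "virginia": {
        "openai": "https://dashscope-us.aliyuncs.com/compatible-mode/v1",
        "dashscope": "https://dashscope-us.aliyuncs.com/api/v1",
    },
    "beijing": {
        "openai": "https://dashscope.aliyuncs.com/compatible-mode/v1",
        "dashscope": "https://dashscope.aliyuncs.com/api/v1",
    },
}


def base_url_for(region: str, api: str = "openai") -> str:
    """Return the base URL for a region and API style ("openai" or "dashscope")."""
    try:
        return BASE_URLS[region.lower()][api]
    except KeyError:
        raise ValueError(f"Unsupported region or API style: {region!r}, {api!r}") from None
```

The returned value can be passed as `base_url` to the OpenAI client or assigned to `dashscope.base_http_api_url`, together with the API key for the same region.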

Try it online: Vision (Singapore), Vision (Virginia), or Vision (Beijing)

Getting started

Prerequisites

If you use an SDK to make calls, install the SDK. The DashScope Python SDK must be version 1.24.6 or later, and the DashScope Java SDK must be version 2.21.10 or later.
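One way to verify the Python SDK requirement before making calls is to compare version strings. This is a rough sketch, not an official check: `parse_version` and `meets_minimum` are hypothetical helpers, and the comparison assumes version strings with up to three dot-separated numeric parts.

```python
import re


def parse_version(version: str) -> tuple:
    """Turn a string such as "1.24.6" into a tuple of ints, for example (1, 24, 6)."""
    parts = []
    for part in version.split(".")[:3]:
        match = re.match(r"\d+", part)  # ignore suffixes such as "rc1"
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if the installed version is at least the minimum version."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    from importlib.metadata import PackageNotFoundError, version

    try:
        installed = version("dashscope")
        print(installed, "OK" if meets_minimum(installed, "1.24.6") else "too old")
    except PackageNotFoundError:
        print("dashscope is not installed")
```

Comparing integer tuples rather than raw strings avoids the pitfall where "1.9.0" sorts after "1.24.6" lexicographically.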

The following example shows how to call the model to describe image content. For more information about local files and image limits, see How to pass local files and Image limits.

OpenAI compatible

Python

from openai import OpenAI

import os

client = OpenAI(

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

api_key=os.getenv("DASHSCOPE_API_KEY"),

# The following base_url is for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

# If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1

base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

)

completion = client.chat.completions.create(

model="qwen3-vl-plus", # This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages=[

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

},

},

{"type": "text", "text": "What is depicted in the image?"},

],

},

],

)

print(completion.choices[0].message.content)

Response

This is a photo taken on a beach. In the photo, a person and a dog are sitting on the sand, with the sea and sky in the background. The person and dog appear to be interacting, with the dog's front paw resting on the person's hand. Sunlight is coming from the right side of the frame, adding a warm atmosphere to the scene.

Node.js

import OpenAI from "openai";

const openai = new OpenAI({

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured the environment variable, replace the following line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

// The following base_url is for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

// If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1

baseURL: "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

});

async function main() {

const response = await openai.chat.completions.create({

model: "qwen3-vl-plus", // This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages: [

{

role: "user",

content: [{

type: "image_url",

image_url: {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

}

},

{

type: "text",

text: "What is depicted in the image?"

}

]

}

]

});

console.log(response.choices[0].message.content);

}

main()

Response

This is a photo taken on a beach. In the photo, a person and a dog are sitting on the sand, with the sea and sky in the background. The person and dog appear to be interacting, with the dog's front paw resting on the person's hand. Sunlight is coming from the right side of the frame, adding a warm atmosphere to the scene.

curl

# ======= Important =======

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# === Delete this comment before execution ===

curl --location 'https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions' \

--header "Authorization: Bearer $DASHSCOPE_API_KEY" \

--header 'Content-Type: application/json' \

--data '{

"model": "qwen3-vl-plus",

"messages": [

{"role": "user",

"content": [

{"type": "image_url", "image_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"}},

{"type": "text", "text": "What is depicted in the image?"}

]

}]

}'

Response

{

"choices": [

{

"message": {

"content": "This is a photo taken on a beach. In the photo, a person and a dog are sitting on the sand, with the sea and sky in the background. The person and dog appear to be interacting, with the dog's front paw resting on the person's hand. Sunlight is coming from the right side of the frame, adding a warm atmosphere to the scene.",

"role": "assistant"

},

"finish_reason": "stop",

"index": 0,

"logprobs": null

}

],

"object": "chat.completion",

"usage": {

"prompt_tokens": 1270,

"completion_tokens": 54,

"total_tokens": 1324

},

"created": 1725948561,

"system_fingerprint": null,

"model": "qwen3-vl-plus",

"id": "chatcmpl-0fd66f46-b09e-9164-a84f-3ebbbedbac15"

}

DashScope

Python

import os

import dashscope

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

messages = [

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "What is depicted in the image?"}]

}]

response = dashscope.MultiModalConversation.call(

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If you have not configured the environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen3-vl-plus', # This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages=messages

)

print(response.output.choices[0].message.content[0]["text"])

Response

This is a photo taken on a beach. In the photo, there is a woman and a dog. The woman is sitting on the sand, smiling and interacting with the dog. The dog is wearing a collar and appears to be shaking hands with the woman. The background is the sea and the sky, and the sunlight shining on them creates a warm atmosphere.

Java

import java.util.Arrays;

import java.util.Collections;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.Constants;

public class Main {

// The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

// If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

static {

Constants.baseHttpApiUrl="https://dashscope-intl.aliyuncs.com/api/v1";

}

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"),

Collections.singletonMap("text", "What is depicted in the image?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured the environment variable, replace the following line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen3-vl-plus") // This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

.messages(Arrays.asList(userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Response

This is a photo taken on a beach. In the photo, there is a person in a plaid shirt and a dog with a collar. The person and the dog are sitting face to face, seemingly interacting. The background is the sea and the sky, and the sunlight shining on them creates a warm atmosphere.

curl

# ======= Important =======

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# === Delete this comment before execution ===

curl -X POST https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen3-vl-plus",

"input":{

"messages":[

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "What is depicted in the image?"}

]

}

]

}

}'

Response

{

"output": {

"choices": [

{

"finish_reason": "stop",

"message": {

"role": "assistant",

"content": [

{

"text": "This is a photo taken on a beach. In the photo, there is a person in a plaid shirt and a dog with a collar. They are sitting on the sand, with the sea and sky in the background. Sunlight is coming from the right side of the frame, adding a warm atmosphere to the scene."

}

]

}

}

]

},

"usage": {

"output_tokens": 55,

"input_tokens": 1271,

"image_tokens": 1247

},

"request_id": "ccf845a3-dc33-9cda-b581-20fe7dc23f70"

}

Model selection

For tasks such as high-precision object recognition and localization (including 3D localization), agent tool calling, document and webpage parsing, complex problem-solving, and long video understanding, Qwen3-VL is the preferred choice. A comparison of the models in this series is as follows:

qwen3-vl-plus: The most powerful model.

qwen3-vl-flash: This model is faster and more cost-effective. It is a high-value option that balances performance and cost and is suitable for scenarios that are sensitive to response speed.

For simple tasks such as image captioning and short video summary extraction, you can choose Qwen2.5-VL. A comparison of the models in this series is as follows:

qwen-vl-max: The best-performing model in the Qwen2.5-VL series.

qwen-vl-plus (part of Qwen2.5-VL): This model is faster and provides a good balance between performance and cost.

For model names, context, pricing, and snapshot versions, see Model list. For concurrent request limits, see Rate limits.
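The guidance above can be condensed into a small lookup. This is only a sketch of the selection logic as described in this section: `pick_model` and its two flags are hypothetical, and real choices should also weigh pricing, context length, and rate limits.

```python
def pick_model(advanced_task: bool, prefer_speed: bool = False) -> str:
    """Pick a model name following the guidance in this section.

    advanced_task: True for tasks such as precise localization, agent tool
    calling, document parsing, complex problem solving, or long video
    understanding (the Qwen3-VL use cases listed above).
    prefer_speed: True when response speed and cost matter more than
    peak capability.
    """
    if advanced_task:
        return "qwen3-vl-flash" if prefer_speed else "qwen3-vl-plus"
    return "qwen-vl-plus" if prefer_speed else "qwen-vl-max"
```

For example, `pick_model(advanced_task=True)` selects `qwen3-vl-plus`, while a simple captioning job with `prefer_speed=True` selects `qwen-vl-plus`.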

Performance overview

Visual question answering

Describe the content of an image or classify and label it, such as identifying people, places, animals, and plants.

If the sun is glaring, what item from this picture should I use? | When the sun is glaring, you should use the pink sunglasses from the picture. Sunglasses can effectively block strong light, reduce UV damage to your eyes, and help protect your vision and improve visual comfort in bright sunlight. |

Creative writing

Generate vivid text descriptions based on image or video content. This is suitable for creative scenarios such as story writing, copywriting, and short video scripts.

Please help me write an interesting social media post based on the content of the picture. | Sure, this picture is full of a rich Mid-Autumn Festival atmosphere, with a clever combination of classical and modern elements. Based on the main elements in the picture, I have prepared several social media posts in different styles for you to choose from. Poetic and beautiful style: Tonight, the moon is bright and everyone is watching, wondering where autumn thoughts will fall. Chang'e flies to the moon, and the Jade Rabbit pounds medicine; the romance of the ancients is lit up tonight. May this bright moon light your way home and carry my deepest thoughts. Happy Mid-Autumn Festival! Warm and blessing style: The moon is full, and people are reunited. The Mid-Autumn night is the gentlest. Watch the fireworks bloom, admire the full moon in the sky, take a bite of a mooncake, and wish for peace and health. May all that you and I wish for in our hearts come true. Wishing everyone a happy Mid-Autumn Festival and a happy family! |

OCR and information extraction

Recognize text and formulas in images or extract information from receipts, certificates, and forms, with support for formatted text output. The Qwen3-VL model has expanded its language support to 33 languages. For a list of supported languages, see Model feature comparison.

Extract the following from the image: ['Invoice Code', 'Invoice Number', 'Destination', 'Fuel Surcharge', 'Fare', 'Travel Date', 'Departure Time', 'Train Number', 'Seat Number']. Please output in JSON format. | { "Invoice Code": "221021325353", "Invoice Number": "10283819", "Destination": "Development Zone", "Fuel Surcharge": "2.0", "Fare": "8.00<Full>", "Travel Date": "2013-06-29", "Departure Time": "Serial", "Train Number": "040", "Seat Number": "371" } |

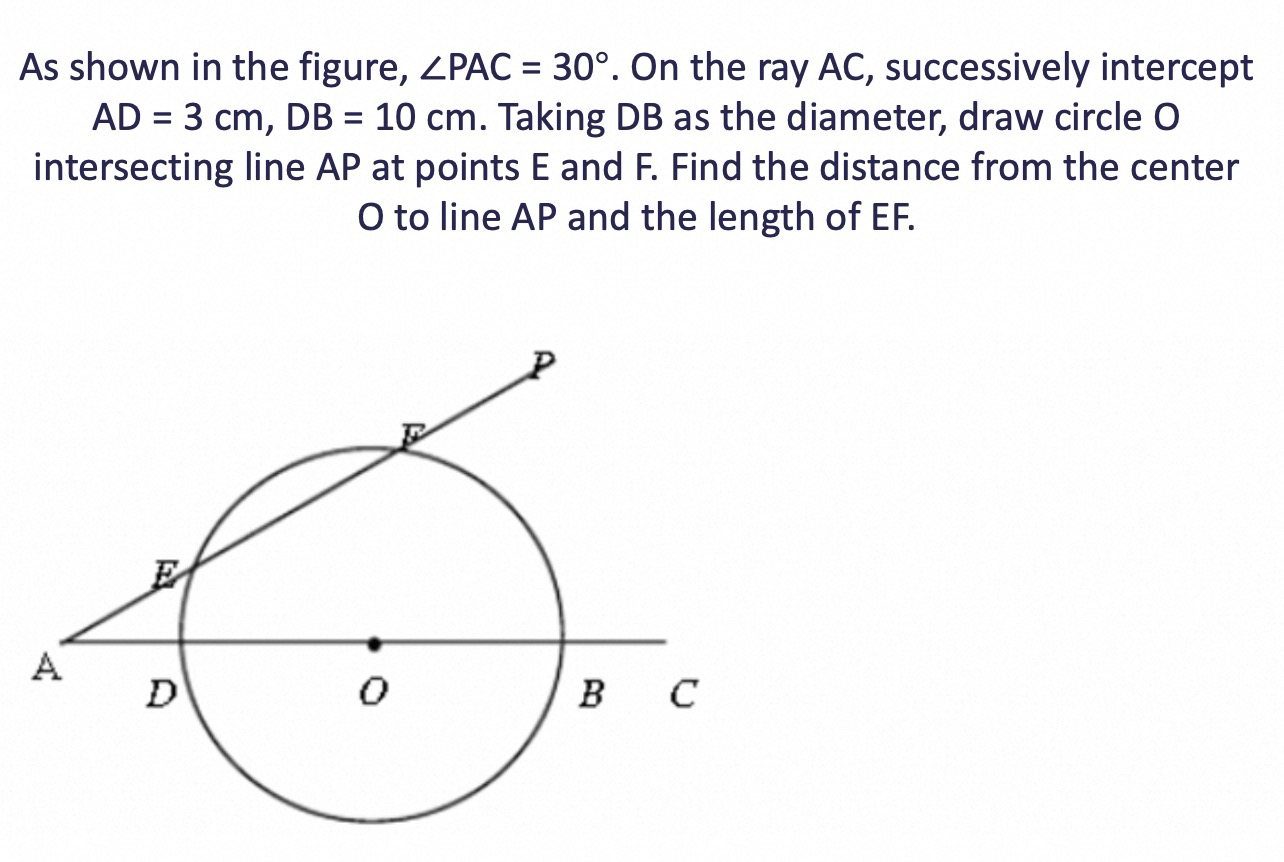
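The extraction example above pairs a prompt that lists field names with a JSON reply. A minimal sketch of both ends is shown below. `build_extraction_prompt` and `parse_json_reply` are hypothetical helpers, and the handling of a Markdown code fence around the reply is an assumption about how a model might format its output, not documented behavior.

```python
import json


def build_extraction_prompt(fields: list) -> str:
    """Build a prompt in the same style as the example above."""
    return f"Extract the following from the image: {fields}. Please output in JSON format."


def parse_json_reply(reply: str) -> dict:
    """Parse the model's reply as JSON.

    Assumption: the reply is either bare JSON or JSON wrapped in a
    Markdown code fence, which is stripped before parsing.
    """
    fence = "`" * 3
    text = reply.strip()
    if text.startswith(fence):
        # Drop the opening fence line, then everything from the closing fence on.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(fence, 1)[0]
    return json.loads(text)
```

The prompt string goes into the text part of the user message, and the parsed dictionary can then be validated field by field before it is used downstream.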

Multi-disciplinary problem solving

Solve problems in images, such as math, physics, and chemistry problems. This feature is suitable for primary, secondary, university, and adult education.

Please solve the math problem in the image step by step. |

|

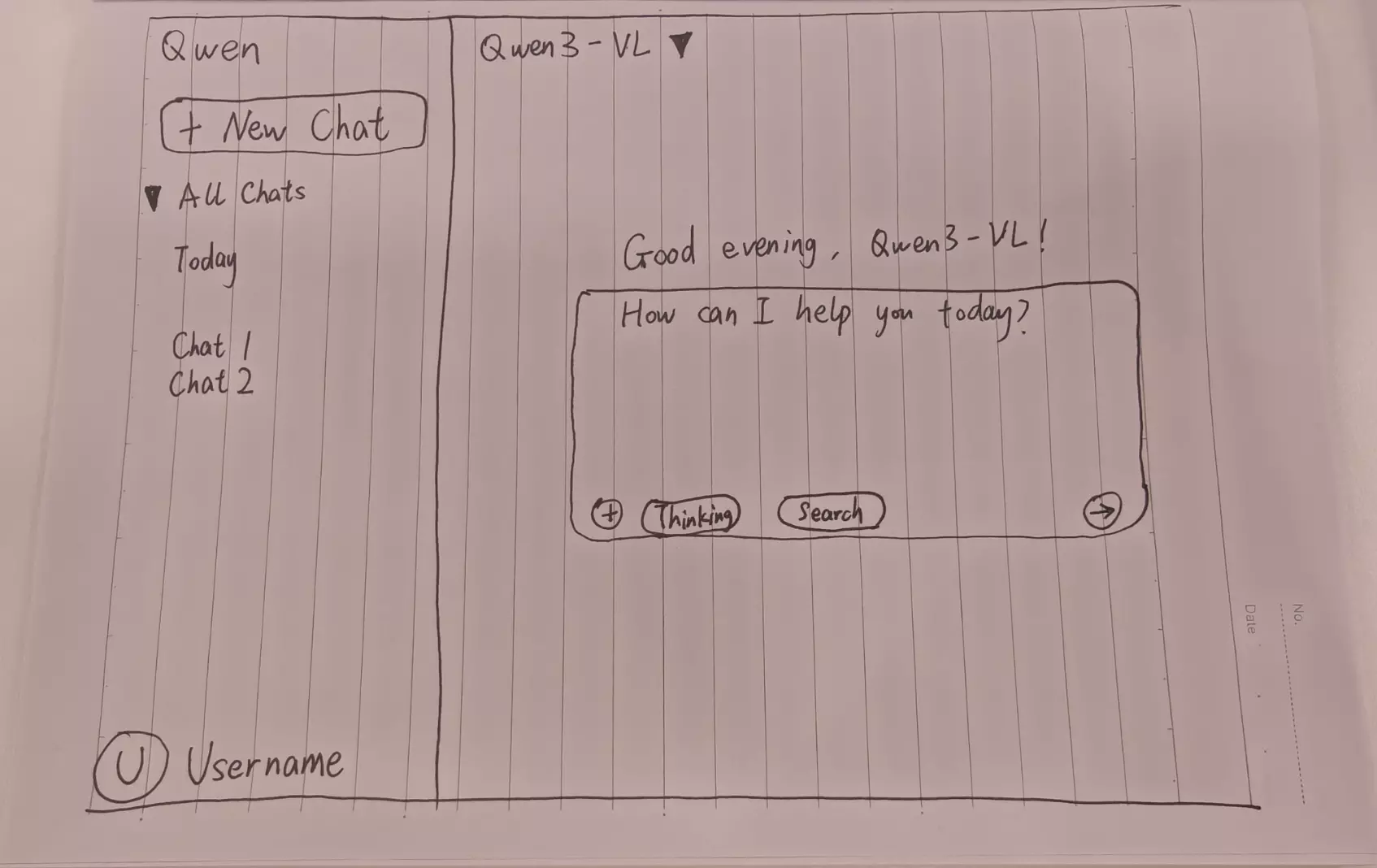

Visual coding

Generate code from images or videos. This can be used to create HTML, CSS, and JS code from design drafts, website screenshots, and more.

Design a webpage using HTML and CSS based on my sketch, with black as the main color. |

Webpage preview |

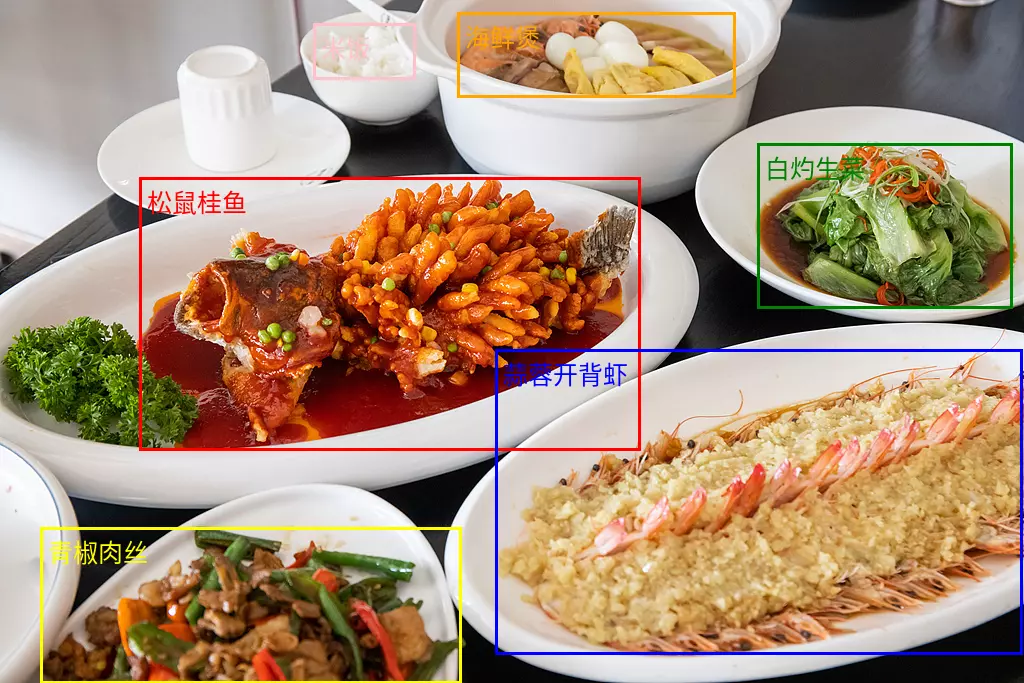

Object localization

The model supports 2D and 3D localization, which can be used to determine object orientation, perspective changes, and occlusion relationships. 3D localization is a new feature of the Qwen3-VL model.

For the Qwen2.5-VL model, object detection is robust within a resolution range of 480 × 480 to 2560 × 2560. Outside this range, detection accuracy may decrease, with occasional bounding box drift.

For more information about how to draw the localization results on the original image, see FAQ.
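A quick pre-check can flag images that fall outside the resolution range stated above for Qwen2.5-VL. This sketch assumes the range applies to each side independently (width and height both between 480 and 2560 pixels), which is one reading of the text. The function and constant names are hypothetical.

```python
MIN_SIDE = 480   # lower bound from the range stated above
MAX_SIDE = 2560  # upper bound from the range stated above


def in_robust_detection_range(width: int, height: int) -> bool:
    """Return True if both sides fall within 480 to 2560 pixels (assumed per-side rule)."""
    return MIN_SIDE <= width <= MAX_SIDE and MIN_SIDE <= height <= MAX_SIDE
```

Images that fail the check could be resized before they are sent, since detection accuracy may decrease outside this range.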

2D localization

| Visualization of 2D localization results

|

Document parsing

Parse image-based documents, such as scans or image PDFs, into QwenVL HTML or QwenVL Markdown format. This format not only accurately recognizes text but also obtains the position information of elements such as images and tables. The Qwen3-VL model adds the ability to parse documents into Markdown format.

Recommended prompts are: qwenvl html (to parse into HTML format) or qwenvl markdown (to parse into Markdown format).

qwenvl markdown. |

Visualization of results |
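The recommended prompts can be placed in the text part of a user message. The sketch below builds a message list in the OpenAI-compatible shape used by the examples on this page. `build_doc_parse_messages` is a hypothetical helper, and the image URL in the usage line is a placeholder.

```python
def build_doc_parse_messages(image_url: str, output_format: str = "markdown") -> list:
    """Build OpenAI-compatible messages for document parsing.

    output_format: "html" or "markdown", mapped to the recommended prompts.
    """
    prompts = {"html": "qwenvl html", "markdown": "qwenvl markdown"}
    if output_format not in prompts:
        raise ValueError(f"output_format must be one of {sorted(prompts)}")
    return [
        {
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompts[output_format]},
            ],
        }
    ]


# Placeholder URL for illustration only.
messages = build_doc_parse_messages("https://example.com/scanned-page.png", "html")
```

The resulting list can be passed as the `messages` argument of `client.chat.completions.create`.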

Video understanding

Analyze video content, such as locating specific events and obtaining timestamps, or generating summaries of key time periods.

Please describe the series of actions of the person in the video. Output in JSON format with start_time, end_time, and event. Please use HH:mm:ss for the timestamp. | { "events": [ { "start_time": "00:00:00", "end_time": "00:00:05", "event": "The person walks towards the table holding a cardboard box and places it on the table." }, { "start_time": "00:00:05", "end_time": "00:00:15", "event": "The person picks up a scanner and scans the label on the cardboard box." }, { "start_time": "00:00:15", "end_time": "00:00:21", "event": "The person puts the scanner back in its place and then picks up a pen to write information in a notebook."}] } |
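When the model returns events in the JSON shape shown above, the HH:mm:ss timestamps can be converted to seconds for further processing. This is a minimal sketch that assumes the reply is valid JSON with an `events` list. `to_seconds` and `event_durations` are hypothetical helpers.

```python
import json


def to_seconds(timestamp: str) -> int:
    """Convert an HH:mm:ss timestamp to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in timestamp.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def event_durations(reply: str) -> list:
    """Return (event description, duration in seconds) pairs from the reply."""
    events = json.loads(reply)["events"]
    return [
        (e["event"], to_seconds(e["end_time"]) - to_seconds(e["start_time"]))
        for e in events
    ]
```

Applied to the sample output above, the three events span 5, 10, and 6 seconds.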

Core capabilities

Enable or disable thinking mode

The qwen3-vl-plus and qwen3-vl-flash series models are hybrid thinking models. They can respond after thinking or respond directly. Use the enable_thinking parameter to control whether to enable thinking mode:

true: Thinking mode is enabled.

false (default): Thinking mode is disabled.

Models with a thinking suffix, such as qwen3-vl-235b-a22b-thinking, are thinking-only models. They always think before responding, and you cannot disable this feature.

Model configuration: In general conversation scenarios that do not involve agent tool calls, we recommend that you do not set a System Message to maintain optimal performance. You can pass instructions such as model role settings and output format requirements through the User Message.

Prioritize streaming output: When thinking mode is enabled, both streaming and non-streaming output are supported. To avoid timeouts due to long response content, we recommend that you prioritize using streaming output.

Limit thinking length: Deep thinking models sometimes output lengthy reasoning processes. You can use the thinking_budget parameter to limit the length of the thinking process. If the number of tokens generated during the model's thinking process exceeds thinking_budget, the reasoning content is truncated, and the model immediately starts to generate the final response. The default value of thinking_budget is the model's maximum chain-of-thought length, listed in Models.
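The rules above can be collected into a small helper that assembles the thinking parameters before a request is sent. This is a sketch under stated assumptions: `build_thinking_params` is a hypothetical function, and it detects thinking-only models by the `-thinking` name suffix, following the example model name given above.

```python
from typing import Optional


def build_thinking_params(
    model: str,
    enable_thinking: Optional[bool] = None,
    thinking_budget: Optional[int] = None,
) -> dict:
    """Assemble enable_thinking and thinking_budget for a request.

    Assumption: a model whose name ends in "-thinking" is thinking-only.
    """
    thinking_only = model.endswith("-thinking")
    if enable_thinking is None:
        # Hybrid models default to False; thinking-only models always think.
        enable_thinking = thinking_only
    if thinking_only and not enable_thinking:
        raise ValueError(f"{model} is a thinking-only model; thinking cannot be disabled")
    params = {"enable_thinking": enable_thinking}
    if thinking_budget is not None:
        if thinking_budget <= 0:
            raise ValueError("thinking_budget must be a positive number of tokens")
        params["thinking_budget"] = thinking_budget
    return params
```

With the OpenAI SDK for Python, the returned dictionary can be passed as `extra_body`. Omitting `thinking_budget` leaves the model's default limit in place.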

OpenAI compatible

The enable_thinking parameter is not a standard OpenAI parameter. If you use the OpenAI SDK for Python, you can pass it through extra_body.

Python

import os

from openai import OpenAI

client = OpenAI(

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

api_key=os.getenv("DASHSCOPE_API_KEY"),

# The following base_url is for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

# If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1

base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

)

reasoning_content = "" # Define the complete thinking process

answer_content = "" # Define the complete response

is_answering = False # Determine whether to end the thinking process and start responding

enable_thinking = True

# Create a chat completion request

completion = client.chat.completions.create(

model="qwen3-vl-plus",

messages=[

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://img.alicdn.com/imgextra/i1/O1CN01gDEY8M1W114Hi3XcN_!!6000000002727-0-tps-1024-406.jpg"

},

},

{"type": "text", "text": "How do I solve this problem?"},

],

},

],

stream=True,

# The enable_thinking parameter enables the thinking process, and the thinking_budget parameter sets the maximum number of tokens for the reasoning process.

# For qwen3-vl-plus and qwen3-vl-flash, thinking can be enabled or disabled with enable_thinking. For models with the 'thinking' suffix, such as qwen3-vl-235b-a22b-thinking, enable_thinking can only be set to true. This does not apply to other Qwen-VL models.

extra_body={

'enable_thinking': enable_thinking,

"thinking_budget": 81920},

# Uncomment the following lines to return token usage in the last chunk

# stream_options={

# "include_usage": True

# }

)

if enable_thinking:
    print("\n" + "=" * 20 + "Thinking Process" + "=" * 20 + "\n")

for chunk in completion:
    # If chunk.choices is empty, print usage
    if not chunk.choices:
        print("\nUsage:")
        print(chunk.usage)
    else:
        delta = chunk.choices[0].delta
        # Print the thinking process
        if hasattr(delta, 'reasoning_content') and delta.reasoning_content is not None:
            print(delta.reasoning_content, end='', flush=True)
            reasoning_content += delta.reasoning_content
        # Skip chunks whose content is empty or None, as the Node.js example does
        elif delta.content:
            # Start responding
            if not is_answering:
                print("\n" + "=" * 20 + "Complete Response" + "=" * 20 + "\n")
                is_answering = True
            # Print the response process
            print(delta.content, end='', flush=True)
            answer_content += delta.content

# print("=" * 20 + "Complete Thinking Process" + "=" * 20 + "\n")
# print(reasoning_content)
# print("=" * 20 + "Complete Response" + "=" * 20 + "\n")
# print(answer_content)

Node.js

import OpenAI from "openai";

// Initialize the OpenAI client

const openai = new OpenAI({

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured the environment variable, replace the following line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

// The following base_url is for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

// If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1

baseURL: "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

});

let reasoningContent = '';

let answerContent = '';

let isAnswering = false;

let enableThinking = true;

let messages = [

{

role: "user",

content: [

{ type: "image_url", image_url: { "url": "https://img.alicdn.com/imgextra/i1/O1CN01gDEY8M1W114Hi3XcN_!!6000000002727-0-tps-1024-406.jpg" } },

{ type: "text", text: "Solve this problem" },

]

}]

async function main() {

try {

const stream = await openai.chat.completions.create({

model: 'qwen3-vl-plus',

messages: messages,

stream: true,

// Note: In the Node.js SDK, non-standard parameters such as enable_thinking are passed as top-level properties and do not need to be wrapped in extra_body.

enable_thinking: enableThinking,

thinking_budget: 81920

});

if (enableThinking){console.log('\n' + '='.repeat(20) + 'Thinking Process' + '='.repeat(20) + '\n');}

for await (const chunk of stream) {

if (!chunk.choices?.length) {

console.log('\nUsage:');

console.log(chunk.usage);

continue;

}

const delta = chunk.choices[0].delta;

// Process the thinking process

if (delta.reasoning_content) {

process.stdout.write(delta.reasoning_content);

reasoningContent += delta.reasoning_content;

}

// Process the formal response

else if (delta.content) {

if (!isAnswering) {

console.log('\n' + '='.repeat(20) + 'Complete Response' + '='.repeat(20) + '\n');

isAnswering = true;

}

process.stdout.write(delta.content);

answerContent += delta.content;

}

}

} catch (error) {

console.error('Error:', error);

}

}

main();

curl

# ======= Important =======

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# === Delete this comment before execution ===

curl --location 'https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions' \

--header "Authorization: Bearer $DASHSCOPE_API_KEY" \

--header 'Content-Type: application/json' \

--data '{

"model": "qwen3-vl-plus",

"messages": [

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://img.alicdn.com/imgextra/i1/O1CN01gDEY8M1W114Hi3XcN_!!6000000002727-0-tps-1024-406.jpg"

}

},

{

"type": "text",

"text": "Please solve this problem"

}

]

}

],

"stream":true,

"stream_options":{"include_usage":true},

"enable_thinking": true,

"thinking_budget": 81920

}'

DashScope

Python

import os

import dashscope

from dashscope import MultiModalConversation

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = "https://dashscope-intl.aliyuncs.com/api/v1"

enable_thinking=True

messages = [

{

"role": "user",

"content": [

{"image": "https://img.alicdn.com/imgextra/i1/O1CN01gDEY8M1W114Hi3XcN_!!6000000002727-0-tps-1024-406.jpg"},

{"text": "Solve this problem?"}

]

}

]

response = MultiModalConversation.call(

# If you have not configured the environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx",

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

api_key=os.getenv('DASHSCOPE_API_KEY'),

model="qwen3-vl-plus",

messages=messages,

stream=True,

# The enable_thinking parameter enables the thinking process.

# For qwen3-vl-plus and qwen3-vl-flash, thinking can be enabled or disabled with enable_thinking. For models with the 'thinking' suffix, such as qwen3-vl-235b-a22b-thinking, enable_thinking can only be set to true. This does not apply to other Qwen-VL models.

enable_thinking=enable_thinking,

# The thinking_budget parameter sets the maximum number of tokens for the reasoning process.

thinking_budget=81920,

)

# Define the complete thinking process

reasoning_content = ""

# Define the complete response

answer_content = ""

# Determine whether to end the thinking process and start responding

is_answering = False

if enable_thinking:
    print("=" * 20 + "Thinking Process" + "=" * 20)

for chunk in response:
    # If both the thinking process and the response are empty, ignore
    message = chunk.output.choices[0].message
    reasoning_content_chunk = message.get("reasoning_content", None)
    if (chunk.output.choices[0].message.content == [] and
            reasoning_content_chunk == ""):
        pass
    else:
        # If it is currently in the thinking process
        if reasoning_content_chunk is not None and chunk.output.choices[0].message.content == []:
            print(chunk.output.choices[0].message.reasoning_content, end="")
            reasoning_content += chunk.output.choices[0].message.reasoning_content
        # If it is currently responding
        elif chunk.output.choices[0].message.content != []:
            if not is_answering:
                print("\n" + "=" * 20 + "Complete Response" + "=" * 20)
                is_answering = True
            print(chunk.output.choices[0].message.content[0]["text"], end="")
            answer_content += chunk.output.choices[0].message.content[0]["text"]

# To print the complete thinking process and complete response, uncomment and run the following code
# print("=" * 20 + "Complete Thinking Process" + "=" * 20 + "\n")
# print(f"{reasoning_content}")
# print("=" * 20 + "Complete Response" + "=" * 20 + "\n")
# print(f"{answer_content}")

Java

// DashScope SDK version >= 2.21.10

import java.util.*;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import io.reactivex.Flowable;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.exception.InputRequiredException;

import java.lang.System;

import com.alibaba.dashscope.utils.Constants;

public class Main {

// The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

// If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

static {Constants.baseHttpApiUrl="https://dashscope-intl.aliyuncs.com/api/v1";}

private static final Logger logger = LoggerFactory.getLogger(Main.class);

private static StringBuilder reasoningContent = new StringBuilder();

private static StringBuilder finalContent = new StringBuilder();

private static boolean isFirstPrint = true;

private static void handleGenerationResult(MultiModalConversationResult message) {

String re = message.getOutput().getChoices().get(0).getMessage().getReasoningContent();

String reasoning = Objects.isNull(re)?"":re; // Default value

List<Map<String, Object>> content = message.getOutput().getChoices().get(0).getMessage().getContent();

if (!reasoning.isEmpty()) {

reasoningContent.append(reasoning);

if (isFirstPrint) {

System.out.println("====================Thinking Process====================");

isFirstPrint = false;

}

System.out.print(reasoning);

}

if (Objects.nonNull(content) && !content.isEmpty()) {

Object text = content.get(0).get("text");

finalContent.append(content.get(0).get("text"));

if (!isFirstPrint) {

System.out.println("\n====================Complete Response====================");

isFirstPrint = true;

}

System.out.print(text);

}

}

public static MultiModalConversationParam buildMultiModalConversationParam(MultiModalMessage Msg) {

return MultiModalConversationParam.builder()

// If you have not configured the environment variable, replace the following line with your Model Studio API key: .apiKey("sk-xxx")

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen3-vl-plus")

.messages(Arrays.asList(Msg))

.enableThinking(true)

.thinkingBudget(81920)

.incrementalOutput(true)

.build();

}

public static void streamCallWithMessage(MultiModalConversation conv, MultiModalMessage Msg)

throws NoApiKeyException, ApiException, InputRequiredException, UploadFileException {

MultiModalConversationParam param = buildMultiModalConversationParam(Msg);

Flowable<MultiModalConversationResult> result = conv.streamCall(param);

result.blockingForEach(message -> {

handleGenerationResult(message);

});

}

public static void main(String[] args) {

try {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage userMsg = MultiModalMessage.builder()

.role(Role.USER.getValue())

.content(Arrays.asList(Collections.singletonMap("image", "https://img.alicdn.com/imgextra/i1/O1CN01gDEY8M1W114Hi3XcN_!!6000000002727-0-tps-1024-406.jpg"),

Collections.singletonMap("text", "Please solve this problem")))

.build();

streamCallWithMessage(conv, userMsg);

// Print the final result

// if (reasoningContent.length() > 0) {

// System.out.println("\n====================Complete Response====================");

// System.out.println(finalContent.toString());

// }

} catch (ApiException | NoApiKeyException | UploadFileException | InputRequiredException e) {

logger.error("An exception occurred: {}", e.getMessage());

}

System.exit(0);

}

}

curl

# ======= Important =======

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# === Delete this comment before execution ===

curl -X POST https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-H 'X-DashScope-SSE: enable' \

-d '{

"model": "qwen3-vl-plus",

"input":{

"messages":[

{

"role": "user",

"content": [

{"image": "https://img.alicdn.com/imgextra/i1/O1CN01gDEY8M1W114Hi3XcN_!!6000000002727-0-tps-1024-406.jpg"},

{"text": "Please solve this problem"}

]

}

]

},

"parameters":{

"enable_thinking": true,

"incremental_output": true,

"thinking_budget": 81920

}

}'

Multiple image input

Qwen-VL supports passing multiple images in a single request, which is useful for tasks such as product comparison and multi-page document processing. To do this, include multiple image objects in the content array of the user message.

The number of images you can pass is bounded by the model's token limit: the total number of tokens for all images and text must be less than the model's maximum input.
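As an illustration of the content array described above, the following minimal sketch builds the OpenAI-compatible message structure from a list of image URLs. The helper name build_multi_image_content is hypothetical and not part of any SDK. The item shapes mirror the examples in this section, and no request is sent.

```python
from typing import Dict, List


def build_multi_image_content(image_urls: List[str], prompt: str) -> List[Dict]:
    """Hypothetical helper: one image_url item per image, followed by the text prompt."""
    if not image_urls:
        raise ValueError("at least one image URL is required")
    # Each image becomes its own object in the content array.
    content: List[Dict] = [
        {"type": "image_url", "image_url": {"url": url}} for url in image_urls
    ]
    # The text prompt is appended after the images, as in the examples in this section.
    content.append({"type": "text", "text": prompt})
    return content


messages = [
    {
        "role": "user",
        "content": build_multi_image_content(
            [
                "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg",
                "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png",
            ],
            "What do these images depict?",
        ),
    }
]
print(len(messages[0]["content"]))  # two image items plus one text item
```

The resulting messages list can be passed as the messages argument of client.chat.completions.create.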

OpenAI compatible

Python

import os

from openai import OpenAI

client = OpenAI(

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

api_key=os.getenv("DASHSCOPE_API_KEY"),

# The following base_url is for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

# If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1

base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen3-vl-plus", # This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages=[

{"role": "user","content": [

{"type": "image_url","image_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},},

{"type": "image_url","image_url": {"url": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"},},

{"type": "text", "text": "What do these images depict?"},

],

}

],

)

print(completion.choices[0].message.content)

Response

Image 1 shows a scene of a woman and a Labrador retriever interacting on a beach. The woman is wearing a plaid shirt and sitting on the sand, shaking hands with the dog. The background is the ocean waves and the sky, and the whole picture is filled with a warm and pleasant atmosphere.

Image 2 shows a scene of a tiger walking in a forest. The tiger's coat is orange with black stripes, and it is stepping forward. The surroundings are dense trees and vegetation, and the ground is covered with fallen leaves. The whole picture gives a feeling of wild nature.

Node.js

import OpenAI from "openai";

const openai = new OpenAI(

{

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured the environment variable, replace the following line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

// The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

// If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/compatible-mode/v1

baseURL: "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

}

);

async function main() {

const response = await openai.chat.completions.create({

model: "qwen3-vl-plus", // This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages: [

{role: "user",content: [

{type: "image_url",image_url: {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"}},

{type: "image_url",image_url: {"url": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"}},

{type: "text", text: "What do these images depict?" },

]}]

});

console.log(response.choices[0].message.content);

}

main()

Response

In the first image, a person and a dog are interacting on a beach. The person is wearing a plaid shirt, and the dog is wearing a collar. They seem to be shaking hands or giving a high-five.

In the second image, a tiger is walking in a forest. The tiger's coat is orange with black stripes, and the background is green trees and vegetation.

curl

# ======= Important =======

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions

# === Delete this comment before execution ===

curl -X POST https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen3-vl-plus",

"messages": [

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

}

},

{

"type": "image_url",

"image_url": {

"url": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"

}

},

{

"type": "text",

"text": "What do these images depict?"

}

]

}

]

}'

Response

{

"choices": [

{

"message": {

"content": "Image 1 shows a scene of a woman and a Labrador retriever interacting on a beach. The woman is wearing a plaid shirt and sitting on the sand, shaking hands with the dog. The background is a sea view and a sunset sky, and the whole picture looks very warm and harmonious.\n\nImage 2 shows a scene of a tiger walking in a forest. The tiger's coat is orange with black stripes, and it is stepping forward. The surroundings are dense trees and vegetation, and the ground is covered with fallen leaves. The whole picture is full of natural wildness and vitality.",

"role": "assistant"

},

"finish_reason": "stop",

"index": 0,

"logprobs": null

}

],

"object": "chat.completion",

"usage": {

"prompt_tokens": 2497,

"completion_tokens": 109,

"total_tokens": 2606

},

"created": 1725948561,

"system_fingerprint": null,

"model": "qwen3-vl-plus",

"id": "chatcmpl-0fd66f46-b09e-9164-a84f-3ebbbedbac15"

}

DashScope

Python

import os

import dashscope

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

messages = [

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"image": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"},

{"text": "What do these images depict?"}

]

}

]

response = dashscope.MultiModalConversation.call(

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If you have not configured the environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen3-vl-plus', # This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages=messages

)

print(response.output.choices[0].message.content[0]["text"])

Response

These images show some animals and natural scenes. In the first image, a person and a dog are interacting on a beach. The second image is of a tiger walking in a forest.

Java

import java.util.Arrays;

import java.util.Collections;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.Constants;

public class Main {

static {

// The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

// If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

Constants.baseHttpApiUrl="https://dashscope-intl.aliyuncs.com/api/v1";

}

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"),

Collections.singletonMap("image", "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"),

Collections.singletonMap("text", "What do these images depict?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen3-vl-plus") // This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

.messages(Arrays.asList(userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Response

These images show some animals and natural scenes.

1. First image: A woman and a dog are interacting on a beach. The woman is wearing a plaid shirt and sitting on the sand, and the dog is wearing a collar and extending its paw to shake hands with the woman.

2. Second image: A tiger is walking in a forest. The tiger's coat is orange with black stripes, and the background is trees and leaves.

curl

# ======= Important =======

# The following is the base_url for the Singapore region. If you use a model in the Virginia region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# === Delete this comment before execution ===

curl --location 'https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \

--header "Authorization: Bearer $DASHSCOPE_API_KEY" \

--header 'Content-Type: application/json' \

--data '{

"model": "qwen3-vl-plus",

"input":{

"messages":[

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"image": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"},

{"text": "What do these images show?"}

]

}

]

}

}'

Response

{

"output": {

"choices": [

{

"finish_reason": "stop",

"message": {

"role": "assistant",

"content": [

{

"text": "These images show some animals and natural scenes. In the first image, a person and a dog are interacting on a beach. The second image is of a tiger walking in a forest."

}

]

}

}

]

},

"usage": {

"output_tokens": 81,

"input_tokens": 1277,

"image_tokens": 2497

},

"request_id": "ccf845a3-dc33-9cda-b581-20fe7dc23f70"

}

Video understanding

Qwen-VL can understand video content. You can provide a video either as a video file or as an image list (video frames). The following examples show how to analyze an online video or an image list specified by URL. For more information about video limits, including the number of images that can be passed in an image list, see the Video limits section.

Use the latest or a recent snapshot version of the model for better performance in understanding video files.

Video file

Qwen-VL analyzes content by extracting a sequence of frames from a video. The frame extraction frequency determines the level of detail in the model's analysis, and different SDKs use different frame extraction frequencies. Use the following parameters to control the frame extraction policy:

fps: Controls the frame extraction frequency. One frame is extracted every 1/fps seconds. The value range is [0.1, 10] and the default value is 2.0.

High-speed motion scenes: Set a higher value to capture more detail.

Static or long videos: Set a lower value for efficiency.

max_frames: The upper limit on the number of frames extracted. When the number of frames calculated from fps exceeds max_frames, the system automatically samples frames evenly to stay within the limit. This parameter takes effect only with the DashScope SDK.
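To make the interaction between fps and max_frames concrete, the following sketch estimates how many frames would be extracted from a video of a given length. It is an approximation under stated assumptions: the function names are hypothetical, the count is taken as duration × fps rounded down, and the even sampling shown is only one plausible scheme. The service's exact rounding and sampling behavior is not described here.

```python
import math
from typing import List, Optional


def estimate_frame_count(duration_s: float, fps: float = 2.0,
                         max_frames: Optional[int] = None) -> int:
    """Hypothetical estimate: one frame every 1/fps seconds, capped at max_frames."""
    if not 0.1 <= fps <= 10:
        raise ValueError("fps must be in the range [0.1, 10]")
    # Assumption: duration * fps frames, rounded down, with at least one frame.
    frames = max(1, math.floor(duration_s * fps))
    if max_frames is not None:
        frames = min(frames, max_frames)
    return frames


def even_sample_indices(total: int, limit: int) -> List[int]:
    """Pick `limit` evenly spaced indices from `total` candidate frames (illustrative only)."""
    if total <= limit:
        return list(range(total))
    step = total / limit
    return [math.floor(i * step) for i in range(limit)]


print(estimate_frame_count(60, fps=2.0))                  # 60 s at 2 fps
print(estimate_frame_count(60, fps=2.0, max_frames=100))  # capped by max_frames
print(even_sample_indices(10, 5))
```

A lower fps reduces the frame count, and therefore the image tokens consumed, which is why a lower value suits static or long videos.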

OpenAI compatible

When you directly input a video file to Qwen-VL using the OpenAI SDK or HTTP method, you must set the "type" parameter in the user message to "video_url".
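The following sketch shows one way to assemble such a message before sending it. The helper build_video_url_message is hypothetical. It only mirrors the request shape used in the examples in this section and checks fps against the documented range [0.1, 10]. It does not call the API.

```python
from typing import Dict, List


def build_video_url_message(video_url: str, prompt: str, fps: float = 2.0) -> List[Dict]:
    """Hypothetical helper: build a user message that passes a video file by URL."""
    if not 0.1 <= fps <= 10:
        raise ValueError("fps must be in the range [0.1, 10]")
    return [
        {
            "role": "user",
            "content": [
                # For a video file, the item type is "video_url".
                {"type": "video_url", "video_url": {"url": video_url}, "fps": fps},
                {"type": "text", "text": prompt},
            ],
        }
    ]


video_messages = build_video_url_message(
    "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4",
    "What is the content of this video?",
    fps=2,
)
print(video_messages[0]["content"][0]["type"])
```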

Python

import os

from openai import OpenAI

client = OpenAI(

# API keys vary by region. To get an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If the environment variable is not configured, replace the following line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv("DASHSCOPE_API_KEY"),

# The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/compatible-mode/v1

base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen3-vl-plus",

messages=[

{

"role": "user",

"content": [

# When passing a video file directly, set the value of type to video_url

{

"type": "video_url",

"video_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4"

},

"fps": 2

},

{

"type": "text",

"text": "What is the content of this video?"

}

]

}

]

)

print(completion.choices[0].message.content)

Node.js

import OpenAI from "openai";

const openai = new OpenAI(

{

// API keys vary by region. To get an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If the environment variable is not configured, replace the following line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

// The following baseURL is for the Singapore region. If you use a model in the US (Virginia) region, change the baseURL to https://dashscope-us.aliyuncs.com/compatible-mode/v1

// If you use a model in the China (Beijing) region, replace the baseURL with: https://dashscope.aliyuncs.com/compatible-mode/v1

baseURL: "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

}

);

async function main() {

const response = await openai.chat.completions.create({

model: "qwen3-vl-plus",

messages: [

{

role: "user",

content: [

// When passing a video file directly, set the value of type to video_url

{

type: "video_url",

video_url: {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4"

},

"fps": 2

},

{

type: "text",

text: "What is the content of this video?"

}

]

}

]

});

console.log(response.choices[0].message.content);

}

main();

curl

# ======= Important =======

# API keys vary by region. To get an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, change the base_url to https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions

# === Delete this comment before execution ===

curl -X POST https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen3-vl-plus",

"messages": [

{

"role": "user",

"content": [

{

"type": "video_url",

"video_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4"

},

"fps":2

},

{

"type": "text",

"text": "What is the content of this video?"

}

]

}

]

}'

DashScope

Python

import dashscope

import os

# The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

messages = [

{"role": "user",

"content": [

# The fps parameter controls the video frame extraction frequency, meaning one frame is extracted every 1/fps seconds. For complete usage, see https://www.alibabacloud.com/help/en/model-studio/use-qwen-by-calling-api?#2ed5ee7377fum

{"video": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4","fps":2},

{"text": "What is the content of this video?"}

]

}

]

response = dashscope.MultiModalConversation.call(

# API keys vary by region. To get an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If the environment variable is not configured, replace the following line with your Model Studio API key: api_key ="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen3-vl-plus',

messages=messages

)

print(response.output.choices[0].message.content[0]["text"])

Java

import java.util.Arrays;

import java.util.Collections;

import java.util.HashMap;

import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.Constants;

public class Main {

static {

// The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1

// If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1

Constants.baseHttpApiUrl="https://dashscope-intl.aliyuncs.com/api/v1";

}

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

// The fps parameter controls the video frame extraction frequency, meaning one frame is extracted every 1/fps seconds. For complete usage, see https://www.alibabacloud.com/help/en/model-studio/use-qwen-by-calling-api?#2ed5ee7377fum

Map<String, Object> params = new HashMap<>();

params.put("video", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4");

params.put("fps", 2);

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

params,

Collections.singletonMap("text", "What is the content of this video?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// If you use a model in the China (Beijing) region, you must use an API key from the China (Beijing) region. Get an API key at: https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If the environment variable is not configured, replace the following line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen3-vl-plus")

.messages(Arrays.asList(userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

curl

# ======= Important =======

# The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, change the base_url to https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# If you use a model in the China (Beijing) region, replace the base_url with: https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# API keys vary by region. To get an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# === Delete this comment before execution ===

curl -X POST https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen3-vl-plus",

"input":{

"messages":[

{"role": "user","content": [{"video": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4","fps":2},

{"text": "What is the content of this video?"}]}]}

}'

Image list

When a video is passed as a list of images (pre-extracted video frames), you can use the fps parameter to tell the model the time interval between frames. This helps the model better understand the sequence, duration, and dynamic changes of events. The fps value indicates that the frames were extracted from the original video every 1/fps seconds.
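As a rough illustration of what fps conveys for an image list, the sketch below computes the timestamp each frame would correspond to. It assumes the frames are evenly spaced and that the first frame sits at 0 seconds. The function name frame_timestamps is hypothetical, and the model's internal handling of fps may differ.

```python
from typing import List


def frame_timestamps(frame_count: int, fps: float) -> List[float]:
    """Hypothetical helper: timestamp in seconds of each frame, assuming a 1/fps interval."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    # Assumption: the first frame is at t = 0 and frames are evenly spaced.
    return [i / fps for i in range(frame_count)]


# Four frames with fps=2, as in the examples in this section.
print(frame_timestamps(4, 2))
```

With fps set to 2, four frames span about 1.5 seconds of the original video. With fps set to 0.5, the same four frames would span 6 seconds.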

OpenAI compatible

When you input a video as a list of images to the Qwen-VL model using the OpenAI SDK or an HTTP request, you must set the "type" parameter in the user message to "video".

Python

import os

from openai import OpenAI

client = OpenAI(

# API keys vary by region. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If you have not configured an environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv("DASHSCOPE_API_KEY"),

# The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, replace the base_url with https://dashscope-us.aliyuncs.com/compatible-mode/v1.

# If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1.

base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen3-vl-plus", # This example uses qwen3-vl-plus. Replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/models

messages=[{"role": "user","content": [

# When you input an image list, set the "type" parameter in the user message to "video".

{"type": "video","video": [

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"],

"fps":2},

{"type": "text","text": "Describe the process shown in this video"},

]}]

)

print(completion.choices[0].message.content)

Node.js

// Make sure that you have specified "type": "module" in the package.json file.

import OpenAI from "openai";

const openai = new OpenAI({

// API keys vary by region. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured an environment variable, replace the following line with your Model Studio API key: apiKey: "sk-xxx",

apiKey: process.env.DASHSCOPE_API_KEY,

// The following baseURL is for the Singapore region. If you use a model in the US (Virginia) region, replace the baseURL with https://dashscope-us.aliyuncs.com/compatible-mode/v1.

// If you use a model in the China (Beijing) region, replace the baseURL with https://dashscope.aliyuncs.com/compatible-mode/v1.

baseURL: "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

});

async function main() {

const response = await openai.chat.completions.create({

model: "qwen3-vl-plus", // This example uses qwen3-vl-plus. Replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/models

messages: [{

role: "user",

content: [

{

// When you input an image list, set the "type" parameter in the user message to "video".

type: "video",

video: [

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"],

"fps": 2

},

{

type: "text",

text: "Describe the process shown in this video"

}

]

}]

});

console.log(response.choices[0].message.content);

}

main();

curl

# ======= Important =======

# API keys vary by region. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# The following base_url is for the Singapore region. If you use a model in the US (Virginia) region, replace the base_url with https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions.

# If you use a model in the China (Beijing) region, replace the base_url with https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions.

# === Delete this comment before you run the command ===

curl -X POST https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen3-vl-plus",

"messages": [{"role": "user","content": [{"type": "video","video": [

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"],

"fps":2},

{"type": "text","text": "Describe the process shown in this video"}]}]

}'

DashScope

Python

import os

import dashscope

# The following base_http_api_url is for the Singapore region. If you use a model in the US (Virginia) region, replace the URL with https://dashscope-us.aliyuncs.com/api/v1.

# If you use a model in the China (Beijing) region, replace the URL with https://dashscope.aliyuncs.com/api/v1.

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

messages = [{"role": "user",

"content": [

# When you input an image list, the fps parameter applies to the Qwen2.5-VL and Qwen3-VL series models.

{"video":["https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"],

"fps":2},

{"text": "Describe the process shown in this video"}]}]

response = dashscope.MultiModalConversation.call(

# API keys vary by region. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If you have not configured an environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv("DASHSCOPE_API_KEY"),

model='qwen3-vl-plus', # This example uses qwen3-vl-plus. Replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages=messages

)

print(response.output.choices[0].message.content[0]["text"])

Java

// The version of the DashScope SDK must be 2.21.10 or later.

import java.util.Arrays;

import java.util.Collections;

import java.util.HashMap;

import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.Constants;

public class Main {

static {

// The following baseHttpApiUrl is for the Singapore region. If you use a model in the US (Virginia) region, replace the URL with https://dashscope-us.aliyuncs.com/api/v1.

// If you use a model in the China (Beijing) region, replace the URL with https://dashscope.aliyuncs.com/api/v1.

Constants.baseHttpApiUrl="https://dashscope-intl.aliyuncs.com/api/v1";

}

private static final String MODEL_NAME = "qwen3-vl-plus"; // This example uses qwen3-vl-plus. Replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

public static void videoImageListSample() throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

// When you input an image list, the fps parameter applies to the Qwen2.5-VL and Qwen3-VL series models.

Map<String, Object> params = new HashMap<>();

params.put("video", Arrays.asList("https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"));

params.put("fps", 2);

MultiModalMessage userMessage = MultiModalMessage.builder()

.role(Role.USER.getValue())

.content(Arrays.asList(

params,

Collections.singletonMap("text", "Describe the process shown in this video")))

.build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// API keys vary by region. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured an environment variable, replace the following line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model(MODEL_NAME)

.messages(Arrays.asList(userMessage)).build();

MultiModalConversationResult result = conv.call(param);

System.out.print(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

videoImageListSample();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

curl

# ======= Important =======

# The following URL is for the Singapore region. If you use a model in the US (Virginia) region, replace the URL with https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation.

# If you use a model in the China (Beijing) region, replace the URL with https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation.

# API keys vary by region. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# === Delete this comment before you run the command ===

curl -X POST https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen3-vl-plus",

"input": {

"messages": [

{

"role": "user",

"content": [

{

"video": [

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"

],

"fps":2

},

{

"text": "Describe the process shown in this video"

}

]

}

]

}

}'

Pass local files (Base64 encoding or file path)

Qwen-VL provides two ways to upload local files: Base64 encoding and direct file path upload. Choose the upload method based on the file size and SDK type. For specific recommendations, see How to choose a file upload method. Both methods must meet the file requirements described in Image limits.

Base64 encoding upload

Convert the file to a Base64-encoded string, and then pass the string to the model. This method works with the OpenAI SDK, the DashScope SDKs, and direct HTTP requests.
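
As a rough illustration of the encoding step only, the following sketch reads a local file and wraps its Base64 string in a data URL. The helper name encode_file_as_data_url, the data:<mime>;base64, prefix, and the MIME-type guess based on the file extension are assumptions made for this sketch. Check the Base64 examples in this topic for the exact string format that each interface expects.

```python
import base64
import mimetypes


def encode_file_as_data_url(path: str) -> str:
    """Hypothetical helper: return the file at `path` as a Base64 data URL."""
    # Guess the MIME type from the file extension; fall back to a generic type.
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None:
        mime_type = "application/octet-stream"
    # Read the raw bytes and Base64-encode them into an ASCII string.
    with open(path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("utf-8")
    # Assumed format: a data URL that carries the MIME type and the payload.
    return f"data:{mime_type};base64,{encoded}"
```

Base64 encoding increases the payload size by roughly one third, so this approach is usually better suited to small files.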

File path upload

Pass the local file path directly to the model. This method is supported only by the DashScope Python and Java SDKs, not by DashScope HTTP or OpenAI-compatible methods.
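
The path handling can be sketched as follows. This is a minimal sketch that assumes the file:// prefix followed by an absolute path, which is the form used by the SDK examples in this topic. The helper name to_file_url is hypothetical, and the exact form expected on your operating system (Windows paths in particular) should be confirmed for your SDK.

```python
import os


def to_file_url(local_path: str) -> str:
    """Hypothetical helper: prefix an absolute local path with file://."""
    # Expand "~" and resolve relative paths so that the result is absolute.
    absolute_path = os.path.abspath(os.path.expanduser(local_path))
    # Fail early with a clear error instead of sending a bad path to the SDK.
    if not os.path.isfile(absolute_path):
        raise FileNotFoundError(f"No such file: {absolute_path}")
    return f"file://{absolute_path}"


# The returned string is what goes into the message content, for example:
# content = [{"image": to_file_url("xxx/eagle.png")},
#            {"text": "What is depicted in the image?"}]
```

Checking that the file exists before the call keeps path mistakes separate from API errors.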

Refer to the table below to specify the file path based on your programming language and operating system.

Image

Pass by file path

Python

import os

import dashscope

# The following base_http_api_url is for the Singapore region. If you use a model in the US (Virginia) region, replace the URL with https://dashscope-us.aliyuncs.com/api/v1

# If you use a model in the China (Beijing) region, replace the URL with https://dashscope.aliyuncs.com/api/v1

dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

# Replace xxx/eagle.png with the absolute path of your local image.

local_path = "xxx/eagle.png"

image_path = f"file://{local_path}"

messages = [

{'role':'user',

'content': [{'image': image_path},

{'text': 'What is depicted in the image?'}]}]

response = dashscope.MultiModalConversation.call(

# The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

# If you have not configured the environment variable, replace the following line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen3-vl-plus', # This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

messages=messages)

print(response.output.choices[0].message.content[0]["text"])

Java

import java.util.Arrays;

import java.util.Collections;

import java.util.HashMap;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.Constants;

public class Main {

static {

// The following baseHttpApiUrl is for the Singapore region. If you use a model in the US (Virginia) region, replace the URL with https://dashscope-us.aliyuncs.com/api/v1

// If you use a model in the China (Beijing) region, replace the URL with https://dashscope.aliyuncs.com/api/v1

Constants.baseHttpApiUrl="https://dashscope-intl.aliyuncs.com/api/v1";

}

public static void callWithLocalFile(String localPath)

throws ApiException, NoApiKeyException, UploadFileException {

String filePath = "file://"+localPath;

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(new HashMap<String, Object>(){{put("image", filePath);}},

new HashMap<String, Object>(){{put("text", "What is depicted in the image?");}})).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// The API keys for different regions are different. To obtain an API key, see https://www.alibabacloud.com/help/en/model-studio/get-api-key

// If you have not configured the environment variable, replace the following line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen3-vl-plus") // This example uses qwen3-vl-plus. You can replace the model name as needed. For a list of models, see https://www.alibabacloud.com/help/en/model-studio/getting-started/models

.messages(Arrays.asList(userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

// Replace xxx/eagle.png with the absolute path of your local image

callWithLocalFile("xxx/eagle.png");

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Pass in Base64 encoding

OpenAI compatible

Python

from openai import OpenAI

import os

import base64