If you want to use a separate Logtail process to collect logs from all containers on a Kubernetes node, you can install Logtail in a Kubernetes cluster in DaemonSet mode. This topic describes the implementation, limits, prerequisites, and procedure of collecting container text logs in DaemonSet mode.

Implementation

DaemonSet mode

In DaemonSet mode, only one Logtail container runs on a node in a Kubernetes cluster. You can use Logtail to collect logs from all containers on a node.

When a node is added to a Kubernetes cluster, the cluster automatically creates a Logtail container on the new node. When a node is removed from a Kubernetes cluster, the cluster automatically destroys the Logtail container on the node. DaemonSets and custom identifier-based machine groups eliminate the need to manually manage Logtail processes.

Container discovery

Before a Logtail container can collect logs from other containers, the Logtail container must identify and determine which containers are running. This process is called container discovery. In the container discovery phase, the Logtail container does not communicate with the kube-apiserver component of the Kubernetes cluster. Instead, the Logtail container communicates with the container runtime daemon of the node on which the Logtail container runs to obtain information about all containers on the node. This prevents the container discovery process from generating pressure on the kube-apiserver component.

When you use Logtail to collect logs, you can specify conditions such as namespaces, pod names, pod labels, and container environment variables to determine the containers from which Logtail collects logs and the containers that Logtail skips.
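The filter conditions can be pictured as predicates that must all hold for a container to be selected. The following Python sketch is an illustration only, not Logtail's implementation. The `ContainerInfo` type, the `matches` function, and the sample containers are hypothetical, and the use of `re.search` for regular expression matching is an assumption.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ContainerInfo:
    """Hypothetical record of what container discovery learns about a container."""
    namespace: str
    pod_name: str
    container_name: str
    pod_labels: dict = field(default_factory=dict)


def matches(container, namespace_regex=None, pod_regex=None,
            container_regex=None, include_labels=None):
    """Return True only if every condition that is set matches (logical AND)."""
    if namespace_regex and not re.search(namespace_regex, container.namespace):
        return False
    if pod_regex and not re.search(pod_regex, container.pod_name):
        return False
    if container_regex and not re.search(container_regex, container.container_name):
        return False
    # Every requested label must be present with the requested value.
    for key, value in (include_labels or {}).items():
        if container.pod_labels.get(key) != value:
            return False
    return True


# Made-up containers on one node.
containers = [
    ContainerInfo("default", "web-0", "my-app", {"tier": "frontend"}),
    ContainerInfo("default", "web-0", "sidecar", {"tier": "frontend"}),
    ContainerInfo("kube-system", "dns-0", "app-dns"),
]
selected = [c.container_name for c in containers
            if matches(c, namespace_regex="^default$", container_regex="^(.*app.*)$")]
print(selected)
```

Only `my-app` satisfies both conditions: `sidecar` fails the container name condition, and `app-dns` fails the namespace condition.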

File path mapping for containers

Pods in a Kubernetes cluster are isolated. As a result, the Logtail container in a pod cannot directly access the files of containers in a different pod. The file system of a container is created by mounting the file system of the container host to the container. A Logtail container can access any file on the container host only after the file system that includes the root directory of the container host is mounted to the Logtail container. This way, the Logtail container can collect logs from the files in the file system. The relationship between file paths in a container and file paths on the container host is called file path mapping.

For example, a file path in a container is /log/app.log. After file path mapping, the file path on the container host is /var/lib/docker/containers/<container-id>/log/app.log. By default, the file system that includes the root directory of the container host is mounted to the /logtail_host directory of the Logtail container. Therefore, the Logtail container collects logs from /logtail_host/var/lib/docker/containers/<container-id>/log/app.log.
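The mapping in this example is a plain path concatenation, which the following Python sketch reproduces. The function name `to_logtail_path` is hypothetical, and the container root directory on the host is passed in as a parameter because its real location depends on the container runtime and storage driver.

```python
from pathlib import PurePosixPath

# Default mount point of the host root directory inside the Logtail container.
LOGTAIL_HOST_MOUNT = PurePosixPath("/logtail_host")


def to_logtail_path(container_root_on_host: str, path_in_container: str) -> str:
    """Map a path inside a container to the path that the Logtail container reads."""
    # Step 1: container path -> host path.
    host_path = PurePosixPath(container_root_on_host) / path_in_container.lstrip("/")
    # Step 2: host path -> path under the Logtail mount point.
    return str(LOGTAIL_HOST_MOUNT / host_path.relative_to("/"))


print(to_logtail_path("/var/lib/docker/containers/<container-id>", "/log/app.log"))
```

The printed path is the one that the Logtail container reads in the example above.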

Limits

Container runtime: Logtail can collect data only from the containers that use the Docker engine or containerd engine. For Docker containers, only overlay and overlay2 storage drivers are supported. If other storage drivers are used, you must mount a volume to the directory of logs. Then, a temporary directory is generated.

Volume mounting: If a File Storage NAS file system is mounted to the directory of logs by using a PersistentVolumeClaim (PVC), you cannot install Logtail in DaemonSet mode. In this case, we recommend that you install Logtail in Sidecar or Deployment mode so that Logtail can collect logs. For more information, see Collect text logs from Kubernetes containers in Sidecar mode.

Log file path:

The symbolic links of file paths in containers are not supported. You must specify an actual path as the collection directory.

If a volume is mounted to the data directory of an application container, you must specify the data directory or its subdirectory as the collection directory. For example, if a volume is mounted to the /var/log/service directory and you set the collection directory to /var/log, Logtail cannot collect logs from the /var/log directory. You must specify /var/log/service or its subdirectory as the collection directory.
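The volume mount rule for collection directories can be checked mechanically. The following Python sketch models only the case described above, in which a volume is mounted to the data directory. The function name `is_collectable` is hypothetical.

```python
from pathlib import PurePosixPath


def is_collectable(collection_dir: str, mount_point: str) -> bool:
    """True if the collection directory is the mount point or one of its subdirectories."""
    collection = PurePosixPath(collection_dir)
    mount = PurePosixPath(mount_point)
    return collection == mount or mount in collection.parents


print(is_collectable("/var/log", "/var/log/service"))              # parent of the mount point
print(is_collectable("/var/log/service", "/var/log/service"))      # the mount point itself
print(is_collectable("/var/log/service/api", "/var/log/service"))  # subdirectory of the mount point
```

Only the first call returns `False`, which corresponds to the `/var/log` case that the text rules out.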

Log collection stopping:

Docker: When a container is stopped, Logtail immediately releases the current container file handles so that the container can exit as expected. If collection is delayed by issues such as network latency or resource overconsumption before the container is stopped, some logs that were generated before the container stopped may be lost.

containerd: When a container is stopped, Logtail does not release the current container file handles until all logs are sent. In this period, the files remain open. If collection latency exists due to issues such as network latency or resource overconsumption, the container may fail to be destroyed in a timely manner.

Prerequisites

The Logtail components are installed. For more information, see Install Logtail components in an ACK cluster.

Ports 80 (HTTP) and 443 (HTTPS) for outbound traffic are enabled for the server on which Logtail is installed. If the server is an Elastic Compute Service (ECS) instance, you can reconfigure the related security group rules to enable the ports. For more information about how to configure a security group rule, see Add a security group rule.

Logs are continuously generated in the container from which you want to collect logs. Logtail collects only incremental logs. If a log file on your server is not updated after a Logtail configuration is delivered and applied to the server, Logtail does not collect logs from the file. For more information, see Read log files.

The required UNIX domain socket exists, and Logtail has access permissions on the UNIX domain socket. The UNIX domain socket varies based on the container engine.

Docker: /run/docker.sock

containerd: /run/containerd/containerd.sock
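The socket prerequisite can be verified on a node before you install Logtail. The following Python sketch is one possible check, not a tool shipped with Logtail. The function name `socket_ready` is hypothetical, and treating "access permissions" as read and write permission for the current process is an assumption.

```python
import os
import stat

# Socket paths named in the prerequisites; which one applies depends on the container engine.
RUNTIME_SOCKETS = {
    "docker": "/run/docker.sock",
    "containerd": "/run/containerd/containerd.sock",
}


def socket_ready(path: str) -> bool:
    """True if path is an existing UNIX domain socket that this process can read and write."""
    try:
        mode = os.stat(path).st_mode
    except OSError:
        # The path does not exist or cannot be inspected.
        return False
    return stat.S_ISSOCK(mode) and os.access(path, os.R_OK | os.W_OK)


for engine, path in RUNTIME_SOCKETS.items():
    print(engine, path, socket_ready(path))
```

Run the check as the same user and in the same mount namespace as the Logtail process. Otherwise the result may not reflect what Logtail sees.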

Create a Logtail configuration

If you use a CustomResourceDefinition (CRD) to create a Logtail configuration and modify the Logtail configuration in the Simple Log Service console, the modification is not synchronized to the CRD. If you want to modify a Logtail configuration that is created by using a CRD, you must modify the CRD. If you modify the configuration in the Simple Log Service console, Logtail configuration inconsistency may occur.

Console

Log on to the Simple Log Service console.

In the Quick Data Import section, click Import Data. In the Import Data dialog box, click the Kubernetes - File card.

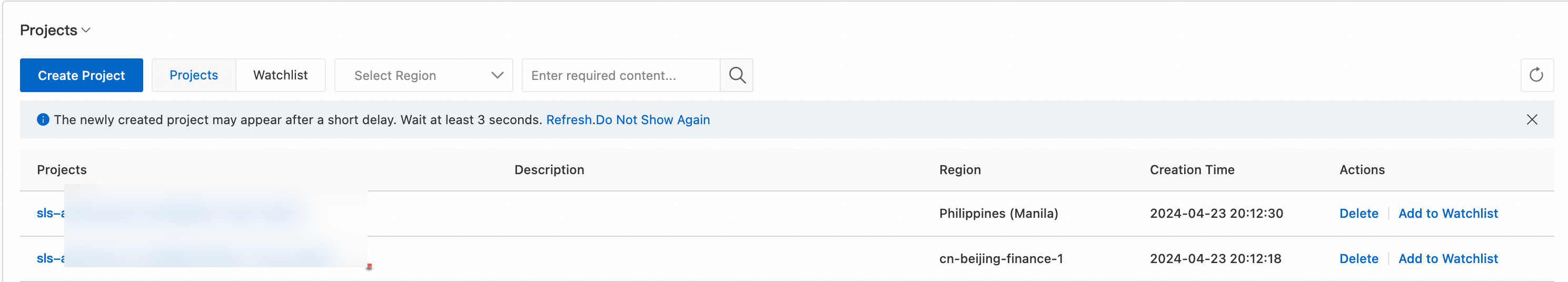

Select the required project and Logstore. Then, click Next. In this example, select the project that you use to install the Logtail components and the Logstore that you create.

In the Machine Group Configurations step, perform the following operations:

Use one of the following settings based on your business requirements:

Important: Subsequent settings vary based on the preceding settings.

Confirm that the required machine groups are added to the Applied Server Groups section. Then, click Next. After you install Logtail components in a Container Service for Kubernetes (ACK) cluster, Simple Log Service automatically creates a machine group named k8s-group-${your_k8s_cluster_id}. You can directly use this machine group.

Important: If you want to create a machine group, click Create Machine Group. In the panel that appears, configure the parameters to create a machine group. For more information, see Use the ACK console.

If the heartbeat status of a machine group is FAIL, click Automatic Retry. If the issue persists, see How do I troubleshoot an error related to a Logtail machine group in a host environment?

Create a Logtail configuration and click Next. Simple Log Service starts to collect logs after the Logtail configuration is created.

Note: A Logtail configuration requires up to 3 minutes to take effect.

Create indexes and preview data. Then, click Next. By default, full-text indexing is enabled in Simple Log Service. You can also configure field indexes based on collected logs in manual mode or automatic mode. To configure field indexes in automatic mode, click Automatic Index Generation. This way, Simple Log Service automatically creates field indexes. For more information, see Create indexes.

Important: If you want to query all fields in logs, we recommend that you use full-text indexes. If you want to query only specific fields, we recommend that you use field indexes. This helps reduce index traffic. If you want to analyze fields, you must create field indexes, and your query statement must include a SELECT statement for analysis.

Click Query Log. Then, you are redirected to the query and analysis page of your Logstore.

You must wait approximately 1 minute for the indexes to take effect. Then, you can view the collected logs on the Raw Logs tab. For more information, see Query and analyze logs.

(Recommended) CRD - AliyunPipelineConfig

Create a Logtail configuration

Only Logtail components V0.5.1 and later support AliyunPipelineConfig.

To create a Logtail configuration, you only need to create a custom resource (CR) from the AliyunPipelineConfig CRD. After the Logtail configuration is created, it is automatically applied. If you want to modify a Logtail configuration that is created based on a CR, you must modify the CR.

Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

Run the following command to create a YAML file. In the following command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    vim cube.yaml

Enter the following script in the YAML file and configure the parameters based on your business requirements.

Important: The value of the configName parameter must be unique in the Simple Log Service project that you use to install the Logtail components.

You must configure a CR for each Logtail configuration. If multiple CRs are associated with the same Logtail configuration, the CRs other than the first CR do not take effect.

For more information about the parameters related to the AliyunPipelineConfig CRD, see (Recommended) Use AliyunPipelineConfig to manage a Logtail configuration. In this example, the Logtail configuration includes settings for text log collection. For more information, see CreateLogtailPipelineConfig.

Make sure that the Logstore specified by the config.flushers.Logstore parameter exists. You can configure the spec.logstores parameter to automatically create a Logstore.

Collect single-line text logs from specific containers

In this example, a Logtail configuration named example-k8s-file is created to collect single-line text logs from the containers whose names contain app in a cluster. The file is test.LOG, and the path is /data/logs/app_1. The collected logs are stored in a Logstore named k8s-file, which belongs to a project named k8s-log-test.

    apiVersion: telemetry.alibabacloud.com/v1alpha1
    # Create a CR from the ClusterAliyunPipelineConfig CRD.
    kind: ClusterAliyunPipelineConfig
    metadata:
      # Specify the name of the resource. The name must be unique in the current Kubernetes cluster. The name is the same as the name of the Logtail configuration that is created.
      name: example-k8s-file
    spec:
      # Specify the project to which logs are collected.
      project:
        name: k8s-log-test
      # Create a Logstore to store logs.
      logstores:
        - name: k8s-file
      # Configure the parameters for the Logtail configuration.
      config:
        # Configure the Logtail input plug-ins.
        inputs:
          # Use the input_file plug-in to collect text logs from containers.
          - Type: input_file
            # Specify the file path in the containers.
            FilePaths:
              - /data/logs/app_1/**/test.LOG
            # Enable the container discovery feature.
            EnableContainerDiscovery: true
            # Add conditions to filter containers. Multiple conditions are evaluated by using a logical AND.
            ContainerFilters:
              # Specify the namespace of the pod to which the required containers belong. Regular expression matching is supported.
              K8sNamespaceRegex: default
              # Specify the name of the required containers. Regular expression matching is supported.
              K8sContainerRegex: ^(.*app.*)$
        # Configure the Logtail output plug-ins.
        flushers:
          # Use the flusher_sls plug-in to send logs to a specific Logstore.
          - Type: flusher_sls
            # Make sure that the Logstore exists.
            Logstore: k8s-file
            # Make sure that the endpoint is valid.
            Endpoint: cn-hangzhou.log.aliyuncs.com
            Region: cn-hangzhou
            TelemetryType: logs

Collect multi-line text logs from all containers and use regular expressions to parse the logs

In this example, a Logtail configuration named example-k8s-file is created to collect multi-line text logs from all containers in a cluster. The file is test.LOG, and the path is /data/logs/app_1. The collected logs are parsed by using a regular expression and stored in a Logstore named k8s-file, which belongs to a project named k8s-log-test.

The sample log provided in the following example is read by the input_file plug-in in the {"content": "2024-06-19 16:35:00 INFO test log\nline-1\nline-2\nend"} format. Then, the log is parsed based on a regular expression into {"time": "2024-06-19 16:35:00", "level": "INFO", "msg": "test log\nline-1\nline-2\nend"}.

    apiVersion: telemetry.alibabacloud.com/v1alpha1
    # Create a CR from the ClusterAliyunPipelineConfig CRD.
    kind: ClusterAliyunPipelineConfig
    metadata:
      # Specify the name of the resource. The name must be unique in the current Kubernetes cluster. The name is the same as the name of the Logtail configuration that is created.
      name: example-k8s-file
    spec:
      # Specify the project to which logs are collected.
      project:
        name: k8s-log-test
      # Create a Logstore to store logs.
      logstores:
        - name: k8s-file
      # Configure the parameters for the Logtail configuration.
      config:
        # Specify the sample log. You can leave this parameter empty.
        sample: |
          2024-06-19 16:35:00 INFO test log
          line-1
          line-2
          end
        # Configure the Logtail input plug-ins.
        inputs:
          # Use the input_file plug-in to collect multi-line text logs from containers.
          - Type: input_file
            # Specify the file path in the containers.
            FilePaths:
              - /data/logs/app_1/**/test.LOG
            # Enable the container discovery feature.
            EnableContainerDiscovery: true
            # Enable multi-line log collection.
            Multiline:
              # Specify the custom mode to match the beginning of the first line of a log based on a regular expression.
              Mode: custom
              # Specify the regular expression that is used to match the beginning of the first line of a log.
              StartPattern: \d+-\d+-\d+.*
        # Specify the Logtail processing plug-ins.
        processors:
          # Use the processor_parse_regex_native plug-in to parse logs based on the specified regular expression.
          - Type: processor_parse_regex_native
            # Specify the name of the input field.
            SourceKey: content
            # Specify the regular expression that is used for the parsing. Use capturing groups to extract fields.
            Regex: (\d+-\d+-\d+\s*\d+:\d+:\d+)\s*(\S+)\s*(.*)
            # Specify the fields that you want to extract.
            Keys: ["time", "level", "msg"]
        # Configure the Logtail output plug-ins.
        flushers:
          # Use the flusher_sls plug-in to send logs to a specific Logstore.
          - Type: flusher_sls
            # Make sure that the Logstore exists.
            Logstore: k8s-file
            # Make sure that the endpoint is valid.
            Endpoint: cn-hangzhou.log.aliyuncs.com
            Region: cn-hangzhou
            TelemetryType: logs

Run the following command to apply the Logtail configuration. After the Logtail configuration is applied, Logtail starts to collect text logs from the specified containers and send the logs to Simple Log Service.

In the following command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    kubectl apply -f cube.yaml

Important: After logs are collected, you must create indexes. Then, you can query and analyze the logs in the Logstore. For more information, see Create indexes.
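The multi-line example in this section depends on two regular expressions: StartPattern decides where a new log begins, and Regex splits the log into the time, level, and msg fields. Before you apply a configuration, you can try both expressions locally. The following Python sketch only approximates the plug-in behavior. The function names are hypothetical, Python's `re` module may differ from the regular expression engine that Logtail uses, and the `re.DOTALL` flag is an assumption made so that `.` also matches line breaks inside a multi-line log.

```python
import re

START_PATTERN = re.compile(r"\d+-\d+-\d+.*")
PARSE_REGEX = re.compile(r"(\d+-\d+-\d+\s*\d+:\d+:\d+)\s*(\S+)\s*(.*)", re.DOTALL)
KEYS = ["time", "level", "msg"]


def group_multiline(lines):
    """Start a new log at each line that matches START_PATTERN; append other lines to the current log."""
    logs = []
    for line in lines:
        if START_PATTERN.fullmatch(line) or not logs:
            logs.append(line)
        else:
            logs[-1] += "\n" + line
    return logs


def parse(content):
    """Map the capturing groups of PARSE_REGEX onto KEYS, or return None if the log does not match."""
    match = PARSE_REGEX.fullmatch(content)
    return dict(zip(KEYS, match.groups())) if match else None


raw_lines = ["2024-06-19 16:35:00 INFO test log", "line-1", "line-2", "end"]
for content in group_multiline(raw_lines):
    print(parse(content))
```

For the sample log, the four lines are grouped into one log, and the parsed fields match the result described in the example.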

CRD - AliyunLogConfig

To create a Logtail configuration, you only need to create a custom resource (CR) from the AliyunLogConfig CRD. After the Logtail configuration is created, it is automatically applied. If you want to modify a Logtail configuration that is created based on a CR, you must modify the CR.

Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

Run the following command to create a YAML file. In the following command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    vim cube.yaml

Enter the following script in the YAML file and configure the parameters based on your business requirements.

Important: The value of the configName parameter must be unique in the Simple Log Service project that you use to install the Logtail components.

If multiple CRs are associated with the same Logtail configuration, the Logtail configuration is affected when you delete or modify one of the CRs. After a CR is deleted or modified, the status of other associated CRs becomes inconsistent with the status of the Logtail configuration in Simple Log Service.

For more information about CR parameters, see Use AliyunLogConfig to manage a Logtail configuration. In this example, the Logtail configuration includes settings for text log collection. For more information, see CreateConfig.

Collect single-line text logs from specific containers

In this example, a Logtail configuration named example-k8s-file is created to collect single-line text logs from the containers of all the pods whose names begin with app in the cluster. The file is test.LOG, and the path is /data/logs/app_1. The collected logs are stored in a Logstore named k8s-file, which belongs to a project named k8s-log-test.

    apiVersion: log.alibabacloud.com/v1alpha1
    kind: AliyunLogConfig
    metadata:
      # Specify the name of the resource. The name must be unique in the current Kubernetes cluster.
      name: example-k8s-file
      namespace: kube-system
    spec:
      # Specify the name of the project. If you leave this parameter empty, the project named k8s-log-<your_cluster_id> is used.
      project: k8s-log-test
      # Specify the name of the Logstore. If the specified Logstore does not exist, Simple Log Service automatically creates a Logstore.
      logstore: k8s-file
      # Configure the parameters for the Logtail configuration.
      logtailConfig:
        # Specify the type of the data source. If you want to collect text logs, set the value to file.
        inputType: file
        # Specify the name of the Logtail configuration.
        configName: example-k8s-file
        inputDetail:
          # Specify the simple mode to collect text logs.
          logType: common_reg_log
          # Specify the log file path.
          logPath: /data/logs/app_1
          # Specify the log file name. You can use wildcard characters (* and ?) when you specify the log file name. Example: log_*.log.
          filePattern: test.LOG
          # Set the value to true if you want to collect text logs from containers.
          dockerFile: true
          # Specify conditions to filter containers.
          advanced:
            k8s:
              K8sPodRegex: '^(app.*)$'

Run the following command to apply the Logtail configuration. After the Logtail configuration is applied, Logtail starts to collect text logs from the specified containers and send the logs to Simple Log Service.

In the following command, cube.yaml is a sample file name. You can specify a different file name based on your business requirements.

    kubectl apply -f cube.yaml

Important: After logs are collected, you must create indexes. Then, you can query and analyze the logs in the Logstore. For more information, see Create indexes.
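In the AliyunLogConfig example, filePattern accepts the wildcard characters * and ?, and K8sPodRegex restricts collection to pods whose names begin with app. The following Python sketch shows how the two conditions combine for a given pod and file name. It is an approximation: the function name `should_collect` is hypothetical, case-sensitive matching is an assumption, and `fnmatchcase` also gives special meaning to `[seq]` patterns, which the configuration comments do not mention.

```python
import re
from fnmatch import fnmatchcase

FILE_PATTERN = "test.LOG"
POD_REGEX = re.compile(r"^(app.*)$")


def should_collect(pod_name: str, file_name: str, file_pattern: str = FILE_PATTERN) -> bool:
    """True if the pod name matches POD_REGEX and the file name matches the wildcard pattern."""
    return bool(POD_REGEX.search(pod_name)) and fnmatchcase(file_name, file_pattern)


print(should_collect("app-7d9f", "test.LOG"))                 # pod and file both match
print(should_collect("web-app", "test.LOG"))                  # pod name does not begin with app
print(should_collect("app-7d9f", "log_01.log", "log_*.log"))  # wildcard file pattern
```

The second call returns `False` because the anchored expression requires the pod name to start with app. The other two calls return `True`.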

View Logtail configurations

Console

Log on to the Simple Log Service console.

In the Projects section, click the target project.

Choose . Click the > icon of the target Logtail configurations. Choose .

Click the target Logtail configuration to view its details.

(Recommended) CRD - AliyunPipelineConfig

View all Logtail configurations created by using the AliyunPipelineConfig CRD

View the details of a Logtail configuration created by using the AliyunPipelineConfig CRD

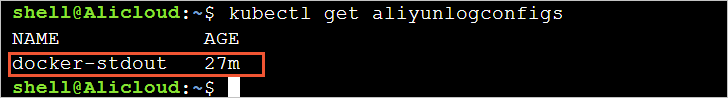

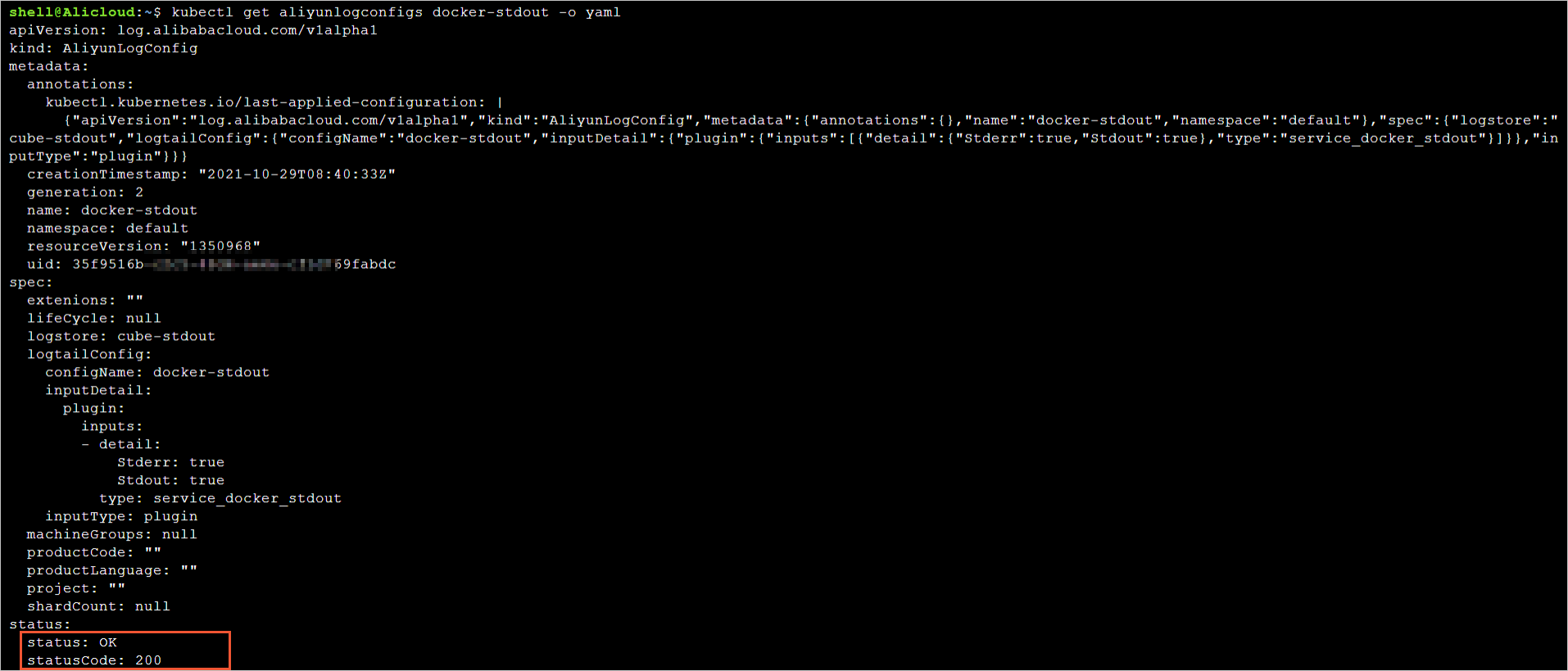

CRD - AliyunLogConfig

View all Logtail configurations created by using the AliyunLogConfig CRD

View the details of a Logtail configuration created by using the AliyunLogConfig CRD

Query and analyze the collected logs

In the Projects section of the Simple Log Service console, click the project that you want to manage to go to the details page of the project.

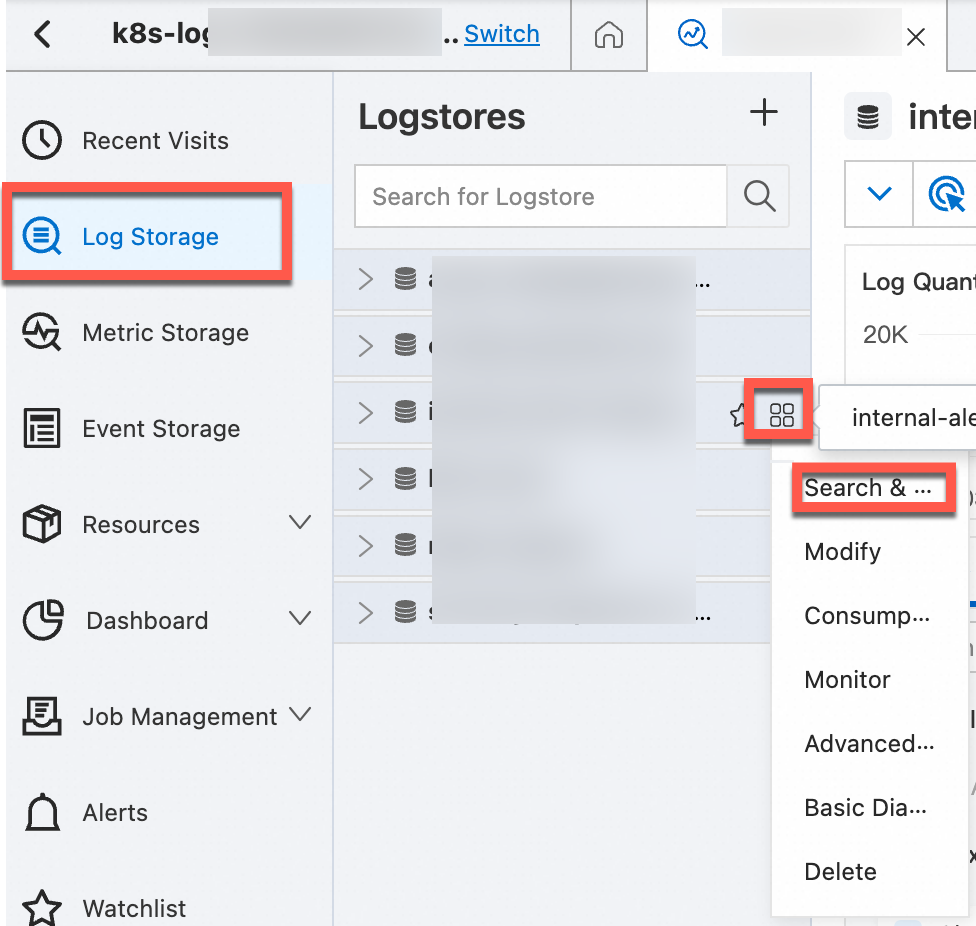

Find the Logstore that you want to manage, move the pointer over the Logstore, click the icon, and then select Search & Analysis to view the logs of your Kubernetes cluster.

Default fields in container text logs

The following table describes the fields that are included by default in each container text log.

| Field name | Description |
| --- | --- |
| __tag__:__hostname__ | The name of the container host. |
| __tag__:__path__ | The log file path in the container. |
| __tag__:_container_ip_ | The IP address of the container. |
| __tag__:_image_name_ | The name of the image that is used by the container. |
| __tag__:_pod_name_ | The name of the pod. |
| __tag__:_namespace_ | The namespace to which the pod belongs. |
| __tag__:_pod_uid_ | The unique identifier (UID) of the pod. |
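The default fields make it possible to group or filter collected logs by their origin after you export them. The following Python sketch counts logs per pod within one namespace. The records and the function name `logs_per_pod` are hypothetical: the field names come from the table above, but the values are made up for illustration, and the dictionary layout is an assumption about how exported logs might be represented.

```python
from collections import Counter

# Hypothetical records: field names follow the table, values are made up.
records = [
    {"content": "GET /health 200", "__tag__:_namespace_": "default",
     "__tag__:_pod_name_": "app-0", "__tag__:__path__": "/data/logs/app_1/test.LOG"},
    {"content": "GET /items 500", "__tag__:_namespace_": "default",
     "__tag__:_pod_name_": "app-1", "__tag__:__path__": "/data/logs/app_1/test.LOG"},
    {"content": "resync", "__tag__:_namespace_": "kube-system",
     "__tag__:_pod_name_": "dns-0", "__tag__:__path__": "/data/logs/app_1/test.LOG"},
]


def logs_per_pod(records, namespace):
    """Count records per pod name, restricted to one namespace."""
    return Counter(
        r["__tag__:_pod_name_"]
        for r in records
        if r.get("__tag__:_namespace_") == namespace
    )


print(logs_per_pod(records, "default"))
```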

References

If an exception occurs when you use Logtail to collect logs from containers, including standard containers and Kubernetes containers, troubleshoot the issue by referring to What do I do if errors occur when I collect logs from containers?

For information about how to collect stdout and stderr from Kubernetes containers, see Collect stdout and stderr from Kubernetes containers in DaemonSet mode.