Elastic Algorithm Service (EAS) of Platform for AI (PAI) provides the Scalable Job service for model training and inference. This service supports reusability and auto scaling. In model training scenarios in which multiple jobs are run concurrently, the Scalable Job service can help you reduce resource waste caused by frequent creation and release of instances. The Scalable Job service can also track the execution progress of each request in inference scenarios to implement task scheduling in a more efficient manner. This topic describes how to use the Scalable Job service.

Scenarios

Model training

Implementation

The system uses an architecture that decouples frontend and backend services to support resident frontend services and backend Scalable Job services.

Architecture benefits

In most cases, frontend services require few resources and are inexpensive to deploy. You can deploy resident frontend services to avoid frequent creation of frontend services and reduce waiting time. The backend Scalable Job service allows you to run multiple training tasks in a single instance. This prevents instances from being repeatedly started and released across executions and improves service throughput. The Scalable Job service also scales automatically based on the queue length to ensure efficient use of resources.

Model inference

In model inference scenarios, the Scalable Job service tracks the execution progress of each request to implement task scheduling in a more efficient manner.

In most cases, we recommend that you deploy inference services that take a longer period of time to respond as asynchronous inference services in EAS. However, asynchronous services have the following issues:

The queue service cannot preferentially send requests to idle instances, which may lead to low resource utilization.

During a service scale-in, the queue service may remove instances that are still processing requests. The interrupted requests are then rescheduled to another instance and executed again.

To resolve the preceding issues, the Scalable Job service implements the following optimization:

Optimization in subscription logic: Requests are preferentially sent to idle instances. Before a Scalable Job instance is stopped or released, the instance is blocked for a period of time to ensure that the requests running on the instance are completed.

Optimization in scaling logic: Different from the regular reporting mechanism of common monitoring services, the Scalable Job service uses a built-in monitoring service in the queue service to implement a dedicated monitoring link. This ensures that scale-outs and scale-ins are quickly triggered when the queue length exceeds or is below the threshold. The response time of the scaling is reduced from minutes to approximately 10 seconds.
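The subscription behavior described above can be pictured with a toy model. The following Python sketch is illustrative only and is not the EAS implementation: the Instance class and the pick_instance and can_release helpers are hypothetical names introduced here. It shows the two rules in their simplest form: a request goes only to an idle instance, and an instance selected for scale-in is released only after its in-flight requests finish.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Instance:
    """Hypothetical stand-in for a Scalable Job instance."""
    name: str
    in_flight: int = 0       # requests currently being processed
    draining: bool = False   # True once a scale-in has selected this instance


def pick_instance(instances: List[Instance]) -> Optional[Instance]:
    """Return the first idle instance that is not draining, or None if all are busy."""
    for inst in instances:
        if not inst.draining and inst.in_flight == 0:
            return inst
    return None


def can_release(inst: Instance) -> bool:
    """A draining instance may be released only after its requests have finished."""
    return inst.draining and inst.in_flight == 0
```

In this model, a busy instance that is marked as draining stays alive until in_flight drops to zero, which mirrors the blocking period described above.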

Architecture

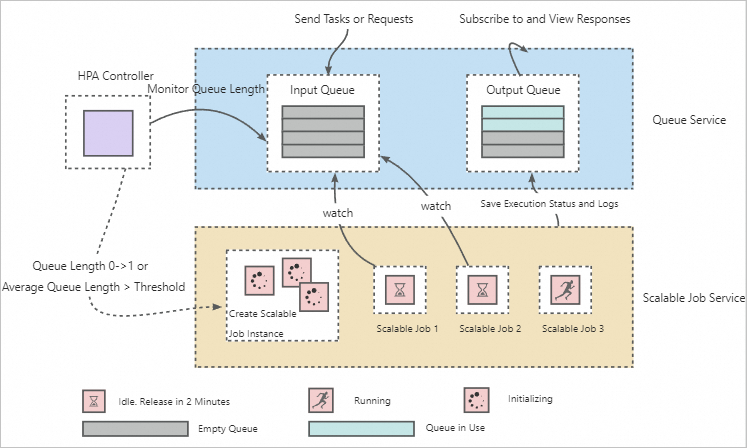

The architecture consists of the following components: queue service, Horizontal Pod Autoscaling (HPA) controller, and Scalable Job.

How it works:

The queue service decouples the sending of requests or tasks from their execution. This allows a single Scalable Job service to execute multiple requests or tasks.

The HPA controller constantly monitors the number of pending training tasks and requests in the queue service to trigger automatic scaling of the Scalable Job instances. The following example shows the default auto scaling configurations for the Scalable Job service. For more information about the parameters, see Auto scaling.

{
  "behavior": {
    "onZero": {
      "scaleDownGracePeriodSeconds": 60  # The duration from the time when the scale-in is triggered to the time when all instances are removed. Unit: seconds.
    },
    "scaleDown": {
      "stabilizationWindowSeconds": 1  # The duration from the time when the scale-in is triggered to the time when the scale-in starts. Unit: seconds.
    }
  },
  "max": 10,  # The maximum number of scalable job instances.
  "min": 1,  # The minimum number of scalable job instances.
  "strategies": {
    "avg_waiting_length": 2  # The average queue length of each scalable job instance.
  }
}
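To get a feel for how these defaults interact, the following Python sketch estimates the number of instances for a given queue length. It assumes that the controller targets the queue length divided by avg_waiting_length, rounded up and clamped to the min and max values, which is how average-value metrics usually behave in HPA-style controllers. The exact formula used by EAS is not stated in this topic, and the desired_instances function is a hypothetical helper.

```python
import math


def desired_instances(queue_length: int,
                      avg_waiting_length: int = 2,
                      min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Estimate the instance count for a queue length (assumed formula, see lead-in)."""
    if queue_length < 0:
        raise ValueError("queue_length must not be negative")
    if avg_waiting_length <= 0:
        raise ValueError("avg_waiting_length must be positive")
    # One instance per avg_waiting_length pending items, rounded up.
    target = math.ceil(queue_length / avg_waiting_length)
    # Clamp the target to the configured bounds.
    return max(min_instances, min(max_instances, target))
```

Under this assumption, 5 pending items with the default settings map to 3 instances, and any queue longer than 20 items is capped at 10 instances.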

Deploy a service

Deploy an inference service

The process is similar to the process of creating an asynchronous inference service. Prepare a service configuration file based on the following example:

{
  "containers": [
    {
      "image": "registry-vpc.cn-shanghai.aliyuncs.com/eas/eas-container-deploy-test:202010091755",
      "command": "/data/eas/ENV/bin/python /data/eas/app.py",
      "port": 8000
    }
  ],
  "metadata": {
    "name": "scalablejob",
    "type": "ScalableJob",
    "rpc.worker_threads": 4,
    "instance": 1
  }
}

Set type to ScalableJob to deploy the inference service as a scalable job. For information about other parameters, see Parameters of model services. For information about how to deploy an inference service, see Deploy online portrait service as a scalable job.

After you deploy the service, the system automatically creates a queue service and a scalable job and enables auto scaling.

Deploy a training service

The integrated deployment and independent deployment methods are supported. The following section describes how the deployment works and configuration details. For more information about the deployment method, see Deploy an auto-scaling Kohya training as a scalable job.

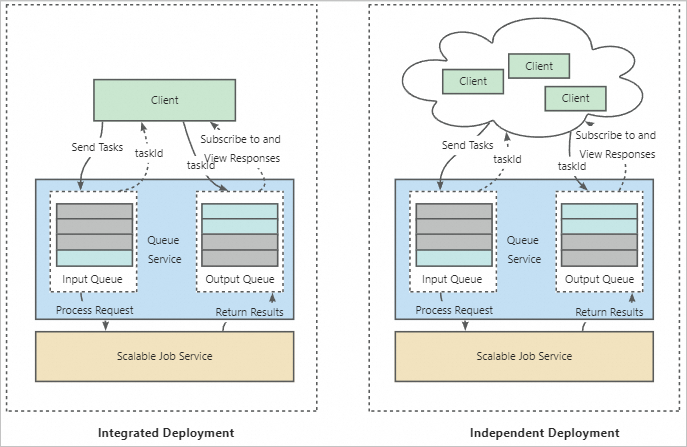

How the deployment works

Integrated deployment: EAS creates a frontend service in addition to a queue service and a Scalable Job service. The frontend service is used to receive requests and forward requests to the queue service. The frontend service functions as a client of the Scalable Job service. The Scalable Job service is associated with a unique frontend service. In this case, the Scalable Job service can only execute training tasks forwarded by the associated frontend service.

Independent deployment: Independent deployment is suitable for multi-user scenarios. In this mode, the Scalable Job service is used as a common backend service and can be associated with multiple frontend services. Each user can send training tasks from their frontend service. The backend Scalable Job service creates corresponding Scalable Job instances to execute training tasks, and each job instance can execute multiple training tasks in the order in which they are submitted. This allows resource sharing among multiple users. You do not need to create training tasks multiple times, which effectively reduces the costs.

Configurations

When you deploy a Scalable Job service, you need to provide a custom image. To deploy Kohya-based services, you can use the Kohya_ss image that is preset in EAS. The image must contain all dependencies of the training task because it is used as the execution environment of the training task. You do not need to configure the startup command or port number. If you need to perform initialization tasks before the training task, you can configure initialization commands. EAS creates a separate process in the Scalable Job instance to perform initialization tasks. For information about how to prepare a custom image, see Deploy a model service by using a custom image. Example preset image in EAS:

"containers": [
  {
    "image": "eas-registry-vpc.cn-shanghai.cr.aliyuncs.com/pai-eas/kohya_ss:2.2"
  }
]

Integrated deployment

Prepare a service configuration file. The following sample configuration file uses the preset Kohya_ss image in EAS.

{
  "containers": [
    {
      "image": "eas-registry-vpc.cn-shanghai.cr.aliyuncs.com/pai-eas/kohya_ss:2.2"
    }
  ],
  "metadata": {
    "cpu": 4,
    "enable_webservice": true,
    "gpu": 1,
    "instance": 1,
    "memory": 15000,
    "name": "kohya_job",
    "type": "ScalableJobService"
  },
  "front_end": {
    "image": "eas-registry-vpc.cn-shanghai.cr.aliyuncs.com/pai-eas/kohya_ss:2.2",
    "port": 8001,
    "script": "python -u kohya_gui.py --listen 0.0.0.0 --server_port 8001 --data-dir /workspace --headless --just-ui --job-service"
  }
}

The following section describes the key parameters. For information about how to configure other parameters, see Parameters of model services.

Set type to ScalableJobService.

By default, the resource group used by the frontend service is the same as the resource group used by the Scalable Job service. The system allocates 2 vCPUs and 8 GB memory for the frontend service.

Customize the resource group or resources based on the following sample code:

{
  "front_end": {
    "resource": "",  # Modify the dedicated resource group that the frontend service uses.
    "cpu": 4,
    "memory": 8000
  }
}

Customize the instance type that is used for deployment based on the following sample code:

{
  "front_end": {
    "instance_type": "ecs.c6.large"
  }
}

Independent deployment

Prepare a service configuration file. The following sample configuration file uses the preset Kohya_ss image in EAS.

{
  "containers": [
    {
      "image": "eas-registry-vpc.cn-shanghai.cr.aliyuncs.com/pai-eas/kohya_ss:2.2"
    }
  ],
  "metadata": {
    "cpu": 4,
    "enable_webservice": true,
    "gpu": 1,
    "instance": 1,
    "memory": 15000,
    "name": "kohya_job",
    "type": "ScalableJob"
  }
}

Set type to ScalableJob. For information about how to configure other parameters, see Parameters of model services.
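The main difference between the two training deployment modes is the type value and the presence of the front_end block. The following Python sketch builds a minimal configuration for either mode, following the sample configuration files in this topic. The build_training_config function and the TYPE_BY_MODE mapping are hypothetical helpers, and the rule that front_end is accepted only for integrated deployment is an assumption of this sketch.

```python
import json
from typing import Any, Dict, Optional

# metadata.type values as they appear in the sample configuration files.
TYPE_BY_MODE = {
    "integrated": "ScalableJobService",
    "independent": "ScalableJob",
}


def build_training_config(mode: str, name: str, image: str,
                          front_end: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a minimal service configuration for a training deployment mode."""
    if mode not in TYPE_BY_MODE:
        raise ValueError(f"mode must be one of {sorted(TYPE_BY_MODE)}")
    # Assumption of this sketch: front_end belongs to integrated deployment only.
    if (mode == "integrated") != (front_end is not None):
        raise ValueError("front_end is required for integrated mode only")
    config: Dict[str, Any] = {
        "containers": [{"image": image}],
        "metadata": {"name": name, "type": TYPE_BY_MODE[mode], "instance": 1},
    }
    if front_end is not None:
        config["front_end"] = dict(front_end)
    return config


if __name__ == "__main__":
    image = "eas-registry-vpc.cn-shanghai.cr.aliyuncs.com/pai-eas/kohya_ss:2.2"
    print(json.dumps(build_training_config("independent", "kohya_job", image), indent=2))
```

Generating the file with json.dumps also guarantees valid JSON, which avoids mistakes such as trailing commas in hand-edited configuration files.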

In this mode, you need to deploy a frontend service and implement a proxy in the frontend service that forwards the received requests to the queue of the Scalable Job service. This way, the frontend service and the backend Scalable Job service are associated. For more information, see the "Send data to the queue service" section in the Access queue service topic.
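The forwarding step of such a proxy can be sketched in a few lines of Python. The sketch assumes a queue client whose put(data, tags) method returns an index and a request ID, as the QueueClient in the EAS SDK does in the SDK example in this topic. The InputQueue protocol and the forward_training_task function are hypothetical names introduced for illustration.

```python
from typing import Any, Dict, Protocol, Tuple


class InputQueue(Protocol):
    """Minimal interface that this sketch expects from a queue client."""

    def put(self, data: Any, tags: Dict[str, str]) -> Tuple[int, str]:
        ...


def forward_training_task(queue: InputQueue, command: str) -> str:
    """Forward one training command to the input queue and return its request ID."""
    if not command.strip():
        raise ValueError("command must not be empty")
    # taskType=command marks the message as a training task.
    _index, request_id = queue.put(command, {"taskType": "command"})
    return request_id
```

A frontend service would call forward_training_task from its request handler and pass in a client that is already initialized for the queue of the backend Scalable Job service.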

Call the service

To distinguish between the training service and the inference service, you need to set the taskType field to command or query when you call the Scalable Job service. Parameters:

command: used to identify a training service.

query: used to identify an inference service.

When you call a service by using HTTP or an SDK, you need to explicitly specify taskType. Examples:

Specify taskType in the query string when you send an HTTP request:

curl 'http://166233998075****.cn-shanghai.pai-eas.aliyuncs.com/api/predict/scalablejob?taskType={Wanted_TaskType}' -H 'Authorization: xxx' -d 'xxx'

Use the tags parameter to specify taskType when you call an SDK:

from eas_prediction import QueueClient

# Create an input queue to send tasks or requests.
queue_client = QueueClient('166233998075****.cn-shanghai.pai-eas.aliyuncs.com', 'scalablejob')
queue_client.set_token('xxx')
queue_client.init()

# Replace wanted_task_type with command or query.
tags = {"taskType": "wanted_task_type"}

# Send a task or request to the input queue. cmd holds the task command or request payload.
index, request_id = queue_client.put(cmd, tags)
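Because taskType accepts only two values, it can help to validate the value before you send a request. The following Python sketch builds the HTTP URL in the same shape as the curl example and rejects any other value. The build_predict_url function and the VALID_TASK_TYPES constant are hypothetical helpers and are not part of any EAS SDK.

```python
from urllib.parse import urlencode

# The two values described in this topic.
VALID_TASK_TYPES = ("command", "query")


def build_predict_url(endpoint: str, service_name: str, task_type: str) -> str:
    """Return the request URL for a Scalable Job service with taskType set."""
    if task_type not in VALID_TASK_TYPES:
        raise ValueError(f"taskType must be one of {VALID_TASK_TYPES}")
    query = urlencode({"taskType": task_type})
    return f"http://{endpoint}/api/predict/{service_name}?{query}"
```

For example, passing the endpoint from the curl example, the service name scalablejob, and query yields a URL that ends with /api/predict/scalablejob?taskType=query.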

Receive responses:

Inference service: You can use the SDK for the EAS queue service to obtain the results in the output queue. For more information, see the "Subscribe to the queue service" section in the Access queue service topic.

Training service: When you deploy the service, we recommend that you mount an Object Storage Service (OSS) bucket so that the training results are stored persistently. For more information, see Deploy an auto-scaling Kohya training as a scalable job.
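For the inference case, reading results from the output queue can be sketched as follows. The sketch assumes a client that exposes watch(index, window, auto_commit=...), whose return value provides run() and close(), and whose items carry a data attribute. This matches the watcher interface of the QueueClient in the EAS queue service SDK as far as we know, but verify it against the SDK version that you use. The read_results function is a hypothetical helper.

```python
from typing import Any, List


def read_results(sink_queue: Any, limit: int, window: int = 5) -> List[bytes]:
    """Read up to `limit` results from an output queue client, then stop watching."""
    results: List[bytes] = []
    if limit <= 0:
        return results
    # Start from index 0 and let the client commit each item automatically.
    watcher = sink_queue.watch(0, window, auto_commit=True)
    try:
        for item in watcher.run():
            results.append(item.data)
            if len(results) >= limit:
                break
    finally:
        # Always close the watcher so that a later watch call can succeed.
        watcher.close()
    return results
```

In practice, sink_queue would be a QueueClient that is initialized for the output queue of the service. The name of that queue is not stated in this topic, so check the Access queue service topic before you hard-code it.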

Configure log collection

You can configure enable_write_log_to_queue to obtain real-time logs.

{
  "scalable_job": {
    "enable_write_log_to_queue": true
  }
}

In training scenarios, this configuration is enabled by default. The system writes real-time logs back to the output queue. You can use the SDK of the EAS queue service to obtain training logs in real time. For more information, see Deploy an auto-scaling Kohya training as a scalable job.

In inference scenarios, this configuration is disabled by default. The system outputs logs by using stdout.

References

For more information about the scenarios of the Scalable Job service, see the following topics: